Abstract

Modeling and motion extraction of the human upper limb are essential for interpreting its natural behavior. Because the upper limb has many degrees of freedom (DOFs) and is highly dynamic, existing upper-limb modeling methods have limited applicability. This study proposes a generic modeling and motion extraction method, named the Primitive-Based triangular body segment method (P-BTBS). P-BTBS follows the physiology of the upper limb, estimates motion angles accurately, and describes upper-limb motions with high accuracy. With the upper-limb modular motion model, the motion angles and bones can be selected according to the research topic (generality with respect to study targets). P-BTBS is also suitable for most techniques that estimate spatial coordinates (generality with respect to equipment and technology). Experiments on continuous motions with seven DOFs and on upper-limb motion description validated the performance and robustness of P-BTBS in extracting motion information and in describing upper-limb motions, respectively. P-BTBS provides a new perspective and mathematical tool for understanding and exploring upper-limb motions, and offers theoretical support for upper-limb research.


Introduction

In the past decade, the field of medical robotics has gained momentum and its technology has matured1. However, more than one billion people worldwide live with disabilities, and the number is growing rapidly2. Among them, more than 80% of people disabled by stroke have upper-limb disability, and only 10% regain partial upper-limb mobility after treatment3. Limb motion is highly correlated with neuronal activity (neural plasticity)4,5, which is a potential mechanism for upper-limb motion recovery6,7. On this basis, upper-limb rehabilitation robotics has flourished and provides strong support for upper-limb motion recovery8. Upper-limb rehabilitation robots have the potential to compensate for motor deficits, enhance motion, restore upper-limb function, and support rehabilitation task performance9,10,11,12.

Despite this, there is still a gap between the needs of patients with upper-limb disabilities and the medical rehabilitation available to them. Upper-limb rehabilitation equipment (exoskeletons6,12,13 and end-effector devices12,14), a widely accepted mode of rehabilitation, drives or compensates for the motion of the affected limb and provides intensive rehabilitative training. However, the global outbreak of COVID-19 severely hampered the rehabilitation of disabled people2 and greatly reduced rehabilitation efficiency. Furthermore, patients with moderate-to-severe impairment cannot move the affected limb independently during the early stage of rehabilitation and can only receive passive training. This mode fails to exploit the key role of the healthy limb and makes rehabilitation inefficient.

Rehabilitation efficacy can be improved by organically integrating the upper-limb rehabilitation robot with the patient's sense of autonomy. Research on human7,15,16,17 and marmoset4 brains has shown that self-directed actions can, to some extent, repair damaged neural circuits in the brain. Furthermore, observational learning activates the mirror neuron system in the observer's brain18 (observed with fMRI19) and has been shown to improve upper-limb motor function18,20. Mirroring motions21 and observational learning are both initiated by the patient's brain, so their rehabilitative effect can act back directly on the brain and thereby increase rehabilitation efficacy. Therefore, extracting motion information from the upper limb and applying it in the rehabilitation process can lead to better upper-limb rehabilitation outcomes.

Information on human upper-limb motion consists mainly of motion angles and holistic motion. For upper-limb rehabilitation, the holistic motion of the upper limb is of particular concern. Methods for analyzing human upper-limb motion information can therefore advance research on upper-limb motion recovery. The scope of such analysis should include the major bones involved in upper-limb motion: the clavicle, humerus, forearm, and palm.

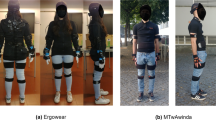

Numerous scholars have analyzed and explored upper-limb motion from different perspectives. Motion capture systems (e.g. VICON), which have become more popular in recent years and provide accurate information on upper-limb motion, are generally used as experimental tools but have not been widely adopted because of their high cost and poor resistance to interference22,23. The Kinect depth camera24 acquires 3D spatial points from different parts of the upper limb, and the processed data have been used to build a remote system for assessing upper-limb motility25, determine upper-limb kinematic parameters (joint range of motion, displacement in the local coordinate system, joint smoothness, upper-limb length, etc.)26,27, solve upper-limb joint motion angles28,29,30, support human–machine interaction combined with sliding mode control algorithms31, and fuse data from multiple Kinects with Kalman filtering to track human motion32. Although Kinect's human skeleton recognition technology offers high flexibility, low cost, and non-invasiveness33, the number of upper-limb joint points it identifies and extracts is very limited. Innovative approaches (multi-camera data fusion32 and inertial sensor compensation34,35) and wearable devices36,37,38 have been developed to improve the accuracy of Kinect data and to measure upper-limb motion angles. However, simplifying the upper limb to six or fewer degrees of freedom (DOFs) is inconsistent with its physiological properties. Meanwhile, a sizable body of research has modeled upper-limb motion, including dissecting shoulder motion during the design of upper-limb exoskeletons (ARMin series39,40,41, HARMONY42, CLEVERarm43, WINDER44, ChARMin45, etc.) and developing Denavit-Hartenberg-based models of upper-limb forward and inverse kinematics and dynamics46,47, a hybrid twist-based model of shoulder kinematics48, a rigid-body model describing the kinematics of the scapula relative to the sternum49, and musculoskeletal models of the upper limb50,51,52. These kinematic models describe localized motions of the upper limb and thus cannot completely describe its overall motion.

In this study, we propose a general modelling and kinematic angle-solving method for simplified upper-limb models with 1–8 rotational DOFs, named the Primitive-Based triangular body segment method (P-BTBS). P-BTBS defines a triangular primitive space (TPS), maps problems in Euclidean space to the TPS, inversely solves 1–8 DOFs of the upper limb, and provides a mathematical description of upper-limb motions. The results agree well with realistic upper-limb motion. Analyzing and extracting upper-limb motion information requires the coordinates of at most six spatial points and only one coordinate system transformation. In engineering applications, Kinect's human skeleton recognition technique is a practical way to extract 3D coordinates, and the six spatial points largely coincide with those it identifies. P-BTBS (Fig. 1) is therefore generic with respect to equipment, technology, and study targets. This convenient modelling and calculation method is consistent with the physiological characteristics of the upper limb, providing new ideas for upper-limb motion research and theoretical support for upper-limb mirror rehabilitation21,53,54, motor function assessment42,55,56, upper-limb motion recognition36,57, and other applications. In conclusion, by fusing multiple spaces, this study achieves convenient kinematic analysis and description that better match the physiological characteristics of the human upper limb, and provides theoretical support for the engineering application of upper-limb modelling and motion extraction in various research fields.

Overview of P-BTBS. The study targets of P-BTBS are human upper limbs, especially impaired upper limbs, which are a global problem. The two dark blue triangles indicate the Euclidean space and the triangular primitive space (TPS), respectively. P-BTBS aims to dissect upper-limb motions from the complex composition of the brain, nerves, muscles, and bones. P-BTBS is suitable for various equipment and technologies (e.g. depth cameras and motion capture systems) and can be applied to a wide range of research areas, especially medical robotics.

Results

Sources of inspiration

The observation that defect-free Sierpiński triangles can self-assemble on a silver surface at the microscopic scale58 led us to consider that matter moves with triangular properties. We then asked whether the triangle could serve as a basic motion primitive; this question was the origin of the TPS.

Simplified model of the upper limb

As shown in Fig. 2a, the clavicle, scapula, humerus, forearm, and hand are the main components of the upper limb. The hand is composed of many bones, including five metacarpals. The main joints involved in upper-limb motion are the sternoclavicular joint (SC), elbow joint (EL), acromioclavicular joint (AC), glenohumeral joint (GH), scapulothoracic joint (ST), wrist joint (WR), and the joints of the palm. Both the EL and WR are composite joints.

Overview of P-BTBS application object and method. (a) Four groups of bones and five joints (the scapulothoracic joint was not considered) are involved in this study. Each group of bones is given a distinctive color, and each body segment is drawn in the color of its bone. (b) A spatial triangle in Euclidean space consists of three spatial points and has area S; its projected areas on the X–Y, X–Z, and Y–Z planes are Sm, Sn, and Su, respectively. In the triangular primitive space (TPS), this spatial triangle is represented by the point (Sm, Sn, Su). (c) Coordinates \({}^{\text{W}}P\) in the world coordinate system can be expressed as \({}^{\text{L}}P\) in the local coordinate system through a coordinate transformation (rotation \({}_{\text{L}}^{\text{W}}R\) and translation \(P_{\text{LORG}}\)). Red points indicate actual spatial points on the joints (their locations are listed in Table 1).

To simulate the skeletal motions of the upper limb, the limb is represented by discontinuous line segments, each called a body segment (BS). In this article, the red, green, golden, and dark blue BSs represent the clavicle, humerus, forearm, and metacarpal, respectively. The red, green, and golden BSs have the lengths of the clavicle, humerus, and forearm, respectively, and the dark blue BS has the length of the third metacarpal (the longest metacarpal). The coordinate system was established following the recommendation of the International Society of Biomechanics (ISB)59. Many studies have mechanically simplified the joints of the upper limb; the following simplifications were made in this study:

1. SC is a rotary joint47,60 with two DOFs, rotating around the X- and Y-axes (it is the basal joint of the entire upper limb and serves as a mechanical support).
2. GH is a ball joint47,61,62 with three DOFs, rotating around the X-, Y-, and Z-axes (it has the largest range of motion in the upper limb and greatly extends upper-limb motion).
3. EL is a rotary joint47,62 with one DOF, rotating around the Z-axis (its composite joint structure contributes significantly to the stability of forearm motion).
4. WR is a rotary joint47,63 with two DOFs, rotating around the X- and Z-axes (its composite joint structure contributes significantly to the fine motions and stability of the palm).
5. The clavicle is horizontal (parallel to the X–Z plane) when the arm hangs naturally downward.
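The joint list above implies eight rotational DOFs in total (2 + 3 + 1 + 2), the upper bound the rest of the method works with. The Python sketch below tallies them. The dictionary `SIMPLIFIED_JOINTS` and the labelling order θ1–θ8 (SC first, then GH, EL, WR) are illustrative assumptions, chosen to agree with the angle numbering used later in the experiments (θ1–θ2 clavicle, θ3–θ5 humerus, θ6 forearm, θ7 hand).

```python
# Simplified joint model from the list above: joint -> (moving bone, rotation axes).
SIMPLIFIED_JOINTS = {
    "SC": ("clavicle", ("X", "Y")),
    "GH": ("humerus", ("X", "Y", "Z")),
    "EL": ("forearm", ("Z",)),
    "WR": ("metacarpal", ("X", "Z")),
}


def label_angles(joints):
    """Give every rotational DOF a label theta_1, theta_2, ... in joint order.

    The ordering is an illustrative assumption, not the study's definition.
    """
    labels = {}
    index = 1
    for joint, (bone, axes) in joints.items():
        for axis in axes:
            labels[f"theta_{index}"] = (joint, bone, axis)
            index += 1
    return labels


angles = label_angles(SIMPLIFIED_JOINTS)
print(len(angles))          # 8
print(angles["theta_6"])    # ('EL', 'forearm', 'Z')
```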

The motion of the scapula is special among the limb bones because the joints connected to it move in a fairly complex way (sliding and rotation at the SC; translation and rotation at the AC)64, so it cannot be simplified conventionally. Many muscles attach to the scapula; they maintain the stability of the shoulder joint and even the position of the head and neck, and they provide strength for upper-limb motion. The most prominent role of the scapula is therefore biomechanical49, although it also increases the kinematic flexibility of the upper limb. In addition, the scapula lies under the skin, which makes its motion difficult to observe non-invasively65. Therefore, the visible motion of the scapula was not modeled in this study; instead, upper-limb motion was observed directly with P-BTBS.

Upper-limb modular motion model and coordinate system transformation

The locations of all actual spatial points and bones are presented in Table 1. The lengths and spatial geometric relationships are shown in Fig. 3, and the angle between the clavicle and the frontal plane is 20°66. The geometric features of the virtual spatial points follow from the definition of the spatial triangles, and their coordinates are calculated from the coordinates of the actual spatial points. The spatial coordinates of P1–2 and P5–7 are readily obtained, as shown in Fig. 3. The spatial coordinates of P11 and P12 can be estimated using Eq. (1), which follows from the perpendicularity and length relationships:

where i = 11 or 12, and \(l_{10,13}\) denotes the length of vector \(\overrightarrow{L}_{10,13}\).

Definition of the upper-limb modular motion model and the relationships between geometric features. Black points indicate virtual spatial points (calculated from actual spatial points); points 1, 2, and 3 are fixed, and all remaining points move. Spatial point 3 is the origin of the local coordinate system. (a,b) Definition of the two spatial isosceles triangles of the clavicle, shown in the initial state with the arms hanging naturally. (c,d) Definition of the three spatial isosceles triangles of the humerus, \(l_{5,8} \bot l_{6,8} \bot l_{7,8}\). (e,f) Definition of the spatial triangles of the forearm and the two spatial isosceles triangles of the metacarpal, \(l_{9,10} \bot l_{10,11} \bot l_{10,12}\). The motion information represented by each spatial triangle is listed in Table 2.

The local coordinate system was established using the method suggested by the ISB, with its origin at point 3. As shown in Fig. 2a,c, the local and world coordinate systems are related by a rotation \({}_{\text{L}}^{\text{W}}R\) and a translation \(P_{\text{LORG}}\). The rotation follows the Z-Y-X Euler-angle convention. Because the local origin is point 3, the translation is given by its world coordinates (x3, y3, z3), as expressed in Eq. (2).

The rotation matrix for these Euler angles is given by Eq. (3),

where \(c_x = \cos x\) and \(s_x = \sin x\); α, β, and γ denote the rotation angles around the Z, Y, and X axes, respectively.
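Eq. (3) itself is not reproduced here. Assuming it is the common robotics form of Z-Y-X Euler angles, \(R = R_Z(\alpha)R_Y(\beta)R_X(\gamma)\), the matrix can be written out in the \(c_x\), \(s_x\) shorthand as in this sketch; the function name `rot_zyx` is hypothetical.

```python
import numpy as np


def rot_zyx(alpha, beta, gamma):
    """Z-Y-X Euler rotation R = Rz(alpha) @ Ry(beta) @ Rx(gamma); angles in radians.

    Written in the c_x = cos(x), s_x = sin(x) shorthand used in the text.
    """
    ca, sa = np.cos(alpha), np.sin(alpha)
    cb, sb = np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    return np.array([
        [ca * cb, ca * sb * sg - sa * cg, ca * sb * cg + sa * sg],
        [sa * cb, sa * sb * sg + ca * cg, sa * sb * cg - ca * sg],
        [-sb,     cb * sg,                cb * cg],
    ])


R = rot_zyx(np.radians(88.82), 0.0, np.radians(90.0))
# A proper rotation matrix is orthonormal with determinant +1.
print(np.allclose(R @ R.T, np.eye(3)), np.isclose(np.linalg.det(R), 1.0))  # True True
```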

In common spatial-position acquisition techniques (e.g. motion capture systems and depth cameras), the orientation of the world coordinate system can be adjusted. The singularity of Euler angles can therefore be mitigated by orienting the world coordinate system so that the intermediate axis never rotates by 90°.
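To make the singularity concrete: under the Z-Y-X convention, setting β = 90° makes the rotation matrix depend only on γ − α, so two different angle triples produce the same rotation and one DOF is lost (gimbal lock). The check below illustrates this general Euler-angle property numerically; it is an illustration, not code from the study.

```python
import numpy as np


def rot_zyx(alpha, beta, gamma):
    """R = Rz(alpha) @ Ry(beta) @ Rx(gamma), angles in radians."""
    ca, sa, cb, sb = np.cos(alpha), np.sin(alpha), np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    rz = np.array([[ca, -sa, 0.0], [sa, ca, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cb, 0.0, sb], [0.0, 1.0, 0.0], [-sb, 0.0, cb]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cg, -sg], [0.0, sg, cg]])
    return rz @ ry @ rx


deg = np.radians
# At beta = 90 deg only gamma - alpha matters: (10, 90, 30) and (20, 90, 40) coincide.
locked = np.allclose(rot_zyx(deg(10), deg(90), deg(30)),
                     rot_zyx(deg(20), deg(90), deg(40)))
# Away from 90 deg the same two angle triples give different rotations.
free = np.allclose(rot_zyx(deg(10), deg(0), deg(30)),
                   rot_zyx(deg(20), deg(0), deg(40)))
print(locked, free)  # True False
```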

As shown in Fig. 3a,b, the coordinates of spatial point 4 in the local coordinate system are known and given by Eq. (4),

where \(l_{3,4}\) denotes the length of vector \(\overrightarrow{L}_{3,4}\).

The transformation of point 4 is given by Eq. (5), where \({}^{\text{W}}P_{4}\) denotes the coordinates of point 4 in the world coordinate system, acquired by the motion capture system.

Substituting Eqs. (2), (3), and (4) into Eq. (5) yields equations in α, β, and γ. As shown in Fig. 6c,d, the known world coordinate system gives β = 0° and γ = 90°. The value α = 88.82° was then determined using the Trust-Region-Dogleg algorithm.
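The study solves Eq. (5) numerically with the Trust-Region-Dogleg algorithm. As a cross-check for the special case in which β and γ are already known, α can also be obtained in closed form: removing the translation and the known Y and X rotations leaves a pure rotation about Z, whose angle is a difference of two `atan2` angles. This shortcut is a swapped-in alternative, not the study's solver; it assumes Eq. (5) has the form \({}^{\text{W}}P_{4} = R\,{}^{\text{L}}P_{4} + P_{\text{LORG}}\) and that the rotated point has a non-zero projection on the X–Y plane. The local and world coordinates below are hypothetical placeholders, not values from Eq. (4) or the experiment.

```python
import numpy as np


def rx(g):
    c, s = np.cos(g), np.sin(g)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def ry(b):
    c, s = np.cos(b), np.sin(b)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rz(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def solve_alpha(p4_local, p4_world, p_lorg, beta, gamma):
    """Recover alpha (radians) from W_P4 = Rz(alpha) Ry(beta) Rx(gamma) L_P4 + P_LORG."""
    v = ry(beta) @ rx(gamma) @ p4_local   # point after the known Y and X rotations
    w = p4_world - p_lorg                 # point after removing the translation
    # Rz(alpha) only rotates the XY components, so alpha is their angular offset.
    alpha = np.arctan2(w[1], w[0]) - np.arctan2(v[1], v[0])
    return np.arctan2(np.sin(alpha), np.cos(alpha))  # wrap to (-pi, pi]


# Hypothetical numbers: neither L_P4 nor P_LORG comes from the paper.
p4_local = np.array([-0.15, 0.0, 0.05])
p_lorg = np.array([0.40, 0.10, 1.30])
beta, gamma = 0.0, np.radians(90.0)
p4_world = rz(np.radians(88.82)) @ ry(beta) @ rx(gamma) @ p4_local + p_lorg
print(round(float(np.degrees(solve_alpha(p4_local, p4_world, p_lorg, beta, gamma))), 2))  # 88.82
```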

Given the values of α, β, and γ, the local coordinates of all spatial points can be evaluated using Eq. (6).
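Assuming Eq. (6) is the inverse of the transformation in Eq. (5), i.e. \({}^{\text{L}}P = R^{\mathsf{T}}({}^{\text{W}}P - P_{\text{LORG}})\) (valid because R is orthonormal), all marker coordinates can be mapped into the local frame at once. The helper names and marker coordinates below are hypothetical.

```python
import numpy as np


def rot_zyx(alpha, beta, gamma):
    """R = Rz(alpha) @ Ry(beta) @ Rx(gamma), angles in radians."""
    ca, sa, cb, sb = np.cos(alpha), np.sin(alpha), np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    rz = np.array([[ca, -sa, 0.0], [sa, ca, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cb, 0.0, sb], [0.0, 1.0, 0.0], [-sb, 0.0, cb]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cg, -sg], [0.0, sg, cg]])
    return rz @ ry @ rx


def world_to_local(points_world, R, p_lorg):
    """Map an (N, 3) array of world points into the local frame.

    Inverts W_P = R @ L_P + P_LORG for every row: L_P = R.T @ (W_P - P_LORG).
    """
    return (points_world - p_lorg) @ R   # row-vector form of R.T @ (p - p_lorg)


R = rot_zyx(np.radians(88.82), 0.0, np.radians(90.0))
p_lorg = np.array([0.40, 0.10, 1.30])          # hypothetical world position of point 3
markers = np.array([[0.40, 0.10, 1.30],        # point 3 itself
                    [0.55, 0.12, 1.05]])       # another hypothetical marker
local = world_to_local(markers, R, p_lorg)
print(np.allclose(local[0], 0.0))              # True: point 3 is the local origin
```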

The Euclidean space

The triangular transformation relationships of the simplified upper-limb model are presented in Table 2. Triangles can be selected according to the DOFs required to estimate the motion angles and describe upper-limb motion, which reflects the generality of the model. If i DOFs are required, there are \(C_{8}^{i}\) possible choices.
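For example, the continuous-motion experiment uses seven DOFs, for which \(C_{8}^{7} = 8\) choices exist. The full table of counts can be generated with Python's standard `math.comb`:

```python
from math import comb

# Number of ways to choose i of the 8 rotational DOFs (one spatial triangle per DOF).
choices = {i: comb(8, i) for i in range(1, 9)}
print(choices)                  # {1: 8, 2: 28, 3: 56, 4: 70, 5: 56, 6: 28, 7: 8, 8: 1}
print(sum(choices.values()))    # 255, i.e. 2**8 - 1 non-empty selections
```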

The motion angles of the upper limb were estimated using Eqs. (7), (8), (9), (10) and (11).

As shown in Fig. 3c and d, \(\overrightarrow {L}_{5,8}\),\(\overrightarrow {L}_{6,8}\), and \(\overrightarrow {L}_{8,7}\) are the normal vectors of the X–Z, X–Y, and Y–Z planes, respectively.

The normal vectors of planes ∆5,8,9, ∆6,8,9, and ∆7,8,9 can be expressed using Eq. (12).

The normal vectors \(\vec{\mathcal{A}}\), \(\vec{\mathcal{B}}\), and \(\vec{\mathcal{C}}\) are substituted into Eq. (13) to estimate θ3–5:

Equations (7)–(13) involve only trigonometric functions, which speeds up computation and increases the robustness of P-BTBS.
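Eqs. (12) and (13) are not reproduced here. As a hedged sketch of the underlying geometry, a plane normal can be formed from the cross product of two edge vectors of a triangle, and the unsigned angle between two planes follows from the dot product of their normals. This generic construction is assumed to be close in spirit to Eqs. (12)–(13), not identical to them; in particular, it discards the sign of the angle, which the study may handle differently. Function names are hypothetical.

```python
import numpy as np


def triangle_normal(pa, pb, pc):
    """Normal vector of the plane through three points (right-hand rule a -> b -> c)."""
    pa = np.asarray(pa, dtype=float)
    return np.cross(np.asarray(pb, dtype=float) - pa, np.asarray(pc, dtype=float) - pa)


def plane_angle_deg(n1, n2):
    """Unsigned angle between two planes, in degrees, from their normal vectors."""
    cos_t = abs(np.dot(n1, n2)) / (np.linalg.norm(n1) * np.linalg.norm(n2))
    return float(np.degrees(np.arccos(np.clip(cos_t, -1.0, 1.0))))


origin = np.zeros(3)
flat = triangle_normal(origin, [1.0, 0.0, 0.0], [0.0, 1.0, 0.0])   # the X-Y plane
t = np.radians(30.0)
tilted = triangle_normal(origin, [1.0, 0.0, 0.0], [0.0, np.cos(t), np.sin(t)])
print(round(plane_angle_deg(flat, tilted), 6))  # 30.0
```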

The upper limb was divided into four body segments (clavicle, humerus, forearm, and metacarpal), numbered h = 1–4, with lengths L1, L2, L3, and L4, respectively. Each body segment BS-h (h = 1, 2, 3, 4) is described by k spatial triangles, where k ranges from 1 to 3. A spatial triangle ST(h,k) is characterized by three spatial points (A, B, and C), taken in clockwise order. As shown in Fig. 2b, the area of ST(h,k) is denoted S(h,k), and its projected areas on the X–Y, X–Z, and Y–Z planes, Sm, Sn, and Su, can be calculated using Eq. (14),

where det(STx) denotes the determinant of matrix STx.

Area S(h,k) can be estimated using the projected areas in Eq. (14), as shown in Eq. (15),

where \(\vec{m}\), \(\vec{n}\), and \(\vec{u}\) are unit vectors of the X–Y–Z coordinate system, normal to the X–Y, X–Z, and Y–Z planes, respectively.
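Assuming Eq. (14) uses the usual shoelace determinant, each projected area is half the determinant of a 3 × 3 matrix built from the two in-plane coordinates of A, B, and C plus a column of ones. Reading Eq. (15) as the vector \(S_m\vec{m} + S_n\vec{n} + S_u\vec{u}\), the triangle area is its magnitude \(\sqrt{S_m^2 + S_n^2 + S_u^2}\). The sketch below (function names hypothetical) implements both steps. The signs of Sm, Sn, and Su flip when the vertex order is reversed, which is why a fixed (clockwise) ordering matters.

```python
import numpy as np


def projected_areas(a, b, c):
    """Signed areas (Sm, Sn, Su) of triangle ABC projected on X-Y, X-Z, Y-Z.

    Each is 0.5 * det([[p1, q1, 1], [p2, q2, 1], [p3, q3, 1]]) using the two
    coordinates that span the projection plane.
    """
    pts = np.array([a, b, c], dtype=float)

    def half_det(i, j):
        m = np.column_stack([pts[:, i], pts[:, j], np.ones(3)])
        return 0.5 * np.linalg.det(m)

    return half_det(0, 1), half_det(0, 2), half_det(1, 2)


def triangle_area(a, b, c):
    """|S| = sqrt(Sm^2 + Sn^2 + Su^2)."""
    return float(np.sqrt(sum(s * s for s in projected_areas(a, b, c))))


a, b, c = [0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 1.0]
print(projected_areas(a, b, c))
print(triangle_area(a, b, c))   # approximately sqrt(2) / 2, i.e. 0.5 * |AB x AC|
```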

The area of the h-th BS can be defined as SBS-h, as shown in Eq. (16).

The area of each BS can be estimated using the matrix sum of squares, according to Eq. (16), and matrix Eh was utilized to characterize the BS, as shown in Eq. (17),

where \({}^{\Sigma}P_{x} = x_{x} + y_{x} + z_{x}\); Pi and Pj are the actual spatial points of BS-h; Pd, …, Pf are the virtual spatial points of BS-h; and \(f_{ij}\) are functions of x, y, and z.

Vector \(E_{h}^{\text{P}}\) denotes the third column of matrix Eh, as shown in Eq. (18).

The body segment matrix EO in Euclidean space can be determined using Eq. (19),

where Lh denotes the length of the h-th BS.

Triangular primitive space (TPS)

As shown in Fig. 2b, the basic physical quantities in the coordinate system M–N–U in the TPS are the projected areas of the spatial triangle in the X–Y, X–Z, and Y–Z planes, respectively, as shown in Eq. (20). Therefore, a point PTPS in the TPS is equivalent to a spatial triangle in Euclidean space.

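The metric distance between a point \(P_{TPS}\) (Mi, Ni, Ui) and the origin \(O_{M\text{-}N\text{-}U}\) can be estimated from Eqs. (14) and (20), as shown in Eq. (21); this distance equals the area of the corresponding spatial triangle. Because Eqs. (20) and (21) involve only projected areas, and every point in the TPS has a direct physical meaning (a spatial triangle), the representation has no singularity.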
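A TPS point can also be computed from the cross product of two triangle edges, because the components of \(\tfrac{1}{2}\,\overrightarrow{AB}\times\overrightarrow{AC}\) equal the signed projected areas (with a sign flip for the X–Z plane under the determinant form assumed for Eq. (14)). The sketch below, using hypothetical triangle coordinates, illustrates two consequences. The mapping is a polynomial in the coordinates, so it is defined for every triangle, and a degenerate one maps to the origin. Rotating a triangle changes its TPS coordinates but not its distance to the origin, which stays equal to the triangle area.

```python
import numpy as np


def to_tps(a, b, c):
    """Map triangle ABC to its TPS point (M, N, U) = (Sm, Sn, Su).

    Uses 0.5 * (AB x AC), whose components equal the signed projected areas:
    Sm = 0.5*cz (X-Y), Sn = -0.5*cy (X-Z), Su = 0.5*cx (Y-Z).
    """
    a, b, c = (np.asarray(p, dtype=float) for p in (a, b, c))
    cx, cy, cz = np.cross(b - a, c - a)
    return np.array([0.5 * cz, -0.5 * cy, 0.5 * cx])


tri = [np.array([0.0, 0.0, 0.0]), np.array([0.3, 0.0, 0.1]), np.array([0.0, 0.2, 0.4])]
# Rotate the whole triangle 40 degrees about Z: its TPS coordinates change ...
t = np.radians(40.0)
rz = np.array([[np.cos(t), -np.sin(t), 0.0], [np.sin(t), np.cos(t), 0.0], [0.0, 0.0, 1.0]])
p1, p2 = to_tps(*tri), to_tps(*(rz @ p for p in tri))
# ... but its distance to the TPS origin (the triangle area) does not.
print(np.allclose(p1, p2), np.isclose(np.linalg.norm(p1), np.linalg.norm(p2)))  # False True
# A degenerate (collinear) triangle maps to the TPS origin.
print(np.allclose(to_tps([0, 0, 0], [1, 1, 1], [2, 2, 2]), 0.0))  # True
```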

In the TPS, a BS is expressed as three points, which gives four cases, as shown in Fig. 4. Each case has three points, (Mi, Ni, Ui), (Mj, Nj, Uj), and (Mk, Nk, Uk); case 4 can be regarded as three coincident points located at the origin.

A plane \(S_{TPS}\) in the TPS can be represented by a matrix \(S_{TPS}^{h}\) built from three points, as shown in Eq. (22), and \(\det(S_{TPS}^{h}) = 0\) is the equation of this plane,

where h = 1, 2, 3, 4.
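Assuming Eq. (22) takes the standard form, the plane through three points is the zero set of a 4 × 4 determinant with rows \((M, N, U, 1)\), \((M_i, N_i, U_i, 1)\), \((M_j, N_j, U_j, 1)\), and \((M_k, N_k, U_k, 1)\). Membership of a TPS point can then be tested as in the sketch below; the TPS points and the function name are hypothetical. In case 4 (three coincident points at the origin), the three lower rows are identical, so the determinant vanishes for every point and no unique plane is defined.

```python
import numpy as np


def plane_det(p, pi, pj, pk):
    """det([[M, N, U, 1], [Mi, Ni, Ui, 1], [Mj, Nj, Uj, 1], [Mk, Nk, Uk, 1]])."""
    rows = [np.append(np.asarray(q, dtype=float), 1.0) for q in (p, pi, pj, pk)]
    return float(np.linalg.det(np.array(rows)))


# Hypothetical TPS points of one body segment (areas in arbitrary units).
pi, pj, pk = [1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]
on_plane = [0.5, 1.0, 0.0]      # midpoint of pi and pj, so it lies on the plane
off_plane = [1.0, 1.0, 1.0]
print(abs(plane_det(on_plane, pi, pj, pk)) < 1e-12,
      abs(plane_det(off_plane, pi, pj, pk)) > 1e-6)  # True True
```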

The body segment matrix ET in the TPS can be determined using the planes of the four body segments, as shown in Eq. (23).

Action representation and inverse solving

The matrices representing the holistic motion of the upper limb, named the upper-limb body segment matrices, are obtained in Euclidean space and in the TPS as Eqs. (19) and (23), respectively.

In scientific research and engineering applications, attention has been paid to investigating the upper-limb motion of different bones with diverse rotational DOFs according to various research scenarios and engineering needs. P-BTBS was used to build spatial triangles to estimate the rotation DOFs of bones of interest. A spatial triangle represents the spatial state of the corresponding bone, as shown in Fig. 5.

Diagram of various upper-limb motions and the corresponding spatial triangles. Spatial triangles can be selected arbitrarily according to the required DOFs. (a) Only one DOF of the hand is of interest. (b) One DOF each of the clavicle and humerus. (c) One DOF of the clavicle and two DOFs of the humerus. (d) Two DOFs of the clavicle, one DOF of the forearm, and one DOF of the palm.

The coordinates of the points in Fig. 4 were first determined by solving matrices ET and \(S_{TPS}^{h}\), then substituted into Eq. (21) to estimate the area, |S(h,k)|, of the corresponding spatial triangle. Obtaining Lh using the matrix EO is straightforward.

Lh and |S(h,k)| are substituted into Eq. (24) to estimate the motion angles of BS-1-Clavicle, BS-3-Forearm, and BS-4-Metacarpal.

θ3-5 for BS-2-Humerus can be estimated by solving Eq. (25), which was optimized using Eq. (13),

where \(\psi_{1} = x_{8} - x_{9}\), \(\psi_{2} = y_{8} - y_{9}\), and \(\psi_{3} = z_{8} - z_{9}\).

The TPS coordinates of BS-2-Humerus can be calculated from ET with h = 2, i = 3, j = 4, and k = 5 by solving Eq. (22). These coordinates and L2 are then substituted into Eq. (26) to estimate ψ1–3.

The motion angles θ3–5 can finally be estimated by substituting Eq. (26) into Eq. (25).

Simplified model of humeral motion

Humeral motions can be divided into two categories when the initial posture of the arms is in a natural downward state. One category is the combined motion of the X and Y axes, where the X–Y plane is parallel to the sagittal plane, as shown in Fig. 6h. When the initial motion of the humerus tends to move away from the sagittal plane, humerus posture can be decomposed using rotational DOFs around the X and Y axes. This decomposition results from the fact that two DOFs in the X and Y axes can determine any position of the humeral end in front of the sagittal plane.

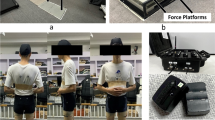

Experimental overview and simplified model of humeral motion. Motion capture camera (a) height and (b) layout. (c) Position and (d) orientation of the world coordinate system. The red, green, and blue axes are the X, Y, and Z axes, respectively. The X–Y plane is parallel to the ground, and the local coordinate system is rotated by 0° and 90° around the Y and X axes of the world coordinate system, respectively. (e–g) The six labelled points are required by P-BTBS; the remaining points are needed by the motion capture system to calculate the exact values. The red triangle symbols show the placement of the triangular rulers. (h,i) The sagittal plane is parallel to the X–Y plane, and the frontal plane is parallel to the Y–Z plane. The origin of the coordinate system is located at spatial point 3.

The other is the combined motion of the Y and Z axes, and the Y–Z plane is parallel to the frontal plane, as shown in Fig. 6i. When the initial motion of the humerus moves away from the frontal plane, humerus posture can be decomposed using rotational DOFs around the Y and Z axes. This decomposition results from the fact that two DOFs in the Y and Z axes can determine any position of the humeral end in front of the coronal plane.

Determining the motion tendency of the humerus during the initial stages is important. Moving from the initial state, the motion category can be determined by comparing the magnitudes of θ4 and θ5. For θ4 < θ5, the motion belongs to the first category. Otherwise, motion belongs to the second category.
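The rule above can be written as a small classifier. The sketch compares the magnitudes (absolute values) of θ4 and θ5, as the text describes, at the first frame where either angle has moved away from its starting value. The onset threshold `threshold_deg` and both function names are hypothetical choices, not parameters from the study.

```python
def humeral_category(theta4, theta5):
    """Category 1 if |theta4| < |theta5|, otherwise category 2 (rule from the text)."""
    return 1 if abs(theta4) < abs(theta5) else 2


def category_from_onset(theta4_series, theta5_series, threshold_deg=5.0):
    """Classify at the first frame where either angle has changed by threshold_deg.

    threshold_deg is a hypothetical onset criterion, not taken from the study.
    Returns None if the humerus never leaves its initial state.
    """
    t4_start, t5_start = theta4_series[0], theta5_series[0]
    for t4, t5 in zip(theta4_series, theta5_series):
        if abs(t4 - t4_start) >= threshold_deg or abs(t5 - t5_start) >= threshold_deg:
            return humeral_category(t4, t5)
    return None


print(category_from_onset([0.0, 2.0, 8.0], [0.0, 1.0, 3.0]))   # 2
```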

Joint angle estimation during continuous motion

For healthy individuals, continuous motion is one of the most important motions in daily life. Continuous motion is a crucial rehabilitation aim for people undergoing upper-limb motion recovery.

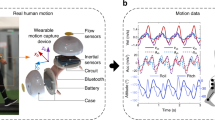

To verify the performance of P-BTBS in solving joint angles during continuous upper-limb motion, motion capture experiments involving seven upper-limb DOFs were performed. As illustrated in Fig. 6a,b, eight motion capture cameras were evenly distributed around a circle with a circumference of approximately 3.8 m, mounted at a height of approximately 2 m, and recorded at 60 Hz. The participants stood at the center of the circle. The experimental procedure is shown in Supplementary Video 1.

The participants were in good physical condition, had suffered no upper-limb injury within the previous month, and had performed no strenuous exercise within the previous week. Before the experiment, the skin near each marker point was cleaned with medical alcohol. Black bandages were then wrapped around key positions to minimize the effect of skin motion, as shown in Fig. 6e–g, and a black bandage was also wrapped around the abdomen to reduce the influence of the vertical motion of abdominal fat. The inclination angle was approximately 30°. Because a motion capture system tracks rigid bodies with the highest accuracy, several triangular rulers were attached to the body as rigid bodies to obtain the exact values of the joint angles.

P-BTBS is a general method for up to eight DOFs. To demonstrate its generality and its advantage in handling highly redundant upper-limb motion, seven DOFs of the upper limb were chosen as the experimental target. Six upper-limb spatial points (M3, M4, M8, M9, M10, and M13) were selected, as shown in Fig. 6e–g, and seven spatial triangles were built, as shown in Fig. 5. The experimental motions are shown in Fig. 7a. The initial state was not the natural downward posture, so that the upper limb would not obscure the side marker points on the abdomen. According to the simplified model of humeral motion, the experimental motions belong to the second category.

Upper-limb motion information extraction during continuous motion. The calculated and exact values are the results of P-BTBS and the motion capture system, respectively. Positive and negative differences (exact value minus calculated value) are shaded light blue and light orange, respectively. The total error for each group of results is expressed as the root mean squared error (RMSE). (a) Five consecutive upper-limb motions, repeated twice. The initial state is not the natural downward posture, so that the upper limb does not obscure the marker points at the start. Action 1: forward lift of the humerus. Action 2: elbow flexion. Action 3: rotation of the humerus around the Y axis. Action 4: inward wrist flexion, followed by a return to the initial position before the next motion. (b,c) The two rotation angles of the clavicle. (d,e) The two rotation angles of the humerus; according to the simplified model of humeral motion, θ5 does not contribute to motions in front of the frontal plane, so it was neither calculated nor measured. (f,g) The rotation angles of the forearm and palm.

Starting from the initial state, the humerus was lifted forward (motion 1), the elbow joint was bent (motion 2), the humerus was rotated around the Y-axis (motion 3), and the wrist joint was bent inward (motion 4). The limb then returned to the initial state and the next cycle began. Two identical sets of experimental motions were performed.

The coordinates of the six marker points were obtained from the motion capture system, and the raw data were smoothed using a sliding average filter. The calculated values were then estimated using P-BTBS. The exact values were acquired from the motion capture system and also smoothed using a sliding average filter. Because the motion capture system and P-BTBS define the positive direction of rotation differently, their values can have opposite signs; the exact values were therefore negated. According to the simplified model of humeral motion, θ5 of the humerus is not significant for second-category motions and does not help determine humeral posture. The angular variation of the remaining six DOFs is shown in Fig. 7b–g.
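A centered sliding (moving) average is one common way to implement the smoothing step, and the sign convention can be reconciled by negating the reference angles. The window length below is a hypothetical choice, since the study does not report the window it used; near the ends of the record the window simply shrinks. For marker coordinates, the same filter would be applied to each coordinate column.

```python
import numpy as np


def sliding_average(x, window=5):
    """Centered moving average; the window shrinks near the ends of the record.

    window is a hypothetical choice, not a value reported in the study.
    """
    x = np.asarray(x, dtype=float)
    half = window // 2
    out = np.empty_like(x)
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out[i] = x[lo:hi].mean()
    return out


raw_reference = np.array([0.0, 0.0, 9.0, 0.0, 0.0])   # placeholder mocap angle (deg)
smoothed = sliding_average(raw_reference, window=3)      # [0, 3, 3, 3, 0]
exact = -smoothed    # negate because the two systems use opposite rotation senses
```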

Root mean square error (RMSE), which is widely used in engineering measurement, was used to evaluate errors in this study. RMSE is sensitive to large errors and measures the deviation between the measured and true values, thus reflecting the accuracy of the measurement method.
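RMSE is the square root of the mean squared difference between calculated and exact values. The short sketch below (function names hypothetical) also shows why RMSE is sensitive to large errors: two error sequences with the same mean absolute error (MAE) can have very different RMSEs.

```python
import numpy as np


def rmse(calculated, exact):
    """Root mean squared error between two equal-length angle sequences."""
    diff = np.asarray(calculated, dtype=float) - np.asarray(exact, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def mae(calculated, exact):
    """Mean absolute error, shown only for comparison with RMSE."""
    diff = np.asarray(calculated, dtype=float) - np.asarray(exact, dtype=float)
    return float(np.mean(np.abs(diff)))


exact = np.zeros(4)
spread = np.array([1.0, 1.0, 1.0, 1.0])     # four small errors
spike = np.array([0.0, 0.0, 0.0, 4.0])      # one large error
print(mae(spread, exact), mae(spike, exact))    # 1.0 1.0
print(rmse(spread, exact), rmse(spike, exact))  # 1.0 2.0
```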

The experimental motions were executed twice, so all data contain two cycles. Because the shoulder complex moved passively throughout the experiment, the two rotation angles of the clavicle changed little. The motion angle θ1 of the clavicle ranged from 0.8733° to 8.9923°, with an RMSE of 1.3877°. Because the experimental motions included a forward arm extension, θ2 of the clavicle varied more than θ1, ranging from −12.5627° to 1.4329° with an RMSE of 1.0119°. The motion angle θ3 of the humerus ranged from 1.9542° to 38.7424°, with an RMSE of 2.0933°. Because the humerus was already lifted forward in the initial state, its motion angle θ4 ranged from 36.2078° to 85.1273°, with an RMSE of 2.2824°. The motion angle θ6 of the forearm ranged from 2.5508° to 89.7897°, with an RMSE of 0.9814°. The motion angle θ7 of the hand ranged from −51.3814° to 7.6649°, with an RMSE of 1.8875°.

With the exact values from the motion capture system as the gold standard, the mean RMSE of the P-BTBS results was 1.6074°. Moreover, compared with the traditional vector method, P-BTBS estimates three to eight motion angles with a single coordinate transformation, which reduces the errors accumulated over multiple transformations.
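As an arithmetic check, the mean RMSE follows directly from the six per-angle RMSE values reported above (θ1, θ2, θ3, θ4, θ6, and θ7):

```python
from statistics import mean

# Per-angle RMSE values (degrees) reported in the text.
rmse_deg = {"theta1": 1.3877, "theta2": 1.0119, "theta3": 2.0933,
            "theta4": 2.2824, "theta6": 0.9814, "theta7": 1.8875}
print(round(mean(rmse_deg.values()), 4))   # 1.6074
```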

More importantly, at most six spatial points are required to estimate the eight motion angles of the upper limb. The locations of these six points coincide with those identified by Kinect's human skeleton recognition technology33, which facilitates the application of P-BTBS in most scenarios that involve estimating spatial coordinates.

Description of upper-limb motions

Upper-limb motions are complex, unordered, and subconscious. Explaining subconscious, brain-driven actions such as picking up objects from a table is challenging. P-BTBS describes upper-limb motions mathematically, which provides a way to observe them. P-BTBS constructs a mathematical framework for multi-space fusion and derives models (Eqs. (19) and (23)) for describing upper-limb motions in Euclidean space (EO) and the TPS (ET), respectively. This framework not only enriches the mathematical motion information but, more importantly, reflects real upper-limb motions, which makes it possible to describe these complex motions accurately.

In the motion description experiments, the participants and the experimental field layout were the same as in the joint angle-estimation experiments. Four motions with the same wiggling amplitude were performed, as shown in Fig. 8a,b; the procedure is shown in Supplementary Video 2. Static postures were not chosen because results for a few fixed postures could be coincidental and would not sufficiently demonstrate the ability of P-BTBS to describe upper-limb motions. Dynamic wiggling motions better match real-world upper-limb motions, which are inherently dynamic. The four sets of motions were designed to cover the range of everyday upper-limb motions (in front of and beside the body).

Experiment on upper-limb motion description. (a,b) The four groups of motions. Each group is performed with the same wiggling amplitude. (c) The 2-Norm of the ET matrix obtained in TPS, calculated for each frame. Each group of values varies cyclically around its mean, and the mean values differ visibly between groups. Only one in every ten data points is displayed. (d) The 2-Norm and the mean of the eigenvalues of the EO matrix in Euclidean space, calculated for each frame to form a 2D planar map. Points representing different motions appear in different regions of the plane. Only one in every twenty data points is displayed. (e) Principal component analysis (PCA) and dimension reduction of the three-dimensional features of each frame, namely the 2-Norm of EO, the mean of the eigenvalues of EO, and the 2-Norm of ET. Only one in every twenty data points is displayed.

EO and ET were calculated for each frame in Euclidean space and TPS, respectively, whereas the features were analyzed only in Euclidean space. Because the independent variables in ET (i.e., M, N, and U) have physical significance only in TPS, EO and ET are treated in Euclidean space as numerical matrices of dimensions 4 × 4 and 6 × 6, respectively.

When the one-dimensional feature of P-BTBS was used to describe the motions, the 2-Norm of ET was calculated. The variation of the 2-Norm for the four motions is shown in Fig. 8c. For each motion, the 2-Norm of ET varies cyclically around its mean value.
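The following minimal sketch shows how the one-dimensional feature could be computed. It assumes that "2-Norm" denotes the induced matrix 2-norm, which is the largest singular value, and that the per-frame ET matrices are already stacked in a NumPy array. The helper names are hypothetical. The stride of 10 only reproduces the plotting thinning described for Fig. 8c.

```python
import numpy as np

def frame_two_norms(matrices):
    """Induced 2-norm (largest singular value) of each per-frame matrix.

    matrices: array of shape (n_frames, k, k), e.g. k = 6 for ET.
    """
    mats = np.asarray(matrices, dtype=float)
    return np.array([np.linalg.norm(m, ord=2) for m in mats])

def summarize(norms, stride=10):
    """Mean of the series, deviations around that mean, and a thinned copy for plotting."""
    norms = np.asarray(norms, dtype=float)
    mean = norms.mean()
    return mean, norms - mean, norms[::stride]
```

The deviations returned by `summarize` are what would oscillate around zero for a cyclic wiggling motion. The means are what separate the four motions.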

When the two-dimensional features of P-BTBS were used to describe the motions, the 2-Norm and the mean of the eigenvalues of EO were calculated. Each EO has four eigenvalues, which can be complex. Because EO is a real matrix, its complex eigenvalues occur in conjugate pairs whose imaginary parts cancel, so the mean of the four eigenvalues is always real (it equals trace(EO)/4). The mean of the eigenvalues and the 2-Norm of EO were used as the X and Y axes, respectively, as shown in Fig. 8d. The frames of each wiggling motion cluster within one region of the plane.
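The claim that the eigenvalue mean is always real can be checked numerically. The sketch below assumes that EO is a real 4 × 4 array, and the helper names are hypothetical. For each frame, it returns the two coordinates plotted in Fig. 8d.

```python
import numpy as np

def eo_features(eo):
    """Return (mean of eigenvalues, 2-norm) for one real 4 x 4 EO matrix.

    Complex eigenvalues of a real matrix come in conjugate pairs, so the
    imaginary parts cancel and the mean equals trace(EO) / 4.
    """
    eo = np.asarray(eo, dtype=float)
    eig_mean = np.mean(np.linalg.eigvals(eo))
    # Any remaining imaginary part is floating-point round-off only.
    return float(eig_mean.real), float(np.linalg.norm(eo, ord=2))

def eo_feature_map(eo_frames):
    """Stack per-frame (x, y) = (eigenvalue mean, 2-norm) points for a 2D map."""
    return np.array([eo_features(m) for m in eo_frames])
```

Because the mean equals trace(EO)/4, computing the trace directly would give the same X coordinate more cheaply. The eigenvalue form is kept here only to mirror the text.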

To verify the accuracy of EO and ET in describing upper-limb motions, a dimension-reduction analysis of three-dimensional (3D) features was performed. For each frame, the 2-Norm of EO, the mean of the eigenvalues of EO, and the 2-Norm of ET form a 3D feature. Principal components (PC) 1 and 2 are plotted on the X and Y axes of the principal component analysis (PCA) diagram, respectively, and account for 93% and 5.3% of the variance, as shown in Fig. 8e.
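A minimal PCA sketch via singular value decomposition follows. It projects the per-frame 3D features onto the first two principal components and reports each component's share of the variance. The columns are only mean-centred here. The text does not say whether the original analysis also standardized the features, so that choice is left to the caller. The function name is hypothetical.

```python
import numpy as np

def pca_2d(features):
    """Project per-frame 3D features onto their first two principal components.

    features: array (n_frames, 3), e.g. columns = 2-Norm of EO,
    eigenvalue mean of EO, 2-Norm of ET (standardize beforehand if desired).
    Returns the (n_frames, 2) scores and the explained-variance ratio of every PC.
    """
    X = np.asarray(features, dtype=float)
    Xc = X - X.mean(axis=0)
    # Rows of Vt are the principal axes; squared singular values are
    # proportional to the variance along each axis (sorted descending).
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    scores = Xc @ Vt[:2].T
    return scores, explained
```

With this convention, `explained[0]` and `explained[1]` play the role of the PC1 and PC2 percentages quoted for Fig. 8e.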

Each point in the PCA diagram corresponds to one motion frame, and each of the four wiggling motions has its own 95% confidence ellipse. The confidence ellipses of motions 3 and 4 are close but do not intersect. This is because motions 3 and 4 are similar yet fundamentally different (the relative posture of the humerus and forearm is fixed, and only the humerus rotates around the Y-axis), which reflects both the differences and the similarities between upper-limb motions.
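The sketch below shows one common way to construct a 95% ellipse for a cluster of PCA scores and to test whether points fall inside it. It assumes the ellipse is the covariance ellipse of a bivariate normal fitted to each motion's points, which is the usual convention in PCA score plots. The text does not state which construction was used, and the helper names are hypothetical. For 2 degrees of freedom, the chi-square quantile has the closed form -2 ln(1 - level), so SciPy is not needed.

```python
import numpy as np

def confidence_ellipse(points, level=0.95):
    """Covariance ellipse of 2D points at the given coverage level.

    Returns the centre, the (major, minor) semi-axis lengths, and the
    major-axis angle in radians.
    """
    P = np.asarray(points, dtype=float)
    centre = P.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(P, rowvar=False))  # ascending order
    k2 = -2.0 * np.log(1.0 - level)        # chi-square quantile, 2 dof
    minor, major = np.sqrt(k2 * evals)
    angle = np.arctan2(evecs[1, 1], evecs[0, 1])  # direction of the largest eigenvector
    return centre, (major, minor), angle

def within_ellipse(points, ref_points, level=0.95):
    """Boolean mask: which points lie inside the ellipse fitted to ref_points."""
    ref = np.asarray(ref_points, dtype=float)
    d = np.asarray(points, dtype=float) - ref.mean(axis=0)
    inv_cov = np.linalg.inv(np.cov(ref, rowvar=False))
    m2 = np.einsum("ij,jk,ik->i", d, inv_cov, d)  # squared Mahalanobis distance
    return m2 <= -2.0 * np.log(1.0 - level)
```

Applying `within_ellipse` to motion 3's points with motion 4's points as the reference, and the reverse, gives a simple numerical check of the separation described for motions 3 and 4.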

In conclusion, P-BTBS can classify and cluster upper-limb motions using one-dimensional features (Fig. 8c), two-dimensional features (Fig. 8d), and 3D dimension-reduced features (Fig. 8e), which demonstrates its effectiveness in describing upper-limb motions.

Discussion

The above modeling process and experimental results demonstrate that the proposed P-BTBS conforms to the physiology66 of human upper limbs, achieves high accuracy in motion angles (mean RMSE of 1.6074°), and describes upper-limb motions with high accuracy. Defining generic upper-limb spatial points extends the application scenarios of P-BTBS and satisfies the generic nature of equipment and technology. Constructing the multi-space-fusion framework makes the upper-limb motion model modular, so that the motion angles and bones studied (1–8 DOFs) can be selected according to the research topic, which satisfies the generic nature of study targets. Moreover, the upper limb is not a continuous kinematic chain because of the scapula, and P-BTBS can handle this type of issue. In practical applications, the single coordinate-system transformation in P-BTBS eliminates the need to define multiple coordinate systems (compared with the Denavit-Hartenberg model), reduces the impact of cumulative errors on the results (compared with the spatial vector method), simplifies the application process, and extends the range of applicability.

P-BTBS provides a new perspective and tool for understanding and exploring upper-limb motions, which can be used in a wide range of fields, in particular the following three:

1. Upper-limb rehabilitation8,9,10. P-BTBS can provide rehabilitation robots with information on patients’ motions (for example, in mirror rehabilitation)21,54 and on physician guidance (such as in passive intervention rehabilitation). This information organically integrates the upper-limb rehabilitation robot with the patient's autonomous consciousness, which can improve rehabilitation efficacy and ease the tension between patient demand and medical rehabilitation resources. Among existing methods, motion capture systems cannot be widely deployed, and multi-camera data fusion, inertial sensors, and wearable devices capture only a limited number of upper-limb DOFs (fewer than 6).

2. Assessment of upper-limb motor function55,56. P-BTBS can extract upper-limb motion angles and analyze the accuracy of reaching motions, so it can be used to assess upper-limb motor function. Timely identification of the rehabilitation stage is essential for patients with upper-limb motion disorders due to stroke67. Using P-BTBS, physicians can accurately determine patients’ rehabilitation stages and develop timely rehabilitation strategies. Among existing methods, the assessment of upper-limb motor function lacks diversity: either only upper-limb motion angles or only reaching ability can be assessed.

3. Motion recognition and classification. Motion recognition and classification have long been important research directions68,69. Making a computer “see” or “sense” upper-limb motions is an important part of program execution and function implementation. P-BTBS provides a new mathematical tool that lets computers observe human upper-limb motions without training neural networks on large numbers of samples. Existing methods typically train neural network models on images, depth images, biosignals, etc., which is a complicated process with many interfering factors and requires abundant samples.

Upper-limb motions involve both rotation of the joints and a small sliding motion of the plane joints (such as the acromioclavicular joint). The scapula maintains biomechanical stability and provides the drive. However, P-BTBS considers neither the small sliding motion nor the scapula. The sliding motion was omitted because, although it can improve upper-limb flexibility to some extent66, there is no clear evidence that it has a decisive effect on upper-limb motions. The scapula is enveloped by skin and muscle tissue, which makes it difficult to observe with non-invasive methods; thus, it was simplified as a passively moving bone in P-BTBS. In addition, P-BTBS analyzes a maximum of 8 DOFs, which is a potential limitation in application scenarios that require more than 8 DOFs.

P-BTBS provides a convenient solution for analyzing and extracting human upper-limb motion information that can be used with most equipment for estimating spatial coordinates. The extraction and description of upper-limb motion information can provide theoretical references for practical engineering applications. Moreover, when combined with Internet of Things technology, P-BTBS has promising applications in areas such as upper-limb rehabilitation, motor function assessment, computerized recognition and classification, behavioral monitoring, senior care, human–robot interaction, and robot control strategies.

Future work will focus on promoting engineering applications and experimental exploration of P-BTBS. P-BTBS can be integrated into upper-limb rehabilitation robots for post-stroke upper-limb rehabilitation, which can improve human–machine interaction, enrich rehabilitation modalities, and increase rehabilitation efficacy (Supplementary Video 1).

Methods

Data acquisition and analytics

Eight optical motion capture cameras covered the entire experimental field, with the frame rate set to 60 Hz. The data were collected and processed offline on a host computer. All raw data were smoothed with a sliding (moving) average filter with a filter coefficient of 25.
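The following minimal moving-average sketch shows how the smoothing step could be applied to each marker coordinate trace. It assumes that the "filter coefficient of 25" is the window length in samples, which would span about 0.42 s at 60 Hz. The text does not confirm this, nor does it describe how the ends of each trace were handled. The function name is hypothetical.

```python
import numpy as np

def moving_average(signal, window=25):
    """Centred moving-average filter applied to each column of `signal`.

    Assumption: window = 25 samples. Near the ends, each output averages
    only the samples that exist, so the output keeps the input length.
    """
    x = np.asarray(signal, dtype=float)
    one_d = x.ndim == 1
    if one_d:
        x = x[:, None]                      # treat a 1D trace as one column
    if x.shape[0] < window:
        raise ValueError("signal must be at least as long as the window")
    kernel = np.ones(window)
    # Window sums per column; mode="same" keeps an odd-length window centred.
    sums = np.column_stack([np.convolve(col, kernel, mode="same") for col in x.T])
    # Number of real samples inside each window (smaller near the edges).
    counts = np.convolve(np.ones(x.shape[0]), kernel, mode="same")[:, None]
    y = sums / counts
    return y[:, 0] if one_d else y
```

For a marker trajectory stored as an (n_frames, 3) array, `moving_average(xyz)` smooths x, y, and z independently. An odd window keeps the filter centred, so it introduces no time lag relative to the motion-capture frames.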

The virtual spatial points P11 and P12 are at singular positions when line segment l9,10 is perpendicular to the horizontal plane (ground), which results in multiple sets of solutions to Eq. (1). In the singular position, the following equation holds, and the coordinates of P11 and P12 are selected as (x10, y10, z10 + L10,13) and (x10 + L10,13, y10, z10), respectively:

Ethics approval and informed consent

All experimental protocols and methods were approved by the Medical Ethics Committee of Harbin Institute of Technology (Ethics No. HIT-2022032) and carried out in accordance with relevant guidelines and regulations. Informed consent was obtained from all subjects and/or their legal guardian(s), including consent for the publication of identifying information/images in an online open-access publication.

Data availability

The data supporting the findings of this study are available from the corresponding author upon reasonable request.

Code availability

Code that supports the findings of this study is available from the corresponding author upon reasonable request.

References

Dupont, P. E. et al. A decade retrospective of medical robotics research from 2010 to 2020. Sci. Robot. 6(60), eabi8017 (2021).

World Health Organization. Disability and health. www.who.int/news-room/fact-sheets/detail/disability-and-health (2022).

Kwakkel, G. et al. Probability of regaining dexterity in the flaccid upper limb: Impact of severity of paresis and time since onset in acute stroke. Stroke 34(9), 2181–2186 (2003).

Ebina, T. et al. Two-photon imaging of neuronal activity in motor cortex of marmosets during upper-limb movement tasks. Nat. Commun. 9(1), 1879 (2018).

Greiner, N. et al. Recruitment of upper-limb motoneurons with epidural electrical stimulation of the cervical spinal cord. Nat. Commun. 12(1), 435 (2021).

Kim, J. et al. Clinical efficacy of upper limb robotic therapy in people with tetraplegia: A pilot randomized controlled trial. Spinal Cord 57(1), 49–57 (2019).

Kolb, B., Gibb, R. & Robinson, T. E. Brain plasticity and behavior. Curr. Dir. Psychol. Sci. 12(1), 1–5 (2003).

Qassim, H. M. & Wan Hasan, W. Z. A review on upper limb rehabilitation robots. Appl. Sci. 10(19), 6976 (2020).

Chiappalone, M. & Semprini, M. Using robots to advance clinical translation in neurorehabilitation. Sci. Robot. 7(64), eabo1966 (2022).

Gupta, A., Singh, A., Verma, V., Mondal, A. K. & Gupta, M. K. Developments and clinical evaluations of robotic exoskeleton technology for human upper-limb rehabilitation. Adv. Robot. 34(15), 1023–1040 (2020).

Gull, M. A., Bai, S. & Bak, T. A. Review on design of upper limb exoskeletons. Robotics 9(1), 16 (2020).

Lee, S. H. et al. Comparisons between end-effector and exoskeleton rehabilitation robots regarding upper extremity function among chronic stroke patients with moderate-to-severe upper limb impairment. Sci. Rep. 10(1), 1–8 (2020).

Basteris, A. et al. Training modalities in robot-mediated upper limb rehabilitation in stroke: A framework for classification based on a systematic review. J. Neuroeng. Rehabil. 11, 1–15 (2014).

Molteni, F., Gasperini, G., Cannaviello, G. & Guanziroli, E. Exoskeleton and end-effector robots for upper and lower limbs rehabilitation: Narrative review. PM&R 10(9), S174–S188 (2018).

Cramer, S. C. et al. Harnessing neuroplasticity for clinical applications. Brain 134(6), 1591–1609 (2011).

Marins, T. & Tovar-Moll, F. Using neurofeedback to induce and explore brain plasticity. Trends Neurosci. 45, 415–416 (2022).

Kolb, B. & Gibb, R. Searching for the principles of brain plasticity and behavior. Cortex 58, 251–260 (2014).

Buccino, G. Action observation treatment: A novel tool in neurorehabilitation. Philos. Trans. R. Soc. Lond. B Biol. Sci. 369(1644), 20130185 (2014).

Calvo-Merino, B., Glaser, D. E., Grezes, J., Passingham, R. E. & Haggard, P. Action observation and acquired motor skills: An FMRI study with expert dancers. Cereb. Cortex 15(8), 1243–1249 (2005).

Borges, L. R. D. M. et al. Action observation for upper limb rehabilitation after stroke. Cochrane Database Syst. Rev. https://doi.org/10.1002/14651858.CD011887.pub3 (2022).

Bai, Z., Zhang, J., Zhang, Z., Shu, T. & Niu, W. Comparison between movement-based and task-based mirror therapies on improving upper limb functions in patients with stroke: A pilot randomized controlled trial. Front. Neurol. 10, 288 (2019).

Migueles, J. H. et al. Accelerometer data collection and processing criteria to assess physical activity and other outcomes: A systematic review and practical considerations. Sports Med. 47, 1821–1845 (2017).

Bortolini, M., Gamberi, M., Pilati, F. & Regattieri, A. Automatic assessment of the ergonomic risk for manual manufacturing and assembly activities through optical motion capture technology. Procedia CIRP 72, 81–86 (2018).

Tolgyessy, M., Dekan, M., Chovanec, L. & Hubinsky, P. Evaluation of the azure kinect and its comparison to kinect V1 and kinect V2. Sensors 21(2), 413 (2021).

Çubukçu, B., Yüzgeç, U., Zileli, A. & Zileli, R. Kinect-based integrated physiotherapy mentor application for shoulder damage. Fut. Gener. Comput. Syst. 122, 105–116 (2021).

Zhao, K. et al. Upper extremity kinematic parameters: reference ranges based on kinect V2. In: 2021 27th International Conference on Mechatronics and Machine Vision in Practice (M2VIP), IEEE, 30–35 (2021).

Sinha, S., Bhowmick, B., Chakravarty, K., Sinha, A. & Das, A. Accurate upper body rehabilitation system using kinect. In: 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, 4605–4609 (2016).

Neto, J. S. D. C. et al. Dynamic evaluation and treatment of the movement amplitude using kinect sensor. IEEE Access. 6, 17292–17305 (2018).

Kösesoy, İ, Öz, C., Aslan, F., Köroğlu, F. & Yığılıtaş, M. Reliability and validity of an innovative method of ROM measurement using Microsoft Kinect V2. Pamukkale Univ. J. Eng. Sci. 24(5), 915–920 (2018).

Lee, S. H. et al. Measurement of shoulder range of motion in patients with adhesive capsulitis using a kinect. PLoS ONE 10(6), e0129398 (2015).

Ben Abdallah, I., Bouteraa, Y. & Rekik, C. Kinect-based sliding mode control for lynxmotion robotic arm. Adv. Hum.-Comput. Interact. https://doi.org/10.1155/2016/7921295 (2016).

Moon, S., Park, Y., Ko, D. W. & Suh, I. H. Multiple kinect sensor fusion for human skeleton tracking using kalman filtering. Int. J. Adv. Robot. Syst. 13(2), 65 (2016).

Rashid, F., Suriani, N. S. & Nazari, A. Kinect-based physiotherapy and assessment: A comprehensive review. Indones. J. Electr. Eng. Comput. Sci. 5(3), 401–408 (2017).

Du, Y.-C., Shih, C.-B., Fan, S.-C., Lin, H.-T. & Chen, P.-J. An IMU-compensated skeletal tracking system using kinect for the upper limb. Microsyst. Technol. 24, 4317–4327 (2018).

Wijayapala, M. P., Premaratne, H. L. & Jayamanne, I. T. Motion tracking by sensors for real-time human skeleton animation. Int. J. Adv. ICT Emerg. Reg. (ICTer) 9(2), 10–21 (2016).

Liu, S., Zhang, J., Zhang, Y. & Zhu, R. A wearable motion capture device able to detect dynamic motion of human limbs. Nat. Commun. 11(1), 5615 (2020).

Milazzo, M. et al. AUTOMA: A wearable device to assess the upper limb muscular activity in patients with neuromuscular disorders. Acta Myol. 40(4), 143–151 (2021).

Walmsley, C. P. et al. Measurement of upper limb range of motion using wearable sensors: A systematic review. Sports Med. Open 4, 1–22 (2018).

Nef, T. & Riener, R. ARMin - Design of a novel arm rehabilitation robot. In: 9th International Conference on Rehabilitation Robotics, IEEE, 57–60 (2005).

Mihelj, M., Nef, T. & Riener, R. ARMin II-7 DoF rehabilitation robot: Mechanics and kinematics. In: Proceedings 2007 IEEE International Conference on Robotics and Automation, IEEE, 4120–4125 (2007).

Nef, T., Guidali, M. & Riener, R. ARMin III—Arm therapy exoskeleton with an ergonomic shoulder actuation. Appl. Bionics Biomech. 6(2), 127–142 (2009).

De Oliveira, A. C., Sulzer, J. S. & Deshpande, A. D. Assessment of upper-extremity joint angles using harmony exoskeleton. IEEE Trans. Neural Syst. Rehabil. Eng. 29, 916–925 (2021).

Zeiaee, A., Zarrin, R. S., Eib, A., Langari, R. & Tafreshi, R. CLEVERarm: A lightweight and compact exoskeleton for upper-limb rehabilitation. IEEE Robot. Autom. Lett. 7(2), 1880–1887 (2022).

Park, D. et al. Shoulder-sidewinder (shoulder-side wearable industrial ergonomic robot): Design and evaluation of shoulder wearable robot with mechanisms to compensate for joint misalignment. IEEE Trans. Robot. 38(3), 1460–1471 (2021).

Keller, U., van Hedel, H. J. A., Klamroth-Marganska, V. & Riener, R. ChARMin: The first actuated exoskeleton robot for pediatric arm rehabilitation. IEEE/ASME Trans. Mechatron. 21, 2201–2213 (2016).

Risteiu, M. N., Rosca, S. D. & Leba, M. 3D modelling and simulation of human upper limb. IOP Conf. Ser. 572(1), 012094 (2019).

Rossa, C., Najafi, M., Tavakoli, M. & Adams, K. Robotic rehabilitation and assistance for individuals with movement disorders based on a kinematic model of the upper limb. IEEE Trans. Med. Robot. Bionics 3, 190–203 (2021).

Krishnan, R., Björsell, N. & Smith, C. Invariant spatial parametrization of human thoracohumeral kinematics: A feasibility study. In: 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, 4469–4476 (2016).

Seth, A., Matias, R., Veloso, A. P. & Delp, S. L. A biomechanical model of the scapulothoracic joint to accurately capture scapular kinematics during shoulder movements. PLoS ONE 11, e0141028 (2016).

Seth, A., Dong, M., Matias, R. & Delp, S. Muscle contributions to upper-extremity movement and work from a musculoskeletal model of the human shoulder. Front. Neurorobot. 13, 90 (2019).

Saul, K. R. et al. Benchmarking of dynamic simulation predictions in two software platforms using an upper limb musculoskeletal model. Comput. Methods Biomech. Biomed. Eng. 18, 1445–1458 (2015).

Nasiri, R., Aftabi, H. & Ahmadabadi, M. N. Human-in-the-loop weight compensation in upper limb wearable robots towards total muscles’ effort minimization. IEEE Robot. Autom. Lett. 7, 3273–3278 (2022).

Xiao, W. et al. AI-driven rehabilitation and assistive robotic system with intelligent PID controller based on RBF neural networks. Neural Comput. Appl. https://doi.org/10.1007/s00521-021-06785-y (2022).

Santiago, A. B. G., Cáceres, C. M. M. & Hernández-Morante, J. J. Effectiveness of intensively applied mirror therapy in older patients with post-stroke hemiplegia: A preliminary trial. Eur. Neurol. 85, 291–299 (2022).

Pan, B. et al. Motor function assessment of upper limb in stroke patients. J. Healthcare Eng. 2021, 6621950 (2021).

Balasubramanian, S., Colombo, R., Sterpi, I., Sanguineti, V. & Burdet, E. Robotic assessment of upper limb motor function after stroke. Am. J. Phys. Med. Rehabil. 91, S255–S269 (2012).

Da Gama, A. E. F., Chaves, Td. M., Fallavollita, P., Figueiredo, L. S. & Teichrieb, V. Rehabilitation motion recognition based on the international biomechanical standards. Expert Syst. Appl. 116, 396–409 (2019).

Tait, S. L. Surface chemistry: Self-assembling Sierpinski triangles. Nat. Chem. 7, 370–371 (2015).

Wu, G. et al. ISB recommendation on definitions of joint coordinate systems of various joints for the reporting of human joint motion–Part II: Shoulder, elbow, wrist and hand. J. Biomech. 38, 981–992 (2005).

Schnorenberg, A. J. & Slavens, B. A. Effect of rotation sequence on thoracohumeral joint kinematics during various shoulder postures. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2021, 4912–4915 (2021).

Madrigal, J. A. B., Negrete, J. C., Guerrero, R. M., Rodríguez, L. A. C. & Sossa, H. 3D motion tracking of the shoulder joint with respect to the thorax using MARG sensors and data fusion algorithm. Biocybern. Biomed. Eng. 40, 1205–1224 (2020).

Latella, C. et al. Analysis of human whole-body joint torques during overhead work with a passive exoskeleton. IEEE Trans. Hum.-Mach. Syst. 52(5), 1060–1068 (2021).

Puchinger, M., Stefanek, P., Gstaltner, K., Pandy, M. G. & Gfohler, M. In vivo biomechanical assessment of a novel handle-based wheelchair drive. IEEE Trans. Neural Syst. Rehabil. Eng. 29, 1669–1678 (2021).

Du, T. & Yanai, T. Critical scapula motions for preventing subacromial impingement in fully-tethered front-crawl swimming. Sports Biomech. 21, 121–141 (2022).

Srikumaran, U. et al. Scapular winging: A great masquerader of shoulder disorders: AAOS exhibit selection. JBJS 96, e122 (2014).

Yeşilyaprak, S. S. Upper extremity. In Comparative Kinesiology of the Human Body (ed. Yeşilyaprak, S. S.) 157–282 (Elsevier, 2020).

Ingram, L. A., Butler, A. A., Brodie, M. A., Lord, S. R. & Gandevia, S. C. Quantifying upper limb motor impairment in chronic stroke: A physiological profiling approach. J. Appl. Physiol. 131, 949–965 (2021).

Yu, J., Qin, M. & Zhou, S. Dynamic gesture recognition based on 2D convolutional neural network and feature fusion. Sci. Rep. 12, 4345 (2022).

Golestani, N. & Moghaddam, M. Human activity recognition using magnetic induction-based motion signals and deep recurrent neural networks. Nat. Commun. 11, 1551 (2020).

Acknowledgements

We thank Bo Huang, Jianwen Zhao, and Ming Zhong (State Key Laboratory of Robotics and System, Harbin Institute of Technology) for their helpful discussions. This work is supported by the National Natural Science Foundation of China (#91648106) and a grant from the Tianzhi Institute of Innovation and Technology (#TZ-ZZ-202002).

Author information


Contributions

H.W. and Y.Y. conceptualized the TPS and upper-limb motion mechanism. H.W. proposed P-BTBS, designed the experimental system, and analyzed the data. H.W., S.P. and J.W. performed the experiments. Y.Y. supervised the research. H.W. prepared Figs. 1, 2, 3 and 4, and J.G. prepared Figs. 5, 6, 7 and 8. H.W. and J.G. co-wrote the manuscript. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Video 1.

Supplementary Video 2.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, H., Guo, J., Pei, S. et al. Upper limb modeling and motion extraction based on multi-space-fusion. Sci Rep 13, 16101 (2023). https://doi.org/10.1038/s41598-023-36767-0


DOI: https://doi.org/10.1038/s41598-023-36767-0
