Abstract

Coastal communities in various regions of the world are exposed to risk from tsunami inundation, requiring reliable modeling tools for implementing effective disaster preparedness and management strategies. This study advocates for comprehensive multi-variable models and emphasizes the limitations of traditional univariate fragility functions by leveraging a large, detailed dataset of ex-post damage surveys for the 2011 Great East Japan tsunami, hydrodynamic modeling of the event, and advanced machine learning techniques. It investigates the complex interplay of factors influencing building vulnerability to tsunamis, with a specific focus on the hydrodynamic effects associated with tsunami propagation on land. Novel synthetic variables representing shielding and debris impact mechanisms prove to be suitable proxies for water velocity, offering a practical solution for rapid damage assessments, especially in post-event scenarios or large-scale analyses. Machine learning then emerges as a promising approach to tackle the complexities of vulnerability assessment, while providing valuable and interpretable insights.

Introduction

Tsunamis, as devastating natural phenomena, present challenging threats to coastal communities across the globe. As such, gaining a profound understanding of their behavior and potential impacts stands as an essential requirement for effective disaster preparedness and mitigation.

However, attaining this objective remains a complex task, given the multifaceted interplay of factors influencing assets’ vulnerability to tsunamis. For buildings, these factors encompass their shape, structural features, construction material, foundation type and the characteristics of the surrounding environment1,2,3. From a modeling perspective, fragility functions serve as the standard tool for quantitatively assessing the probability that a building reaches or exceeds a certain damage state based on a tsunami intensity measure, under the simplifying assumption that a single hazard variable alone determines the level of damage4,5.

The development of fragility functions relies on three essential components: tsunami inundation data, damage data and a model linking the two. The method for damage data collection distinguishes them into: empirical functions, deriving data from ex-post tsunami surveys or physical experiments; synthetic functions, relying on expert damage elicitation; analytical functions, based on numerical simulations of structural damage; and hybrid functions based on a combination of these techniques. In the literature, empirical fragility functions are the most prevalent among the available options, with inundation depth primarily used as the key intensity measure, due to its ease of measurement in post-tsunami surveys of water marks1,3,5.

However, it is widely acknowledged that relying solely on maximum water depth does not fully capture the complex damage mechanisms during tsunami inundation. As a result, various studies have highlighted the importance of expressing fragility functions in terms of additional tsunami intensity measures, such as flow velocity, momentum flux or hydrodynamic force, to enhance the accuracy of damage estimations4,6,7,8,9,10,11,12,13. Nevertheless, estimating velocity remains a challenge in tsunami research, particularly given its importance for enhancing damage predictions. While numerical simulations can be employed, they often demand extensive computational resources and may not consistently yield accurate results relative to the complexity of the task. In light of these challenges, many researchers have proposed practical methods to estimate tsunami flow velocities (vc) from inundation depth (h) based on Bernoulli’s theorem and field or experimental data, which have resulted in identified relationships ranging between \(0.7\sqrt{gh}\) and \(2.0\sqrt{gh}\)11,14,15,16, where g is the gravitational acceleration.
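As a minimal illustration of these depth-based relationships, the sketch below evaluates \(c\sqrt{gh}\) for the two bounding coefficients quoted above. The function name, the value g = 9.81 m/s² and the 2 m example depth are assumptions introduced here for illustration only.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed standard value)


def velocity_from_depth(h, c=0.7):
    """Estimate flow velocity (m/s) from inundation depth h (m) as c * sqrt(g * h)."""
    if h < 0:
        raise ValueError("inundation depth must be non-negative")
    return c * math.sqrt(G * h)


# Range of estimates for a hypothetical 2 m inundation depth,
# using the lower and upper coefficients reported in the literature.
h = 2.0
v_low = velocity_from_depth(h, c=0.7)
v_high = velocity_from_depth(h, c=2.0)
print(f"h = {h} m: velocity between {v_low:.2f} and {v_high:.2f} m/s")
```

Because the estimate is a fixed monotonic function of h, the two bounds always differ by the same factor (2.0/0.7) at any depth.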

Furthermore, when considering a specific intensity measure, the degree of variability introduced by interconnected factors cannot be treated in isolation through the development of separate fragility functions for each variable. Neglecting these interdependencies can indeed lead to inaccurate assessments of structural vulnerability and associated risk. Consequently, researchers are increasingly adopting multi-variable models to address these mutual interactions among the variables at stake and to account for the uncertainty arising from the combined effects of the different damage-influencing variables in the modeling process.

In recent years, as emerged for other natural hazards17,18,19,20, advancements in machine learning have shown promise in addressing the challenges posed by the multi-dimensionality of tsunami damage mechanisms21,22,23.

In this context, Di Bacco et al.23 implemented different machine learning algorithms on an enhanced version of the building damage dataset compiled by the MLIT (Ministry of Land, Infrastructure, Transport and Tourism of Japan) for the 2011 Great East Japan event24,25. The aim was to capture nonlinear interactions among descriptive variables and analyze the relative importance of the different features on modeling accuracy. In addition to traditional vulnerability factors, the study considered several building- and site-related features that are often regarded as important in the literature but are practically overlooked in existing damage models. These factors, assessed by using a buffer-based approach, included proxies for the potential shielding effect occurring between interacting buildings or seawalls, as well as for the impact of collapsed buildings acting as a source of debris8,9,26,27,28,29. From a physical point of view, obstructions can be seen as barriers reducing water velocity and impact forces on other structures, while debris from collapsed buildings can negatively amplify the damaging effects of the tsunami. Therefore, the global variables introduced by Di Bacco et al.23 may be theoretically deemed as proxies for flow velocity, capturing the hydrodynamic effects associated with tsunami propagation. This potential would allow for their practical application in rapid tsunami damage assessments, particularly when direct water velocity data are unavailable (e.g., in the aftermath of an event or for large-scale analysis). The first results from ref. 23 revealed that the new factors describing the mutual interaction between inundated buildings played a critical role in tsunami damage modeling, even surpassing the importance of typical vulnerability features used in fragility functions, such as the structural type and the number of floors (NF) of the buildings.

Based on these considerations, the present study employs a machine learning approach on the extended MLIT dataset to assess the relative importance of various features in driving tsunami damage, with a specific focus on the influence of variables directly or indirectly linked to water velocity. Specifically, we examine the effect on predictive model accuracy of three different approaches for representing hydrodynamic impacts on buildings, as follows:

1. Direct velocity estimation: this approach involves utilizing water velocity data at the building’s location, acquired through a detailed hydrodynamic model of the event (vsim);

2. Indirect velocity estimation: water velocity is assessed by leveraging established empirical relationships as a function of inundation depth (vc);

3. Synthetic buffer-related proxies: these variables, introduced by Di Bacco et al.23, are employed to represent the possible reduction or amplification of the hydrodynamic effects on impacted buildings generated by shielding and debris impact mechanisms.

Moreover, our analysis extends beyond the initial findings to achieve a comprehensive understanding of the intricate relationships among the variables that influence tsunami damage mechanisms. This approach aims at addressing a conventional critique of machine learning, which often faces concerns due to its perceived black-box nature30,31,32. We then demonstrate that explicit and informative insights can still be derived from such data-driven approaches. This is achieved by representing modeling outcomes as traditional fragility functions with confidence intervals, which provide valuable information about the inherent complexity stemming from the multi-variable nature of tsunami damage and thus highlight potential limitations of the standard approaches currently employed for tsunami vulnerability assessment.

Results and discussion

Synthetic variables for shielding mechanism and debris impact as proxies for water velocity

To comprehensively analyze the individual contributions of the three approaches for accounting for water velocity, we systematically trained different eXtra Trees (XT) models33, each featuring a unique combination of input variables. The reference scenario (ID0) serves as both the initial benchmark and foundational baseline, encompassing the minimum set of variables retained across all subsequent scenarios. This baseline incorporates only basic input variables sourced from the original MLIT database, further enriched with some of the geospatial variables introduced by Di Bacco et al. characterized by the most straightforward computation23. Subsequently, the additional models are generated by iteratively introducing velocity-related (directly or indirectly) features into the model. This stepwise approach allows us to isolate the incremental improvements in predictive accuracy attributed to each individual component under consideration. Table 1 in “Methods” offers a concise overview of all tested variables, with those included in the reference scenario highlighted in italics.

The core results of the analysis aimed at assessing the predictive performance variability among the various trained models are summarized in Fig. 1, which illustrates the global average accuracy (expressed in terms of hit rate (HR) on the test set) achieved by each model across ten training sessions. In the figure, each column represents a specific combination of input features, with x markers indicating excluded variables during each model training. Insights into the importance of individual input features on the models’ predictive performance are provided by the circles, the size of which corresponds to the mean decrease in accuracy (mda) when each single variable is randomly shuffled.
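The two quantities shown in Fig. 1 can be sketched in a few lines. The example below is a simplified stand-in, not the code used in the study: it computes the hit rate and a permutation-based mda, normalized by the baseline accuracy as described in “Methods”, for a hypothetical threshold model (`toy_predict`) on made-up data.

```python
import random


def hit_rate(y_true, y_pred):
    """Fraction of samples whose predicted class equals the observed class."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return hits / len(y_true)


def permutation_mda(predict, X, y, col, n_repeats=10, seed=0):
    """Relative mean decrease in hit rate after shuffling column `col` of X."""
    rng = random.Random(seed)
    acc0 = hit_rate(y, [predict(row) for row in X])
    drops = []
    for _ in range(n_repeats):
        shuffled = [row[col] for row in X]
        rng.shuffle(shuffled)
        # Rebuild the rows with only the selected column permuted
        X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, shuffled)]
        acc_s = hit_rate(y, [predict(row) for row in X_perm])
        drops.append((acc0 - acc_s) / acc0)
    return sum(drops) / len(drops)


# Hypothetical stand-in model: class 1 when depth (column 0) exceeds 2 m;
# column 1 is an irrelevant feature that the model ignores.
def toy_predict(row):
    return 1 if row[0] > 2.0 else 0


X = [[0.5, 10.0], [1.0, 20.0], [2.5, 30.0], [3.0, 40.0], [4.0, 50.0], [1.5, 60.0]]
y = [toy_predict(row) for row in X]

mda_depth = permutation_mda(toy_predict, X, y, col=0)
mda_noise = permutation_mda(toy_predict, X, y, col=1)
print("mda for depth:", mda_depth, "| mda for ignored feature:", mda_noise)
```

Shuffling a feature the model does not use leaves the hit rate unchanged, so its mda is zero; a feature the model depends on can only lose accuracy when shuffled in this toy setting.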

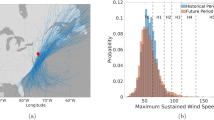

The pair plot in Fig. 2, illustrating the correlations and distributions among considered velocity-related variables as well as Distance across the seven damage classes in the MLIT dataset, has been generated to support the interpretation of the results and enrich the discussion. This graphical representation employs scatter plots to display the relationships between each pair of variables, while the diagonal axis represents kernel density plots for the individual features.

The baseline model (ID0), established as a reference due to its exclusion of any velocity information, attains an average accuracy of 0.836. In ID1, the model exclusively incorporates the direct contribution of vsim, resulting in a modest improvement, with accuracy reaching 0.848. The subsequent model, ID2, closely resembling ID1 but replacing vsim with vc, demonstrates a decline in performance, with an accuracy value of 0.828. This decrease is attributed to the redundancy between vc and inundation depth (h), visible both in the importance shared between the two variables and in the decrease of h’s importance compared to the previous case. Essentially, when both variables are included, h, which would be a relevant variable if introduced alone, appears less important because vc provides the same information in a different form.

The analysis proceeds with the introduction of buffer-related proxies to account for possible dynamic water effects on damage. Initially, we isolate the effect of the two considered mechanisms: the shielding (ID3) exerted by structures within the buffers (NShArea and NSW) and the debris impact (NDIArea, ID4). In both instances, we observe an enhancement in accuracy, with values reaching 0.877 and 0.865, respectively. Their combined effect is considered in model ID5, yielding only a marginal overall performance improvement (0.878), due to the noticeable correlation between NShArea and NDIArea, especially for the more severe damage levels (Fig. 2), with the two variables sharing their overall importance. Combination ID6, with the addition of vc, does not exhibit an increase in accuracy compared to the previous model (0.871), thus confirming the redundant contribution of a variable directly derived from another.

In the subsequent three input feature combinations, we explore the possible improvements in accuracy through the inclusion of vsim in conjunction with the considered proxies. In the case of ID7, where vsim is combined solely with the shielding effect, no enhancement is observed (0.870) compared to the corresponding simpler ID3. Similarly, when replacing shielding with the debris proxy (ID8), an overall accuracy of 0.867 is achieved, closely resembling the performance of ID4, which lacks direct velocity input. The highest accuracy (0.889) is instead obtained when all three contributions are included simultaneously (ID9). Hence, the inclusion of vsim appears to result only in a marginal enhancement of model performance, and its overall importance is lower than that of the two proxies considered. From a physical perspective, albeit without a noticeable correlation between the data points of vsim and NShArea (Fig. 2), this result can be explained by recognizing that flow velocity indirectly encapsulates the shielding effect arising from the presence of buildings, which are typically represented in hydrodynamic models as obstructions to wave propagation or through an increase in bottom friction for urban areas8,34,35,36. Since this alteration induced by the presence of buildings directly influences the hydrodynamic characteristics of the tsunami on land, the resulting values of vsim offer limited additional improvement to the model’s predictive ability compared to what is already provided by h and NShArea. Moreover, the very weak correlation of the considered proxies with the primary response variable h (Fig. 2) reinforces their importance in the framework of a machine learning approach, since they provide distinct input information compared to flow velocity, which, instead, is directly related to h, as discussed for vc. Such observations then support the idea of regarding these proxies as suitable variables for capturing dynamic water effects on buildings.

In all previous combinations, observed field values (hMLIT) served as the primary data source for inundation depth information. However, for a more comprehensive analysis, we also introduced feature combination ID10, similar to ID9 but employing simulated inundation depths (hsim) in place of hMLIT. This model achieves accuracy levels comparable to its counterparts and exhibits a consistent feature importance pattern, albeit with a slight increase in the importance of the Distance variable.

For completeness, normalized confusion matrices, describing hit and misclassification rates among the different damage classes, are reported in Supplementary Fig. S1. These matrices reveal uniform error patterns across all models, with Class 5 consistently exhibiting higher misclassification rates, as a result of its underrepresentation in the dataset, as illustrated in Fig. 2. Concerning the potential influence of such dataset imbalance on the results, it is worth noting that, for the primary aim of this study, it does not alter the overall outcomes in terms of the relative importance of the various features for damage predictions, since it affects all trained models in the same way.

Explainable machine learning: model’s outcomes represented as traditional fragility functions with confidence intervals

Delving further into the analysis of the results, the objective shifts toward gaining a thorough understanding of the relationships between the variables influencing the damage mechanisms. Indeed, while we have shown that the inclusion of water velocity components or the adoption of a more comprehensive multi-variable approach enhances tsunami damage predictions, machine learning algorithms have often been criticized for their inherent black-box nature30,31,32.

To address this challenge, we have chosen to embrace the concept of “explanation through visualization” by illustrating how it remains possible to derive explicit and informative insights from the outcomes derived from a machine learning approach, all while embracing the inherent complexity arising from the multi-variable nature of the problem at hand.

The results of the trained models are then translated into the form of traditional fragility functions, expressing the probability of exceeding a certain damage state as a function of inundation depth, for fixed values of the feature under investigation, distinguished for velocity-related (Fig. 3), site-dependent (Fig. 4) and structural building attributes (Fig. 5). In addition to the central value, the derived functions incorporate the 10th–90th percentile confidence intervals to provide a comprehensive representation of the associated predictive uncertainty.
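To make the idea of a fragility value with an uncertainty band concrete, the sketch below shows one plausible way to obtain it from a classifier’s output; it is an illustration under stated assumptions and not the procedure adopted in this study. It assumes that, for one inundation depth and one fixed value of the investigated feature, a set of predicted class-probability vectors (ordered DS1 to DS7) is available for buildings that differ in their remaining features. All names and numbers are hypothetical.

```python
def exceedance_probability(class_probs, ds):
    """P(damage state >= ds) from class probabilities ordered DS1..DS7."""
    return sum(class_probs[ds - 1:])


def percentile(values, q):
    """Linearly interpolated q-th percentile (0 <= q <= 100) of a list."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def fragility_band(prob_vectors, ds):
    """10th, 50th and 90th percentile of P(DS >= ds) over a set of predictions."""
    p = [exceedance_probability(v, ds) for v in prob_vectors]
    return percentile(p, 10), percentile(p, 50), percentile(p, 90)


# Hypothetical predictions at a single inundation depth for three buildings
# sharing the same value of the investigated feature.
predictions_at_depth = [
    [0.0, 0.0, 0.1, 0.1, 0.2, 0.2, 0.4],
    [0.0, 0.1, 0.1, 0.2, 0.2, 0.2, 0.2],
    [0.0, 0.0, 0.0, 0.1, 0.1, 0.2, 0.6],
]
low, median, high = fragility_band(predictions_at_depth, ds=6)
print(f"P(DS >= 6): median {median:.2f}, band {low:.2f} to {high:.2f}")
```

Repeating this calculation over a grid of depths would trace a median curve and its band; the width of the band reflects how much the other features change the predicted damage at a given depth.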

Starting with the analysis of the fragility functions obtained for fixed values of velocity-related variables (Fig. 3), it is possible to observe the substantial impact of the hydrodynamic effects, especially in more severe inundation scenarios. Notably, differences in the median fragility functions for the more damaging states (DS ≥ 5) are only evident when velocity reaches high values (around 10 m/s), while those for 0.1 and 2 m/s are practically overlapping, albeit featuring a wide uncertainty band, demonstrating how the several additional explanatory variables included in the model affect the damage process. More pronounced differences in the fragilities become apparent for lower damage states, under shallower water depths (h < 2 m) and slower flow velocities, although a substantial portion of the predictive power in non-structural damage scenarios predominantly relies on the inundation depth8,11,13. The velocity proxy accounting for the shielding effect (NShArea) mirrors the behavior observed for vsim, but with greater variability for DS7.

For instance, the probability of reaching DS7 with an inundation depth of 4 m drops from ~70% for an isolated building (NShArea = 0) to roughly 40% for one located in a densely populated area (NShArea = 0.5). This substantial variation not only highlights the influence of this variable for describing the damage mechanism, but also explains its profound impact on the models’ predictive performance shown in Fig. 1. Conversely, for less severe DS, the central values of the three considered fragility functions tend to converge onto a single line, indicating that the shielding mechanism primarily influences the process leading to the total destruction of buildings. Distinct patterns emerge for the velocity proxy related to debris impact (NDIArea), particularly for DS ≥ 5, emphasizing its crucial role in predicting relevant structural damages.

For example, at an inundation depth of 4 m, the probability of reaching DS7 is 40% when NDIArea = 0 (i.e., no washed-away structures in the buffer area for the considered building), but it rises to ~90% when NDIArea = 0.3 (i.e., 30% of the buffer area with washed-away buildings). Moreover, similarly to NShArea, the width of the uncertainty band generally narrows with decreasing damage state, thus suggesting that inundation depth acts as the main predictor for low-severity damage. These results represent an advancement beyond the work of Reese et al.26, who first attempted to incorporate information on shielding and debris mechanisms into fragility functions based on a limited number of field observations for the 2009 South Pacific tsunami, and Charvet et al.8, who investigated the possible effect of debris impacts (through the use of a binary variable) on damage levels for the 2011 Great East Japan event.

Concerning morphological variables, Fig. 4 clearly shows the amplification effect induced by ria-type coasts, especially for the higher damage states, consistently with prior literature8,11,13,37,38. However, above 6 m, the median fragility curve for the plain coastal areas exceeds that of the ria-type region, in line with findings by Suppasri et al.37,38, who described a similar trend. Nevertheless, it is worth observing that the variability introduced by other contributing features blurs the differences between the two coastal types, with the magnitude of the uncertainty band almost eclipsing the noticeable distinctions in the central values. This observation highlights the need to move beyond the use of traditional univariate fragility functions, in favor of multi-variable models, intrinsically capable of taking these complex interactions into account. Distance from the coast has emerged as a pivotal factor in predictive accuracy (Fig. 1) and this is also evident in the corresponding fragility functions computed for Distance values of 170, 950 and 2600 m (Fig. 4). A clear negative correlation exists between Distance and inundation depth (Fig. 2), with structures closer to the coast being more susceptible to damage, especially structural damage. In detail, more pronounced differences in the fragility patterns are observed for DS5 and DS6, where the probability of exceeding these damage states with a 2 m depth is almost null for buildings located within a distance of 1 km from the coast, while it increases to over 80% for those in close proximity to the coastline. This mirrors the observations resulting for NDIArea (Fig. 3), where greater distances result in less damage potential from washed-away buildings.

Figure 5 illustrates the fragility functions categorized by structural types (BS) and building characteristics represented in terms of NF. Overall, the observed patterns align with the findings discussed in the preceding figures. When focusing on the median curves, it becomes evident that these features exert minimal influence on the occurrence of non-structural damages, with overlapping curves and relatively narrow uncertainty bands for DS ≤ 5, owing to the mentioned dominance of inundation depth as main damage predictive variable in such cases.

However, for the more severe damage states, distinctions become more marked. Reinforced-concrete (RC) buildings exhibit lower vulnerability, followed by steel, masonry and wood structures, with the latter two showing only minor differences among them. A similar trend is also evident for NF, with taller buildings being less vulnerable than shorter ones under severe damage scenarios. The most relevant differences emerge when transitioning from single or two-story buildings to multi-story dwellings. However, once again, it is worth noting that, beyond these general patterns, also highlighted in previous studies1,5,8,11,26,34,37, the influence of other factors tends to blur the distinctions among the central values of the different typologies, as visible, for instance, for the confidence interval for steel buildings, which encompasses both median fragility functions for wood and masonry structures.

Conclusions

Employing a data-driven approach, the present study has focused on the complex multi-variable nature of tsunami vulnerability and on the significance of incorporating into the modeling variables that directly or indirectly reflect the hydrodynamic impact of water on buildings, spanning various levels of detail, ranging from point information on tsunami velocity to synthetic indicators that consider the effects induced by interacting structures within the inundated area.

A pivotal finding from this study was the crucial role played by synthetic buffer-related proxies for predicting tsunami damage. These proxies, representing potential shielding and debris impact mechanisms in tsunami propagation on land, demonstrated a remarkable ability to match the performance of models relying on direct velocity measurements, thereby underscoring their capacity to encapsulate critical information essential for representing tsunami damage mechanisms on buildings.

These results contribute a practical dimension to the domain of disaster response, highlighting the value of straightforward and versatile modeling approaches. The proposed proxy variables can indeed prove valuable in rapid damage assessment scenarios where acquiring extensive velocity data may be challenging or time-sensitive, such as in post-event situations or large-scale assessments, thus potentially leading to more efficient allocation of resources for damage assessment.

Finally, this study emphasized not only the significance of embracing comprehensive multi-variable tsunami vulnerability models, but also the inherent limitations of conventional approaches centered on simplistic univariate fragility functions when tackling intricate processes like tsunami inundations. The adoption of machine learning approaches then emerges as a viable solution in navigating the complexities of tsunami vulnerability assessment, with the potential to yield valuable and interpretable insights when employed thoughtfully. In this context, while the presented modeling framework holds considerable promise for replication across diverse geographical regions and contexts, a primary barrier to its widespread application lies in the scarcity of comprehensive ex-post damage datasets for both model training (in case of new models) and validation (in case of a spatial transfer of models developed across diverse regions). This challenge highlights not only the critical need for the establishment of standardized procedures for collecting damage and ancillary data in the aftermath of tsunami events, but also the importance of an open distribution of resulting datasets within the research community.

Methods

The extended MLIT dataset

In its original form, the MLIT dataset consisted of a polygon shapefile containing building-scale information on observed damage (Damage, categorized into seven damage classes: no damage (DS1), minor, moderate, major and complete damage (respectively, from DS2 to DS5), collapse (DS6) and washed away (DS7)) and on other associated explanatory factors for damage24,39. Alongside inundation depth at the building location (hMLIT), this dataset included structural type (BS), number of floors (NF) and the intended use (Use) of the building. In the present study, we included an indirect estimation of tsunami velocity (vc) by employing the established relationship rooted in Bernoulli’s theorem, expressing it as a function of inundation depth, \(v_{\mathrm{c}} = c\sqrt{g h_{\mathrm{MLIT}}}\)15; in this study, the value of 0.7 has been considered merely as a reference for c (which typically ranges between 0.7 and 2), given that, in a machine learning framework, the specific value of a constant has no impact on the results. To enhance the dataset’s comprehensiveness, Di Bacco et al.23 introduced several other possible explanatory factors for damage, compiling an extended version of the database25. These supplementary variables encompassed building geometry, including the building’s footprint area (FA), as well as various coast-related parameters such as the distance between each building and the coastline (Distance), the building’s orientation (BOrient), the direction of tsunami wave approach (WDir) and a binary factor (CoastType) used to distinguish between plain and ria coastal types within the impacted region. The dataset was also enriched with synthetic variables serving as proxies for the hydrodynamic effects of the tsunami in terms of potential shielding and debris impact mechanisms.
In detail, NShArea and NSW denote the fraction of the area spanning from each considered building to the coastline (with a predefined buffer geometry) that is occupied by structures and seawalls, as follows:

where Ntot and NSW are the total number of buildings and seawalls within a buffer area Ab, with Ai, Li and hi, respectively, indicating the FA of the i-th building in the buffer, the length and the height of the i-th seawall element.
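Based on the symbol definitions given here, the two shielding proxies can be written in the following form. This is a reconstruction from those definitions: the building term follows directly from the stated area fraction, whereas the seawall term (length times height, normalized by the buffer area) is an assumption about how Li and hi enter the expression.

\[
\mathrm{NShArea} = \frac{\sum_{i=1}^{N_{\mathrm{tot}}} A_i}{A_{\mathrm{b}}}, \qquad
\mathrm{NSW} = \frac{\sum_{i=1}^{N_{\mathrm{SW}}} L_i\, h_i}{A_{\mathrm{b}}}
\]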

Di Bacco et al.23 established a criterion for the definition of the buffer geometry drawing inspiration from the work of Naito et al.27, who assumed debris propagation from dispersed vessels toward a direction perpendicular to the coast, with a 45° spread angle that could increase in areas near the coast due to drawdown. In detail, these polygons are characterized by extreme points represented by the centroid of the examined building and the nearest point on the coastline. Di Bacco et al.23 tested two different shapes, finding no substantial effects of buffer geometry on the accuracy of developed damage models. In the present study, we then opted for their proposed narrower geometry, as exemplified in Fig. 6.
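Once the buildings falling inside a buffer are known, the area-based proxies reduce to simple ratios. The sketch below illustrates this for the shielding proxy (NShArea) and the debris impact proxy (NDIArea), using hypothetical footprint areas and a hypothetical buffer area; the geometric step of intersecting footprints with the buffer polygon is left out. Counting washed-away buildings in the shielding proxy as well is an assumption made here.

```python
def area_fraction(footprint_areas, buffer_area):
    """Fraction of the buffer area covered by the given building footprints."""
    if buffer_area <= 0:
        raise ValueError("buffer area must be positive")
    return sum(footprint_areas) / buffer_area


# Hypothetical buildings inside one buffer: (footprint area in m^2, washed away?)
buildings_in_buffer = [(120.0, False), (80.0, True), (200.0, False), (100.0, True)]
buffer_area = 2000.0  # m^2, hypothetical

# Shielding proxy: all buildings in the buffer (assumption: includes washed-away ones)
n_sh_area = area_fraction([a for a, _ in buildings_in_buffer], buffer_area)

# Debris impact proxy: only washed-away buildings in the buffer
n_di_area = area_fraction(
    [a for a, washed in buildings_in_buffer if washed], buffer_area
)
print("NShArea:", n_sh_area, "| NDIArea:", n_di_area)
```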

A similar approach was also adopted for the debris impact proxy, NDIArea, which accounted for only washed-away buildings (Nwa) in the buffer area (Fig. 6):
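A form consistent with this description, given here as a reconstruction that reuses the footprint areas Ai and the buffer area Ab defined for the shielding proxy, is:

\[
\mathrm{NDIArea} = \frac{\sum_{i=1}^{N_{\mathrm{wa}}} A_i}{A_{\mathrm{b}}}
\]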

Hydrodynamic model of the 2011 Great East Japan tsunami

Hydrodynamic data for the 2011 event were derived from a two-dimensional nonlinear shallow water model, coupled with a sediment transport model, TUNAMI-STM40,41,42. In the TUNAMI model, the governing shallow water equations are discretized by the finite difference method on staggered leap-frog structured grids. By coupling with the STM, the simulation can resolve tsunami-induced morphological change and the resulting variation in the flow field, yielding a better match to the observed tsunami inundation43. The accuracy and reliability of both hydrodynamic and sediment transport model components have been previously validated and documented in referenced studies40,41,42,44,45,46,47.

The computational domain was subdivided into multiple layered domains (R1–R6), each characterized by distinct spatial resolutions and extents, using a nesting grid system to optimize computational efficiency and resolution. Domain R1 covered the entire interplate tsunami source region along the Japan Trench, featuring a spatial resolution of 1215 m. Domains R2–R6 exhibited varying spatial resolutions, ranging from 405 to 5 m, with a constant refinement ratio of 1/3 between successive domains45. The digital elevation model for the domains R5 and R6 was generated using the pre-2011 tsunami, LIDAR- or photogrammetry-based high-resolution data provided by the Geospatial Information Authority of Japan. To enhance the accuracy of velocity estimations and reduce associated uncertainties stemming from coarser grid sizes, our analysis exclusively considered buildings located within Region R6, for a total of 97,852 impacted elements in the coastal areas of Miyako, Rikuzentakata, Minami-Sanriku, Kesennuma, Ishinomaki, Sendai Port and Sendai Airport (Fig. 2).
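The resolutions of the nested domains follow from the coarsest grid and a refinement factor of 3 between successive levels, as the short arithmetic check below shows (variable names are chosen here for illustration).

```python
coarsest_resolution_m = 1215  # spatial resolution of domain R1 in meters
refinement_factor = 3         # each nested domain is three times finer than its parent

# Resolution of domains R1..R6 obtained by repeated division by the refinement factor
resolutions_m = {
    f"R{k + 1}": coarsest_resolution_m // refinement_factor**k for k in range(6)
}
print(resolutions_m)
```

The sequence runs 1215, 405, 135, 45, 15 and 5 m, matching the stated range of 405 to 5 m for domains R2–R6.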

The simulations reproduced tsunami propagation and inundation for 3 h from the earthquake, thus capturing the effects of both the primary wave and the subsequent backwash. Inundation depth (hsim) and velocity (vsim) values sampled at the buildings’ location represent the maximum simulated values for the event; this approach is based on the assumption that observed damage is triggered by overall maximum demands, despite the potential variation in the timing of occurrence for the maximum depth and velocity at a given location.

The tsunami source model, developed by tsunami waveform inversion considering temporal and spatial evolution of the fault rupture48, was used to derive initial tsunami waveform in the source region. The transport formula proposed by Takahashi et al.49 was used for sediment transport modeling, adopting a uniform value of grain size of 0.267 mm for all calculations.

Damage model development and assessment of the feature importance

Multi-variable XT models were trained for damage level prediction using the input features listed in Table 1, in different combinations chosen to highlight the marginal gain in accuracy attributable to velocity-related variables (Fig. 1). The models were implemented in Python using the scikit-learn library50. Model learning followed this procedure: a portion (5%) of the observed data was held out as a validation set for hyperparameter tuning, and the remaining data were then divided into training (95%) and test (5%) sets. The metric chosen to evaluate model accuracy was the relative hit rate (HR), i.e., the ratio of correct predictions to the total number of predictions. In addition to this global metric, normalized confusion matrices, which describe hit and misclassification rates across the damage classes, were computed for each model to give a more detailed picture of the observed accuracy.
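A minimal sketch of the data split and the two accuracy measures with scikit-learn is given below. The features, the four damage classes and the predictions are random placeholders rather than the study data, and the random seeds are arbitrary.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
n = 2000
X = rng.normal(size=(n, 3))              # placeholder input features
y = rng.integers(0, 4, size=n)           # placeholder damage classes 0..3

# Step 1: hold out 5% of all data as a validation set for tuning
X_rest, X_val, y_rest, y_val = train_test_split(
    X, y, test_size=0.05, random_state=0)
# Step 2: split the remainder into training (95%) and test (5%) sets
X_train, X_test, y_train, y_test = train_test_split(
    X_rest, y_rest, test_size=0.05, random_state=0)

# Placeholder predictions standing in for a trained model's output
y_pred = rng.integers(0, 4, size=y_test.size)

# Hit rate: share of correct predictions over all predictions
hit_rate = accuracy_score(y_test, y_pred)
# Confusion matrix normalized by row, so each true class sums to 1
cm = confusion_matrix(y_test, y_pred, labels=np.arange(4), normalize="true")
```

With `normalize="true"`, the diagonal of `cm` holds the per-class hit rates and the off-diagonal entries the misclassification rates.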

For each model, an initial random search was conducted to fine-tune hyperparameters and assess validation accuracy. In line with Di Bacco et al.23, we fixed the number of trees at 130 and performed the splitting process by evaluating the decrease in Gini impurity on random subsets containing 60% of the training data.
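In scikit-learn, one possible way to express this configuration is shown below. Mapping the "random subsets containing 60% of the training data" onto `bootstrap=True` with `max_samples=0.6` is an assumption, as is the synthetic dataset; any further hyperparameters selected by the random search are not reported here and are left at their defaults.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier

# Synthetic stand-in for the training data (not the MLIT dataset)
X, y = make_classification(n_samples=500, n_features=6, n_informative=4,
                           n_classes=3, random_state=0)

model = ExtraTreesClassifier(
    n_estimators=130,     # number of trees, as stated in the text
    criterion="gini",     # splits scored by decrease in Gini impurity
    bootstrap=True,       # required by scikit-learn when max_samples is set
    max_samples=0.6,      # assumed reading: each tree sees 60% of the data
    random_state=0,
)
model.fit(X, y)
predictions = model.predict(X)
```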

Finally, feature importance for each model was assessed using the relative mean decrease in accuracy (mda). Each feature was shuffled individually51 and the resulting accuracy (accs) was compared with its original value (acc0):

$$\mathrm{mda} = \frac{acc_{0} - acc_{s}}{acc_{0}}$$
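The shuffling procedure can be sketched in a model-agnostic way, assuming the relative decrease is computed as (acc0 − accs)/acc0 and averaged over several shuffles. The helper `relative_mda`, the number of repeats and the toy predictor are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def relative_mda(predict, X, y, n_repeats=10, seed=0):
    """Relative mean decrease in accuracy for each column of X."""
    rng = np.random.default_rng(seed)
    acc0 = np.mean(predict(X) == y)              # accuracy on intact data
    mda = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_shuffled = X.copy()
            # Break the link between feature j and the target
            X_shuffled[:, j] = rng.permutation(X_shuffled[:, j])
            acc_s = np.mean(predict(X_shuffled) == y)
            drops.append((acc0 - acc_s) / acc0)
        mda[j] = np.mean(drops)
    return mda

def toy_predict(data):
    """Hypothetical model that only looks at the first feature."""
    return (data[:, 0] > 0).astype(int)

rng = np.random.default_rng(1)
X = rng.normal(size=(1000, 2))
y = (X[:, 0] > 0).astype(int)
mda = relative_mda(toy_predict, X, y)
```

In this toy case the first feature carries all the information, so shuffling it roughly halves the accuracy, while shuffling the second feature leaves it unchanged.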

Representation of model outcomes through tsunami fragility functions

Tsunami fragility functions with 10th–90th percentile confidence intervals are generated for several key features, referred to in the following as “Investigated Features” (IF): velocity-related (vsim, NShArea, NDIArea), site-dependent (CoastType, Distance) and structural building attributes (BS, NF). The procedure begins with the training of an XT model on the full set of input features, as in model ID9 (Fig. 1). Only for the velocity-related attributes, homologous features are excluded in turn during model training, so as to isolate the contribution of each of them to the fragilities; that is, feature combinations ID1, ID3 and ID4 (Fig. 1) are used for vsim, NShArea and NDIArea, respectively.

Then, a dataset for plotting the fragility functions is constructed by sampling data points from the extended MLIT dataset, ensuring a robust representation while avoiding the need to assume any correlation among the input features.

In line with conventional practice in the tsunami literature1,3,5, inundation depth is adopted as the primary intensity measure for constructing the fragility functions. Percentiles of hMLIT are computed at 5% intervals, giving 21 distinct values (including the minimum and maximum hMLIT); averaging the extremes of each interval yields 20 representative depth values, one per interval. For each hMLIT interval, 20 buildings are sampled with replacement and the desired values of the IF (such as BS = “RC”, “Wood”, “Steel” or “Masonry”) are assigned to them. This process yields a structured dataset of 20 sampled buildings × 20 intervals × n IF values, where n is the number of values investigated for the IF. For each (hMLIT, IF) pair, 20 damage state predictions are thus generated. To create the fragility functions, the probability of reaching or exceeding each damage state is calculated as the fraction of the 20 predictions in which that damage state is reached or exceeded.
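The binning and counting steps can be sketched with NumPy as follows. The depth sample, the five damage states and the predictions are random placeholders standing in for the extended MLIT dataset and the XT model output.

```python
import numpy as np

rng = np.random.default_rng(0)
h_obs = rng.gamma(shape=2.0, scale=1.5, size=5000)   # placeholder depths (m)

# Percentiles at 5% steps: 0th (min), 5th, ..., 100th (max) -> 21 edges
edges = np.percentile(h_obs, np.arange(0, 101, 5))
# Averaging the extremes of each interval -> 20 representative depths
midpoints = 0.5 * (edges[:-1] + edges[1:])

# Sample 20 buildings with replacement from one interval (here the first)
in_bin = np.flatnonzero((h_obs >= edges[0]) & (h_obs <= edges[1]))
sampled = rng.choice(in_bin, size=20, replace=True)

# Stand-in for the 20 model predictions (hypothetical damage states 0..4)
predicted = rng.integers(0, 5, size=sampled.size)

# Fraction of predictions reaching or exceeding each damage state
damage_states = np.arange(1, 5)
p_exceed = np.array([(predicted >= ds).mean() for ds in damage_states])
```

By construction `p_exceed` is non-increasing with the damage state, since every prediction that reaches a given state also reaches all lower ones.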

This procedure is iterated 100 times for each (hMLIT, IF) combination, resulting in 100 values for each point along the fragility curves. Finally, for each (hMLIT, IF) pairing, values representing the 10th percentile, median, and 90th percentile are computed and incorporated into monotonically increasing functions as confidence intervals, providing a comprehensive representation of predictive uncertainty.
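A sketch of this last step is given below for a single damage state and IF value. The 100 × 20 array of exceedance probabilities is synthetic, and the use of a running maximum to obtain monotonically increasing curves is an assumption, since the text does not state how monotonicity is imposed.

```python
import numpy as np

rng = np.random.default_rng(0)
n_iter, n_points = 100, 20

# Placeholder: exceedance probabilities from 100 repetitions at 20 depths
trend = np.linspace(0.05, 0.95, n_points)
probs = np.clip(trend + rng.normal(0.0, 0.08, (n_iter, n_points)), 0.0, 1.0)

# 10th percentile, median and 90th percentile across the repetitions
p10, p50, p90 = np.percentile(probs, [10, 50, 90], axis=0)

# Assumed monotonic correction: running maximum along increasing depth
lower, median, upper = (np.maximum.accumulate(c) for c in (p10, p50, p90))
```

The running maximum preserves the ordering of the three curves, so the band formed by `lower` and `upper` still encloses `median` at every depth.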

Data availability

The extended MLIT dataset used in this study is accessible in Mendeley data (Scorzini et al. 2024, “Extended MLIT dataset for the 2011 Great East Japan tsunami with the inclusion of velocity information”. doi: 10.17632/5m3n2hjwkh.1).

References

Dall’Osso, F., Dominey-Howes, D., Tarbotton, C., Summerhayes, S. & Withycombe, G. Revision and improvement of the PTVA-3 model for assessing tsunami building vulnerability using “international expert judgment”: introducing the PTVA-4 model. Nat. Hazards 83, 1229–1256 (2016).

Tarbotton, C., Dall’Osso, F., Dominey-Howes, D. & Goff, J. The use of empirical vulnerability functions to assess the response of buildings to tsunami impact: comparative review and summary of best practice. Earth Sci. Rev. 142, 120–134 (2015).

Charvet, I., Macabuag, J. & Rossetto, T. Estimating tsunami-induced building damage through fragility functions: critical review and research needs. Front. Built Environ. 3, 1–22 (2017).

Koshimura, S., Namegaya, Y. & Yanagisawa, H. Tsunami fragility: a new measure to identify tsunami damage. J. Disaster Res. 4, 479–488 (2009).

Nanayakkara, K. I. U. & Dias, W. P. S. Fragility curves for structures under tsunami loading. Nat. Hazards 80, 471–486 (2016).

Koshimura, S., Oie, T., Yanagisawa, H. & Imamura, F. Developing fragility functions for tsunami damage estimation using numerical model and post-tsunami data from Banda Aceh, Indonesia. Coast. Eng. J. 51, 243–273 (2009).

Leone, F., Lavigne, F., Paris, R., Denain, J. C. & Vinet, F. A spatial analysis of the December 26th, 2004 tsunami-induced damages: lessons learned for a better risk assessment integrating buildings vulnerability. Appl. Geogr. 31, 363–375 (2011).

Charvet, I., Suppasri, A., Kimura, H., Sugawara, D. & Imamura, F. A multivariate generalized linear tsunami fragility model for Kesennuma City based on maximum flow depths, velocities and debris impact, with evaluation of predictive accuracy. Nat. Hazards 79, 2073–2099 (2015).

Macabuag, J. et al. A proposed methodology for deriving tsunami fragility functions for buildings using optimum intensity measures. Nat. Hazards 84, 1257–1285 (2016).

Attary, N., van de Lindt, J. W., Unnikrishnan, V. U., Barbosa, A. R. & Cox, D. T. Methodology for development of physics-based tsunami fragilities. J. Struct. Eng. 143, 04016223 (2017).

De Risi, R., Goda, K., Yasuda, T. & Mori, N. Is flow velocity important in tsunami empirical fragility modeling? Earth Sci. Rev. 166, 64–82 (2017).

Park, H., Cox, D. T. & Barbosa, A. R. Comparison of inundation depth and momentum flux based fragilities for probabilistic tsunami damage assessment and uncertainty analysis. Coast. Eng. 122, 10–26 (2017).

Song, J., De Risi, R. & Goda, K. Influence of flow velocity on tsunami loss estimation. Geosciences 7, 114 (2017).

Lukkunaprasit, P., Ruangrassamee, A., Stitmannaithum, B., Chintanapakdee, C. & Thanasisathit, N. Calibration of tsunami loading on a damaged building. J. Earthq. Tsunami 4, 105–114 (2010).

Matsutomi, H. & Okamoto, K. Inundation flow velocity of tsunami on land. Isl. Arc 19, 443–457 (2010).

Foytong, P., Ruangrassamee, A., Shoji, G., Hiraki, Y. & Ezura, Y. Analysis of tsunami flow velocities during the March 2011 Tohoku, Japan, Tsunami. Earthq. Spectra 29, 161–181 (2013).

Xie, Y., Ebad Sichani, M., Padgett, J. E. & DesRoches, R. The promise of implementing machine learning in earthquake engineering: a state-of-the-art review. Earthq. Spectra 36, 1769–1801 (2020).

Szczyrba, L., Zhang, Y., Pamukcu, D., Eroglu, D. I. & Weiss, R. Quantifying the role of vulnerability in hurricane damage via a machine learning case study. Nat. Hazards Rev. 22, 04021028 (2021).

Alipour, A., Ahmadalipour, A., Abbaszadeh, P. & Moradkhani, H. Leveraging machine learning for predicting flash flood damage in the Southeast US. Environ. Res. Lett. 15, 024011 (2020).

Wagenaar, D. et al. Invited perspectives: how machine learning will change flood risk and impact assessment. Nat. Hazards Earth Syst. Sci. 20, 1149–1161 (2020).

Saengtabtim, K., Leelawat, N., Tang, J., Treeranurat, W., Wisittiwong, N., Suppasri, A., Pakoksung, K., Imamura, F., Takahashi, N. & Charvet, I. Predictive analysis of the building damage from the 2011 Great East Japan tsunami using decision tree classification related algorithms. IEEE Access 9, 31065–31077 (2021).

Vescovo, R., Adriano, B., Mas, E. & Koshimura, S. Beyond tsunami fragility functions: experimental assessment for building damage estimation. Sci. Rep. 13, 14337 (2023).

Di Bacco, M., Rotello, P., Suppasri, A. & Scorzini, A. R. Leveraging data driven approaches for enhanced tsunami damage modelling: insights from the 2011 Great East Japan event. Environ. Model. Softw. 160, 105604 (2023).

Ministry of Land, Infrastructure, Transport and Tourism of Japan (MLIT). Survey Tsunami Damage Condition (accessed 10 October 2023) http://fukkou.csis.u-tokyo.ac.jp/ (2012).

Scorzini, A. R., Di Bacco, M., Sugawara, D. & Suppasri, A. Extended MLIT dataset for the 2011 Great East Japan tsunami with inclusion of velocity information. Mendeley Data, V1. https://doi.org/10.17632/5m3n2hjwkh.1 (2024).

Reese, S., Bradley, B. A., Bind, J., Smart, G., Power, W. & Sturman, J. Empirical building fragilities from observed damage in the 2009 South Pacific tsunami. Earth Sci. Rev. 107, 156–173 (2011).

Naito, C., Cercone, C., Riggs, H. R. & Cox, D. Procedure for site assessment of the potential for tsunami debris impact. J. Waterw. Port Coast. Ocean Eng. 140, 223–232 (2014).

Nistor, I., Goseberg, N. & Stolle, J. Tsunami-driven debris motion and loads: a critical review. Front. Built Environ. 3, 1–11 (2017).

Ma, X., Zhang, W., Li, X. & Ding, Z. Evaluating tsunami damage of wood residential buildings in a coastal community considering waterborne debris from buildings. Eng. Struct. 244, 112761 (2021).

Murdoch, W. J., Singh, C., Kumbier, K., Abbasi-Asl, R. & Yu, B. Definitions, methods, and applications in interpretable machine learning. Proc. Natl Acad. Sci. USA. 116, 22071–22080 (2019).

Razavi, S. et al. The future of sensitivity analysis: an essential discipline for systems modeling and policy support. Environ. Model. Softw. 137, 104954 (2021).

Marcinkevičs, R. & Vogt, J. E. Interpretable and explainable machine learning: a methods‐centric overview with concrete examples. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 13, e1493 (2023).

Geurts, P., Ernst, D. & Wehenkel, L. Extremely randomized trees. Mach. Learn. 63, 3–42 (2006).

Cox, D., Tomita, T., Lynett, P. & Holman, R. Tsunami inundation with macro-roughness in the constructed environment. Coast. Eng. 1, 1421–1432 (2008).

Schubert, J. E., Sanders, B. F., Smith, M. J. & Wright, N. G. Unstructured mesh generation and landcover-based resistance for hydrodynamic modeling of urban flooding. Adv. Water Resour. 31, 1603–1621 (2008).

Bricker, J. D., Gibson, S., Takagi, H. & Imamura, F. On the need for larger Manning’s roughness coefficients in depth-integrated tsunami inundation models. Coast. Eng. J. 57, 1550005–1 (2015).

Suppasri, A. et al. Building damage characteristics based on surveyed data and fragility curves of the 2011 Great East Japan tsunami. Nat. Hazards 66, 319–341 (2013).

Suppasri, A., Charvet, I., Imai, K. & Imamura, F. Fragility curves based on data from the 2011 Tohoku-oki tsunami in Ishinomaki city, with discussion of parameters influencing building damage. Earthq. Spectra 31, 841–868 (2015).

Leelawat, N., Suppasri, A., Charvet, I. & Imamura, F. Building damage from the 2011 Great East Japan tsunami: quantitative assessment of influential factors: a new perspective on building damage analysis. Nat. Hazards 73, 449–471 (2014).

Sugawara, D., Takahashi, T. & Imamura, F. Sediment transport due to the 2011 Tohoku-oki tsunami at Sendai: results from numerical modeling. Mar. Geol. 358, 18–37 (2014).

Yamashita, K. et al. Numerical simulations of large-scale sediment transport caused by the 2011 Tohoku Earthquake Tsunami in Hirota Bay, Southern Sanriku Coast. Coast. Eng. J. 58, 1640015 (2016).

Yamashita, K., Shigihara, Y., Sugawara, D., Arikawa, T., Takahashi, T. & Imamura, F. Effect of sediment transport on tsunami hazard and building damage – an integrated simulation of tsunami inundation, sediment transport and drifting vessels in Kesennuma City, Miyagi Prefecture during the Great East Japan Earthquake. J. Jpn. Soc. Civ. Eng. Ser. B2 Coast. Eng. 73, I_355–I_360 (2017).

Sugawara, D. Evolution of numerical modeling as a tool for predicting tsunami-induced morphological changes in coastal areas: a review since the 2011 Tohoku Earthquake in The 2011 Japan Earthquake and Tsunami: Reconstruction and Restoration: Insights and Assessment after 5 Years. Advances in Natural and Technological Hazards Research (eds Santiago-Fandino, V. et al.) 451–467 (Springer, 2018).

Hayashi, S. & Koshimura, S. Measurement of the 2011 Tohoku tsunami flow velocity by the aerial video analysis. J. Jpn. Soc. Civ. Eng. Ser. B2 Coast. Eng. 68, 366–370 (2012).

Sugawara, D. & Goto, K. Numerical modeling of the 2011 Tohoku-oki tsunami in the offshore and onshore of Sendai Plain, Japan. Sediment. Geol. 282, 110–123 (2012).

Yamashita, K., Yamazaki, Y., Bai, Y., Takahashi, T., Imamura, F. & Cheung, K. F. Modeling of sediment transport in rapidly-varying flow for coastal morphological changes caused by tsunamis. Mar. Geol. 449, 106823 (2022).

Masuda, H., Sugawara, D., Abe, T. & Goto, K. To what extent tsunami source information can be extracted from tsunami deposits? Implications from the 2011 Tohoku-oki tsunami deposits and sediment transport simulations. Prog. Earth Planet. Sci. 9, 65 (2022).

Satake, K., Fujii, Y., Harada, T. & Namegaya, Y. Time and space distribution of coseismic slip of the 2011 Tohoku earthquake as inferred from tsunami waveform data. Bull. Seismol. Soc. Am. 103, 1473–1492 (2013).

Takahashi, T., Kurokawa, T., Fujita, M. & Shimada, H. Hydraulic experiment on sediment transport due to tsunamis with various sand grain size. J. Jpn. Soc. Civ. Eng. Ser. B2 Coast. Eng. 67, 231–235 (2011).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Acknowledgements

This work was supported by the Italian Ministry of University and Research, within the framework of the project Dipartimenti di Eccellenza 2023–2027 at the University of L’Aquila.

Author information

Contributions

Anna Rita Scorzini: conceptualization, methodology, data curation, investigation, visualization, resources, writing – original draft, writing – review & editing. Mario Di Bacco: conceptualization, methodology, data curation, formal analysis, investigation, visualization, writing – review & editing. Daisuke Sugawara: data curation, writing – review & editing. Anawat Suppasri: validation, writing – review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Earth & Environment thanks Yuchen Wang and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editors: Olusegun Dada and Joe Aslin. A peer review file is available.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Scorzini, A.R., Di Bacco, M., Sugawara, D. et al. Machine learning and hydrodynamic proxies for enhanced rapid tsunami vulnerability assessment. Commun Earth Environ 5, 301 (2024). https://doi.org/10.1038/s43247-024-01468-7

DOI: https://doi.org/10.1038/s43247-024-01468-7
