Abstract

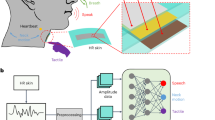

Researchers have recently been pursuing technologies for universal speech recognition and interaction that can work well with subtle sounds or noisy environments. Multichannel acoustic sensors can improve the accuracy of recognition of sound but lead to large devices that cannot be worn. To solve this problem, we propose a graphene-based intelligent, wearable artificial throat (AT) that is sensitive to human speech and vocalization-related motions. Its perception of the mixed modalities of acoustic signals and mechanical motions enables the AT to acquire signals with a low fundamental frequency while remaining noise resistant. The experimental results showed that the mixed-modality AT can detect basic speech elements (phonemes, tones and words) with an average accuracy of 99.05%. We further demonstrated its interactive applications for speech recognition and voice reproduction for the vocally disabled. It was able to recognize everyday words vaguely spoken by a patient with laryngectomy with an accuracy of over 90% through an ensemble AI model. The recognized content was synthesized into speech and played on the AT to rehabilitate the capability of the patient for vocalization. Its feasible fabrication process, stable performance, resistance to noise and integrated vocalization make the AT a promising tool for next-generation speech recognition and interaction systems.

Data availability

The collected AT speech spectra used for classification in Fig. 4a are provided via Zenodo (https://doi.org/10.5281/zenodo.7396184; ref. 45). A detailed manual for the data analysis and deployment can be found in Supplementary Note 1 and the Zenodo repository. Other study findings are available from T.-L.R. on reasonable request, and in accordance with the Institutional Review Board at Tsinghua University. Source Data are provided with this paper.

Code availability

The code used to implement the classification tasks and the ensemble model in this study is available from Zenodo (https://doi.org/10.5281/zenodo.7396184; ref. 45).
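For readers unfamiliar with ensemble classifiers, the following is a minimal, generic sketch of soft voting, in which the class probabilities from several base models are averaged before the final label is chosen. It is not the authors' implementation, which is available only through the Zenodo repository. The function name `soft_vote`, the toy probability arrays and the equal default weights are all assumptions made for illustration.

```python
import numpy as np

def soft_vote(prob_list, weights=None):
    """Average per-model class probabilities and return (avg_probs, labels).

    prob_list: list of arrays, each of shape (n_samples, n_classes).
    weights:   optional per-model weights (hypothetical; equal by default).
    """
    probs = np.stack(prob_list, axis=0)          # (n_models, n_samples, n_classes)
    if weights is None:
        weights = np.ones(probs.shape[0])
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()            # normalise so the weights sum to 1
    avg = np.tensordot(weights, probs, axes=1)   # weighted mean over the model axis
    return avg, avg.argmax(axis=1)

# Toy probabilities from three hypothetical base models (2 samples, 3 classes).
model_a = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]])
model_b = np.array([[0.4, 0.5, 0.1], [0.1, 0.3, 0.6]])
model_c = np.array([[0.7, 0.2, 0.1], [0.2, 0.3, 0.5]])

avg, labels = soft_vote([model_a, model_b, model_c])
print(labels)  # → [0 2]
```

In the second toy sample, model A on its own would pick class 1, but the averaged probabilities favour class 2. This shows how an ensemble can overrule a single model's prediction.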

References

Gonzalez-Lopez, J. A., Gomez-Alanis, A., Martin Donas, J. M., Perez-Cordoba, J. L. & Gomez, A. M. Silent speech interfaces for speech restoration: a review. IEEE Access 8, 177995–178021 (2020).

Betts, B. & Jorgensen, C. Small Vocabulary Recognition Using Surface Electromyography in an Acoustically Harsh Environment (NASA, Ames Research Center, 2005).

Wood, N. L. & Cowan, N. The cocktail party phenomenon revisited: attention and memory in the classic selective listening procedure of Cherry (1953). J. Exp. Psychol. Gen. 124, 243 (1995).

Lopez-Meyer, P., del Hoyo Ontiveros, J. A., Lu, H. & Stemmer, G. Efficient end-to-end audio embeddings generation for audio classification on target applications. In ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 601–605 (IEEE, 2021); https://doi.org/10.1109/ICASSP39728.2021.9414229

Wang, D. X., Jiang, M. S., Niu, F. L., Cao, Y. D. & Zhou, C. X. Speech enhancement control design algorithm for dual-microphone systems using β-NMF in a complex environment. Complexity https://doi.org/10.1155/2018/6153451 (2018).

Akbari, H., Arora, H., Cao, L. & Mesgarani, N. Lip2AudSpec: speech reconstruction from silent lip movements video. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2516–2520 (IEEE, 2018).

Chung, J. S., Senior, A., Vinyals, O. & Zisserman, A. Lip reading sentences in the wild. In Proc. 30th IEEE Conference on Computer Vision and Pattern Recognition 3444–3450 (IEEE, 2017).

Pass, A., Zhang, J. & Stewart, D. An investigation into features for multi-view lipreading. In 2010 IEEE International Conference on Image Processing 2417–2420 (IEEE, 2010); https://doi.org/10.1109/ICIP.2010.5650963

Herff, C. et al. Brain-to-text: decoding spoken phrases from phone representations in the brain. Front. Neurosci. 9, 1–11 (2015).

Anumanchipalli, G. K., Chartier, J. & Chang, E. F. Speech synthesis from neural decoding of spoken sentences. Nature 568, 493–498 (2019).

Schultz, T. & Wand, M. Modeling coarticulation in EMG-based continuous speech recognition. Speech Commun. 52, 341–353 (2010).

Wand, M., Janke, M. & Schultz, A. T. Tackling speaking mode varieties in EMG-based speech recognition. IEEE Trans. Biomed. Eng. 61, 2515–2526 (2014).

Janke, M. & Diener, L. EMG-to-speech: direct generation of speech from facial electromyographic signals. IEEE/ACM Trans. Audio Speech Lang. Process. 25, 2375–2385 (2017).

Kim, K. K. et al. A deep-learned skin sensor decoding the epicentral human motions. Nat. Commun. 11, 1–8 (2020).

Su, M. et al. Nanoparticle based curve arrays for multirecognition flexible electronics. Adv. Mater. 28, 1369–1374 (2016).

Tao, L. Q. et al. An intelligent artificial throat with sound-sensing ability based on laser induced graphene. Nat. Commun. 8, 1–8 (2017).

Wei, Y. et al. A wearable skinlike ultra-sensitive artificial graphene throat. ACS Nano 13, 8639–8647 (2019).

Aytar, Y., Vondrick, C. & Torralba, A. SoundNet: learning sound representations from unlabeled video. Adv. Neural Inf. Process. Syst. 29, 892–900 (2016).

Boddapati, V., Petef, A., Rasmusson, J. & Lundberg, L. Classifying environmental sounds using image recognition networks. Procedia Comput. Sci. 112, 2048–2056 (2017).

Becker, S., Ackermann, M., Lapuschkin, S., Müller, K.-R. & Samek, W. Interpreting and explaining deep neural networks for classification of audio signals. Preprint at https://doi.org/10.48550/arXiv.1807.03418 (2019).

Hershey, S. et al. CNN architectures for large-scale audio classification. In 2017 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP) 131–135 (2017); https://doi.org/10.1109/ICASSP.2017.7952132

Titze, I. & Alipour, F. The Myoelastic–Aerodynamic Theory of Phonation 227–244 (American Speech–Language–Hearing Association, 2006).

Elmiyeh, B. et al. Surgical voice restoration after total laryngectomy: an overview. Indian J. Cancer 47, 239–247 (2010).

Qi, Y. & Weinberg, B. Characteristics of voicing source waveforms produced by esophageal and tracheoesophageal speakers. J. Speech Hear. Res. 38, 536–548 (1995).

Liu, W. et al. Stable wearable strain sensors on textiles by direct laser writing of graphene. ACS Appl. Nano Mater. 3, 283–293 (2020).

Chhetry, A. et al. MoS2-decorated laser-induced graphene for a highly sensitive, hysteresis-free, and reliable piezoresistive strain sensor. ACS Appl. Mater. Interfaces 11, 22531–22542 (2019).

Deng, N. Q. et al. Black phosphorus junctions and their electrical and optoelectronic applications. J. Semicond. 42, 081001 (2021).

Zhao, S., Ran, W., Wang, L. & Shen, G. Interlocked MXene/rGO aerogel with excellent mechanical stability for a health-monitoring device. J. Semicond. 43, 082601 (2022).

Asadzadeh, S. S., Moosavi, A., Huynh, C. & Saleki, O. Thermo acoustic study of carbon nanotubes in near and far field: theory, simulation, and experiment. J. Appl. Phys. 117, 095101 (2015).

Fitch, J. L. & Holbrook, A. Modal vocal fundamental frequency of young adults. JAMA Otolaryngol. Head Neck Surg. 92, 379–382 (1970).

Maas, A. L. et al. Building DNN acoustic models for large vocabulary speech recognition. Comput. Speech Lang. 41, 195–213 (2017).

Huang, J., Lu, H., Lopez Meyer, P., Cordourier, H. & Del Hoyo Ontiveros, J. Acoustic scene classification using deep learning-based ensemble averaging. In Proc. Detection and Classification of Acoustic Scenes and Events 2019 Workshop 94–98 (New York Univ., 2019); https://doi.org/10.33682/8rd2-g787

Kumar, A., Khadkevich, M. & Fugen, C. Knowledge transfer from weakly labeled audio using convolutional neural network for sound events and scenes. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing 326–330 (IEEE, 2018).

Selvaraju, R. R. et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. In Proc. IEEE International Conference on Computer Vision 618–626 (IEEE, 2017).

Siegel, R. L. et al. Colorectal cancer statistics, 2020. CA: Cancer J. Clin. 70, 145–164 (2020).

Ferlay, J. et al. Estimating the global cancer incidence and mortality in 2018: GLOBOCAN sources and methods. Int. J. Cancer 144, 1941–1953 (2019).

Burmeister, B. H., Dickie, G., Smithers, B. M., Hodge, R. & Morton, K. Thirty-four patients with carcinoma of the cervical esophagus treated with chemoradiation therapy. JAMA Otolaryngol. Head Neck Surg. 126, 205–208 (2000).

Takebayashi, K. et al. Comparison of curative surgery and definitive chemoradiotherapy as initial treatment for patients with cervical esophageal cancer. Dis. Esophagus 30, 1–5 (2017).

Luo, Z. et al. Hierarchical Harris hawks optimization for epileptic seizure classification. Comput. Biol. Med. 145, 105397 (2022).

Jin, W., Dong, S., Dong, C. & Ye, X. Hybrid ensemble model for differential diagnosis between COVID-19 and common viral pneumonia by chest X-ray radiograph. Comput. Biol. Med. 131, 104252 (2021).

Meltzner, G. S. et al. Silent speech recognition as an alternative communication device for persons with laryngectomy. IEEE/ACM Trans. Audio Speech Lang. Process. 25, 2386–2398 (2017).

Gonzalez, T. F. Handbook of Approximation Algorithms and Metaheuristics (Chapman and Hall/CRC, 2007); https://doi.org/10.1201/9781420010749

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. In Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2818–2826 (IEEE, 2016).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

Yang, Q., Jin, W. & Zhang, Q. A COLLECTION OF SAMPLE CODES of ‘Mixed-Modality Speech Recognition and Interaction Using a Single-Device as Wearable Artificial Throat’ (v.3) (Zenodo, 2022); https://doi.org/10.5281/zenodo.7396184

Kang, D. et al. Ultrasensitive mechanical crack-based sensor inspired by the spider sensory system. Nature 516, 222–226 (2014).

Park, B. et al. Dramatically enhanced mechanosensitivity and signal-to-noise ratio of nanoscale crack-based sensors: effect of crack depth. Adv. Mater. 28, 8130–8137 (2016).

Yang, T., Wang, W., Huang, Y., Jiang, X. & Zhao, X. Accurate monitoring of small strain for timbre recognition via ductile fragmentation of functionalized graphene multilayers. ACS Appl. Mater. Interfaces 12, 57352–57361 (2020).

Jin, M. L. et al. An ultrasensitive, visco-poroelastic artificial mechanotransducer skin inspired by piezo2 protein in mammalian Merkel cells. Adv. Mater. 29, 1–9 (2017).

Lee, J. H. et al. Highly sensitive stretchable transparent piezoelectric nanogenerators. Energy Environ. Sci. 6, 169–175 (2013).

Lang, C., Fang, J., Shao, H., Ding, X. & Lin, T. High-sensitivity acoustic sensors from nanofibre webs. Nat. Commun. 7, 1–7 (2016).

Qiu, L. et al. Ultrafast dynamic piezoresistive response of graphene-based cellular elastomers. Adv. Mater. 28, 194–200 (2016).

Jin, Y. et al. Deep‐learning‐enabled MXene‐based artificial throat: toward sound detection and speech recognition. Adv. Mater. Technol. 5, 2000262 (2020).

Deng, C. et al. Ultrasensitive and highly stretchable multifunctional strain sensors with timbre-recognition ability based on vertical graphene. Adv. Funct. Mater. 29, 1–11 (2019).

Ravenscroft, D. et al. Machine learning methods for automatic silent speech recognition using a wearable graphene strain gauge sensor. Sensors 22, 299 (2021).

Liu, Y. et al. Epidermal mechano-acoustic sensing electronics for cardiovascular diagnostics and human-machine interfaces. Sci. Adv. 2, e1101185 (2016).

Yang, J. et al. Eardrum-inspired active sensors for self-powered cardiovascular system characterization and throat-attached anti-interference voice recognition. Adv. Mater. 27, 1316–1326 (2015).

Fan, X. et al. Ultrathin, rollable, paper-based triboelectric nanogenerator for acoustic energy harvesting and self-powered sound recording. ACS Nano 9, 4236–4243 (2015).

Liu, H. et al. An epidermal sEMG tattoo-like patch as a new human–machine interface for patients with loss of voice. Microsyst. Nanoeng. 6, 1–13 (2020).

Yatani, K. & Truong, K. N. BodyScope: a wearable acoustic sensor for activity recognition. In Proc. 2012 ACM Conference on Ubiquitous Computing—UbiComp’12 341 (ACM, 2012); https://doi.org/10.1145/2370216.2370269

Kapur, A., Kapur, S. & Maes, P. AlterEgo: a personalized wearable silent speech interface. In IUI '18: 23rd International Conference on Intelligent User Interfaces 43–53 (ACM, 2018); https://doi.org/10.1145/3172944.3172977

Acknowledgements

This work was supported in part by: the National Natural Science Foundation of China under grant nos. 62022047 and 61874065 (to H.T.), and U20A20168 and 51861145202 (to T.R.); the National Key R&D Program (grant nos. 2022YFB3204100, 2021YFC3002200 and 2020YFA0709800); JIAOT (grant no. KF202204); the STI 2030—Major Projects (grant no. 2022ZD0209200 to H.T.); the Fok Ying-Tong Education Foundation (grant no. 171051 to H.T.); the Beijing Natural Science Foundation (grant no. M22020 to H.T.); the Beijing National Research Center for Information Science and Technology Youth Innovation Fund (grant no. BNR2021RC01007 to H.T.); the State Key Laboratory of New Ceramic and Fine Processing Tsinghua University (grant no. KF202109 to H.T.); the Tsinghua–Foshan Innovation Special Fund (TFISF) (grant no. 2021THFS0217 to H.T.); the Research Fund from Beijing Innovation Center for Future Chips (T.R.); the Center for Flexible Electronics Technology, Daikin–Tsinghua Union Program of ‘The Etching Rates of Different Gases’; and the Independent Research Program of Tsinghua University (grant no. 20193080047 to H.T.). This work is also supported by the Opening Project of the Key Laboratory of Microelectronic Devices and Integrated Technology, Institute of Microelectronics, Chinese Academy of Sciences (H.T.); the Tsinghua–Toyota Joint Research Fund (H.T.); and the Guoqiang Institute, Tsinghua University (T.R.).

Author information

Contributions

Q.Y., W.J. and H.T. conceived and designed the experiments. Q.S., W.J. and Q.H. contributed to sensor fabrication and dataset collection. Q.Y., Q.H., W.Y., G.Z. and X.S. performed data characterizations. G.Z. simulated the strain distribution of the honeycomb structure. W.J. performed the classification task using AI methods. Q.S. and W.J. analysed the data and wrote the paper. T.R., H.T., Y.Y. and Q.L. supervised the project. All authors discussed the results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Masafumi Nishimura and Huijun Ding for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Note 1, Figs. 1–19 and Tables 1–9.

Demonstration of the speech perception interference and recognition experiments.

The recognized daily short sentences of a laryngectomy patient generated by the graphene AT.

Source data

Source Data Fig. 2

Statistical Source Data.

Source Data Fig. 3

Statistical Source Data.

Source Data Fig. 4

Statistical Source Data.

Source Data Fig. 5

Statistical Source Data.

Source Data Fig. 6

Statistical Source Data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, Q., Jin, W., Zhang, Q. et al. Mixed-modality speech recognition and interaction using a wearable artificial throat. Nat Mach Intell 5, 169–180 (2023). https://doi.org/10.1038/s42256-023-00616-6

DOI: https://doi.org/10.1038/s42256-023-00616-6

This article is cited by

- Speaking without vocal folds using a machine-learning-assisted wearable sensing-actuation system. Nature Communications (2024)

- Ultrasensitive textile strain sensors redefine wearable silent speech interfaces with high machine learning efficiency. npj Flexible Electronics (2024)

- A fully integrated, standalone stretchable device platform with in-sensor adaptive machine learning for rehabilitation. Nature Communications (2023)

- Artificial intelligence-powered electronic skin. Nature Machine Intelligence (2023)

- Scalable and eco-friendly flexible loudspeakers for distributed human-machine interactions. npj Flexible Electronics (2023)