Abstract

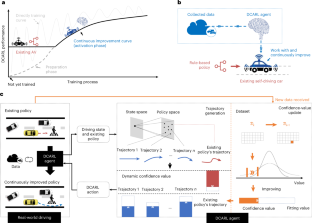

Today’s self-driving vehicles have achieved impressive driving capabilities, but their performance in long-tail cases remains uncertain. Training a reinforcement-learning-based self-driving algorithm with more data does not always lead to better performance, which is a safety concern. Here we present a dynamic confidence-aware reinforcement learning (DCARL) technology for guaranteed continuous improvement, meaning that more training always improves or at least maintains the current performance. The technique improves performance using the data collected during driving and does not need a lengthy pre-training phase. We evaluate the proposed technology both in simulation and on an experimental vehicle. The results show that the DCARL method enables continuous improvement in various cases while matching or outperforming the default self-driving policy at every stage. The technology was demonstrated and evaluated on the vehicle at the 2022 Beijing Winter Olympic Games.
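The idea of never falling below a default policy can be illustrated with a small confidence gate. The sketch below is not the DCARL algorithm itself; it is a minimal illustration under stated assumptions: the learned action is used only when a bootstrap lower confidence bound on its observed returns is at least the value assigned to the default policy, and the default action is used otherwise. The function names, the percentile bootstrap and the 5% level are all hypothetical choices made for this example.

```python
import random
from statistics import mean


def bootstrap_lower_bound(returns, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile-bootstrap lower confidence bound on the mean of `returns`.

    Illustrative helper (not from the paper): resample the observed returns
    with replacement and take the alpha-quantile of the resampled means.
    """
    if not returns:
        # No data collected yet, so there is no confidence in this action.
        return float("-inf")
    rng = random.Random(seed)
    n = len(returns)
    resampled_means = sorted(
        mean(rng.choices(returns, k=n)) for _ in range(n_resamples)
    )
    return resampled_means[int(alpha * n_resamples)]


def choose_action(rl_action, rl_returns, baseline_action, baseline_value):
    """Pick the learned action only if its lower bound reaches the baseline value.

    Hypothetical gate: with little or noisy data the lower bound is low,
    so the default (baseline) action is kept.
    """
    if bootstrap_lower_bound(rl_returns) >= baseline_value:
        return rl_action
    return baseline_action
```

With no data for the learned action, `choose_action("lane_change", [], "keep_lane", 0.0)` returns `"keep_lane"`. As more returns are collected the lower bound tightens, so the learned action can take over only once the evidence supports it.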


Data availability

The Supplementary Software file contains the minimum data to run and render the results for all three experiments. These data are also available in a public repository at https://github.com/zhcao92/DCARL (ref. 37).

Code availability

The source code of the self-driving experiments is available at https://github.com/zhcao92/DCARL (ref. 38). It contains the proposed DCARL planning algorithms as well as the perception, localization and control algorithms used in our self-driving cars.

References

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction (MIT Press, 2018).

Silver, D. et al. A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 362, 1140–1144 (2018).

Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015).

Ye, F., Zhang, S., Wang, P. & Chan, C.-Y. A survey of deep reinforcement learning algorithms for motion planning and control of autonomous vehicles. In 2021 IEEE Intelligent Vehicles Symposium (IV) 1073–1080 (IEEE, 2021).

Zhu, Z. & Zhao, H. A survey of deep RL and IL for autonomous driving policy learning. IEEE Trans. Intell. Transp. Syst. 23, 14043–14065 (2022).

Aradi, S. Survey of deep reinforcement learning for motion planning of autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 23, 740–759 (2022).

Cao, Z. et al. Highway exiting planner for automated vehicles using reinforcement learning. IEEE Trans. Intell. Transp. Syst. 22, 990–1000 (2020).

Stilgoe, J. Self-driving cars will take a while to get right. Nat. Mach. Intell. 1, 202–203 (2019).

Kalra, N. & Paddock, S. M. Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transp. Res. Part A 94, 182–193 (2016).

Disengagement reports. California DMV https://www.dmv.ca.gov/portal/vehicle-industry-services/autonomous-vehicles/disengagement-reports/ (2021).

Li, G. et al. Decision making of autonomous vehicles in lane change scenarios: deep reinforcement learning approaches with risk awareness. Transp. Res. Part C 134, 103452 (2022).

Shu, H., Liu, T., Mu, X. & Cao, D. Driving tasks transfer using deep reinforcement learning for decision-making of autonomous vehicles in unsignalized intersection. IEEE Trans. Veh. Technol. 71, 41–52 (2021).

Pek, C., Manzinger, S., Koschi, M. & Althoff, M. Using online verification to prevent autonomous vehicles from causing accidents. Nat. Mach. Intell. 2, 518–528 (2020).

Xu, S., Peng, H., Lu, P., Zhu, M. & Tang, Y. Design and experiments of safeguard protected preview lane keeping control for autonomous vehicles. IEEE Access 8, 29944–29953 (2020).

Yang, J., Zhang, J., Xi, M., Lei, Y. & Sun, Y. A deep reinforcement learning algorithm suitable for autonomous vehicles: double bootstrapped soft-actor-critic-discrete. IEEE Trans. Cogn. Dev. Syst. https://doi.org/10.1109/TCDS.2021.3092715 (2021).

Schwall, M., Daniel, T., Victor, T., Favaro, F. & Hohnhold, H. Waymo public road safety performance data. Preprint at arXiv https://doi.org/10.48550/arXiv.2011.00038 (2020).

Fan, H. et al. Baidu Apollo EM motion planner. Preprint at arXiv https://doi.org/10.48550/arXiv.1807.08048 (2018).

Kato, S. et al. Autoware on board: enabling autonomous vehicles with embedded systems. In 2018 ACM/IEEE 9th International Conference on Cyber-Physical Systems 287–296 (IEEE, 2018).

Cao, Z., Xu, S., Peng, H., Yang, D. & Zidek, R. Confidence-aware reinforcement learning for self-driving cars. IEEE Trans. Intell. Transp. Syst. 23, 7419–7430 (2022).

Thomas, P. S. et al. Preventing undesirable behavior of intelligent machines. Science 366, 999–1004 (2019).

Levine, S., Kumar, A., Tucker, G. & Fu, J. Offline reinforcement learning: tutorial, review, and perspectives on open problems. Preprint at arXiv https://doi.org/10.48550/arXiv.2005.01643 (2020).

García, J. & Fernández, F. A comprehensive survey on safe reinforcement learning. J. Mach. Learn. Res. 16, 1437–1480 (2015).

Achiam, J., Held, D., Tamar, A. & Abbeel, P. Constrained policy optimization. In International Conference on Machine Learning 22–31 (JMLR, 2017).

Berkenkamp, F., Turchetta, M., Schoellig, A. & Krause, A. Safe model-based reinforcement learning with stability guarantees. Adv. Neural Inf. Process. Syst. 30, 908–919 (2017).

Ghadirzadeh, A., Maki, A., Kragic, D. & Björkman, M. Deep predictive policy training using reinforcement learning. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems 2351–2358 (IEEE, 2017).

Abbeel, P. & Ng, A. Y. Apprenticeship learning via inverse reinforcement learning. In Proc. Twenty-first International Conference on Machine Learning, 1 (Association for Computing Machinery, 2004).

Abbeel, P. & Ng, A. Y. Exploration and apprenticeship learning in reinforcement learning. In Proc. 22nd International Conference on Machine Learning 1–8 (Association for Computing Machinery, 2005).

Ross, S., Gordon, G. & Bagnell, D. A reduction of imitation learning and structured prediction to no-regret online learning. In Gordon, G., Dunson, D. & Dudík, M. (eds) Proc. Fourteenth International Conference on Artificial Intelligence and Statistics, 627–635 (JMLR, 2011).

Zhang, J. & Cho, K. Query-efficient imitation learning for end-to-end autonomous driving. In Thirty-First AAAI Conference on Artificial Intelligence (AAAI), 2891–2897 (AAAI Press, 2017).

Bicer, Y., Alizadeh, A., Ure, N. K., Erdogan, A. & Kizilirmak, O. Sample efficient interactive end-to-end deep learning for self-driving cars with selective multi-class safe dataset aggregation. In 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems 2629–2634 (IEEE, 2019).

Alshiekh, M. et al. Safe reinforcement learning via shielding. In Proc. Thirty-Second AAAI Conference on Artificial Intelligence Vol. 32, 2669–2678 (AAAI Press, 2018).

Brun, W., Keren, G., Kirkeboen, G. & Montgomery, H. Perspectives on Thinking, Judging, and Decision Making (Universitetsforlaget, 2011).

Dabney, W. et al. A distributional code for value in dopamine-based reinforcement learning. Nature 577, 671–675 (2020).

Cao, Z. et al. A geometry-driven car-following distance estimation algorithm robust to road slopes. Transp. Res. Part C 102, 274–288 (2019).

Xu, S. et al. System and experiments of model-driven motion planning and control for autonomous vehicles. IEEE Trans. Syst. Man. Cybern. Syst. 52, 5975–5988 (2022).

Cao, Z. Codes and data for dynamic confidence-aware reinforcement learning. DCARL. Zenodo https://zenodo.org/badge/latestdoi/578512035 (2022).

Kochenderfer, M. J. Decision Making Under Uncertainty: Theory and Application (MIT Press, 2015).

Ivanovic, B. et al. Heterogeneous-agent trajectory forecasting incorporating class uncertainty. In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 12196–12203 (IEEE, 2022).

Yang, Y., Zha, K., Chen, Y., Wang, H. & Katabi, D. Delving into deep imbalanced regression. In International Conference on Machine Learning 11842–11851 (PMLR, 2021).

Efron, B. & Tibshirani, R. J. An Introduction to the Bootstrap (CRC Press, 1994).

Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A. & Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the 1st Annual Conference on Robot Learning, 1–16 (PMLR, 2017).

Acknowledgements

This work is supported by the National Natural Science Foundation of China (NSFC) (U1864203 (D.Y.), 52102460 (Z.C.), 61903220 (K.J.)), China Postdoctoral Science Foundation (2021M701883 (Z.C.)) and Beijing Municipal Science and Technology Commission (Z221100008122011 (D.Y.)). It is also funded by the Tsinghua University-Toyota Joint Center (D.Y.).

Author information

Contributions

Z.C., S.X., D.Y. and H.P. developed the performance improvement technique, which can outperform the existing self-driving policy. Z.C. and W.Z. developed the continuous improvement technique using the worst confidence value. Z.C., S.X. and W.Z. designed the whole self-driving platform in the real world. Z.C., K.J. and D.Y. designed and conducted the experiments and collected the data.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Ali Alizadeh and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Essential supplementary description of the proposed technology, detailed settings and results of the experiments, and descriptions of the data file and vehicles.

Supplementary Video 1

Evaluation results on a running self-driving vehicle.

Supplementary Video 2

Continuous performance improvement using confidence value.

Supplementary Video 3

Comparison with a classical value-based RL agent.

Supplementary Software

Software to run and render the results of experiments 1 to 3.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cao, Z., Jiang, K., Zhou, W. et al. Continuous improvement of self-driving cars using dynamic confidence-aware reinforcement learning. Nat Mach Intell 5, 145–158 (2023). https://doi.org/10.1038/s42256-023-00610-y

DOI: https://doi.org/10.1038/s42256-023-00610-y
