Abstract

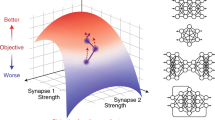

Much of recent machine learning has focused on deep learning, in which neural network weights are trained through variants of stochastic gradient descent. An alternative approach comes from the field of neuroevolution, which harnesses evolutionary algorithms to optimize neural networks, inspired by the fact that natural brains themselves are the products of an evolutionary process. Neuroevolution enables important capabilities that are typically unavailable to gradient-based approaches, including learning neural network building blocks (for example, activation functions), hyperparameters, architectures and even the algorithms for learning themselves. Neuroevolution also differs from deep learning (and deep reinforcement learning) by maintaining a population of solutions during search, enabling extreme exploration and massive parallelization. Finally, because neuroevolution research has (until recently) progressed largely in isolation from gradient-based neural network research, it has produced many unique and effective techniques that should carry over to other areas of machine learning. This Review looks at several key aspects of modern neuroevolution, including large-scale computing, the benefits of novelty and diversity, the power of indirect encoding, and the field’s contributions to meta-learning and architecture search. Our hope is to inspire renewed interest in the field as it meets the potential of the increasing computation available today, to highlight how many of its ideas can provide an exciting resource for inspiration and hybridization to the deep learning, deep reinforcement learning and machine learning communities, and to explain how neuroevolution could prove to be a critical tool in the long-term pursuit of artificial general intelligence.
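To make the core loop concrete, here is a minimal sketch of neuroevolution in Python: a population of candidate networks is scored by a fitness function, the best survive, and mutated copies of them replace the rest, with no gradients involved. Everything in it is an illustrative assumption rather than a method from this Review: the toy XOR task, the fixed 2-2-1 network whose nine weights are stored directly in a flat genome (a direct encoding, unlike the indirect encodings mentioned in the abstract), the mutation-only genetic algorithm with truncation selection and elitism, and all names and hyperparameters (`evolve`, `fitness`, `pop_size`, `n_elite`, `sigma`) are hypothetical.

```python
import math
import random
from typing import List, Tuple

# Toy task (an assumption for illustration): the XOR truth table as (inputs, target).
XOR_DATA: List[Tuple[Tuple[float, float], float]] = [
    ((0.0, 0.0), 0.0),
    ((0.0, 1.0), 1.0),
    ((1.0, 0.0), 1.0),
    ((1.0, 1.0), 0.0),
]

# 2x2 input->hidden weights + 2 hidden biases + 2 hidden->output weights + 1 output bias.
N_PARAMS = 9


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(genome: List[float], x: Tuple[float, float]) -> float:
    """Decode a flat genome into a fixed 2-2-1 network and run it (direct encoding)."""
    w = genome
    h1 = math.tanh(w[0] * x[0] + w[1] * x[1] + w[2])
    h2 = math.tanh(w[3] * x[0] + w[4] * x[1] + w[5])
    return sigmoid(w[6] * h1 + w[7] * h2 + w[8])


def fitness(genome: List[float]) -> float:
    # Higher is better: negative mean squared error over the XOR table.
    err = sum((forward(genome, x) - y) ** 2 for x, y in XOR_DATA)
    return -err / len(XOR_DATA)


def evolve(pop_size: int = 50, n_elite: int = 10, generations: int = 200,
           sigma: float = 0.3, seed: int = 0) -> Tuple[List[float], List[float]]:
    """Hypothetical mutation-only GA with truncation selection and elitism.

    Returns the best genome found and the best fitness at the start of each generation.
    """
    rng = random.Random(seed)
    population = [[rng.gauss(0.0, 1.0) for _ in range(N_PARAMS)] for _ in range(pop_size)]
    history: List[float] = []
    for _ in range(generations):
        # Fitness evaluations are independent of one another, so this step
        # could be distributed across many workers.
        ranked = sorted(population, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        # Truncation selection: only the top n_elite genomes may reproduce.
        elites = ranked[:n_elite]
        children = []
        for _ in range(pop_size - n_elite):
            parent = rng.choice(elites)
            # Gaussian mutation of every weight; no crossover in this sketch.
            children.append([g + rng.gauss(0.0, sigma) for g in parent])
        # Elitism: elites survive unchanged, so the best fitness never decreases.
        population = elites + children
    best = max(population, key=fitness)
    return best, history


if __name__ == "__main__":
    best, history = evolve()
    print("best fitness:", fitness(best))
    for x, y in XOR_DATA:
        print(x, "target", y, "output", round(forward(best, x), 3))
```

Because each call to `fitness` is independent of the others, the evaluation step is where the parallelism described in the abstract would come from, and keeping many candidates alive at once, rather than following a single gradient, is what lets the search explore. A practical system would add components this sketch omits, such as crossover, explicit diversity preservation or an evolution strategy update.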

Ethics declarations

Competing interests

The authors declare no competing interests.

About this article

Cite this article

Stanley, K.O., Clune, J., Lehman, J. et al. Designing neural networks through neuroevolution. Nat Mach Intell 1, 24–35 (2019). https://doi.org/10.1038/s42256-018-0006-z

DOI: https://doi.org/10.1038/s42256-018-0006-z