Abstract

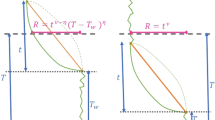

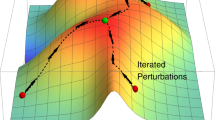

Complex behaviour emerges from interactions between objects produced by different generating mechanisms. Yet decoding their causal origins from observations remains one of the most fundamental challenges in science. Here we introduce a universal, unsupervised and parameter-free model-oriented approach, based on the seminal concept and first principles of algorithmic probability, to decompose an observation into its most likely algorithmic generative models. Our approach uses a perturbation-based causal calculus to infer model representations. We demonstrate its ability to deconvolve interacting mechanisms regardless of whether the resultant objects are bit strings, space–time evolution diagrams, images or networks. Although this is mostly a conceptual contribution and an algorithmic framework, we also provide numerical evidence evaluating the ability of our methods to extract models from data produced by discrete dynamical systems such as cellular automata and complex networks. We think that these separating techniques can contribute to tackling the challenge of causation, thus complementing statistically oriented approaches.
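The perturbation idea in the abstract can be illustrated with a toy sketch. This is not the authors' method: it swaps in zlib-compressed length as a crude, computable stand-in for an algorithmic-probability-based complexity estimate, and the function names (`complexity`, `perturbation_profile`, `group_blocks`), the block size and the mean-based grouping rule are all assumptions made for illustration. Each block of a string is deleted in turn, the change in estimated complexity is recorded, and blocks are grouped by how much they contribute.

```python
import random
import zlib


def complexity(s: str) -> int:
    """Compressed length in bytes: a crude stand-in for algorithmic complexity (assumption)."""
    return len(zlib.compress(s.encode("ascii"), 9))


def perturbation_profile(s: str, block: int) -> list[int]:
    """For each block, the drop in estimated complexity when that block is deleted."""
    base = complexity(s)
    return [
        base - complexity(s[:start] + s[start + block:])
        for start in range(0, len(s), block)
    ]


def group_blocks(profile: list[int]) -> list[int]:
    """Label a block 1 if its contribution exceeds the mean contribution, else 0."""
    mean = sum(profile) / len(profile)
    return [1 if c > mean else 0 for c in profile]


# Toy observation: a periodic segment followed by a pseudo-random segment,
# standing in for two different generating mechanisms.
rng = random.Random(0)
regular = "01" * 256
noisy = "".join(rng.choice("01") for _ in range(512))

profile = perturbation_profile(regular + noisy, block=64)
labels = group_blocks(profile)
print(profile)
print(labels)
```

Under these assumptions, deleting a block from the periodic half should change the compressed length very little, while deleting a block from the pseudo-random half should remove several bytes, so the two halves tend to receive different labels. A general-purpose compressor is a weak proxy for algorithmic probability, so the sketch only conveys the shape of the perturbation analysis.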

Data availability

The data that support the plots within this paper are available from the corresponding author upon request.

Acknowledgements

H.Z. was supported by Swedish Research Council (Vetenskapsrådet) grant number 2015-05299. J.T. was supported by the King Abdullah University of Science and Technology.

Author information

Contributions

H.Z., N.A.K. and J.T. conceived and designed the algorithms. H.Z. designed the experiments and carried out the calculations and numerical experiments. A.A.Z. and H.Z. conceived the online tool to illustrate the method applied to simple examples based on this paper. All authors contributed to the writing of the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Methods, Figures and References.

About this article

Cite this article

Zenil, H., Kiani, N.A., Zea, A.A. et al. Causal deconvolution by algorithmic generative models. Nat Mach Intell 1, 58–66 (2019). https://doi.org/10.1038/s42256-018-0005-0

DOI: https://doi.org/10.1038/s42256-018-0005-0
