Abstract

Bits manipulation in traditional memory writing is commonly done through quasi-static operations. While simple to model, this method is known to reduce memory capacity. We demonstrate how a reinforcement learning agent can exploit the dynamical response of a simple multi-bit mechanical system to restore its memory. To do so, we introduce a model framework consisting of a chain of bi-stable springs manipulated on one end by the external action of the agent. We show that the agent learns how to reach all available states for three springs, even though some states are not reachable through adiabatic manipulation, and that training is significantly improved using transfer learning techniques. Interestingly, the agent also points to an optimal system design by taking advantage of the underlying physics. Indeed, the control time exhibits a non-monotonic dependence on the internal dissipation, reaching a minimum at a cross-over shown to verify a mechanically motivated scaling relation.

Similar content being viewed by others

Introduction

At first sight, memory seems like a fragile property of carefully crafted devices. However, upon closer inspection, various forms of information retention are present in a wide array of disordered systems1. Surprisingly, while they are governed by a very rugged and complex energy landscape, different disordered systems display similar history-dependent dynamical2,3,4,5 and static6,7 responses. These observations led to a recent surge of interest in the Preisach model of hysteresis8,9. The Preisach model views hysteresis cycles as two-state systems, an embryonic form of non-volatile memory. Note that other forms of memory with a different underlying structure exist10,11. Combining these bistable elements in larger structures generates multi-stable systems with a memory capacity scaling exponentially with the number of elements. The simplicity of the model makes it applicable in many areas of physics including photonic devices12, glassy13, plastic14,15 and granular16,17 systems, spin ice18, cellular automata19 or even crumpled sheets20 and origami bellows21,22. More importantly, the Preisach model captures some remarkable features of complex real-life systems, such as return point memory16,17,23,24,25. Furthermore, the model allows for information to be written and read from the underlying system, making the internal memory mechanism adequate to store data. For this purpose, however, the Preisach model presents a major limitation: it stays grounded in the quasi-static framework of adiabatic transformations. As a result, the specific characteristics of each hysteresis cycle26 or the addition of internal coupling20,22,27 can considerably shrink the set of reachable stable states and effectively reduce the memory capacity of the system. In this paper, we show that a controller, in the form of a reinforcement learning agent, can go beyond this limitation and take advantage of the dynamics to reach all stable states, including adiabatically inaccessible states, effectively restoring the memory of the system to its full capacity. We base our study a chain of bi-stable springs, a usual model framework22,28, with three coupled units and internal dissipation controlled by a force applied on the last mass. Positional actuation would result in non-local interactions, which are known to significantly alter the memory structure of the system29. After successfully training the agent on a specified set of physical parameters, we demonstrate that transfer learning30,31 accelerates the training on different parameters and extends the region of parameter space that leads to learning convergence. Finally, we investigate the change of the dynamical protocol proposed by the trained control process for a single transition between two states as a function of the dissipation’s amplitude. The transition duration presents a minimum for a critical value of the dissipation that appears to verify a physically motivated scaling relation, pointing to the fact that the agent learns how to harness the physics of the system to its advantage.

Results

Model for a chain of bi-stable springs

We consider a one-dimensional multi-stable mechanical system composed of n identical masses m connected by bi-stable springs in series, as shown in Fig. 1a). For each spring i, we set a reference length li such that the deformation ui of the spring reads

where xi(t) is the position of the i-th mass at time t. The tunable bi-stability of each spring is achieved through a generic quartic potential. We thus obtain the cubic force equations

with k > 0 is the stiffness of the spring, \({\delta }_{i}^{(0)}\, > \, 0\) and \({\delta }_{i}^{(1)}\, > \, 0\) correspond to meta stable configurations 0 and 1, and ui = 0 corresponds to an unstable equilibrium. Other works on similar systems also considered trilinear forms14,28. Still, our choice generates a smooth mechanical response and keeps its amplitude moderate for low deformation. This behavior plays a crucial role in the scaling analysis that we will present later. The first spring of the chain is attached to a fixed wall, a condition that imposes x0 = 0, and we apply an external force Fe to the last mass. Finally, we consider that the system is bathed in an environmental fluid, resulting in a viscous force Ff,i being exerted on each mass such that

where the dot indicates the derivative with respect to time \(\dot{x}=\frac{{{{{{\rm{d}}}}}}x}{{{{{{\rm{d}}}}}}t}\) and η is a viscous coefficient.

a Schematics view of the model. Each unit, characterized by a color, corresponds to a bistable spring while each circle corresponds to a mass. The first unit is attached to a fixed wall and an external force Fe is applied to the last mass. b Deformation u of each unit under external load Fe (see “Methods” for more details on the springs' parameters). The switching fields \({F}_{i}^{\pm }\) are defined through Eq. (4). c Transition graph where the nodes represent the stable configurations and the arrows the quasi-statically achievable transitions between them. The states 001 and 101 are unreachable “Garden of Eden” states with this choice of disorder.

Let us first consider the case n = 1 where the system is a single bi-stable spring attached to a mass. The solutions for mechanical equilibrium, schematized for three different springs in Fig. 1b, displays two critical amplitudes F± such that

where u± are both solutions of \(\frac{{{{{{{{\rm{d}}}}}}}}F}{{{{{{{{\rm{d}}}}}}}}u}=0\). Note that F+ > 0 > F− since we defined both δ(0) and δ(1) to be positive. These two forces are essential to describe the stability of the system. Indeed, we observe two branches σ of stable configurations, that we call 0 (for u < 0) and 1 (for u > 0). Both branches present a solution for the mechanical equilibrium F(u) + Fe = 0 when Fe remains in the range [F−, F+]: the system has two stable states. However, as soon as Fe gets beyond this range, either the branch σ = 0 (for Fe > F+) or σ = 1 (for Fe < F−) vanishes.

These dynamical properties lead to an hysteresis cycle that can be described with a simple experiment. We start with the spring at rest with σ = 0, a configuration which corresponds to u = −δ(0), and slowly pull on its free end, increasing Fe while keeping mechanical equilibrium. As a response, the system slightly stretches and u increases. This continues until Fe = F+ where the branch σ = 0 disappears. At this point, if we continue to increase Fe, the system necessarily jumps to the branch σ = 1, with u > 0. If we now decrease Fe, u decreases accordingly until Fe = F− where the system has to jump back to the original branch σ = 0. If we only consider the stability branches, the described hysteresis cycle corresponds to a so-called hysteron, the basic block of the Preisach model8. In a Preisach model, a set of n independent hysterons is actuated through an external field. Each hysteron has two states, 0 and 1, and two switching fields, \({F}_{i}^{+}\) and \({F}_{i}^{-}\), that characterize when each hysteron σi switches between states. As long as the switching fields are unique and the hysterons are independent, i.e. the switching fields do not change with the state of the system, and the Preisach model is able to predict the possible transitions between the 2n configurations. Our system, n bi-stable spring/mass in series under quasi-static actuation, fulfills the local mechanical equilibrium and independence assumptions.

To get a better visual representation of the model’s predictions, the quasi-statically achievable transitions between states are modeled as the edges of a directed graph, where the nodes represent the stable configurations. The allowed transitions correspond to the switch of a single hysteron. The exact topology of the transition diagram exclusively depends on the relative values of the switching fields26. If the order of the positive and negative switching fields are opposite, i.e. \(0 < {F}_{i}^{+}\, < \, {F}_{j}^{+}\) and \(0 > {F}_{i}^{-}\, > \, {F}_{j}^{-}\) for all i < j, every state is reachable from any other state. Otherwise, there exists isolated configurations which can never be reached again once left. As is customary, we call these unreachable configurations “Garden of Eden” (GoE) states (see previous work by Jules et al.22 for a historical account). The number of GoE states depends on the permutation in the order of the switching fields26. In real systems, these permutations might come from defects in the conception of the bistable units with close switching fields, for instance. An illustration for the case n = 3 is given in Fig. 1c where \({F}_{1}^{-}\, < \, {F}_{2}^{-}\, < \, {F}_{3}^{-}\) and \({F}_{2}^{+}\, < \, {F}_{3}^{+}\, < \, {F}_{1}^{+}\). In this configuration, both 001 and 101 are GoE states. The existence of GoE states severely limits the total number of reachable states, and as a result, the amount of information that can be stored in the system.

A solution to overcome this limitation is to break the quasi-static assumption and to take advantage of the dynamics as a means to reach GoE configurations. However, this approach foregoes a major upside of the Preisach model, its simplicity. Indeed, applying Newton’s second law of motion to each mass yields a description of the dynamical evolution for the system through a system of n coupled non-linear differential equations

While providing a clear experimental protocol to make the system change configuration is straightforward in the quasi-static regime, the non-linear response of the springs and the coupling between the equations make a similar analysis a hefty challenge in the dynamical case. In particular, other methods of dynamical control like linear quadratic regulators do not manage to properly handle the transitions to GoE state for all physical parameters and initial conditions (see Supplementary Note 1). In the following, we demonstrate how the use of artificial neural networks and reinforcement learning unlocks this feat.

Reinforcement learning to control the dynamics

Reinforcement Learning (RL) is a computational paradigm that consists in optimizing, through trial-and-error, the actions of an agent that interacts with an environment. RL has been shown to be an effective method to control multistable systems with nonlinear dynamics32,33,34,35. In RL, the optimization aims to maximize the cumulative reward associated with the accomplishment of a given task. At each step t, the environment is described by an observable state st. The agent uses this information, in combination with a policy μ, to decide the action at to be taken, i.e. μ(st) = at. This action brings the environment to a new state st+1, and grants the agent with a reward rt quantifying its success with respect to the final objective. An episode ends when that goal is reached or, if not, after a finite time. The goal of training is to learn a policy that maximizes the agent’s cumulative reward over an episode. When the control space is continuous, one can resort to policy gradient based methods such as REINFORCE36. Modern versions of such method are embodied by actor-critic architectures37, which are based on two Artificial Neural Networks (ANN) learning in tandem. One network, called the actor, generates a sensory-motor representation of the problem in the form of a mapping of its parameter space θ into the space of policies, such that μ = μθ. This setup limits the type of policy considered and makes the search for an optimal policy computationally tractable. The optimization of the actor necessitates an estimation of the expected reward at long time. This is the role of the second ANN, the critic, which learns to evaluate the decisions of the actor, and how it should adjust them. This is done through the same bootstrapping of the Bellman’s equation as that used in Q-learning38. The successive trials—resulting in multiple episodes—are stacked in a finite memory queue (FIFO), or replay buffer, and after each trial the ANN tandem is trained on that buffer, thus progressively improving their decision policy and the quality of the memory. After testing multiple RL algorithms (see Supplementary Note 2), we found that an architecture based on the Twin Delayed Deep Deterministic Policy Gradient (TD3)39 worked best for the task at hand.

In our system, the environment consists in the positions and velocities of the masses, an action is a choice for the values of the force applied to the last mass in the chain at time t, and the goal is to bring the system close to a given meta-stable memory state in a given time \({t}_{\max }\)—close enough that it cannot switch states if let free to evolve. At the start of an episode, the environment is randomly initialized, and a random target state is set. The information provided as an input to the networks includes the position x and velocity \(\dot{x}\) of all the masses in addition to the one-hot encoded target configuration. Then the policy decides on the force Fe applied to reach the next step. Fe is taken from a predefined interval \([-{F}_{\max },{F}_{\max }]\). Here, we set \({F}_{\max }\) to 1 N. With this external force, the dynamical evolution of the system for a single time step is simulated by solving the differential equations (6) numerically with a Runge-Kutta method of order 4. The reward from this action is computed relative to the newly reached state: we give a penalty (rt < 0) with an amplitude proportional to the velocity of the masses and to the distance of the masses from their target rest positions (see Eq. (12)). After every step, the replay buffer is updated with the corresponding data. The critic is optimized with a batch of data every step, while the actor is optimized every two steps. The episode stops if the system is sufficiently close to rest in the correct configuration, in which case a large positive reward (rt ≫ 1) is granted, or after \({t}_{\max }\). Then a new episode is started, and the algorithm is repeated for a predefined number of episodes. More details on the learning protocol are available in Methods.

Using the described method, we trained our ANNs on a chain of three bi-stable springs specifically designed to display GoE states, as detailed in “Methods” and illustrated in Fig. 1c. Interestingly, the networks achieve a 100% success rate at reaching any target state—including the GoE states—in less than 10,000 episodes, as shown in Fig. 2a. This approach remains successful even if we only keep the first two decimals of the environment states, a promising behavior for real-life applications, but fails if only the positions or velocities of the masses are observed (see Supplementary Note 3). We thus accomplished our initial objective and designed a reliable method that produces protocols for the transitions to any stable configurations, restoring the memory of the device to its full capacity.

The training is done on the model presented in Fig. 1 with different values of the viscous coefficient. a Evolution of the success rate over a sliding span of 100 episodes during training. For the blue, green, and orange curves, the artificial neural network (ANN) was initialized randomly. For the purple curve, the ANN was initialized using the weights of a previously model trained with η = 4 kg s−1. b Learning time against the viscous coefficient with and without transfer learning.

In order to evaluate the robustness of the decision making process of the policy and gain insights on the mechanisms involved, we study how the physical parameters of the system influence the designed solutions. We chose to focus on a quantity that deeply affects the dynamics of the masses and has simple qualitative interpretation: the viscous coefficient η. To observe its effect on the designed policy, we trained agents with random initialization of weights for a range of η while keeping all other physical parameters fixed. The learning time, defined as the number of episodes before the success rate reaches 80% during training, is shown in Fig. 2b as a function of η. Even though the algorithm manages to learn the transitions for a wide range of η, the learning time varies significantly. Notably, the learning time gets longer for very low viscous coefficients (here η < 0.1 kg s−1) but also seems to diverge at very high η.

Due to the continuous nature of the system, we expect minor modifications of the physical parameters to not catastrophically change the dynamics. With this assumption, we employed Transfer Learning (TL) techniques30,31,40 to accelerate the learning phase. We initialized the ANNs with the weights of ANNs already trained on a similar physical system instead of random weights. The expectation is that some physical principles learned during training remain applicable for solving the new problem. Thus, the transfer of weights initializes the networks closer to a good solution. Please note this assumption might not hold if the change of parameters leads to the appearance of a significantly different solution40, for instance in the presence of bifurcations. In our system, TL results in quicker learning. In Fig. 2b, we compare the learning time, defined as the number of episodes it takes for the success rate to reach 0.8 for the first time, for networks initialized with and without TL. For networks trained with TL, we slowly increased η from 2 up to 10 kg s−1 and decreased it from 2 to 0 kg s−1, transferring the learned weights at each increment. TL effectively divides by up to 30 the learning time for very high viscosity and allows to reach otherwise non-converging regions. We also noticed that smaller increments of the parameters lead to faster learning (see Supplementary Note 4). Consequently, we can use finer discretizations to explore the physical parameters while keeping computation time reasonable.

By mixing RL and TL, we generated an algorithm that quickly produces precise transition protocols to any stable state for chains of bi-stable springs, including GoE states. In the next section, we analyze the properties of the force signals produced by the ANN and investigate how they relate to the dynamics of the system as the viscous coefficient η is varied.

How damping affects the control strategy

The intensity of the damping impacts the dynamical response of the system, which significantly affects the actuation protocol proposed by the ANN. To study these variations, we selected a unique transition (111 → 001), recorded the signal of the force generated by the agent, and computed the corresponding mechanical energy injected into the system at each time step for different values of η, as shown in Fig. 3. Please note that the state 001 is a GoE state. We observe that the relation between η and the time it takes to reach the target state is non-monotonic. We identify the minimum episode duration, corresponding to the most efficient actuation, with a critical viscous coefficient η = ηc. Interestingly, this minimum also marks the transition between two qualitatively different behaviors of the control force: a high-viscosity regime (η > ηc) and a low-viscosity regime (η < ηc) as shown in Fig. 3. In the high-viscosity regime, Fe always saturates its limit value, and only changes sign a few times per episode. Remarkably, each sign change occurs roughly when a mass is placed at the correct position. In contrast, the force signal in the low-viscosity regime appears less structured, with large fluctuations between consecutive steps.

The figure corresponds to the transition 111→001 for different values of the viscous coefficient. a Force signal and b injected energy during the transition. The injected energy is computed by multiplying the chainʼs elongation with the value of the external force. c Force signal and d deformation of each bi-stable spring during the transition for η = 0 kg s−1 (low-viscosity regime). The colors blue, orange, and green correspond to the deformation of the first, second, and third spring, respectively. The subplots for the deformations all have the same height with edge values [−0.07, 0.19] meter. The dashed lines represent the stable equilibria δ(0) (black) and δ(1) (red). e Force signal and f deformation of each bi-stable spring during the transition for η = 9 kg s−1 (high-viscosity regime). The subplots for the deformations all have the same height with edge values [−0.14, 0.24] meter.

In order to qualitatively explain these different behaviors, we focus our analysis on the energy transfer between the external controller and the system. The starting and the final states are stable configurations at rest. Consequently, they both correspond to local energy minima and the agent has to provide mechanical energy to the system in an effort to overcome the energy barriers between these configurations. After crossing the barriers, the surplus of kinetic energy has to be removed to slow down the masses and trap them in the well associated with the targeted minimum. In the low-viscosity regime, the internal energy dissipated due to viscosity is small. As a result, the protocols require phases where the agent is actively draining energy from the system. After a short initial phase of a few steps, where much energy is introduced into the system by setting the external load to its maximal value, a substantial fraction of the remainder of the episode involves careful adjustments to remove the kinetic energy. We expect this precise control of the system’s internal energy to be the underlying reason behind the increase of the learning time at low η. In the high-viscosity regime on the other hand, the viscosity is able to dissipate the extra energy without further intervention. However, it also slows the dynamic, effectively translating into increased episode duration.

While we established the characteristics of the designed protocols in both regimes, we have yet to define a quantitative estimation of the crossover between regimes.

Drawing inspiration from these protocols, we consider a simplified situation where the external force saturates the constraint \({F}_{e}(t)={F}_{\max }\), and where the switching fields are very small, i.e. \({F}_{\max }\gg | {F}_{i}^{\pm }| ,\quad i=1,2,3\).

Since all the masses start in a stable equilibrium, the mechanical response of the chain to the external load is very soft, at least for small enough displacements at the start of the episode.

Thus, we can approximates the dynamics of the last mass by the ordinary differential equation

which solves into

with a relaxation time \(\tau =\frac{m}{\eta }\) and a saturation velocity \({v}_{\max }=\frac{{F}_{\max }}{\eta }\). The relaxation time τ corresponds to the time it takes for dissipation to take over inertia. This transition is also associated to a length scale Lη such that

Let us now define more precisely what we mean by small displacement. With our assumptions, and due to the asymptotic shape of the potential, the typical relative distance Le for which the mechanical response becomes of the same magnitude as the external load verifies

It is thus clear that if Lη ≪ Le, the system will be dominated by dissipation and converge to equilibrium without further oscillations. On the other hand, if Lη ≫ Le, neighboring masses will rapidly feel differential forces and inertia will dominate. Interestingly, equating these two length scales allows to point to a critical dissipation at the frontier of these two regimes

To test this prediction, we investigate how the damping crossover ηc observed in designed policies varies as the masses m and the maximum force \({F}_{\max }\) are varied, exploring more than two orders of magnitude for both parameters. As shown in Fig. 4, the results present an excellent agreement with the proposed scaling argument [11].

Discussions

We have shown a proof of concept of general memory writing operations in a strongly non-linear system of coupled bi-stable springs by a reinforcement learning agent. In particular, this technique generates transition protocols that dynamically reach otherwise unreachable memory states. Since the system is deterministic, these protocols can be directly implemented with open loop controller for any state to state transition. Furthermore, we kept the same training algorithm for any physical configuration, even in regimes where the dynamics become chaotic at very low damping (see Supplementary Note 5). The agent appears to learn how to harness the physics underlying the behavior of the system: its control strategy changes qualitatively as the viscous coefficient is varied, from a relatively simple actuation in the large dissipation regime to a jerky dynamical behavior aimed at extracting the excess energy in the small dissipation regime, two significantly different modes of control. This transition coincides with a change in the system’s internal response, from an over-damped to an inertial response. In that sense, the networks were able to gather and share solutions to the challenges it was asked to tackle, which helped the authors to gain some insightful knowledge about the physics of the memory system, though it is unclear at this point whether this result is specific to this controller. Key stakes of future works include upscaling and devising a method to read the internal state of the system in a non-destructive way from sensing its response, two clearly challenging tasks. For the former, a method would be to identify the cognitive structures established by the agent to complete the learned tasks, i.e. by rationalizing its neural activity and learning dynamics, and use this knowledge to learn transitions for a higher number of coupled units. Indeed, while we managed to successfully train networks on a system of up to six springs, systems with more than four springs required a significant change in the architecture of the neural network (see Supplementary Note 6). Another promising path is to look into better optimization of the control signal. Here, we present how the ANNs found one method to reach GoE states. We expect further tweaking of the reward, for instance by penalizing longer episodes or higher energy transfer between the agent and the system, to lead to better control patterns. Finally, our study is anchored on the Preisach model, a generic model applicable to a large variety of physical systems. We believe the reinforcement learning method to reach GoE states remains relevant in many real-life situations. We leave the confirmation to future studies.

Methods

Physical parameters of the three springs system

The physical parameters for the three springs system that we consider in Figs. 1, 2 and 3 are detailed in Table 1. All length are in meters. This choice leads to a single permutation in the order of the switching fields and the presence of two GoE states.

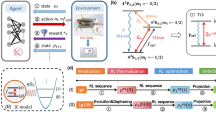

Learning protocol

We used a TD3 agent previously implemented41 combined with Gym environments42 to solve our control problem. We provide a schematic view of the learning protocol in Fig. 5 and we present more details about the TD3 algorithm and Gym environments in Supplementary Note 7. The precise architecture of both the policy (Actor) and the Q-functions (Critics) are summarized in Fig. 6 and Table 2. We start the training with random initial policy and Q-functions parameters. The weights and biases of each of layer are sampled from \(U(-\sqrt{l},\sqrt{l})\) where U is the uniform distribution and \(l=\frac{1}{{{{{{\rm{in}}}}}}\,{{\mbox{\_}}}{{{{{\rm{features}}}}}}{{\mbox{\_}}}\,l}\) , in_features_l being the size of the input of the layer.

The schematics details the input and the output associated with the actor and the critic. “Target" corresponds to the target configuration (001 for instance) and “DNN" corresponds to the neural networks detailed in Table 2.

The task of the agent involves reaching a stable configuration close to rest, starting from random initial conditions. At the beginning of an episode, a target state is randomly chosen and the initial positions and velocities are randomly sampled from the respective intervals [\({\delta }_{i}^{(0)}\) − 0.2, \({\delta }_{i}^{(1)}\) + 0.2] and [−0.1, 0.1]. For the first 10,000 steps, actions are sampled uniformly from [−\({F}_{\max }\), \({F}_{\max }\)] without concerting the policy or Q-functions. Once this exploratory phase is completed, the agent starts following the policy generated by the ANNs. At each time step t, the agent observes the current state of the system st (composed of the position, the velocity and the target state of each mass) and chooses a force Fe(t) in the interval [−\({F}_{\max }\), \({F}_{\max }\)] in consequence. The selected force, to which is added a noise taken from a Gaussian distribution of mean 0 and standard deviation 0.1, brings the system to a new state st+1 computed by Runge-Kutta method of order 4. The parameters controlling the added noise were taken directly fromthe original implementation41 without further optimizations. At each time step (constant Fe(t)), the RK4 method is done through 10 successive iterations for a total duration of 0.1 s. Each of those iterations changes the state of the system. Once the numerical resolution is completed, the agent receives a reward rt given by function (12)

where target is a variable equal to 0 or 1, \({u}_{i}^{(t+1)}\) and \({\dot{{x}_{i}}}^{(t+1)}\) are respectively the displacement and velocity of the ith mass at time t+1. The parameters of the environment are summarized in Table 3.

All the steps are stocked in the replay buffer, which possesses a finite maximal size of 1e6 steps. Each new step overwrites the oldest stored one when the buffer is full. This process allows for the continuous improvement of the available training dataset during training. The Q-functions and the policy are then updated. The Q-functions are updated at every step, while the policy is updated every two steps. Both the Q-functions and the policy are updated using the Adam algorithm43 with a learning rate of 0.001 and a batch size of 100 experiences randomly sampled from the Replay Buffer. The hyperparameters for optimization are summarized in Table 4. The operation goes on until either the agent reaches the goal, at which point it receives a reward of 50, or 200 steps are exceeded. At this stage, the environment is reset, giving place to a new episode. This algorithm is repeated for a predefined number of episodes.

Transfer learning

We employed TL when changing only a single physical parameter for all of the numerical experiments presented in this paper. For the initial exploration presented in Fig. 3, we started with \({F}_{\max }=1\) N, m = 1 kg and η = 2 kg s−1. Then, we slowly explore the space of η with TL as detailed in Results. We used these new trained networks as the starting point of another TL, this time either changing the mass m of the maximum external force \({F}_{\max }\). These led to the scaling of ηc with respect to these parameters presented in Fig. 4.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The code used to produce the results of this study is available online44.

References

Keim, N. C., Paulsen, J. D., Zeravcic, Z., Sastry, S. & Nagel, S. R. Memory formation in matter. Rev. Mod. Phys. 91, 035002 (2019).

Kovacs, A. Glass transition in amorphous polymers: a phenomenological study. Adv. Polym. Sci. 3, 394–507 (1963).

Strukov, D. B., Snider, G. S., Stewart, D. R. & Williams, R. S. The missing memristor found. Nature 453, 80–83 (2018).

Prados, A. & Trizac, E. Kovacs-like memory effect in driven granular gases. Phys. Rev. Lett. 112, 198001 (2014).

Jules, T., Lechenault, F. & Adda-Bedia, M. Plasticity and aging of folded elastic sheets. Phys. Rev. E 102, 033005 (2020).

Matan, K., Williams, R. B., Witten, T. A. & Nagel, S. R. Crumpling a thin sheet. Phys. Rev. Lett. 88, 076101 (2002).

Diani, J., Fayolle, B. & Gilormini, P. A review on the mullins effect. Eur. Polym. J. 45, 601–612 (2009).

Preisach, F. Über die magnetische Nachwirkung. Z. f.ür. Phys. 94, 277–302 (1935).

Mayergoyz, I. D. Mathematical models of hysteresis. Phys. Rev. Lett. 56, 1518–1521 (1986).

Abu-Mostafa, Y. & Jacques, J. S. Information capacity of the hopfield model. IEEE Trans. Inf. Theory 31, 461–464 (1985).

Deng, K., Zhu, S., Bao, G., Fu, J. & Zeng, Z. Multistability of dynamic memristor delayed cellular neural networks with application to associative memories. In IEEE Transactions on Neural Networks and Learning Systems 1–13 (2021).

Valagiannopoulos, C., Sarsen, A. & Alu, A. Angular memory of photonic metasurfaces. IEEE Trans. Antennas Propag. 69, 7720–7728 (2021).

Lindeman, C. W. & Nagel, S. R. Multiple memory formation in glassy landscapes. Sci. Adv. 7, eabg7133 (2021).

Puglisi, G. & Truskinovsky, L. A mechanism of transformational plasticity. Contin. Mech. Thermodyn. 14, 437–457 (2002).

Regev, I., Attia, I., Dahmen, K., Sastry, S. & Mungan, M. Topology of the energy landscape of sheared amorphous solids and the irreversibility transition. Phys. Rev. E 103, 062614 (2021).

Keim, N. C., Hass, J., Kroger, B. & Wieker, D. Global memory from local hysteresis in an amorphous solid. Phys. Rev. Res. 2, 012004 (2020).

Keim, N. C. & Paulsen, J. D. Multiperiodic orbits from interacting soft spots in cyclically sheared amorphous solids. Sci. Adv. 7, eabg7685 (2021).

Libál, A., Reichhardt, C. & Reichhardt, C. O. Hysteresis and return-point memory in colloidal artificial spin ice systems. Phys. Rev. E 86, 021406 (2012).

Goicoechea, J. & Ortín, J. Hysteresis and return-point memory in deterministic cellular automata. Phys. Rev. Lett. 72, 2203 (1994).

Bense, H. & van Hecke, M. Complex pathways and memory in compressed corrugated sheets. Proc. Natl Acad. Sci. USA https://www.pnas.org/doi/10.1073/pnas.2111436118 (2021).

Yasuda, H., Tachi, T., Lee, M. & Yang, J. Origami-based tunable truss structures for non-volatile mechanical memory operation. Nat. Commun. 8, 1–7 (2017).

Jules, T., Reid, A., Daniels, K. E., Mungan, M. & Lechenault, F. Delicate memory structure of origami switches. Phys. Rev. Res. 4, 013128 (2022).

Barker, J. A., Schreiber, D. E., Huthand, B. G. & Everett, D. H. Magnetic hysteresis and minor loops: models and experiments. Proc. R. Soc. Lond. A. Math. Phys. Sci. 386, 251–261 (1983).

Deutsch, J. M., Dhar, A. & Narayan, O. Return to return point memory. Phys. Rev. Lett. 92, 227203 (2004).

Mungan, M. & Terzi, M. M. The structure of state transition graphs in systems with return point memory: I. General theory. Ann. Henri Poincaré 20, 2819–2872 (2019).

Terzi, M. M. & Mungan, M. State transition graph of the preisach model and the role of return-point memory. Phys. Rev. E 102, 012122 (2020).

van Hecke, M. Profusion of transition pathways for interacting hysterons. Phys. Rev. E 104, 054608 (2021).

Puglisi, G. & Truskinovsky, L. Rate independent hysteresis in a bi-stable chain. J. Mech. Phys. Solids 50, 165–187 (2002).

Rogers, R. C. & Truskinovsky, L. Discretization and hysteresis. Phys. B: Condens. Matter 233, 370–375 (1997).

Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359 (2009).

Taylor, M. E. & Stone, P. Transfer learning for reinforcement learning domains: A survey. J. Mach. Learn. Res. https://www.jmlr.org/papers/v10/taylor09a.html (2009).

Gadaleta, S. & Dangelmayr, G. Optimal chaos control through reinforcement learning. Chaos: Interdiscip. J. Nonlinear Sci. 9, 775–788 (1999).

Gadaleta, S. & Dangelmayr, G. Learning to control a complex multistable system. Phys. Rev. E 63, 036217 (2001).

Wang, X.-S., Turner, J. D. & Mann, B. P. Constrained attractor selection using deep reinforcement learning. J. Vib. Control 27, 502–514 (2021).

Pisarchik, A. N. & Feudel, U. Control of multistability. Phys. Rep. 540, 167–218 (2014).

Williams, R. J. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Mach. Learn. 8, 229–256 (1992).

Konda, V. & Tsitsiklis, J. Actor-critic algorithms. In Advances in Neural Information Processing Systems https://papers.nips.cc/paper/1999/hash/6449f44a102fde848669bdd9eb6b76fa-Abstract.html (1999).

Grondman, I., Vaandrager, M., Busoniu, L., Babuska, R. & Schuitema, E. Efficient model learning methods for actor–critic control. IEEE Trans. Syst., Man, Cybern., Part B (Cybern.) 42, 591–602 (2011).

Fujimoto, S., Hoof, H. & Meger, D. Addressing function approximation error in actor-critic methods. In International Conference on Machine Learning 1587–1596 (PMLR, 2018).

Weiss, K., Khoshgoftaar, T. M. & Wang, D. A survey of transfer learning. J. Big Data 3, 9 (2016).

Fujita, Y., Nagarajan, P., Kataoka, T. & Ishikawa, T. Chainerrl: a deep reinforcement learning library. J. Mach. Learn. Res. 22, 1–14 (2021).

Brockman, G. et al. Openai gym. Preprint at https://arxiv.org/abs/1606.01540 (2016).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at https://arxiv.org/abs/1412.6980 (2014).

Michel, L., Jules, T. & Douin, A. laura042/Multistable_memory_system: v0. https://doi.org/10.5281/zenodo.6514157 (2022).

Acknowledgements

Research by T.J. was supported in part by the Raymond and Beverly Sackler Post-Doctoral Scholarship.

Author information

Authors and Affiliations

Contributions

T.J., F.L., and A.D. designed research; T.J., L.M., and A.D. wrote algorithms; L.M. produced and analyzed data; T.J. produced scaling analysis; T.J., L.M., and F.L. wrote the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jules, T., Michel, L., Douin, A. et al. When the dynamical writing of coupled memories with reinforcement learning meets physical bounds. Commun Phys 6, 25 (2023). https://doi.org/10.1038/s42005-023-01142-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42005-023-01142-y

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.