Abstract

High-performance fuel design is imperative for cleaner-burning, high-efficiency engine systems. We introduce a data-driven artificial intelligence (AI) framework to design liquid fuels with tailor-made properties for combustion engine applications, with the aim of improving efficiency and lowering carbon emissions. Fuel design is posed as a constrained optimization task integrating two parts: (i) a deep learning (DL) model that predicts the properties of pure components and mixtures and (ii) search algorithms that efficiently navigate the chemical space. Our approach represents the hidden vector of a mixture as a linear combination of the hidden vectors of its individual components and incorporates this operation into the network architecture as the mixing operator (MO). We demonstrate that the DL model predicts the properties of pure components with accuracy comparable to competing computational techniques, while the search tool can generate multiple candidate fuel mixtures. The integrated framework was evaluated by designing a high-octane, low-sooting fuel subject to gasoline specification constraints. This AI fuel design methodology enables rapid development of fuel formulations that optimize engine efficiency and lower emissions.


Introduction

The transport sector contributes approximately 25% of total global CO2 emissions, and >95% of transport energy originates from liquid hydrocarbon fuels1, primarily used to power combustion engines. There is a pressing need to lower transport-sector greenhouse gas and criteria pollutant emissions by developing more efficient powertrain technology and low-carbon fuel formulations.

Engines’ environmental performance can be improved significantly by optimizing the fuel’s ignition quality and sooting propensity. Engine knock, governed by the fuel’s autoignition resistance, limits the ability of a spark-ignition engine to operate at its highest-efficiency point. The research octane number (RON) and motor octane number (MON), measured in cooperative fuel research engines under operating conditions specified by ASTM standards2,3, are commonly used to assess a fuel’s knocking behavior. A fuel’s sooting propensity is related to an engine’s particulate matter emissions. A high-sooting fuel typically reduces engine efficiency through higher particulate filter backpressure4 and the more frequent filter regenerations5 needed to meet emission regulations. Various metrics have been proposed to characterize the chemical propensity of a fuel to form soot, including the smoke point, threshold sooting index6, oxygen extended sooting index7, and fuel equivalent sooting index8. An alternative, the Yield Sooting Index (YSI)9, is based on measurement of the maximum soot volume fraction and offers the advantage of more precise measurements for aromatics. Formulating fuels with high knock resistance and low sooting propensity could aid the transition to cleaner engines and fuels.

The traditional approach to fuel design is empirical and tedious, comprising (i) identifying a potential blendstock, (ii) characterizing the candidate’s combustion-related properties using experiments and simulations, and (iii) extensive research to understand how the candidate’s molecular structure affects the properties of the base fuel10. The challenges of this empirical approach reinforce the need for data-driven materials discovery, a need shared across application areas including clean energy, the aerospace industry, and drug discovery11. Inverse fuel design is intrinsically distinct from the conventional approach: rather than exhaustively characterizing properties starting from structures, the target properties are selected beforehand, and new fuel candidates that match these requirements are identified. In the inverse mode, the main driver for innovation is inverting the mapping from structural information to properties.

The inverse fuel design problem is typically posed as a constrained optimization task in which a mixture is composed from a set of pure components in a chemical space to match the target properties. The corresponding workflow comprises two main parts: (1) accurate and rapid evaluation of chemical properties and (2) a robust and scalable search method to navigate the chemical space and identify potential candidate mixtures. The integrity of this two-step design process can be ensured if the tool offers a continuous and differentiable representation of the chemical space for various species, since such a representation allows direct optimization of properties using gradient-based methods. This is where machine learning (ML) algorithms, such as deep learning (DL)-based models, have a substantial advantage over other methods for inverse fuel design12.

DL has been successfully applied in cheminformatics and materials science to tasks such as computing molecular properties, accurately predicting molecular interactions, and de novo generation of new molecules13,14. In the context of inverse design, generative models have been reported as a promising tool for de novo molecule design using the simplified molecular-input line-entry system (SMILES) representation and recurrent neural networks (RNNs)12, a deep neural network architecture that models sequential data. Several studies have used ML to screen multiple combustion-related properties simultaneously at the molecular level15,16. These models apply to a wide variety of hydrocarbon fuels, but they cannot be extended beyond pure molecules to complex fuel mixtures. Screening mixtures instead of pure species is necessary to discover novel combinations that improve fuel performance.

Because practical liquid fuels involve hundreds of species, predicting mixture properties remains one of the key bottlenecks for inverse fuel design. Algebraic mixing rules have been proposed for iso-octane, n-heptane, and toluene mixtures17; however, such an approach cannot estimate the properties of complex blends, e.g., those containing oxygenates18,19,20,21,22,23,24,25. Previously developed techniques for mixture screening mostly feature feed-forward networks with configurations unsuitable for the inverse design mode26,27,28,29. Recent advancements in DL relevant to inverse fuel design are analyzed in Supplementary Note 1.

For a predictive model to be suitable for mixture-level screening, its essential capability is an input representation applicable to both pure components and mixtures. Moreover, mixing rules must be built into the algorithm’s learning process so that the model learns how interactions between molecules correlate with the specific property.

Data-driven fuel design framework

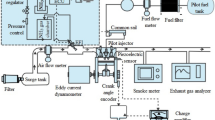

This work introduces a simple but elegant data-driven framework to inversely design fuels that satisfy desired target properties. In particular, the AI fuel design tool is built on top of an end-to-end DL model based on recurrent and fully connected (FC) layers that predicts three combustion properties of pure components and blends, namely, RON, MON, and YSI. Figure 1 shows a schematic of the entire network architecture. The curated database on which the model was trained contains single species from 19 molecular classes (with oxygenates accounting for >20% of the pure species dataset), surrogate fuels mostly containing 2-10 pure components, and complex mixtures, including gasoline.

We propose a linear mixing operator (MO) embedded in the training loop. This algorithmic advance directly connects molecular and mixture representations and thus enables fuel screening at the mixture level. Specifically, the MO linearly combines the latent vectors of pure components, weighted by their respective compositions, to obtain the latent representation of a mixture.

The intuition behind the MO is similar to the concept of embeddings in a latent space, commonly used in natural language processing. One such example is word2vec, an efficient technique for learning distributed vector representations of words that capture precise syntactic and semantic word relationships30. Analogously, in our case a single word represents a single species, and mixtures correspond to phrases, which are weighted combinations of words in the hidden space. In addition to the MO, we propose two robust and scalable search algorithms to navigate a well-defined chemical space and design fuels as mixtures satisfying constraints and target properties. The schematic diagram for the inverse fuel design workflow is illustrated in Supplementary Fig. S1.

The evaluation demonstrates that the joint-property predictive model achieves sufficiently high prediction accuracy while allowing latent representations to be extracted for pure species and blends. We evaluate the fuel design framework on two tasks: forward property prediction and inverse fuel design. First, we demonstrate the model’s performance on the test set and compare it with multiple baselines. Then, the formulated mixtures are analyzed to assess the capability of the proposed search approaches.

Results and discussion

Performance on the test set

We report the proposed model’s performance in terms of the coefficient of determination R2 and the mean absolute error (MAE). Figure 2 shows the parity plots for the independent test set, where the model reaches R2 > 0.92 for all three target properties. Additionally, Table S1 in the Supplementary Material reports R2 and MAE separately for the two input types, i.e., single species and blends.

For octane number (ON) predictions, the model achieves higher accuracy on mixtures, which are better represented in the database than single hydrocarbons. For the sooting index, the model shows good generalization in estimating the YSI of pure components measured on different numerical scales. Moreover, the model sustains its performance for YSI predictions of mixtures, even though these are the least represented data type in the database.

Comparison with competing techniques

We compared the predictive model’s performance with (1) three data-driven models developed for predicting the RON, MON, and YSI of pure components and (2) the linear mixing rule for predicting the ON of mixtures. The first baseline is an end-to-end learning model based on a graph neural network (GNN)15 developed to simultaneously predict the derived cetane number (DCN), RON, and MON of single species. For a fairer analysis, the comparison was performed on fifteen individual components from our test set that were excluded from the baseline model’s training set. Table 1 reports the resulting head-to-head analysis, in which the proposed model outperforms the baseline with a substantially lower MAE on RON predictions. Table 1 also compares the models’ performance on their respective full test sets. The proposed model shows a lower R2 for RON than the baseline model, and both models show similar R2 on MON data. However, the MAE on RON predictions is similar for both models, whereas the MAE of the proposed model’s MON predictions is slightly lower than that of the GNN model. In summary, both models demonstrate satisfactory performance, and the head-to-head analysis confirms the current model’s ability to predict the ON of single species accurately.

To assess the accuracy of ON predictions for blends, Table 2 compares the proposed model with the linear-by-mole mixing rule. MAEs were calculated for the ON predictions of 69 mixtures of varying sizes in the independent test set. The algebraic mixing rule is a naive model compared with data-driven models; nevertheless, this analysis provides a perspective on the generalization capability of the proposed model’s MO. The proposed model outperforms the naive baseline for blends across the range of sizes. Moreover, the model’s RON MAE decreases with mixture size, whereas no such trend is evident for the naive baseline.
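For reference, the linear-by-mole baseline in Table 2 fits in a few lines. The sketch below is a minimal illustration, assuming the rule is ON_blend = Σ x_i ON_i with mole fractions x_i; the function names and the blend values are hypothetical, not data from the study.

```python
def linear_by_mole_on(mole_fractions, pure_on, tol=1e-9):
    """Naive baseline: blend ON = sum_i x_i * ON_i, with mole fractions x_i."""
    if abs(sum(mole_fractions) - 1.0) > tol:
        raise ValueError("mole fractions must sum to 1")
    return sum(x * on for x, on in zip(mole_fractions, pure_on))


def mean_absolute_error(y_true, y_pred):
    """MAE over paired observations (the metric used to score blends)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical binary blend: component ONs 100 and 0, mole fractions 0.8 / 0.2.
estimate = linear_by_mole_on([0.8, 0.2], [100.0, 0.0])
print(estimate)  # 80.0
# 82.0 is a hypothetical "measured" value for the same blend.
print(mean_absolute_error([82.0], [estimate]))  # 2.0
```

Because the rule ignores molecular interactions entirely, any systematic nonlinearity in blending (for example, from oxygenates) shows up directly as baseline error.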

Finally, Table 3 compares the proposed model’s YSI predictions with two baseline models. The artificial neural network (ANN) model31 was trained to fit the YSI of pure hydrocarbons measured on different numerical scales. The proposed model’s median absolute error (MedAE) on its test set (69 species) is similar to the baseline model’s MedAE on its own test set (56 species). The second baseline, a quantitative structure-activity relationship (QSAR) model32, was trained on low-soot-scale data. Its MedAE evaluated on 59 components is similar to the proposed model’s MedAE on the 43 test set components from the low soot scale. Table 3 also reports the MedAE on mixtures, which is slightly higher than that for single components; this can be explained by the scarcity of YSI measurements for blends in the training set. In summary, the proposed model reaches decent performance on YSI predictions across multiple numerical scales.

Analysis of obtained candidates

This section describes a post-screening analysis of the potential fuel candidates obtained from the proposed data-driven fuel design framework. Building on the MO and the properties of the resulting mixture latent representation, we introduce the second part of the fuel design framework, a search tool (described in Section ‘Exploring chemical space: Inverse fuel design’) to screen fuels at the mixture level. Since the latent space is a continuous and differentiable vector space, it allows direct gradient-based optimization of target properties. Based on this feature, two algorithms, full-scope search (see Section ‘Full-scope search’) and greedy search (see Section ‘“Greedy” search’), were proposed to formulate mixtures that have the desired target properties and satisfy linear constraints. The search was performed for the target properties RON = 95, MON = 85, and YSI = 60. Section ‘Constraints and targets’ describes the linear constraints, bounds, and details of the chemical space. The full-scope search yielded 20 mixtures with 5-26 components, whereas the greedy search generated 66 mixtures with 3-6 components. These 86 fuel candidates exhibited the predicted properties closest to the target RON, MON, and YSI values.

As a postprocessing step, the Reid vapor pressure (RVP) of the obtained candidates was estimated from their bubble point pressure at 37.8 °C using the universal quasichemical functional group activity coefficient (UNIFAC) model with the Huron-Vidal mixing rule and the Peng-Robinson equation of state, as implemented in the Phasepy library33; individual Antoine coefficients were taken from the Yaws handbook34. Five of the 86 mixtures exhibited RVP in the acceptable range (50 kPa ≤ RVP ≤ 100 kPa).
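The bubble-point step can be illustrated with a deliberately simplified sketch. It uses modified Raoult's law, P_bubble = Σ x_i γ_i P_i^sat(37.8 °C), with Antoine vapor pressures in the common form log10(P/mmHg) = A - B/(C + T/°C). This is a swapped-in ideal-solution approximation (γ_i = 1 unless supplied), not the UNIFAC/Huron-Vidal/Peng-Robinson model the study ran through Phasepy; the coefficients below are hypothetical, and the Antoine form and units are assumptions that must match whichever coefficient table is used.

```python
MMHG_TO_KPA = 0.133322  # 1 mmHg is about 0.133322 kPa


def antoine_psat_kpa(a, b, c, t_celsius):
    """Vapor pressure from log10(P / mmHg) = A - B / (C + T / degC), returned in kPa."""
    return MMHG_TO_KPA * 10.0 ** (a - b / (c + t_celsius))


def bubble_pressure_kpa(x, antoine, gammas=None, t_celsius=37.8):
    """Modified Raoult's law: P_bubble = sum_i x_i * gamma_i * Psat_i(T).

    gammas defaults to 1 (ideal solution). This simplification does NOT
    reproduce the UNIFAC / Huron-Vidal / Peng-Robinson model used in the study.
    """
    if gammas is None:
        gammas = [1.0] * len(x)
    return sum(xi * g * antoine_psat_kpa(*abc, t_celsius)
               for xi, g, abc in zip(x, gammas, antoine))


def in_rvp_window(p_kpa, lo=50.0, hi=100.0):
    """Screening window used in the text: 50 kPa <= RVP <= 100 kPa."""
    return lo <= p_kpa <= hi


# Hypothetical (A, B, C) coefficients for a volatile and a heavy component.
coeffs = [(6.9, 1100.0, 230.0), (7.0, 1500.0, 210.0)]
p = bubble_pressure_kpa([0.4, 0.6], coeffs)
print(round(p, 1), in_rvp_window(p))
```

For oxygenate-hydrocarbon blends the activity coefficients of alcohols are far from 1, which is exactly why an activity-coefficient model is needed in practice; the `gammas` argument is where such values would enter.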

Table 4 lists the five mixtures with their estimated lower heating value (LHV, Joback method), viscosity at 15 °C (Saldana data35 for pure components with the Grunberg-Nissan mixing rule), and density (PubChem data for pure components with a harmonic by-mass mixing rule36). Pie charts with the detailed composition of the five mixtures are provided in the Supplementary Material (Fig. S2).
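The two mixing rules named above are short formulas. The sketch below is minimal and assumes mole fractions for Grunberg-Nissan (with optional, hypothetical interaction parameters G_ij that default to zero) and mass fractions for the harmonic density rule; the blend values are illustrative only.

```python
import math


def grunberg_nissan_viscosity(x, eta, g=None):
    """ln(eta_mix) = sum_i x_i ln(eta_i) + sum_{i<j} x_i x_j G_ij, with mole fractions x.

    With all G_ij = 0 this reduces to a log-linear (Arrhenius-type) rule;
    g is a hypothetical n x n table of interaction parameters.
    """
    n = len(x)
    ln_eta = sum(xi * math.log(ei) for xi, ei in zip(x, eta))
    if g is not None:
        ln_eta += sum(x[i] * x[j] * g[i][j] for i in range(n) for j in range(i + 1, n))
    return math.exp(ln_eta)


def harmonic_mass_density(w, rho):
    """1 / rho_mix = sum_i w_i / rho_i, with mass fractions w_i (ideal volume additivity)."""
    return 1.0 / sum(wi / ri for wi, ri in zip(w, rho))


# Hypothetical two-component blend (viscosities in mPa s, densities in kg/m3).
print(grunberg_nissan_viscosity([0.5, 0.5], [1.0, 4.0]))   # about 2.0 (geometric mean)
print(harmonic_mass_density([0.5, 0.5], [700.0, 800.0]))  # about 746.67
```

The harmonic form follows from assuming that component volumes add, so specific volumes (1/ρ) mix linearly by mass.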

Blend 17-1 has a high density, which prevents its use as a drop-in gasoline. The viscosity of mixture 22-13 lies at the upper boundary of the gasoline range, indicating a larger Sauter mean droplet diameter than that of mixture 18-17.

For all mixtures, the Jaccard-Tanimoto similarity scores37 of component pairs (based on RDKit fingerprints) follow a log-normal distribution (provided in Supplementary Data 1), with 12–13% of component pairs exhibiting significant similarity (score > 0.5)38. In addition to multibranched paraffins (16–36% by liquid volume), all candidate mixtures contain C1-C4 alcohols (5–30%), C5-C8 cycloalkanes (3–35%), and C4-C8 alkenes (17–22%), which confer high octane sensitivity on the mixture. The significant olefin content of the blends calls for an assessment of autoxidation deposits and ozone formation potential. All blends except mixture 6-1 contain cycloalkenes (cyclohexene and ethylidenecyclopentane) and phenol ethers (anisole and phenetole). Both families include high-octane-sensitivity compounds that have been put forward as potential octane boosters39,40,41. Certain mixtures (18-17, 26-20) contain mainly C5-C6 alkyl esters, because lighter esters (such as methyl acetate) exhibit insufficient octane sensitivity. These esters should be compatible with fluorocarbon elastomers if used at low liquid fractions (<5%)42.
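The similarity statistic above can be sketched without any chemistry toolkit by representing each fingerprint as the set of its "on" bit indices. In RDKit, the same score is typically obtained with `Chem.RDKFingerprint` and `DataStructs.TanimotoSimilarity`; the dependency-free version below, with hypothetical bit sets, only illustrates the arithmetic and the > 0.5 threshold count.

```python
from itertools import combinations


def tanimoto(fp_a, fp_b):
    """Jaccard-Tanimoto score |A & B| / |A | B| for fingerprints given as sets of on-bit indices."""
    union = len(fp_a | fp_b)
    # Convention chosen here: two empty fingerprints score 0.
    return len(fp_a & fp_b) / union if union else 0.0


def fraction_similar_pairs(fingerprints, threshold=0.5):
    """Share of component pairs in one mixture whose similarity exceeds the threshold."""
    scores = [tanimoto(a, b) for a, b in combinations(fingerprints, 2)]
    return sum(s > threshold for s in scores) / len(scores)


# Hypothetical on-bit sets for three components of one mixture.
fps = [{1, 2, 3}, {1, 2, 3, 4}, {7, 8}]
print(tanimoto(fps[0], fps[1]))     # 0.75
print(fraction_similar_pairs(fps))  # 0.3333333333333333
```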

In summary, the obtained mixtures show that the proposed method can identify compounds previously recognized in the fuel science community43 and suggest unconventional gasoline components screened at the mixture level. Among the new candidate components, tetramethoxymethane, primarily produced for pharmaceutical applications, can be discarded because its environmental properties fall outside recommended limits44,45 (Supplementary Table S2). However, isopentyl acetate, a flavor enhancer not previously used in transport applications, appears to be a promising low-level component in gasoline blendstocks.

The component molecular weight distributions are nearly unimodal around 100 g/mol, except for mixtures 26-20 and 22-13, which show markedly multimodal distributions (Fig. 3). Such distributions should affect the gas-phase composition in the combustion chamber and the reactivity at the periphery of the gasoline spray46,47. Based on previous studies48, light alcohols should evaporate first, enhancing knock resistance through their high heat of vaporization. Alkenes and cycloalkanes would evaporate next, followed by C5-C6 esters and finally large alkanes and phenol ethers. Blend 26-20 has a greater fraction of heavy components (140–150 g/mol) than 22-13, implying likely higher unburnt hydrocarbon emissions at the exhaust.

Fig. 3 panels: (a) mixture 6-1 with predicted properties 95/85/60 (MSE = 0.00); (b) mixture 26-20: 94.7/85.4/59.8 (MSE = 0.09); (c) mixture 18-17: 95.4/85.1/59.6 (MSE = 0.09); (d) mixture 22-13: 94.9/85.3/59.6 (MSE = 0.07). Components are classified by species family: alcohols (blue), aromatics (red), esters (purple), olefins (orange), paraffins (white), cycloalkanes (grey), and ethers (green).

The analysis identified mixture 22-13 as the most promising candidate for a 95/85/60 gasoline blendstock. The availability of the individual components and the cold-weather performance of the mixture should be studied to fully characterize the drop-in potential of such a blendstock.

Conclusion

This work introduced a conceptually simple and fully data-driven framework to design fuel mixtures that match desired combustion-related properties, enabling high-octane mixtures with low engine-out soot emissions. The proposed workflow comprises a joint-property predictive model and a search approach. In the first step, a predictive network with RNN and FC layers was trained on a comprehensive database of RON, MON, and YSI measurements from the literature covering single species from 19 molecular classes, surrogate fuels, and complex mixtures. The key innovation of the network is the MO, a mechanism that generates the hidden representation of a mixture by linearly combining the hidden vectors of its pure components. The MO allows a mixture’s detailed composition and its species’ molecular information to be used directly as model input without preprocessing. An extensive comparison of the DL model with competing baselines for pure components across diverse modalities demonstrates that the proposed model consistently performs well on the unseen test set. Moreover, the proposed model achieves R2 values of 0.93–0.96 on mixtures for the three properties.

The properties of the MO, combined with the structure of the network’s directed computational graph, allowed the fuel design problem to be formulated and solved with standard optimization techniques. Here, full-scope and greedy search methods were proposed to identify suitable mixtures in the chemical space. The former generates mixtures with 5-26 components, whereas the latter formulates blends with fewer components. Using the proposed workflow, 86 gasoline candidates were identified for the targets RON = 95, MON = 85, and YSI = 60. After additional screening on RVP, density, viscosity, and LHV, one mixture containing 22 components was retained as the most promising candidate. In future work, we plan to extend the current database by curating other relevant properties that are essential criteria in fuel screening, such as RVP, viscosity, density, and LHV. Ultimately, comparing the measured properties of short-listed mixtures with predictions will allow us to further improve the tool’s accuracy and strengthen the current framework. Moreover, future versions of the workflow should screen out formulations based on component availability. We expect that this simple and practical framework will serve as a solid baseline and help future research on designing liquid energy carriers.

Methods

Data curation

The database of experimentally obtained measurements for the three combustion-related properties (RON, MON, and YSI) for single hydrocarbons and mixtures was curated from many literature sources. Table 5 summarizes the elements in the collected database, where the entire dataset is classified into three subpopulations: pure components, ≤ 10-component blends (mostly surrogates), and complex fuels with more than 10 components.

We extended the pure-component RON/MON database published by Schweidtmann et al.15 and the Yale University YSI database49,50 by adding measurements for oxygenated compounds51,52. The curated data include single species from 19 molecular classes with 365 RON, 333 MON, and 451 YSI observations. Notably, oxygenated compounds account for approximately 20% and 50% of the single-component ON and YSI databases, respectively.

For mixtures with 2-10 components, ON data were collected for 372 blends composed of 22 representative hydrocarbons from five molecular classes (n-alkanes, isoalkanes, alkenes, cycloalkanes, and aromatics) blended with five oxygenated hydrocarbons, namely four alcohols (methanol, ethanol, 2-propanol, and prenol) and MTBE19,27,53,54,55,56,57. Furthermore, detailed hydrocarbon analyses and ON measurements characterizing the ignition quality of 76 real fuels were extracted from the literature. These complex mixtures include 30 fuels for advanced combustion engines (FACEs) blended with ethanol58,59,60,61, Haltermann and Coryton gasoline fuels62, three FACE F + terpineol blends23, 36 reformulated blendstocks for oxygenate blending (RBOBs) blended with prenol or other C5 alcohols63, and five test gasoline fuels64.

YSI measurements for mixtures, the third property, are scarcely reported in the literature: only 40 sooting index measurements for mixtures with detailed compositions were found. These values were reported for diesel, gasoline, and jet fuel surrogates65,66,67 and for the Co-Optima test gasoline and its surrogates68. YSI quantification is based on measurement of the maximum soot volume fraction (Mi), which is directly proportional to the sooting propensity. Mi is measured on the centerline of a coflow methane/air nonpremixed flame doped with 400 ppm of the test fuel and converted to an apparatus-independent YSI using the following equation69:
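
\[ \mathrm{YSI}_i = \mathrm{YSI}_A + \left(\mathrm{YSI}_B - \mathrm{YSI}_A\right)\frac{M_i - M_A}{M_B - M_A} \tag{1} \]
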
where A and B are the two reference compounds. In analogy to octane rating, the numerical scale used to translate the measured quantity Mi into YSI is defined by the lower and upper endpoint species and the values assigned to them, YSIA and YSIB. Multiple numerical scales have been reported in the literature because the sooting propensities of different hydrocarbons are too different to capture accurately in a single experimental setup. Four scales were identified among the measurements in the curated YSI database, as summarized in Supplementary Table S3. Furthermore, different experimental techniques were used to measure Mi, including color-ratio pyrometry, light extinction measurement, and laser-induced incandescence. Supplementary Fig. S3 depicts the data distribution histograms for the ON and YSI databases.
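The conversion from a soot signal to YSI is a linear rescaling between the two reference compounds, YSI_i = YSI_A + (YSI_B - YSI_A)(M_i - M_A)/(M_B - M_A). A minimal sketch follows; the same function applies unchanged to predicted signals M_i, M_A, and M_B, and all numbers below are hypothetical rather than values from the curated database.

```python
def ysi_from_soot(m_i, m_a, m_b, ysi_a, ysi_b):
    """Map a soot signal M_i onto the YSI scale fixed by reference compounds A and B:
    YSI_i = YSI_A + (YSI_B - YSI_A) * (M_i - M_A) / (M_B - M_A).
    """
    if m_b == m_a:
        raise ValueError("reference compounds must give different soot signals")
    return ysi_a + (ysi_b - ysi_a) * (m_i - m_a) / (m_b - m_a)


# Hypothetical signals and scale endpoints.
print(ysi_from_soot(m_i=2.0, m_a=1.0, m_b=3.0, ysi_a=30.0, ysi_b=100.0))  # 65.0
```

By construction, a fuel whose signal equals that of a reference compound receives exactly that reference's assigned YSI, which is what makes values on different scales comparable once the endpoints are known.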

Train and test split

As shown in Table 5, the curated database contains values of three properties for pure components and mixtures; however, only 141 data points have all three measurements available, and the remaining 1018 observations have at least one missing property. A customized hierarchical stratified sampling scheme was used to split the dataset so that observations from all relevant subpopulations were included in both the training/validation and test sets. The entire population was divided into six nonoverlapping subsets based on the availability of specific properties; e.g., Subset 1 (Sub 1) contains observations with all three properties (RON, MON, and YSI). Within each subset, two nonoverlapping strata were defined: single species and mixture observations. Next, each subset was randomly split into 85% train/validation and 15% test sets using stratified sampling in the scikit-learn library70, ensuring that 15% of each stratum (pure species and mixtures) was randomly sampled into the test set. The train and test sets of all subsets were then merged, and Table S4 in the Supplementary Material reports the resulting datasets. The training and test datasets for pure components and mixtures are provided in Supplementary Data 2 and Supplementary Data 3.
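The split logic can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' code: each record is a hypothetical dict whose missing properties are absent or None, the availability pattern of (RON, MON, YSI) defines the subset, and pure/mixture defines the stratum. The study used scikit-learn's stratified sampling (for example, `sklearn.model_selection.train_test_split` with its `stratify` argument); the standard-library version below makes the per-cell 15% allocation explicit.

```python
import random
from collections import defaultdict

PROPS = ("RON", "MON", "YSI")


def hierarchical_stratified_split(records, test_frac=0.15, seed=0):
    """Return (train_idx, test_idx); every (availability subset, pure/mixture stratum)
    cell contributes round(test_frac * size) records to the test set."""
    rng = random.Random(seed)
    cells = defaultdict(list)
    for idx, rec in enumerate(records):
        subset = tuple(rec.get(p) is not None for p in PROPS)  # which properties are measured
        stratum = "mixture" if rec["is_mixture"] else "pure"
        cells[(subset, stratum)].append(idx)
    train, test = [], []
    for members in cells.values():
        rng.shuffle(members)
        n_test = round(test_frac * len(members))
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return sorted(train), sorted(test)


# Hypothetical toy data: 100 pure species with all three properties, 40 RON-only blends.
data = ([{"RON": 90, "MON": 85, "YSI": 40, "is_mixture": False}] * 100
        + [{"RON": 92, "is_mixture": True}] * 40)
train_idx, test_idx = hierarchical_stratified_split(data)
print(len(train_idx), len(test_idx))  # 119 21
```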

Predictive DL model

Molecular representation

As the molecular input to the predictive model, we used a one-dimensional text representation, SMILES strings, together with molecular descriptors calculated with the Mordred platform71. SMILES notation is based on a small and natural grammar, providing a rigorous structure notation derived from the principles of molecular graph theory72. SMILES strings are widely used to represent molecules in chemical information processing tasks, such as property prediction and inverse molecular design. Aromatic SMILES were obtained for 649 pure species using the Chemical Identifier Resolver developed by the National Cancer Institute73. Mordred is open-source molecular descriptor calculation software that generates more than 1800 2D and 3D descriptors. The generated SMILES strings were converted to binary matrices using one-hot encoding. As a data preprocessing step, the Mordred descriptors were normalized using a min-max scaler from the open-source ML library scikit-learn70. Specifically, the descriptors of pure components in the unseen test set were normalized with the scaling factors fitted on the descriptors of the training set species.
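The two preprocessing steps can be sketched with NumPy. The character-level tokenization (so two-letter atoms such as Cl would be split), the alphabet, and the padding length are assumptions for illustration. The key point carried over from the text is that scaling factors are fitted on training descriptors only and then reused for the test set, which is also how scikit-learn's `MinMaxScaler` behaves with `fit` followed by `transform`.

```python
import numpy as np


def one_hot_smiles(smiles, alphabet, max_len):
    """Character-level one-hot matrix of shape (max_len, len(alphabet)); padding rows stay zero."""
    index = {ch: i for i, ch in enumerate(alphabet)}
    mat = np.zeros((max_len, len(alphabet)), dtype=np.float32)
    for pos, ch in enumerate(smiles[:max_len]):
        mat[pos, index[ch]] = 1.0  # KeyError for characters outside the assumed alphabet
    return mat


def fit_min_max(train_desc):
    """Fit per-descriptor minimum and range on the TRAINING descriptors only."""
    train_desc = np.asarray(train_desc, dtype=float)
    lo = train_desc.min(axis=0)
    span = train_desc.max(axis=0) - lo
    span[span == 0] = 1.0  # constant descriptors: avoid division by zero
    return lo, span


def apply_min_max(desc, lo, span):
    """Scale any split with the training-set factors; test values may fall outside [0, 1]."""
    return (np.asarray(desc, dtype=float) - lo) / span


# Hypothetical alphabet and descriptor values.
x = one_hot_smiles("CCO", alphabet="CO=()c1", max_len=8)
lo, span = fit_min_max([[0.0, 10.0], [2.0, 10.0]])
print(x.shape, apply_min_max([[1.0, 10.0], [4.0, 12.0]], lo, span))
```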

Network architecture

The end-to-end DL model incorporates three smaller networks (Extractor 1, Extractor 2, and Predictor) and an MO (see Fig. 1). The proposed model structure is conceptually simple: Extractors 1 and 2 encode the molecular fingerprint, the MO generates mixture fingerprints via a linear operation, and the Predictor maps fingerprints to the target properties.

Extractor 1 and Extractor 2

To exploit the sequential nature of the text representation and model dependencies between the characters of a SMILES string, we used a recurrent neural network (RNN) architecture, the long short-term memory (LSTM) cell74. Compared with conventional feed-forward neural network architectures, LSTM networks include special units, called memory blocks, in their recurrent hidden layers.

The proposed Extractor 1 architecture includes three stacked LSTM layers with decreasing output feature dimensionality. This LSTM encoder extracts the most informative features from a SMILES string into a vector, referred to here as the ‘SMILES fingerprint’. Extractor 2 maps Mordred descriptors to a ‘Mordred fingerprint’. It includes three sequential FC layers with rectified linear unit (ReLU) activations, followed by a final FC layer with linear units. In the next layer, the two fingerprints are concatenated along the second dimension into a vector referred to as the latent (hidden) space representation of a pure component, denoted ai. The parameters of Extractors 1 and 2 are trained to transform the original molecular information into another representation, a vector with the features most relevant for predicting the joint properties.
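A minimal PyTorch sketch of the two extractors and the concatenation step follows. Layer widths, the 16/8 split between SMILES and Mordred fingerprints (chosen only so that m = 24, matching the reported latent size), and the use of the last LSTM time step as the fingerprint are all assumptions; the tuned values are in Supplementary Table S5.

```python
import torch
from torch import nn


class Extractor1(nn.Module):
    """Three stacked LSTM layers with shrinking hidden sizes; the last time step is the SMILES fingerprint."""

    def __init__(self, vocab_size, hidden_sizes=(64, 32, 16)):
        super().__init__()
        sizes = (vocab_size,) + tuple(hidden_sizes)
        self.lstms = nn.ModuleList(
            [nn.LSTM(sizes[i], sizes[i + 1], batch_first=True) for i in range(len(hidden_sizes))]
        )

    def forward(self, one_hot):  # one_hot: (batch, seq_len, vocab_size)
        h = one_hot
        for lstm in self.lstms:
            h, _ = lstm(h)  # (batch, seq_len, hidden)
        return h[:, -1, :]  # (batch, hidden_sizes[-1])


class Extractor2(nn.Module):
    """Three ReLU FC layers and a final linear FC layer: Mordred descriptors -> Mordred fingerprint."""

    def __init__(self, n_desc, widths=(256, 128, 64), out_dim=8):
        super().__init__()
        layers, d = [], n_desc
        for w in widths:
            layers += [nn.Linear(d, w), nn.ReLU()]
            d = w
        layers.append(nn.Linear(d, out_dim))  # linear output units
        self.net = nn.Sequential(*layers)

    def forward(self, desc):  # desc: (batch, n_desc)
        return self.net(desc)


def pure_latent(ext1, ext2, one_hot, desc):
    """Latent vector a_i: SMILES and Mordred fingerprints concatenated along dim 1."""
    return torch.cat([ext1(one_hot), ext2(desc)], dim=1)


ext1, ext2 = Extractor1(vocab_size=30), Extractor2(n_desc=1800)
a = pure_latent(ext1, ext2, torch.zeros(4, 40, 30), torch.zeros(4, 1800))
print(a.shape)  # torch.Size([4, 24])
```

Stacking separate `nn.LSTM` modules, rather than using one module with `num_layers=3`, is what allows each layer to have a different hidden size.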

Mixing operator (MO)

Another essential design consideration is defining a latent space representation for mixtures that can be used directly to predict the target properties of a given blend. Here, the hidden representation of a mixture is defined as a linear combination of the single-component vectors weighted by their respective compositions. This definition can be expressed as a matrix-vector multiplication performed in the MO:

\[ \boldsymbol{b} = A\boldsymbol{x} = \sum_{i=1}^{n} x_i\,\boldsymbol{a}_i \tag{2} \]

where m is the dimension of the latent space vector, \(A\in\mathbb{R}^{m\times n}\) is a matrix whose columns are the latent vectors \(\boldsymbol{a}_i\) of the n single species, \(\boldsymbol{x}\in\mathbb{R}^{n}\), with \(\sum_{i=1}^{n}x_i=1\) and \(x_i\ge 0\) for all \(i\in\{1,2,\dots,n\}\), is the vector of compositions of the n pure components, and \(\boldsymbol{b}\in\mathbb{R}^{m}\) is the resulting latent representation of the mixture.
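Since the MO is a single matrix-vector product, a few lines suffice to illustrate it. The sketch uses NumPy with toy latent vectors (hypothetical values); inside the trained network the same product would run on tensors so that gradients flow through it. A pure species (x = e_i) maps back to its own latent vector a_i, and because b is linear in x, the Jacobian ∂b/∂x is simply A, which is what makes gradient-based search over compositions straightforward.

```python
import numpy as np


def mixing_operator(A, x, tol=1e-8):
    """b = A @ x: composition-weighted sum of the pure-component latent vectors (columns of A)."""
    A = np.asarray(A, dtype=float)
    x = np.asarray(x, dtype=float)
    if A.shape[1] != x.shape[0]:
        raise ValueError("A must be m x n and x must have length n")
    if np.any(x < 0) or abs(x.sum() - 1.0) > tol:
        raise ValueError("compositions must be nonnegative and sum to 1")
    return A @ x


# Toy example with m = 2 latent dimensions and n = 2 species (hypothetical values).
A = np.array([[1.0, 0.0],
              [0.0, 2.0]])               # columns a_1 = (1, 0), a_2 = (0, 2)
print(mixing_operator(A, [0.25, 0.75]))  # [0.25 1.5 ]
print(mixing_operator(A, [1.0, 0.0]))    # a pure species maps to its own column: [1. 0.]
```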

Predictor

The latent vectors, generated from Extractors 1 and 2 for single species and the MO for mixtures, are further processed using the predictor network that maps fingerprints to the three combustion-related properties. The predictor network comprises three FC layers with rectified linear activation functions and a final linear layer.

The multiple numerical scales in the curated YSI database (Supplementary Table S3) pose an additional challenge for the joint-property predictive model. To enable the model to evaluate YSI on any given scale, we predict Mi, MA, and MB from the molecular structural information of the test fuel (i) and the two reference compounds (A and B). The predictions are then postprocessed to compute the test fuel’s YSI using Eq. (1). Hence, the model’s input includes the SMILES and Mordred descriptors of pure components, the compositions of mixtures, and the scale on which YSI is estimated, namely, the SMILES and Mordred descriptors of the lower and upper endpoint species (A, B) and their assigned YSI values.

Since the three output variables span significantly different ranges (Supplementary Fig. S3) and the error function (MSE loss) is scale-sensitive, a weighted loss function is used to train the model.
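The text does not specify the weights, and many curated observations lack at least one property, so the sketch below makes two explicit assumptions: missing targets are stored as NaN and masked out, and each property's mean squared error is scaled by a user-supplied weight (here, hypothetically, the inverse squared property scale). It is a PyTorch illustration, not the paper's loss.

```python
import torch


def weighted_masked_mse(pred, target, weights):
    """Per-property MSE over observed entries only, combined with per-property weights.

    pred, target: (batch, 3) tensors; missing targets are NaN (an assumption).
    weights: (3,) tensor of hypothetical per-property weights.
    """
    mask = ~torch.isnan(target)
    safe_target = torch.where(mask, target, torch.zeros_like(target))  # keep NaN out of the graph
    sq_err = (pred - safe_target) ** 2 * mask.float()
    per_prop = sq_err.sum(dim=0) / mask.sum(dim=0).clamp(min=1)
    return (weights * per_prop).sum()


pred = torch.tensor([[90.0, 85.0, 1.0], [95.0, 80.0, 2.0]])
target = torch.tensor([[92.0, float("nan"), 1.5], [95.0, 82.0, float("nan")]])
# Example weights: inverse squared property scale (an assumption, not the paper's choice).
weights = torch.tensor([1 / 100.0**2, 1 / 100.0**2, 1.0])
print(weighted_masked_mse(pred, target, weights))
```

Replacing NaN targets before subtraction matters: multiplying a NaN error by a zero mask would still produce NaN, and NaN gradients would corrupt every parameter update.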

The proposed model has numerous hyperparameters to tune, including the batch size (B), the learning rate (lr), and the architectures of the Predictor and of Extractors 1 and 2. The output sizes of the two extractors determine the dimension of the latent space vector (m) for pure components and mixtures. Hyperparameter tuning was performed with an adaptive experimentation platform based on Bayesian optimization (https://ax.dev/). The optimal hyperparameter settings were selected on the validation set, comprising 15% of the training set. After tuning, the optimal hidden vector dimension was 24 (i.e., m = 24); this and the other settings are reported in Supplementary Table S5.

Exploring chemical space: Inverse fuel design

Our primary objectives with the search tool are to design mixtures that

- match target RON, MON, and YSI,
- are subject to known physical constraints, e.g., gasoline specifications, and
- are of widely varying size, i.e., contain different numbers of blendstocks.

To meet these goals, we propose a full-scope search, a search procedure performed on the entire chemical space generated from the available database. During iterative testing, we observed that the full-scope search tends to find optimal solutions (mixtures) containing 5-26 single components. This may be caused by the high dimensionality of the search problem, since the chemical space in this study is spanned by 514 pure components. Unfortunately, sparsity in the output solution vector x cannot be enforced directly, because sparsity-enforcing penalties such as ℓ∞ or ℓ1 norms75 cannot be formulated as the vectorized linear function required in Eq. (3). To obtain fuels with fewer components, we propose a second search approach, the greedy search. This approach takes the solutions found by the full-scope search and reduces them in size to find mixtures with potentially fewer components, e.g., three to six pure species.

Full-scope search

Since DL is a form of feature learning based on nonlinear mappings, the resulting optimization problem is highly non-convex. We therefore first provide a set of k candidates that serve as starting points for the search. These are chosen as the points in the curated database (Table 5) closest to the vector in the latent space corresponding to the target properties, where the distance is defined as the MSE. The pseudocode for the candidate search is provided in Table 6.
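The sketch below shows this nearest-neighbour selection under two assumptions. First, each database entry carries a property vector (the `props` field name is hypothetical). Second, the distance is computed on those property values rather than on latent vectors, since the text leaves this detail open. Other fields, such as the entry's composition, simply travel with the entry and can seed the optimizer.

```python
def mse(a, b):
    """Mean squared error between two equal-length property vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)


def select_starting_points(database, target, k):
    """Return the k database entries whose property vectors are closest (by MSE) to target.

    database: list of dicts with a "props" tuple, e.g. (RON, MON, YSI); any other
    fields (such as a composition) are returned unchanged with the entry.
    """
    ranked = sorted(database, key=lambda entry: mse(entry["props"], target))
    return ranked[:k]


# Hypothetical database entries with (RON, MON, YSI) values.
database = [
    {"name": "blend-1", "props": (92.0, 84.0, 70.0)},
    {"name": "blend-2", "props": (96.0, 86.0, 58.0)},
    {"name": "blend-3", "props": (80.0, 75.0, 40.0)},
]
starts = select_starting_points(database, target=(95.0, 85.0, 60.0), k=2)
```

Here `starts` holds blend-2 (MSE 2) followed by blend-1 (MSE about 36.7). In practice the properties would likely be scaled first, because unscaled YSI differences can dominate the distance.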

We next describe the optimization workflow for the full-scope search, which is further documented in Algorithm 2 in Table 7. The objective is to find a set of optimal composition vectors, denoted by x⋆, which can be written as the following optimization problem
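The formulation below makes three assumptions for illustration: the trained predictor is written \(P(\cdot)\), the loss is a squared error, and the bounds on \(c(x)\) are named \(c_l\) and \(c_u\). Under those assumptions, a form of the problem consistent with the definitions in the next paragraph is:

\[
x^{\star} = \underset{x \in \mathbb{R}^{n}}{\arg\min}\; \bigl\lVert P(Ax) - y \bigr\rVert_2^2
\quad \text{subject to} \quad c_l \le c(x) \le c_u, \qquad x_l \le x \le x_u .
\]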

where c(x) is a vectorized linear function with lower and upper bounds; for example, the constraint \(\mathop{\sum }\nolimits_{i = 1}^{n}{x}_{i}=1\) is encoded here. xu and xl are the upper and lower bounds for the composition vector, respectively. Matrix A contains the latent representations of all pure components as columns; therefore, Ax is the mixture’s representation (see Eq. (2)). Vector y contains the target properties as entries. We solve this problem using the optimize subpackage of the open-source scientific Python library SciPy76. We call the optimizer k times, each time from a different starting point obtained with Algorithm 1 in Table 6, and output all solutions with a loss smaller than a given threshold ϵ.
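The sketch below is a multistart local solve with `scipy.optimize.minimize` using the SLSQP method. It assumes a squared-error loss, only the sum-to-one constraint plus box bounds, and a toy linear predictor `W @ h` standing in for the trained network. `full_scope_search`, `predict` and `predict_jac` are hypothetical names. The analytic gradient plays the role that automatic differentiation plays in the workflow described here.

```python
import numpy as np
from scipy.optimize import minimize


def full_scope_search(A, predict, predict_jac, y, starts, lo, hi, eps):
    """Multistart SLSQP for min ||predict(A x) - y||^2 s.t. sum(x) = 1, lo <= x <= hi.

    A: (m, n) matrix whose columns are latent vectors of the n pure components.
    predict / predict_jac: stand-ins for the trained predictor and its Jacobian in h = A x.
    Returns (loss, x) pairs with loss < eps, best first.
    """
    def loss(x):
        r = predict(A @ x) - y
        return float(r @ r)

    def loss_grad(x):
        h = A @ x
        r = predict(h) - y
        # Chain rule: d/dx ||P(Ax) - y||^2 = 2 A^T J_P(h)^T (P(h) - y)
        return 2.0 * A.T @ (predict_jac(h).T @ r)

    constraints = [{"type": "eq",
                    "fun": lambda x: np.sum(x) - 1.0,
                    "jac": lambda x: np.ones_like(x)}]
    bounds = list(zip(lo, hi))
    solutions = []
    for x0 in starts:  # one local solve per starting candidate
        res = minimize(loss, np.asarray(x0, dtype=float), jac=loss_grad,
                       method="SLSQP", bounds=bounds, constraints=constraints)
        if res.success and res.fun < eps:
            solutions.append((float(res.fun), res.x))
    return sorted(solutions, key=lambda s: s[0])


# Toy example: rows are RON, MON, YSI; columns are three hypothetical components.
W = np.array([[100.0, 90.0, 95.0],
              [88.0, 82.0, 85.0],
              [40.0, 80.0, 60.0]])
A = np.eye(3)                      # toy latent vectors: one unit vector per component
y = np.array([95.0, 85.0, 60.0])   # target RON, MON, YSI
sols = full_scope_search(A, lambda h: W @ h, lambda h: W, y,
                         starts=[[0.6, 0.2, 0.2], [0.2, 0.6, 0.2]],
                         lo=[0.0] * 3, hi=[1.0] * 3, eps=1e-3)
```

In this toy, components 1 and 2 at equal fractions reproduce component 3 exactly, so many compositions hit the target. Different starts may therefore return different optima, which is why keeping every sub-threshold solution can be useful.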

For more efficient optimization, we supply the optimizer with the gradient of the objective, which is calculated efficiently with the automatic differentiation module of PyTorch77, an open-source Python library used for implementing DL architectures. The automatic differentiation module records tensors and all operations performed on them in a directed acyclic graph, in which the inputs are leaves and the output tensors are roots.
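PyTorch builds this graph automatically for tensors. The sketch below shows the same mechanism without depending on PyTorch: scalar reverse-mode differentiation over a DAG. It uses a hypothetical `Var` class, not PyTorch's API. The example differentiates a toy squared-error loss of a two-component blend with respect to its fractions.

```python
class Var:
    """Scalar node of a computation graph; inputs are leaves, the final result is the root."""

    def __init__(self, value, parents=()):
        self.value = float(value)
        self.parents = parents  # pairs (input node, local derivative d self / d input)
        self.grad = 0.0

    @staticmethod
    def _wrap(other):
        return other if isinstance(other, Var) else Var(other)

    def __add__(self, other):
        other = Var._wrap(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        other = Var._wrap(other)
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    __radd__ = __add__
    __rmul__ = __mul__

    def __sub__(self, other):
        return self + Var._wrap(other) * -1.0

    def backward(self):
        """Reverse sweep: accumulate d(root)/d(node) into .grad for every node in the graph."""
        order, seen = [], set()

        def visit(node):  # post-order DFS yields a topological order (inputs first)
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):  # root first, leaves last
            for parent, local in node.parents:
                parent.grad += local * node.grad


# Toy blend: linear RON model with hypothetical component values 100 and 90, target 95.
x1, x2 = Var(0.25), Var(0.75)
h = 100 * x1 + 90 * x2
r = h - 95
loss = r * r
loss.backward()  # x1.grad == -500.0, x2.grad == -450.0
```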

"Greedy" search

To generate mixtures of reduced size, e.g., 3–6 components, we adopt a “greedy” search based on depth-first traversal78, where the root of the tree is the initial mixture M found by the full-scope search, which contains many components. The nodes of the tree are generated by removing components one at a time (see the left side of the diagram in Supplementary Fig. S4) and rescaling the composition so that the constraints are satisfied; if the constraints are satisfied, the node is added to the tree. The constraints were enforced using Dykstra’s method, which computes a point in the intersection of convex sets79. Depth-first search recursively performs an exhaustive search of the nodes: it proceeds deeper whenever possible and otherwise backtracks to unvisited neighbors at upper levels, until a solution is found. This search approach is visualized in Supplementary Fig. S4.
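The sketch below implements the depth-first reduction under three assumptions. The only constraints are sum-to-one plus per-component bounds, the loss is any callable on a mixture, and a linear-by-volume RON model serves as a toy property. The two-set Dykstra iteration projects the rescaled composition onto the intersection of the hyperplane and the box. All function names and property values are hypothetical.

```python
def dykstra_project(z, lo, hi, iters=500):
    """Project z onto {x : sum(x) = 1} ∩ {x : lo <= x <= hi} with Dykstra's alternating projections."""
    n = len(z)
    x, p, q = list(z), [0.0] * n, [0.0] * n
    for _ in range(iters):
        # Project (x + p) onto the hyperplane sum = 1, then update its correction term p.
        w = [xi + pi for xi, pi in zip(x, p)]
        shift = (sum(w) - 1.0) / n
        y = [wi - shift for wi in w]
        p = [wi - yi for wi, yi in zip(w, y)]
        # Project (y + q) onto the box [lo, hi], then update its correction term q.
        v = [yi + qi for yi, qi in zip(y, q)]
        x = [min(max(vi, l), h) for vi, l, h in zip(v, lo, hi)]
        q = [vi - xi for vi, xi in zip(v, x)]
    return x


def is_feasible(x, lo, hi, tol=1e-6):
    """Check sum(x) = 1 and lo <= x <= hi within a tolerance."""
    return abs(sum(x) - 1.0) < tol and all(l - tol <= xi <= h + tol for xi, l, h in zip(x, lo, hi))


def greedy_search(mixture, lo, hi, max_size, loss, eps):
    """Depth-first search for a sub-mixture with <= max_size components and loss < eps.

    mixture: dict component -> fraction (the root M); lo/hi: dicts of per-component bounds.
    Returns the first solution found, or None.
    """
    visited = set()  # component subsets already explored via another path

    def dfs(node):
        names = sorted(node)
        if len(names) <= max_size and loss(node) < eps:
            return node
        if len(names) == 1:
            return None
        for drop in names:  # child nodes: remove one component at a time
            kept = [c for c in names if c != drop]
            if frozenset(kept) in visited:
                continue
            visited.add(frozenset(kept))
            lo_k, hi_k = [lo[c] for c in kept], [hi[c] for c in kept]
            x = dykstra_project([node[c] for c in kept], lo_k, hi_k)
            if not is_feasible(x, lo_k, hi_k):
                continue  # infeasible node is not added to the tree
            found = dfs(dict(zip(kept, x)))
            if found is not None:
                return found
        return None  # backtrack

    return dfs(dict(mixture))


mixture = {"A": 0.25, "B": 0.25, "C": 0.25, "D": 0.25}   # hypothetical root mixture M
ron = {"A": 100.0, "B": 90.0, "C": 80.0, "D": 110.0}      # toy component RON values
lo = {c: 0.0 for c in mixture}
hi = {c: 1.0 for c in mixture}


def ron_loss(m):
    return (sum(f * ron[c] for c, f in m.items()) - 95.0) ** 2


best = greedy_search(mixture, lo, hi, max_size=2, loss=ron_loss, eps=1e-6)  # about {"C": 0.5, "D": 0.5}
```

The `visited` set keeps each component subset from being expanded twice. The worst case is still exponential in the number of components, which is one reason to start from the small set of full-scope solutions.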

Constraints and targets

To evaluate the search tool, the chemical space was limited to CHO species, i.e., molecules composed only of carbon, hydrogen and oxygen. The following criteria were used to exclude species from the search:

- alkynes (sooting components) and aldehydes (unstable components);
- components with a molecular weight outside the range 45–150 g/mol;
- sooting components with more than one aromatic ring;
- sooting components with an aromatic ring and an extra unsaturation (e.g., styrene, indene);
- sooting components with more than three unsaturations, excluding aromatic ones (e.g., octatetraene).
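The exclusion criteria above can be expressed as a simple predicate. The sketch assumes each species has already been annotated with the listed structural descriptors, for example with a cheminformatics toolkit. The `Species` fields are hypothetical, and "unsaturation" is assumed to mean a C=C or C≡C bond outside aromatic rings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Species:
    """Pre-computed descriptors for one candidate molecule (hypothetical fields)."""
    name: str
    elements: frozenset              # element symbols present, e.g. frozenset({"C", "H", "O"})
    mol_weight: float                # g/mol
    is_alkyne: bool
    is_aldehyde: bool
    aromatic_rings: int
    non_aromatic_unsaturations: int  # C=C or C≡C bonds outside aromatic rings


def is_allowed(s: Species) -> bool:
    """Apply the CHO restriction and the exclusion criteria listed above."""
    if not s.elements <= {"C", "H", "O"}:
        return False  # outside the CHO space
    if s.is_alkyne or s.is_aldehyde:
        return False  # sooting / unstable classes
    if not 45.0 <= s.mol_weight <= 150.0:
        return False  # molecular weight window
    if s.aromatic_rings > 1:
        return False  # more than one aromatic ring
    if s.aromatic_rings == 1 and s.non_aromatic_unsaturations > 0:
        return False  # aromatic ring plus extra unsaturation
    if s.non_aromatic_unsaturations > 3:
        return False  # more than three non-aromatic unsaturations
    return True


palette = [
    Species("toluene", frozenset({"C", "H"}), 92.14, False, False, 1, 0),
    Species("styrene", frozenset({"C", "H"}), 104.15, False, False, 1, 1),
    Species("ethanol", frozenset({"C", "H", "O"}), 46.07, False, False, 0, 0),
    Species("methanol", frozenset({"C", "H", "O"}), 32.04, False, False, 0, 0),
]
allowed = [s.name for s in palette if is_allowed(s)]  # ["toluene", "ethanol"]
```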

In this work, the considered chemical space includes only molecules that were present as single components or as parts of blends in the curated database. In general, however, any new pure component outside the database can be added to the chemical space for mixture design by providing its SMILES string and its Mordred molecular descriptors (i.e., the inputs to Extractors 1 and 2); its experimentally measured properties are not needed.

The default linear constraint requires the compositions in a given mixture, x, to sum to one: \(\mathop{\sum }\nolimits_{i = 1}^{n}{x}_{i}=1\). The other requirements used in the search follow the European gasoline specifications80 and are summarized in Table S6 in the Supplementary Material. An additional constraint imposes a maximum volume fraction of 10% on components containing 3-, 4-, 7- and 8-membered aliphatic rings (saturated and unsaturated).
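Because c(x) is a vectorized linear function with lower and upper bounds, each constraint can be written as one row of a matrix C with c_l ≤ Cx ≤ c_u. The sketch below covers the two constraints named here. It assumes x holds volume fractions and that the 10% cap applies to the combined fraction of the flagged ring-containing components; both are assumptions. Function names are hypothetical.

```python
def build_linear_constraints(ring_flags, ring_cap=0.10):
    """Rows of C and bounds (c_lo, c_hi) such that c_lo <= C @ x <= c_hi.

    ring_flags[i] is True if component i contains a 3-, 4-, 7- or 8-membered aliphatic ring.
    """
    n = len(ring_flags)
    rows = [
        [1.0] * n,                                # row 0: sum of all fractions
        [1.0 if f else 0.0 for f in ring_flags],  # row 1: combined fraction of flagged components
    ]
    c_lo = [1.0, 0.0]       # row 0 is an equality (lower bound == upper bound == 1)
    c_hi = [1.0, ring_cap]  # row 1 is capped at ring_cap
    return rows, c_lo, c_hi


def satisfies(x, rows, c_lo, c_hi, tol=1e-9):
    """True if every linear constraint row holds for composition x (within tol)."""
    for row, lo, hi in zip(rows, c_lo, c_hi):
        value = sum(r * xi for r, xi in zip(row, x))
        if value < lo - tol or value > hi + tol:
            return False
    return True


flags = [False, True, False]  # hypothetical three-component palette; component 2 is flagged
rows, c_lo, c_hi = build_linear_constraints(flags)
ok = satisfies([0.50, 0.05, 0.45], rows, c_lo, c_hi)   # True
bad = satisfies([0.40, 0.20, 0.40], rows, c_lo, c_hi)  # False: flagged fraction 0.20 > 0.10
```

The same `rows`, `c_lo` and `c_hi` could be passed to `scipy.optimize.LinearConstraint(rows, c_lo, c_hi)`. An equality is simply a row whose lower and upper bounds coincide.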

To identify promising gasoline blends, target values of RON = 95, MON = 85 and YSI = 60 were used. The YSI was estimated on the “unified” scale; Table S3 in the Supplementary Material provides details on YSI scales.

Data availability

The authors declare that the data supporting the findings of this study are available within supplementary information files.

Code availability

The code developed in this work is available from the corresponding author via e-mail upon reasonable request.

References

U.S. Energy Information Administration. International energy outlook 2019 (2019).

ASTM. Standard test method for research octane number of spark-ignition engine fuel. ASTM D2699-12 (2012).

ASTM. Standard test method for motor octane number of spark ignition engine fuel. ASTM D2700-18 (2011).

Szybist, J. P. et al. What fuel properties enable higher thermal efficiency in spark-ignited engines? Prog. Energy Combust. Sci. 82, 100876 (2021).

Adam, F., Olfert, J., Wong, K.-F., Kunert, S. & Richter, J. M. Effect of engine-out soot emissions and the frequency of regeneration on gasoline particulate filter efficiency. Tech. Rep., SAE Technical Paper (2020).

Calcote, H. & Manos, D. Effect of molecular structure on incipient soot formation. Combust. Flame 49, 289–304 (1983).

Barrientos, E. J., Lapuerta, M. & Boehman, A. L. Group additivity in soot formation for the example of C-5 oxygenated hydrocarbon fuels. Combust. Flame 160, 1484–1498 (2013).

Lemaire, R., Lapalme, D. & Seers, P. Analysis of the sooting propensity of C-4 and C-5 oxygenates: Comparison of sooting indexes issued from laser-based experiments and group additivity approaches. Combust. Flame 162, 3140–3155 (2015).

McEnally, C. S. & Pfefferle, L. D. Improved sooting tendency measurements for aromatic hydrocarbons and their implications for naphthalene formation pathways. Combust. Flame 148, 210–222 (2007).

Dryer, F. L. Chemical kinetic and combustion characteristics of transportation fuels. Proc. Combust. Inst. 35, 117–144 (2015).

Virshup, A. M., Contreras-García, J., Wipf, P., Yang, W. & Beratan, D. N. Stochastic voyages into uncharted chemical space produce a representative library of all possible drug-like compounds. J. Am. Chem. Soc. 135, 7296–7303 (2013).

Schwalbe-Koda, D. & Gómez-Bombarelli, R. Generative Models for Automatic Chemical Design, 445–467, https://doi.org/10.1007/978-3-030-40245-7_21 (Springer International Publishing, Cham, 2020).

Jing, Y., Bian, Y., Hu, Z., Wang, L. & Xie, X.-Q. S. Deep learning for drug design: an artificial intelligence paradigm for drug discovery in the big data era. AAPS J. 20, 1–10 (2018).

Sanchez-Lengeling, B. & Aspuru-Guzik, A. Inverse molecular design using machine learning: Generative models for matter engineering. Science 361, 360–365 (2018).

Schweidtmann, A. M. et al. Graph neural networks for prediction of fuel ignition quality. Energy Fuels 34, 11395–11407 (2020).

Li, G. et al. Machine learning enabled high-throughput screening of hydrocarbon molecules for the design of next generation fuels. Fuel 265, 116968 (2020).

Knop, V., Loos, M., Pera, C. & Jeuland, N. A linear-by-mole blending rule for octane numbers of n-heptane/iso-octane/toluene mixtures. Fuel 115, 666–673 (2014).

Anderson, J. E. et al. Octane numbers of ethanol-gasoline blends: Measurements and novel estimation method from molar composition. https://doi.org/10.4271/2012-01-1274 (SAE International, 2012).

Foong, T. M. et al. The octane numbers of ethanol blended with gasoline and its surrogates. Fuel 115, 727–739 (2014).

Solaka Aronsson, H., Tuner, M. & Johansson, B. Using oxygenated gasoline surrogate compositions to map RON and MON. https://doi.org/10.4271/2014-01-1303 (SAE International, 2014).

Hirshfeld, D. S., Kolb, J. A., Anderson, J. E., Studzinski, W. & Frusti, J. Refining economics of us gasoline: octane ratings and ethanol content. Environ. Sci. Technol. 48, 11064–11071 (2014).

Alleman, T. L., McCormick, R. L. & Yanowitz, J. Properties of ethanol fuel blends made with natural gasoline. Energy Fuels 29, 5095–5102 (2015).

Vallinayagam, R. et al. Terpineol as a novel octane booster for extending the knock limit of gasoline. Fuel 187, 9–15 (2017).

Christensen, E., Yanowitz, J., Ratcliff, M. & McCormick, R. L. Renewable oxygenate blending effects on gasoline properties. Energy Fuels 25, 4723–4733 (2011).

Tarazanov, S. et al. Assessment of the chemical stability of furfural derivatives and the mixtures as fuel components. Fuel 271, 117594 (2020).

Abdul Jameel, A. G., Naser, N., Emwas, A.-H., Dooley, S. & Sarathy, S. M. Predicting fuel ignition quality using 1H NMR spectroscopy and multiple linear regression. Energy Fuels 30, 9819–9835 (2016).

Abdul Jameel, A. G., Van Oudenhoven, V., Emwas, A.-H. & Sarathy, S. M. Predicting octane number using nuclear magnetic resonance spectroscopy and artificial neural networks. Energy Fuels 32, 6309–6329 (2018).

de Paulo, J. M., Barros, J. E. & Barbeira, P. J. A PLS regression model using flame spectroscopy emission for determination of octane numbers in gasoline. Fuel 176, 216–221 (2016).

Li, R., Herreros, J. M., Tsolakis, A. & Yang, W. Machine learning-quantitative structure property relationship (ML-QSPR) method for fuel physicochemical properties prediction of multiple fuel types. Fuel 304, 121437 (2021).

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. S. & Dean, J. Distributed representations of words and phrases and their compositionality. Adv. Neural Inf. Process. Syst. 26 (2013).

Kessler, T. et al. A comparison of computational models for predicting yield sooting index. Proc. Combust. Inst. 38, 1385–1393 (2021).

St. John, P. C. et al. A quantitative model for the prediction of sooting tendency from molecular structure. Energy Fuels 31, 9983–9990 (2017).

Chaparro, G. & Mejía, A. Phasepy: a python based framework for fluid phase equilibria and interfacial properties computation. J. Comput. Chem. 41, 2504–2526 (2020).

Yaws, C. The Yaws handbook of vapor pressure: Antoine coefficients (Gulf Professional Publishing, Houston, Texas, 2015).

Saldana, D. A. et al. Prediction of density and viscosity of biofuel compounds using machine learning methods. Energy Fuels 26, 2416–2426 (2012).

Dahmen, M. & Marquardt, W. Model-based formulation of biofuel blends by simultaneous product and pathway design. Energy Fuels 31, 4096–4121 (2017).

Rogers, D. J. & Tanimoto, T. T. A computer program for classifying plants. Science 132, 1115–1118 (1960).

Gao, M. & Skolnick, J. A comprehensive survey of small-molecule binding pockets in proteins. PLoS Comput. Biol. 9, e1003302 (2013).

Giarracca, L. et al. Experimental and kinetic modeling of the ignition delays of cyclohexane, cyclohexene, and cyclohexadienes: Effect of unsaturation. Proc. Combust. Inst. 38, 1017–1024 (2021).

McCormick, R. L. et al. Properties of oxygenates found in upgraded biomass pyrolysis oil as components of spark and compression ignition engine fuels. Energy Fuels 29, 2453–2461 (2015).

Badia, J., Ramírez, E., Bringué, R., Cunill, F. & Delgado, J. New octane booster molecules for modern gasoline composition. Energy Fuels 35, 10949–10997 (2021).

He, P. W. Y. Effects of gasoline with ester additives on the swelling property of rubbers. China Pet. Process. Petrochemical Technol. 20, 44 (2018).

Hoppe, F. et al. Tailor-made fuels for future engine concepts. Int. J. Engine Res. 17, 16–27 (2016).

Alleman, T. & Smith, D. Toxicology and biodegradability of tier three gasoline blendstocks: Literature review of available data https://www.osti.gov/biblio/1568051 (2019).

Magulova, K. Stockholm convention on persistent organic pollutants: triggering, streamlining and catalyzing global scientific exchange. Atmos. Pollut. Res. 3, 366–368 (2012).

Aghahossein Shirazi, S. et al. Effects of dual-alcohol gasoline blends on physiochemical properties and volatility behavior. Fuel 252, 542–552 (2019).

Han, Y. et al. Experimental study of the effect of gasoline components on fuel economy, combustion and emissions in GDI engine. Fuel 216, 371–380 (2018).

Rhoads, R., Burke, S., Windom, B., Ratcliff, M. & McCormick, R. Measured and predicted vapor liquid equilibrium of ethanol-gasoline fuels with insight on the influence of azeotrope interactions on aromatic species enrichment and particulate matter formation in spark ignition engines. https://doi.org/10.4271/2018-01-0361 (SAE International, 2018).

McEnally, C. S., Das, D. D. & Pfefferle, L. D. Yield Sooting Index Database Volume 2: Sooting Tendencies of a Wide Range of Fuel Compounds on a Unified Scale https://doi.org/10.7910/DVN/7HGFT8 (2017).

Das, D. D., St. John, P. C., McEnally, C. S., Kim, S. & Pfefferle, L. D. Measuring and predicting sooting tendencies of oxygenates, alkanes, alkenes, cycloalkanes, and aromatics on a unified scale. Combust. Flame 190, 349–364 (2018).

Zhu, J. et al. Experimental and theoretical study of the soot-forming tendencies of furans as potential biofuels. Tech. Rep., Yale Univ., New Haven, CT (United States) (2020).

National Renewable Energy Laboratory. Co-optimization of fuels & engines: Fuel properties database https://www.nrel.gov/transportation/fuels-properties-database/ (2018).

Ershov, M. A. et al. Hybrid low-carbon high-octane oxygenated gasoline based on low-octane hydrocarbon fractions. Sci. Total Environ. 756, 142715 (2021).

Zervas, E., Montagne, X. & Lahaye, J. Influence of fuel and air/fuel equivalence ratio on the emission of hydrocarbons from a SI engine. 1. Experimental findings. Fuel 83, 2301–2311 (2004).

Morgan, N. et al. Mapping surrogate gasoline compositions into RON/MON space. Combust. Flame 157, 1122–1131 (2010).

da Silva Jr., A., Hauber, J., Cancino, L. & Huber, K. The research octane numbers of ethanol-containing gasoline surrogates. Fuel 243, 306–313 (2019).

Hoth, A., Kolodziej, C. P., Rockstroh, T. & Wallner, T. Combustion characteristics of PRF and TSF ethanol blends with RON 98 in an instrumented CFR engine. https://doi.org/10.4271/2018-01-1672 (SAE International, 2018).

Sarathy, S. M. et al. Ignition of alkane-rich FACE gasoline fuels and their surrogate mixtures. Proc. Combust. Inst. 35, 249–257 (2015).

Sarathy, S. M. et al. Compositional effects on the ignition of FACE gasolines. Combust. Flame 169, 171–193 (2016).

Javed, T. et al. Ignition studies of two low-octane gasolines. Combust. Flame 185, 152–159 (2017).

Badra, J., AlRamadan, A. S. & Sarathy, S. M. Optimization of the octane response of gasoline/ethanol blends. Appl. Energy 203, 778–793 (2017).

Lee, C. et al. Autoignition characteristics of oxygenated gasolines. Combust. Flame 186, 114–128 (2017).

Monroe, E. et al. Discovery of novel octane hyperboosting phenomenon in prenol biofuel/gasoline blends. Fuel 239, 1143–1148 (2019).

McCormick, R. L. et al. Co-optimization of fuels & engines: properties of Co-Optima core research gasolines. Tech. Rep. https://doi.org/10.2172/1467176 (2018).

Gao, Z., Cheng, X., Ren, F., Zhu, L. & Huang, Z. Compositional effects on sooting tendencies of diesel surrogate fuels with four components. Energy Fuels 34, 8796–8807 (2020).

Das, D. D. et al. Sooting tendencies of diesel fuels, jet fuels, and their surrogates in diffusion flames. Fuel 197, 445–458 (2017).

Kashif, M., Bonnety, J., Matynia, A., Da Costa, P. & Legros, G. Sooting propensities of some gasoline surrogate fuels: Combined effects of fuel blending and air vitiation. Combust. Flame 162, 1840–1847 (2015).

McEnally, C. S. et al. Sooting tendencies of Co-Optima test gasolines and their surrogates. Proc. Combust. Inst. 37, 961–968 (2019).

McEnally, C. S. & Pfefferle, L. D. Improved sooting tendency measurements for aromatic hydrocarbons and their implications for naphthalene formation pathways. Combust. Flame 148, 210–222 (2007).

Pedregosa, F. et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Moriwaki, H., Tian, Y.-S., Kawashita, N. & Takagi, T. Mordred: a molecular descriptor calculator. J. Cheminform. 10, 1–14 (2018).

Weininger, D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 28, 31–36 (1988).

Sitzmann, M. NCI/CADD chemical identifier resolver. https://cactus.nci.nih.gov/chemical/structure (2009).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Bach, F., Jenatton, R., Mairal, J. & Obozinski, G. Optimization with sparsity-inducing penalties. Found. Trends® Mach. Learn. 4, 1–106 (2012).

Virtanen, P. et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 17, 261–272 (2020).

Paszke, A. et al. Automatic differentiation in PyTorch (2017).

Tarjan, R. Depth-first search and linear graph algorithms. SIAM J. Comput. 1, 146–160 (1972).

Dykstra, R. L. An algorithm for restricted least squares regression. J. Am. Stat. Assoc. 78, 837–842 (1983).

Pöttering, H. & Necas, P. Directive 2009/30/EC of the European Parliament and of the Council of 23 April 2009 amending Directive 98/70/EC as regards the specification of petrol, diesel and gas-oil introducing a mechanism to monitor and reduce greenhouse gas emissions and amending Council Directive 1999/32/EC as regards the specification of fuel used by inland waterway vessels and repealing Directive 93/12/EC. J. Eur. Union 140, 88–112 (2009).

Acknowledgements

This paper is based on work supported by the Saudi Aramco Research and Development Center FUELCOM3 Program under Master Research Agreement Number 6600024505/01. FUELCOM (Fuel Combustion for Advanced Engines) is a collaborative research undertaking between Saudi Aramco and KAUST, intended to address the fundamental aspects of hydrocarbon fuel combustion in engines, and develop fuel/engine design tools suitable for advanced combustion modes.

Author information


Contributions

N.K. conceptualized the study, conducted the literature review, curated the data, analyzed the results and wrote the paper. N.K. and S.H. developed the framework and wrote the code. A.N. conceptualized the study, co-supervised the project, analyzed the obtained candidates and wrote the paper. J.W. contributed to the analysis of candidates and reviewed the manuscript. S.M.S. conceptualized the study, planned and co-supervised the project, and contributed to writing and reviewing the paper. All authors discussed the results and commented on the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Chemistry thanks Athanasios Tsolakis and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kuzhagaliyeva, N., Horváth, S., Williams, J. et al. Artificial intelligence-driven design of fuel mixtures. Commun Chem 5, 111 (2022). https://doi.org/10.1038/s42004-022-00722-3


DOI: https://doi.org/10.1038/s42004-022-00722-3
