Abstract

Molten salts are important thermal conductors used in molten salt reactors and solar applications. To use molten salts safely, accurate knowledge of their thermophysical properties is necessary. However, it is experimentally challenging to measure these properties and a comprehensive evaluation of the full chemical space is unfeasible. Computational methods provide an alternative route to access these properties. Here, we summarize the developments in methods over the last 70 years and cluster them into three relevant eras. We review the main advances and limitations of each era and conclude with an optimistic perspective for the next decade, which will likely be dominated by emerging machine learning techniques. This article is aimed to help researchers in peripheral scientific domains understand the current challenges of molten salt simulation and identify opportunities to contribute.

Similar content being viewed by others

Introduction

General introduction to molten salts

Molten salts are ionic mixtures that are solid at standard temperature and pressure and liquid at elevated temperatures. Molten salts have a high heat capacity and good thermal conductivity which makes them useful as heat exchangers and thermal capacitors in applications such as molten salt reactors and next generation solar systems1. Molten salts include simple systems such as alkali-halides, oxides, and various other salts. For practical applications, complex salts, such as LiF-LiBe2 (Flibe), are of greater interest; opening the door to a large set of salt mixtures to be explored. Extracting thermophysical properties from molten salts experimentally is challenging due to their toxicity, high temperature, and cost of doing so. This motivates the accurate characterization of molten salts through computational methods.

Initial interest in molten salt simulations is associated with the molten salt reactor experiments conducted at the Oak Ridge National Laboratories during 1965–1969. Molten salt reactors are nuclear fission reactors in which the primary coolant and/or fuel is a molten salt mixture. Molten salt reactors are considered promising candidates for Generation IV reactor designs which have sparked new interest and investigation into computational methods to improve understanding of molten salt properties2,3. A visualization of a molten salt simulation box is shown in Fig. 1.

Each ion type is represented by a color—Fluorine in green, Sodium in blue, Potassium in pink, and Lithium in orange. a Simulation size used with a Machine Learning Potential with a total simulation size of 1024 atoms and a side length of 30 nm. b Simulation size used with DFT calculations with a total simulation size of 200 atoms and a side length of 17 nm.

Throughout the last 70 years, various breakthroughs in molten salt simulations can be traced back to novel theoretical approaches, experimental techniques, materials, and increased computational power2,3. Within this review, we focus on three principal epochs: Early simulations from 1930–1990, Progression of DFT-based methods from 1990–2021, and the Machine Learning Era starting in 2020. For each period, we review the new technologies developed followed by their achievements and limitations. During a time when Machine Learning is disrupting many industries and research fields, this review can serve as an introduction for tangentially interested research groups not yet familiar with the potential impact of improved simulation techniques for molten salts.

Introduction to thermophysical properties

The aim of computational simulations of molten salts is the accurate prediction of thermophysical properties. These properties are required to simulate complex fluid dynamics and chemical interactions within molten mixtures. Highly accurate and fast computational explorations of different molten salts could replace expensive and hazardous experiments. The properties of interest are categorized into two classes: (1) Properties that can be derived from short simulations of small systems with only a few explicitly modeled atoms, like heat capacity, density, etc.; (2) Properties that require long simulation times and large system sizes before converging to experimental accuracies, like diffusion, thermal conductivity, etc. Figure 2 summarizes reported properties from reviewed articles and the simulations sizes and durations used to calculate them. Modeling the second class of properties has driven the exploration of more efficient simulation techniques, albeit often at the cost of accuracy.

The derivation of thermodynamic properties for a molten salt requires the atomistic simulation of a system consisting of a specified number (N) of atoms from each chemical component (for example Flibe has been simulated with 100 F, 200 Li, and 400 Be atoms). Various methods exist for simulating the movement of atoms in these systems. Common elements of most simulations are: (1) Each atom is modeled as a point in space with a given position, velocity, and charge attributes; (2) The interaction between atoms is calculated to yield a potential energy term; (3) Forces per atom are calculated as the negative gradient of the potential energy term at a given position; (4) An integrator is used to solve Newton’s equations of motion for each atom and to update the positions. Executing such simulations under defined constraints—such as constant pressure (P), temperature (T), energy (E), or volume (V)—results in dynamic trajectories of the system. A trajectory is an ordered collection of frames, where each frame represents a snapshot in time of the atomic positions of the simulated system. Figure 3 shows a decision flow chart that can be used to select the right simulation for a desired thermophysical property.

Each property is followed by the equation number listed in the text that can be used to derive the property from the simulation data. The arrows connecting the properties with the simulation ensemble (NPT, NVE, and NVT) are again marked with the equation numbers. For example, thermal expansion can be calculated from NVE simulation with Eq. 3 or from NPT simulation with Eq. 2, however, the NPT simulation is the preferred route. Reverse non-equilibrium molecular dynamics (RNEMD) and equilibrium molecular dynamic (EMD) are explained further in the text.

The theories of statistical mechanics provide the recipes to derive thermophysical properties from such simulation trajectories. Structural properties are far more accurate to obtain experimentally. These properties are experimentally derived through neutron scattering experiments and Extended X-ray Absorption Fine Structure. The most common derived properties for MD are the partial radial distribution function, coordination number, angular distribution function, bond angle, and the structure factor. A comprehensive summary of all thermophysical and structural properties is given below.

Overview of the mathematics behind thermophysical properties

Temperature

where N is the number of atoms, m is the mass, and kB is Boltzmann’s constant. Velocities, vi, can be calculated for each particle by taking the change in their position between time trajectory frames.

Density

where N is number of atoms, M is molar mass, Nα is Avogadro’s number, and V is the equilibrated volume of the simulation cell of the given temperature in the isothermal-isobaric (NPT) ensemble. An alternative to determining the density through the NPT ensemble is to use previously measured experimental values for the density. Using experimental densities in some cases is more accurate in the calculation of the other properties such as diffusion, viscosity, thermal, or electrical conductivity. In a nuclear system, high-density molten salts increase neutron production which causes the system to approach criticality. However, if the density is too low the salt will only sustain the system for a short period. An alternative method to find the equilibration volume is to run canonical ensembles (NVT) at different volumes and then fit the pressures and volumes with a Murnaghan equation of state to find the equilibration volume and bulk modulus4.

Thermal expansion

Equation (3) is more commonly used than (4). The results of the NPT ensemble can be used to fit the relation between density and temperature. The relation between density and temperature can be used to find the thermal expansion at a specific density using Eq. (3). Equation (4) can be used by running many NVT ensembles and then using the Murnaghan equation of state to find the equilibration volume for different temperatures5. Fitting a line to the relation between the equilibration volumes and temperatures yields the thermal expansion at a specific volume.

Heat capacity

where CP is the heat capacity at constant pressure, H is the specific enthalpy, T is temperature, and U is the system’s total internal energy including both kinetic and potential energy. This can be simulated in NPT or the NVT for a range of target temperatures.

Equation (6) is the heat capacity at a constant volume. For MS CV is usually close to CP and has very little temperature dependence.

are other approximations commonly used, where \(\left\langle \delta {E}^{2}\right\rangle =\left\langle {E}^{2}\right\rangle -{\left\langle E\right\rangle }^{2}\) and 〈E〉 denotes the average. T is the temperature and kB is the Boltzmann constant.

Equation (9) is another approximation similar to Eq. (7):

where ΔE is the fluctuation in energy6.

Diffusion coefficients

where \(\langle {|\delta {{{{{{\boldsymbol{r}}}}}}}_{i}\left(t\right)|}^{2}\rangle\) is the mean square displacement of the element α, meaning: square the displacements δr of each particle i at various times t and then take the average. The six in the denominator results from being 3-dimensional diffusion. After calculating diffusion coefficients, it is common to find the Arrhenius relationship for temperature and diffusion coefficients:

where Eα is the activation energy of diffusion, R is the ideal gas constant, and T is the temperature. This relationship is used to find the diffusion activation energy. Alternatively, the solubility is defined by:

where Hα is the enthalpy of mixing and Sα is the solubility as the temperature goes to infinity.

Viscosity

uses the Green-Kubo relation through the integration of the shear stress autocorrelation function under an NVT ensemble where σ is the virial pressure tensor. It is averaged with respect to the off-diagonal components (i.e., α ≠ β).

can be used to calculate viscosity using a reverse non-equilibrium molecular dynamics (RNEMD) method in the microcanonical (NVE) ensemble. Where L is the size of the simulation box, T is the temperature, v is the velocity of the particles, m is their mass, and x, y, z are the coordinates. \(\eta =\frac{{k}_{B}T}{2\pi D\lambda }\), is a third option that is more of an approximation than the others. Where λ is the step length of ion diffusion usually assumed to be the diameter of the ion.

Viscosity also follows the Arrhenius relationship in Eq. (11) just like diffusion. And the activation energies of the viscosity can be found from it:

Electrical conductivity

is the most frequently used method using the Green-Kubo relation and the autocorrelation function where \({J}_{z}\left(t\right)=\mathop{\sum }\nolimits_{i=1}^{N}{z}_{i}e{v}_{i}\left(t\right)\). Here \({z}_{i}e\) is the charge of the particle i, V is volume, kB is the Boltzmann constant, and T is temperature.

resembles the diffusion equation and employs the mean squared displacement (MSD) of the particle α. Notice this is an EMD method using the NVT ensemble. Equation (16) using the autocorrelation function is considered more accurate than using the MSD.

A third uncommon approximation can be made with: \({{\sigma }}=D\frac{n{{Z}^{2}e}^{2}}{{k}_{B}T}\).

Thermal conductivity

uses the Green-Kubo relation through the integration of the autocorrelation of JE, simulated in the NVT ensemble. Where \({J}_{E}=\frac{1}{V}\left[\mathop{\sum }\nolimits_{i=1}^{N}{E}_{i}{v}_{i}+\frac{1}{2}\mathop{\sum }\nolimits_{j\ne i}^{N}\left({r}_{{ij}}{f}_{{ij}}\right){v}_{i}\right]\) and \({E}_{i}=\frac{1}{2}[{m}_{i}{v}_{i}^{2}+\mathop{\sum }\nolimits_{j\ne i}^{N}{U}_{{ij}}(r_{ij})]\) is a summation of kinetic and potential energies. Where \({r}_{{ij}}{f}_{{ij}}\) is the position and force on a particle, V is the volume, v is the velocity, m is the mass, kB is Boltzmann’s constant and T is the temperature.

There is also a useful RNEMD method for calculating the thermal conductivity in NVE similar to Eq. (14):

where L is the size of the simulation box, T is the temperature, v is the velocity of the particles, m is their mass, and x, y, z are the coordinates.

Partial radial distribution function (PRDF)

PRDF, sometimes referred to as RDF, is defined7 as:

or alternatively8 as

where ρβ is the number density of species β, Nα is the number of the respective species α, and Nαβ (r) is the mean number of β ions lying in a sphere of a radius r centered on an α ion. This function can be thought of as measuring the correlation between two atoms.

Coordination number (CN)

The CN is defined as:

where rmin the position of the first valley in the PRDF.

Angular distribution function

The angular distribution function can be thought of as the correlation between three particle types and can be derived from the bond angle shown below.

Bond angle

The bond angle is defined as:

where i,j,k are the central atom and two arbitrary atoms indices, respectively. This function can be thought to encode the atomic configuration types and the correlation between three-particle types7.

Structure factor (S)

The structure factor is defined as the Fourier transform of the PRDF in the following manner:

where ρα is the number density of the α ions9.

Outline of this manuscript

This review presents the evolution of computational simulations in three eras, culminating in the combination of molecular dynamic simulations with machine learned potentials that are trained on ab-initio data. This new breed of simulation techniques enables the accurate simulation of large molten salt systems for extended periods of time, sufficient to predict thermophysical properties otherwise not accessible. Current techniques, however, rely on outdated neural network approaches that are insufficient to capture the interactions between complex molten salt mixtures. We present the strengths and weaknesses of the different methods and provide insights where new methods could overcome the existing challenges.

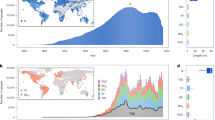

Method

We followed a systematic literature review process to gather the relevant publications for this review. The keywords ionic liquid, molten salt, and molten salt reactor were used in the advanced search of Google scholar to identify a list of 138 potentially relevant papers. After reading the abstracts, the papers were sorted into potentially relevant and not-relevant papers. Each potentially relevant paper was read in detail and summarized by the authors. This process resulted in 57 papers found to be of relevance. In a second step, the cited references of each relevant paper and the citing references were collected and checked for relevance. At the end of this process, 95 total papers were identified. An additional search for machine learning papers was conducted, which added 24 papers to the total relevant number of papers for this review. Figure 4 shows a breakdown of related publications per year and is indicative of the wave-like interest in molten salts throughout the different eras.

Generally relevant papers in blue. Papers that use machine learning and apply it to molten salts are shown proportionately in hatched-red. Important milestones are marked and include: Monte Carlo (MC) being developed, Density Functional Theory (DFT) being developed, the Molten Salt Reactor Experiment (MSRE) going critical at Oak Ridge National Labs, the first molten salt (MS) simulation, Molecular dynamics (MD) begin to be simulated with Density Functional Theory, Ab Initio Molecular Dynamics (AIMD) are applied to molten salts, and machine learning being applied to Molten Salts.

Discussion

Early simulations 1933–1990

The development of quantum mechanics and the Born-Oppenheimer approximation led to the first potentials for alkali halides that were fitted semi-empirically in the condensed phase. Starting in 1933, computationally derived properties were being reported alongside the development of the Born-Huggins-Mayer (BHM) model10. However, limited by the potential form and the lack of computational power, the first set of estimated parameters was rather approximate to experiment11.

The development of the Monte Carlo method in 1953 opened new simulation possibilities and Tessman et al. found polarizabilities of the alkali halides, which were essential for more accurate simulations12. New ionic salt models were developed to improve the accuracy of derived behavior. In 1958, Dick and Overhauser developed the shell model as the first attempt to capture induced polarization effects in ions13. By 1959, the Molecular Dynamics method was introduced which enabled the simulation of trajectories for molten salts, and consequentially the development of interatomic potentials continued to grow14. Tusi and Fumi modified the Born-Mayer-Huggins potential (BHMTF) and some parameters in 1964 using semi-empirical methods and improved predicted properties, such as densities, diffusion, and heat capacity11,15. Besides the still limited computational resources, many of the developed potentials suffered from inaccuracies in fitted parameters—such as Van-der-Waals coefficients—, lack of treatment of many-body interactions, and, especially for the shell model, arbitrary assumptions made about potential forms, all of which left much room for improvement5.

A breakthrough for atomistic simulation was the development of density functional theory (DFT) by Hohenberg and Kohn in 196416. With the ability to calculate accurate potentials, ab-initio calculations became possible and opened the door to computational—albeit not very feasible—first-principle simulations. During the same time, molten salt experiments climaxed with the execution of the Oak Ridge Molten Salt Reactor Project between 1965 and 1969. Consequent publications in 1970 described the chemical considerations that motivated the choice of specific salts in Oak Ridge; such salts being of the alkali halide family as well as others17,18.

The first simulation of molten salts used KCl and was reported in 1971 by Wood, Cock, and Singer using Monte Carlo with the BHMTF potential19. Others continued through 1976 developing various rigid and polarizable models to investigate alkali halides11. From these investigations, it became apparent that many-body interactions would need to be included in some explicit manner. In 1983, Tang and Toennie introduced a universal dispersion damping function that could be applied to Born-Mayer type potentials which were one of the first attempts towards the inclusion of many-body effects20. In 1985, Madden and Fowler expanded this idea using their asymptotic model of polarization, expanded terms beyond the dipole-induced dipole model, which was comparable to LiF simulations as obtained from Hartree-Fock calculations21. It was also during 1985 that ab-initio MD (CPMD), by Car and Parrinello, was introduced; it coupled molecular dynamics with a DFT potential22. The tools of this era laid the framework upon which the next would be built.

Progression of DFT-based methods 1990–2021

Increased computational power and theoretical insights advanced inter-atomic potentials and density functional theory to the level of usefulness and reliability. Both fields were developed by different research groups whose insights influenced each other. Here, we will first review the progression of DFT-based methods, resulting in dispersion corrected ab-initio molecular dynamics (AIMD) methods. Afterward, we will discuss the advancements of inter-atomic potentials, as they were strongly influenced by the increase in accuracy and feasibility of DFT models.

In 1993, Barnnet and Lanmarn introduced Born-Oppenheimer molecular dynamics with DFT which enabled larger simulation timesteps resulting in a better temporal sampling of still very restricted simulations23. Later that year, Kresse and Hafner introduced the method of initializing Car-Parrinello Molecular Dynamics with energy minimization schemes for metals, resulting in additional speed up24. In 1998, Alfe extracted the diffusion coefficients using CPMD for liquid aluminum and thereby demonstrated the feasibility of DFT methods to extract transport properties in condensed materials25. In 2003, Aguado et al. developed an ab-initio process using many condensed phases of MgO with CATSTEP DFT to parametrize coefficients for the aspherical ion model (AIM) representing a breakthrough for polarizable models26. In 2005, Hazebroucq used a tight-binding density functional to calculate and investigate diffusion in NaCl and KCl for a specific experimental volume, a step towards full AIMD27.

In 2006 Madden et al. highlighted the need to control the dispersion interaction as it—despite being only a tiny fraction of the interaction energies of an ion pair—strongly impacted phase transition behavior, such as transition pressures28. It had been a well-known problem up to this time that dispersion interactions in DFT calculations were not accurate. Grimme tackled the problem of dispersion and published a first empirical correction in 2006 for DFT followed by a second in 2010, practically resolving the issue29. In 2006, Klix investigated the diffusion of tritium in Flibe, using CPMD, and named the method ab-initio molecular dynamics30. This simulation was the first time that a molten salt had dynamical behavior derived using ab-intio methods.

In 2014, Corradini investigated dispersion in LiF with both DFT and molecular dynamics and found that dispersion is significant in NPT simulations: it strongly affected melting point calculations and resulted in underestimated equilibrium densities by 15% when omitted and thereby verified the importance of dispersion in molten salt calculations31. In 2015, Anderson used AIMD to model FLiNaK and Flibe and extracted thermodynamic properties such as density, diffusion, and thermal expansion which were validated by experimental measurements32. From 2016–2021 multiple papers investigated the thermophysical behaviors for various salts using AIMD3,33,34,35,36,37,38,39,40,41,42,43. Few of the papers investigated thermodynamical quantities and even less investigated kinetic properties (diffusion and viscosity)34,37,42. Short timescales and a small number of ions render such calculated properties unreliable which most, if not all, DFT-based calculations had in common.

Optical properties such as vibrational spectra are more accessible experimentally than computationally and only a small number of DFT studies investigated them33,44. In 2021, Khagendra et al. reported mechanical properties of Flibe using DFT suggesting that these properties could validate models44. However, as these properties are not consistently presented throughout the literature there is currently limited development in this domain.

The development of interatomic potentials for molten salts between 1990 and 2008 converged in the Dipole-induced polarizable ion model (PIM). The basic idea of PIM is that a sufficiently complex additive forcefield can be fitted to DFT data to result in accurate energy estimates. These energies can in turn be used to propagate the atoms in the model forward in time. Accurate DFT models allowed for parametrization of complex forcefields; for example in 2003 the first fitted dipole model was produced26. In 2008, Salanne developed PIM and defined the most frequently used interatomic potential for the next decade45. Throughout 2008–2020, PIM was used to derive various properties for many salts which were often validated by experiments1,2,3,6,7,9,31,32,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80. From 2018 until today, a revival of sorts, led to alternative potentials, such as the Sharma−Emerson−Margulis (SEM) Drude oscillator model81, to be developed.

The quest for the PIM began in 1993 when Madden and Wilson introduced the method of parametrization via CPMD simulation for halides on a rigid ion model (RIM) potential plus a dipole term (see Section “Summary of Potentials” for an overview of interatomic potentials). This initial model was a response to the earlier shell model; to correct the apparent lack of justification for the potential form82. Since oxides could not be accurately described by polarization effects, the Compressible Ion Model was developed in 199683. In the same year, Madden and Wilson enhanced the 1993 model with the addition of a quadrupole using the asymptotic model of polarization and applied it to AgCl systems84. Wilson and Madden suggested that ionic salt interactions can be described by four terms: induction/polarization, dispersion, compression, and shape effects85.

Another stride was taken in 1998 when Rowley and Jemmer published the Aspherical Ion model which combined the Compressible Ion Model with polarization effects from the asymptotic model of induced polarization25. This model was used to investigate the polarizabilities and hyperpolarizabilities of LiF, NaF, KF, LiCl, NaCl, KCl, LiBr, MgO, CaO by Jemmer, Madden, Wilson, and Fowler86.

The importance of polarization was further evidenced in a 2001 study by Hutchinsons, describing trichloride system phase transitions87. In 2002, Domene used AIM as a starting point to derive an ion model including dipole and quadrupole moments resulting in another polarizable ion model which they used to investigate properties of MgF2, CaF2, LiF, and NaF88.

Computationally less demanding interatomic potentials based on the Born-Huggins-Mayer-Tusi-Fumi (BHMTF), or rigid ion model (RIM), also found application in this century. In 2004, Galamba used BHMTF and born-dispersion parameters to calculate the thermal conductivity of NaCl and KCl—although overpredicting it by 10–20%89. Brookes used a simpler Born-Mayer potential in 2004 to calculate diffusion and viscosity relationships for KCl, KLi, Sc3Cl, Y3Cl, and La3Cl90.

In 2006, Madden et al. described the method of first-principle parameterization for molten salts as well as potential forms for polarizable ion models developed up to that point28. Heaton et al. were first to simulate Flibe with the BHMTF model and Tang-Teonnie’s dispersion damping91.

By 2007, Galamba investigated the theory of thermal conductivity for Alkali-Halides and derived thermal conductivity of NaCl based on BHMTF including non-equilibrium molecular dynamics, but still overestimated measured properties92. In a series of papers from 2008 to 2009, Salanne reported polarizabilities of LiF, NaF, KF, and CsF using DFT condensed phases method, produced the modern PIM, and applied it to LiF-NaF-KF, NaF-ZrF4, LiF-NaF-ZrF4 to derive various thermodynamic properties, including experimentally validated electrical conductivities and diffusion coefficients45,46,47.

Others built on the works by Salanne and Madden, such as Merlet et al. who reported in 2010 multiple diffusion and transport coefficients for various LiF-KF compositions49. Also in 2010, Olivert reported the structural factors of LiF-ZrF4 from a combination of experiments and PIM-based simulations50. In 2011, Salanne and Madden reviewed the importance of polarization effects and promoted the use of PIM52.

In 2012, Salanne described a method for calculating thermal conductivity using the Green-Kubo formalism and applied it to NaCl, MgO, Mg2SiO453. Another review by Salanne in 2012 compared AIM with PIM and published reusable parameters for alkali halides54. In an interesting 2013 study by Benes, heat capacity was determined experimentally for CsF and used to validate DFT and PIM models. While heat capacity was in good agreement with the DFT results, MD simulations did not agree with experimentally determined values for enthalpy of formation55. In 2014, Liu investigated Li-Th4, an important MSR salt, using PIM60.

In 2018, Abramo deployed RIM to model NaCl-KCl liquid-vapor phase, concluding that RIM works well for alkali halides69. In 2019, Guo used PIM to derive thermodynamic properties for Li, Na, K-ThF474. In 2020 Sharma introduced the SEM-drude model which offered an alternative to PIM with 30 times faster execution times81.

As computational power grew, more accurate DFT-based AIMD simulations became available and spawned sustained interest in the fitting of interatomic potentials with functional forms that could allow rapid evaluation for longer simulation times. The literature up to this point suggests many successful proofs of principle, but a limited number of computational assays that could replace experiments. With Moore’s law working in its favor, AIMD might one day become feasible for relevant simulation times and system sizes to derive all thermodynamic potentials, but until then, alternative approaches with higher computational turnaround will remain of high interest.

Machine Learning Era 2020—ongoing

Setting the stage—the promises of machine learning potentials

Machine learning originated from the work by McCulloch and Pitts, who in 1943 proposed artificial neural networks (ANN) as biologically inspired computation architectures93. Many decades passed before useful ANN were constructed, mainly due to increases in computational power and available datasets. Today, old theories are frequently rediscovered and used to design the next breakthrough algorithm, such as ANNs, convolutional neural networks (CNN), or graph neural networks (GNN). Deep learning, a subset of machine learning, serves as a general function approximator that can be trained to mimic any expensive analytical or empirical function, often with substantially reduced execution time. For molten salt simulations, a deep learning method can potentially address the two main problems of existing methods: (1) The limited scalability of ab-initio methods due to their complexity and computational cost for large systems and long timescales; (2) The poor accuracy of efficiently parametrized forcefields due to their limited expressibility.

Novel machine learning tools are frequently evaluated on well-established benchmarks before they find broad adoption. Traditional computer science tasks with well-respected benchmarks, such as data clustering, image annotations, or natural language processing tend to serve as the battleground where new machine learning algorithms prove themselves. Fields like chemical simulations, typically only deploy these models after a knowledge transfer phase. A recent example for this pattern is the attention mechanism94 that substantially improved natural language processing models in a transformer architecture95 and has since influenced models like AlphaFold96.

Managing expectations—the first applications of neural networks to molten salts

A review of machine learning models in the field of molten salt simulations is not expected to identify novel machine learning architectures, but rather show the application of well-established methods in a new context. Indeed, before 2020, only one machine learning inspired method was used to predict saturation pressure of pure ionic liquids: Hekayati et al. trained a simple ANN on 325 experimental vapor pressure points and showed that the resulting model could reproduce the experimental values97. However, this model was not generalizable and serves only as a historical footnote. The successful applications of machine learning techniques to molten salt simulations began in 2020, using ideas from Behler-Parinello98 and Bartok99. Another related discipline that recently started using ML models is that of nanofluid simulations, however, these typically deal with bulk solvent instead of atomistic simulations and are out of scope of this review100,101,102,103,104,105,106,107.

The methods based on Behler-Parinello98 and Bartok99 have common elements depicted in Fig. 5. Both methods require a set of atomistic configurations and associated energies, typically obtained from ab-initio molecular dynamics of small systems (~100 atoms) and short simulation times (<100 ps). Both are trained to predict the energy of each atom in a configuration so that their sum equals the total energy of the system. The differences and current adoptions follow.

A short ab-initio molecular dynamics simulation of a small system generates a training set. One of four commonly used models is fitted or trained. A larger system is then simulated using the custom trained potentials for extended periods. The resulting trajectories are analyzed and thermophysical properties are extracted.

Behler-Parinello proposed a simple feed forward neural network, or multilayer perceptron, that predicts the total energy of a molten salt system as the sum of individual energy contributions of each atom98. This idea is like that of the empirical potentials discussed in section “Progression of DFT-based methods 1990–2021”. Although, instead of pre-defining a functional form, this approach allows the network to be dynamically optimized during training, potentially considering many-body effects and polarization without explicitly defining them. The main contribution of this work was the definition of a symmetry function that translated the neighborhood of each atom into an input vector for the neural network. For atomistic problems, one of the biggest challenges is to find an appropriate way to represent a collection of atoms so that a trained neural network will obey expected invariances (translation, rotation, atom replacement) of the real world. The original work was only applied to pure silicon systems, so it did not need to deal with different atomistic species.

Going deeper—extension of basic neural networks for molten salt simulations

The Behler-Parinello98 concept was further extended by Han et al.108 and Zhang et al.109 in 2018 to yield what they coin a deep potential. In mainstream machine learning literature, the word deep corresponds to a large number of fitting parameters in a network, frequently inside the hidden layers of a network, as opposed to shallow networks with few fitting parameters. The default architecture of deep potential uses five hidden layers with a decreasing number of nodes (240, 120, 60, 30, and 10). Instead of using a symmetry function like Behler-Parinello, deep potential expresses cartesian coordinates of a configuration in local reference frames of each atom.

The main concern with the deep potential method is that a single neural network must be trained for each new configuration and that this network does not explicitly support different atom types. This means two things: (1) It is not possible to train a deep potential for one molten salt mixture and then to transfer it to a slightly different one (FLi to Flibe for example); (2) The neural network is trained on the average distribution of atomic species within a cutoff distance. If on average a NaCl system has 10 Na and 10 Cl atoms as closest neighbors to each atom, then the deep potential will have optimized the weights for the first 10 input nodes for Cl and the last 10 for Na, as they simply sort the neighbor by atom type. If an atom has by chance 8 Na and 12 Cl closest neighbors, the weights for input nodes 9-10 would treat Cl atoms like Na atoms.

Surprisingly, this systematic error has not been more thoroughly discussed by the authors in this or their two influential follow-up papers DeepMD-Kit110 and DP-Gen111, which both use this same approach. For users of deep potential, it is especially important to highlight the compounding effect of this setup for complicated molten salt mixtures. With an increasing number of atomic species in the system, the fluctuations in neighborhoods will prohibit accurate energy assessment for individual atoms, while providing decent predictions on average.

The problem of generalizability of the original Behler-Parinello98 approach was already addressed in 2017 by Smith et al. in their highly influential paper that introduced the ANI-1 network for biochemical simulation.112 Here, the authors used heavily modified symmetry functions that support encoding of recognizable features in the molecular representations and differentiation between atomic species. Most notably, they train neural networks for atomic species, instead of using a single network for all atoms. While this increases the generalizability of the models, which were trained on small molecules but tested with good accuracy on a larger test set, these networks need to be re-trained simultaneously whenever a new atomic species is added to the data set. The first ANI-1 model supported only 4 atom types (H, O, C, N), the ANI-2 model113 expanded this to 7 atom types (S, F, Cl). While the ANI-1x dataset114 is available, the ANI-2 dataset has not been made publicly available. The authors report empirical evidence “that discriminating between atomic numbers allows for training to much lower error on diverse multi-molecule training sets and permits better transferability”114.

The molten salt community has access to the optimized symmetry functions of Smith et al. through the Properties from Artificial Neural Network Architectures (PANNA)115 software suite that was developed to support the training of Behler-Parinello models. Similar to DeepMD-kit, PANNA integrates with the popular large-scale atomistic/molecular massively parallel simulator (LAMPPS)116 MD package.

A popular alternative to training custom neural networks for molten salt simulations is fitting of a Gaussian Approximation Potential (GAP) as introduced by Bartok et al.99 This method uses ab initio configurations to fit a gaussian potential for each atom in the configuration. While these potentials have hyperparameters that can be optimized, the actual fitting of the potentials is fully deterministic opposed to the stochastic processes used in the training of Behler-Parinello ANNs. While GAP does not fully qualify therefore as a machine learning model, the current literature often describes the fitting of the potentials with the same vocabulary (hyper parameter tuning, test/validation/training datasets, etc). Contrary to empirical potentials discussed earlier, GAP is theoretically able to model any complex potential energy landscape, given a sufficiently large dataset of ab initio configurations and energies for fitting.

First proofs of principle—review of applications of neural networks to molten salts

The following overview of recent publications will use either GAP or ANN based methods. All of them follow the same strategy of first running short and small AIMD simulations, fitting a potential specific to their current salt mixture, and then running MD with that potential. Due to limited integrations, all simulations listed here use the LAMPPS package. This strategy overcomes the prohibitive cost of running long AIMD for large systems and holds the promise of higher accuracy than empirical potentials with their limited expressibility.

In 2020, Tovey et al. investigated NaCl with the GAP potential fitted on a AIMD dataset of 1000 configurations, for a system of 64 ions for 14 ps117. MD in LAMPPS was afterward run for up to 10 thousand ions for 30 ns. The resulting PRDF agrees very well with data from beam line experiments. Sivaraman et al. trained a GAP model on AIMD configurations from LiCl simulations and reports good alignment between resulting MD and AIMD simulations118. Nguyen et al. investigated actinide molten salts by training a GAP on AIMD configurations and showed good agreement between experiments and simulations of ThCl4–NaCl and UCl3–NaCl119.

Li et al. fitted a Behler-Parinello model with PANNA to AIMD data of NaCl and showed good agreement between the resulting MD trajectory and the AIMD data120. Lam et al. also used the Behler-Parinello approach with PANNA to train an ANN for LiF and Flibe121. For LiF the mean average error (MAE) of the energy was less than 2.1 and 2.3 meV/atom for liquid and solid LiF respectively which is near the precision of the DFT calculations they used to generate the reference data. For Flibe the MAE in energy was 2.10 meV and a MAE in force of 0.084 eV/Å which is the same order of error inherent in DFT calculations. In a calculation of the ionic diffusion coefficients of Be, F, and Li, all were within ±10–15% error from the DFT calculations which are within the uncertainty of typical experiments.

The Y. Lu lab used the DeepMD-kit to train a deep potential for ZnCl2 on AIMD data with 108 atoms simulated for 30 ps122. Larger MD with 1980 atoms and 100 ps duration was compared with a PIM model that simulated 768 atoms for 500 ps. Extracted properties aligned very well between all models, and the authors suggested a deeper comparison between ML and PIM accuracies. In a follow-up work by the Lu lab, the potential was extended to ZnCL2 mixtures. The resulting ML trajectories showed reasonable agreement within 26% of experiment values for thermal conductivity using the RNEMD method, within 6.6% for specific heat capacity, and within 4.2% for density. These larger uncertainties might well be related to the previously discussed problem of deep potential only training a single ANN. For increasingly complex mixtures, the assignment of atom types to correct input nodes becomes error-prone and should be carefully evaluated in such studies.

The G. Lu lab has embraced the deep potential method and published a series of experiments between 2020 and 2022, first to simulate MgCl2123 and then MgCl2-KCl124. Their strategy is always to run a short AIMD simulation of <100 atoms, use DeepMD-Kit to fit a deep potential, and then follow up with a longer MD simulation using the trained potential. They report consistently good agreement with experimental values for similar studies investigating LiCl-KCl mixture125, alkali chlorides126, lanthanum chlorides127, KCl-CaCl2128, Li2CO3-Na2CO3129, and SrCl2130.

Rodrigues et al. used DeepMD-Kit to fit existing AIMD data for LiF and Flibe to a deep potential before conducting MD that resulted in good agreement with the AIMD data131. Li et al. investigate the interactions of Uranium in NaCL132. They found that a deep potential model trained on AIMD data outperformed classical PIM models. Lee et al. showed also that FLiNaK can be simulated with higher accuracy using a deep potential model than a RIM model trained on AIMD data133. It will be interesting to see if additional reports will substantiate the evidence for deep potentials being superior to the current state-of-the-art empirical potentials and supersede them in the near future.

Evaluating the status quo—how far can current neural networks go?

This comprehensive review of machine learning methods for molten salt simulations clearly shows one shortcoming of the applied methodology. None of these papers has reused a model fitted by other groups. Compared with PIM parameters that allow potential reuse by other groups, this breed of ANNs or GAPs always requires new potential fitting before investigating a novel salt mixture. The core problem is not the available algorithms, as demonstrated by the extensible ANI/ANI2 networks that build off a modified Behler-Parinello approach. It is rather that the recent studies are conducted by users and not developers of ML software. Promising new ML models, like graph neural networks such as the SE(3)-transformer134 are likely going to outperform the current deep potential and Behler-Parinello methods as soon as they become easily trainable and integrated with favorite simulations tools. Especially for more complex mixtures, it will be necessary to make the shift to more extensible architectures, as current approaches suffer too much from the limited expressibility of atom or isotope types. In general, it is worthwhile to remember that machine learning can be optimized through three different approaches: (1) increasing datasets, (2) improved training strategies, (3) better network architectures. While some recent papers try to improve accuracies through increased datasets120, others apply strategies such as active learning135 to reduce the necessary number of training data.

It would be beneficial if a shared database of AIMD configurations of multiple salts would be made available (similar to the ANI dataset), ideally in conjecture with experimentally collected thermophysical properties to create community benchmarks.

As these first studies prove the value of machine learning for replacing ab-initio MD methods and empirical force fields, the current breed of applications only scratches the surface of what is possible. Deep learning does not need to be limited to a specific set of atom species, but novel network architectures, such as graph-based neural networks like the SE(3)-transformer134, could potentially generalize over the entire periodic table of elements and the various isotopes encountered in molten salt reactor simulations. Training of such a general-purpose machine learning model would however require a more community-oriented sharing of training data through open databases and reproducible benchmarks.

Once a more general neural network is available, integration with high-performance MD codes, such as OpenMM136 or Gromacs137, could increase the usage further than PANNA or DeepMD-kit’s current scopes. The limited support of machine learning potential in LAMMPS is currently restricted to Tensorflow implementations, the popular PyTorch library however can more easily be integrated with OpenMM. Coupled with the right simulation tools, accurate potentials could be used not only to investigate defined salt mixtures but rather to propose ideal mixtures for desired properties. In an unsupervised optimization process, molten salt constituents and ratios could be developed that outperform known mixtures and result in safer and more efficient reactors and solar systems.

Summary of potentials

This section shows the functional forms of all commonly used potentials in molten salt simulations in order of first publication.

Born-Huggins-Mayer (BHM)10

The Born-Huggins-Mayer (BHM)10 model which was developed in 1933 is a pair-potential for akali halides. α is the Madelung constant for the associated ionic crystal. C, D are van der Wall constants calculated by Mayer138. βij is the Pauling factor139. b is an arbitrarily chosen factor. σi,σj are the radii of ions. rij is the separation distance of the ions. ρ is empirically determined.

Shell Model13

The Shell Model13 which was developed in 1958 represents an ion composed of a core and shell of opposing charge. The pair potential consists of three terms: electrostatic, short-range, and self-energy interactions which depend on four generalized coordinates. p is the polarization, x+,x− is the distance of the core center to the respective lattice site, d+,d− is the distance of the core site to the shell center, e is the elementary charge, E is the macroscopic electric field, P = Np is the definition of polarization where n+, n− is the number of electrons, N is the number of ions per unit volume, R0 is the lattice separation, and ρ is the same as in the BHM model. D is the exchange charge polarization coefficient.

BHM-Tusi-Fumi (BHMTF)11,15

BHM-Tusi-Fumi (BHMTF)16 model which was developed in 1964 where the effective pair potential differs from the original BHM model by allowing ρ to vary from salt to salt determined semi-empirically and using the effective charge Zi Zj of ion pairs. ni,nj is the number of electrons in the outer shell. All the other parameters are the same as the BHM model11.

Tang-Toennies damping function for dispersion20

The damping function developed in 1984 represents a generalized way to damp the polarization dispersion energy. The accuracy was verified on several ab-initio calculations.

Madden-Wilson Model82

The Madden-Wilson model developed in 1993 includes the original BHM terms with a change of the first coulombic charge to include the variable charge, ν, of the ion and a dipole potential. The dipoles are approximated with the form given, where di is the rod length of the dipoles.

Compressible Ion Model83

This pair potential, developed in 1996, is for oxide-type salts. It is the BHM potential with dispersion Tang-Toennies damping functions and modification of the repulsion term. The ionic radii are allowed to vary and described through the relation \({\sigma }_{i}={\bar{\sigma }}_{i}+{\delta }_{i}\) where \(\bar{\sigma }\) is the average ionic radii and δi describes instantaneous changes based on the environment. The first two terms account for the repulsion term in BHM while the third term, u--, accounts for a frozen oxide-oxide interaction.

Polarizable Ion Model—induced dipole and quadrupole88

This potential, developed in 2002, depends on the dipole moment, μ, the quadrupole moment, θ, and Q, the formal charge of the ion. The \({T}_{\alpha \beta \gamma }^{i}\ldots\) is the interaction tensors, while ki are proportional to polarizabilities.

Aspherical Ion Model25,28

This model, developed partially in 1998 and finished in 2006 combines the Compressible Ion Model and the Polarizable model.

Polarizable Ion Model—induce dipole (PIM)45

The model, developed in 2008 is reduced to dipole contribution plus the BHMTF model. The dipole moments are calculated self consistently.

Behler-Parinello Neural Network (BPNN)98

where \({w}_{{ij}}^{k}\) is the weight parameter connecting node j in layer k with node i in layer k – 1, and \({w}_{0j}^{k}\) is a bias weight that is used as an adjustable offset for the activation functions \({f}_{a}^{k}\): hyperbolic tangent for hidden layers and liner function for output. Weights were initially randomly chosen but error functions can be minimized to obtain correct weights. \({G}_{i}^{\mu }\) is a symmetry function of the cartesian coordinates \({R}_{i}^{\alpha }\) of the local environment of the i atom. The locality is determined by the function

where Rc is some predetermined cutoff radius. The radial symmetry function is

where η,Rs are parameters. The angular symmetry function is

where \(\xi ,\lambda \in \left\{-1,1\right\}\) are new parameters and \({{\cos }}{\theta }_{{ijk}}=\frac{{{{{{{\bf{R}}}}}}}_{{ij}}\cdot {{{{{{\bf{R}}}}}}}_{{jk}}}{{R}_{{ij}}{R}_{{jk}}}\) with i being the central atom and \({{{{{{\bf{R}}}}}}}_{\alpha \beta }={{{{{{\bf{R}}}}}}}_{\alpha }-{{{{{{\bf{R}}}}}}}_{\beta }\)

Deep potential108

where f,w comes from a fully-connected feedforward neural network. Ii is the input vector of the function: it takes in the positions of all the atoms (in cartesian or some polar-like coordinate system \(\left\{\frac{1}{r},{{\cos }}\;\theta ,{{\cos }}\;\phi ,{{\sin }}\;\phi \right\}\)) centered on the i atom with a cutoff of the nearest Nc neighbor atoms determined from the max number of atoms within the cutoff radius of Rc. The weights are constrained to be the same for atom-types α. The ordering of the inputs into Ii at the first level is atom-type α and at the second level ascending distance from the i atom.

Deep Potential Molecular Dynamics (DeepMD)109

where Ei comes from Deep Potential except for Ii is called Dij:

where Rij is the separation between atoms i,j and \({{{{{{\bf{x}}}}}}}_{{ij}}^{\alpha }\) are the cartesian components of the separation vector Rij and D is ambiguous in which situation which case should be used. The parameters are determined by the Adams method, see the associated paper for more details. The parameters are from the fitting functions which are of the similar form described in BPNN.

Gaussian Approximation Potentials (GAP)99

represents the total energy of the system where rij is the separation vector and ϵ is the atomic energy function. GAP uses a localized version of the above equation replacing the separation vector with the truncated spectrum space: \(\epsilon \left(\left\{{{{{{{\bf{r}}}}}}}_{ij}\right\}\right)\to \epsilon ({{{{{\bf{b}}}}}})\). The spectrum space is constructed from the local atomic density of each atom i with a cutoff radius determining the locality and projected into 4D spherical harmonics which encapsulates the 3D spherical coordinates. The projections form a basis set with the Clebsch-Gordon coefficients to determine the intensity of each projection.

where the index i is dropped in the equations above and the following definitions: \({U}_{{m}^{{\prime} }m}^{j}\)are the Wigner matrices (4D spherical harmonics), ρ is the local atomic density of the i atom, \({C}_{{j}_{1}{m}_{1}{j}_{2}{m}_{2}}^{{jm}}\) are the Clebsch-Gordon coefficients, and the total angular momentum is truncated, \(j,{j}_{1},{j}_{2}\le {J}_{{\max }}\), to the associated atomic neighborhood.

The atomic energy function is approximated as a series of Gaussians:

where n,l run over the reference configuration and spectrum components, αn is the fitting parameter, and θl is a hyper parameter. The function is fit by least-squares fitting using the covariance matrix:

where δ, σ are hyperparameters and y is the set of reference energies.

Comparing accuracy of models based on derived properties

Sections “Early simulations 1933–1990, Progression of DFT-based methods 1990–2021, Machine Learning Era 2020—ongoing” have summarized the various computational methods deployed to simulate molten salts: ab-initio molecular dynamics (AIMD), rigid ion model (RIM), polarizable ion model (PIM), Gaussian Approximation Potential (GAP), and deep potential learning methods (DeePMD). A comprehensive review of all computational potentials reported has been provided in Section 3.4. Here, we use metanalysis to provide a comparison between the different methods.

Comparing the accuracy of models quantitatively is impractical due to the lack of consistency in reporting properties across the literature and the nature of the derived properties. For thermophysical properties, the data is primarily in graphical form to capture the relationship between temperature and that property, but for structural properties, some numerical values, such as coordination number and position of local minimums and maximums, are reported in the literature.

We compare the methods using the first peak position of the PDF of Li-Cl ions as shown in Table 1. This value represents the average bond length of the Li-Cl ion and presents a reasonable way to compare the accuracy of models. AIMD and Experiment agree well, with AIMD capturing the descending behavior with respect to increasing temperature. PIM, GAP, and DeePMD agree reasonably well with AIMD (the value of GAP was not directly reported but extracted from a graph by visual approximation). RIM does not capture the temperature dependent behavior, which underlines the importance of polarization effects as widely described in the literature.

Another frequently simulated salt is Flibe, for the first peak position of the partial radial distribution function we find good agreement across PIM80, RIM140, AIMD35,44, X-ray diffraction experiment141, and ML methods121,131. Only the modified RIM shows disagreement of 0.1 Å for the fluoride-fluoride pair (see Supplementary Data 1—Table S1).

Multiple thermophysical properties of Flibe have been reported across different methods. For density: RIM agrees to 0.5% with experiment142; PIM didn’t report density but demonstrated good agreement with experimental molar volumes80; similarly, the DFT methods reported agreement with experimental equilibrium volumes143,144 by (9–18)% with better agreement using PBE with dispersion corrections (vdw-DF145) with 2% inaccuracy146; ANI-1 underpredicted density by 6%, potentially because this study didn’t use a training dataset that corrected for dispersion121; DeePMD underpredicted density by 14% compared to experiment131.

For diffusion, it is difficult to define an accurate benchmark as there have not been many experimental measurements and the accuracy of AIMD is limited due to short time scales. Compared to AIMD, ANI-1 and DeePMD show 10–15% agreement while PIM shows agreement to within 30%. There is no report for RIM on diffusion coefficients, but we don’t expect it to be better based on the viscosity and electrical conductivity data.

For Electrical conductivity: RIM is close to experimental values for higher temperatures but deviates much further for lower temperatures, PIM shows better agreement with experimental data, and DeePMD appears to do worse than PIM but not by much. Thermal conductivity was overestimated by 20% with PIM compared to accepted experimental results. DPMD finds better agreement than PIM. ANI-1 didn’t report thermal or electrical conductivities; however, we expect a similar or even better performance to DeePMD.

For viscosity, DeePMD performs best followed by PIM then by RIM, other methods did not report viscosities. Lam et al. compared computational times of PIM, AIMD, and AN1-1 and found that the computational time in ascending order was PIM, ANI-1, then AIMD demonstrating that PIM is faster than ANI-1121.

It is unclear what may definitively be said about the best model due to inconsistency in reported values across all thermophysical properties. The ML methods show promise with ANI-1 and GAP demonstrating the greatest accuracy but are suspected to suffer from transferability across compositions where PIM may outperform due to its parameters originating from a larger composition space91.

Conclusions and outlook

Accurate prediction of thermophysical properties of molten salts can have an immeasurable impact on our society as new reactor and solar power systems are being developed. Throughout the last 80 years, breakthroughs in theory, computational power, and experiments have substantially advanced our ability to extract the necessary properties from simulations. Today, we witness the advent of machine learning in molten salt simulations and foresee unprecedented improvements in our abilities to design and use new molten salt mixtures. Machine learning models present a valuable midground between the accuracy and efficiency of classical force fields and ab-inito calculations. Thermophysical properties derived from extended simulations will be able to fit more accurate computational fluid dynamic models and help in designing superior molten salt systems.

To support the development of next-generation machine learning methods we identify the following concrete needs: (1) Existing data from DFT calculations should be made freely accessible and transparent to enable data science groups to train novel models; (2) high-performance integrations of machine learning forces into existing molecular dynamics toolkits should be extended beyond the current LAMMPS integrations; (3) reusability of machine learning models should be increased by proper sharing and documentation of models; (4) a set of experimental benchmarks should be defined for a representable set of molten salts to allow for reproducible assessment of the quality of predicted thermophysical properties; (5) an open library for the analysis of trajectories and extraction of thermophysical properties should be made available to render results amongst studies more comparable.

Additional opportunities that may lead to improved machine learning models include the following: (1) Design of custom machine learning architectures for molten salts that incorporate inductive biases, which will require close collaboration between chemists and computer scientists; (2) introduction of superior active learning techniques to develop optimal training strategies; (3) development of architectures that can generalize beyond a few atom types, ideally to the space of all relevant molten salts and solvents.

The grand challenge of molten salt simulations is optimizing salt mixtures for desired thermophysical properties. Current machine learning models are still limited to predictions for mixtures that were used to train them and small variations in experimental conditions, such as temperature. We expect that better datasets, training strategies, and architectures will soon overcome these limitations.

References

Pan, G. et al. Finite-size effects on thermal property predictions of molten salts. Solar Energy Mater. Solar Cell. 221, 110884 (2020).

DeFever, R. S., Wang, H., Zhang, Y. & Maginn, E. J. Melting points of alkali chlorides evaluated for a polarizable and non-polarizable model. J. Chem. Phys. 153, 011101 (2020).

Li, B., Dai, S. & Jiang, D.-E. First-Principles Molecular Dynamics Simulations of UCln–NaCl (n = 3, 4) Molten Salts. ACS Appl. Energy Mater. 2, 2122–2128 (2019).

Murnaghan, F. Proceedings of the National Academy of Sciences of the United States. America 30, 244–247 (1944).

Bengtson, A., Nam, H. O., Saha, S., Sakidja, R. & Morgan, D. First-principles molecular dynamics modeling of the LiCl–KCl molten salt system. Comput. Mater. Sci. 83, 362–370 (2014).

Rong, Z., Ding, J., Wang, W., Pan, G. & Liu, S. Ab-initio molecular dynamics calculation on microstructures and thermophysical properties of NaCl–CaCl2–MgCl2 for concentrating solar power. Solar Energy Mater. Solar Cell. 216, 110696 (2020).

Wang, J., Sun, Z., Lu, G. & Yu, J. Molecular Dynamics Simulations of the Local Structures and Transport Coefficients of Molten Alkali Chlorides. J. Phys. Chem. B 118, 10196–10206 (2014).

Walz, M.-M. & van der Spoel, D. Molten alkali halides—temperature dependence of structure, dynamics and thermodynamics. Phys. Chem. Chem. Phys. 21, 18516–18524 (2019).

Abramo, M. C. et al. Structure factors and x-ray diffraction intensities in molten alkali halides. J. Phys. Commun. 4, 075017 (2020).

Huggins, M. L. & Mayer, J. E. Interatomic Distances in Crystals of the Alkali Halides. J. Chem. Phys. 1, 643–646 (1933).

Sangster, M. J. L. & Dixon, M. Interionic potentials in alkali halides and their use in simulations of the molten salts. Adv. Phys. 25, 247–342 (1976).

Tessman, J. R., Kahn, A. H. & Shockley, W. Electronic Polarizabilities of Ions in Crystals. Phys. Rev.92, 890–895 (1953).

Dick, B. G. & Overhauser, A. W. Theory of the Dielectric Constants of Alkali Halide Crystals. Phys. Rev. 112, 90–103 (1958).

Alder, B. J. & Wainwright, T. E. Studies in Molecular Dynamics. I. General Method. J. Chem. Phys. 31, 459–466 (1959).

Fumi, F. G. & Tosi, M. P. Ionic sizes and born repulsive parameters in the NaCl-type alkali halides—I: The Huggins-Mayer and Pauling forms. J. Phys. Chem. Solids 25, 31–43 (1964).

Hohenberg, P. & Kohn, W. Inhomogeneous Electron Gas. Phys. Rev. 136, B864–B871 (1964).

Leblanc, D. Molten salt reactors: A new beginning for an old idea. Nucl. Eng. Design 240, 1644–1656 (2010).

Grimes, W. R. Molten-Salt Reactor Chemistry. Nucl. Appl. Technol. 8, 137–155 (1970).

Woodcock, L. V. & Singer, K. Thermodynamic and structural properties of liquid ionic salts obtained by Monte Carlo computation. Part 1.—Potassium chloride. Trans. Faraday Soc. 67, 12–30 (1971).

Tang, K. T. & J. P. T. An improved simple model for the van der Waals potential based on universal damping functions for the dispersion coefficients. J. Chem. Phys. 80, 3726–3741 (1984).

Fowler, P. & Madden, P. Fluctuating dipoles and polarizabilities in ionic materials: Calculations on LiF. Phys. Rev. B 31, 5443–5455 (1985).

Car, R. & Parrinello, M. Unified Approach for Molecular Dynamics and Density-Functional Theory. Phys. Rev. Lett. 55, 2471–2474 (1985).

Barnett, R. N. & Landman, U. Born-Oppenheimer molecular-dynamics simulations of finite systems: Structure and dynamics of (H2O)2. Phys. Rev. B 48, 2081–2097 (1993).

Kresse, G. & Hafner, J. Ab initio molecular dynamics for liquid metals. Phys. Rev. B 47, 558–561 (1993).

Rowley, A. J., J̈emmer, P., Wilson, M. & Madden, P. A. Evaluation of the many-body contributions to the interionic interactions in MgO. J. Chem. Phys. 108, 10209–10219 (1998).

Andre ́s Aguado, L. B. Sandro Jahn & Paul A. Madden, Multipoles and interaction potentials in ionic materials fromplanewave-DFT calculations. Faraday Discussions 124, 171–184 (2003).

Hazebroucq, S. et al. Density-functional-based molecular-dynamics simulations of molten salts. J. Chem. Phys. 123, 134510 (2005).

Madden, P. A., Andres Aguadoc, R. H. & Jahn, S. From first-principles to material properties. J. Mol. Struct. 771, 9–18 (2006).

Jones, R. O. Density functional theory: Its origins, rise to prominence, and future. Rev. Modern Phys. 87, 897–923 (2015).

Klix, A., Suzuki, A. & Terai, T. Study of tritium migration in liquid Li2BeF4 with ab initio molecular dynamics. Fusion Eng. Design 81, 713–717 (2006).

Corradini, D., Marrocchelli, D., Madden, P. A. & Salanne, M. The effect of dispersion interactions on the properties of LiF in condensed phases. J. Phys. Condens. Matter 26, 244103 (2014).

Anderson, M. et al. Heat Transfer Salts for Nuclear Reactor Systems—Chemistry Control, Corrosion Mitigation, and Modeling; DOE/NEUP-10-905; Other: 10-905; TRN: US1500352 United States; Univ. of Wisconsin, Madison, WI (United States); Univ. of California, Berkeley, CA (United States); Pacific Northwest National Lab. (PNNL), Richland, WA (United States): 2015; p Medium: ED; Size: 216 p.

Dai, J., Han, H., Li, Q. & Huai, P. First-principle investigation of the structure and vibrational spectra of the local structures in LiF–BeF2 Molten Salts. J. Mol. Liquids 213, 17–22 (2016).

Kwon, C., Kang, J., Kang, W., Kwak, D. & Han, B. First principles study of the thermodynamic and kinetic properties of U in an electrorefining system using molybdenum cathode and LiCl-KCl eutectic molten salt. Electrochim. Acta. 195, 216–222 (2016).

Liu, S. et al. Investigation on molecular structure of molten Li2BeF4 (FLiBe) salt by infrared absorption spectra and density functional theory (DFT). J. Mol. Liquids 242, 1052–1057 (2017).

Kwon, C., Noh, S. H., Chun, H., Hwang, I. S. & Han, B. First principles computational studies of spontaneous reduction reaction of Eu(III) in eutectic LiCl-KCl molten salt. Int. J. Energy Res. 42, 2757–2765 (2018).

Mukhopadhyay, S. & Demmel, F. Modelling of structure and dynamics of molten NaF using first principles molecular dynamics. AIP Conf. Proc. 1969, 030001 (2018).

Guo, H. et al. First-principles molecular dynamics investigation on KF-NaF-AlF3 molten salt system. Chem. Phys. Lett. 730, 587–593 (2019).

Li, J., Guo, H., Zhang, H., Li, T. & Gong, Y. First-principles molecular dynamics simulation of the ionic structure and electronic properties of Na3AlF6 molten salt. Chem. Phys. Lett. 718, 63–68 (2019).

Xi, J., Jiang, H., Liu, C., Morgan, D. & Szlufarska, I. Corrosion of Si, C, and SiC in molten salt. Corrosion Sci. 146, 1–9 (2019).

Gill, S. K. et al. Connections between the Speciation and Solubility of Ni(II) and Co(II) in Molten ZnCl(2). J. Phys. Chem. B 124, 1253–1258 (2020).

Duemmler, K. et al. Evaluation of thermophysical properties of the LiCl-KCl system via ab initio and experimental methods. J. Nucl. Mater. 559, 153414 (2021).

Liu, X., Li, Y., Wang, B. & Wang, C. Raman and density functional theory studies of lutecium fluoride and oxyfluoride structures in molten FLiNaK. Spectrochim. Acta Part A 251, 119435 (2021).

Baral, K. et al. Temperature-dependent properties of molten Li2BeF4 Salt using Ab initio molecular dynamics. ACS omega 19822–19835 (2021).

Salanne, M. et al. Polarizabilities of individual molecules and ions in liquids from first principles. J. Phys. Condens. Matter 20, 494207 (2008).

Salanne, M. et al. Transport in molten LiF–NaF–ZrF4 mixtures: A combined computational and experimental approach. J. Fluor. Chem. 130, 61–66 (2009).

Salanne, M., Simon, C., Turq, P. & Madden, P. A. Heat-transport properties of molten fluorides: Determination from first-principles. J. Fluor. Chem. 130, 38–44 (2009).

Sarou-Kanian, V. et al. Diffusion coefficients and local structure in basic molten fluorides: in situNMR measurements and molecular dynamics simulations. Phys. Chem. Chem. Phys. 11, 11501–11506 (2009).

Merlet, C., Madden, P. A. & Salanne, M. Internal mobilities and diffusion in an ionic liquid mixture. Phys. Chem. Chem. Phys. 12, 14109 (2010).

Pauvert, O. et al. In Situ Experimental Evidence for a Nonmonotonous Structural Evolution with Composition in the Molten LiF−ZrF4System. J. Phys. Chem. B 114, 6472–6479 (2010).

Pauvert, O. et al. Ion Specific Effects on the Structure of Molten AF-ZrF4Systems (A+= Li+, Na+, and K+). J. Phys. Chem. B 115, 9160–9167 (2011).

Salanne, M. & Madden, P. A. Polarization effects in ionic solids and melts. Mol. Phys. 109, 2299–2315 (2011).

Salanne, M., Marrocchelli, D., Merlet, C., Ohtori, N. & Madden, P. A. Thermal conductivity of ionic systems from equilibrium molecular dynamics. J. Phys. Condens. Matter 23, 102101 (2011).

Salanne, M. et al. Including many-body effects in models for ionic liquids. Theor. Chem. Accounts 131, 1143 (2012).

Beneš, O. et al. A comprehensive study of the heat capacity of CsF from T=5 K to T=1400 K. J. Chem. Thermodyn. 57, 92–100 (2013).

Dewan, L. C., Simon, C., Madden, P. A., Hobbs, L. W. & Salanne, M. Molecular dynamics simulation of the thermodynamic and transport properties of the molten salt fast reactor fuel LiF–ThF4. J. Nucl. Mater. 434, 322–327 (2013).

Sooby, E. et al. Candidate molten salt investigation for an accelerator driven subcritical core. J. Nucl. Mater. 440, 298–303 (2013).

Bessada, C. et al. In Situ Experimental Approach of Speciation in Molten Fluorides: A Combination of NMR, EXAFS, and Molecular Dynamics. In Molten Salts Chemistry and Technology. (eds M. Gaune-Escard, G. M. H.) 219–228, (2014).

Ishii, Y., Sato, K., Salanne, M., Madden, P. A. & Ohtori, N. Thermal Conductivity of Molten Alkali Metal Fluorides (LiF, NaF, KF) and Their Mixtures. J. Phys. Chem. B 118, 3385–3391 (2014).

Liu, J. B. et al. Theoretical studies of structure and dynamics of molten salts: the LiF-ThF4 system. J. Phys. Chem. B 118, 13954–62 (2014).

Chakraborty, B. Sign Crossover in All Maxwell-Stefan Diffusivities for Molten Salt LiF-BeF2: A Molecular Dynamics Study. J. Phys. Chem. B 119, 10652–63 (2015).

Dai, J., Long, D., Huai, P. & Li, Q. Molecular dynamics studies of the structure of pure molten ThF4 and ThF4–LiF–BeF2 melts. J. Mol. Liquids 211, 747–753 (2015).

Dario Corradini, Y. I., N. Ohtori, M. Salanne, DFT-based polarizable force field for TiO2 and SiO2. Model. Simul. Mater. Sci. Eng. 23, 074005 (2015).

Gheribi, A. E., Salanne, M. & Chartrand, P. Thermal transport properties of halide solid solutions: Experiments vs equilibrium molecular dynamics. J. Chem. Phys. 142, 124109 (2015).

Gheribi, A. E. & Chartrand, P. Thermal conductivity of molten salt mixtures: Theoretical model supported by equilibrium molecular dynamics simulations. J. Chem. Phys. 144, 084506 (2016).

Gheribi, A. E., Salanne, M. & Chartrand, P. Formulation of Temperature-Dependent Thermal Conductivity of NaF, β-Na3AlF6, Na5Al3F14, and Molten Na3AlF6 Supported by Equilibrium Molecular Dynamics and Density Functional Theory. J. Phys. Chem. C 120, 22873–22886 (2016).

Shengjie Wang, H. L., Huiqiu, D., Shifang, X. & Wangyu, H. A molecular dynamics study of the transport properties of LiF-BeF2-ThF4molten salt. J. Mol. Liquids 234, 220–226 (2017).

Shishido, H., Yusa, N., Hashizume, H., Ishii, Y. & Ohtori, N. Thermal Design Investigation for a Flinabe Blanket System. Fusion Sci. Technol. 72, 382–388 (2017).

Abramo, M. C. et al. Molecular dynamics determination of liquid-vapor coexistence in molten alkali halides. Phys. Rev. E 98, 010103 (2018).

Dai, J.-X. et al. Molecular dynamics investigation on the local structures and transport properties of uranium ion in LiCl-KCl molten salt. J. Nucl. Mater. 511, 75–82 (2018).

Gheribi, A. E. et al. On the determination of ion transport numbers in molten salts using molecular dynamics. Electrochim. Acta. 274, 266–273 (2018).

Liu, J. B., Chen, X., Lu, J. B., Cui, H. Q. & Li, J. Polarizable force field parameterization and theoretical simulations of ThCl(4) -LiCl molten salts. J. Comput. Chem. 39, 2432–2438 (2018).

Gheribi, A. E. et al. Study of the Partial Charge Transport Properties in the Molten Alumina via Molecular Dynamics. ACS Omega. 4, 8022–8030 (2019).

Guo, X. et al. Theoretical evaluation of microscopic structural and macroscopic thermo-physical properties of molten AF-ThF4 systems (A+=Li+, Na+ and K+). J. Mol. Liquids 277, 409–417 (2019).

Wu, F. et al. Elucidating Ionic Correlations Beyond Simple Charge Alternation in Molten MgCl2–KCl Mixtures. J. Phys. Chem. Lett. 10, 7603–7610 (2019).

Wu, J., Ni, H., Liang, W., Lu, G. & Yu, J. Molecular dynamics simulation on local structure and thermodynamic properties of molten ternary chlorides systems for thermal energy storage. Comput. Mater. Sci. 170, 109051 (2019).

Bessada, C. et al. Investigation of ionic local structure in molten salt fast reactor LiF-ThF4-UF4 fuel by EXAFS experiments and molecular dynamics simulations. J. Mol. Liquids 307, 112927 (2020).

Dai, J.-X., Zhang, W., Ren, C.-L. & Guo, X.-J. Prediction of dynamics properties of ThF4-based fluoride molten salts by molecular dynamic simulation. J. Mol. Liquids 318, 114059 (2020).

Li, B., Dai, S. & Jiang, D.-E. Molecular dynamics simulations of structural and transport properties of molten NaCl-UCl3 using the polarizable-ion model. J. Mol. Liquids 299, 112184 (2020).

Smith, A. L., Capelli, E., Konings, R. J. M. & Gheribi, A. E. A new approach for coupled modelling of the structural and thermo-physical properties of molten salts. Case of a polymeric liquid LiF-BeF2. J. Mol. Liquids 299, 112165 (2020).

Sharma, S. et al. SEM-Drude Model for the Accurate and Efficient Simulation of MgCl2–KCl Mixtures in the Condensed Phase. J. Phys. Chem. A 124, 7832–7842 (2020).

Wilson, M. & Madden, P. A. Polarization effects in ionic systems from first principles. J. Phys. Condens. Matter 5, 2687–2706 (1993).

Wilson, M., Madden, P. A., Pyper, N. C. & Harding, J. H. Molecular dynamics simulations of compressible ions. J. Chem. Phys. 104, 8068–8081 (1996).

Wilson, M., Madden, P. A. & Costa-Cabral, B. J. Quadrupole Polarization in Simulations of Ionic Systems: Application to AgCl. J. Phys. Chem. 100, 1227–1237 (1996).

Wilson, P. A. M. A. M. ‘Covalent’ effects in ‘ionic’ systems. Chem. Soc. Rev. 25, 339–350 (1996).

J̈emmer, P., Wilson, M., Madden, P. A. & Fowler, P. W. Dipole and quadrupole polarization in ionic systems: Ab initio studies. J. Chem. Phys. 111, 2038–2049 (1999).

Hutchinson, F., Wilson, M. & Madden, P. A. A unified description of MCI3 systems with a polarizable ion simulation model. Mol. Phys. 99, 811–824 (2001).

Carmen Domene, P. W. F., Wilson, M. & Madden, P. A. A transferable representation of the induced multipoles in ionic crystals. Mol. Phys. 100, 3847–3865 (2002).

Galamba, N., Nieto de Castro, C. A. & Ely, J. F. Thermal conductivity of molten alkali halides from equilibrium moleculardynamics simulations. J. Chem. Phys. 120, 8676–8682 (2004).

Richard Brookes, A. D. Gyanprakash Ketwaroo, & Paul A. Madden, Diffusion Coefficients in Ionic Liquids: Relationship to the Viscosity. J. Phys. Chem. B 109, 6485–6490 (2004).

Heaton, R. J. et al. A First-Principles Description of Liquid BeF2and Its Mixtures with LiF: 1. PotentialDevelopment and Pure BeF. J. Phys. Chem. B 110, 11454–11460 (2006).

Galamba, N., Nieto de Castro, C. A. & Ely, J. F. Equilibrium and nonequilibrium moleculardynamics simulations of the thermalconductivity of molten alkali halides. J. Chem. Phys. 126, 204511 (2007).

McCulloch, W. S. & Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Mathem. Biophys. 5, 115–133 (1943).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inform. Process. Syst. 30, (2017).

Devlin, J., Chang, M.-W., Lee, K., Toutanova, K., Bert. Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 2018.

Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 596, 583–589 (2021).

Hekayati, J. & Rahimpour, M. R. Estimation of the saturation pressure of pure ionic liquids using MLP artificial neural networks and the revised isofugacity criterion. J. Mol. Liquids 230, 85–95 (2017).

Behler, J. & Parrinello, M. Generalized Neural-Network Representation of High-Dimensional Potential-Energy Surfaces. Phys. Rev. Lett. 98, 146401 (2007).

Bartók, A. P., Payne, M. C., Kondor, R. & Csányi, G. Gaussian Approximation Potentials: The Accuracy of Quantum Mechanics, without the Electrons. Phys. Rev. Lett. 104, 136403 (2010).

Ma, T., Guo, Z., Lin, M. & Wang, Q. Recent trends on nanofluid heat transfer machine learning research applied to renewable energy. Renew. Susta. Energy Rev. 138, 110494 (2021).

Hassanpour, M., Vaferi, B. & Masoumi, M. E. Estimation of pool boiling heat transfer coefficient of alumina water-based nanofluids by various artificial intelligence (AI) approaches. Appl. Thermal Eng. 128, 1208–1222 (2018).

Jamei, M. et al. On the specific heat capacity estimation of metal oxide-based nanofluid for energy perspective–A comprehensive assessment of data analysis techniques. Int. Commun. Heat Mass Transfer 123, 105217 (2021).

Jamei, M., Ahmadianfar, I., Olumegbon, I. A., Karbasi, M. & Asadi, A. On the assessment of specific heat capacity of nanofluids for solar energy applications: Application of Gaussian process regression (GPR) approach. J. Energy Storage 33, 102067 (2021).

Adun, H., Wole-Osho, I., Okonkwo, E. C., Kavaz, D. & Dagbasi, M. A critical review of specific heat capacity of hybrid nanofluids for thermal energy applications. J. Mol. Liquids 340, 116890 (2021).

Jafari, K., Fatemi, M. H. & Estellé, P. Deep eutectic solvents (DESs): A short overview of the thermophysical properties and current use as base fluid for heat transfer nanofluids. J. Mol. Liquids 321, 114752 (2021).

Jamei, M. et al. Estimating the density of hybrid nanofluids for thermal energy application: Application of non-parametric and evolutionary polynomial regression data-intelligent techniques. Measurement 189, 110524 (2022).

Parida, D. R., Dani, N. & Basu, S. Data-driven analysis of molten-salt nanofluids for specific heat enhancement using unsupervised machine learning methodologies. Solar Energy 227, 447–456 (2021).