Abstract

Artificial intelligence (AI) in the domain of healthcare is increasing in prominence. Acceptance is an indispensable prerequisite for the widespread implementation of AI. The aim of this integrative review is to explore barriers and facilitators influencing healthcare professionals’ acceptance of AI in the hospital setting. Forty-two articles met the inclusion criteria for this review. Pertinent elements such as the type of AI, factors influencing acceptance, and the participants’ profession were extracted from the included studies, and the studies were appraised for their quality. The data extraction and results were presented according to the Unified Theory of Acceptance and Use of Technology (UTAUT) model. The included studies revealed a variety of facilitating and hindering factors for AI acceptance in the hospital setting. Clinical decision support systems (CDSS) were the AI form included in most studies (n = 21). Heterogeneous results were reported with regard to the perceived effects of AI on error occurrence, alert sensitivity and time resources. In contrast, fear of a loss of (professional) autonomy and difficulties in integrating AI into clinical workflows were unanimously reported to be hindering factors. On the other hand, training for the use of AI facilitated acceptance. Heterogeneous results may be explained by differences in the application and functioning of the different AI systems as well as inter-professional and interdisciplinary disparities. To conclude, in order to facilitate acceptance of AI among healthcare professionals, it is advisable to integrate end-users in the early stages of AI development, to offer needs-adjusted training for the use of AI in healthcare, and to provide adequate infrastructure.

Introduction

Artificial intelligence (AI) is associated with the mechanization of intelligent human behaviour1, especially the display of intelligent, human-like thinking and reasoning2. AI is a domain of computer science concerned with the development of technology that is able to extract underlying information from a data set and transform it into operative knowledge. This transformation is based on algorithms that may be either predetermined or adaptive3. The term AI was coined in 1956 by John McCarthy but is often connected to the now so-called Turing test, a hypothetical setup to test whether or not a machine is able to exhibit intelligent behaviour. Many methods, e.g. knowledge graphs or machine learning techniques, have been applied to approximate such behaviour, often without reaching applicability due to computational limits4. However, computational limits have seemingly been overcome in many applications5. With the increased proliferation of novel AI solutions, issues of reliability, correctness, understanding and trustworthiness have come to the forefront. These issues, together with the expansion into applications not yet covered by AI solutions, mean that the potential of AI technologies has not yet been fully realized, and the continuing growth in the development of AI technologies keeps promising new perspectives2. Many fields that introduced this new form of intelligence in their domains have witnessed a growth in productivity and efficacy1. However, the advantages and disadvantages of AI have to be weighed against one another prior to widespread introduction1.

The characterization that defines AI as systems that exhibit behaviours or decisions commonly attributed to human intelligence and cognition is widely accepted2. The typically necessary components of such decisions include recognition of a complex situation, the ability to abstract, and the application of factual knowledge6. Not all components are always present, nor are these systems “learning” in every case5. What is decisive for the differentiation from classical systems is that AI systems evaluate complex situations individually and are not based on simple, a priori known parameterizations with few input variables5.

AI developers are trying to apply their technologies in many fields such as engineering, gaming and education1. Lately, the development of AI technologies has expanded to medical practice and their implementation in complex healthcare work environments has begun1,7,8,9,10,11. Choudhury et al.12 have defined AI in healthcare as ‘an adaptive technology leveraging advanced statistical algorithm(s) to analyse structured and unstructured medical data, often retrospectively, with the final goal of predicting a future outcome, identifying hidden patterns, and extracting actionable information with clinical and situational relevance’ (p. 107)12. While AI systems can be applied in the supporting functions (e.g. administrative, legal, financial tasks) around healthcare with similar risks and rewards as in other industries, application to the primary functions of healthcare puts a higher demand on suppliers due to regulation and possible impact. While statistical fluctuations that are typical elsewhere might not be acceptable in the healthcare setting, approaches using knowledge graphs or rule-based techniques, even in combination with machine learning, can lead to intelligent systems that are robust enough to withstand the scrutiny of governing bodies and medical guidelines. Furthermore, systems that do not act fully on their own but instead offer support to a licensed professional overseeing the actual application can be made to satisfy legal and regulatory requirements1.

Until now, AI has been established in the healthcare sector with the purpose of proposing efficient and practice-oriented solutions for patients and healthcare providers. In this field, AI is being developed to benefit healthcare professionals such as physicians and nurses in decision-making, diagnosis, prognosis, treatment and relief from physically demanding tasks1,11,13. However, these applications are not yet being extended to larger settings. Ethical issues, lack of standardization, and unclear legal liability are among the challenges facing the widespread adoption of AI in healthcare today14.

Healthcare professionals face newly introduced change and its implementation with mixed attitudes and feelings1,13. Accepting change is not a simple process. Humans are known to resist change in favour of the comfortable status quo. However, in order to improve efficiency and workflow in the long run, acceptance is a key element in adopting and implementing newly introduced changes such as AI in daily practice5,15.

In the context of technology, acceptance is defined as the willingness, intention and internal motivation to use a technology as a result of positive attitudes towards the technology or system16. Acceptance of AI systems plays a similar role as with the introduction of all other new tools. However, the less predictable handling of complex situations and the desired human-like behaviour quickly lead to more resistance15. On the side of the developers of these systems, acceptability rather than acceptance is studied. This is usually associated with terms like comprehensibility or transparency, which are supposed to lead directly to acceptability17. This is applied at the technical and legal level and in decisions about deployment at the management level. The level of acceptance by the user eludes such approaches and should instead be evaluated directly. Only through this step can acceptance be traced back to acceptability17.

This integrative review aims to unravel the variety of reported causes for the limited acceptance as well as facilitating factors for the acceptance of AI usage in the hospital setting to date. The assessment and analysis of reasons for distrust and limited usage are of utmost importance to face the increasing demands and challenges of the healthcare system as well as for the development of adequate, needs-driven AI systems while acknowledging their associated limitations. This includes the identification of factors influencing the acceptance of AI as well as a discussion of the mechanisms associated with the acceptance of AI in light of current literature. This review’s findings aim to serve as a basis for further practical recommendations to improve healthcare workers’ acceptance of AI in the hospital setting and thereby harness the full potential of AI.

Results

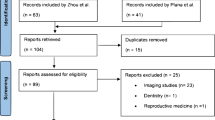

As shown in Fig. 1, the database search generated 21,114 references. After deleting duplicates, sorting the articles according to the inclusion and exclusion criteria and applying forward citation tracking, a total of 42 articles were included in this review.

Most studies were carried out in Europe (n = 13) followed by Asia (n = 12) and North America (n = 10). Further studies were conducted in Africa (n = 4) and Australia (n = 2). One international study was carried out in 25 different countries. There were qualitative studies (n = 18), quantitative studies (n = 16) and studies with a mixed-method approach (n = 8). All study participants were healthcare professionals working in a hospital setting. Instruments for data collection included interviews and surveys. The sample size of included studies varied between 12 and 562 and the age of participants ranged between 18 and 71 years (age reported in 21 studies). The average score for critical appraisal measured by means of the MMAT was 4.45 (Table 3).

In the following paragraphs, our findings are presented with reference to the UTAUT model. Table 1 presents a summary of the results in relation to the four main UTAUT aspects.

Performance expectancy

Heterogeneous findings were reported with respect to healthcare professionals’ confidence that using AI systems will benefit their performance. In the included studies, results reflecting on performance expectancy were reported with regard to alerts and medical errors, and the accuracy of AI technologies.

In three studies addressing the adoption of clinical decision support systems (CDSS), participants indicated that in acute hospital settings, CDSS reduced the rate of medical errors through warnings and recommendations18,19,20. On the other hand, in one study about the barriers to adopting CDSS, participants reported that CDSS induced errors in emergency care settings21. AI in neurosurgery was the topic of a study in which 85% of 100 surgeons, anaesthetists and nurses considered alerts to be useful in the early detection of complications22. Similar results were reported in a study in which 90% of participating pharmacists and physicians (36/40) considered that an automated electronic alert improved the care of patients with acute kidney injury23. These findings were also supported in further studies about healthcare professionals’ perception of CDSSs in which participants described alerts as effective in drawing attention to key aspects18,24. Nevertheless, in one study about barriers to the uptake of CDSSs, respondents found the number of alerts to be excessive25. In addition, in three studies, participating physicians and nurses mentioned fatigue resulting from frequent alerts26,27,28. Moreover, Kanagasundaram et al.29 reported that some physicians dismissed alerts29.

Healthcare professionals’ estimation of the accuracy of technologies based on AI was inconsistent. One study showed that 22.5% of staff from a radiology department (N = 118) expected AI-based diagnostic tools to be superior to radiologists in the near future30. However, only 12.2% (N = 204) claimed that they would “always use AI when making medical decisions in the near future”30. A study by O’Leary, which investigated doctors’, nurses’ and physiotherapists’ appraisal of the diagnostic abilities of AI support systems with respect to rare or unusual diseases, found that 82% of respondents (N = 19) considered the tool to be useful31. Jauk et al.32 concluded that 14.9% of participating doctors and nurses (7/47) did not believe that a machine learning system could detect early-stage delirium32. Similarly, 49.3% of physicians (277/562) in a study assessing the use of AI in ophthalmology indicated that the quality of the system was difficult to guarantee33. In three studies that assessed healthcare professionals’ attitudes towards CDSS, findings implied that participants doubted the accuracy of the CDSS and diagnostic systems as they considered the quality of resulting information to be insufficient for decision making21,28,34. In another study on the same topic, physicians reported that CDSSs are useful but that their functions are limited27. Similarly, technical issues that might affect an AI system and render its results inconsistent were found to negatively affect the performance expectancy of physicians, nurses and operating room personnel and resulted in frustration10,19,22,25,34. In addition, in a study that investigated their attitudes towards the potential use of robots in a paediatric unit, nurses reported that they were sceptical of the abilities of the system35. Similarly, nurses stated in a study about adopting a CDSS that technical issues might affect the system and render its results inconsistent26.

Nevertheless, in qualitative studies on the topics of implementing AI in radiology and integrating a machine learning system into clinical workflow, physicians and nurses perceived AI to be accurate and based on sufficient scientific evidence in terms of diagnostics, objectivity and quality of information8,32,35,36.

Effort expectancy

Heterogeneous findings were also reported with respect to how easy the users believe it is to use a system. In the included studies, results reflecting on effort expectancy were reported in regard to time and workload, transparency and adaptability of the system, the system’s characteristics and training to use the system.

Efficiency with respect to time and workload was a recurrent theme in several included articles10,18,20,26,35,36,37,38. In a study by McBride et al.39 on robots in surgery, physicians were concerned about an increased operative time in robotic-assisted surgeries, whereas nursing and support theatre staff did not share these concerns39. However, in a study about the acceptance of a machine learning predictive system, 89.4% of nurses and doctors (42/47) did not report an increase in workload when using the algorithm in their clinical routine32. In a qualitative study about physicians’ adoption of CDSSs, participants reported CDSSs to be time-consuming37. Moreover, in a study about the attitude of radiologists towards AI, 51.9% of respondents (N = 204) judged that AI-based diagnostic tools save time for radiologists30. Besides time investment, McBride et al.39 reported that 52.6% of nursing staff (40/76) and 59.6% of medical staff (28/47) showed concerns that robotic-assisted surgery would increase financial pressure39.

In a study about the adoption of AI, physicians stated that a lack of transparency and adaptability of a CDSS or a machine learning system aiding diagnostics would negatively affect its adoption39. Moreover, participants of a study about the acceptance of a predictive machine learning system argued that the protocols underlying the systems should be comprehensive and evidence-based32. A tendency to reject the systems was evident when participants reported unfamiliarity. This was stated in a study about the experience with a CDSS implemented in paediatrics29.

The system’s characteristics also seem to affect the expected effort to use a system which in turn influences its acceptance40. Participants of a study about the perception of a CDSS reported that when the system was perceived as intuitive, easily understood, and simple it was highly regarded by participants41. However, when the system was complex and required added tasks, such as reported in a study about integrating machine learning in the workflow, it was deemed undesirable36. In one study addressing the overall perception of AI by healthcare professionals, at least 70% of respondents (67/96) agreed on each item referring to the ease of use of AI-based systems42. However, Jauk et al.32 reported that 38.3% of users (18/47) of a machine learning algorithm reported that they were not able to integrate the system into their clinical routine32. In a study by Tscholl et al.43, 82% of anaesthesiologists (31/36) agreed or strongly agreed with the statement that the technology was “intuitive and easy to learn”43. When participants believed the AI-based system was aligned with their tasks, had consistent reporting of values and required minimal time and effort, they welcomed it43.

Other studies about CDSS systems reported that participants considered the systems to be inadequate, limited and inoperative in clinical practice19,38,44. A standardized CDSS system with clear guidelines seemed appealing to participants who approved of structured systems and commented positively on their ease of use27,34. Conversely, in a study about AI in radiology, participants reported that the system lacked standardization and automation and was therefore deemed unreliable10.

The importance of training for the successful implementation of AI systems was stressed in several studies. In one study referring to a continuous, predictive monitoring system41 and two addressing machine learning systems10,45, participants reported a lack of experience with the systems which resulted in feeling overwhelmed38,43,46. Alumran et al.47 observed that about half (53.49%) of nurses (N = 71) who did not use an AI system also did not participate in prior training47. Half of those who received one training course used the system, whereas 83% of nurses who took two training courses used it. Among nurses who took three training courses, this percentage increased to 100%47.

Social influence

Several studies reported on the extent to which the opinions of others affected participants’ belief that they should use the AI systems. Results reflecting on social influence were reported with regard to effects on decision-making and communication in the workplace.

In two studies about the acceptance and adoption of CDSS, physicians reported that their decision to use the system was independent of the opinion of supervisors and colleagues18,24. However, they reported that patients’ satisfaction with an AI system positively influenced their acceptance18,24.

One facilitating factor for the adoption of CDSS systems was believed to be communication between (potential) users of the systems25. Some studies pointed out the positive effects of CDSS systems and computerized diagnostic systems on the improvement of interdisciplinary practice and communication25,36. Nevertheless, in one study, physicians suggested that CDSS systems may reduce time spent with patients37. With regard to the use of robotics in paediatrics, nurses emphasized that working with robots would have a negative effect on patients due to a reduction in human touch and connection35.

Facilitating conditions

Healthcare professionals’ views on organizational support to use the system were discussed in several included studies. The main discussion themes on this topic were legal liability, the organizational culture of accepting or rejecting AI systems and organizational infrastructures.

Concerns about legal liability and accountability were raised in several studies. Medical practitioners in a study about a diagnostic CDSS did not have a clear understanding of who would be accountable in case of a system error, which resulted in confusion and fear of the system48. Only 5.3% of respondents (N = 204) in a study about the attitude of radiologists towards AI stated that they would assume legal responsibility for imaging results provided by AI30. In two of the reviewed publications, participants addressed the topic of data protection. They mentioned the importance of maintaining data privacy as a positive aspect in the acceptance of AI systems, especially in CDSSs24,25.

In a study about the implementation of AI in radiology, the effect of organizational culture on the acceptance of the system versus the resistance to change was discussed. Several participants mentioned structuring the adoption of the system by selecting champions and expert groups10,32. However, in another study reporting on a wound-related CDSS, some nurses preferred to base their behaviour on their own decision-making process and feared that their organization was forcing them to do otherwise34.

The importance of an adequate infrastructure to implement AI systems, as well as of space and monetary resources, was stressed18,49. AI systems often require high-speed internet with a stable connection, which rendered them inoperable when good internet conditions were unavailable; some participants described this as problematic41,42. Additionally, in a study by Catho et al.37 on the adoption of CDSSs, several participating physicians highlighted the importance of providing technical support to users in order to increase acceptance of the system37.

Gender

Only three studies investigated whether there was an effect of gender on acceptance. None of them found significant effects50,51,52.

Age

With respect to age, three studies investigated whether there was an effect of age on the use of AI. Two studies did not observe an effect50,52. Walter et al.53 found that 55.8% of younger participants claimed that they would use automated pain recognition. In the older age group, only 40.4% of respondents reported that they would use the system (N = 102)53.

Experience

Stifter et al.51 reported that participants with less than one year of experience reported higher levels of perceived ease of use, perceived usefulness and acceptability of a CDSS than those with more than one year of experience, although the difference in acceptability was not statistically significant51. In contrast, So et al.42 reported a statistically significant positive correlation between working experience and use of AI42. Similarly, Alumran et al.47 observed that an increase in working experience correlated with the use of an electronic triage system47.

Voluntariness of use

Participants of the included studies talked about the fear of AI replacing healthcare professionals as well as a loss of autonomy related to the use of AI. These two aspects could have an effect on the voluntariness to use AI systems.

Participants raised the concern that AI may replace healthcare professionals in their duties at some point. Among respondents, 54.9% reported that physician candidates should opt for “specialty areas where AI cannot dominate”39. Similarly, 6.3% of respondents expected AI to completely replace radiologists in the future39. In a study by Zheng et al.33, 24% of respondents (135/562) denied the claim that AI would completely replace physicians in ophthalmology54. Nevertheless, 77% of physicians and 57.9% of other professional technicians believed that AI would at least partially replace physicians in ophthalmology54. These findings were also replicated in two qualitative studies that explored the acceptance and adoption of CDSS in which physicians vocalized their fear of being replaced by the systems, and of their work becoming outdated18,47.

In a study about confidence in AI, physicians revealed a fear of loss of autonomy in stressful situations2,47. Nurses who participated in a study about the potential use of robots in paediatric units expressed the concern that robots may limit the development of clinical skills29.

In a study assessing the acceptance of a CDSS in neurosurgery, senior physicians and nurses suggested that junior colleagues should refer to them for guidance and final decisions and not to an AI-based system28. They feared that blindly following the recommendations of AI-based systems may negatively impact decision-making processes28. Similarly, in a study about CDSS in electronic prescribing, junior nurses claimed that they preferred to seek advice from senior nurses instead of an AI-based system, especially in situations in which the system was deemed complex35. In addition, in two studies about the acceptance of two different CDSS systems, junior physicians were more open to the use of AI systems than their seniors25,48.

Discussion

The present review included 42 studies and sought to integrate findings about the influencing factors on the acceptance of AI by healthcare professionals in the hospital setting. All findings and evidence were structured with reference to the UTAUT model40. Based on the included studies (N = 42), acceptance was primarily studied for CDSSs (N = 21).

An important factor that could affect the acceptance of AI in healthcare is safety. Different AI systems could lead to different risks of error occurrence, which affect the acceptance of the system among healthcare professionals. Although AI-based prediction systems have been shown to result in lower error rates than traditional systems55,56, it may be argued that systems taking over simple tasks are deemed more reliable and trustworthy and are therefore more widely accepted than AI-based systems operating on complex tasks such as surgical robots. Furthermore, Choudhury et al.3, who studied the acceptability of an AI-based blood utilization calculator, argued that AI-based systems are often based on data from a norm-typical patient population; however, if the system is applied to unanticipated patient populations (e.g. patients with sickle cell disease), the AI-based recommendation may become inadequate. Such a sample selection bias may not only endanger patient safety but is also likely to increase levels of scepticism about performance expectancy, resulting in decreased acceptance among healthcare professionals3,57. Moreover, the safety of a system might be affected by technical complications that may influence the quality of the system’s output and therefore limit healthcare professionals’ trust in the system58,59. Besides technical complications, insufficient data and information may compromise the accuracy and validity of AI output60. Consequently, ensuring high-quality input data as well as ensuring that the system is applied to the anticipated patient population is of utmost importance to the acceptance of AI-based systems60.

Another aspect of safety that was reported to affect effort expectancy, and therefore acceptance, is the degree of alert sensitivity of an AI system61. The phenomenon of alarm fatigue, which refers to “characteristics that increase a clinician’s response time and/or decrease the response rate to a clinical alarm as a result of too many alarms”62, can result from the AI system and could affect the safety of patient care. Overly sensitive alarms may induce desensitization and alert dismissal29. Although the function of alarms is to hint at potential medical complications, overly sensitive alarms may therefore paradoxically put patient safety at risk in critical situations62. Alarm sensitivity is thus a factor that might have an effect on healthcare professionals’ acceptance of an AI system and should be taken into consideration when designing AI-based systems in order to enhance acceptance and usage of the systems63.

Furthermore, differences between occupational groups could influence the acceptance of an AI system in a healthcare setting. In this review, we observed a tendency of respondents to perceive AI-based systems more negatively if their own professional group was using the AI system rather than another professional group39. We could not find more information to back up this observation in the literature. It would therefore be interesting to follow up on whether the use of the AI system by one’s own professional group does indeed affect one’s perception of the system.

Human factors such as personality and experience were found to affect the perception of an AI system. Depending on the healthcare professional, their needs and the work environment, the acceptance of an AI system might differ3. The same AI system might be perceived as helpful by one person and would therefore be accepted, while another professional might find that the system could hold up their work and would therefore deem it unacceptable3. Moreover, as found in our review and supported by the literature, more experienced healthcare professionals tend to trust their knowledge and experience more than an AI system. Consequently, they might override the system’s recommendations and make their own decisions based on their personal judgement3. This might be related to their fear of losing autonomy in a situation where the AI system is recommending something that is not in line with their critical thinking process.

In addition, time and staff resources are factors that could potentially affect the acceptance of AI systems in healthcare. These factors were perceived differently by different disciplines. With regard to robotic-assisted surgery, medical staff anticipated an increase in operating time39. In contrast, in another study, 89.4% of users did not report an increase in workload when using a machine-learning algorithm in their clinical routines32. Moreover, physicians are often under time constraints during their visits to patients and are overloaded with documentation work. Therefore, they might accept an AI system such as a CDSS if they see that it reduces their workload and assists them3. In order to facilitate the acceptance and thus implementation of AI systems in clinical settings, it is of utmost importance to integrate these systems into clinical routines and workflows, thereby reducing the workload.

Interestingly, AI-based systems for the support of the diagnostic process seem to be more established in radiology than among other medical disciplines30. This indicates differences in the levels of AI acceptance among healthcare professionals between medical specialties. In implementation studies with reference to AI in radiology, transformative changes with regard to improvements in diagnostic accuracy and value of image analysis were reported64,65. This raises the question of whether healthcare professionals in the area of radiology are more technically inclined and specialize on the basis of this enhanced interest or whether innovations of AI in radiology are more easily and better integrated into existing routines and are therefore more widely established and accepted as reported by Recht and Bryan (2017)64 and Mayo and Leung (2018)65. Furthermore, insufficient knowledge of the limits and potentials of AI technologies’ use may impact healthcare professionals’ acceptance negatively8,11. However, as cited many times in the literature, a prior introduction to the technology as well as proper training and education on the correct usage of AI might encourage users to accept this technology within their field3,7,8,13,66,67. Moreover, transparency in AI data processing is of utmost importance when AI is introduced to healthcare. If users are able to acknowledge the benefit of the technology and comprehend what AI-based recommendations are based upon, their acceptance of it increases13,15,68,69. On the other hand, when users perceive the use of the AI technology as a threat, their level of acceptance decreases68. Based on a study reporting the effects of training on acceptance of an AI-based system, it can be stated that the number of training courses correlated positively with the percentage of participating nurses using the system47.

In medical education, the necessity to provide training in AI beyond clinical and biomedical skills is emphasized70,71. Nonetheless, training requires time and several studies have reported that healthcare professionals lack the time outside their official duty hours to learn how to use new AI-based technologies7,8,15,68. Thus, it is an organizational duty not only to offer training for potential users of the AI systems but also to provide staff with the time resources to take part in this training to foster AI acceptance. Furthermore, it should be discussed whether training in AI should be integrated early into the educational curriculum72,73. Kolachalama and Garg (2018) emphasize the need to integrate expertise from data science and to focus on topics of literacy and practical guidelines in such training71. Nevertheless, intrinsic motivation to participate in training may also contribute to the seemingly positive effects of the training on the use behaviour observed in the study by Alumran et al. (2020)47.

It is important to note that we were not able to replicate the findings of the effect of gender on technology acceptance as proposed by the UTAUT model. In contrast to the UTAUT model, we argue that in this case, there is probably no effect of gender on AI acceptance. However, with regard to age, contradictory results were reported both in our review and in the literature. For example, two studies from the literature showed that age impacts trust in AI and that the younger generation leans more toward trusting AI systems than their older counterparts74,75. On the contrary, a study by Choudhury and Asan (2022)76 revealed that age did not play a significant role in trusting or intending to use AI.

Nevertheless, training and providing adequate infrastructure with respect to technical support and internet access were unanimously found to be facilitating factors for the acceptance and implementation of AI-based systems in the hospital context and should therefore be considered by the management levels of hospitals1,13. Furthermore, especially with reference to alert systems, aspects such as the alert sensitivity of an AI system and the potential consequences of elevated sensitivity levels, such as alert fatigue and alert dismissal, should be kept in mind when determining the safety of a system61,63. In order to design a user-friendly AI-based system and enhance its acceptance, it is of utmost importance to involve healthcare professionals early on in the design stages of the system77. We recommend the implementation of user-centred design78 during the development of an AI system in healthcare, which would allow the involvement of healthcare professionals in the different stages of the development and evaluation of a system. By incorporating the abilities, characteristics and boundaries of healthcare professionals, the development would result in a secure, uncomplicated and effective AI system. The resulting system would receive high acceptance rates because healthcare professionals participated in its creation, and its integration into clinical routines and workflows would be uncomplicated. Moreover, we also propose longer-term, intensive research to understand how AI as a complex intervention affects work processes and how people react to it and behave with it. A better understanding of AI-assisted work and decision-making processes could thus be continuously incorporated, and the further development of AI systems would profit from it.
Finally, in order to facilitate usability and intuitive handling of AI in clinical routine, we recommend implementing training on the theoretical basics, ethical considerations and limitations of AI, as well as on practical skills of usage, as early as undergraduate education.

Reasons for the limited acceptance among healthcare professionals are manifold: personal fears related to a loss of professional autonomy, lack of integration into clinical workflows and routines, overly sensitive settings for alarm systems, and loss of patient contact are reported. In addition, technical reservations such as unintuitive user interfaces and technical limitations such as the unavailability of strong internet connections impede comprehensive usage and acceptance of AI. Hesitation to accept AI in the healthcare setting has to be acknowledged by those in charge of implementing AI technologies in hospital settings. Once the causes of hesitation are known and personal fears and concerns are recognized, appropriate interventions such as training, reliability of AI systems and their ease of use may aid in overcoming the indecisiveness to accept AI in order to allow users to be keen, satisfied and enthusiastic about the technologies.

Methods

An integrative review of the acceptance of AI among healthcare professionals in the hospital setting was performed. The review protocol was registered in the PROSPERO Database (CRD42021251518). Integrative reviews allow us to reflect on and assess the strength of scientific evidence, identify particular clinical issues, recognize gaps in the current literature, and evaluate the need for further research. An integrative review is based on prior extensive research on a specified topic by means of a literature search79. This type of review is complex in nature, which makes it prone to the risk of bias. To reduce bias, specific methods are required. Therefore, this review is based on the methodological framework proposed by Whittemore and Knafl80. Initially, the topic of interest and the significance of the review are identified. Then, the literature is explored systematically according to a set of identified eligibility criteria. After that, relevant inputs from the included studies are extracted and their quality is appraised. Finally, the outcomes of the studies included in this review are presented, and their relevance and recommendations for future research are set out.

The results of the reviewed articles are presented based on the unified theory of acceptance and use of technology (UTAUT). This theory explains a user’s intention to use information technology systems. It is based on various information technology acceptance models, one of them being the technology acceptance model (TAM)40. The UTAUT consists of four main aspects (performance expectancy, effort expectancy, social influences, and facilitating conditions) alongside four moderating factors (gender, age, experience and voluntariness of use), which affect the four main aspects40 (Table 2).

Data collection

Data were sought from records in various databases and grey literature sources. We systematically searched the databases MEDLINE via PubMed, Cochrane Library via Wiley Interscience, Embase and ScienceDirect via Elsevier, Institute of Electrical and Electronics Engineers (IEEE) Xplore via IEEE, Web of Science via Clarivate Analytics, as well as the Cumulative Index to Nursing and Allied Health Literature (CINAHL) via EBSCO for qualitative, quantitative and mixed methods studies. Furthermore, grey literature was searched by means of the dissertation databases Bielefeld Academic Search Engine via BASE, ProQuest, Technische Informationsbibliothek (TIB) as well as the DART Europe E-Theses Portal.

Studies that align with the aim of this study and its research questions were searched for. Keywords were joined using Boolean operators, medical subject headings, and truncation. In close collaboration with a librarian from the local medical university library, the following search string was generated: (Artificial Intelligence OR Machine Learning OR Deep Learning OR Neural Network OR Technol* System OR Smart System OR Intelligent System OR Assistive System OR Decision Support System OR Human–Computer Interaction OR Human Machine Interaction OR Cognitive System OR Decision Engineering OR Natural Language Understanding) AND (Approval OR Intention to Use OR Acceptance OR Adoption OR Acceptability) AND (Nurse OR Doctors OR Physician OR MD OR Clinician OR Healthcare professional OR Healthcare OR Healthcare Worker) AND (Hospital OR Acute Care OR Inpatient care OR Standard Care OR Intensive Care OR Intermediate Care OR Ward). In a subsequent phase, Google Scholar forward citation tracking was applied to articles included in the database search.
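The structure of the search string can be illustrated with a minimal Python sketch: terms within a concept group are joined with OR, and the groups are joined with AND. The terms are copied from the string reported above; the function name `build_query` and the group labels in the comments are hypothetical and introduced only for illustration. Database-specific syntax (field tags, phrase quoting, subject headings) is not modelled here and would need to be adapted per database.

```python
# Term groups copied from the search string reported in the text.
# The group labels in the comments are illustrative, not from the source.
TERM_GROUPS = [
    [  # technology terms
        "Artificial Intelligence", "Machine Learning", "Deep Learning",
        "Neural Network", "Technol* System", "Smart System",
        "Intelligent System", "Assistive System", "Decision Support System",
        "Human–Computer Interaction", "Human Machine Interaction",
        "Cognitive System", "Decision Engineering",
        "Natural Language Understanding",
    ],
    [  # acceptance terms
        "Approval", "Intention to Use", "Acceptance", "Adoption",
        "Acceptability",
    ],
    [  # population terms
        "Nurse", "Doctors", "Physician", "MD", "Clinician",
        "Healthcare professional", "Healthcare", "Healthcare Worker",
    ],
    [  # setting terms
        "Hospital", "Acute Care", "Inpatient care", "Standard Care",
        "Intensive Care", "Intermediate Care", "Ward",
    ],
]


def build_query(groups):
    """Join terms within a group with OR, then join the groups with AND."""
    blocks = ["(" + " OR ".join(terms) + ")" for terms in groups]
    return " AND ".join(blocks)


query = build_query(TERM_GROUPS)
print(query)
```

Keeping the groups as separate lists makes it straightforward to add or remove a synonym in one concept without touching the others.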

Inclusion criteria

Quantitative, qualitative and mixed methods original studies published from 2010 up to and including June 2022 were assessed and explored if their participants were healthcare professionals whose clinical fields of work are directly affected by AI (e.g., physicians, nurses, pharmacists, imaging technicians, physiotherapists). Studies written in English or German and investigating factors of AI acceptance were considered for review. Studies taking place in hospital settings and studies describing the development of AI systems with the involvement of healthcare professionals were also included.

Exclusion criteria

Studies in which participants were care recipients or family members, as well as studies taking place in ambulatory settings, hospices, nursing homes or rehabilitation centres, were excluded.
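As an illustration only, the eligibility criteria can be read as a single filter applied to each record. The following Python sketch is one possible reading, not the procedure used in this review: the class `StudyRecord`, its field names and the function `is_eligible` are hypothetical, the start date of 1 January 2010 is an assumption (the text states only the year), and the hospital setting is treated as mandatory. The criterion on studies describing AI development with healthcare professionals is not modelled.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class StudyRecord:
    """Hypothetical record; field names are illustrative assumptions."""
    published: date
    language: str
    setting: str            # e.g. "hospital", "nursing home"
    participants: str       # e.g. "healthcare professionals"
    original_research: bool
    addresses_ai_acceptance: bool


def is_eligible(study):
    """Apply the stated criteria; excluded settings and participant
    groups simply fail the 'hospital' and 'healthcare professionals' checks."""
    return (
        study.original_research
        # Assumption: the window starts on 1 January 2010.
        and date(2010, 1, 1) <= study.published <= date(2022, 6, 30)
        and study.language in {"English", "German"}
        and study.setting == "hospital"
        and study.participants == "healthcare professionals"
        and study.addresses_ai_acceptance
    )


example = StudyRecord(
    published=date(2020, 5, 1),
    language="English",
    setting="hospital",
    participants="healthcare professionals",
    original_research=True,
    addresses_ai_acceptance=True,
)
print(is_eligible(example))
```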

Screening and extraction process

All studies that resulted from the search were exported to the RAYYAN software, which was used for the screening process48. Duplicates were deleted. The remaining research articles were screened separately by two independent reviewers based on title and abstract (M.M. and S.L.). Conflicts between the reviewers were resolved through discussion. The eligibility of relevant studies was appraised based on independent full-text reading by the same two authors. If assessed differently, conflicts were discussed. An extraction table was created by the two reviewers to gather and extract data from the included studies (Table 3).
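The dual independent screening step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the record identifiers, the decision labels and the function `find_conflicts` are hypothetical, and the sketch neither uses nor imitates the Rayyan interface. It only shows how records with diverging decisions are singled out for discussion.

```python
def find_conflicts(decisions_a, decisions_b):
    """Return the IDs of records on which the two reviewers disagree."""
    shared = decisions_a.keys() & decisions_b.keys()
    return sorted(rid for rid in shared if decisions_a[rid] != decisions_b[rid])


# Hypothetical screening decisions of two independent reviewers.
reviewer_1 = {"rec-001": "include", "rec-002": "exclude", "rec-003": "include"}
reviewer_2 = {"rec-001": "include", "rec-002": "include", "rec-003": "include"}

conflicts = find_conflicts(reviewer_1, reviewer_2)
print(conflicts)  # records to be resolved through discussion
```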

Quality appraisal

The quality of all included articles was critically assessed by means of the Mixed Methods Appraisal Tool (MMAT) by two authors (M.M. and S.L.)81. The MMAT assesses the study quality on the basis of five quality criteria. These criteria include the appropriateness of the research question, of the data collection methods and of the measurement instruments. Ultimately, each study attains a score from zero to five. The higher the score attained, the greater the quality of the appraised study81.
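The scoring described above amounts to counting how many of the five criteria a study meets. A minimal Python sketch follows; the function name `mmat_score` and the representation of each criterion as a boolean are assumptions made for illustration and are not part of the MMAT itself.

```python
def mmat_score(criteria_met):
    """Count how many of the five quality criteria are met (0 to 5).

    `criteria_met` is a sequence of five booleans, one per criterion;
    this boolean encoding is an illustrative assumption.
    """
    if len(criteria_met) != 5:
        raise ValueError("expected exactly five criteria")
    return sum(bool(met) for met in criteria_met)


# Hypothetical appraisal: four of the five criteria are met.
score = mmat_score([True, True, False, True, True])
print(score)
```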

Quality appraisal of studies included in integrative reviews improves rigour and diminishes the risk for bias80.

Future directions

Most studies assessed the age of participants. Unfortunately, just four studies assessed the correlation between participant age and levels of acceptance, of which only two observed an effect of age on AI acceptance. In view of the UTAUT model, which assumes an effect of age on technology acceptance, it would be of interest to see whether the UTAUT still represents current findings in technology acceptance. Since its publication, the development and use of technology in the wider population have increased substantially. It cannot be ruled out that the availability and integration of technology in the broader population may alter the influence of factors such as age defined in the UTAUT. As a consequence, it would be of interest to re-evaluate the UTAUT model.

Limitations

We found mixed findings with respect to different AI systems. Most studies addressed CDSSs. It can be argued that, because different types of AI-based systems were included in the study, confounding variables arising from differences in the handling and function of the systems may have distorted the reported results. It would be of interest to investigate which hindering and facilitating factors for the acceptance of AI apply to which kinds of AI-based systems.

In this integrative review, various perspectives of healthcare professionals in hospital settings regarding the acceptance of AI were revealed. Many facilitating factors for the acceptance of AI as well as limiting factors were discussed. Factors related to acceptance or limited acceptance were discussed in association with the characteristics of the UTAUT model. After reviewing 42 studies and discussing them in relation to studies from the literature, we conclude that hesitation to accept AI in the healthcare setting has to be acknowledged by those in charge of implementing AI technologies in hospital settings. Once the causes of hesitation are known and personal fears and concerns are recognized, appropriate interventions such as training, reliability of AI systems and their ease of use may aid in overcoming the indecisiveness to accept AI in order to allow users to be keen, satisfied and enthusiastic about the technologies.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The data that support the findings of this study are available from the corresponding authors upon reasonable request.

Change history

11 July 2023

A Correction to this paper has been published: https://doi.org/10.1038/s41746-023-00874-z

References

Maskara, R., Bhootra, V., Thakkar, D. & Nishkalank, N. A study on the perception of medical professionals towards artificial intelligence. Int. J. Multidiscip. Res. Dev. 4, 34–39 (2017).

Oh, S. et al. Physician confidence in artificial intelligence: an online mobile survey. J. Med. Internet Res. 21, e12422 (2019).

Choudhury, A., Asan, O. & Medow, J. E. Clinicians’ perceptions of an artificial intelligence–based blood utilization calculator: qualitative exploratory study. JMIR Hum. Factors 9, 1–9 (2022).

Pallay, C. Vom Turing-Test zum General Problem Solver. Die Pionierjahre der künstlichen Intelligenz. in Philosophisches Handbuch Künstliche Intelligenz (ed. Mainzer, K.) 1–20 (Springer Fachmedien Wiesbaden, 2020). https://doi.org/10.1007/978-3-658-23715-8_3-1.

Liyanage, H. et al. Artificial intelligence in primary health care: perceptions, issues, and challenges. Yearb. Med. Inform. 28, 41–46 (2019).

Dimiduk, D. M., Holm, E. A. & Niezgoda, S. R. Perspectives on the impact of machine learning, deep learning, and artificial intelligence on materials, processes, and structures engineering. Integr. Mater. Manuf. Innov. 7, 157–172 (2018).

Aapro, M. et al. Digital health for optimal supportive care in oncology: benefits, limits, and future perspectives. Support. Care Cancer 28, 4589–4612 (2020).

Lugtenberg, M., Weenink, J. W., Van Der Weijden, T., Westert, G. P. & Kool, R. B. Implementation of multiple-domain covering computerized decision support systems in primary care: a focus group study on perceived barriers. BMC Med. Inform. Decis. Mak. 15, 1–11 (2015).

Radionova, N. et al. The views of physicians and nurses on the potentials of an electronic assessment system for recognizing the needs of patients in palliative care. BMC Palliat. Care 19, 1–9 (2020).

Strohm, L., Hehakaya, C., Ranschaert, E. R., Boon, W. P. C. & Moors, E. H. M. Implementation of artificial intelligence (AI) applications in radiology: hindering and facilitating factors. Eur. Radiol. 30, 5525–5532 (2020).

Waymel, Q., Badr, S., Demondion, X., Cotten, A. & Jacques, T. Impact of the rise of artificial intelligence in radiology: what do radiologists think? Diagn. Interv. Imaging 100, 327–336 (2019).

Choudhury, A., Saremi, M. L. & Urena, E. Perception, trust, and accountability affecting acceptance of artificial intelligence: from research to clinician viewpoint. In Diverse Perspectives and State-of-the-Art Approaches to the Utilization of Data-Driven Clinical Decision Support Systems 105–124 (IGI Global, 2023).

Abdullah, R. & Fakieh, B. Health care employees’ perceptions of the use of artificial intelligence applications: survey study. J. Med. Internet Res. 22, 1–8 (2020).

Jiang, L. et al. Opportunities and challenges of artificial intelligence in the medical field: current application, emerging problems, and problem-solving strategies. J. Int. Med. Res. 49, 1–11 (2021).

Fan, W., Liu, J., Zhu, S. & Pardalos, P. M. Investigating the impacting factors for the healthcare professionals to adopt artificial intelligence-based medical diagnosis support system (AIMDSS). Ann. Oper. Res. 294, 567–592 (2020).

Chismar, W. G. & Wiley-Patton, S. Does the extended technology acceptance model apply to physicians. In Proc. 36th Annual Hawaii International Conference on System Sciences, HICSS 2003 (ed. Sprague, R. H. Jr) (IEEE Computer Society, 2003).

Schmidt, P., Biessmann, F. & Teubner, T. Transparency and trust in artificial intelligence systems. J. Decis. Syst. 29, 260–278 (2020).

Aljarboa, S., Shah, M. & Kerr, D. Perceptions of the adoption of clinical decision support systems in the Saudi healthcare sector. In Proc. 24th Asia-Pacific Decision Science Institute International Conference (eds Blake, J., Miah, S. J., Houghton, L. & Kerr, D.) 40–53 (Asia Pacific Decision Sciences Institute, 2019).

Prakash, A. V. & Das, S. Medical practitioner’s adoption of intelligent clinical diagnostic decision support systems: a mixed-methods study. Inf. Manag. 58, 103524 (2021).

Nydert, P., Vég, A., Bastholm-Rahmner, P. & Lindemalm, S. Pediatricians’ understanding and experiences of an electronic clinical-decision-support-system. Online J. Public Health Inform. 9, e200 (2017).

Petitgand, C., Motulsky, A., Denis, J. L. & Régis, C. Investigating the barriers to physician adoption of an artificial intelligence-based decision support system in emergency care: an interpretative qualitative study. Stud. Health Technol. Inform. 270, 1001–1005 (2018).

Horsfall, H. L. et al. Attitudes of the surgical team toward artificial intelligence in neurosurgery: international 2-stage cross-sectional survey. World Neurosurg. 146, e724–e730 (2021).

Oh, J., Bia, J. R., Ubaid-Ullah, M., Testani, J. M. & Wilson, F. P. Provider acceptance of an automated electronic alert for acute kidney injury. Clin. Kidney J. 9, 567–571 (2016).

Aljarboa, S. & Miah, S. J. Acceptance of clinical decision support systems in Saudi healthcare organisations. Inf. Dev. https://doi.org/10.1177/02666669211025076 (2021).

Liberati, E. G. et al. What hinders the uptake of computerized decision support systems in hospitals? A qualitative study and framework for implementation. Implement. Sci. 12, 1–13 (2017).

Blanco, N. et al. Health care worker perceptions toward computerized clinical decision support tools for Clostridium difficile infection reduction: a qualitative study at 2 hospitals. Am. J. Infect. Control 46, 1160–1166 (2018).

Grau, L. E., Weiss, J., O’Leary, T. K., Camenga, D. & Bernstein, S. L. Electronic decision support for treatment of hospitalized smokers: a qualitative analysis of physicians’ knowledge, attitudes, and practices. Drug Alcohol Depend. 194, 296–301 (2019).

English, D., Ankem, K. & English, K. Acceptance of clinical decision support surveillance technology in the clinical pharmacy. Inform. Health Soc. Care 42, 135–152 (2017).

Kanagasundaram, N. S. et al. Computerized clinical decision support for the early recognition and management of acute kidney injury: a qualitative evaluation of end-user experience. Clin. Kidney J. 9, 57–62 (2016).

Yurdaisik, I. & Aksoy, S. H. Evaluation of knowledge and attitudes of radiology department workers about artificial intelligence. Ann. Clin. Anal. Med. 12, 186–190 (2021).

O’Leary, P., Carroll, N. & Richardson, I. The practitioner’s perspective on clinical pathway support systems. In IEEE International Conference on Healthcare Informatics 194–201 (IEEE, 2014).

Jauk, S. et al. Technology acceptance of a machine learning algorithm predicting delirium in a clinical setting: a mixed-methods study. J. Med. Syst. 45, 48 (2021).

Zheng, B. et al. Attitudes of medical workers in China toward artificial intelligence in ophthalmology: a comparative survey. BMC Health Serv. Res. 21, 1067 (2021).

Khong, P. C. B., Hoi, S. Y., Holroyd, E. & Wang, W. Nurses’ clinical decision making on adopting a wound clinical decision support system. Comput. Inform. Nurs. 33, 295–305 (2015).

Liang, H.-F., Wu, K.-M., Weng, C.-H. & Hsieh, H.-W. Nurses’ views on the potential use of robots in the pediatric unit. J. Pediatr. Nurs. 47, e58–e64 (2019).

Panicker, R. O. & Sabu, M. K. Factors influencing the adoption of computerized medical diagnosing system for tuberculosis. Int. J. Inf. Technol. 12, 503–512 (2020).

Catho, G. et al. Factors determining the adherence to antimicrobial guidelines and the adoption of computerised decision support systems by physicians: a qualitative study in three European hospitals. Int. J. Med. Inform. 141, 104233 (2020).

Omar, A., Ellenius, J. & Lindemalm, S. Evaluation of electronic prescribing decision support system at a tertiary care pediatric hospital: the user acceptance perspective. Stud. Health Technol. Inform. 234, 256–261 (2017).

McBride, K. E., Steffens, D., Duncan, K., Bannon, P. G. & Solomon, M. J. Knowledge and attitudes of theatre staff prior to the implementation of robotic-assisted surgery in the public sector. PLoS ONE 14, e0213840 (2019).

Venkatesh, V., Morris, M. G., Davis, G. B. & Davis, F. D. User acceptance of information technology: toward a unified view. MIS Q. 27, 425–478 (2003).

Kitzmiller, R. R. et al. Diffusing an innovation: clinician perceptions of continuous predictive analytics monitoring in intensive care. Appl. Clin. Inform. 10, 295–306 (2019).

So, S., Ismail, M. R. & Jaafar, S. Exploring acceptance of artificial intelligence amongst healthcare personnel: a case in a private medical centre. Int. J. Adv. Eng. Manag. 3, 56–65 (2021).

Tscholl, D. W., Weiss, M., Handschin, L., Spahn, D. R. & Nöthiger, C. B. User perceptions of avatar-based patient monitoring: a mixed qualitative and quantitative study. BMC Anesthesiol. 18, 188 (2018).

Chow, A., Lye, D. C. B. & Arah, O. A. Psychosocial determinants of physicians’ acceptance of recommendations by antibiotic computerised decision support systems: a mixed methods study. Int. J. Antimicrob. Agents 45, 295–304 (2015).

Sandhu, S. et al. Integrating a machine learning system into clinical workflows: qualitative study. J. Med. Internet Res. 22, e22421 (2020).

Elahi, C. et al. An attitude survey and assessment of the feasibility, acceptability, and usability of a traumatic brain injury decision support tool in Uganda. World Neurosurg. 139, 495–504 (2020).

Alumran, A. et al. Utilization of an electronic triage system by emergency department nurses. J. Multidiscip. Healthc. 13, 339–344 (2020).

Ouzzani, M., Hammady, H., Fedorowicz, Z. & Elmagarmid, A. Rayyan-a web and mobile app for systematic reviews. Syst. Rev. 5, 210 (2016).

Pumplun, L., Fecho, M., Wahl, N., Peters, F. & Buxmann, P. Adoption of machine learning systems for medical diagnostics in clinics: qualitative interview study. J. Med. Internet Res. 23, e29301 (2021).

Schulte, A. et al. Automatic speech recognition in the operating room–An essential contemporary tool or a redundant gadget? A survey evaluation among physicians in form of a qualitative study. Ann. Med. Surg. 59, 81–85 (2020).

Stifter, J. et al. Acceptability of clinical decision support interface prototypes for a nursing electronic health record to facilitate supportive care outcomes. Int. J. Nurs. Knowl. 29, 242–252 (2018).

Norton, W. E. et al. Acceptability of the decision support for safer surgery tool. Am. J. Surg. 209, 977–984 (2015).

Walter, S. et al. “What about automated pain recognition for routine clinical use?” A survey of physicians and nursing staff on expectations, requirements, and acceptance. Front. Med. 7, 566278 (2020).

Jones, E. K., Banks, A., Melton, G. B., Porta, C. M. & Tignanelli, C. J. Barriers to and facilitators for acceptance of comprehensive clinical decision support system–driven care maps for patients with thoracic trauma: interview study among health care providers and nurses. JMIR Hum. Factors 9, e29019 (2022).

Weng, S. F., Reps, J., Kai, J., Garibaldi, J. M. & Qureshi, N. Can machine-learning improve cardiovascular risk prediction using routine clinical data? PLoS ONE 12, 1–14 (2017).

Liu, T., Fan, W. & Wu, C. A hybrid machine learning approach to cerebral stroke prediction based on imbalanced medical dataset. Artif. Intell. Med. 101, 101723 (2019).

Challen, R. et al. Artificial intelligence, bias and clinical safety. BMJ Qual. Saf. 28, 231–237 (2019).

Bedaf, S., Marti, P., Amirabdollahian, F. & de Witte, L. A multi-perspective evaluation of a service robot for seniors: the voice of different stakeholders. Disabil. Rehabil. Assist. Technol. 13, 592–599 (2018).

Hebesberger, D., Koertner, T., Gisinger, C. & Pripfl, J. A long-term autonomous robot at a care hospital: a mixed methods study on social acceptance and experiences of staff and older adults. Int. J. Soc. Robot. 9, 417–429 (2017).

Varshney, K. R. Engineering safety in machine learning. In 2016 Information Theory Applications Work (ITA) 2016 (Institute of Electrical and Electronics Engineers (IEEE), 2017).

Ko, Y. et al. Practitioners’ views on computerized drug-drug interaction alerts in the VA system. J. Am. Med. Inform. Assoc. 14, 56–64 (2007).

Ruskin, K. J. & Hueske-Kraus, D. Alarm fatigue: Impacts on patient safety. Curr. Opin. Anaesthesiol. 28, 685–690 (2015).

Poncette, A.-S. et al. Improvements in patient monitoring in the intensive care unit: survey study. J. Med. Internet Res. 22, e19091 (2020).

Recht, M. & Bryan, R. N. Artificial intelligence: threat or boon to radiologists? J. Am. Coll. Radiol. 14, 1476–1480 (2017).

Mayo, R. C. & Leung, J. Artificial intelligence and deep learning—radiology’s next frontier? Clin. Imaging 49, 87–88 (2018).

Sarwar, S. et al. Physician perspectives on integration of artificial intelligence into diagnostic pathology. npj Digit. Med. 2, 1–7 (2019).

Rogove, H. J., McArthur, D., Demaerschalk, B. M. & Vespa, P. M. Barriers to telemedicine: survey of current users in acute care units. Telemed. e-Health 18, 48–53 (2012).

Safi, S., Thiessen, T. & Schmailzl, K. J. G. Acceptance and resistance of new digital technologies in medicine: qualitative study. J. Med. Internet Res. 7, e11072 (2018).

Bitterman, D. S., Aerts, H. J. W. L. & Mak, R. H. Approaching autonomy in medical artificial intelligence. Lancet Digit. Health 2, e447–e449 (2020).

Wartman, S. A. & Combs, C. D. Medical education must move from the information age to the age of artificial intelligence. Acad. Med. 93, 1107–1109 (2018).

Kolachalama, V. B. & Garg, P. S. Machine learning and medical education. npj Digit. Med. 1, 2–4 (2018).

Paranjape, K., Schinkel, M., Panday, R. N., Car, J. & Nanayakkara, P. Introducing artificial intelligence training in medical education. JMIR Med. Educ. 5, e16048 (2019).

Grunhut, J., Marques, O. & Wyatt, A. T. M. Needs, challenges, and applications of artificial intelligence in medical education curriculum. JMIR Med. Educ. 8, 1–5 (2022).

Hoff, K. A. & Bashir, M. Trust in automation: integrating empirical evidence on factors that influence trust. Hum. Factors 57, 407–434 (2014).

Oksanen, A., Savela, N., Latikka, R. & Koivula, A. Trust toward robots and artificial intelligence: an experimental approach to human–technology interactions online. Front. Psychol. 11, 568256 (2020).

Choudhury, A. & Asan, O. Impact of cognitive workload and situation awareness on clinicians’ willingness to use an artificial intelligence system in clinical practice. IISE Trans. Healthc. Syst. Eng. 1–12 (2022) https://doi.org/10.1080/24725579.2022.2127035.

Kolltveit, B. C. H. et al. Telemedicine in diabetes foot care delivery: Health care professionals’ experience. BMC Health Serv. Res. 16, 1–8 (2016).

Abras, C., Maloney-Krichmar, D. & Preece, J. User-centered design. In Encyclopedia of Human–Computer Interaction Vol. 37 (ed. Bainbridge, W.) 445–456 (SAGE Publications, 2004).

Russell, C. L. An overview of the integrative research review. Prog. Transplant. 15, 8–13 (2005).

Whittemore, R. & Knafl, K. The integrative review: updated methodology. J. Adv. Nurs. 52, 546–553 (2005).

Hong, Q. N. et al. The Mixed Methods Appraisal Tool (MMAT) version 2018 for information professionals and researchers. Educ. Inf. 34, 285–291 (2018).

Hand, M. et al. A clinical decision support system to assist pediatric oncofertility: a short report. J. Adolesc. Young-Adult Oncol. 7, 509–513 (2018).

Hsiao, J.-L., Wu, W.-C. & Chen, R.-F. Factors of accepting pain management decision support systems by nurse anesthetists. BMC Med. Inform. Decis. Mak. 13, 1–13 (2013).

Lin, H.-C. et al. From precision education to precision medicine: factors affecting medical staffs intention to learn to use AI applications in hospitals. Technol. Soc. 24, 123–137 (2021).

Esmaeilzadeh, P., Sambasivan, M., Kumar, N. & Nezakati, H. Adoption of clinical decision support systems in a developing country: antecedents and outcomes of physician’s threat to perceived professional autonomy. Int. J. Med. Inform. 84, 548–560 (2015).

Strohm, L. et al. Factors influencing the adoption of computerized medical diagnosing system for tuberculosis. JMIR Hum. Factors 9, 1–12 (2021).

Zhai, H. et al. Radiation oncologists’ perceptions of adopting an artificial intelligence-assisted contouring technology: model development and questionnaire study. J. Med. Internet Res. 23, 1–16 (2021).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

S.L., M.M. and A.S. made substantial contributions regarding study conceptualization and design. S.L. and M.M. conducted the search, the data analysis and interpretation of the data, and S.L. and M.M. wrote the manuscript. A.S. was involved in the analysis and interpretation of the data. A.S. critically reviewed the manuscript for important intellectual content, supervised the study and supported S.L. and M.M. as senior investigators. S.S., A.L., H.S. and C.B. reviewed and made substantial contributions to the manuscript. All authors contributed to the conceptualization and design of this study, including the preparation of study material, and reviewed and revised the manuscript. All authors agree to be accountable for all aspects of this work and approve the final manuscript as submitted.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lambert, S.I., Madi, M., Sopka, S. et al. An integrative review on the acceptance of artificial intelligence among healthcare professionals in hospitals. npj Digit. Med. 6, 111 (2023). https://doi.org/10.1038/s41746-023-00852-5

DOI: https://doi.org/10.1038/s41746-023-00852-5
