Abstract

For judicial democracy, many societies adopt jury trials, in which verdicts are reached by a unanimous vote of, conventionally, 12 lay citizens. Here, using the majority-vote model, we show that juries of this size achieve the best balance between the accuracy of verdicts and the time spent on unanimous decision-making. First, we identify two determinants of the efficient jury size: opinion homogeneity in a community decreases the efficient jury size by affecting the accuracy of verdicts, and the anti-conformity tendency in the community also reduces the efficient jury size by prolonging the time needed to reach unanimous verdicts. Moreover, we find an inverse correlation between these two determinants, which prevents both over-shrinking and excessive expansion of the efficient jury size. Finally, by applying these findings to real-life settings, we narrow down the efficient jury size to 11.8 ± 3.0. Given that such a simple toy model can explain the jury sizes in actual societies, the number of jurors may have been implicitly optimised for efficient unanimous decision-making throughout human history.


Introduction

Jury trials are regarded as an embodiment of democracy in courtrooms (Ellsworth, 1989; Ellsworth and Getman, 1987), and it has been repeatedly debated whether decisions made by jurors sufficiently represent the opinions of the entire community (Ellsworth, 1989; Saks and Marti, 1997). In this sense, juries would ideally consist of all community members or a sufficiently large number of them; in reality, however, it is impractical to implement such direct or quasi-direct democracy in every trial. Instead, since the mid-twelfth century at the latest, juries have tended to comprise ~12 individuals, especially when they are required to reach unanimous verdicts (Warren, 1973). Indeed, despite some studies suggesting the superiority of much smaller juries (Fay et al., 2000; Nagel and Neef, 1975), the conventional ~12-juror systems have survived (Forsyth and Macdonnell, 2009).

Why has this specific jury size been so widely chosen? In a line of psychological and sociological studies on various properties of the jury system, such as its impartiality (Hamilton, 1978; Stephan, 1974; Young et al., 2014), consistency (Davis et al., 1976; Werner et al., 1985) and accuracy (Garrett et al., 2020; Ross et al., 2019), some researchers have pointed to multiple societal and political events that could have shaped the current number of jurors (Ellsworth, 1989; Faust, 1959; Hans, 2008; Maccoun, 1989; Thomas and Fink, 1963). A meta-analysis of 17 experimental and observational studies on group decision-making demonstrated sociological and democratic advantages of 12-juror systems over 6-juror ones (Saks and Marti, 1997).

At the same time, given the robustness and ubiquity of juries with ~12 lay citizens (Warren, 1973; Forsyth and Macdonnell, 2009), a more purely statistical rationale may underlie this specific jury size.

Here, we searched for such an account by examining the hypothesis that juries of ~12 individuals achieve the best balance between the accuracy of jury verdicts and the time needed to reach unanimous decisions. Based on previous literature (Saks and Marti, 1997) and the concept of jury systems (Ellsworth, 1989; Ellsworth and Getman, 1987), verdict accuracy was defined as how accurately the jury verdicts represented the decisions that would be made by all community members (Fig. 1a). The deliberation time, also called the consensus time (Krapivsky and Redner, 2003; Masuda, 2014), was defined as the number of voting steps required for the jury to reach a unanimous consensus.

a–c We evaluated the efficiency of juries with N jurors by comparing the verdict accuracy with the deliberation time (a), using the majority-vote model with noise on a complete graph (b). The verdict accuracy 〈Accuracy〉 was defined by whether the jury verdict σjury was the same as the verdict hypothetically made by the full community σComm. We then quantified the beneficial effect of N jurors on the verdict accuracy, Δ〈Accuracy〉N, by calculating how much better the verdict accuracy of the N-juror system, 〈Accuracy〉N, was than 〈Accuracy〉2, the accuracy of the most basic collective decision-making system. The deliberation time T was summarised by its median 〈T〉 because T showed a skewed distribution (c). Finally, we estimated the jury efficiency as the ratio of Δ〈Accuracy〉 to 〈T〉. In the majority-vote model (b), at each time point the jurors adopt the majority opinion with probability 1 – q or the minority opinion with probability q. If the opinions in the jury are equally divided, the jurors choose their opinions at random. The noise parameter q is also referred to as the social temperature (Vilela and Stanley, 2018) or the anti-conformity index (Nowak and Sznajd-Weron, 2019). FMajor represents the proportion of the majority opinion in the entire community. d The graph shows the association between the number of jurors N and the critical noise qc in the majority-vote model on complete graphs (Chen et al., 2015). The range of q was set to [0.05, 0.2] so that q is less than qc even when the number of jurors is relatively small (e.g., N ~ 10).

We estimated these two metrics using a majority-vote model with noise (Liggett, 2005; Oliveira, 1992; Tome et al., 1991) on a fully connected complete network (Fig. 1b). In this widely used model (Chen et al., 2017; Costa and de Souza, 2005; Lima, 2010, 2012; Melo et al., 2010; Vilela and Moreira, 2009; Vilela et al., 2012; Vilela and Stanley, 2018), each node represents an individual who holds one of two opinions (here, guilty or not guilty) at every time point. Opinions change over time: each member adopts the majority opinion among its connected neighbours (i.e., all the other members in this study) at the previous time point with probability 1 – q and the minority opinion with probability q. This noise parameter q is often called the social temperature (Vilela and Stanley, 2018) or the anti-conformity index (Nowak and Sznajd-Weron, 2019) and is meant to represent the degree to which individuals do not follow the majority opinion among their acquaintances.

With this model, we simulated opinion dynamics among jurors and found that the most efficient jury size was strongly affected by the opinion homogeneity and the anti-conformity tendency of the original community. In addition, these two factors were inversely correlated, which we interpret as preventing over-shrinking or over-expansion of the efficient jury size. Finally, we applied these findings to real-life networks and narrowed down the most efficient jury size to 11.8 ± 3.0.

Model

Majority-vote model

Using the majority-vote model with noise q (0 ≤ q ≤ 0.5; Fig. 1b) (Liggett, 2005; Oliveira, 1992; Tome et al., 1991), we numerically analysed opinion dynamics in which N jurors (N ≥ 2) continued deliberating until they reached a unanimous verdict. To reflect actual jury deliberation, we assumed that every juror interacts with all the other members and therefore implemented the majority-vote model on a fully connected complete graph.

At each time point t (t = 0, 1, 2, …), each juror held a dichotomous opinion σi(t) = ±1 (i = 1, 2, …, N). The initial opinions σi(t = 0) were defined by random sampling from a community of 10⁵ individuals whose opinions were divided into 1 and –1 at a ratio of FMajor to 1 – FMajor (0.5 < FMajor ≤ 1). At the following time point t + 1, each juror chose the majority opinion of the previous time point with probability 1 – q or the opposite opinion with probability q. Therefore, individual decision-making at a given time point can be partially affected by the individual's own opinion at the previous time point.

When the opinions were equally divided at the time point t, σi(t + 1) was determined at random.

We repeated this opinion update until all opinions became identical. The time point at which unanimity was achieved was defined as the deliberation time T, and the unanimous opinion was recorded as the jury verdict.
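The update rule above can be sketched in Python for a single simulated deliberation. This is a minimal sketch under stated assumptions, not the author's code (which is available only on request): the function name `run_jury_trial` and the `max_steps` guard are hypothetical, updates are assumed to be synchronous, the majority is assumed to be tallied over the whole jury (so each juror's own previous opinion counts, as noted above), and sampling from the 10⁵-member community is approximated by independent draws in which each juror holds +1 (the community majority) with probability FMajor.

```python
import random


def run_jury_trial(n_jurors, f_major, q, rng=None, max_steps=10**7):
    """Simulate one deliberation with the noisy majority-vote rule.

    Returns (T, verdict): T is the number of synchronous updates needed
    to reach unanimity and verdict is the unanimous opinion (+1 or -1).
    +1 denotes the community's majority opinion.
    """
    rng = rng or random.Random()
    # Assumption: each juror independently holds +1 with probability f_major,
    # approximating random sampling from a large (10^5-member) community.
    opinions = [1 if rng.random() < f_major else -1 for _ in range(n_jurors)]
    t = 0
    while abs(sum(opinions)) != n_jurors:  # loop until all opinions agree
        if t >= max_steps:
            raise RuntimeError("no unanimous verdict within max_steps")
        total = sum(opinions)  # jury-wide tally at time t (includes self)
        if total == 0:
            # Evenly split jury: every juror picks +1 or -1 at random.
            opinions = [rng.choice((1, -1)) for _ in range(n_jurors)]
        else:
            majority = 1 if total > 0 else -1
            # Follow the majority with probability 1 - q, else the minority.
            opinions = [majority if rng.random() >= q else -majority
                        for _ in range(n_jurors)]
        t += 1
    return t, opinions[0]
```

For example, with `rng = random.Random(0)`, the list `[run_jury_trial(12, 0.6, 0.075, rng) for _ in range(10**4)]` gives (T, verdict) pairs for the 12-juror setting; a jury that happens to be unanimous at t = 0 returns T = 0.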

We conducted these calculations 10⁶ times (Fig. 1a). That is, for each set of N, FMajor and q, we simulated 10⁶ different jury trials and consequently obtained 10⁶ pairs of T and verdict accuracy scores.

Deliberation time

The deliberation time T was summarised by its median, 〈T〉N, because T showed a skewed distribution (Fig. 1c).

Verdict accuracy

Regarding the jury verdict, we first evaluated its accuracy by comparing it with the verdict that would be made by all the community members from whom the jurors were selected. In other words, the verdict accuracy was defined as how accurately the jury verdicts represented the community decisions.

The community verdicts were estimated in essentially the same manner as the jury verdicts, except for two points. First, we assumed that the verdict of the community, which hypothetically consisted of 10⁵ individuals, was reached through full interactions among all 10⁵ individuals rather than among N randomly selected members. Second, the community verdicts were based on a majority rule rather than on unanimity, because the opinions in the community were unlikely to converge to unanimity. The community verdict was defined as the majority opinion at t = 100.

When the jury verdict was the same as the community verdict, the verdict accuracy score was set at 1. Otherwise, it was set at 0.

Next, we calculated the average accuracy score 〈Accuracy〉N across the 10⁶ jury trials. To infer the effect of jury size on verdict accuracy, we then compared 〈Accuracy〉N with the accuracy of the most basic form of group decision-making (i.e., the verdict accuracy of 2-juror juries, 〈Accuracy〉2). That is, the jury effect on the verdict accuracy, Δ〈Accuracy〉N, was defined as 〈Accuracy〉N − 〈Accuracy〉2.
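The per-size summaries described above (median deliberation time, mean accuracy score and the 2-juror baseline) can be sketched as follows. The helper names are hypothetical, and each trial is assumed to be stored as a (T, jury verdict, community verdict) triple so that the community verdict may differ from trial to trial.

```python
import statistics


def summarise_trials(trials):
    """Return (<T>_N, <Accuracy>_N) for one jury size.

    trials: list of (T, jury_verdict, community_verdict) triples from
    repeated simulated deliberations; verdicts are +1 or -1.
    """
    times = [t for t, _, _ in trials]
    # Accuracy score per trial: 1 if the jury matches the community, else 0.
    scores = [1 if jury == comm else 0 for _, jury, comm in trials]
    # Median rather than mean, because T is right-skewed.
    return statistics.median(times), statistics.fmean(scores)


def delta_accuracy(trials_n, trials_2):
    """Delta<Accuracy>_N = <Accuracy>_N - <Accuracy>_2 (2-juror baseline)."""
    _, acc_n = summarise_trials(trials_n)
    _, acc_2 = summarise_trials(trials_2)
    return acc_n - acc_2
```

With four toy 12-juror trials of which three match the community and a 2-juror baseline of 0.5, this gives 〈Accuracy〉 = 0.75 and Δ〈Accuracy〉 = 0.25; the toy numbers are illustrative only.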

Jury efficiency and efficient jury size

Finally, the jury efficiency was calculated as Δ〈Accuracy〉N/〈T〉N. We repeated this calculation for different N (2 ≤ N ≤ 50) and searched for the most efficient jury size Nefficient. Technically, we fitted a polynomial curve to the jury efficiency as a function of N, differentiated it, and defined Nefficient as the value of N at a local maximum of the fitted curve.
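The text does not state the degree of the fitted polynomial, so the sketch below swaps in a simpler local variant: it fits the quadratic through the grid point with the highest efficiency and its two neighbours and returns the point where that quadratic's derivative is zero. The function name `efficient_jury_size` is hypothetical, and a global higher-degree fit could give a slightly different peak.

```python
def efficient_jury_size(sizes, efficiencies):
    """Locate the efficiency peak with a local quadratic fit.

    Simplification: fit the parabola through the best grid point and its
    two neighbours and return its vertex (where p'(N) = 0).
    Requires equally spaced sizes and an interior maximum.
    """
    k = max(range(len(efficiencies)), key=efficiencies.__getitem__)
    if k == 0 or k == len(efficiencies) - 1:
        raise ValueError("maximum lies on the boundary of the N grid")
    h = sizes[k + 1] - sizes[k]  # grid spacing (1 for integer N)
    y0, y1, y2 = efficiencies[k - 1], efficiencies[k], efficiencies[k + 1]
    curvature = y0 - 2 * y1 + y2  # proportional to the second derivative
    if curvature >= 0:
        raise ValueError("no local maximum at the best grid point")
    # Vertex of the interpolating parabola.
    return sizes[k] + h * (y0 - y2) / (2 * curvature)
```

Because the peak is interpolated between grid points, the result can be non-integer, as with the reported Nefficient = 12.1.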

Brute-force search and ranges of parameters

Using this procedure, we conducted a brute-force search for the most efficient jury size in wide ranges of FMajor and q.

Regarding FMajor, we set its range to 0.52 ≤ FMajor ≤ 0.66 because jury efficiency is likely to matter particularly when community opinions on a trial are not homogeneous but somewhat divided. If a supermajority exists in the community (i.e., FMajor > 2/3), the jury can reach a verdict representing that dominant community opinion within a relatively short deliberation time.

Regarding q, we set its range to [0.05, 0.2] to increase the likelihood that the jury reaches a unanimous verdict. Previous analytical studies (Chen et al., 2015; Fronczak and Fronczak, 2017) demonstrated that, in the majority-vote model on a complete network with N nodes, the critical noise is \(q_c = \frac{1}{2} - \frac{1}{2}\sqrt{\frac{\pi}{2(N-1)}}\) (Fig. 1d). Given this, to favour the ordered (i.e., ferromagnetic) state of the network, we kept q below qc even when the number of nodes in the network is relatively small (e.g., N ~ 10).
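The critical-noise formula quoted above can be evaluated directly. The sketch below (hypothetical helper names `critical_noise` and `smallest_ordered_size`) computes qc for a given N and the smallest jury size for which a chosen q lies below qc.

```python
import math


def critical_noise(n):
    """q_c = 1/2 - (1/2) * sqrt(pi / (2 (N - 1))) on a complete graph of N nodes."""
    if n < 2:
        raise ValueError("need at least two nodes")
    return 0.5 - 0.5 * math.sqrt(math.pi / (2 * (n - 1)))


def smallest_ordered_size(q, n_max=1000):
    """Smallest N (up to n_max) for which a fixed noise q stays below q_c(N)."""
    for n in range(2, n_max + 1):
        if q < critical_noise(n):
            return n
    return None
```

For instance, q = 0.2 lies below qc only from N = 6 upward (qc ≈ 0.220 at N = 6 versus ≈ 0.187 at N = 5), and qc ≈ 0.291 at N = 10. The formula gives a negative value for N = 2, so it should not be read literally for the very smallest juries.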

Two community factors and efficient jury size

To examine the effects of FMajor and q on Nefficient, we first conducted regression analyses. Based on the regression equations, we derived a simplified expression of Nefficient and found that Nefficient can be approximated as \(\beta_{\mathrm{Acc}}/\beta_{\mathrm{Time}}\), where βAcc and βTime are regression coefficients. Next, we calculated Pearson's correlation coefficients r between the two components of Nefficient (βAcc and βTime) and the two community parameters (FMajor and q). The correlation coefficients were evaluated in tests of the significance of the difference of the correlation coefficient.

Two community factors in real-life data

Finally, we examined the association between FMajor and q in real-life networks. The following three real-life network structures were obtained from the Stanford Large Network Dataset Collection (https://snap.stanford.edu/data/) (Leskovec and Sosic, 2016): a collaboration network (ca-CondMat), an email network (email-Eu-core) and a Facebook network (ego-Facebook) (Table 1). All network structures were treated as undirected, unweighted graphs.
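Loading the three SNAP data sets as undirected, unweighted graphs can be sketched as follows. This assumes the plain-text edge-list layout used in the SNAP collection ('#'-prefixed comment lines, then one whitespace-separated node pair per line); `load_undirected_graph` is a hypothetical helper, and dropping self-loops and duplicate edges is an assumption of this sketch rather than a documented step of the study.

```python
from collections import defaultdict


def load_undirected_graph(lines):
    """Build an undirected, unweighted adjacency list from an edge list.

    Assumes '#' comment lines followed by one whitespace-separated
    'source target' pair per line; direction and duplicates are ignored.
    """
    adj = defaultdict(set)
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        u, v = line.split()[:2]
        if u != v:  # assumption: drop self-loops
            adj[u].add(v)
            adj[v].add(u)  # store both directions -> undirected graph
    return {node: sorted(nbrs) for node, nbrs in adj.items()}
```

A file could then be read with `with open("path/to/edge_list.txt") as fh: adj = load_undirected_graph(fh)`, where the path is a placeholder.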

We simulated opinion dynamics on these three networks using the same majority-vote model. First, we set q (0.05 ≤ q ≤ 0.15) and the initial opinion homogeneity FMajor_initial (0.52 ≤ FMajor_initial ≤ 0.66). Each individual's initial opinion was randomly assigned so that the opinion bias in the entire network matched FMajor_initial. Then, we updated the opinions until the opinion homogeneity in the community reached a plateau, FMajor_converge. The plateau was defined as a period in which FMajor fluctuated by no more than 0.1 over 10 opinion updates. We repeated this procedure for different values of FMajor_initial and found that, when q was held constant, the community opinion homogeneity tended to converge to the same FMajor_converge regardless of FMajor_initial. We then calculated FMajor_converge for different values of q and examined the correlation between FMajor_converge and q.
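The convergence procedure on a real network can be sketched as below. All names are hypothetical, and several choices are assumptions of this sketch rather than details given in the text: updates are synchronous, each node looks only at its neighbours (not at itself), ties are broken at random, exactly round(FMajor_initial × n) nodes start with the +1 opinion, the plateau test is max − min ≤ 0.1 over the last 10 recorded FMajor values, and the value returned as FMajor_converge is simply the last recorded one.

```python
import random


def majority_vote_step(adj, opinions, q, rng):
    """One synchronous noisy majority-vote update on an undirected graph."""
    new = {}
    for node, nbrs in adj.items():
        s = sum(opinions[v] for v in nbrs)
        if s == 0:
            # Tied neighbourhood (or no neighbours): choose at random.
            new[node] = rng.choice((1, -1))
        else:
            majority = 1 if s > 0 else -1
            # Follow the local majority with probability 1 - q.
            new[node] = majority if rng.random() >= q else -majority
    return new


def f_major(opinions):
    """Fraction of nodes holding the more common opinion."""
    plus = sum(1 for o in opinions.values() if o == 1)
    return max(plus, len(opinions) - plus) / len(opinions)


def has_plateaued(history, window=10, tol=0.1):
    """True if F_Major varied by at most tol over the last `window` values."""
    if len(history) < window:
        return False
    recent = history[-window:]
    return max(recent) - min(recent) <= tol


def converged_f_major(adj, f_initial, q, rng, max_updates=100_000):
    """Run the dynamics from an exact initial bias until F_Major plateaus."""
    nodes = list(adj)
    # Exactly round(f_initial * n) nodes start with +1, chosen at random.
    plus = set(rng.sample(nodes, round(f_initial * len(nodes))))
    opinions = {v: (1 if v in plus else -1) for v in nodes}
    history = [f_major(opinions)]
    while not has_plateaued(history):
        if len(history) > max_updates:
            raise RuntimeError("F_Major did not plateau")
        opinions = majority_vote_step(adj, opinions, q, rng)
        history.append(f_major(opinions))
    return history[-1]
```

Calling `converged_f_major(adj, f0, q, random.Random(seed))` for several f0 at a fixed q is one way to check whether the end point is insensitive to FMajor_initial, as reported above.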

By applying this FMajor–q association to the results of the brute-force analysis, we narrowed down the efficient jury size.

Results

Brute-force search for efficient jury size

As an example, consider a jury trial with 12 jurors randomly selected from a community with a 60/40 opinion split on the verdict (FMajor = 0.6 in Fig. 1a). We set the noise parameter q in the majority-vote model at 0.075 (Fig. 1b), which is smaller than the critical noise qc (Fig. 1d) and should increase the likelihood that the jury reaches a unanimous verdict. With this setting, we simulated 10⁶ different opinion dynamics and obtained 10⁶ deliberation times T and unanimous jury verdicts.

The deliberation time to reach a unanimous verdict showed a skewed distribution (Fig. 1c); thus, we adopted its median, 3.76, as the representative deliberation time 〈T〉12.

To estimate the verdict accuracy, we first counted how many jury verdicts were the same as those that would be made by the full community (here, hypothetically 10⁵ individuals). We then divided the number of accurate verdicts by the total number of simulated opinion dynamics (i.e., 10⁶) and obtained the verdict accuracy 〈Accuracy〉12 = 0.75.

Next, we measured the beneficial effect of the jury system on verdict accuracy, Δ〈Accuracy〉12, by comparing 〈Accuracy〉12 with the accuracy of the most basic collective decision-making system (i.e., 〈Accuracy〉2 = 0.60).

Finally, we calculated the ratio of Δ〈Accuracy〉12 to 〈T〉12 to determine the jury efficiency (here, Δ〈Accuracy〉12/〈T〉12 = (〈Accuracy〉12 − 〈Accuracy〉2)/〈T〉12 = 0.040).
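The jury-efficiency figure for the 12-juror example follows directly from the reported values:

\[
\frac{\Delta\langle \mathrm{Accuracy}\rangle_{12}}{\langle T\rangle_{12}} = \frac{\langle \mathrm{Accuracy}\rangle_{12} - \langle \mathrm{Accuracy}\rangle_{2}}{\langle T\rangle_{12}} = \frac{0.75 - 0.60}{3.76} = \frac{0.15}{3.76} \approx 0.0399 \approx 0.040
\]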

Based on this definition, we then searched for the most efficient jury size. For this setting of FMajor and q, we repeated the calculation of the jury efficiency for different jury sizes (2 ≤ N ≤ 50). Both 〈T〉 and Δ〈Accuracy〉 increased with the number of jurors (Fig. 2a, b), and the jury efficiency peaked at Nefficient = 12.1 (Fig. 2c).

In an example case (FMajor = 0.6 and q = 0.075), the deliberation time 〈T〉 and the verdict accuracy change Δ〈Accuracy〉 increased with the jury size N (a and b), and the jury efficiency peaked at N = 12.1 (c). The y-axes in panels a and b are on a logarithmic scale. We conducted this search for Nefficient in a brute-force manner over broader ranges of FMajor and q.

In the same manner, we conducted a brute-force search for the most efficient jury size by independently varying the two parameters over broader ranges (0.52 ≤ FMajor ≤ 0.66 and 0.05 ≤ q ≤ 0.2). Nefficient ranged from 6.70 (FMajor = 0.66 and q = 0.2) to 18.40 (FMajor = 0.52 and q = 0.05) (Fig. 2d).

Simple expression of efficient jury size

Next, to infer determinants of the efficient jury size, we explored a simple expression of Nefficient.

In the above example, 〈T〉 increased exponentially with N (R2* = 0.96; Fig. 2a), whereas Δ〈Accuracy〉 increased more slowly, in a manner well fitted by a log–log linear regression model (R2* = 0.95; Fig. 2b).

Such associations held across a broader parameter space (0.52 ≤ FMajor ≤ 0.66 and 0.05 ≤ q ≤ 0.2; R2* ≥ 0.92), which enabled us to describe 〈T〉 and Δ〈Accuracy〉 as ln〈T〉 = βTimeN + εTime and ln Δ〈Accuracy〉 = βAcc lnN + εAcc, respectively, where βTime and βAcc are regression coefficients and εTime and εAcc are intercepts.

Given these fits, the derivative of the logarithm of the jury efficiency can be written as \(\frac{d}{dN}\ln\left(\Delta\langle \mathrm{Accuracy}\rangle/\langle T\rangle\right) = \beta_{\mathrm{Acc}}/N - \beta_{\mathrm{Time}}\). Setting this derivative to zero shows that the jury efficiency should peak at N = βAcc/βTime; because the second derivative, −βAcc/N², is negative whenever βAcc > 0, this stationary point is a maximum. This prediction was supported by a significant correlation between βAcc/βTime and Nefficient (R2* = 0.78, coefficient of variation = 0.14; Fig. 3a).
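The regression-based approximation can be checked numerically. The sketch below (hypothetical names; ordinary least squares is assumed for both fits) estimates βTime from ln〈T〉 against N and βAcc from ln Δ〈Accuracy〉 against ln N, then returns their ratio. Sizes with a non-positive Δ〈Accuracy〉, such as N = 2 where it is zero by definition, are skipped because their logarithm is undefined.

```python
import math


def ols_slope(xs, ys):
    """Least-squares slope of ys regressed on xs (with an intercept)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def predicted_efficient_size(sizes, median_times, delta_accuracies):
    """Estimate N_efficient as beta_Acc / beta_Time.

    beta_Time: slope of ln<T> against N.
    beta_Acc:  slope of ln Delta<Accuracy> against ln N.
    """
    pts = [(n, t, a) for n, t, a in zip(sizes, median_times, delta_accuracies)
           if a > 0 and t > 0]  # logarithms need positive values
    beta_time = ols_slope([n for n, _, _ in pts],
                          [math.log(t) for _, t, _ in pts])
    beta_acc = ols_slope([math.log(n) for n, _, _ in pts],
                         [math.log(a) for _, _, a in pts])
    return beta_acc / beta_time
```

With illustrative synthetic curves built from βTime = 0.1 and βAcc = 1.2 (not values from the study), the function returns 12, matching the stationary point N = βAcc/βTime derived above.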

a The most efficient jury size Nefficient could be approximated by βAcc/βTime, where βAcc and βTime are regression coefficients in ln Δ〈Accuracy〉 = βAcc lnN + εAcc and ln〈T〉 = βTimeN + εTime. CV represents the coefficient of variation and R2* denotes the adjusted coefficient of determination in a regression model using Nefficient = βAcc/βTime. b–e We calculated βAcc and βTime over broader ranges of FMajor and q (b) and estimated the correlation coefficients between these coefficients and the two parameters. Panels c and d show example results of the correlation analyses. βAcc was correlated only with FMajor (c), whereas βTime was correlated only with q (d). These observations indicate that larger FMajor decreases Nefficient by reducing βAcc, whereas larger q decreases Nefficient by increasing βTime (e). In panels c and d, r denotes a correlation coefficient and P the statistical significance in a test of the significance of the difference of the correlation coefficient.

Jury size and two community factors

Based on this simple expression of Nefficient, we examined how the efficient jury size was affected by the two community properties, FMajor and q. We first calculated βAcc and βTime over wide ranges of FMajor and q (Fig. 3b) and then estimated the correlation coefficients between these coefficients and the two parameters.

βAcc was negatively associated with FMajor (r ≤ –0.96, P < 10⁻³, PBonferroni < 0.05; e.g., the left panel in Fig. 3c) but not with q (|r| < 0.14, P > 0.76; e.g., the right panel in Fig. 3c). Conversely, βTime was positively correlated with q (r ≥ 0.97, P < 10⁻³, PBonferroni < 0.05; e.g., the right panel in Fig. 3d) but not with FMajor (|r| < 0.086, P > 0.85; e.g., the left panel in Fig. 3d).

These results suggest that greater opinion homogeneity in a community decreases the efficient jury size by dampening the beneficial effect of collective decision-making on verdict accuracy, whereas a stronger anti-conformity tendency in the community also reduces Nefficient by accelerating the increase in deliberation time (Fig. 3e).

Why do jury sizes neither shrink nor expand excessively?

If the two community factors affected the efficient jury size in such seemingly independent ways, Nefficient could shrink excessively (e.g., N = 2 or 3) or expand excessively (e.g., N = 100). However, such extremely small or large juries are rarely seen in real-life settings.

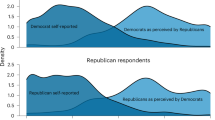

To resolve this contradiction, we investigated associations between FMajor and q in real-life data sets. We simulated opinion dynamics on three real-life large-scale social networks (Leskovec and Sosic, 2016) and traced the changes in the opinion homogeneity FMajor while the anti-conformity index q was held constant.

In all three social networks, the opinion homogeneity converged toward a q-dependent value even when the initial FMajor was widely varied (e.g., Fig. 4a). Moreover, the convergence value of the opinion homogeneity, FMajor_converge, was negatively correlated with the anti-conformity index q (|r| ≥ 0.98, P ≤ 0.0034, PBonferroni < 0.05; Fig. 4b). Combining this inverse FMajor–q correlation with the results of the brute-force search for Nefficient (Fig. 2d) narrowed the efficient jury size down to a range from 8.8 to 14.7 (11.8 ± 3.0) in the three real-life social networks (Fig. 4c).
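The reported interval appears to be the midpoint and half-width of the 8.8 to 14.7 range; this reading is an inference from the numbers, not a statement in the text:

\[
\frac{8.8 + 14.7}{2} = 11.75 \approx 11.8, \qquad \frac{14.7 - 8.8}{2} = 2.95 \approx 3.0
\]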

We examined associations between the opinion homogeneity FMajor and the anti-conformity tendency q in three real-life large-scale social networks (Leskovec and Sosic, 2016). In all three networks, FMajor converged toward a q-dependent value even when the initial FMajor differed widely (a). The convergence FMajor was negatively correlated with q (b). Applying this inverse association between FMajor and q narrowed Nefficient down to 11.8 ± 3.0 (c). These results imply a statistical mechanism that prevents over-shrinking or over-expansion of the jury size in real-life societies (d).

These results suggest that the inverse correlation between opinion homogeneity and anti-conformity tendency in a community can be one of the key mechanisms that prevent over-shrinking and excessive expansion of the jury size (Fig. 4d).

Discussion

This numerical study examined opinion dynamics during jury deliberation with the majority-vote model, calculated the efficiency of the jury system and searched for the most efficient jury size. We first found that the efficient jury size was determined by two community factors in different ways: greater opinion homogeneity in a community made the efficient jury size smaller by affecting the verdict accuracy, whereas a stronger anti-conformity tendency decreased the efficient jury size by accelerating the increase in deliberation time. These two community factors were inversely correlated with each other, which prevented over-shrinking and excessive expansion of the efficient jury size. By applying these findings to real-life networks, we narrowed the most efficient jury size down to 11.8 ± 3.0, which is close to the actual jury sizes in most jury trial systems (Ellsworth, 1989).

This study has demonstrated that even a simple statistical model can account for the jury sizes seen in different countries; however, such simplicity imposes limitations.

Sociologically, a series of studies have suggested that jury sizes must have been chosen and changed through a series of societal and political events (Ellsworth, 1989; Hans, 2008; Maccoun, 1989), which were not covered in this research. Nor did we consider the variety of jury trial systems: in some communities, jury verdicts are reached not by unanimous votes but by majority votes with different criteria (Hans, 2008), and some jury systems include professional judges (Hans, 2008).

The model adopted here may also be too simple in statistical and psychological terms. The majority-vote model with noise has been used to understand various opinion dynamics (Chen et al., 2017; Costa and de Souza, 2005; Lima, 2010, 2012; Melo et al., 2010; Vilela and Moreira, 2009; Vilela et al., 2012; Vilela and Stanley, 2018), but its current form does not consider the effects of some social and psychological factors, such as the presence of strong opinion holders in the jury (Vilela and Stanley, 2018) and individual differences in the anti-conformity tendency (Costa and de Souza, 2005; Vilela et al., 2012). Because this study assumed dichotomous opinions, the current model took into account neither more nuanced differences in opinions (Lima, 2012; Melo et al., 2010) nor the effects of the confidence levels of individual opinions (Bahrami et al., 2012; Bahrami et al., 2010).

Beyond addressing these limitations, the current results could be validated analytically. A previous work on the majority-vote model on complete graphs used heterogeneous mean-field theory and identified the critical noise for both infinite-size and finite-size networks (Chen et al., 2015). Another study adopted the master-equation approach and obtained an exact equation for the probability of a given opinion pattern (Fronczak and Fronczak, 2017). Combining these analytical findings could yield clearer, more precise and more extensible expressions for the quantitative associations among the anti-conformity index, opinion homogeneity in the community, verdict accuracy and deliberation time.

These sociological, statistical, psychological and analytical issues will have to be investigated in future studies; nevertheless, the fact that even a simple toy model can explain actual jury sizes may imply that the number of jurors has been implicitly optimised in different communities with different cultures through a common mechanism.

Data availability

The data sets of the three real-life network structures are available from the Stanford Large Network Dataset Collection (https://snap.stanford.edu/data/). The current work generated neither original data nor a novel mathematical model. The code used here is available from the author on reasonable request.

References

Bahrami B, Olsen K, Bang D, Roepstorff A, Rees G, Frith C (2012) Together, slowly but surely: the role of social interaction and feedback on the build-up of benefit in collective decision-making. J Exp Psychol Hum Percept Perform 38:3–8

Bahrami B, Olsen K, Latham PE, Roepstorff A, Rees G, Frith CD (2010) Optimally interacting minds. Science 329:1081–1085

Chen H, Shen C, He G, Zhang H, Hou Z (2015) Critical noise of majority-vote model on complex networks. Phys Rev E Stat Nonlin Soft Matter Phys 91:022816

Chen H, Shen C, Zhang H, Li G, Hou Z, Kurths J (2017) First-order phase transition in a majority-vote model with inertia. Phys Rev E 95:042304

Costa LS, de Souza AJ (2005) Continuous majority-vote model. Phys Rev E Stat Nonlin Soft Matter Phys 71:056124

Davis JH, Stasser G, Spitzer CE, Holt RW (1976) Changes in group members' decision preferences during discussion: an illustration with mock juries. J Pers Soc Psychol 34:1177–1187

Ellsworth PC (1989) Are twelve heads better than one? Law Contemp Probs 52:205–224

Ellsworth PC, Getman JG (1987) Social science in legal decision-making. In: Lipson L, Wheeler S (eds) Law and the social sciences. Russell Sage Foundation, pp. 581–636

Faust WL (1959) Group versus individual problem-solving. J Abnorm Psychol 59:68–72

Fay N, Garrod S, Carletta J (2000) Group discussion as interactive dialogue or as serial monologue: the influence of group size. Psychol Sci 11:481–486

Forsyth J, Macdonnell H (2009) Scotland’s unique 15-strong juries will not be abolished. In: McLellan J (ed) Johnston Press (Scotland)

Fronczak A, Fronczak P (2017) Exact solution of the isotropic majority-vote model on complete graphs. Phys Rev E 96:012304

Garrett BL, Crozier WE, Grady R (2020) Error rates, likelihood ratios, and jury evaluation of forensic evidence. J Forensic Sci 65:1199–1209

Hamilton VL (1978) Obedience and responsibility: a jury simulation. J Pers Soc Psychol 36:126–146

Hans VP (2008) Jury systems around the world. Cornell Law Faculty Publications. Paper 305

Krapivsky PL, Redner S (2003) Dynamics of majority rule in two-state interacting spin systems. Phys Rev Lett 90:238701

Leskovec J, Sosic R (2016) SNAP: a general purpose network analysis and graph mining library. ACM Trans Intell Syst Technol 8

Liggett TM (2005) Interacting particle systems. Springer, Berlin; New York, NY

Lima FWS (2010) Analysing and controlling the tax evasion dynamics via majority-vote model. J Phys Conf Ser 246

Lima FWS (2012) Three-state majority-vote model on square lattice. Physica A 391:1753–1758

Maccoun RJ (1989) Experimental research on jury decision-making. Science 244:1046–1050

Masuda N (2014) Voter model on the two-clique graph. Phys Rev E Stat Nonlin Soft Matter Phys 90:012802

Melo DFF, Pereira LFC, Moreira FGB (2010) The phase diagram and critical behavior of the three-state majority-vote model. J Stat Mech 2010

Nagel SS, Neef M (1975) Deductive modeling to determine an optimum jury size and fraction required to convict. Wash Univ Law Quart 1975:933

Nowak B, Sznajd-Weron K (2019) Homogeneous symmetrical threshold model with nonconformity: independence versus anticonformity. Complexity 2019:1–14

Oliveira MJD (1992) Isotropic majority-vote model on a square lattice. J Stat Phys 66:273

Ross R, Kramer K, Martire KA (2019) Consistent with: what doctors say and jurors hear. Aust J Forensic Sci 51:109–116

Saks MJ, Marti MW (1997) A Meta-analysis of the effects of jury size. Law Human Behav 21:451–467

Stephan C (1974) Sex prejudice in jury simulation. J Psychol 88:305–312

Thomas EJ, Fink CF (1963) Effects of group size. Psychol Bull 60:371–384

Tome T, Oliveira MJD, Santos MA (1991) Non-equilibrium Ising model with competing Glauber dynamics. J Phys A 24:3677–3686

Vilela ALM, Moreira FGB (2009) Majority-vote model with different agents. Physica A 388:4171–4178

Vilela ALM, Moreira FGB, de Souza AJF (2012) Majority-vote model with a bimodal distribution of noises. Physica A 391:6456–6462

Vilela ALM, Stanley HE (2018) Effect of strong opinions on the dynamics of the majority-vote model. Sci Rep 8:8709

Warren WL (1973) Henry II. University of California Press, Berkeley

Werner CM, Strube MJ, Cole AM, Kagehiro DK (1985) The impact of case characteristics and prior jury experience on jury verdicts. J Appl Soc Psychol 15:409–427

Young DM, Levinson JD, Sinnett S (2014) Innocent until primed: mock jurors’ racially biased response to the presumption of innocence. PLoS ONE 9:e92365

Acknowledgements

This work was supported by a Grant-in-Aid for Research Activity from the Japan Society for the Promotion of Science and by The University of Tokyo Excellent Young Researcher Project. The author also acknowledges insightful comments from Drs. Yukari Watanabe (Toranomon Hospital, Japan), Naoki Masuda (University at Buffalo, US) and Bahador Bahrami (Ludwig Maximilian University, Germany) and Professor Geraint Rees (UCL, UK).

Author information


Contributions

TW designed this study, conducted the numerical investigations and wrote the manuscript.


Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Watanabe, T. A numerical study on efficient jury size. Humanit Soc Sci Commun 7, 62 (2020). https://doi.org/10.1057/s41599-020-00556-1

