Abstract

In vivo cardiac diffusion tensor imaging (cDTI) is a promising Magnetic Resonance Imaging (MRI) technique for evaluating the microstructure of myocardial tissue in living hearts, providing insights into cardiac function and enabling the development of innovative therapeutic strategies. However, the integration of cDTI into routine clinical practice poses challenging due to the technical obstacles involved in the acquisition, such as low signal-to-noise ratio and prolonged scanning times. In this study, we investigated and implemented three different types of deep learning-based MRI reconstruction models for cDTI reconstruction. We evaluated the performance of these models based on the reconstruction quality assessment, the diffusion tensor parameter assessment as well as the computational cost assessment. Our results indicate that the models discussed in this study can be applied for clinical use at an acceleration factor (AF) of \(\times 2\) and \(\times 4\), with the D5C5 model showing superior fidelity for reconstruction and the SwinMR model providing higher perceptual scores. There is no statistical difference from the reference for all diffusion tensor parameters at AF \(\times 2\) or most DT parameters at AF \(\times 4\), and the quality of most diffusion tensor parameter maps is visually acceptable. SwinMR is recommended as the optimal approach for reconstruction at AF \(\times 2\) and AF \(\times 4\). However, we believe that the models discussed in this study are not yet ready for clinical use at a higher AF. At AF \(\times 8\), the performance of all models discussed remains limited, with only half of the diffusion tensor parameters being recovered to a level with no statistical difference from the reference. Some diffusion tensor parameter maps even provide wrong and misleading information.

Similar content being viewed by others

Introduction

In vivo cardiac diffusion tensor (DT) imaging (cDTI) is an emerging Magnetic Resonance Imaging (MRI) technique that has the potential to describe the microstructure of myocardial tissue in living hearts. The diffusion of water molecules occurs anisotropically due to the microstructure of the myocardium, which can be approximated by fitting three-dimensional (3D) tensors with a specific shape and orientation in cDTI. Various parameters can be derived from the DT, including mean diffusivity (MD) and fractional anisotropy (FA), which are crucial indices that can indicate the structural integrity of myocardial tissues. The helix angle (HA) signifies local cell orientations, while the second eigenvector (E2A) represents the average sheetlet orientation1. The development of cDTI provides insights into the myocardial microstructure and offers new perspectives on the elusive connection between cellular contraction and macroscopic cardiac function1,2. Furthermore, it presents opportunities for novel assessments of the myocardial microstructure and cardiac function, as well as the development and evaluation of innovative therapeutic strategies3. Early exploratory clinical studies, e.g., cardiomyopathy1,2,4, myocardial infarction5,6, have been reported, and shown very promising results and high potential for cDTI to contribute to the clinic.

Despite the numerous advantages, there are still significant technical obstacles that must be overcome to integrate cDTI into routine clinical practice. For the calculation of the DT, diffusion-weighted images (DWIs) with diffusion encoding in at least six distinct directions need to be collected. Due to the movement derived from heart beats and human breath, in vivo cDTI exploits single-shot encoding acquisition for repetitive fast scanning, e.g., single-shot echo planar imaging (SS-EPI) or spiral diffusion-weighted imaging7. The utilisation of these single-shot encoding acquisitions, leading to low signal-to-noise ratio (SNR) images, typically requires multiple repetitions to enhance the accuracy of the DT estimation8,9. Each repetition necessitates an additional breath-hold for the patient when using breath-hold acquisitions, which significantly increases the total scanning time and leads to uncomfortable patient experience.

Numerous studies have been proposed to accelerate cDTI technique, which can be mainly categorised as (1) reducing the total amount of DWIs used for the calculation of the DT; (2) general fast DWI by k-space undersampling and reconstruction using compressed sensing (CS) or deep learning techniques. It is noted that this study focuses on the second strategy.

Deep learning has emerged as a powerful technique for image analysis, capitalising on the non-linear and complex nature of networks through supervised or unsupervised learning, and has found widespread applications in medical image research10. Deep learning-based MRI reconstruction11,12 has gained significant attention, leveraging its capability of learning complex and hierarchical representations from large MRI datasets13.

In this work, we investigated the application of deep learning-based methods for cDTI reconstruction. We explored and implemented three representative deep learning-based models from algorithm unrolling models14,15,16, enhancement-based models17,18,19,20 to emerging generative models21,22,23, on the cDTI dataset with acceleration factor (AF) of \(\times 2\), \(\times 4\) and \(\times 8\), including a Convolutional Neural Network (CNN)-based algorithm unrolling method, i.e., D5C515, a CNN-based and conditional Generative Adversarial Network (GAN)-based method, i.e., DAGAN21, and a Transformer-based and enhancement-based method, i.e., SwinMR19. The performance of these models was evaluated by the reconstruction quality assessment and the DT parameters assessment.

To the best of our knowledge, this work is the first comparison study that focuses on evaluating various deep learning-based models on in vivo cDTI, encompassing algorithm unrolling models, enhancement-based models, and generative models. The purpose of this work is not to propose a new reconstruction model for cDTI. Instead, it aims to validate the performance of existing MRI reconstruction models on the DWI reconstruction and to provide a general framework for a fair comparison.

Our experiments demonstrate that the models discussed in this paper can be applied for clinical use at AF \(\times 2\) and AF \(\times 4\), since both the reconstruction of DWIs and DT parameters reach satisfactory levels. Among these models, D5C5 shows superior fidelity for the reconstruction, while SwinMR provides results with higher perceptual scores. There is no statistical difference from the reference for all the DT parameters at AF \(\times 2\) or most of the DT parameters at AF \(\times 4\). The quality of most the DT parameter maps we considered are visually acceptable. Considering various factors, SwinMR is recommended as the optimal approach for the reconstruction with AF \(\times 2\) and AF \(\times 4\).

However at AF \(\times 8\), the performance of these three models, including the best-performing SwinMR, is still limited. The reconstruction quality is unsatisfactory due to the artefact remaining and the noisy (DAGAN) or hallucinated (SwinMR) estimation. Only half of the DT parameters can be recovered to a level that is no statistical difference from the reference. Some DT parameter maps even provide wrong and misleading information, which is unacceptable and dangerous for clinical use.

Related works

Diffusion tensor MRI acceleration

A major drawback of DTI is its extended scanning time, as it requires multiple DWIs with varying b-value and diffusion gradient directions to calculate the DT. In theory, the estimation of the DT requires only six DWIs with different diffusion gradient directions and one reference image. However, in practical for cDTI, a considerable number of cardiac DWIs and multiple averages are typically required to enhance the accuracy of DT estimation, due to the inherently low SNR of single-shot acquisitions.

Strategies to accelerate the DTI technique have been explored. One technical route aims to reduce the number of DWIs required for the DT estimation24,25,26,27,28,29,30, which can be further categorised into three sub-class.

-

(1)

Learn a direct mapping from DWIs with reduced number of repetitions (or gradient directions), to the DT or DT parameter maps. Ferreira et al.24 proposed a U-Net31-based model for cDTI acceleration, which directly estimated the DT, using DWIs collected within one breath-hold, instead of solving a conventional linear-least-square (LLS) tensor fitting. Karimi et al.25 introduced a Transformer-based model with coarse-and-fine strategy to provide accuracy estimation of the DT, using only six diffusion-weighted measurements. Aliotta et al.32 proposed a neural network for brain DTI, namely DiffNet, which estimated MD and FA maps directly from diffusion-weighted acquisitions with as few as three diffusion-encoding directions. They further improved their method by combining a parallel U-Net for slice-to-slice mapping and a multi-layer perceptron for pixel-to-pixel mapping26. Li et al.27 developed a CNN-based model for brain DTI, i.e., SuperDTI, to generate FA, MD and directionally encoded color maps with as few as six diffusion-weighted acquisitions.

-

(2)

Enhance DWIs (denoising). This kind of methods usually apply only a small amount of enhanced images to achieve comparable estimation results with the results reconstructed using a standard protocol. Tian et al.28 developed a novel DTI processing framework, entitled DeepDTI, which minimised the required data for DTI to six diffusion-weighted images. The core idea of this framework was to use a CNN that took a stack of non-diffusion-weighted (b0) image, six DWIs as well as a anatomical (T1- or T2-weighted) image as input, to produce high-quality b0 images and six DWIs. Phipps et al.29 applied a denoising CNN to enhance the quality of b0 images and corresponding DWIs for cDTI.

-

(3)

Refine the DT quality. Tänzer et al.30 proposed a GAN-based Transformer, aiming to directly enhance the quality of the DT that was calculated with a reduce number of DWIs in an end-to-end manner.

Another technical route follows the general DWIs acceleration by k-space undersampling and reconstruction33,34,35,36. Zhu et al.33 directly estimated the DT from highly undersampled k-space data. Chen et al.34 incorporated a joint sparsity prior of different DWIs with the L1-L2 norm and the DT’s smoothness with the total variational (TV) semi-norm to efficiently expedite DWI reconstruction. Huang et al.35 utilised a local low-rank model and 3D TV constraints to reconstruct the DWIs from undersampled k-space measurements. Teh et al.36 introduced a directed TV-based method for DWI images reconstruction, applying the information on the position and orientation of edges in the reference image.

In addition to these major technical routes, Liu et al.37 explored deep learning-based image synthetics for inter-directional DWIs generation. The true b0 and 6 DWIs were concatenated with the generated data and passed to the CNN-based tensor fitting network.

Deep learning-based reconstruction

The aim of MRI reconstruction is to recover the image of interest \({\textbf{x}} \in {\mathbb {C}}^n\) from the undersampled k-space measurement \({\textbf{y}} \in {\mathbb {C}}^m\), which is mathematically described as an inverse problem:

in which the degradation matrix \({\textbf{A}} \in {\mathbb {C}}^{m \times n}\) can be further presented as the combination of the undersampling trajectory \({\textbf{M}} \in {\mathbb {C}}^{m \times n}\), discrete Fourier transform matrix \(\boldsymbol{\mathcal{F}}\in {\mathbb {C}}^{n \times n}\) and a diagonal matrix that denotes coil sensitivity maps \({\textbf{S}} \in {\mathbb {C}}^{n \times n}\). \(\lambda\) is the coefficient that balances regularisation term \({\mathcal {R}}({\textbf{x}})\).

Deep learning technique has been widely used for MRI reconstruction, among which the three most representative methods are (1) algorithm unrolling models14,15,16, (2) enhancement-based models17,18,19,20, and the emerging (3) generative models21,22,23.

Algorithm unrolling models typically integrate neural networks with traditional CS algorithms, simulating the iterative reconstruction algorithms through learnable iterative blocks12. Yang et al.14 reformulated an Alternating Direction Method of Multipliers (ADMM) algorithm to a multi-stage deep architecture, namely Deep-ADMM-Net, for MRI reconstruction, of which each stage corresponds to an iteration in traditional ADMM algorithm. Some algorithm unrolling-based models defined the regulariser with the denoising residual of a deep denoiser15,16, where Eq. (1) can be reformulated as:

in which \({\textbf{f}}_{\theta }(\cdot )\) is a deep neural network and \({\textbf{x}}_u\) is the undersampled zero-filled images (ZF). Schlemper et al.15 designed a deep cascade of CNNs for cardiac cine reconstruction, in which a spatio-temporal correlations can be also efficiently learned via the data sharing approach. Aggarwal et al.16 proposed a model-based deep learning method, namely MoDL, which exploited a CNN-based regularisation prior with a conjugate gradient-based data consistency (DC) for MRI reconstruction.

Enhancement-based models typically train a deep neural network \({\textbf{f}}_{\theta }(\cdot )\) that directly maps the undersampled k-space measurement \({\textbf{y}}\) or zero-filled images \({\textbf{x}}_u\), to fully-sampled images \({\hat{\textbf{x}}}\) or its residual in an end-to-end manner, which can be formulated as \({\hat{\textbf{x}}} = {\textbf{f}}_{\theta }({\textbf{x}}_u)\) or \({\hat{\textbf{x}}} = {\textbf{f}}_{\theta }({\textbf{x}}_u) + {\textbf{x}}_u\). Hyun et al.17 introduced a CNN-based U-Net for MRI reconstruction. Feng et al.18 exploited the task-specific novel cross-attention and designed an end-to-end Transformer-based model for jointly MRI reconstruction and super-resolution. Huang et al.20 explored the graph representation and the non-Euclidean relationship for MR images, and designed a Vision Graph Neural Network-based U-Net for fast MRI.

Generative models for solving inverse problems represent an emerging and rapidly evolving field of data-driven models. In the area of MRI reconstruction, various models and techniques have been found and achieved promising performance21,22,23,38. Generative models usually focus on learning the true data distribution, instead of heavily replying upon the regularisation term or directly learning an inverse mapping39. For example, Variational Autoencoders40 explicitly learn the data distribution by utilising variational inference to approximate the posterior distribution of latent variables, given the observed data. Generative Adversarial Networks (GANs)41 are able to learn the data distribution implicitly by minimising a statistical discrepancy between the true distribution and the model distribution in a max-min game. Diffusion models42 approximate the gradient of the log probability density of the data, focusing on the score function rather than the density itself39. In the context of MRI reconstruction, Yang et al.21 proposed a de-aliasing Generative Adversarial Networks for MRI reconstruction, in which the U-Net-based generator produced the estimated fully-sampled MRI images in an end-to-end manner. Chung et al.22 introduced a score-based diffusion model for MRI reconstruction with an arbitrary undersampling rate, taking advantage of unconditional training strategy and data consistency conditioning at the inference stage.

Deep learning community constantly provides a wide range of novel and powerful network structures for these MRI reconstruction methods, including CNNs15,17,21,43, Recurrent Neural Networks44,45, Graph Neural Networks20, recently thriving Transformers18,19,46,47, etc. These rapidly evolving deep learning-based networks enable advances in MRI reconstruction.

Methodology

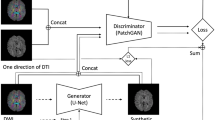

In this study, we implemented three deep learning-based MRI reconstruction methods, namely, DAGAN21, D5C515 and SwinMR19, and assessed their performance on a cDTI dataset. The overall data flow is depicted in Fig. 1.

The data flow of our implementation for cardiac diffusion tensor imaging data. The whole procedure consists (A) data acquisition, (B) data pre-processing, (C) deep learning-based reconstruction, and (D) data post-processing. It is noted that D5C5 does not require the cropping and pasting step and additionally takes the undersampled k-space data and the corresponding undersampling mask as input.

Data acquisition

All data used in this study were approved by the National Research Ethics committee, Bloomsbury with reference number 13/LO/1830, REC reference 10/H0701/112, and IRAS reference number 33773. The study adheres to the principles of the Declaration of Helsinki and the UK Research Governance Framework version 2. All participants provided informed written consent.

Retrospectively acquired cDTI data were acquired using Siemens Skyra 3T MRI scanner and Siemens Vida 3T MRI scanner (Siemens AG, Erlangen, Germany). A diffusion-weighted stimulated echo acquisition mode (STEAM) SS-EPI sequence with reduced phase field-of-view and fat saturation was used. Some MR sequence parameters are listed: \(\text {TR} = 2 \ \text {RR intervals}\); \(\text {TE} = 23 \ \text {ms}\); mSENSE or GRAPPA with \(\text {AF} = 2\); echo train duration \(= 13 \ \text {ms}\); spatial resolution \(= 2.8 \times 2.8 \times 8.0 \ \text {mm}^\text {3}\). Diffusion-weighted images were encoded in six directions with diffusion-weighted of \(\text {b} = 150 \ \text {and} \ 600 \ \text {sec/mm}^\text {2}\) (namely b150 and b600) in a short-axis mid-ventricular slice. Reference images, namely b0, were also acquired with a minor diffusion weighting.

We used 481 cDTI cases including 2 cardiac phases, i.e., diastole (\(n = 232\)) and systole (\(n = 249\)), for the experiments section. The dataset contains 241 healthy cases, 31 amyloidosis (AMYLOID) cases, 47 dilated cardiomyopathy (DCM) cases, 35 in-recovery DCM (rDCM) cases, 39 hypertrophic cardiomyopathy (HCM) cases, 48 HCM genotype-positive-phenotype-negative (HCM G+P-) cases, and 40 acute myocardial infarction (MI) cases. The overall data distribution of our dataset is shown in Table 1. The detailed data distribution per cohort and cardiac phase can be found in Supplementary (Supp.) Table S4.

This work separately discussed the reconstruction of systole and diastole cases. For each deep learning-based methods, two network weights were trained for either systole or diastole reconstruction. In the training stage, we applied a 5-fold-cross-validation strategy, using 169 diastole cases (TrainVal-D) or 183 systole cases (TrainVal-S). In the testing stage, four testing sets were utilised, including a mixed ordinary testing set with diastole cases (Test-D) or systole cases (Test-S) and an out-of-distribution MI testing set with diastole cases (Test-MI-D) or systole cases (Test-MI-S). According to Supp. Table S4, Test-D and Test-S include the data of Health, AMYLOID, rDCM, DCM, HCM and HCM G+P-, which are also included in the TrainVal. For further examining the model robustness and ability to handle out-of-the-distribution data, Test-MI dataset includes only MI cases, which were ‘invisible’ for models during the training stage.

Data pre-processing

In the data pre-processing stage, all DWIs (b0, b150 and b600) were processed following the same protocol.

The pixel intensity ranges of DWIs vary considerably across different b-values. To address this, We normalised all DWIs in the dataset to a pixel intensity range of \(0 \sim 1\) using the max-min method, while the maximum and minimum pixel values of all DWIs were recorded for the pixel intensity range recovery at the beginning of the data post-processing stage.

In our dataset, the majority of DWIs have a resolution of \(256 \times 96\), while a small subset of 2D slices exhibit a resolution of \(256 \times 88\). red In order to standardise the resolution, zero-padding was conducted, turning the images with a resolution of \(256 \times 88\) to a resolution of \(256 \times 96\).

GRAPPA-like Cartesian k-space undersampling masks with AF \(\times 2\), \(\times 4\) and \(\times 8\), generated by the official protocol of fastMRI dataset13, were applied to simulate the k-space undersampling process. Since all the 2D slices have been reconstructed with zero-padding factor of two, the phase encoding (PE) of our undersampling masks was set to 48 instead of 96, for a more realistic simulation. The undersampling masks were then zero-padded from \(128 \times 48\) to \(256 \times 96\) as shown in Fig. 1. More details regarding the undersampling masks can be found in Supp. Figure S1.

For DAGAN and SwinMR, DWIs were further cropped to \(96 \times 96\), as both models only support square-shaped input images.

Deep learning-based cardiac diffusion tensor imaging reconstruction

We implemented and evaluated three deep learning-based models, encompassing an algorithm unrolling model, namely D5C515, an enhancement-based model, namely SwinMR19, and a generative model (for solving inverse problem), namely DAGAN21.

DAGAN

DAGAN21 is a conditional GAN-based and CNN-based model designed for general MRI reconstruction, of which the model structure is presented in Fig. 2. DAGAN comprises two components: a generator and a discriminator, which are trained in an adversarial manner as a two-player game. The generator is a modified CNN-based U-Net31 with a residual connection48, which takes the ZF MR image as input and aims to produce the reconstructed MR image as close as possible to the ground truth image (GT). The discriminator is a standard CNN-based classifier that attempts to distinguish the ‘fake’ reconstructed MR images generated by the generator, from the ground truth MR images.

DAGAN can be trained with a hybrid loss function including an image space L2 loss, a frequency space L2 loss, a perceptual L2 loss based on a pre-trained VGG49, as well as an adversarial loss41 The generator and discriminator were alternatively updated in the training stage, while in the inference stage, only the generator was applied. To adapt DAGAN to the cDTI dataset, except for changing the resolution, we reduced the convolution kernel size of the generator and the discriminator, to lighten the network size, while ensuring the performance. Further implementation details can be found in Supp. Table S1.

The model architecture of DAGAN. (A) the generator of DAGAN is a modified Convolutional Neural Network (CNN)-based U-Net with a residual connection; (B) the discriminator of DAGAN is a standard CNN-based classifier. Conv2D: 2D convolutional layer; Recon: reconstructed MR images; GT: ground truth MR images.

D5C5

D5C515 is a CNN-based model for MRI reconstruction, with its model structure presented in Fig. 3. D5C5 takes the undersampled k-space measurement as well as the ZF MR image as the input, and outputs the reconstructed MR image. It is composed of multiple stages, each comprising a CNN block and a DC layer. The CNN block contains a cascade of convolutional layers with Rectified Linear Units (ReLU) for feature extraction, an optional data sharing (DS) layer for learning spatio-temporal features, as well as a residual connection48. The DC layer takes a linear combination between the output of the CNN block and the undersampled k-space data, enforcing the consistency between the prediction of CNNs and the original k-space measurement.

Although D5C5 is built in an iterative style, similar to other unrolling models, it can be trained in an end-to-end manner, using an image space L2 loss function. To adapt D5C5 for our experiment, we removed the DS module in each stage, since D5C5 was originally proposed for cine MRI reconstruction. Further implementation details can be found in Supp. Table S2.

(A) The model architecture of D5C5. D5C5 has five stages, each comprising a Convolutional Neural Network block (CNN Block) and a data consistency layer (DC). (B) The structure of the CNN Block. One optional data sharing module (DS) and five convolutional layers (Conv Layers) are included in the CNN Block. (C) The structure of the DC. \({\mathcal {M}}\) denotes the undersampling mask, and \(\overline{{\mathcal {M}}} = {\mathcal {I}} - {\mathcal {M}}\). \({\mathcal {F}}\) and \({\mathcal {F}}^{-1}\) denote the Fourier and inverse Fourier transform. \(\lambda\) is an adjustable coefficient controlling the level of DC. It is noted that DS module is not applied for our task.

SwinMR

SwinMR19 is a Swin Transformer-based model for MRI reconstruction, with its model structure shown in Fig. 4. SwinMR takes the ZF MR image as the input and directly outputs the reconstructed image. SwinMR is composed of a CNN-based input module and output module for projecting between the image space and the latent space, a cascade of residual Swin Transformer blocks (RSTBs), and a convolution layer with a residual connection for feature extraction. A patch embedding and a patch unembedding layer are placed at the beginning and end of each RSTB, facilitating the inter-conversion of feature maps and sequences, since the computation of Transformers is based on sequences. Multiple standard Swin Transformer layers (STLs)50 and a single convolutional layer are applied between the patch embedding and unembedding layer.

SwinMR can be trained end-to-end with a hybrid loss function consisting of an image space Charbonnier loss, a frequency space Charbonnier loss, a perceptual L1 loss based on a pre-trained VGG49. Further implementation details can be found in Supp. Table S3.

(A) The model architecture of SwinMR. (B) The structure of the residual Swin Transformer block (RSTB). (C) The structure of the Swin Transformer layer (STL). Conv2D: 2D convolutional layer. MLP: multi-layer perceptron. LN: layer normalisation. Q: query; K: key; V: value. MSA: multi-head self-attention.

Data post-processing

We applied our in-house developed software (MATLAB 2021b, MathWorks, Natick, MA) for cDTI post-processing, following the protocol described in1,24. The post-process procedure for reference data includes: (1) manual removal of low-quality DWIs; (2) DWI registration; (3) semi-manual segmentation for left ventricle (LV) myocardium; (4) DT calculation via the LLS fit; (5) DT parameter calculation including FA, MD, HA and E2A. The initial post-processing of reference data was performed by either Z.K. (7 years of experience), R.R. (3 years of experience) or M.D. (2 years of experience), and subsequently reviewed by P.F. (10 years of experience).

For the post-processing of deep learning-based reconstruction results, the output (\(96 \times 96\)) of DAGAN and SwinMR were ‘pasted’ back to the corresponding zero-filled images (\(256 \times 96\)) at their original position. (This process does not affect the final post-processing results since the region of interest is set in the central \(96 \times 96\) area).

All the DWIs were ‘anti-normalised’ (pixel value range recovery) to their original pixel intensity range using the maximum and minimum values recorded in the pre-processing stage.

The reconstruction results were arranged to construct a new reconstruction dataset with the same structure as the reference dataset. The reconstructed dataset was then automatically post-processed following the configuration of the reference data (e.g., low-quality removal information, registration shifting, segmentation masks) for a fair comparison.

Experiments and results

In this section, the experimental results are presented from three perspectives: (1) the quality of DWI reconstruction, (2) the quality of DT parameter estimation and (3) the assessment of computational cost.

Reconstruction quality assessment

In this study, four metrics were considered to assess the reconstruction quality. Peak Signal-to-Noise Ratio (PSNR) is a simple and commonly used metric for measuring the reconstruction quality, which measures the ratio of the maximum possible power of a signal to the power of corrupting noise. A higher PSNR value indicates a better reconstruction quality. Structural Similarity Index (SSIM) is a perceptual-based metric that measures the similarity between two images by comparing their structural information. A higher SSIM value indicates a better reconstruction quality. Learned Perceptual Image Patch Similarity (LPIPS)51 is a learned metric that measures the perceptual similarity between two images by computing the distance in the latent space using a pre-trained deep neural network. LPIPS has shown a high correlation with human perceptual judgements of the image similarity. A lower LPIPS value indicates a better generated images quality. Fréchet Inception Distance (FID)52 is a learned metric that measures the similarity between two sets of images by comparing their feature statistics, using a pre-trained deep neural network. FID has also shown to have high correlation with human perceptual experience. A lower FID value indicates a better generated images quality.

The visualised samples of the reconstruction on Test-S and Test-D with the undersampling masks of AF \(\times 2\), \(\times 4\) and \(\times 8\). Odd Rows: the ground truth (GT), undersampled k-space zero-filled images (ZF) and the reconstruction results of DAGAN, D5C5 and SwinMR. Even Rows: the corresponding difference (\(\times 10\)) of ZF and the reconstruction results of DAGAN, D5C5 and SwinMR. Row 1–2: AF \(\times 2\); Row 3–4: AF \(\times 4\); Row 5–6: AF \(\times 8\); Col 1–5: the results on testing set Test-S; Col 6–10: the results on testing set Test-D.

Quantitative reconstruction results on the Test-S and Test-D datasets are presented in Table 2. A two-sample t-test was applied for the statistical analysis, and \(^{\star }\) in Table 2 indicates that the specific result distribution is significantly different (\(p < 0.05\)) from the best-resulting distribution. Among the evaluated models, D5C5 demonstrates superior fidelity in the reconstruction, while SwinMR provides results with higher perceptual score.

Visualised samples of the reconstruction results on Test-S and Test-D datasets are shown in Fig. 5.

Diffusion tensor parameter quality assessment

We further evaluated the quality of DT parameters, including FA, MD, E2A and HA, after post-processing.

Differences in DT parameter global mean values between the reference and reconstruction, on systole testing sets (Test-S and Test-MI-S) and diastole testing sets (Test-D and Test-MI-D) are presented in Table 3 and Supp. Table S6, respectively. The mean absolute error for FA, MD and the mean absolute angular error for the HA gradient (HA Slope) and E2A were employed to quantify the difference. The Mann–Whitney test was utilised for the statistical analysis, and \(^{\star }\) in Table 3 and Supp. Table S6 indicates that the specific error distribution is significantly different (\(p < 0.05\)) from the best-resulting distribution. Data point with a green background indicates that the specific distribution of corresponding DT parameter global mean values is NOT significantly different (\(p > 0.05\)) from the reference distribution according to the Mann–Whitney Test.

Overall, SwinMR achieved better or comparable (not significantly different) MD, HA slope and E2A results on all testing sets. DAGAN achieved better or comparable (not significantly different) FA results on all testing sets. D5C5 provided better results only on Test-S at AF \(\times 2\), but it is not significantly better than SwinMR (on MD, HA Slope and E2A) and DAGAN (on FA).

Some cases of visualised DT parameter maps are presented in this study, including FA, MD, HA and absolute value of E2A (|E2A|). The DT parameter maps of a systole healthy case from Test-S with different AFs are shown in Fig. 6 (AF \(\times 2\)), Fig. 7 (AF \(\times 4\)), and Fig. 8 (AF \(\times 8\)). The DT parameter maps of a diastole healthy case from Test-D with different AFs are shown in Figure S2 (AF \(\times 2\)), Figure S3 (AF \(\times 4\)), and Figure S4 (AF \(\times 8\)) in Supplementary. The DT parameter maps of a systole MI case from Test-MI-S with different AFs are shown in Figure S5 (AF \(\times 2\)), Figure S6 (AF \(\times 4\)), and Figure S7 (AF \(\times 8\)) in Supplementary. The DT parameter maps of a diastole MI case from Test-MI-D with different AFs are shown in Figure S8 (AF \(\times 2\)), Figure S9 (AF \(\times 4\)), and Figure S10 (AF \(\times 8\)) in Supplementary.

Computational cost assessment

In this section, we provided the computational cost information for these deep learning-based reconstruction models. In the training stage, D5C515 and DAGAN21 were trained on one NVIDIA RTX 3090 GPU with 24 GB GPU memory, and SwinMR19 was trained on two NVIDIA RTX 3090 GPUs. In the inference stage, all three models were tested on an NVIDIA RTX 3090 GPU (or an Intel Core i9-10980XE CPU). We recorded the inference time on GPU/CPU, the inference memory usage and the number of parameters (#PARAMs) to measure the computational cost. The inference time and memory usage were measured using an input of \(96 \times 96 \times 1\) DWI, averaged over ten repetitions. The memory usage was the maximum usage during inference, measured by ‘nvidia-smi’. The full analysis table can be found in Supp. Table S5.

Discussion

Cardiac DTI has demonstrated significant potential to provide insights into myocardial microstructure and impact the clinical diagnosis of cardiac diseases. However, its use is currently limited to clinical research settings, due to its low SNR and prolonged scanning time. Deep learning-based fast MRI is an emerging technique that can considerably accelerate the imaging readout. This technique directly contributes to reducing image artefacts, including EPI image distortions and cardiac motion artefacts. Additionally, it can be used to increase image spatial resolution, and potentially reduce the total scan time, as fewer repetitions may be needed.

In this study, we have investigated the performance of deep learning-based methods in the context of cDTI reconstruction. We have implemented three deep learning-based MRI reconstruction methods, namely DAGAN21, D5C515 and SwinMR19, on our cDTI dataset. For the principles for the model selection, to best meet the diversity of the research subjects, we have selected one representative model from each of three different types of MRI reconstruction models. From the perspective of network structure, both CNNs and Transformers have been included. It is noted that we do not consider those multi-coil (or sensitivity maps required) models since our experiments are based on the single-channel magnitude data and retrospective k-space undersampling. Experimental results have been reported from the perspective of reconstruction quality assessment and DT estimation quality assessments.

According to Table 2, in the reconstruction tasks with undersampling masks of AF \(\times 2\) and AF \(\times 4\), D5C5 has achieved superior PSNR and SSIM, while SwinMR has achieved better deep learning-based perceptual scores, i.e., LPIPS and FID. In the reconstruction tasks at AF \(\times 8\), SwinMR has outperformed other methods across all the metrics applied.

According to Fig. 5, in the reconstruction tasks of AF \(\times 2\), all three methods have produced fairly good visual reconstruction results. In the reconstruction tasks of AF \(\times 4\), all three methods have successfully recovered overall structure information, whereas they have behaved differently in the recovery of the high-uncertainty area. For example in the experiment on Test-S at AF \(\times 4\) (Row 3–4, Col 1–5, Fig. 5), the red arrows indicate the high-uncertainty area on the LV myocardium due to the signal loss. DAGAN has provided a noisy estimation while SwinMR has clearly preserved this part of information. However, the results of D5C5 have missed the information in this area.

In reconstruction tasks of AF \(\times 8\), neither of three methods has successfully produced visually satisfied reconstruction results. For example in the experiment on Test-S with AF \(\times 8\) (Row 5–6, Col 1–5, Fig. 5), a large amount of visible aliasing artefacts along the PE direction has remained in the reconstruction results of both D5C5 and SwinMR, with D5C5 performing relatively worse than SwinMR. DAGAN, to some extent, has eliminated the aliasing artefacts at the expense of the increased noise, leading to a low-SNR reconstruction. Regarding the recovery of high-uncertainty area (green arrow), D5C5 has reconstructed myocardium severely affected by aliasing artefacts, but has not introduced significant hallucination. DAGAN has produced reconstruction with low SNR and tried to ‘in-paint’ the missing myocardium guided by its prior knowledge. We believe that this phenomenon is partly caused by the adversarial learning mechanism due to the tendency of GAN-based methods to introduce noise and artefacts, which has been reported in previous researches38,53. SwinMR has retained most information of the myocardium, whereas the reconstruction has severely affected by the hallucination. Hallucination is usually defined as the artefacts or incorrect features that occur due to the prior that cannot be produced from the measurements54. Based on the empirically observation, we have found such hallucination was getting stronger at high AF (difficult tasks), or when using a powerful and ‘stronger-prior’ model, for example, Transformers (SwinMR) or generative models (DAGAN).

Regarding the fidelity of the reconstruction, D5C5 has shown superiority on the condition of a relative lower AF, whereas this superiority has been observed disappearance on the condition of a relative higher AF. This phenomenon is due to the utilisation of DC module in D5C5 (Fig. 3), which combines the k-space measurements information with the CNN estimation to keep the consistency. According to Supp. Figure S1, in the reconstruction task at a relative lower AF, a large proportion of information in the final output of D5C5 is provided by the DC module, whereas this proportion is significantly decreased in a relative higher AF reconstruction task (AF \(\times 8\)). Therefore, this kind of unrolling-based methods with DC module is more suitable for the reconstruction at a relative lower AF.

For the perceptual score of the reconstructions, experiments have shown SwinMR outperforms D5C5 and DAGAN on metrics LPIPS and FID. However, even though the perceptual score has a high correlation with the observation of human, it is not always equivalent to a better reconstruction quality38. According to Fig. 5 (green arrow), SwinMR has learnt to estimate a ‘fake’ reconstruction detail (hallucination) for a higher perceptual score, which is totally unacceptable and dangerous for clinical use. We believe this phenomenon is caused by the nature of the Transformer applied in SwinMR, which is powerful enough to estimate and generate details that does not exist originally. In addition, the utilisation of the perceptual VGG-based loss restricts SwinMR to produce more perceptual-similar reconstruction instead of pixel-wise-similar reconstruction.

In general, the differences in tensor parameter global mean values between reference and reconstruction results tended to increase as the AF rises. Regarding the global mean values of FA, DAGAN has demonstrated superiority on the Test-S and Test-MI-S, with its superiority growing as the AF increases. On the Test-D and Test-MI-D, the three methods have yielded similar results, with no statistically significant difference observed. Regarding the global mean values of MD, D5C5 and SwinMR have outperformed DAGAN across all the testing sets. Specifically, D5C5 has delivered better results on Test-S, while SwinMR has excelled on Test-MI-S. On the Test-D and Test-MI-D, SwinMR and D5C5 have achieved similar results with no statistical difference at AF \(\times 2\) and AF \(\times 4\), while SwinMR has surpassed D5C5 at a higher AF (AF \(\times 8\)). For the global mean values of HA Slope, it is clear that SwinMR has outperformed DAGAN and D5C5 on all testing sets, with its superiority being statistically significant on Test-S and Test-D. In terms of the global mean values of E2A, generally, SwinMR has achieved better or comparable results among the three methods, but the differences are typically not statistically significant.

Generally, the quality of DT parameter maps has decreased as the AF increases. We believe that at AF \(\times 2\) and AF \(\times 4\), the DT parameter maps calculated by these three methods can achieve similar level with the reference. For the MI cases from out-of-the-distribution testing set Test-MI, these three methods can successfully preserve the information in lesion area for clinical use. For example at AF \(\times 2\), all three methods have provided visually similar DT parameters maps with the reference (Fig. 6 and Supp. Figure S5). At AF \(\times 4\), all three methods can recover most information of the DT parameters maps. DAGAN has produced produce noisier DT parameter maps, while SwinMR and D5C5 have produced the smoother DT parameter maps, which matches the results from the reconstruction quality assessment. It can be observed that, from the MD map and its corresponding error map, the vertical aliasing (along PE) direction has affected the DT parameter maps (Fig. 7, red arrows). The intensity of the MI area in the MD map of DAGAN has had a trend to decrease, while D5C5 and SwinMR has clearly preserved it (Supp. Figure S1, red arrows).

However, at AF \(\times 8\), the quality of DT parameter maps has been significantly worsened, which also matches the results from the reconstruction quality assessment. For the FA map, a band of higher FA is expected to be observed in the mesocardium for a healthy heart55. However, DAGAN and D5C5 have failed to recover the band of higher FA, where DAGAN has produced very noisy FA map, and D5C5 over-smoothed the FA map and wrongly estimate a highlight area (Supp. Figure S7, blue arrows). For the MD map, the affect from the aliasing observed at AF \(\times 4\), has become more severe. In the healthy case, the highlight area has wrongly appeared in MD maps from all three methods, which is unacceptable for clinical use and may lead to misdiagnosis (Fig. 8, red arrows). In the MI case, the MI lesion area tended to decrease for all the methods, especially for the results of DAGAN, where the lesion area has nearly disappeared (Supp. Figure S7, red arrows). For the HA map, it can be observed that SwinMR can produce relatively smooth HA map, while DAGAN can only reconstruct very noisy one. However, the direction of HA has been wrong estimated in the epicardium of the healthy case (Fig. 8, green arrows). This is not acceptable for clinical use and more likely to lead to misdiagnosis such as MI. For the |E2A| map, DAGAN tended to reconstruct a noisy map, while SwinMR tended to produce a smooth map. All three methods can produce similar results with reference even at AF \(\times 8\).

Through our experiments, we have demonstrated that the models discussed in this paper can be effectively applied for clinical use at AF \(\times 2\) and AF \(\times 4\). However, at AF \(\times 8\), the performance of these three models, including the best-performing SwinMR, has still remained limited.

We have also provided the computational cost analysis for these models (Supp. Table S5). The generator of DAGAN and SwinMR follow a non-iterative style, where DAGAN has achieved the faster inference time but a larger number of parameters, while SwinMR has longer inference time, but smaller number of parameters. This is because a large portion of operations in SwinMR are based on vector multiplication (multi-head self-attention), which are non-parametric, whereas the generator of DAGAN mostly relies on convolution and de-convolution. D5C5, as an algorithm unrolling model, is highly efficient in terms of parameters and memory usage. However, it has a longer inference time compared to DAGAN, due to its iterative style. (Although it is an end-to-end model, modules are arranged iteratively.) It is noted that D5C5 was implemented by Python library ‘Theano’, while DAGAN and SwinMR were implemented by Python library ‘PyTorch’. There might be biases in the measurement of inference time and memory usage due to different libraries.

We hope that this study will serve as a baseline for the future cDTI reconstruction model development. Our findings have indicated that there are still limitations when directly applying these general MRI reconstruction methods for cDTI reconstruction.

There is an absence of restrictions on diffusion. The loss functions utilised in the three models discussed in this study all rely on image domain loss, with D5C5 and DAGAN additionally incorporating the frequency domain loss and the perceptual loss. In other words, there is no diffusion information restriction implemented during the model training stage. For further studies, the diffusion tensor or parameter maps can be jointly considered into the loss function. For example, the utilisation of physics-informed loss is a potential solution for the restriction; a pre-trained neural network mapping from DWIs to DT may help to build the supervised loss for DWI reconstruction, by minimising the distance in ‘diffusion tensor’ space. Moreover, physical constraints on diffusion can be incorporated into the network. For example, the physics information like b-value, diffusion direction, can be fused into the network by a vector-based or a prompt-based embedding.

There is a trade-off between perceptual performance and quantitative performance. Cardiac diffusion tensor MRI is a quantitative technique, which places greater emphasis on contrast, pixel intensity range, and pixel-wise fidelity, also referred to as pixel-wise distance. The microstructural organisation of myocardium revealed by cDTI is sensitive to the pixel intensity of DWIs, which represents the true physiological conditions. Even minor discrepancies can lead to significant errors in interpreting the arrangement of myocardial fibres. However, the models discussed in this study were originally designed for structural MRI. Especially for DAGAN and SwinMR, they tended to pay more attention on the ‘perceptual-similarity’, which can be regarded as the latent space distance. Specifically, the perceptual loss applied for training explicitly restricts the latent space distance, while the adversarial learning mechanism implicitly minimises a statistical discrepancy between two distributions in latent space. A trade-off exists between pixel-wise fidelity and perceptual-similarity56. For example, blurred images generally exhibit better pixel-wise fidelity, while the images with clear but ‘fake’ details tend to have better perceptual-similarity38. This trade-off is observable in the visualised examples provided in Supp. Figure S11.

From another perspective, such ‘fake’ details with high perceptual score but low fidelity can be viewed as hallucinations, which are harmful for clinical use54. Consequently, for further studies, more efforts should be made to consider how to improve the pixel-wise fidelity rather than the perceptual-similarity, or how to prevent the appearance of the ‘fake’ information. We have found more and more researchers in the computer vision community tended to exploit larger and deeper network backbones and leverage emerging generative models for solving inverse problem including MRI reconstruction22. Concurrently, it is becoming essential and urgent to mitigate the incidence of hallucinations, potentially through updating the network structure, incorporating new restrictions, or adopting novel training strategies.

There is a gap between current DT evaluation methods and the true quality of cDTI reconstruction. This study has revealed that the global mean value of diffusion parameters is not always accurate or sensitive enough to evaluate the diffusion tensor quality. For example, Table 3 indicates no statistically significant difference in MD between reconstruction results (even including ZF) and the reference on Test-S, whereas the Fig. 8 shows that the MD maps are entirely unacceptable. This discrepancy arises because the MD value increases and decreases in different parts of the MD map, while the global mean value maintains relative consistency, rendering the global mean MD ineffective in reflecting the quality of the final DT estimation. For future work, in addition to the visualised assessment, we will applied the down-stream task assessment, e.g., utilising a pre-trained pathology classification or detection model to evaluate the reconstruction quality. Theoretically, better classification or detection accuracy corresponds to improved reconstruction results.

There are still limitations for this study. (1) The size of the testing sets is not sufficiently large. The relatively small testing sets enlarge the randomness of experimental results and reduce the reliability of statistical tests. In future studies, we will expand our dataset, especially for the patient data. According to Supp. Table S4, compared to the healthy volunteers, samples from patients in our dataset are insufficient and unbalanced. The lack of patient samples leads to the model’s inability to correctly recover the pathology information, which also results in errors in subsequent DT estimation. With sufficient patient samples, we will consider incorporating pathology information into the reconstruction model, allowing accurate DWI reconstruction and further DT estimation for different types of cardiac diseases. (2) Our simulation experiment is based on the retrospective k-space undersampling on single-channel magnitude DWIs that have been reconstructed by the MR scanner software. However, the raw data acquired from the scanner, prior to reconstruction, is typically multi-channel complex-value data in k-space. The retrospective undersampling step itself removed a large amount of high-frequency noise, leading to unrealistic post-processing results. Additionally, our experiment involves retrospective undersampling using simulation-based GRAPPA-like Cartesian k-space undersampling masks (Supp. Figure S1), which are inconsistent with the equal-spaced readout used in the scanner. In future studies, we aim to conduct our experiment on prospectively acquired multi-channel k-space raw data.

Conclusion

In conclusion, we have investigated the application of deep learning-based methods for accelerating cDTI reconstruction, which has significant potential for improving the integration of cDTI into routine clinical practice. Our study focused on three different models, namely D5C5, DAGAN, and SwinMR, which have been evaluated on the cDTI dataset with the AF of \(\times 2\), \(\times 4\), and \(\times 8\). The results have demonstrated that the examined models can be effectively used for clinical use at AF \(\times 2\) and AF \(\times 4\), with SwinMR being the recommended optimal approach. However, at AF \(\times 8\), the performance of all models has remained limited, and further research is required to improve their performance at a relative higher AF.

Data availability

The data that support the findings of this study are available from Imperial College London but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are however available from the authors upon reasonable request and with permission of Imperial College London.

References

Ferreira, P. F. et al. In vivo cardiovascular magnetic resonance diffusion tensor imaging shows evidence of abnormal myocardial laminar orientations and mobility in hypertrophic cardiomyopathy. J. Cardiovasc. Magn. Resonan. 16, 1. https://doi.org/10.1186/s12968-014-0087-8 (2014).

Nielles-Vallespin, S. et al. Assessment of myocardial microstructural dynamics by in vivo diffusion tensor cardiac magnetic resonance. J. Am. Coll. Cardiol. 69, 661–676. https://doi.org/10.1016/j.jacc.2016.11.051 (2017).

Khalique, Z. et al. Diffusion tensor cardiovascular magnetic resonance imaging: A clinical perspective. JACC Cardiovasc. Imaging 13, 1235–1255. https://doi.org/10.1016/j.jcmg.2019.07.016 (2020).

Joy, G. et al. Microstructural and microvascular phenotype of sarcomere mutation carriers and overt hypertrophic cardiomyopathy. Circulation 148, 808–818. https://doi.org/10.1161/CIRCULATIONAHA.123.063835 (2023).

Das, A. et al. Pathophysiology of LV remodeling following STEMI. JACC Cardiovasc. Imaging 16, 159–171. https://doi.org/10.1016/j.jcmg.2022.04.002 (2023).

Sharrack, N. et al. The relationship between myocardial microstructure and strain in chronic infarction using cardiovascular magnetic resonance diffusion tensor imaging and feature tracking. J. Cardiovasc. Magn. Reson. 24, 66. https://doi.org/10.1186/s12968-022-00892-y (2022).

Basser, P. J. Inferring Microstructural Features and the physiological state of tissues from diffusion-weighted images. NMR Biomed. 8, 333–344. https://doi.org/10.1002/nbm.1940080707 (1995).

Scott, A. D. et al. The effects of noise in cardiac diffusion tensor imaging and the benefits of averaging complex data. NMR Biomed. 29, 588–599. https://doi.org/10.1002/nbm.3500 (2016).

Ma, S. et al. Accelerated cardiac diffusion tensor imaging using joint low-rank and sparsity constraints. IEEE Trans. Biomed. Eng. 65, 2219–2230. https://doi.org/10.1109/TBME.2017.2787111 (2018).

Shen, D., Wu, G. & Suk, H.-I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 19, 221–248. https://doi.org/10.1146/annurev-bioeng-071516-044442 (2017).

Chandra, S. S. et al. Deep learning in magnetic resonance image reconstruction. J. Med. Imaging Radiat. Oncol. 65, 564–577. https://doi.org/10.1111/1754-9485.13276 (2021).

Chen, Y. et al. AI-based reconstruction for fast MRI-A systematic review and meta-analysis. Proc. IEEE 110, 224–245 (2022).

Zbontar, J. et al. FastMRI: An open dataset and benchmarks for accelerated MRI. arXiv e-prints arXiv:1811.08839 (2018).

yang, y., Sun, J., Li, H. & Xu, Z. Deep ADMM-net for compressive sensing MRI. In Advances in Neural Information Processing Systems, vol. 29 (Curran Associates Inc., 2016).

Schlemper, J., Caballero, J., Hajnal, J. V., Price, A. & Rueckert, D. A deep cascade of convolutional neural networks for MR image reconstruction. In Information Processing in Medical Imaging, 647–658 (Springer, Cham, 2017).

Aggarwal, H. K., Mani, M. P. & Jacob, M. MoDL: Model-based deep learning architecture for inverse problems. IEEE Trans. Med. Imaging 38, 394–405 (2019).

Hyun, C. M., Kim, H. P., Lee, S. M., Lee, S. & Seo, J. K. Deep learning for undersampled MRI reconstruction. Phys. Med. Biol. 63, 135007. https://doi.org/10.1088/1361-6560/aac71a (2018).

Feng, C.-M., Yan, Y., Fu, H., Chen, L. & Xu, Y. Task transformer network for joint MRI reconstruction and super-resolution. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2021, 307–317 (Springer, Cham, 2021).

Huang, J. et al. Swin transformer for fast MRI. Neurocomputing 493, 281–304 (2022).

Huang, J., Aviles-Rivero, A. I., Schönlieb, C.-B. & Yang, G. ViGU: Vision GNN U-net for fast MRI. In 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI), 1–5, https://doi.org/10.1109/ISBI53787.2023.10230600 (2023).

Yang, G. et al. DAGAN: Deep de-aliasing generative adversarial networks for fast compressed sensing MRI reconstruction. IEEE Trans. Med. Imaging 37, 1310–1321 (2018).

Chung, H. & Ye, J. C. Score-based diffusion models for accelerated MRI. Med. Image Anal. 80, 102479. https://doi.org/10.1016/j.media.2022.102479 (2022).

Huang, J., Aviles-Rivero, A. I., Schönlieb, C.-B. & Yang, G. Cdiffmr: Can we replace the gaussian noise with k-space undersampling for fast MRI? In Medical Image Computing and Computer Assisted Intervention-MICCAI 2023 (eds Greenspan, H. et al.) 3–12 (Springer, Cham, 2023).

Ferreira, P. F. et al. Accelerating cardiac diffusion tensor imaging with a U-net based model: Toward single breath-hold. J. Magn. Reson. Imaging 56, 1691–1704. https://doi.org/10.1002/jmri.28199 (2022).

Karimi, D. & Gholipour, A. Diffusion tensor estimation with transformer neural networks. Artif. Intell. Med. 130, 102330. https://doi.org/10.1016/j.artmed.2022.102330 (2022).

Aliotta, E., Nourzadeh, H. & Patel, S. H. Extracting diffusion tensor fractional anisotropy and mean diffusivity from 3-direction DWI scans using deep learning. Magn. Reson. Med. 85, 845–854. https://doi.org/10.1002/mrm.28470 (2021).

Li, H. et al. SuperDTI: Ultrafast DTI and Fiber Tractography with Deep Learning. Magn. Reson. Med. 86, 3334–3347. https://doi.org/10.1002/mrm.28937 (2021).

Tian, Q. et al. DeepDTI: High-fidelity six-direction diffusion tensor imaging using deep learning. Neuroimage 219, 117017. https://doi.org/10.1016/j.neuroimage.2020.117017 (2020).

Phipps, K. et al. Accelerated in vivo cardiac diffusion-tensor MRI using residual deep learning-based denoising in participants with obesity. Radiol. Cardiothor. Imaging 3, e200580. https://doi.org/10.1148/ryct.2021200580 (2021).

Tänzer, M. et al. Faster diffusion cardiac MRI with deep learning-based breath hold reduction. In Medical Image Understanding and Analysis, 101–115 (Springer, Cham, 2022).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, 234–241 (Springer, Cham, 2015).

Aliotta, E., Nourzadeh, H., Sanders, J., Muller, D. & Ennis, D. B. Highly accelerated, model-free diffusion tensor MRI reconstruction using neural networks. Med. Phys. 46, 1581–1591. https://doi.org/10.1002/mp.13400 (2019).

Zhu, Y. et al. Direct diffusion tensor estimation using a model-based method with spatial and parametric constraints. Med. Phys. 44, 570–580. https://doi.org/10.1002/mp.12054 (2017).

Chen, G. et al. Angular upsampling in infant diffusion MRI using neighborhood matching in x-q space. Front. Neuroinf.https://doi.org/10.3389/fninf.2018.00057 (2018).

Huang, J., Wang, L., Chu, C., Liu, W. & Zhu, Y. Accelerating cardiac diffusion tensor imaging combining local low-rank and 3D TV constraint. Magn. Reson. Mater. Phys. Biol. Med. 32, 407–422. https://doi.org/10.1007/s10334-019-00747-1 (2019).

Teh, I. et al. Improved compressed sensing and super-resolution of cardiac diffusion MRI with structure-guided total variation. Magn. Reson. Med. 84, 1868–1880. https://doi.org/10.1002/mrm.28245 (2020).

Liu, S. et al. Accelerated cardiac diffusion tensor imaging using deep neural network. Phys. Med. Biol. 68, 025008. https://doi.org/10.1088/1361-6560/acaa86 (2023).

Huang, J., Wu, Y., Wu, H. & Yang, G. Fast MRI reconstruction: How powerful transformers are? In 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), 2066–2070, https://doi.org/10.1109/EMBC48229.2022.9871475 (2022).

Zhao, Z., Ye, J. C. & Bresler, Y. Generative models for inverse imaging problems: From mathematical foundations to physics-driven applications. IEEE Signal Process. Mag. 40, 148–163. https://doi.org/10.1109/MSP.2022.3215282 (2023).

Kingma, D. P. & Welling, M. Auto-encoding variational bayes. arXiv e-prints https://doi.org/10.48550/arXiv.1312.6114.

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM 63, 139–144. https://doi.org/10.1145/3422622 (2020).

Song, Y. et al. Score-based generative modeling through stochastic differential equations. arXiv e-prints arXiv:2011.13456 (2020).

Lee, D., Yoo, J., Tak, S. & Ye, J. C. Deep residual learning for accelerated MRI using magnitude and phase networks. IEEE Trans. Biomed. Eng. 65, 1985–1995. https://doi.org/10.1109/TBME.2018.2821699 (2018).

Guo, P. et al. Over-and-under complete convolutional RNN for MRI reconstruction. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2021, 13–23 (Springer, Cham, 2021).

Chen, E. Z., Wang, P., Chen, X., Chen, T. & Sun, S. Pyramid convolutional RNN for MRI image reconstruction. IEEE Trans. Med. Imaging 41, 2033–2047 (2022).

Huang, J., Xing, X., Gao, Z. & Yang, G. Swin deformable attention U-net transformer (SDAUT) for explainable fast MRI. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2022, 538–548 (Springer, Cham, 2022).

Korkmaz, Y., Dar, S. U. H., Yurt, M., Özbey, M. & Çukur, T. Unsupervised MRI reconstruction via zero-shot learned adversarial transformers. IEEE Trans. Med. Imaging 41, 1747–1763 (2022).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv e-prints https://doi.org/10.48550/arXiv.1409.1556 (2014).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 10012–10022 (2021).

Zhang, R., Isola, P., Efros, A. A., Shechtman, E. & Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 586–595 (2018).

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B. & Hochreiter, S. GANs trained by a two time-scale update rule converge to a local nash equilibrium. Advances in Neural Information Processing Systems30 (2017).

Anwar, S., Khan, S. & Barnes, N. A deep journey into super-resolution: A survey. ACM Comput. Surv. 5, 3. https://doi.org/10.1145/3390462 (2020).

Bhadra, S., Kelkar, V. A., Brooks, F. J. & Anastasio, M. A. On hallucinations in tomographic image reconstruction. IEEE Trans. Med. Imaging 40, 3249–3260. https://doi.org/10.1109/TMI.2021.3077857 (2021).

McGill, L.-A. et al. Heterogeneity of fractional anisotropy and mean diffusivity measurements by in vivo diffusion tensor imaging in normal human hearts. PLoS ONE 10, e0132360 (2015).

Blau, Y. & Michaeli, T. The perception-distortion tradeoff. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018).

Acknowledgements

This study was supported in part by the UKRI Future Leaders Fellowship (MR/V023799/1), BHF (RG/19/1/34160), the ERC IMI (101005122), the H2020 (952172), the MRC (MC/PC/21013), the Royal Society (IEC/NSFC/211235), the NVIDIA Academic Hardware Grant Program, Wellcome Leap Dynamic Resilience, EPSRC (EP/V029428/1, EP/S026045/1, EP/T003553/1, EP/N014588/1, EP/T017961/1), Imperial College London President’s PhD Scholarship and the Cambridge Mathematics of Information in Healthcare Hub (CMIH) Partnership Fund.

Author information

Authors and Affiliations

Contributions

Must include all authors, identified by initials, for example: J.H. and G.Y. conceptualised the study, P.F.F, A.A.R., C.B.S., A.D.S.,S.N.V., and G.Y. guided the methodology development, J.H., P.F.F., S.N.V., and G.Y. conceived the experiments, J.H., P.F.F., L.W., Y.W. and G.Y. conducted the experiments, J.H., P.F.F., L.W., Y.W., S.N.V., and G.Y. analysed the results, Z.K., M.D., R.R., R.D.S., and D.J.P. provided the data including labelling and clinical guidance. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

D.J.P receives research support from Siemens and is a stockholder and director of Cardiovascular Imaging Solutions. The RBH CMR group receives research support from Siemens Healthineers. Others have no Conflicts of Interest.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Huang, J., Ferreira, P.F., Wang, L. et al. Deep learning-based diffusion tensor cardiac magnetic resonance reconstruction: a comparison study. Sci Rep 14, 5658 (2024). https://doi.org/10.1038/s41598-024-55880-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-55880-2

Keywords

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.