Abstract

Perhaps one of the most important open questions in seismology is whether the magnitudes of two successive seismic events are correlated. The answer is considered of fundamental importance because of its potential impact on forecasting models such as the Epidemic Type Aftershock Sequence (ETAS) models. After a meta-analysis of 29 papers, we speculate that, given the lack of studies based on realistic physical models and the possible bias due to events missing from experimental seismic catalogs, important improvements are needed on both fronts before a statistically relevant answer can be provided.


Introduction

Correlation between variables is closely linked to the concept of forecasting. Correlations are useful for prediction even when no causal relationship between the two variables is known, although a better model is often possible if a causal mechanism can be identified. The search for correlations, and possibly causal relationships, between variables is therefore a constant challenge for statisticians. The fields of application are very diverse, ranging from economics, e.g. finance, marketing and risk management1,2, to technical and scientific uses such as seismic forecasting3,4,5. In earthquake occurrence, the question of magnitude correlation is older than is commonly thought, because it is closely related to seismic predictability. More precisely, if the seismic process is described as a Self-Organized Critical (SOC) phenomenon6, an intrinsic unpredictability may be attributed to seismic occurrence. However, the seismological community strongly opposed this hypothesis. In particular, Yang et al.7 showed that the Southern California seismic catalog is not invariant under event reshuffling; therefore, with a good confidence level, the theory that earthquakes are an SOC process could be discarded and could no longer be used as a way to avoid answering the question “can earthquakes be predicted?”. This started a back-and-forth of comments and articles on the subject. Just as the temporal clustering of earthquakes has been confirmed since Omori's observations8, spatial clustering is now well accepted9. In practice, the universally accepted statistical laws have been incorporated into the so-called Epidemic Type Aftershock Sequence (ETAS) model, which represents the gold standard for seismic forecasting and clustering10,11,12,13,14,15,16.
Magnitude correlation is debated not only to understand the physics of the process, but also to establish whether the ETAS model needs further improvement, i.e., whether clustering in magnitude should be implemented. Modifying a forecasting tool as important as the ETAS model can be of vital importance even if it yields only a small improvement in seismic forecasts.
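For reference, the standard temporal ETAS model10 can be written as below (the notation is ours). The first expression is the conditional rate of events at time \(t\) given the history \(\mathcal{H}_t\) of past events, where \(\mu\) is the background rate, \(K\), \(\alpha\), \(c\) and \(p\) are triggering parameters and \(M_c\) is the completeness magnitude; the second is the Gutenberg-Richter magnitude density, with \(\beta = b \ln 10\).

```latex
\lambda(t \mid \mathcal{H}_t) = \mu + \sum_{i:\, t_i < t} \frac{K\, e^{\alpha (m_i - M_c)}}{(t - t_i + c)^{p}},
\qquad
s(m) = \beta\, e^{-\beta (m - M_c)}, \quad m \ge M_c .
```

In the standard model, \(s(m)\) does not depend on \(\mathcal{H}_t\): each magnitude is drawn independently of previous events. Implementing clustering in magnitude amounts to replacing \(s(m)\) with a history-dependent density \(s(m \mid \mathcal{H}_t)\).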

However, studying correlations in seismology is not straightforward. The problem is complicated by the intrinsic incompleteness of experimental catalogs: after a large earthquake, not all events can be recorded because of the overlapping of coda waves. For this reason, seismic catalogs do not contain all the events that actually occur17,18,19,20. In these catalogs, a statistically significant correlation is observed between the magnitudes of subsequent events, and most of the debate focuses precisely on this point: the observed correlations could be a spurious effect of catalog incompleteness. In other words, critics argue that, if the catalog were complete, the observed magnitudes would be independent.

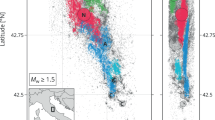

For example, Fig. 1, taken from Davidsen and Green21, shows the quantity \(\delta P(m_0)\) for different magnitude thresholds \(m_{th}\). The function \(\delta P(m_0)\), as defined by Lippiello et al.22, is the difference \(\delta P(m_0) = P(\Delta m < m_0) - P(\Delta m^* < m_0)\), where \(P(\Delta m < m_0)\) is the probability that a pair of subsequent events has a magnitude difference smaller than \(m_0\). Here \(\Delta m\) is the magnitude difference between subsequent events, while \(\Delta m^*\) is the magnitude difference computed with events chosen at random within the catalog; \(\Delta m^*\) is therefore uncorrelated by construction. In the absence of correlations, \(\delta P(m_0)\) should not deviate significantly from 0 for any \(m_0\). Fig. 1 shows that the deviation from 0 grows as \(m_{th}\) decreases; since the catalog becomes more incomplete for smaller values of \(m_{th}\), this trend suggests that the correlation is a direct consequence of catalog incompleteness.
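A minimal sketch of how \(\delta P(m_0)\) can be estimated from a time-ordered list of magnitudes is given below. It is our own illustration, not the code of refs. 21 and 22: we assume \(\Delta m_i = m_{i+1} - m_i\) and build the uncorrelated reference \(\Delta m^*\) by randomly reshuffling the event order, averaged over several permutations. The function name `delta_p` is hypothetical.

```python
import numpy as np

def delta_p(magnitudes, m0_grid, n_shuffles=100, seed=None):
    """Estimate dP(m0) = P(dm < m0) - P(dm* < m0) for a time-ordered magnitude list.

    dm  : m[i+1] - m[i] for subsequent events (sign convention assumed here).
    dm* : the same differences after randomly reshuffling the event order,
          averaged over n_shuffles permutations; reshuffling removes any
          correlation between subsequent magnitudes but keeps their distribution.
    """
    rng = np.random.default_rng(seed)
    m = np.asarray(magnitudes, dtype=float)
    m0 = np.asarray(m0_grid, dtype=float)

    def frac_below(dm):
        # Fraction of differences strictly smaller than each m0 (empirical CDF)
        return np.searchsorted(np.sort(dm), m0, side="left") / dm.size

    p_obs = frac_below(np.diff(m))
    p_ref = np.mean([frac_below(np.diff(rng.permutation(m))) for _ in range(n_shuffles)], axis=0)
    return p_obs - p_ref

# Synthetic check: independent Gutenberg-Richter magnitudes (b = 1) above m_th = 2
rng = np.random.default_rng(0)
m_iid = 2.0 + rng.exponential(1.0 / np.log(10), size=20_000)
grid = np.linspace(-2.0, 2.0, 41)
print(np.abs(delta_p(m_iid, grid, n_shuffles=20, seed=1)).max())
```

For independent magnitudes the printed maximum deviation stays close to 0, as expected in the absence of correlations; applying the same function to a real catalog for several values of \(m_{th}\) would give curves analogous to those in Fig. 1.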

To address short-term aftershock incompleteness, physical models of the Olami-Feder-Christensen (OFC) type were used to produce complete synthetic seismic catalogs23. Dynamical scaling hypotheses were also proposed24,25 to explain the correlation between magnitudes. Their main assumption is that the magnitude difference between two events i and j fixes a characteristic time \(\tau _{ij}\), such that the conditional rate becomes magnitude independent when time is rescaled by \(\tau _{ij}\): \(\rho (m_i(t_i)\,|\,m_j(t_j)) = F\left(\frac{t_i-t_j}{\tau _{ij}}\right)\). In recent years, the studies have branched further: some implement an ETAS model with magnitude correlations and test whether this modified model fits the experimental data better than the original one, while others use alternative methods to establish whether magnitudes are clustered.
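To make the notion of an OFC-like model concrete, below is a minimal sketch of the original Olami-Feder-Christensen cellular automaton (uniform drive, open boundaries, nonconservative parameter \(\alpha < 1/4\)). It is a textbook version written for illustration, not the code or the specific variant used in ref. 23; the lattice size, \(\alpha\), the function name `ofc_avalanche_sizes` and the size-to-magnitude mapping \(m = \log_{10}(\text{size})\) are our own assumptions. Because every avalanche is recorded, the synthetic catalog is complete by construction.

```python
import numpy as np

def ofc_avalanche_sizes(L=32, alpha=0.2, n_events=2000, f_th=1.0, seed=0):
    """Minimal Olami-Feder-Christensen automaton on an L x L lattice with open boundaries.

    Returns the size (number of topplings) of each of n_events avalanches.
    With 4 neighbours, alpha < 0.25 makes the dynamics nonconservative."""
    rng = np.random.default_rng(seed)
    F = rng.uniform(0.0, f_th, size=(L, L))
    sizes = np.empty(n_events, dtype=int)
    for k in range(n_events):
        # Uniform drive: load all sites until the most loaded one reaches threshold
        imax = np.unravel_index(np.argmax(F), F.shape)
        F += f_th - F[imax]
        F[imax] = f_th  # guard against floating-point shortfall on the triggering site
        size = 0
        unstable = np.argwhere(F >= f_th)
        while unstable.size:
            for i, j in unstable:
                share = alpha * F[i, j]
                F[i, j] = 0.0
                size += 1
                for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                    if 0 <= ni < L and 0 <= nj < L:  # load crossing the boundary is lost
                        F[ni, nj] += share
            unstable = np.argwhere(F >= f_th)
        sizes[k] = size
    return sizes

sizes = ofc_avalanche_sizes(L=16, n_events=500)
magnitudes = np.log10(sizes)  # hypothetical size-to-magnitude mapping, for illustration only
```

This minimal version has no relaxation mechanism, which is why, as discussed later, models of this kind do not generate aftershock sequences.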

Results

Analysis and classification

Table 1 lists 29 papers published between 1989 and 2022, which show that the question “Do earthquakes in a cluster present magnitude correlation?” has not yet been answered and that further studies are needed. The dataset acronyms used in these studies are given in Table 2.

Starting from the 1989 study by Bak and Tang6, a series of articles, statistical, heuristic and based on physical models (e.g. spring-block models), have contributed to the understanding of magnitude correlations. The Bak and Tang study is also known to have paved the way for applying statistical mechanics to seismicity, building a bridge between the communities of physicists and seismologists.

Across all the studies considered to date, 51% reject the hypothesis of magnitude correlation, while 49% observe a non-zero correlation.

Looking at the studies chronologically (Fig. 2), the percentage of studies that accept the magnitude correlation hypothesis increased slightly between 2004 and 2009. From 2010 onwards, it remains roughly constant between 40% and 50%, indicating that the debate is still open and that no answer has yet been accepted by the entire seismological community. The cumulative numbers of acceptances and rejections of the correlation hypothesis as a function of time (Fig. 3) confirm this: in recent years, affirmative and negative answers have accumulated at comparable rates.

In Fig. 5, the studies are grouped by type of approach. Most published papers are statistical studies: for example, they analyse and test experimental data, or verify whether an ETAS model with magnitude correlations fits the experimental data better than an ETAS model with independent magnitudes. Statistical studies of real data may also suffer from an imperfect separation between clustered events (foreshocks, mainshocks and aftershocks) and background events. Even a slight mixing of the two types of events could weaken the evidence for correlation, since the magnitudes of background events are widely accepted to be independent. Better declustering techniques could therefore also help to advance the study of this phenomenon.
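The dilution effect of background events can be illustrated with a toy model. In the sketch below, which is our own illustration and not a method used in the reviewed studies, "clustered" magnitudes are represented by a Gaussian AR(1) sequence with lag-1 correlation \(\phi\), and a random fraction \(f\) of events is replaced by independent "background" draws. A pair of consecutive events keeps its covariance only if both members are clustered, so the lag-1 correlation drops to \(\phi(1-f)^2\). Gaussian magnitudes and the function names are simplifying assumptions.

```python
import numpy as np

def lag1_correlation(m):
    """Pearson correlation between the magnitudes of consecutive events."""
    m = np.asarray(m, dtype=float)
    return np.corrcoef(m[:-1], m[1:])[0, 1]

def toy_mixed_sequence(n, phi, bg_fraction, rng):
    """Toy catalog: AR(1) 'clustered' magnitudes (unit variance, lag-1 correlation phi),
    with a random fraction bg_fraction of events replaced by independent 'background'
    draws. Gaussian values are a simplifying assumption, not a Gutenberg-Richter law."""
    x = np.empty(n)
    x[0] = rng.standard_normal()
    noise_scale = np.sqrt(1.0 - phi**2)  # keeps the stationary variance equal to 1
    for i in range(1, n):
        x[i] = phi * x[i - 1] + noise_scale * rng.standard_normal()
    is_background = rng.random(n) < bg_fraction
    x[is_background] = rng.standard_normal(is_background.sum())
    return x

rng = np.random.default_rng(1)
phi = 0.3
for f in (0.0, 0.25, 0.5):
    r = lag1_correlation(toy_mixed_sequence(50_000, phi, f, rng))
    print(f"background fraction {f:.2f}: lag-1 r = {r:.3f} (expected {phi * (1 - f)**2:.3f})")
```

Even with a sizeable true correlation among clustered events, mixing in half background events reduces the measured value by a factor of four, which makes it much harder to detect.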

Because the studies are heterogeneous both in their approach to the problem and in the experimental data used, we now carry out a more detailed statistical analysis to check that a fair comparison is possible.

Sample size

In this section we check whether the studies are homogeneous in terms of sample size. Given the small number of papers, we use the bootstrap as a resampling technique to compute the p-value. We consider only the papers in which the sample size is clearly stated or easily obtained from an online seismic catalog. With this choice we get \(s_{no}=(293405,101680,46937,46055,400000,11906,11906,452943,20000,32476)\) and \(s_{yes}=(77955,9586,1000,4984,8502,85862,340000,100000,11535,320000)\), the vectors containing the number of events analysed in the studies that reject and do not reject the hypothesis of magnitude correlation, respectively. After resampling with replacement, with \(N=100,000\) realizations, we compute the mean of each resample, \(\theta _x=(\theta ^i_x)_{i=1,\dots ,N}\) with \(x \in \{yes, no\}\), and the distribution of the difference \(\theta ^i_{no}-\theta ^i_{yes}\). The bootstrap distributions of the means for the yes and no cases are shown in Fig. 4a, and the distribution of their difference in Fig. 4b. To compute the p-value we use a non-parametric alternative to the t-test, namely a permutation test: the p-value is the number of realizations as extreme as, or more extreme than, the observed result (the difference between the original sample means), divided by N. We obtain \(p=0.553\), so we cannot reject the null hypothesis that the two groups of studies use samples of the same size. We therefore find no evidence that the studies differ in the number of events used, and a comparison between them can be made fairly.

Results of the bootstrap inference. (a) Bootstrap distributions of the number of events contained in the datasets of the studies that rejected (no) and accepted (yes) the hypothesis of magnitude correlation. The mean was chosen as the statistic. (b) Distribution of the difference \(\theta _{no}-\theta _{yes}\).
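The resampling steps described above can be sketched in Python as follows, using the two sample-size vectors quoted in the text. This is our reconstruction under stated assumptions, not the authors' code: we use a two-sided permutation test on the difference of means, and the random seed, the vectorized shuffling and the function names are our own choices, so the p-value obtained need not coincide exactly with the reported one.

```python
import numpy as np

# Sample sizes quoted in the text: studies that reject (no) or do not reject (yes)
# the hypothesis of magnitude correlation
S_NO = np.array([293405, 101680, 46937, 46055, 400000, 11906, 11906, 452943, 20000, 32476], dtype=float)
S_YES = np.array([77955, 9586, 1000, 4984, 8502, 85862, 340000, 100000, 11535, 320000], dtype=float)
N = 100_000  # number of realizations, as in the text

def bootstrap_means(x, n_resamples, rng):
    """Mean of each of n_resamples resamples of x drawn with replacement."""
    idx = rng.integers(0, x.size, size=(n_resamples, x.size))
    return x[idx].mean(axis=1)

def permutation_pvalue(a, b, n_perm, rng):
    """Two-sided permutation test on mean(a) - mean(b): fraction of random relabellings
    whose difference of means is at least as extreme as the observed one."""
    observed = a.mean() - b.mean()
    pooled = np.concatenate([a, b])
    # Each row of idx is an independent random permutation of the pooled sample
    idx = rng.random((n_perm, pooled.size)).argsort(axis=1)
    shuffled = pooled[idx]
    diffs = shuffled[:, :a.size].mean(axis=1) - shuffled[:, a.size:].mean(axis=1)
    # Small tolerance so that relabellings reproducing the observed split are counted
    return np.mean(np.abs(diffs) >= abs(observed) - 1e-9)

rng = np.random.default_rng(42)
theta_no = bootstrap_means(S_NO, N, rng)
theta_yes = bootstrap_means(S_YES, N, rng)
diff = theta_no - theta_yes  # analogue of the distribution in Fig. 4b
p = permutation_pvalue(S_NO, S_YES, N, rng)
print(f"observed difference of means: {S_NO.mean() - S_YES.mean():.0f} events, p = {p:.3f}")
```

A permutation test is used here because, with only ten studies per group and strongly skewed sample sizes, the normality assumption behind a classical t-test is hard to justify.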

Google Scholar citations

Proceeding as in the analysis of sample size, we find that neither side of the debate is preferentially cited: applying the same bootstrap inference to the Google Scholar citations of the studies considered gives \(p = 0.45\). Quantitatively, therefore, we find no evidence that the two groups of studies differ in popularity in the literature, and they can be considered homogeneous also in this respect.

Catalog incompleteness

To understand whether, in the studies considered, the accepted hypothesis is associated with whether or not the short-term incompleteness of the instrumental catalog was taken into account, we use contingency tables and perform a \(\chi ^2\)-test. The comparison between the observed (Table 3a) and the expected (Table 3b) distributions qualitatively suggests no association between the variables. Quantitatively, we obtain \(\chi ^2=0.363\), which for one degree of freedom corresponds to \(p \approx 0.55\), so the hypothesis of no association cannot be rejected. We therefore observe no bias related to whether or not incompleteness was taken into account, and the studies can be considered homogeneous also with respect to this variable.
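The \(\chi^2\) computation for a 2×2 contingency table, and its conversion into a p-value for one degree of freedom, can be sketched as follows. The observed counts of Table 3a are not reproduced here, so the functions are shown on their own; their names are hypothetical. With one degree of freedom the tail probability has the closed form \(P(\chi^2 > x) = \mathrm{erfc}(\sqrt{x/2})\), so only the standard library is needed.

```python
import math

def chi2_2x2(table):
    """Pearson chi-square statistic for a 2x2 contingency table (no continuity correction)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = rows[i] * cols[j] / n  # expected count under independence
            chi2 += (observed - expected) ** 2 / expected
    return chi2

def chi2_pvalue_1dof(chi2):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2.0))

print(f"{chi2_pvalue_1dof(0.363):.3f}")  # 0.547, for the statistic quoted in the text
```

No continuity correction is applied. With only a few dozen studies some expected counts may fall below 5, in which case Fisher's exact test is a common alternative.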

Discussion

Limitations

The purpose of a meta-analysis should be to combine the results in the literature and distill a quantitative, statistically supported answer on the basis of statistical tests. For a fair comparison, individual p-values are not enough: it is necessary to evaluate the importance of a result and not only its probability52,53. For this reason, it is strongly recommended to compare the effect size of the statistical result of each study. For example, if Kendall’s tau is available, it can easily be converted into an effect size54. Unfortunately, this is not possible in the present analysis because of a systematic lack of direct correlation tests. In this section, we discuss the limitations of each type of study considered.
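As an illustration of how effect sizes could be combined if studies reported Kendall’s tau, the sketch below converts \(\tau\) to a Pearson-type correlation with Greiner's relation \(r = \sin(\pi\tau/2)\) (exact for bivariate normal data) and pools the studies with Fisher's \(z\) transform, weighting each by \(n-3\). This is one common fixed-effect recipe, not necessarily the procedure of ref. 54; the \(1/(n-3)\) variance is only approximate for correlations derived from \(\tau\), and the input values are hypothetical.

```python
import math

def kendall_tau_to_r(tau):
    """Greiner's relation r = sin(pi * tau / 2), exact for bivariate normal data."""
    return math.sin(math.pi * tau / 2.0)

def pooled_correlation(taus, sizes):
    """Fixed-effect pooled correlation from per-study Kendall's tau values.

    Each tau is converted to r, then to Fisher's z = atanh(r), whose variance is
    approximately 1 / (n - 3); studies are weighted by n - 3 and the weighted
    mean of z is transformed back to a correlation."""
    num = den = 0.0
    for tau, n in zip(taus, sizes):
        z = math.atanh(kendall_tau_to_r(tau))
        w = n - 3
        num += w * z
        den += w
    return math.tanh(num / den)

# Hypothetical inputs: Kendall's tau and number of event pairs for three studies
print(round(pooled_correlation([0.05, 0.02, -0.01], [5000, 12000, 800]), 4))
```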

Heuristic approach

Although heuristic methods are not statistically useful for making inference, they open the way to new ideas and new lines of attack on the problem. The paper by Bak and Tang6 is a striking example. The heuristic results of Yang et al.7 and Corral28 reach opposite conclusions, cancel each other out and do not affect the analysis of the papers as a whole.

Physical approach

Physical models have the advantage of completely avoiding the short-term aftershock incompleteness problem, but they are limited by model imperfections; indeed, these models are still being developed precisely to obtain a more faithful description of seismicity. In Table 1 there are 4 papers that use this approach, 3 of which report no correlation between magnitudes. Although the number of results based on physical models is very small, they would lead us to conclude that the absence of magnitude correlations is the most likely answer. However, magnitude correlations are hypothesized to be stronger among clustered events (mainshock-aftershock sequences) than among background events. Since the physical models used in these studies are of the OFC type, the answer is biased: OFC-like models55 are well known not to produce aftershocks. This is consistent with these studies more often rejecting magnitude correlations.

Statistical approach

Statistical methods are the most numerous in this field of research. Unfortunately, only a few of them directly compute correlations between magnitudes in a dataset; the others define epidemic models with or without magnitude correlations and evaluate how well each model describes seismicity.

Unfortunately, the data obtained from the analyzed studies do not allow a statistically significant conclusion. To carry out a test and a fair comparison, greater agreement on the results would be needed, together with more studies that directly measure the correlation between magnitudes, so that a statistically significant answer to the debate can be given. We expect this analysis to encourage both the geophysics and the statistical seismology communities to keep addressing the problem.

In our opinion, physical models should not be abandoned just because they do not describe realistic seismicity perfectly; rather, research towards ever more realistic models should continue. In particular, minimal OFC models have recently been developed that include the ingredient of “relaxation”, the so-called Olami-Feder-Christensen with Relaxation (OFCR) models56,57,58,59,60,61,62. These models produce aftershocks and, in principle, could be used to gain deeper insight into magnitude correlations. On the statistical side, we argue that improving the quality of instrumental data is fundamental. As concluded in the meta-analysis on the foreshock hypothesis63, “a better knowledge of microseismicity is necessary, which can be done by better assessing issues of data completeness at low magnitudes and/or by improving existing seismic networks to decrease \(M_c\)...”. Indeed, the preliminary question on which most studies have focused is whether the apparent correlation between magnitudes is only a spurious effect of catalog incompleteness, or whether it is statistically relevant regardless of the missing data. Therefore, better-quality datasets and physical models able to simulate catalogs free of incompleteness are the areas where more effort should be invested in the future. Finally, we remark that the outcome of this debate is of fundamental importance for the future of statistical seismology, as it represents a turning point for the development of ETAS models, the gold standard for seismic forecasting. Whether or not magnitude correlations are implemented in epidemic models is the next step in the study of clustering and in earthquake forecasting.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Skintzi, V. & Xanthopoulos-Sisinis, S. Evaluation of correlation forecasting models for risk management. J. Forecast. 26, 497–526 (2007).

Ferber, R. Sales forecasting by correlation techniques. J. Mark. 18(3), 219–232 (1954).

Hirose, F., Maeda, K. & Kamigaichi, O. Efficiency of earthquake forecast models based on earth tidal correlation with background seismicity along the Tonga-Kermadec trench. Earth Planets Space 74, 1 (2022).

Pierotti, L., Fidani, C., Facca, G., & Gherardi, F. Earthquake Forecasting Probability by Statistical Correlations Between Low to Moderate Seismic Events and Variations in Geochemical Parameters (2022).

Geller, R. J., Jackson, D. D., Kagan, Y. Y. & Mulargia, F. Earthquakes cannot be predicted. Science 275(5306), 1616–1616 (1997).

Bak, P. & Tang, C. Earthquakes as a self-organized critical phenomenon. J. Geophys. Res.: Solid Earth 94(B11), 15635–15637 (1989).

Yang, X., Du, S. & Ma, J. Do earthquakes exhibit self-organized criticality? Phys. Rev. Lett. 92, 228501 (2004).

Omori, F. On the after-shocks of earthquakes. J. Coll. Sci. 7, 1 (1894).

Kagan, Y. Y. & Knopoff, L. Spatial distribution of earthquakes: the two-point correlation function. Geophys. J. Roy. Astron. Soc. 62(2), 303–320 (1980).

Ogata, Y. Statistical models for earthquake occurrences and residual analysis for point processes. J. Am. Stat. Assoc. 83(401), 9–27 (1988).

Ogata, Y. Space-time point-process models for earthquake occurrences. Ann. Inst. Stat. Math. 50(2), 379–402 (1998).

Helmstetter, A. & Sornette, D. Importance of direct and indirect triggered seismicity in the ETAS model of seismicity. Geophys. Res. Lett. 30, 1 (2003).

Console, R., Jackson, D. & Kagan, Y. Using the ETAS model for catalog declustering and seismic background assessment. Pure Appl. Geophys. 167, 819–830 (2007).

Lombardi, M. & Marzocchi, W. The ETAS model for daily forecasting of Italian seismicity in the CSEP experiment. Ann. Geophys. 53, 1 (2010).

Zhuang, J. Next-day earthquake forecasts for the Japan region generated by the ETAS model. Earth Planets Space 63, 207–216 (2011).

Zhuang, J. Long-term earthquake forecasts based on the epidemic-type aftershock sequence (ETAS) model for short-term clustering. Res. Geophys. 2, 1 (2012).

Lippiello, E., Cirillo, A., Godano, G., Papadimitriou, E. & Karakostas, V. Real-time forecast of aftershocks from a single seismic station signal. Geophys. Res. Lett. 43(12), 6252–6258 (2016).

Hainzl, S. Rate-Dependent Incompleteness of Earthquake Catalogs. Seismol. Res. Lett. 87(2A), 337–344 (2016).

de Arcangelis, L., Godano, C. & Lippiello, E. The overlap of aftershock coda waves and short-term postseismic forecasting. J. Geophys. Res.: Solid Earth 123(7), 5661–5674 (2018).

Lippiello, E., Godano, C. & de Arcangelis, L. The relevance of foreshocks in earthquake triggering: A statistical study. Entropy 21(2), 1 (2019).

Davidsen, J. & Green, A. Are earthquake magnitudes clustered? Phys. Rev. Lett. 106, 108502 (2011).

Lippiello, E., de Arcangelis, L. & Godano, C. Influence of time and space correlations on earthquake magnitude. Phys. Rev. Lett. 100, 038501 (2008).

Lippiello, E., Godano, C. & de Arcangelis, L. Magnitude correlations in the Olami-Feder–Christensen model. EPL (Europhys. Lett.) 102, 59002 (2013).

Lippiello, E., Godano, C. & de Arcangelis, L. Dynamical scaling in branching models for seismicity. Phys. Rev. Lett. 98, 098501 (2007).

Lippiello, E., Bottiglieri, M., Godano, C. & de Arcangelis, L. Dynamical scaling and generalized Omori law. Geophys. Res. Lett. 34(23), 1 (2007).

Christensen, K., Danon, L., Scanlon, T. & Bak, P. Unified scaling law for earthquakes. Proc. Natl. Acad. Sci. 99, 2509–2513 (2002).

Felzer, K. R., Abercrombie, R. E. & Ekstrom, G. A common origin for aftershocks, foreshocks, and multiplets. Bull. Seismol. Soc. Am. 94(1), 88–98 (2004).

Corral, A. Comment on “Do earthquakes exhibit self-organized criticality?”. Phys. Rev. Lett. 95, 10 (2005).

Helmstetter, A., Kagan, Y. Y. & Jackson, D. D. Comparison of short-term and time-independent earthquake forecast models for Southern California. Bull. Seismol. Soc. Am. 96(1), 90–106 (2006).

Corral, A. Dependence of earthquake recurrence times and independence of magnitudes on seismicity history. Tectonophysics 424(3), 177–193 (2006).

Caruso, F., Pluchino, A., Latora, V., Rapisarda, A. & Vinciguerra, S. Self-organized criticality and earthquakes. AIP Conf. Proc. 965(1), 281–284 (2007).

Sarlis, N., Skordas, E. & Varotsos, P. Multiplicative cascades and seismicity in natural time. Phys. Rev. E 80, 022102 (2009).

Lippiello, E., de Arcangelis, L. & Godano, C. Time, space and magnitude correlations in earthquake occurrence. Int. J. Mod. Phys. B 23, 5583–5596 (2009).

Yoder, M. R., Turcotte, D. L. & Rundle, J. B. Record-breaking earthquake intervals in a global catalogue and an aftershock sequence. Nonlinear Process. Geophys. 17(2), 169–176 (2010).

Van Aalsburg, J., Newman, W. I., Turcotte, D. L. & Rundle, J. B. Record-breaking earthquakes. Bull. Seismol. Soc. Am. 100(4), 1800–1805 (2010).

Zhang, G., Tirnakli, U., Wang, L. & Chen, T. Self organized criticality in a modified Olami-Feder–Christensen model. Eur. Phys. J. B 82, 83–89 (2010).

Sarlis, N. V. Magnitude correlations in global seismicity. Phys. Rev. E 84, 022101 (2011).

Lin, G., Shearer, P. M. & Hauksson, E. Applying a three-dimensional velocity model, waveform cross correlation, and cluster analysis to locate Southern California seismicity from 1981 to 2005. J. Geophys. Res.: Solid Earth 112(B12), 1 (2007).

Lippiello, E., Godano, C. & de Arcangelis, L. The earthquake magnitude is influenced by previous seismicity. Geophys. Res. Lett. 39(5), 1 (2012).

Shearer, P., Hauksson, E. & Lin, G. Southern California hypocenter relocation with waveform cross-correlation, part 2: Results using source-specific station terms and cluster analysis. Bull. Seismol. Soc. Am. 95(3), 904–915 (2005).

Davidsen, J., Kwiatek, G. & Dresen, G. No evidence of magnitude clustering in an aftershock sequence of nano- and picoseismicity. Phys. Rev. Lett. 108, 038501 (2012).

Kwiatek, G., Plenkers, K., Nakatani, M., Yabe, Y., Dresen, G. & JAGUARS-Group. Frequency-magnitude characteristics down to magnitude -4.4 for induced seismicity recorded at Mponeng Gold Mine, South Africa. Bull. Seismol. Soc. Am. 100(3), 1165–1173 (2010).

Nichols, K. & Schoenberg, F. P. Assessing the dependency between the magnitudes of earthquakes and the magnitudes of their aftershocks. Environmetrics 25(3), 143–151 (2014).

Shcherbakov, R., Davidsen, J. & Tiampo, K. F. Record-breaking avalanches in driven threshold systems. Phys. Rev. E 87, 052811 (2013).

Spassiani, I. & Sebastiani, G. Exploring the relationship between the magnitudes of seismic events. J. Geophys. Res.: Solid Earth 121(2), 903–916 (2016).

Hauksson, E., Yang, W. & Shearer, P. M. Waveform relocated earthquake catalog for Southern California (1981 to June 2011). Bull. Seismol. Soc. Am. 102(5), 2239–2244 (2012).

Spassiani, I. & Sebastiani, G. Magnitude-dependent epidemic-type aftershock sequences model for earthquakes. Phys. Rev. E 93, 042134 (2016).

Stallone, A. & Marzocchi, W. Empirical evaluation of the magnitude-independence assumption. Geophys. J. Int. 216(2), 820–839 (2018).

Zambrano, M. A. F. Magnitude correlations and criticality in a self-similar model of seismicity. University of Calgary. The Vault: Electronic Theses and Dissertations (2019).

Nandan, S., Ouillon, G. & Sornette, D. Magnitude of earthquakes controls the size distribution of their triggered events. J. Geophys. Res.: Solid Earth 124(3), 2762–2780 (2019).

Nandan, S., Ouillon, G. & Sornette, D. Are large earthquakes preferentially triggered by other large events? J. Geophys. Res.: Solid Earth 127(8), 1 (2022).

Kirk, R. E. Practical significance: A concept whose time has come. Educ. Psychol. Measur. 56(5), 746–759 (1996).

Shaver, J. P. Chance and nonsense: A conversation about interpreting tests of statistical significance, part 1. Phi Delta Kappan 67, 57–60 (1985).

Walker, D. JMASM9: Converting Kendall’s tau for correlational or meta-analytic analyses. J. Mod. Appl. Stat. Methods 2, 525–530 (2003).

Burridge, R. & Knopoff, L. Model and theoretical seismicity. Bull. Seismol. Soc. Am. 57(3), 341–371 (1967).

Jagla, E. A. Realistic spatial and temporal earthquake distributions in a modified Olami-Feder–Christensen model. Phys. Rev. E 81(4), 046117 (2010).

Jagla, E. A. & Kolton, A. B. A mechanism for spatial and temporal earthquake clustering. J. Geophys. Res.: Solid Earth 115(B5), 1 (2010).

Jagla, E. A., Landes, F. P. & Rosso, A. Viscoelastic effects in avalanche dynamics: A key to earthquake statistics. Phys. Rev. Lett. 112, 174301 (2014).

Lippiello, E., Petrillo, G., Landes, F. & Rosso, A. Fault heterogeneity and the connection between aftershocks and afterslip. Bull. Seismol. Soc. Am. 109(3), 1156–1163 (2019).

Petrillo, G., Lippiello, E., Landes, F. P. & Rosso, A. The influence of the brittle-ductile transition zone on aftershock and foreshock occurrence. Nat. Commun. 11(1), 1–10 (2020).

Lippiello, E., Petrillo, G., Landes, F. & Rosso, A. The genesis of aftershocks in spring slider models. Stat. Methods Model. Seismogenesis 1, 131–151 (2021).

Petrillo, G., Rosso, A. & Lippiello, E. Testing of the seismic gap hypothesis in a model with realistic earthquake statistics. J. Geophys. Res.: Solid Earth 127(6), 1 (2022).

Mignan, A. The debate on the prognostic value of earthquake foreshocks: A meta-analysis. Sci. Rep. 4, 4099 (2014).

Acknowledgements

This research activity has been supported by MEXT Project for Seismology TowArd Research innovation with Data of Earthquake (STAR-E Project), Grant No.: JPJ010217. We thank Prof. Eugenio Lippiello for the useful discussions and both anonymous referees for the constructive comments.

Author information


Contributions

G.P. and J.Z. contributed to the research, numerical results and writing of the manuscript. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Petrillo, G., Zhuang, J. The debate on the earthquake magnitude correlations: a meta-analysis. Sci Rep 12, 20683 (2022). https://doi.org/10.1038/s41598-022-25276-1


DOI: https://doi.org/10.1038/s41598-022-25276-1
