Abstract

Behavioural studies revealed that the dog–human relationship resembles the human mother–child bond, but the underlying mechanisms remain unclear. Here, we report the results of a multi-method approach combining fMRI (N = 17), eye-tracking (N = 15), and behavioural preference tests (N = 24) to explore the engagement of an attachment-like system in dogs seeing human faces. We presented morph videos of the caregiver, a familiar person, and a stranger showing either happy or angry facial expressions. Regardless of emotion, viewing the caregiver activated brain regions associated with emotion and attachment processing in humans. In contrast, the stranger elicited activation mainly in brain regions related to visual and motor processing, and the familiar person relatively weak activations overall. While the majority of happy stimuli led to increased activation of the caudate nucleus associated with reward processing, angry stimuli led to activations in limbic regions. Both the eye-tracking and preference test data supported the superior role of the caregiver’s face and were in line with the findings from the fMRI experiment. While preliminary, these findings indicate that cutting across different levels, from brain to behaviour, can provide novel and converging insights into the engagement of the putative attachment system when dogs interact with humans.

Introduction

The unique relationship between (pet) dogs and their human caregivers bears a remarkable resemblance to the attachment bond of human infants with their mothers: dogs are dependent on human care and their behaviour seems specifically geared to engage their human partner’s caregiving system1. Some researchers (e.g.2,3,4) have used concepts and methodologies of the human attachment theory5,6 to investigate whether the dog–human relationship conforms to the characteristics of the human attachment bond (reviewed in7).

In humans, the original theory of attachment has focused on parental attachment, the strong and persistent emotional tie between the child and the caregiver that develops very early in life and serves to protect the child5,6. The proximate function is to maintain the proximity between the mother and the child, especially in stressful or dangerous situations8. To distinguish true attachment from other affectional bonds, four behavioural criteria were proposed: (a) staying near to and resisting separation from the attachment figure (proximity maintenance), (b) feeling distress upon involuntary separation from the attachment figure (separation distress), (c) using the attachment figure as a base for exploring the environment free of anxiety (secure base), (d) seeking out the attachment figure for contact and assurance in times of emotional distress (safe haven)9. A classic test paradigm to characterize attachment relationships is the Strange Situation Procedure (SSP), a set of short episodes of mildly stressful situations of separation and reunion in an unfamiliar environment10,11,12. Comparative psychologists not only have described the similarities of the human mother–child bond and the human–dog relationship13,14,15, but also sought empirical evidence by applying modified versions of the Strange Situation Procedure. Indeed, researchers found clear evidence of all four attachment criteria in dogs2,3,16,17,18,19,20,21,22,23,24. Even more striking, the secure base effect in dogs is specific and tuned to the bond with the caregiver25,26.

That the bond between (adult) dogs and the human caregiver is similar to the one between human infants and their mother is an exciting hypothesis, but so far it relies mainly on behavioural and endocrinal evidence. A rigorous test of this hypothesis requires knowledge of the neural networks associated with attachment-related processes. So far, we know that humans share with almost all vertebrates a basic diencephalic and tegmental “social behaviour network”27.

Neuroimaging studies of human mothers viewing their children showed that intimate parent–child emotional states are connected to functionally specialized brain areas28. This includes, foremost, areas of the so-called limbic system, including the amygdala, the ventral striatum, the ventral tegmental area (VTA), the globus pallidus (GP29) and the substantia nigra, as well as the hippocampus30. These areas, in humans but also more generally in mammals, are usually associated with affective processes, and may thus support the activation of human attachment-related functions in parenting. In addition, the orbitofrontal cortex (OFC) and the periaqueductal grey (PAG31), the dorsal anterior cingulate cortex (dACC), the anterior insula (AI) and the ventrolateral prefrontal cortex (VLPFC32,33) show increased activation in mothers upon seeing their own child. Especially seeing their own child’s smiling face caused increased activation of these mesocorticolimbic reward brain regions in their mothers34. Unfortunately, it is not clear if the same brain regions are activated when the child faces its mother.

Several recent studies have investigated how dogs perceive humans, and in particular our faces. These revealed that dogs can assess humans’ attentional states35,36, and discriminate their caregiver from another familiar person37, or from a stranger38; the latter was confirmed by converging evidence from two studies using different methods, combining active choice on a touchscreen device39 and passive looking preference using an eye-tracking device40. Especially interesting is the dog’s ability to discriminate between positive and negative facial expressions of humans and to react appropriately according to the valence of the faces41,42,43,44,45,46 (for review see47).

Neuroimaging provides an excellent window into the working brain of humans during perception and the associated mental processes, and this non-invasive approach has now also become available to study dogs and their brains. Training dogs to remain still, wakeful, and attentive during scanning was first achieved a decade ago48,49, and soon it became the preferred non-invasive research technique to understand the neural correlates of canine cognitive functions50,51,52,53,54 (reviewed in55). Six previous studies have already investigated the dog’s brain activities while they watched human faces. While a lack of reporting and analysis standards makes it hard to compare the findings in terms of the precise locations of brain areas that are activated, researchers consistently found areas in the canine temporal lobe that responded significantly more to dynamic56 or static57 images of human faces than to the respective stimuli of everyday objects, especially showing activations in the temporal cortex and caudate nucleus when viewing happy human faces58. Another study59 identified separate temporal brain areas for processing human and dog faces. Furthermore, a recent study60 investigated whether dogs and humans showed a species- or face-sensitivity when being presented with unknown human and dog faces and their occiputs. In contrast to the human participants, they found that the majority of the visually-responsive cortex of the dogs showed a greater conspecific- than face-preference. Two studies, however, found no difference between faces (humans, dogs) and a (scrambled) visual control stimulus56,61. Yet, activity related to internal features of human faces (in contrast to a mono-coloured control surface) in temporo-parietal and occipital regions61 could be identified.

The dog’s great sensitivity to the human face, especially when showing emotional expressions, seemed to us a promising starting point for the investigation of the dog’s neuronal processing of their human attachment figure. Would dogs’ brain responses be similar to those of humans when watching videos of their beloved pet30? To investigate this, and cross-validate our methods, we chose a multi-method approach. Using the same stimuli and, where possible, the same dog subjects, we combined neuroimaging (Experiment 1), eye-tracking (Experiment 2), and behavioural testing (Experiment 3) to explore the canine attachment system on multiple levels. Neuroimaging allowed us to investigate the neural correlates while dogs perceived their human caregiver in comparison to other humans, eye-tracking provided further insights on how dogs perceived the human models focusing on the dogs’ visual exploration, and preference tests explored the dogs’ spontaneous and unrestricted behaviour towards the human faces. In all three experiments, we presented videos transforming from neutral to either happy or angry facial expressions (henceforth called “morph videos”) of their human caregiver (caregiver) and an unfamiliar person (stranger). We used dynamic instead of static stimuli to facilitate face recognition by increasing ecological validity and to increase brain activation by supposedly stronger attention (e.g.62). Further, varying emotional facial expressions enabled us to investigate the potential interplay of the attachment system and emotions since both emotions and attachment activate similar brain regions (e.g.63 for review of emotion processing areas). Finally, to control for familiarity26, our study is the first that presented, in addition to the caregiver, the same expressions of another person well-known to the individual dog (familiar person).

In addition to the three experiments, we conducted a caregivers’ survey to assess how many hours per day the primary caregiver and the familiar person actively spent with the dog during the week and on weekends, and the dogs’ age at adoption. This aimed at getting a glimpse into the dog–human relationship quality and the dogs’ time living together with the caregiver. The dogs of our study spent almost their entire life together with their caregiver and were also involved in many regular activities with them, e.g. daily walks, dog school training and events, or Clever Dog Lab visits for study participation or intense research trainings over years. We thus expected a secure dog–human relationship, and rather subtle attachment-related individual differences across the dogs; this is why we did not test the dogs specifically with the SSP test setup.

Instead, we designed an experiment whose task setup was very similar to the ones of the other two experiments (fMRI, eye-tracking), including a test arena with two computer monitors on the ground simultaneously displaying the same visual stimuli as in these experiments, but where dogs were allowed to immediately react to the stimuli and move freely during the entire test trials.

Since this is the first fMRI study investigating the neural correlates of attachment in dogs (Experiment 1), we aimed to explore whether dogs, similar to humans, recruit the limbic system (e.g. insula, amygdala, dorsal cingulate gyrus30,32,33) and brain regions also associated with reward processing (e.g. caudate nucleus34) when viewing their human caregiver compared to a stranger or familiar person. During the eye-tracking tests (Experiment 2), we anticipated that the dogs would fixate and revisit the caregiver stimuli comparatively more on the screen than the other presented human faces. Based on previous behavioural studies26,37,39,40, we expected the dogs to show a preference for their caregiver over either a stranger or even another familiar person, but this would vary with the facial emotion expressed. We expected the angry facial expression (negative emotion) to evoke more attention and arousal due to being a potential threat or being connected to former unpleasant experiences with angry humans compared to happy faces (positive emotion). The happy faces we predicted to be perceived more positively and with pleasant expectations, e.g. praise, joy, reward64. In the behavioural preference tests (Experiment 3), we expected the dogs to spend more time on the “caregiver’s side” of the test arena, and to prefer to look at and to approach the caregiver stimuli compared to the stranger and the familiar person displayed.

Results

Experiment 1 (fMRI task)

First, we explored the main effects of face identity (caregiver, familiar person, stranger) and emotion (happy, angry), and their interaction. Regarding the main effect of emotion, we found differential activation of hippocampal areas with increased activation in the left hippocampus for happy morph videos and in the right parahippocampal gyrus for angry morph videos. The main effect of face identity revealed activation changes in areas such as the insula, the bilateral dorsal cingulate cortex, and the postcruciate gyrus. The emotion × face identity interaction effect revealed a difference in activation when viewing the different human models, depending on the emotion displayed (see Table 1 for details).

We further explored the differences in activation depending on the human model regardless of the emotion displayed. In comparison to the familiar person, visual presentation of the caregiver elicited increased activation in brain regions such as the bilateral rostral cingulate, the left parahippocampal gyrus, right olfactory gyrus, as well as rostral temporal and parietal regions (see Supplementary Table S3, for details and Fig. 1). Comparing activation between caregiver and stranger, visual presentation of the caregiver led to increased activation in the bilateral insula, the right rostral cingulate gyrus, the left parahippocampal gyrus, as well as rostral parietal and temporal regions. Viewing the stranger increased activation mainly in the bilateral frontal lobe, brainstem, cerebellum, postcruciate gyrus, and the left insula. When comparing visual presentation of the stranger with the familiar person, we found similar activation patterns but with additional activation in the occipital lobe, the right insula, and temporal regions. Comparing visual presentation of the familiar person to the stranger revealed increased activation in the brainstem and frontal lobe, as well as increased activation in the left cerebellum, right occipital lobe, and the left caudal temporal lobe in comparison to the caregiver (see Supplementary Table S3).

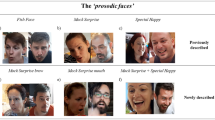

Figure 1. Visual presentation of the caregiver (compared to the familiar person or stranger; independent of emotional facial expression) elicited activation increases in areas associated with the attachment system in humans, whereas visual presentation of the stranger (compared to the familiar person) mainly recruited motor and visual processing regions. The caregiver revealed activation in caudate regions for both happy and angry emotional facial expressions. Results are displayed at p < 0.005 with a minimum cluster size of 5 voxels (see Table 2 for details), projected onto the mean structural image derived from all dogs. Coordinates refer to the canine breed-averaged atlas65. The first sagittal and coronal planes (a, first row) and transverse plane (c, last row) show the anatomical locations caudal (C), dorsal (D), and right hemisphere (R); all sagittal and coronal planes displayed have the same orientation. Group-based comparison of caregiver against familiar person (caregiver > familiar person), caregiver against stranger (caregiver > stranger) and stranger against familiar person (stranger > familiar person) are displayed (a) regardless of emotional facial expression, (b) for happy emotional facial expressions, and (c) for angry emotional facial expressions. D dorsal, C caudal, g. gyrus, R right, t t-value.

We finally explored the emotion × attachment interaction effect, separately for both happy and angry morph videos, to investigate a potential modulation of attachment due to the two different emotional facial expressions (see Table 2 for details). Regarding the happy morph videos, we observed a similar pattern of activation as described above, but the majority of happy morph videos additionally led to increased activation of the caudate nucleus. Focusing on the visual presentation of human models with angry emotional facial expression, we again observed the same pattern of activation with the angry caregiver eliciting activation in brain regions associated with human attachment processing; but unexpectedly the angry caregiver also revealed activation in the caudate nucleus similar to the happy caregiver. We focused on the contrasts most relevant for our research question: caregiver > familiar/stranger and stranger > familiar, both combined across and separately for emotions (see Fig. 1, Table 2); see Supplementary Table S3 for further contrasts in the interest of full transparency.

Experiment 2: Eye-tracking task

In Experiment 2a, we presented dogs with morph videos of the caregiver and the stranger side by side. We first analysed the relative looking time to the caregiver. The GLMM model including the predictor variables caregiver location, emotion, trial number, and age did not fit the data significantly better than a null model comprising only the control predictors trial number and age and the random effects (χ2 = 2.80, df = 2, p = 0.246; Fig. 2). Next, we analysed the latency of the dogs’ first fixation to one of the morph videos. The dogs’ latency for looking at the stranger was significantly shorter than for looking at the caregiver (χ2 = 5.75, df = 1, p = 0.017; Supplementary Fig. S7, Table S4). The other predictors (emotion, caregiver location, age and trial number) had no significant effect. Finally, we analysed the maximal pupil size. The full model including the predictor variables stimulus, emotion, trial number, and age did not fit the data significantly better than a null model comprising only the control predictors (age and trial number) and random effects (Supplementary Fig. S9).
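Full-null model comparisons of this kind are typically likelihood-ratio tests, in which the χ2 statistic is referred to a chi-square distribution with the stated degrees of freedom. The following minimal sketch assumes that interpretation and shows how the reported p-values relate to the reported statistics. The function name `chi2_sf` is ours and is not taken from the study's analysis code; only the closed forms for df = 1 and df = 2 are covered.

```python
import math

def chi2_sf(stat: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable (closed forms for df = 1 or 2 only)."""
    if stat < 0:
        raise ValueError("statistic must be non-negative")
    if df == 1:
        # A chi-square(1) variable is the square of a standard normal variable.
        return math.erfc(math.sqrt(stat / 2.0))
    if df == 2:
        # A chi-square(2) variable is exponentially distributed with mean 2.
        return math.exp(-stat / 2.0)
    raise NotImplementedError("only df = 1 and df = 2 are covered in this sketch")

# Statistics as reported for Experiment 2a.
p_looking_time = chi2_sf(2.80, 2)  # full vs. null model, relative looking time
p_latency = chi2_sf(5.75, 1)       # stranger vs. caregiver, latency of first fixation

print(f"relative looking time: p = {p_looking_time:.4f}")
print(f"first-fixation latency: p = {p_latency:.4f}")
```

Under these assumptions the computed values agree with the reported p = 0.246 and p = 0.017 to within rounding of the published test statistics.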

Figure 2. Dogs’ relative looking time to the caregiver presented on the left screen side in Experiment 2a (left; caregiver vs. stranger) and 2b (right; caregiver vs. familiar person). The red dash-dotted line represents the chance level (0.5), GLMM: *p < 0.05. The bootstrapped 95% confidence intervals of the model are indicated by the vertical black lines. The grey points represent the individual looking patterns of each dog per experiment. The size of the points is proportional to the number of individuals.

In Experiment 2b, we presented dogs with morph videos of the caregiver and the familiar person. For the relative looking time to the caregiver, the full model including the predictor variables caregiver location, emotion, trial number, and age fitted the data significantly better than a null model comprising only the control predictors and random effects (χ2 = 7.68, df = 2, p = 0.021; Supplementary Table S5). We found that when the caregivers were presented on the left side of the screen the dogs looked significantly longer at them than when they were presented on the right side (see Supplementary Fig. S8). The dogs showed a higher relative looking time to the caregiver, i.e. had a significant preference for the left side when the caregiver was displayed on the left side (z = 2.75, p = 0.006; Fig. 2). When the caregiver was on the right side, in contrast, dogs did not show a significant preference for either side (z = −1.71, p = 0.087). Emotion, age, and trial number had no significant effect on the dogs’ relative looking time to the caregiver (see Supplementary Table S5). Considering the latency to first fixation, the full model including the predictor variables stimulus, emotion, trial number, and age did not fit the data significantly better than a null model comprising only the control predictors and random effects. Finally, considering the maximal pupil size, we found that the dogs had a significantly larger maximal pupil size when looking at the angry faces of the caregiver and the familiar person compared to their happy faces (Supplementary Fig. S9, Table S6). The other predictors (emotion, age and trial number) had no significant effect on the response of the dogs.
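The side-preference results are reported as z statistics with p-values. As a rough illustration, and assuming these are two-sided tests against a standard normal reference distribution (an assumption on our part; the helper name `two_sided_p` is hypothetical), the relationship between z and p can be sketched as follows:

```python
import math

def two_sided_p(z: float) -> float:
    """Two-sided p-value for a z statistic under a standard normal reference."""
    # P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z.
    return math.erfc(abs(z) / math.sqrt(2.0))

# z statistics as reported for Experiment 2b.
p_caregiver_left = two_sided_p(2.75)    # caregiver shown on the left side
p_caregiver_right = two_sided_p(-1.71)  # caregiver shown on the right side

print(f"caregiver left:  p = {p_caregiver_left:.4f}")
print(f"caregiver right: p = {p_caregiver_right:.4f}")
```

With these assumptions the values match the reported p = 0.006 and p = 0.087 to within rounding, which illustrates why only the left-side presentation crosses the conventional 0.05 threshold.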

Experiment 3: Behavioural preference task

We only found tendencies but no significant effects of the stimuli (caregiver, stranger, familiar person) on the dogs’ behavioural responses (two-tailed Mann–Whitney-U-test, p > 0.05; see Supplementary Table S9). The descriptive and inferential statistical results are presented in the Supplementary Tables S7–S9.
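For readers unfamiliar with the Mann–Whitney U test, the sketch below shows one generic way to compute a two-tailed version using the normal approximation with tie correction. It is not the authors' analysis code, the function name `mann_whitney_u` is ours, and the residence times in the usage example are invented purely for illustration. For samples this small an exact test would usually be preferable to the normal approximation.

```python
import math
from itertools import chain

def mann_whitney_u(x, y):
    """Two-tailed Mann-Whitney U test.

    Uses the normal approximation with tie correction and no continuity
    correction. Returns (U, p), where U is the smaller of the two U statistics.
    """
    n1, n2 = len(x), len(y)
    pooled = sorted(chain(x, y))
    ranks = {}       # value -> midrank in the pooled sample
    tie_term = 0.0   # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        t = j - i
        ranks[pooled[i]] = (i + 1 + j) / 2.0  # mean of the 1-based ranks i+1..j
        tie_term += t**3 - t
        i = j
    rank_sum_x = sum(ranks[v] for v in x)
    u1 = rank_sum_x - n1 * (n1 + 1) / 2.0
    u2 = n1 * n2 - u1
    n = n1 + n2
    mean_u = n1 * n2 / 2.0
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u1 - mean_u) / math.sqrt(var_u) if var_u > 0 else 0.0
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return min(u1, u2), p

# Invented residence times in seconds (NOT data from the study).
caregiver_side = [4.0, 0.0, 7.5, 2.0, 0.0, 5.5]
stranger_side = [3.0, 0.0, 1.5, 2.0, 0.0, 6.0]
u_stat, p_value = mann_whitney_u(caregiver_side, stranger_side)
print(f"U = {u_stat}, p = {p_value:.3f}")
```

Because the test operates on ranks, many zero residence times (dogs that never entered an area) produce large groups of ties, which is why the tie-corrected variance is used here.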

In Experiment 3a, we simultaneously presented the faces of the caregiver and the stranger. We found no significant effects, but, on average, the dogs not only tended to spend more time on the caregiver’s side of the test arena than on the stranger’s side, but also spent a longer residence time close to, more time touching (Area of Interest/AoI 3, see Fig. 3) and more time looking at the screen showing the caregiver than the screen showing the stranger (for descriptive statistics, see Supplementary Table S7). Of note, when considering the first choices (entering AoI 2, see Fig. S4), we found that slightly more dogs approached the stranger’s face than the caregiver’s face (see Supplementary Table S7). However, in 31 trials (out of N = 83) dogs did not enter AoI 2 at all.

Figure 3. The dogs’ average residence time (in seconds) in the Area of Interest 3 in Experiments 3a and 3b. The 95% confidence intervals are indicated by the vertical black lines. The mean residence time for the caregiver is depicted in blue, for the stranger in dark orange, and for the familiar person in green bars.

In Experiment 3b, in which we presented the dogs with the caregiver and the familiar person, no significant effects emerged either (Supplementary Table S8). In general, dogs were not very motivated to explore the presented faces. In 51 trials (out of N = 83) dogs did not even enter the area at close distance to the screen (AoI 2). Still, the dogs showed, on average, a longer residence time in AoI 1 (arena half) and AoI 3 (close to screen; see Fig. 3) on the caregiver’s side than on the familiar person’s side (AoI 1). Additionally, slightly more dogs went first towards the caregiver’s face than to the familiar person’s face (AoI 2, first choice; see Supplementary Table S8).

Discussion

In this multi-method approach to investigate the neuro-cognitive basis of the dog–human relationship we analysed the neural, visual and behavioural responses of pet dogs to dynamic images of human faces. We hypothesized that pet dogs would exhibit considerable differences in all three types of responses to seeing the face of a familiar and an unfamiliar human. In addition, on the basis of solid behavioural evidence for a strong, attachment-like bond to their human caregiver, we hypothesized that the dogs would also show a difference between their human caregiver and another familiar, but not attached person, i.e. a preference for, or more intense (neural, behavioural) responses to, the caregiver. Finally, we investigated whether the dogs’ perception of various humans might differ depending on their displayed emotional facial expression. Combining emotions and attachment as experimental factors was intended to explore whether the attachment system for caregivers was activated regardless of their emotional display, or whether positive and negative displays would result in differential activation. Overall, both main hypotheses could be confirmed, although some details of the results, especially regarding the facial expressions, are more difficult to explain.

As expected, the visual presentation of the human caregiver in Experiment 1 led to increased activation in areas associated with emotion and attachment processing (e.g. caregiver > stranger: bilateral insula, rostral dorsal cingulate gyrus, and happy: caregiver > familiar: amygdala30,63), and brain regions sensitive to reward processing (e.g. happy: caregiver > happy stranger: caudate nucleus48,67,68,69,70). This is in line with another dog fMRI study71, where the dogs were presented with different scents of themselves, a familiar (not the caregiver or handler) and an unfamiliar human and a familiar and an unfamiliar dog during the scans. The authors found that the olfactory bulb/peduncle of the dogs was similarly activated by all scents but the caudate nucleus was maximally activated in the familiar human condition. Therefore, it was suggested that the dogs were able to distinguish between the different scents and had a positive association with the one of the familiar human. These findings support our results of caudate nucleus activation when perceiving the human caregiver (for both happy and angry faces; happy: caregiver > stranger, angry: caregiver > familiar, angry: caregiver > stranger) which demonstrates the dogs’ capabilities to identify humans olfactorily and visually and distinguish between them according to their roles in the dogs’ lives. In addition, we observed increased activation in motor regions (e.g. postcruciate gyrus72,73) and further temporal regions (e.g. the rostral suprasylvian and parahippocampal gyrus). Regardless of emotional display, both the hippocampal and rostral cingulate gyrus showed increased activation when the dogs saw their primary caregiver in comparison to both the familiar person and stranger (caregiver > stranger, caregiver > familiar). The rostral cingulate gyrus has been hypothesized to play a crucial role for mammalian mother-infant attachment behaviour along with the thalamus (e.g.77).
In non-human animals, lesions of the rostral and caudal cingulate gyrus result in impairment of maternal behaviour, e.g. in mice74,75 and rats76, and diminished separation cries in squirrel monkeys77. In human mothers, watching their child in a stressful situation31 or listening to their infant crying78 also evoked increased activation in the anterior cingulate cortex among other regions. Further, the rostral cingulate and hippocampal regions along with the bilateral insula and reward regions have also been reported as neural correlates of love79, but romantic, not maternal, love evoked activation in hippocampal regions80. The involvement of the parahippocampal gyrus might indicate increased arousal due to memories of the primary caregiver evoked by the presented stimuli81 or, relatedly, increased attention82. In the behavioural preference test, we also found slightly more dogs spending a longer residence time on the caregiver’s side of the arena, further suggesting a potential increase in attention. But note that increased parahippocampal activation has also been observed in mothers in response to unfamiliar babies compared to their own ones31. In contrast, visual presentation of the stranger mainly resulted in increased activation in motor72,73 and higher-order visual processing areas (i.e. right medial ectosylvian gyrus56,59,61,83). These results might indicate increased motor inhibition72,84 and visual attention associated with the salience of a novel and ambiguous (potentially threatening or rewarding) agent. In line with this, we found a shorter latency to look at the stranger in the eye-tracking task (Experiment 2a, caregiver vs. stranger), which supports the possible explanation of a higher attention towards the stranger due to novelty effects37,85. Of note, for our fMRI study the dogs were trained to stay motionless in the scanner.
However, when exposed to a salient stimulus such as a strange person, but also the primary caregiver (caregiver > stranger: precruciate gyrus, premotor cortex), it could have been more demanding for the dogs to stay motionless than when viewing a familiar person, resulting in increased motor inhibition (reflected by corresponding differences in motor activation). Lastly, visual presentation of the familiar person elicited no significant difference in comparison to the stranger and, as expected, we did not find any significant activation changes in brain regions associated with attachment processing in humans in comparison to the caregiver for both the stranger and familiar person (caregiver < familiar/stranger).

Concerning the emotional facial expressions, we found only the caregiver’s face, in contrast to all other presented humans, eliciting similar activation regardless of showing a positive or negative emotion. The display of happy emotional expressions led to activation changes in the caudate nucleus, a brain region previously associated with reward processing (e.g.86), and the perception of human faces in dogs57,58. Other than that, we observed the same pattern as described above with the happy caregiver eliciting activation in limbic regions (i.e. happy caregiver > familiar: bilateral hippocampus, amygdala; happy caregiver > stranger: R insula, rostral cingulate gyrus) among other regions, i.e. visual cortices (happy caregiver > familiar: R occipital gyrus; happy caregiver > stranger: splenial gyrus). In contrast, the happy stranger mainly resulted in increased activation in motor (happy stranger > familiar: R postcruciate gyrus) and visual processing areas (i.e. happy stranger > familiar: R splenial gyrus), but also in other (happy stranger > familiar: R amygdala) or similar limbic regions (i.e. R dorsal and rostral cingulate gyrus). However, in comparison to the happy familiar person, visual presentation of the happy stranger additionally resulted in increased activations in limbic regions. Again, the familiar person did not lead to increased activation in regions associated with attachment processing compared to the caregiver (happy: caregiver < familiar).

Regarding negative emotional facial expressions, presenting the angry caregiver surprisingly led to increased activation in brain regions associated with reward processing. This might indicate that the attachment figure is positively perceived no matter what emotion he or she shows, and the resulting activation could potentially be related to mechanisms such as (increased) approach motivation87. In line with our findings, studies with human mothers also reported increased caudate nucleus activation in response to a negative emotional display of their own children (e.g.31); thus, the negative display might elicit an even stronger attachment response. Overall, the angry stranger elicited the strongest activation (highest number of activated clusters), again mainly in motor and visual processing areas, potentially reflecting the further increased salience due to a combination of novelty and a threatening emotional display; however, we did not find increased activation in limbic structures (including the amygdala), as was the case for the angry caregiver. Nevertheless, we did observe increased activation in the L insula and parahippocampal gyrus as well as a visual region (L marginal gyrus). This finding is in line with the unexpected caudate nucleus activation for the angry caregiver, again indicating that solely the primary attachment figure does not elicit a threatening response.

By exploiting the eye-tracking method (Experiment 2) we sought to determine the individual looking patterns of the dogs while they perceived different human faces and facial expressions, especially whether the dogs show specific preferences for the caregivers’ faces. Interestingly, when we confronted the dogs with the simultaneous presentation of the caregiver and the stranger, they showed a quicker first fixation of the stranger’s face. Although this might be seen as contradicting a caregiver’s preference, it could be well explained by novelty effects (e.g.85,88,89) or surprise (in human children90). As dogs appear to be generally attracted to novel objects in comparison to familiar ones, it is reasonable to assume that seeing the face of an unfamiliar person elicits a first attentional capture, and that this happens irrespective of the displayed emotion. This makes sense from an evolutionary perspective, because it is necessary to rapidly recognize a potential threat, such as a stranger (in chimpanzees91, humans92). However, this effect seems to be fragile, as it did not survive in terms of longer looking times or pupil size changes. In contrast, a looking time preference was found when we presented the dogs with the faces of their caregiver and a familiar person. Dogs looked longer at their caregiver, but only if her/ his face was presented on the left side of the screen. The fact that the dogs had a general preference for looking on the left side can be explained by a left gaze bias, as was repeatedly found in previous studies42,46,93. The left gaze bias we found likely interacted with the caregiver side bias, with the latter being amplified when the caregiver’s face was shown on the left side and being weakened or even extinguished when shown on the right side of the screen. 
Interestingly, the laterality we found in Experiment 2a was not confirmed in the fMRI or behavioural preference tests, but we cannot tell whether this is due to a higher sensitivity to detect such effects in the eye-tracking experiment, or whether it is a false positive. Note that in another recent comparative dog and human fMRI study investigating species- and face-sensitivity, the authors also did not find any lateralization effects in the dogs, in contrast to the human participants60.

Concerning the facial expressions, the angry faces, but not the happy ones, of both humans increased the dogs’ pupil size, which is well supported by the literature. Not only does pupil size provide information about mental activity and attention (see94 for review), but size changes during stimulus perception also reflect emotional arousal related to increased sympathetic activity95,96. Only recently, dogs were found to have larger pupils when looking at angry faces than when looking at happy faces97. In addition to eliciting emotional arousal, threat- and fear-related stimuli are detected faster (e.g.98) and are also more distracting than positive and neutral stimuli (e.g.99,100), likely due to the immediate relevance of such stimuli to survival throughout evolutionary history.

Although these measurements reflect the dogs’ interest in the different stimuli, we do not know how they interpret them emotionally, i.e. whether the interest is caused by affiliative motivations. We do not even know if the dogs see the stimuli as representations of human faces. Although dogs are capable of recognizing their human caregiver’s face from photographs101, and not only discriminate between positive and negative facial expressions of humans but also react appropriately to the valence of the faces41,42,43,44,45,46,102, we found no such emotion effect in the dogs when looking at the caregiver and the stranger side by side.

To answer the question of how pet dogs behave when exposed to the stimuli in an unrestrained and more natural setting than fMRI and eye-tracking can offer, we conducted a behavioural preference test (Experiment 3). Several experiments have shown that such a test facilitates the assessment of how dogs react spontaneously to human face stimuli by approaching, avoiding, or ignoring them37,103,104,105,106. In contrast to Experiments 1 and 2, the dogs could move freely within a test arena that was equipped with two computer screens showing the different faces simultaneously but 135 cm apart from each other (in the left and right half of the arena, respectively). When confronted with the caregiver’s and the stranger’s face at the same time (Experiment 3a), we expected that they would approach the caregiver and avoid the stranger. We found a tendency, although not significant, in support of this expectation. On average, the dogs spent slightly more time on the caregiver’s side of the arena than on the stranger’s side and, more importantly, spent more time closer to the caregiver’s face and more time looking at it, and touched it more frequently and longer than the screen showing the stranger. This trend would be consistent with previous studies comparing the approach/avoidance behaviour of dogs24,37,38,39,107. However, due to the non-significant results, we cannot derive strong conclusions here. The same is true for the other trend, concerning the first choices, where a few more dogs went towards the stranger’s screen first than to the caregiver’s screen. An explanation could be offered in terms of neophilia and novelty effects85,88,101. Dogs might have explored the side with the unknown human first, but after this initial exploration they might have decided to stay closer to their caregiver’s face.
In general, the small tendencies, also with regard to the comparison between the caregivers’ and the familiar persons’ faces, may indicate that the dogs lost interest as soon as they had made first contact with the computer screens. The artificial, empty and therefore perhaps frightening testing arena might also have contributed to these weak effects.

The limited number of trials within the different tasks constitutes a further limitation of the study. Initially, we planned to run the same behavioural preference test to investigate the effect of the different emotions, i.e. displaying the same human stimuli with different emotions, but during Experiment 3 we found that the dogs quickly became bored in the test arena, and some refused to enter it in Experiment 3b. Therefore, we decided to cancel the testing of the effects of facial emotions. By conducting only a few test trials (4 trials per experiment, including stimulus repetition to counterbalance the sides), we tried to avoid strong habituation effects. From previous eye-tracking studies42,108 in our lab we know that such habituation effects are also relevant for this kind of experiment. During the fMRI scans we tried to balance the number of necessary stimulus repetitions against the exhausting duration of the scans for the dogs lying motionless in the scanner (two runs of ca. 4.5 min plus an additional minute for preparations (i.e. head localizer scan)). While we consider the number of repetitions per run (30 per emotion, 20 per attachment figure) sufficient, we would recommend that future studies employ a block design to further increase power and use multiple stimuli per category to prevent potential habituation.

Taken together, the results of the three experiments provide suggestive evidence for attachment-like neural, visual and behavioural responses to the face of the human caregiver. Our results should be treated as preliminary, though, because, given the exploratory nature of our study, we chose statistical correction procedures that did not provide strict control of type I errors but rather aimed at a lower type II error rate. For the interpretation of our results, we focused on the clusters in line with findings from previous attachment and emotion processing research and did not discuss all unexpected findings, to prevent over-interpretation and speculation. Nevertheless, our aim was to provide other researchers with a comprehensive overview of all clusters observed in Experiment 1 to allow future studies to probe these results, enabling region of interest analyses based on the coordinates reported in our study and facilitating meta-analyses by providing the unthresholded t-maps on OSF. Thus, future studies should investigate the reproducibility of our results, and potentially expand them to other sensory stimuli (i.e. auditory cues) as well. While the face of a stranger mainly elicited brain activation in motor and higher-order visual processing areas and the face of a familiar person only very weak activations in comparison to both the stranger’s and the caregiver’s face, the presentation of the human caregiver’s face activated areas associated with emotion and attachment processing. Although the face of a stranger was most attractive at first glance, probably due to novelty effects, a clear preference for the caregiver’s face over the familiar person’s face supported the pattern of brain activations revealed by the fMRI experiment. Finally, the majority of results from the behavioural test showed a larger mean (even though the difference was not significant) for the dogs’ preference for being close to their caregiver.
Still, these findings cannot per se be seen as proof of an attachment relationship, because all our test subjects have lived together with the caregiver for years (see Supplementary Table S1a), which very likely resulted in a positive relationship due to learned associations with rewarding outcomes106.

Attachment is defined as an affectional bond with the added experience of security and comfort obtained from the relationship109. The attachment system is an innate psychobiological system individuals are born with, not just the result of a collection of rewarding experiences6. Like the child–parent attachment, the dog–caregiver relationship is not symmetrical, i.e. the attached individual is less cognitively developed and benefits from being attached to a more cognitively sophisticated individual (the mother, the dog’s caregiver), who plays the caregiving role in their relationship110.

The activation pattern we found in this study is not specific enough to make a distinction between true attachment and other affectional bonds. In humans, many researchers have investigated the behavioural development of an infant’s ability to recognize faces in relation to infant-mother attachment111,112,113. However, only a limited number of studies have revealed neural correlates of the mother’s face recognition in infants114.

In conclusion, this study provides a first attempt to combine three sophisticated methods to improve the understanding of the dog–human relationship. Although each method and experimental setup has its limitations, our converging findings are very promising and set the stage for similar future work. Nevertheless, a great deal remains to be learned about the neurophysiological mechanisms of attachment-like affiliative behaviours in dogs.

Methods

Subjects

All subjects were privately owned pet dogs (for details see Supplementary Table S1). The sample of subjects used for the behavioural preference test (Experiment 3) consisted of 24 dogs. Twenty of those had been used for the fMRI task (Experiment 1), and 15 for the eye-tracking test (Experiment 2).

Dog–human relationship

To evaluate the intensity and probable quality of the dog–human relationship, we conducted a caregivers’ survey (N = 15; 14 females, 1 male) to assess the dogs’ age at the time of adoption and how many hours per day the caregiver and the familiar person (N = 15; 6 females, 9 males) on average actively spent with the dog during the week and on the weekends (see Supplementary Table S1a).

Ethical statement

All reported experimental procedures were reviewed and approved by the institutional ethics and animal welfare committee in accordance with the GSP guidelines and national legislation (ETK-21/06/2018, ETK-31/02/2019, ETK-117/07/2019) based on a pilot study at the University of Vienna (ETK-19/03/2016-2, ETK-06/06/2017). The dogs’ human caregivers gave written consent to participate in the studies before the tests were conducted. Additionally, informed consent was given for the publication of identifying images (see Fig. 4, S2; Supplementary Movie S1, S2) in an online open-access publication.

Stimuli

We created short (3 s) videos showing human faces that are changing emotional facial expressions (see Fig. 4), transforming (morphing) from neutral to either happy or angry expression (see Movie S1, S2). The face pictures were taken from the human caregiver of each dog, a familiar person, and a stranger (for details, see Supplementary Material).

Experiment 1: fMRI task

Before the experiment, dogs had received extensive training by a professional dog trainer to habituate to the scanner environment (sounds, moving bed, ear plugs etc.; see108). For data acquisition, awake and unrestrained dogs lay down in prone position on the scanner bed with the head inside the coil, but could leave the scanner at any time using a custom-made ramp. The dog trainer stayed inside the scanner room throughout the entire test trial (run), outside of the dog’s visual field. Data acquisition was aborted if the dog moved extensively or left the coil. After the scan session, the realignment parameters were inspected. If overall movement exceeded 3 mm, the run was repeated in the next test session. To additionally account for head motion, we calculated the scan-to-scan motion for each dog, referring to the framewise displacement (FD) between the current scan t and its preceding scan t-1. For each scan exceeding the FD threshold of 0.5 mm, we entered an additional motion regressor to the first-level GLM design matrix115,116. On average, 3.3% (run 1) and 9.8% (run 2) of the scans were removed (run 1: ~9/270 scans; run 2: ~26/270 scans). If more than 50% of the scans exceeded the threshold, the entire run was excluded from further analyses. This was the case for one run (56%/151 scans). We truncated a run for one dog to 190 scans due to excessive motion because the dog was not available for another scan session.
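The motion-censoring logic described above can be illustrated with a minimal sketch. It is not the authors' pipeline: the FD formula used here (sum of absolute scan-to-scan differences of the six realignment parameters, with rotations converted to millimetres on a sphere) is one common definition and is an assumption, as are the hypothetical `HEAD_RADIUS_MM` value and all function names. Only the 0.5 mm threshold and the 50% exclusion rule come from the text.

```python
FD_THRESHOLD_MM = 0.5        # FD threshold stated in the text
MAX_FLAGGED_FRACTION = 0.5   # a run is excluded if more than 50% of scans exceed it
HEAD_RADIUS_MM = 30.0        # ASSUMPTION: hypothetical radius for converting rotations to mm


def framewise_displacement(params, radius_mm=HEAD_RADIUS_MM):
    """Return one FD value per scan.

    params: sequence of (tx, ty, tz, rx, ry, rz) per scan, with translations
    in mm and rotations in radians. The first scan has no predecessor, so its
    FD is set to 0.
    """
    fd = [0.0]
    for prev, cur in zip(params, params[1:]):
        translation = sum(abs(c - p) for c, p in zip(cur[:3], prev[:3]))
        rotation = sum(abs(c - p) * radius_mm for c, p in zip(cur[3:], prev[3:]))
        fd.append(translation + rotation)
    return fd


def scrubbing_regressors(fd, threshold=FD_THRESHOLD_MM):
    """Return flagged scan indices and one indicator column per flagged scan."""
    flagged = [i for i, value in enumerate(fd) if value > threshold]
    n_scans = len(fd)
    columns = [[1 if i == j else 0 for i in range(n_scans)] for j in flagged]
    return flagged, columns


def exclude_run(fd, threshold=FD_THRESHOLD_MM, max_fraction=MAX_FLAGGED_FRACTION):
    """True if the share of scans above the FD threshold exceeds max_fraction."""
    n_flagged = sum(1 for value in fd if value > threshold)
    return n_flagged / len(fd) > max_fraction


# Toy realignment parameters for four scans (made-up values for illustration).
toy_params = [
    (0.0, 0.0, 0.0, 0.0, 0.0, 0.0),
    (0.1, 0.0, 0.0, 0.0, 0.0, 0.0),
    (0.9, 0.0, 0.0, 0.0, 0.0, 0.0),   # 0.8 mm jump relative to the previous scan
    (0.9, 0.0, 0.0, 0.01, 0.0, 0.0),
]
toy_fd = framewise_displacement(toy_params)
toy_flagged, toy_columns = scrubbing_regressors(toy_fd)
print(toy_flagged)          # [2]
print(exclude_run(toy_fd))  # False
```

Each indicator column would be appended to the first-level design matrix so that the flagged scan does not contribute to the estimates of the task regressors.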

The task alternated between the morph videos (500 × 500 pixels) and a black fixation cross in the centre of the screen that served as visual baseline (3–7 s jitter, mean = 5 s; white background); each run started and ended with 10 s of visual baseline. The presentation order of the morph videos was randomized, but the same human model × emotion combination (e.g., angry stranger) was never directly repeated. The task was split into two runs with a duration of 4.5 min (270 volumes) each, with a short break in-between if dogs completed both runs within one session. One run contained 60 trials (30 per emotion; 20 trials per human model). Scanning was conducted with a 3 T Siemens Skyra MR-system using a 15-channel human knee-coil. Functional volumes were acquired using an echo planar imaging (EPI) sequence (multiband factor: 2) and obtained from 24 axial slices in descending order, covering the whole brain (interleaved acquisition), with a voxel size of 1.5 × 1.5 × 2 mm3 and a 20% slice gap (TR/TE = 1000 ms/38 ms, field of view = 144 × 144 × 58 mm3). An MR-compatible screen (32 inch) at the end of the scanner bore was used for stimulus presentation. An eye-tracking camera (EyeLink 1000 Plus, SR Research, Ontario, Canada) was used to monitor movements of the dogs during scanning. The structural image was acquired in a prior scan session with a voxel size of 0.7 mm isotropic (TR/TE = 2100/3.13 ms, field of view = 230 × 230 × 165 mm3). Data analysis and statistical tests are described in the Supplementary Material.
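The constrained randomization of the trial order can be sketched as follows. This is an illustration under stated assumptions rather than the presentation script actually used: the equal split of 10 repetitions per human model × emotion combination is inferred from the reported totals (60 trials, 30 per emotion, 20 per model), the uniform distribution of the 3–7 s jitter is an assumption, and the function names are hypothetical.

```python
import random

MODELS = ("caregiver", "familiar person", "stranger")
EMOTIONS = ("happy", "angry")
COMBINATIONS = [(model, emotion) for model in MODELS for emotion in EMOTIONS]
REPS_PER_COMBINATION = 10  # ASSUMPTION: 6 combinations x 10 = 60 trials per run


def make_order(rng):
    """Draw a trial order in which no combination is directly repeated.

    Trials are drawn one at a time from the combinations that still have
    repetitions left and differ from the previous trial. If the draw runs
    into a dead end, the whole order is redrawn. The resulting orders are
    random but not uniformly distributed over all valid orders.
    """
    total = len(COMBINATIONS) * REPS_PER_COMBINATION
    while True:
        remaining = {combo: REPS_PER_COMBINATION for combo in COMBINATIONS}
        order = []
        while len(order) < total:
            choices = [
                combo for combo, left in remaining.items()
                if left > 0 and (not order or combo != order[-1])
            ]
            if not choices:
                break  # dead end: only the previous combination is left
            weights = [remaining[combo] for combo in choices]
            pick = rng.choices(choices, weights=weights)[0]
            remaining[pick] -= 1
            order.append(pick)
        if len(order) == total:
            return order


def make_jitter(rng, n_trials, low=3.0, high=7.0):
    """Baseline durations in seconds; the uniform distribution is an ASSUMPTION."""
    return [rng.uniform(low, high) for _ in range(n_trials)]


rng = random.Random(0)
order = make_order(rng)
jitter = make_jitter(rng, len(order))
print(order[:3])
```

Drawing sequentially and restarting on a dead end avoids the many reshuffles that plain shuffle-and-reject would need for 60 trials.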

Experiment 2: Eye-tracking task

The eye-tracking task consisted of two tests (Experiment 2a and b) of four trials each, with at least seven days between them. In each trial the morph video of the human caregiver was presented together with either a stranger (Experiment 2a) or a familiar person (Experiment 2b). Both videos were shown with the same, either happy (two trials) or angry (two trials), facial expression. The location (left, right) of the caregiver as well as the emotion (happy, angry) was counterbalanced across the four trials of each test. The dogs went through a three-point calibration procedure first and then received two test trials in a row. At the beginning of each trial the dog was required to look at a blinking white trigger point (diameter: 7.5 cm) in the centre of the screen to start the 15-s (5 × 3 s) stimulus presentation. After a 5–10 min break, this sequence was repeated once. The dogs were rewarded with food rewards at the end of each two-trial block. Data analysis and statistical tests are described in the Supplementary Material.
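As a rough illustration of the counterbalancing described above, the four trials of one test can be generated by fully crossing caregiver location and emotion and then splitting them into two blocks of two trials. The random ordering of the four trials and the function name are assumptions; the text does not state how the trial order was determined.

```python
import random
from itertools import product

SIDES = ("left", "right")      # screen side showing the caregiver
EMOTIONS = ("happy", "angry")  # both faces show the same emotion within a trial


def counterbalanced_test(rng):
    """Return two blocks of two trials covering every side x emotion pairing once."""
    trials = [
        {"caregiver_side": side, "emotion": emotion}
        for side, emotion in product(SIDES, EMOTIONS)
    ]
    rng.shuffle(trials)  # ASSUMPTION: trial order is randomized
    return trials[:2], trials[2:]


block_1, block_2 = counterbalanced_test(random.Random(0))
print(block_1)
print(block_2)
```

With this crossing, the caregiver appears twice on each side and each emotion is shown twice, matching the counts given in the text.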

Experiment 3: Behavioural preference task

The behavioural preference tests measured the dogs’ movement patterns inside a rectangular arena while they faced two videos that were presented simultaneously on two computer screens. The screens were placed opposite to the arena entrance, at a distance of 165 cm on the floor, 135 cm apart from each other (for more details, see Supplementary Material). The dog entered the arena centrally through a tunnel with a trap door and could then move freely for the whole duration of stimulus presentation (10 × 3 s, continuous loop). As in Experiment 2, the experiment consisted of two tests (Experiment 3a and b) of four trials each, with 1-min breaks between trials and at least seven days between the two experiments. The morph videos were shown in the exact same order and on the same sides (left, right) as in Experiment 2. After each trial, the experimenter called the dog back and went to the corridor outside the test room until the onset of the next trial. The dog was rewarded with a few pieces of dry food at the end of each experiment.

First, we manually cut out the period of stimuli presentation (30 s test trial) from the experiment recordings and then analysed the obtained videos with K9-Blyzer, a software tool which automatically tracked the dog and detected its body parts to analyse the potential behavioural preferences of the dogs towards the different displayed stimuli. Based on the dogs’ body part tracking data (head location, tail and centre of mass in each frame), the system was configured to produce measurements of specified parameters (areas of interest, dogs’ field of view, dog-screen distance) related to the dogs’ stimuli preference. We specified six parameters related to the right and left side/ screen preference (mapped to caregiver, stranger, familiar person), which are described in Supplementary Table S2. The details of the data analysis and statistical values are also provided in the Supplementary Material section.
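To make the kind of side-preference measure concrete, the sketch below derives two simple quantities from per-frame position data. It does not reproduce K9-Blyzer or the six parameters of Supplementary Table S2. The coordinate frame (x in cm from the arena midline, y in cm from the screen wall), the screen positions derived from the 135 cm separation, the function name and the toy track are all assumptions made for illustration.

```python
import math

SCREEN_SEPARATION_CM = 135.0                       # distance between screens (from the text)
LEFT_SCREEN = (-SCREEN_SEPARATION_CM / 2.0, 0.0)   # ASSUMPTION: screens symmetric about midline
RIGHT_SCREEN = (SCREEN_SEPARATION_CM / 2.0, 0.0)


def side_preference(track):
    """Summarize a track of (x, y) positions, one per video frame.

    Returns the fraction of frames spent in each half of the arena and the
    mean distance to each screen.
    """
    n_frames = len(track)
    left = sum(1 for x, _ in track if x < 0)
    right = sum(1 for x, _ in track if x > 0)

    def mean_distance(screen):
        return sum(math.hypot(x - screen[0], y - screen[1]) for x, y in track) / n_frames

    return {
        "left_fraction": left / n_frames,
        "right_fraction": right / n_frames,
        "mean_dist_left_cm": mean_distance(LEFT_SCREEN),
        "mean_dist_right_cm": mean_distance(RIGHT_SCREEN),
    }


# Toy track of four frames (made-up positions, not study data).
toy_track = [(-50.0, 100.0), (-67.5, 50.0), (20.0, 100.0), (-10.0, 150.0)]
summary = side_preference(toy_track)
print(summary["left_fraction"])  # 0.75
```

Mapping the left and right values to caregiver, stranger or familiar person would then depend on which screen showed which face in the given trial.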

Data availability

Supplementary results of Experiments 2 and 3 are included in the Supplementary Material file of this article. Additionally, unthresholded statistical maps from Experiment 1 have been uploaded to OSF.io and are available at osf.io/kagy3.

References

Archer, J. Why do people love their pets?. Evol. Hum. Behav. 18, 237–259 (1997).

Palmer, R. & Custance, D. A counterbalanced version of Ainsworth’s strange situation procedure reveals secure-base effects in dog–human relationships. Appl. Anim. Behav. Sci. 109, 306–319 (2008).

Prato-Previde, E., Custance, D. M., Spiezio, C. & Sabatini, F. Is the dog–human relationship an attachment bond? An observational study using Ainsworth’s strange situation. Behaviour 140, 225–254 (2003).

Topál, J., Miklósi, Á., Csányi, V. & Dóka, A. Attachment behavior in dogs. A new application of Ainsworth’s strange situation test. J. Comp. Psychol. 112, 219–229 (1998).

Bowlby, J. The nature of the child’s tie to his mother. Int. J. Psychoanal. 39, 350–373 (1958).

Bowlby, J. Attachment and Loss, Volume I: Attachment. 1, (Basic Books, New York, 1969).

Prato-Previde, E. & Valsecchi, P. The Immaterial Cord: The Dog-Human Attachment Bond. The Social Dog: Behavior and Cognition (Elsevier, Amsterdam, 2014). https://doi.org/10.1016/B978-0-12-407818-5.00006-1.

Julius, H., Beetz, A., Kotrschal, K., Uvnäs-Moberg, K. & Turner, D. Attachment to Pets: An Integrative View of Human-Animal Relationships with Implications for Therapeutic Practice (Hogrefe Publishing, Oxford, 2013).

Cassidy, J. The nature of the child’s ties. In Handbook of Attachment. Theory Research and Clinical Applications 3–24 (The Guilford Press, New York, 1999).

Ainsworth, M. D. S. & Wittig, B. A. Attachment and exploratory behavior of one-year-olds in a strange situation. In Determinants of Infant Behavior Vol. 4 (ed. Foss, B. M.) 113–136 (1969).

Ainsworth, M. D. S. & Bell, S. M. Attachment, exploration, and separation: Illustrated by the behavior of one-year-olds in a strange situation. Child Dev. 41, 49–67 (1970).

Main, M. & Solomon, J. Procedures for identifying infants as disorganized/disoriented during the Ainsworth Strange Situation. In Attachment in the Preschool Years: Theory, Research, and Intervention 121–160 (The University of Chicago Press, Chicago, 1990).

Nagasawa, M., Mogi, K. & Kikusui, T. Attachment between humans and dogs. Jpn. Psychol. Res. 51, 209–221 (2009).

Payne, E., DeAraugo, J., Bennett, P. & McGreevy, P. Exploring the existence and potential underpinnings of dog-human and horse-human attachment bonds. Behav. Processes 125, 114–121 (2016).

Serpell, J. A. Evidence for an association between pet behavior and owner attachment levels. Appl. Anim. Behav. Sci. 47, 49–60 (1996).

Topál, J. et al. Attachment to humans: A comparative study on hand-reared wolves and differently socialized dog puppies. Anim. Behav. 70, 1367–1375 (2005).

Topál, J., Miklósi, Á., Csányi, V. & Dóka, A. Attachment behavior in dogs (Canis familiaris): A new application of Ainsworth’s (1969) Strange Situation Test. J. Comp. Psychol. 112, 219–229 (1998).

Gácsi, M., Topál, J., Miklósi, Á., Dóka, A. & Csányi, V. Attachment behavior of adult dogs (Canis familiaris) living at rescue centers: Forming new bonds. J. Comp. Psychol. 115, 423–431 (2001).

Gácsi, M., Maros, K., Sernkvist, S. & Miklósi, Á. Does the owner provide a secure base? Behavioral and heart rate response to a threatening stranger and to separation in dogs. J. Vet. Behav. 4, 90–91 (2009).

Gácsi, M., Maros, K., Sernkvist, S., Faragó, T. & Miklósi, Á. Human analogue safe haven effect of the owner: Behavioural and heart rate response to stressful social stimuli in dogs. PLoS ONE 8, 2 (2013).

Mariti, C., Ricci, E., Zilocchi, M. & Gazzano, A. Owners as a secure base for their dogs. Behaviour 150, 1275–1294 (2013).

Palestrini, C., Prato-Previde, E., Spiezio, C. & Verga, M. Heart rate and behavioural responses of dogs in the Ainsworth’s strange situation: A pilot study. Appl. Anim. Behav. Sci. 94, 75–88 (2005).

Marinelli, L., Adamelli, S., Normando, S. & Bono, G. Quality of life of the pet dog: Influence of owner and dog’s characteristics. Appl. Anim. Behav. Sci. 108, 143–156 (2007).

Mongillo, P., Bono, G., Regolin, L. & Marinelli, L. Selective attention to humans in companion dogs, Canis familiaris. Anim. Behav. 80, 1057–1063 (2010).

Horn, L., Huber, L. & Range, F. The importance of the secure base effect for domestic dogs—Evidence from a manipulative problem-solving task. PLoS ONE 8, 2 (2013).

Horn, L., Range, F. & Huber, L. Dogs’ attention towards humans depends on their relationship, not only on social familiarity. Anim. Cogn. 16, 435–443 (2013).

Goodson, J. L. The vertebrate social behavior network: Evolutionary themes and variations. Horm. Behav. 48, 11–22 (2005).

Nitschke, J. B. et al. Orbitofrontal cortex tracks positive mood in mothers viewing pictures of their newborn infants. Neuroimage 21, 583–592 (2004).

Atzil, S., Hendler, T. & Feldman, R. Specifying the neurobiological basis of human attachment: Brain, hormones, and behavior in synchronous and intrusive mothers. Neuropsychopharmacology 36, 2603–2615 (2011).

Stoeckel, L. E., Palley, L. S., Gollub, R. L., Niemi, S. M. & Evins, A. E. Patterns of brain activation when mothers view their own child and dog: An fMRI study. PLoS ONE 9, 2 (2014).

Noriuchi, M., Kikuchi, Y. & Senoo, A. The functional neuroanatomy of maternal love: Mother’s response to infant’s attachment behaviors. Biol. Psychiatry 63, 415–423 (2008).

DeWall, C. N. et al. Do neural responses to rejection depend on attachment style? An fMRI study. Soc. Cogn. Affect. Neurosci. 7, 184–192 (2012).

Eisenberger, N. I., Lieberman, M. D. & Williams, K. D. Does rejection hurt? An fMRI study of social exclusion. Science 302, 290–292 (2003).

Strathearn, L., Fonagy, P., Amico, J. & Montague, R. Adult attachment predicts maternal brain and oxytocin response to infant cues. Neuropsychopharmacology 34, 2655–2666 (2009).

Gácsi, M., Miklósi, Á., Varga, O., Topál, J. & Csányi, V. Are readers of our face readers of our minds? Dogs (Canis familiaris) show situation-dependent recognition of human’s attention. Anim. Cogn. 7, 144–153 (2004).

Schwab, C. & Huber, L. Obey or not obey? Dogs (Canis familiaris) behave differently in response to attentional states of their owners. J. Comp. Psychol. 120, 169–175 (2006).

Huber, L., Racca, A., Scaf, B., Virányi, Z. & Range, F. Discrimination of familiar human faces in dogs (Canis familiaris). Learn. Motiv. 44, 258–269 (2013).

Mongillo, P., Scandurra, A., Kramer, R. S. S. & Marinelli, L. Recognition of human faces by dogs (Canis familiaris) requires visibility of head contour. Anim. Cogn. 20, 881–890 (2017).

Range, F., Aust, U., Steurer, M. & Huber, L. Visual categorization of natural stimuli by domestic dogs. Anim. Cogn. 11, 339–347 (2008).

Somppi, S., Törnqvist, H., Hänninen, L., Krause, C. M. & Vainio, O. How dogs scan familiar and inverted faces: An eye movement study. Anim. Cogn. 17, 793–803 (2014).

Albuquerque, N. et al. Dogs recognize dog and human emotions. Biol. Lett. 12, 20150883 (2016).

Barber, A. L. A., Randi, D., Müller, C. A. & Huber, L. The processing of human emotional faces by pet and lab dogs: Evidence for lateralization and experience effects. PLoS ONE 11, 1–22 (2016).

Morisaki, A., Takaoka, A. & Fujita, K. Are dogs sensitive to the emotional state of humans?. J. Vet. Behav. Clin. Appl. Res. 2, 49 (2009).

Müller, C. A., Schmitt, K., Barber, A. L. A. & Huber, L. Dogs can discriminate emotional expressions of human faces. Curr. Biol. 25, 601–605 (2015).

Nagasawa, M., Murai, K., Mogi, K. & Kikusui, T. Dogs can discriminate human smiling faces from blank expressions. Anim. Cogn. 14, 525–533 (2011).

Racca, A., Guo, K., Meints, K. & Mills, D. S. Reading faces: Differential lateral gaze bias in processing canine and human facial expressions in dogs and 4-year-old children. PLoS ONE 7, 1–10 (2012).

Kujala, M. Canine emotions as seen through human social cognition. Anim. Sentien. 2, 1–34 (2017).

Berns, G. S., Brooks, A. M. & Spivak, M. Functional MRI in awake unrestrained dogs. PLoS ONE 7, 2 (2012).

Tóth, L., Gácsi, M., Miklósi, Á., Bogner, P. & Repa, I. Awake dog brain magnetic resonance imaging. J. Vet. Behav. Clin. Appl. Res. 4, 50 (2009).

Berns, G. S. & Cook, P. F. Why did the dog walk into the MRI?. Curr. Dir. Psychol. Sci. 25, 363–369 (2016).

Bunford, N., Andics, A., Kis, A., Miklósi, Á. & Gácsi, M. Canis familiaris as a model for non-invasive comparative neuroscience. Trends Neurosci. 40, 438–452 (2017).

Cook, P. F., Brooks, A., Spivak, M. & Berns, G. S. Regional brain activations in awake unrestrained dogs. J. Vet. Behav. Clin. Appl. Res. 16, 104–112 (2016).

Huber, L. & Lamm, C. Understanding dog cognition by functional magnetic resonance imaging. Learn. Behav. 45, 101–102 (2017).

Thompkins, A. M., Deshpande, G., Waggoner, P. & Katz, J. S. Functional magnetic resonance imaging of the domestic dog: Research, methodology, and conceptual issues. Comp. Cogn. Behav. Rev. 11, 63–82 (2016).

Andics, A. & Miklósi, Á. Neural processes of vocal social perception: Dog–human comparative fMRI studies. Neurosci. Biobehav. Rev. 85, 54–64 (2018).

Dilks, D. D. et al. Awake fMRI reveals a specialized region in dog temporal cortex for face processing. PeerJ 3, e1115 (2015).

Cuaya, L. V., Hernández-Pérez, R. & Concha, L. Our faces in the dog’s brain: Functional imaging reveals temporal cortex activation during perception of human faces. PLoS ONE 11, 1–13 (2016).

Hernández-Pérez, R., Concha, L. & Cuaya, L. V. Decoding human emotional faces in the dog’s brain. bioRxiv https://doi.org/10.1101/134080 (2018).

Thompkins, A. M. et al. Separate brain areas for processing human and dog faces as revealed by awake fMRI in dogs (Canis familiaris). Learn. Behav. 46, 561–573 (2018).

Bunford, N. et al. Comparative brain imaging reveals analogous and divergent patterns of species- and face-sensitivity in humans and dogs. J. Neurosci. 2, 2 (2020).

Szabó, D. et al. On the face of it: No differential sensitivity to internal facial features in the dog brain. Front. Behav. Neurosci. 14, 2 (2020).

Sato, W., Kochiyama, T., Yoshikawa, S., Naito, E. & Matsumura, M. Enhanced neural activity in response to dynamic facial expressions of emotion: an fMRI study. Cogn. Brain Res. 20, 81–91 (2004).

Adolphs, R. Recognizing emotion from facial expressions: Psychological and neurological mechanisms. Behav. Cogn. Neurosci. Rev. 1, 21–62 (2002).

Cuaya, L. V., Hernández-Pérez, R. & Concha, L. Smile at me! dogs activate the temporal cortex towards smiling human faces. bioRxiv https://doi.org/10.1101/134080 (2017).

Nitzsche, B. et al. A stereotaxic breed-averaged, symmetric T2w canine brain atlas including detailed morphological and volumetrical data sets. Neuroimage 187, 93–103 (2019).

Czeibert, K., Andics, A., Petneházy, Ö. & Kubinyi, E. A detailed canine brain label map for neuroimaging analysis. Biol. Futur. 70, 112–120 (2019).

Berns, G. S., Brooks, A. M., Spivak, M. & Levy, K. Functional MRI in awake dogs predicts suitability for assistance work. Sci. Rep. 7, 43704 (2017).

Berns, G. S., Brooks, A. & Spivak, M. Replicability and heterogeneity of awake unrestrained canine fMRI responses. PLoS ONE 8, 2 (2013).

Cook, P. F., Prichard, A., Spivak, M. & Berns, G. S. Awake canine fMRI predicts dogs’ preference for praise versus food. bioRxiv https://doi.org/10.1101/062703 (2016).

Prichard, A., Chhibber, R., Athanassiades, K., Spivak, M. & Berns, G. S. Fast neural learning in dogs: A multimodal sensory fMRI study. Sci. Rep. 8, 1–9 (2018).

Berns, G. S., Brooks, A. M. & Spivak, M. Scent of the familiar: An fMRI study of canine brain responses to familiar and unfamiliar human and dog odors. Behav. Processes 110, 37–46 (2015).

Stepien, I., Stepien, L. & Konorski, J. The effects of bilateral lesions in the motor cortex on type II conditioned reflexes in dogs. Acta Biol. Exp. (Warsz) 20, 211–223 (1960).

Uemura, E. E. Fundamentals of Canine Neuroanatomy and Neurophysiology (John Wiley & Sons Inc, New York, 2015).

Slotnick, B. M. Maternal behavior of mice with cingulate cortical, amygdala, or septal lesions. J. Comp. Physiol. Psychol. 88, 118–127 (1975).

Carlson, N. R. & Thomas, G. J. Maternal behavior of mice with limbic lesions. J. Comp. Physiol. Psychol. 66, 731–737 (1968).

Slotnick, B. M. Disturbances of maternal behavior in the rat following lesions of the cingulate cortex. Behaviour 29, 204–236 (1967).

MacLean, P. D. & Newman, J. D. Role of midline frontolimbic cortex in production of the isolation call of squirrel monkeys. Brain Res. 450, 111–123 (1988).

Lorberbaum, J. P. et al. A potential role for thalamocingulate circuitry in human maternal behavior. Biol. Psychiatry 51, 431–445 (2002).

Bartels, A. & Zeki, S. The neural basis of romantic love. NeuroReport 11, 3829–3834 (2000).

Bartels, A. & Zeki, S. The neural correlates of maternal and romantic love. Neuroimage 21, 1155–1166 (2004).

Steinmetz, K. R. M. & Kensinger, E. A. The effects of valence and arousal on the neural activity leading to subsequent memory. Psychophysiology 46, 1190–1199 (2009).

Feinstein, J. S., Goldin, P. R., Stein, M. B., Brown, G. G. & Paulus, M. P. Habituation of attentional networks during emotion processing. NeuroReport 13, 1255–1258 (2002).

Boch, M. et al. Tailored haemodynamic response function increases detection power of fMRI in awake dogs (Canis familiaris). Neuroimage 224, 117414 (2020).

Picazio, S. & Koch, G. Is motor inhibition mediated by cerebello-cortical Interactions?. Cerebellum 14, 47–49 (2015).

Kaulfuß, P. & Mills, D. S. Neophilia in domestic dogs (Canis familiaris) and its implication for studies of dog cognition. Anim. Cogn. 11, 553–556 (2008).

Cook, P. F., Spivak, M. & Berns, G. S. One pair of hands is not like another: Caudate BOLD response in dogs depends on signal source and canine temperament. PeerJ 2, e596 (2014).

Delgado, M. R., Stenger, V. A. & Fiez, J. A. Motivation-dependent responses in the human caudate nucleus. Cereb. Cortex 14, 1022–1030 (2004).

Racca, A. et al. Discrimination of human and dog faces and inversion responses in domestic dogs (Canis familiaris). Anim. Cogn. 13, 525–533 (2010).

Gunderson, V. M. & Swartz, K. B. Visual recognition in infant pigtailed macaques after a 24-h delay. Am. J. Primatol. 8, 259–264 (1985).

Sim, Z. L. & Xu, F. Another look at looking time: surprise as rational statistical inference. Top. Cogn. Sci. 11, 154–163 (2019).

Kano, F. & Tomonaga, M. Species difference in the timing of gaze movement between chimpanzees and humans. Anim. Cogn. 14, 879–892 (2011).

Beaton, E. A. et al. Different neural responses to stranger and personally familiar faces in shy and bold adults. Behav. Neurosci. 122, 704–709 (2008).

Guo, K., Meints, K., Hall, C., Hall, S. & Mills, D. Left gaze bias in humans, rhesus monkeys and domestic dogs. Anim. Cogn. 12, 409–418 (2009).

Laeng, B., Sirois, S. & Gredebäck, G. Pupillometry: A window to the preconscious?. Perspect. Psychol. Sci. 7, 18–27 (2012).

Bradley, M. M., Miccoli, L., Escrig, M. A. & Lang, P. J. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 45, 602–607 (2008).

Barber, A. L. A., Müller, E. M., Randi, D., Müller, C. A. & Huber, L. Heart rate changes in pet and lab dogs as response to human facial expressions. ARC J. Anim. Vet. Sci. 3, 46–55 (2017).

Somppi, S. et al. Nasal oxytocin treatment biases dogs’ visual attention and emotional response toward positive human facial expressions. Front. Psychol. 8, 2 (2017).

Li, J., Oksama, L., Nummenmaa, L. & Hyönä, J. Angry faces are tracked more easily than neutral faces during multiple identity tracking. Cogn. Emot. 32, 464–479 (2018).

Eastwood, J. D., Smilek, D. & Merikle, P. M. Differential attentional guidance by unattended faces expressing positive and negative emotion. Percept. Psychophys. 63, 1004–1013 (2001).

Öhman, A., Flykt, A. & Esteves, F. Emotion drives attention: Detecting the snake in the grass. J. Exp. Psychol. Gen. 130, 466–478 (2001).

Eatherington, C., Mongillo, P., Looke, M. & Marinelli, L. Dogs (Canis familiaris) recognise our faces in photographs: Implications for existing and future research. Anim. Cogn. https://doi.org/10.1007/s10071-020-01382-3 (2020).

Albuquerque, N., Guo, K., Wilkinson, A., Resende, B. & Mills, D. S. Mouth-licking by dogs as a response to emotional stimuli. Behav. Processes 146, 42–45 (2018).

Custance, D. & Mayer, J. Empathic-like responding by domestic dogs (Canis familiaris) to distress in humans: An exploratory study. Anim. Cogn. 15, 851–859 (2012).

Marshall-Pescini, S., Prato-Previde, E. & Valsecchi, P. Are dogs (Canis familiaris) misled more by their owners than by strangers in a food choice task?. Anim. Cogn. 14, 137–142 (2011).

Merola, I., Prato-Previde, E. & Marshall-Pescini, S. Dogs’ social referencing towards owners and strangers. PLoS ONE 7, 2 (2012).

Merola, I., Prato-Previde, E., Lazzaroni, M. & Marshall-Pescini, S. Dogs’ comprehension of referential emotional expressions: Familiar people and familiar emotions are easier. Anim. Cogn. 17, 373–385 (2014).

Steurer, M. M., Aust, U. & Huber, L. The Vienna comparative cognition technology (VCCT): An innovative operant conditioning system for various species and experimental procedures. Behav. Res. Methods 44, 909–918 (2012).

Karl, S., Boch, M., Virányi, Z., Lamm, C. & Huber, L. Training pet dogs for eye-tracking and awake fMRI. Behav. Res. Methods https://doi.org/10.3758/s13428-019-01281-7 (2019).

Ainsworth, M. S. Attachments beyond infancy. Am. Psychol. 44, 709–716 (1989).

Bowlby, J. Attachment and Loss: Attachment (Basic Books, New York, 1982).

Bushnell, I. W. R., Sai, F. & Mullin, J. T. Neonatal recognition of the mother’s face. Br. J. Dev. Psychol. 7, 3–15 (1989).

De Schonen, S. & Mathivet, E. Hemispheric asymmetry in a face discrimination task in infants. Child Dev. 61, 1192–1205 (1990).

Moore, G. A., Cohn, J. F. & Campbell, S. B. Infant affective responses to mother’s still face at 6 months differentially predict externalizing and internalizing behaviors at 18 months. Dev. Psychol. 37, 706–714 (2001).

Minagawa-Kawai, Y. et al. Prefrontal activation associated with social attachment: Facial-emotion recognition in mothers and infants. Cereb. Cortex 19, 284–292 (2009).

Power, J. D., Barnes, K. A., Snyder, A. Z., Schlaggar, B. L. & Petersen, S. E. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage 59, 2142–2154 (2012).

Power, J. D. et al. Methods to detect, characterize, and remove motion artifact in resting state fMRI. Neuroimage 84, 320–341 (2014).

Acknowledgements

We thank Karin Bayer for administrative support and help during the behavioural preference test, Wolfgang Berger and Peter Füreder for technical support, Morris Krainz for help with the fMRI data collection, Helena Manzenreiter for help with video coding, Roger Mundry for his advice regarding the statistical analyses of the data, and Jenny L. Essler for proof-reading. We also thank Gabriel Malin, Liel Mualem and Nareed Hashem for their help with the automated data analysis. Additionally, we sincerely thank the dog caregivers and their dogs for participation in our study.

Funding

Funding for this study was provided by the Austrian Science Fund (FWF; W1262-B29), by the Vienna Science and Technology Fund (WWTF), the City of Vienna and ithuba Capital AG through project CS18-012, and the Messerli Foundation (Sörenberg, Switzerland). Anna Zamansky has been supported by the Israel Ministry of Science (MOST, Russia-Israel Collaboration).

Author information

Contributions

S.K., M.B., C.L. and L.H. designed the research; S.K. and M.B. collected the data; S.K., M.B., C.J.V., A.Z., D.v.d.L., and I.C.W. analysed the data; S.K., L.H., M.B., and C.L. drafted the manuscript; all authors discussed the results and contributed to the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Karl, S., Boch, M., Zamansky, A. et al. Exploring the dog–human relationship by combining fMRI, eye-tracking and behavioural measures. Sci Rep 10, 22273 (2020). https://doi.org/10.1038/s41598-020-79247-5
