Abstract

Quantitative structure–property relationships are crucial for the understanding and prediction of the physical properties of complex materials. For fluid flow in porous materials, characterizing the geometry of the pore microstructure facilitates prediction of permeability, a key property that has been extensively studied in material science, geophysics and chemical engineering. In this work, we study the predictability of different structural descriptors via both linear regressions and neural networks. A large data set of 30,000 virtual, porous microstructures of different types, including both granular and continuous solid phases, is created for this end. We compute permeabilities of these structures using the lattice Boltzmann method, and characterize the pore space geometry using one-point correlation functions (porosity, specific surface), two-point surface-surface, surface-void, and void-void correlation functions, as well as the geodesic tortuosity as an implicit descriptor. Then, we study the prediction of the permeability using different combinations of these descriptors. We obtain significant improvements of performance when compared to a Kozeny-Carman regression with only lowest-order descriptors (porosity and specific surface). We find that combining all three two-point correlation functions and tortuosity provides the best prediction of permeability, with the void-void correlation function being the most informative individual descriptor. Moreover, the combination of porosity, specific surface, and geodesic tortuosity provides very good predictive performance. This shows that higher-order correlation functions are extremely useful for forming a general model for predicting physical properties of complex materials. Additionally, our results suggest that artificial neural networks are superior to the more conventional regression methods for establishing quantitative structure–property relationships. We make the data and code used publicly available to facilitate further development of permeability prediction methods.

Similar content being viewed by others

Introduction

The study of how the microstructural morphology of random, heterogeneous, porous materials affects their effective properties, i.e., determining quantitative structure–property relationships, is key for the understanding and prediction of the physical properties of complex materials1. Specifically, understanding how fluid transport properties are related to the microstructure of a porous medium is crucial in a wide range of areas e.g. geological events2, polymeric composites for packaging materials3, catalysis, filtration and separation4, energy, fuels, and electrochemistry5, fiber and textile materials for health care and hygiene6, and porous, biodegradable polymer films for controlled release of medical compounds7. Numerous efforts in determining the physical properties of complex materials have been made since the early work of Maxwell1,8,9,10,11, and such investigations have been enhanced due to the availability of high-resolution 3D images of various types of materials microstructures using X-ray nanotomography12,13 or focused ion beam scanning electron microscopy14, and nuclear magnetic resonance as well15,16.

The porosity \(\phi \) (volume fraction of the pore phase) and specific surface s (pore-solid interface area per unit volume) are perhaps the most basic geometrical characteristics. These two characteristics are the most frequently used in empirical expressions for the permeability. The Kozeny-Carman equation17,18 is the most notable example, usually written as

where k is the permeability and c is the Kozeny-Carman constant. However, the remarkably simple form comes with great limitations. The Kozeny-Carman constant was found not to be a universal quantity. It does not only vary for different systems, but can also depend on the porosity19. Additionally, it does not distinguish portions of pore space that carries significant flow from portions that do not1.

To tackle this difficulty, countless modified versions of the original Kozeny-Carman equation have been proposed. However, these models are usually ad hoc and only applicable to a specific class of structures20. More importantly, although in many cases tortuosity is incorporated in the Kozeny-Carman constant21,22,23,24, it usually only depends on the porosity alone in simplified models, thus the final expression of the permeability is essentially nothing more than a function of porosity and specific surface, i.e., \(f(\phi )/s^2\). However, it is well-known that the microstructure is highly degenerate given only porosity and specific surface25,26, which leads to a wide range of permeabilities as we show later. Thus, any function of the form \(f(\phi )/s^2\) cannot be a general predictor and suffers from the intrinsic variances in the set of infinite degenerate microstructures.

Indeed, accurate prediction of the effective physical properties of the porous media requires a complete quantitative characterization of the microstructure in d-dimensional Euclidean space \(\mathbb {R}^d\) via a variety of n-point correlation functions1. However, while such complete structural information about the medium is generally not available, reduced information in the form of lower-order correlation functions is often very beneficial. Two-point void-void and three-point void-void-void correlation functions have been used to produce both bounds and estimates for the effective electrical conductivity, diffusion coefficient and permeability27,28,29,30,31,32,33,34,35,36. In addition to the void-void correlation function, two-point surface-surface and surface-void correlation functions (where the surface is the interfacial surface between two phases) can also be defined and provide improved reconstructions of two-phase media from imaging data37,38, as well as sharper bounds on permeability compared to only using the void-void correlation function1.

On the other hand, it has been shown that permeability can be simply connected to the electrical formation factor of the porous material39,40. This has been proved rigorously by Avellaneda and Torquato41. However, from a prediction point of view, the formation factor itself needs to be measured experimentally or solved numerically, thus is not that helpful for establishing an explicit link to the microstructure. Although the formation factor is related to the hydraulic tortuosity42, the later also requires heavy computations.

As a complement to rigorous approaches to estimate effective properties from the microstructure, data-driven methodologies to establish structure–property relationships are increasingly being used43,44,45,46,47,48,49. The rapid increase in computational resources facilitates the computation of effective properties for very large data sets (hundreds or thousands) of different microstructures. Moreover, as noted above, affordable high-resolution 3D digitized images of actual microstructures provide valuable data sets. As a consequence, it becomes manageable to generate large numbers of realistic virtual microstructures, and using those to perform exploratory computational screening of structure–property relationships. For example, van der Linden et al.43 use a data set of 536 virtual granular materials, compute 27 geometrical descriptors and use log-linear regression and other statistical learning methods as well as different variable selection schemes to understand the usefulness of the different descriptors for predicting permeability in these systems. Stenzel et al.44 study effective conductivity prediction in 43 virtual realizations of a stochastic spatial network model structure, using porosity and different tortuosity and constrictivity measures. This study was extended to 8,119 microstructures50, which is likely the largest study published before, and the same data set was used again later to predict effective conductivity and permeability45. Barman et al.46 studied effective diffusivity prediction in 36 virtual porous polymer films using tortuosity and constrictivity. In a different direction, there are several attempts to use 2D and 3D convolutional neural networks (CNNs) to extract information directly from the binary image data describing the structure47,48,49,51,52,53 in order to predict effective properties. However, these models are usually difficult to interpret and hard to rescale.

In this work, we are primarily interested in the predictive power of the information content contained in different microstructural descriptors. Specifically, we investigate the two-point surface-surface, surface-void, and void-void correlation functions, and also porosity, specific surface, and geodesic tortuosity using different regression methods. Unlike the hydraulic tortuosity mentioned above, the geodesic tortuosity is a purely geometric quantity that can be computed efficiently, and has been shown to be superior for diffusivity prediction46. We compare different regression methods, including conventional linear regression with linear and quadratic terms, as well as deep artificial neural networks (deep learning). While conventional linear regression has an advantage in so far as the transparency of the prediction mechanism, deep learning has the potential to extract nearly the full information content of the descriptors, providing insight into the utility of the different descriptors for establishing the structure–property relationship. We find that the information content contained in these two-point correlation functions and geodesic tortuosity are indeed helpful to overcome the difficulty of applying a unique Kozeny-Carman-type equation to a variety of distinct microstructures, by yielding much better prediction performance. Moreover, our results suggest that artificial neural networks are superior to the more conventional regression methods for establishing quantitative structure–property relationships.

Consistent with the purpose of the paper, we have generated a large data set of virtual, porous, isotropic, and stationary microstructures of three different types, based on (i) thresholded Gaussian random fields, (ii) thresholded spinodal decomposition simulations of phase separation, and (iii) non-overlapping ellipsoid systems. Varying porosity and length scale and other parameters, we generate 10,000 structures of each of the three types, yielding a data set of 30,000 virtual microstructures in total. This is likely to be the largest data set of virtual microstructures ever created for studying permeability prediction, and covers both granular (ellipsoids) and continuous solid phases to provide a broad variability in the pore space geometry. Fluid flow is simulated using the lattice Boltzmann method. The large number of simulated microstructures makes it feasible to use not only scalar descriptors but also high-dimensional descriptors such as the two-point correlation functions, while still avoiding the well-known ’curse of dimensionality’ in regression caused by having too many dimensions but too little data. To facilitate further investigation and development of permeability prediction methods, we make the microstructural descriptors, the computed permeabilities, the trained models, and the code used herein publicly available54.

The paper is organized as follows. First, we introduce necessary definitions for the geometric descriptors used throughout the paper. Second, we describe how the virtual microstructures are generated, and the flow simulations and computations of permeability are described. Third, computation of the different microstructural descriptors is covered. Fourth, the prediction models for permeability are investigated. Finally, we make concluding remarks and discussions.

Background and definitions

Geodesic tortuosity

We compute geodesic tortuosity in the flow direction according to Barman et al.46 in the following manner. As a first step, a pointwise geodesic tortuosity is computed as \(\tau \left( \mathbf {x}\right) = d\left( \mathbf {x}\right) / d\). Here, d is the length of the microstructure in the flow direction, and \(d\left( \mathbf {x}\right) \) is the length of the shortest path from any inlet pore to any outlet pore through \(\mathbf {x}\). The shortest path is calculated as the sum of two geodesic distance transforms computed in the pore space of the binary voxel array: one using the set of edge voxels constituting the inlet pores as seeding points, and the other using the set of edge voxels constituting the outlet pores as seeding points. Let \(\mathbb {P}\) be the set of voxels for which both geodesic distances are finite, i.e., the set of pore voxels connected to both inlet and outlet. Then, the geodesic tortuosity \(\tau \) can be computed as

In Barman et al.46, it was found that accounting for both inlet and outlet in this manner is superior (in terms of diffusivity prediction) to just accounting for the inlet as is commonly done44,55. Tortuosity calculations were implemented in Matlab (Mathworks, Natick, MA, US).

Correlation functions

Let \(\mathcal {I}\left( \mathbf {x}\right) \) be the indicator function for the void phase (pore space) \(\mathcal {V}_1\), i.e.,

The two-point void-void correlation function is then generally defined by

For statistically homogeneous materials, \(S_2\) is only dependent on the vector difference \(\mathbf {r} = \mathbf {x}_2 - \mathbf {x}_1\). Further, if the material is also statistically isotropic, \(S_2\) is only dependent on the radial distance \(r = |\mathbf {r}|\). Introducing a notation that is consistent with the other correlation functions defined below, the two-point void-void correlation function is now defined as

where the average is taken over all \(\mathbf {x}\) and over all \(\mathbf {r}\) with magnitude r. We proceed to the correlation functions involving the interfacial surface. Let \(\mathcal {M}\left( \mathbf {x}\right) \) be the interface indicator function defined by1

Still assuming ergodicity and statistical isotropy, the surface-void correlation function can be written as

and the surface-surface correlation function can be written as

Importantly, the information content of one-point correlation functions (porosity \(\phi \) and specific surface s) is automatically encoded into these two-point correlation functions. When r goes to infinity, \(F_\text {vv}\left( r\right) \), \(F_\text {sv}\left( r\right) \) and \(F_\text {ss}\left( r\right) \) converge to \(\phi ^2\), \(s\phi \) and \(s^2\) respectively. Interestingly, the slope of \(F_\text {sv}\left( r\right) \) at the origin is proportional to the integrated mean curvature of the system56, which has recently been shown to be a useful predictor of both permeabilities57 and diffusion coefficients58.

Accurate and robust computation of \(F_\text {vv}\), \(F_\text {sv}\), and \(F_\text {ss}\) from discretized structures is a non-trivial task (in particular for the later two). The calculations recently became accessible due to the algorithms devised by Ma and Torquato56. There, the calculations involving the interfacial surface are performed using a scalar field which when thresholded yields the corresponding two-phase medium. The details of the algorithms and the software can be found in Ref56.

Microstructure data preparation

Microstructure generation

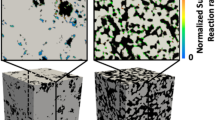

To achieve a large, representative data set, three different types of microstructures that are commonly studied in the materials literature are generated, including (i) thresholded Gaussian random fields, (ii) thresholded spinodal decomposition simulations of phase separation, and (iii) non-overlapping (hard) ellipsoid systems. We simulate 10,000 realizations for each type, with porosities \(\phi \) selected uniformly in \(0.3 \le \phi \le 0.7\) and varying characteristic length scales. In the end, all structures are converted to \(N^3\) binary voxel arrays with \(N = 192\) voxels. In Fig. 1, one example of each type of structure is shown. We verified that the choice of the system volume size is both computationally manageable and representative. The correlation functions are evaluated for integer radii value bins r from 1 to 96 voxels. In Fig. 2, some examples of correlation functions are shown. Note that these correlation functions are considerably distinct from each other, as seen by their different magnitudes and functional shapes. On the other hand, they have already converged to the large-r limits within the sample size. The details of how these samples are generated are presented in the following subsections.

Examples of structures, showing (a) Gaussian random field structure with \(\phi = 0.7\), (b) spinodal decomposition structure with \(\phi = 0.5\), and (c) non-overlapping ellipsoid structure with \(\phi = 0.3\). The figure is produced using ParaView 5.4.1 (http://www.paraview.org, freely available without permission).

Some examples of (a) \({F_{{\text{ss}}}}\), (b) \({F_{{\text{sv}}}}\) , and (c) \({F_{{\text{vv}}}}\) correlation functions. The examples are taken from the three different types of generated microstructures, i.e., thresholded Gaussian random fields (blue), thresholded spinodal decomposition simulations of phase separation (red), and non-overlapping ellipsoid systems (green).

Gaussian random fields

Gaussian random fields are generated according to Lang and Potthoff59. Assuming that we wish to simulate a Gaussian random field \(\mathcal {G}\left( \mathbf {x}\right) \), \(\mathbf {x} \in \mathbb {R}^3\), with mean zero and covariance function \(\Psi \left( \mathbf {x}, \mathbf {y}\right) \), it utilizes the fact that the covariance function can be written

where \(\gamma \left( \mathbf {p}\right) \) is the spectral density of the Gaussian random field and \(\left\langle \cdot , \cdot \right\rangle \) is the inner product. We wish to generate structures with length scale parameter L and resolution \(N^3\) voxels. Letting \(\delta = L / N\) and letting FFT and FFT\(^{-1}\) denote the forward and inverse 3-dimensional Fast Fourier Transforms, this can be performed in the following fashion: Generate an array W where all elements are independent and normal distributed with mean zero and standard deviation \(\delta ^{-3}\) (white noise). Compute \(\mathrm {FFT}(W)\). Define the Fourier space grid by \(\mathbf {p} = \left( p_1, p_2, p_3\right) \), where \(p_1 \in \{- N/(2 L), (- N/2 + 1)/L, ..., (N/2 - 2)/L, (N/2 - 1)/L\}\) and likewise for \(p_2\) and \(p_3\). Compute \(\gamma \left( \mathbf {p}\right) \) on the grid. Compute \(U = \mathrm {FFT}(W) \left( \mathbf {p}\right) \times \gamma \left( \mathbf {p}\right) ^{1/2} / L^3\). Then, obtain the Gaussian random field as FFT\(^{-1}(U)\). We use several different spectral densities. For type (I),

for \(n = 1.95\) and \(l = 1.85\) (power-law)59,60. For type (II),

for \(\alpha = 1.75\) (exponential). For type (III),

for \(\alpha = 1.25\) (Gaussian). For type (IV),

where \(\rho = 1.25\) (circular top-hat). The parameters are chosen such that the corresponding Gaussian random fields have approximately the same characteristic spatial scale. For each spectral density, 2,500 structures are generated using uniformly distributed values of L, \(4 \le L \le 16\). Thresholding then converts the scalar fields to corresponding two-phase media. To obtain a microstructure with a prescribed porosity \(\phi \), the threshold is chosen to be an appropriate percentile of the values of \(\mathcal {G}\). The method is implemented in Matlab (Mathworks, Natick, MA, US). The execution time is approximately 1 s (single core) for each structure.

Spinodal decomposition

The lattice Boltzmann method61,62, a numerical framework for solving partial differential equations based on kinetic theory, is used to simulate phase separation kinetics (spinodal decomposition) using the Navier–Stokes and Cahn–Hilliard equations. Very briefly, the time evolution of a spatially dependent concentration \(C\left( \mathbf {x}, t\right) \), \(0 \le C \le 1\), is described by

As an initial condition, the values of C are uniformly distributed in \(0 \le C \le 1\), independently in all grid points. The phase separation is the coarsening of regions with \(C \approx 0\) and \(C \approx 1\) (ideally equal to 0 and 1). Here, \(\mathbf {u}\) is a fluid velocity governed by the Navier–Stokes equations, M is a mobility, i.e., a diffusion coefficient, and \(\mu \) is the chemical potential. The simulation is performed using a dimensionless time step unity and both the density ratio and viscosity ratio between the phases are unity. The interface width, i.e., the characteristic length scale of the transition between the phases is 5 voxels. The simulations are performed in the resolution \(96^3\) voxels with periodic boundary conditions, and are run until an appropriate degree of coarsening is obtained. The number of iterations K is chosen randomly between 5 and 20,000 such that \(K^{1/3}\) is approximately uniformly distributed; this is because according to the Lifschitz-Slyozov law, the typical length scale in the structure will be proportional to the cubic root of the simulation time. After terminating the simulation, the solutions are upscaled to \(192^3\) voxels and thresholded to obtain the desired porosity. The spinodal decomposition simulations are run using in-house software61,62 with efficient scaling to many cores using the MPI interface. The average execution time is approximately 13 min (32 cores) for each structure, and up to 60 min for the longest computation.

Non-overlapping ellipsoids

Random configurations of non-overlapping, hard ellipsoids are generated using a hard particle Markov Chain Monte Carlo (MCMC) algorithm. The Perram-Wertheim criterion63 for two ellipsoids of arbitrary orientation is used for overlap detection. First, particles are assigned uniformly distributed locations and orientations (the latter encoded using a quaternion representation). Second, the configurations are relaxed by sequentially performing random translations of all particles and then random rotations of all particles until no two particles overlap. Proposed translations and rotations are only accepted if they lead to a lower or equal degree of overlap for the considered particle. These “local” stochastic optimization steps eventually lead to a “global” optimization resulting in no overlap. Third, the configurations are equilibrated by performing a large number of random translations and rotations, ensuring a distribution in location and orientation that is as uniform as possible. Now, if the desired porosity \(\phi \) is larger than 0.50, non-overlapping configurations can be generated easily at constant porosity as described above. Otherwise, as a final step, the configuration is further compressed in small steps, \(\Delta \phi = 10^{-5}\), until the target porosity \(\phi _\text {target}\) is reached (in some cases, the configuration becomes jammed before reaching \(\phi _\text {target}\)). The proposed translations are normal distributed with standard deviation \(\sigma _\text {t}\) in each direction. The proposed rotations are normal distributed with standard deviation \(\sigma _\text {r}\) in a random direction. In every step, \(\sigma _\text {t}\) and \(\sigma _\text {r}\) are chosen in an adaptive fashion to aim for an acceptance probability of 0.25. The number of ellipsoids M is distributed in \(8 \le M \le 512\) such that \(M^{1/3}\) is approximately uniformly distributed, yielding an approximately uniform distribution of length scales. Further, the ellipsoids have semi-axes \((1,1,\eta )\) where \(\eta \) is uniform in \(0.25 \le \eta \le 1\) (oblate) with probability 0.5 and otherwise uniform in \(1 \le \eta \le 4\) (prolate). The random microstructures are generated using in-house developed software implemented in Julia (http://www.julialang.org)64 and available in a Github repository (https://github.com/roding/whitefish_generation, version 0.2). The average execution time is approximately 1 min (single core) for each structure, and up to 30 min for the longest computation. The obtained configurations are further smoothed with a Gaussian filter with \(\sigma = 3\) voxels and thresholded again to regain the original porosity; the reason for this is that computation of some of the correlation functions requires the binary structures to be described as a thresholded version of smooth scalar fields.

Flow simulations

The lattice Boltzmann method61,62, a numerical framework for solving partial differential equations based on kinetic theory, is used to simulate fluid flow through the structures. The Navier–Stokes equations for pressure-driven flow are solved for the steady state using no-slip, bounce-back boundary conditions on the solid/liquid interface and periodic boundary conditions orthogonal to the flow direction. We use the two relaxation time collision model with the free parameter \(\lambda _{eo}=\frac{3}{16}\), which guarantees that the computed permeability is independent of the relaxation time (and thus the viscosity)65. The relaxation time \(\tau = -\frac{1}{\lambda _e}\) is kept at 1.25. The flow is driven by constant pressure difference boundary conditions across the structure in the primary flow direction66, and a linear gradient is used as initial condition. The computational grid coincides with the voxels of the binary structure, i.e., it has \(192^3\) grid points. After convergence to steady state flow, the permeability k is obtained from Darcy’s law,

Here, \(\bar{u}\) is the average velocity, \(\Delta p\) is the applied pressure difference, \(\mu \) is the dynamic viscosity, and d is the length of the microstructure in the flow direction. The permeability is independent of the fluid and the pressure difference and a property solely of the microstructure provided that the Reynolds number is sufficiently small (\(\mathrm {Re} < 0.01\)), which also ensures that the velocity is proportional to the pressure difference. The computed permeabilities have units voxels\(^2\), where the voxels have unit length.

Convergence of the computation is assessed in the following fashion. The energy of the fluid flow field, i.e. the integral of the squared velocity magnitude in the pore space, is computed for each iteration. In each iteration, the coefficient of variation (the standard deviation divided by the mean) of this energy is computed for the latest 500 iterations. The computation is terminated once this coefficient of variation reaches below \(10^{-4}\). The convergence criterion is the same throughout, although the number of iterations required for convergence differs between different types of microstructures. The mean number of iterations needed are approximately 2,840 for Gaussian random fields, 2,330 for spinodal decompositions, and 2,270 for non-overlapping ellipsoids. However, the number of iterations is also highly dependent on e.g. porosity and varies approximately in the range 1,000 to 10,000 for all types of microstructures. The average execution time is 97 s (utilizing 32 cores, with efficient scaling using the MPI interface).

Figure 3 illustrates the result of a flow simulation in one of the Gaussian random field structures.

An example of a simulated steady state flow through a Gaussian random field microstructure with porosity \(\phi = 0.7\). Regions with slow and fast flow are indicated by blue and red flow lines, respectively. The figure is produced using ParaView 5.4.1 (http://www.paraview.org, freely available without permission).

We choose 4,500 miscrostructures, 1,500 for each type, and plot their scaled permeabilities \(ks^2\) versus porosities \(\phi \) in Fig. 4. It is noteworthy that although a clear overall trend can be seen, the scaled permeability is never a function of \(\phi \) alone. In fact, we observe that for the same porosity, the largest scaled permeability is approximately twice as large as the smallest one. This degeneracy of microstructures clearly show why the Kozeny-Carman equation and some of its modifications typically fail for general structures. Consequentially, more detailed information is needed to pinpoint the true permeability on this “band”.

Another interesting observation is that the scaled permeabilities of spinodal decomposition patterns are almost always lower than those of the other two types. It has been shown that spinodal decomposition gives rise to hyperuniform structures in the scaling region67. This observation is consistent with the fact that hyperuniform structures cannot tolerate large “holes” and the pore space is more evenly distributed compared to nonhyperuniform structures, thus their permeabilities are generally lower40.

Microstructural descriptors

We study the performance of the different microstructural descriptors introduced above and the combinations of them for predicting the permeability k (dimension length\(^2\)). The descriptors used are porosity \(\phi \) (dimensionless), specific surface s (dimension 1/length), tortuosity \(\tau \) (dimensionless), the correlation functions \(F_\text {ss}\) (dimension 1/length\(^2\)), \(F_\text {sv}\) (dimension 1/length), and \(F_\text {vv}\) (dimensionless). Additionally, we investigate a particular combination of the correlation functions: inspired by a rigorous upper bound for permeability in isotropic media56, i.e.,

we define a function

which is also used for prediction (F is dimensionless and converges to zero when r goes to infinity). Each correlation function is represented by a 96-dimensional vector. In Table 1, the models, denoted 1 through 7, and the sizes of the corresponding input features are listed. Additionally, we consider a rescaling of the problem, predicting the rescaled, dimensionless permeability \(k s^2\) instead of k directly. For model 1, we remove s from the descriptors since it is already absorbed in the permeability; for models 2, 3, 4, 6, and 7, the correlation functions are rescaled to dimensionless versions where applicable. In Table 2, the modified models, denoted 1\('\) through 7\('\), and the dimensions of the corresponding input vectors (that changes only for model 1\('\)) are listed.

In practice, we use the logarithm of permeabilities, i.e., \(\log _{10} k\) and \(\log _{10} \left( k s^2\right) \), instead of their original values. The reason for using logarithms of the permeabilities is that they span several orders of magnitude. By taking logarithms, the predictions are simplified, and guaranteed to be positive. We also use the logarithms of porosity, specific surface, and tortuosity since we know that models 1 and 1\('\) are naturally multiplicative in these Kozeny-Carman-like equations.

Predictive models

We assess the predictive performance of the different descriptors/inputs using several regression methods, namely, linear regression with linear terms only or combined with quadratic terms, and deep artificial neural networks. The inputs are as described above, with no normalization (such as subtracting feature-wise means; our investigation suggested no improvement from normalization in this setting). For each microstructure class, the data are split randomly into training data (70 %; 7,000 per class), validation data (15 %; 1,500 per class), and test data (15 %; 1,500 per class). In total, the training, validation, and test data sets hence consist of 21,000, 4,500, and 4,500 samples, respectively. The split is kept fixed across all inputs and all regression methods. Training data is used for the actual estimation of a functional relationship mapping input to output. Validation data is used for hyperparameter selection, i.e., finding optimal values for e.g. learning rates for ANNs (in the case of linear regressions, the validation data is not used because we do not have any hyperparameters to optimize). Test data is used for final assessment of the predictive performance. To quantify error/loss in prediction, we use several different measures. Let k be the ’true’ permeability, i.e., the value obtained from the lattice Boltzmann simulations, and let \(\hat{k}\) be the predicted value. We use mean squared error (MSE) in the logarithmic scale,

root mean squared error (RMSE) which is just \(\mathrm {RMSE} = \mathrm {MSE}^{1/2}\), and mean absolute percentage error (MAPE) in the linear scale, i.e.,

Using MSE is the most practical and most common choice for model fitting. However, for final assessment of performance, the linear scale and MAPE is a more straightforward and intuitive choice.

Linear regression with linear terms

First, we consider using linear regression with only linear terms (i.e., only the input descriptors to the power of unity are used). For models 1 and 1\('\), this becomes multiplicative regression in a Kozeny-Carman-like form, i.e.,

and

It is well established that \(a > 0 \), \(b < 0 \), and \(c < 0 \) in this setting (and due to the dimensions, \(b = - 2\) would be preferable).

On the other hand, the rationale behind the linear regression model of correlation functions is inspired by the rigorous bounds involving correlation functions, such as Eq. (16). Since the integral in the bounds can be seen as the inner product between the correlation functions and another predetermined function, it is natural to assume that a functional regression on the correlation functions may yield a reasonable estimation of permeabilities. For correlation functions evaluated on discrete grids, the model essentially becomes a linear regression model. To give a couple of examples of the regressions on correlation functions, model 2 becomes

model 6 becomes

and model 3\('\) becomes

The rest of the models are formulated in an equivalent fashion. For the correlation function-based models, \(\alpha \), \(\beta \), and \(\gamma \) are just vectors of coefficients but can also be thought of as discretized forms of continuous coefficient functions \(\alpha (r)\), \(\beta (r)\), and \(\gamma (r)\). We use least squares fitting, finding the coefficients that minimize the training set MSE. We also include the reference Kozeny-Carman model in this category as a benchmark. Fitting is performed in Matlab (Mathworks, Natick, MA, US).

Specifically, the fitted Kozeny-Carman model writes as

For models 1 and 1\('\), the estimated relationships become

and

Interestingly, the estimated coefficients for models 1 and 1\('\) are quite similar, and they are also comparable to the Kozeny-Carman model. Specifically, even without being forced to have the dimensionally correct exponent − 2 for s, as in the case of model 1\('\), the estimated exponent from the regression (− 1.88) in model 1 is very close.

To get an idea of the dependence of the coefficient functions on r, we plot the estimated \(\alpha (r)/r\) in Fig. 5 as an example. We scale \(\alpha (r)\) by r in order to make it have the appropriate weight consistent with the one that multiplies the correlation functions in the integrand of Eq. (16). It is clear that instead of weighting on different parts of \(F_\text {vv}(r)\) equally, the regression emphasizes on the small-to-intermediate-r behavior of the correlation function as expected.

We also made an attempt to further smooth the coefficient functions according to a proposed functional regression scheme published earlier68. Briefly, the idea is to introduce a penalty term in the least squares objective function that is proportional to the integral of the squared second derivative of the coefficient function, such that the functional form becomes smoother. To exemplify, pick a model with a coefficient function \(\alpha (r)\), and we minimize

for a range of penalty parameters \(\lambda \), and pick \(\lambda \) such that the validation MSE is minimized. Interestingly, the smoothing procedure provides only a negligible improvement in validation and test errors, and a negligible increase in smoothness of the coefficient functions. Thus, we stick to the models without penalties.

The errors for training, validation, and test sets for the aforementioned models are shown in Table 3. Although we perform no hyperparameter optimization for these regression models (such as variable selection), we include the validation set errors for consistency. There are several noteworthy observations about our results. First, the Kozeny-Carman-like model (1 and 1\('\)) with geodesic tortuosity almost reduce the relative error by half compared to the original Kozeny-Carman model, while the combination of all three correlation functions (6 and 6\('\)) also give comparable performance. Interestingly, when we further combine the correlation functions with geodesic tortuosity (7 and 7\('\)), the error shrinks to approximately only one-third of the error generated by the Kozeny-Carman model. Among single correlation functions, the best performance is given by \(F_\text {vv}\), while the worst is given by \(F_\text {ss}\). This result is expected, since \(F_\text {vv}\) alone can yield a bound on the permeability, while \(F_\text {ss}\) alone does not even contain the most important information, i.e., the porosity. The reason we keep model 2 and 2\('\) is indeed just for self-consistency. Interestingly, the compound correlation function F performs relatively poorly. This is probably due to the fact that it washes out the information content contained in individual correlation functions. However, its error can be interpreted as a lower bound on how good the bound in Eq. (16) would work on our data set. To better visualize our findings, the predicted values vs the true (simulated) values and histograms of relative errors for a few selected models are shown in Fig. 6. It is obvious that by adding geodesic tortuosity and correlation functions the prediction error can be greatly reduced. It can also be seen that although the Kozeny-Carman-like model (Fig. 6b) and the correlation function based one (Fig. 6c) has similar MAPE, the error distribution of the correlation function based one is more symmetric.

Linear regression with quadratic terms

Second, we generalize the previous models that utilized linear terms only to incorporate both linear and quadratic terms. For the correlation function models, we use both the correlation functions themselves and their squares. For example, we use both \(F_\text {ss}\) and \(F^2_\text {ss}\) as input data in model 2. It is worth to point out that we use only pure quadratic terms, such as \(F^2_\text {ss}(r_i)\), but not mixed quadratic terms, such as \(F_\text {ss}(r_i) F_\text {ss}(r_j)\) for \(i \ne j\). We include models 1 and 1\('\) in this investigation as well, mainly for completeness, and add terms of the type \(\left( \log _{10} \phi \right) ^2\). Fitting is performed in Matlab (Mathworks, Natick, MA, US). The errors for training, validation, and test sets are again shown in Table 4. In Fig. 7, the predicted values vs the true (simulated) values and histograms of relative errors for a few selected models are shown.

Importantly, we note that adding the quadratic terms leads to an improvement for every model. This suggests that the relation between the microstructural descriptors and the permeability can be quite complex such that a simple linear model may not be able to fully capture it. However, the relative rank of performances roughly remain the same, showing the validity of our previous arguments. The estimated coefficient functions are quite noisy and their physical meaning is not obvious. We also make an attempt to use a full quadratic model, incorporating also mixed terms such as \(F_\text {ss}(r_i) F_\text {ss}(r_j)\), or even mixed between correlation functions, such as \(F_\text {sv}(r_i) F_\text {vv}(r_j)\). The numbers of variables in the models then become very large, leading to ill-conditioned estimation problems. We investigated whether the Lasso variable selection technique69, which forces a variable number of coefficients in a linear model to become zero by penalizing the sum of absolute values of the coefficients, could act as an efficient means of reducing the model dimensionality. However, it turns out that no amount of Lasso regularization can decrease the validation MSE in this case. There are two likely reasons for this: Lasso is primarily intended for high-dimensional variable spaces where a large fraction of the variables contain little information and mostly noise, and can be disregarded easily. This is likely not the case for the correlation functions. Also, because the values are taken from continuous functions, they are strongly correlated, which is known to compromise the underlying rationale of Lasso.

Neural networks

The complexity of the linear regression models could be further increased, for example by incorporating pure cubic terms. Although we expect to see further improvements, the linear regression model can quickly become ill-conditioned and intractable on this track. For this reason, we proceed to consider deep neural networks, which can potentially fully capture the complex structure–property relationships. Thus we can exploit the complete information content contained in the descriptors.

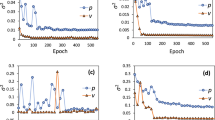

We use four fully-connected hidden layers with 128 nodes each and rectified linear unit (ReLU) activations. Given that the input dimension is n, and the output dimension is unity, the number of weights in the network is \((n+1) \times 128 + 3 \times 129 \times 128 + 128 + 1\), i.e., there are between 50,049 and 86,785 weights to be optimized. The network is shown in Fig. 8. Random initial weights are selected using the Glorot/Xavier uniform initializer. The networks are trained using the Adam optimizer70 with learning rate \(10^{-4}\), batch size 128, and mean squared error loss. All models are trained 100 times for 10,000 epochs using different random weight initializations, and the models with the globally minimal validation loss (MSE) are selected (hence utilizing early stopping, but performed over multiple realizations/initializations). The reason for this procedure is to minimize the impact of the random weight initializations. The models are implemented in TensorFlow 2.1.0 (http://www.tensorflow.org)71. An example of training and validation loss curves is shown in Fig. 9. Again, the errors for training, validation, and test sets are shown in Table 5. In Fig. 10, the predicted values vs the true (simulated) values are shown. Indeed, the neural networks based regressions perform noticeably better than the linear counterpart. Again, we see that the combination of correlation functions and geodesic tortuosity gives the best performance, achieving an impressive MAPE that is less than \(4\%\). We also notice that all correlation function based models (except for \(F_\text {ss}\) and F for aforementioned reasons) perform very well, and all better than the Kozeny-Carman-like model.

The topology of the neural network. The input variables are denoted \(x_1\) to \(x_n\), where the input dimension n is either 2, 3, 96, 288, or 289. There are four fully-connected hidden layers with 128 nodes each. The k:th node of the l:th layer is here denoted by \(h_k^l\). Rectified linear unit (ReLU) activations are used for the hidden layers. The output y is just the logarithm of the permeability. The figure is produced by Victor Wåhlstrand Skärström in TikZ/LaTeX (MikTeX distribution version 20.6.29, http://www.miktex.org, freely available without permission).

To gain some understanding of the neural network and how the prediction is performed, we perform for the case of model 4 an analysis of the network’s sensitivity with respect to perturbations in the input. Specifically, for the test set, we add random Gaussian noise to \(F_\text {vv}(r)\) for one r value at a time. The perturbation in the output is quantified by the standard deviation of the difference between the original prediction and the perturbed prediction. In Fig. 11, we show the results for \(\sigma = 0.02\) (it turns out that for a broad range of perturbations, \(0.0001 \le \sigma \le 0.1\), the result changes only by a constant scaling). We see a rough resemblance to Fig. 5 in the sense that large magnitudes are mostly found for small r, indicating that to some extent the models are using the same information in the correlation function.

Conclusions and discussion

We have studied data-driven structure–property relationships between fluid permeabilities and a variety of microstructural descriptors in a large data set of 30,000 virtual, porous microstructures of different types. The data set includes both granular and continuous solid phases, and is the largest one ever generated for the study of transport properties to our knowledge. To characterize the pore space geometry, we computed one-point correlation functions (porosity, specific surface), two-point surface-surface, surface-void, and void-void correlation functions, and geodesic tortuosity. Different combinations of these descriptors were used as input for different statistical learning methods, including linear regression with linear and quadratic terms, as well as deep neural networks. We find that the performance improves as the regression models become more complex, suggesting the complex relationship between the structural descriptors and the physical properties. Sufficiently large neural networks are able to fully capture the information content of the descriptors and reveal their utilities. With higher-order descriptors, we obtain significant improvements of performance when compared to a Kozeny-Carman regression with only lowest-order descriptors (porosity and specific surface). We found that combining all three two-point correlation functions and tortuosity provides the best prediction of permeability. The void-void correlation function was found to be the most informative individual descriptor. Also, the combination of porosity, specific surface, and geodesic tortuosity provides comparable predictive performance, in spite of its simplicity. Indeed, this shows that the greater information content contained in higher-order correlation functions are extremely useful for predicting physical properties of complex materials. Moreover, our work demonstrates that advanced machine learning methods can be very useful in establishing structure–property relationships.

An interesting observation is that in general the rescaling of permeabilities seem to improve the performance of the simple linear model, as seen from Table 3 that all models except the Kozeny-Carman-like one outperform their unscaled counterparts. However, as we add quadratic terms and the predictive model becomes more complex, the advantage of rescaling does not hold any more. Finally, for the highly nonlinear neural networks, the relative performance is completely inverted. This may suggest that the relation between the permeability and the specific surface cannot be captured by a simple rescaling, and doing so may reduce the information content contained in the original data. These observed effects of rescaling may lead to some guidelines on developing physics-aware machine learning models for other physical properties as well.

As a final remark, we emphasize that by incorporating microstructures with different length scales in our data set we make our models very robust and can be applied to real-world data. Since the permeability has the dimension \(L^2\), we can easily obtain the permeability of a sample with a different length scale but the same microstructure. However, there is no universally applicable characteristic microstructural length to scale the permeability that enables a comparison of permeabilities for different microstructures. For example, for sphere packings the natural choice can be the radii of particles, but for continuous structures we need to resort to other quantities40. Thus, by training our models on samples with varying length scales, we circumvent this problem by only requiring a rescaling to the right order of magnitude. Finally, we make the data and code used publicly available to facilitate further development of permeability prediction methods54.

Data availability

The data, i.e. microstructural descriptors and computed permeabilities, together with the trained models and the code used are publicly available via a repository54.

References

Torquato, S. Random Heterogeneous Materials: Microstructure and Macroscopic Properties (Springer, New York, 2013).

Vasseur, J., Wadsworth, F. B. & Dingwell, D. B. Permeability of polydisperse magma foam. Geology 48(6), 536–540 (2020).

Silvestre, C., Duraccio, D. & Cimmino, S. Food packaging based on polymer nanomaterials. Prog. Polym. Sci. 36, 1766–1782 (2011).

Slater, A. & Cooper, A. Function-led design of new porous materials. Science 348, aaa8075 (2015).

Stamenkovic, V., Strmcnik, D., Lopes, P. & Markovic, N. Energy and fuels from electrochemical interfaces. Nat. Mater. 16, 57–69 (2017).

van Langenhove, L. Smart Textiles for Medicine and Healthcare: Materials, Systems and Applications (Elsevier, Amsterdam, 2007).

Marucci, M. et al. New insights on how to adjust the release profile from coated pellets by varying the molecular weight of ethyl cellulose in the coating film. Int. J. Pharm. 458, 218–223 (2013).

Milton, G. & Sawicki, A. Theory of composites. Cambridge monographs on applied and computational mathematics. Appl. Mech. Rev. 56, B27–B28 (2003).

Sahimi, M. Flow and Transport in Porous Media and Fractured Rock: From Classical Methods to Modern Approaches (Wiley, Hoboken, 2011).

Huang, S., Wu, Y., Meng, X., Liu, L. & Ji, M. Recent advances on microscopic pore characteristics of low permeability sandstone reservoirs. Adv. Geo-Energy Res. 2, 122–134 (2018).

Huang, H. et al. Effects of pore-throat structure on gas permeability in the tight sandstone reservoirs of the Upper Triassic Yanchang formation in the Western Ordos Basin. China. J. Petrol. Sci. Eng. 162, 602–616 (2018).

Blunt, M. J. et al. Pore-scale imaging and modelling. Adv. Water Resour. 51, 197–216 (2013).

Lee, S.-H., Chang, W.-S., Han, S.-M., Kim, D.-H. & Kim, J.-K. Synchrotron x-ray nanotomography and three-dimensional nanoscale imaging analysis of pore structure-function in nanoporous polymeric membranes. J. Membr. Sci. 535, 28–34 (2017).

Gunda, N. et al. Focused ion beam-scanning electron microscopy on solid-oxide fuel-cell electrode: Image analysis and computing effective transport properties. J. Power Sources 196, 3592–3603 (2011).

Ge, X., Fan, Y., Zhu, X., Chen, Y. & Li, R. Determination of nuclear magnetic resonance T2 cutoff value based on multifractal theory—An application in sandstone with complex pore structure. Geophysics 80, D11–D21 (2015).

Yao, Y. & Liu, D. Comparison of low-field NMR and mercury intrusion porosimetry in characterizing pore size distributions of coals. Fuel 95, 152–158 (2012).

Kozeny, J. . Über kapillare leitung des wassers im boden:(aufstieg, versickerung und anwendung auf die bewässerung). Sitz. Ber. Akad. Wiss, Wien, Math. Nat. 136, 271–306 (1927).

Carman, P. Fluid flow through granular beds. Trans. Inst. Chem. Eng. 15, 150–166 (1937).

Kaviany, M. Principles of heat transfer in porous media (Springer, New York, 2012).

Xu, P. & Yu, B. Developing a new form of permeability and Kozeny-Carman constant for homogeneous porous media by means of fractal geometry. Adv. Water Resour. 31, 74–81 (2008).

Mauret, E. . & Renaud, M. . Transport phenomena in multi-particle systems—I. Limits of applicability of capillary model in high voidage beds-application to fixed beds of fibers and fluidized beds of spheres. Chem. Eng. Sci. 52, 1807–1817 (1997).

Mota, M., Teixeira, J., Bowen, W. & Yelshin, A. Binary spherical particle mixed beds: Porosity and permeability relationship measurement. Trans. Filtr. Soc. 1, 101–106 (2001).

Plessis, J. D. & Masliyah, J. Flow through isotropic granular porous media. Transport Porous Med. 6, 207–221 (1991).

Ahmadi, M., Mohammadi, S. & Hayati, A. Analytical derivation of tortuosity and permeability of monosized spheres: A volume averaging approach. Phys. Rev. E 83, 026312 (2011).

Jiao, Y., Stillinger, F. & Torquato, S. A superior descriptor of random textures and its predictive capacity. Proc. Natl. Acad. Sci. 106, 17634–17639 (2009).

Gommes, C., Jiao, Y. & Torquato, S. Microstructural degeneracy associated with a two-point correlation function and its information content. Phys. Rev. E 85, 051140 (2012).

Torquato, S. Random heterogeneous media: Microstructure and improved bounds on effective properties. Appl. Mech. Rev. 44, 37–76 (1991).

Jiao, Y. & Torquato, S. Quantitative characterization of the microstructure and transport properties of biopolymer networks. Phys. Biol. 9, 036009 (2012).

Prager, S. Viscous flow through porous media. Phys. Fluids 4, 1477–1482 (1961).

Weissberg, H. & Prager, S. Viscous flow through porous media. II. approximate three-point correlation function. Phys. Fluids 5, 1390–1392 (1962).

Weissberg, H. & Prager, S. Viscous flow through porous media. III. Upper bounds on the permeability for a simple random geometry. Phys. Fluids 13, 2958–2965 (1970).

Berryman, J. & Milton, G. Normalization constraint for variational bounds on fluid permeability. J. Chem. Phys. 83, 754–760 (1985).

Berryman, J. Bounds on fluid permeability for viscous flow through porous media. J. Chem. Phys. 82, 1459–1467 (1985).

Rubinstein, J. & Torquato, S. Flow in random porous media: Mathematical formulation, variational principles, and rigorous bounds. J. Fluid Mech. 206, 25–46 (1989).

Liasneuski, H. et al. Impact of microstructure on the effective diffusivity in random packings of hard spheres. J. Appl. Phys. 116, 034904 (2014).

Hlushkou, D., Liasneuski, H., Tallarek, U. & Torquato, S. Effective diffusion coefficients in random packings of polydisperse hard spheres from two-point and three-point correlation functions. J. Appl. Phys. 118, 124901 (2015).

Zachary, C. & Torquato, S. Improved reconstructions of random media using dilation and erosion processes. Phys. Rev. E 84, 056102 (2011).

Guo, E.-Y., Chawla, N., Jing, T., Torquato, S. & Jiao, Y. Accurate modeling and reconstruction of three-dimensional percolating filamentary microstructures from two-dimensional micrographs via dilation-erosion method. Mater. Charact. 89, 33–42 (2014).

Katz, A. & Thompson, A. Quantitative prediction of permeability in porous rock. Phys. Rev. B 34, 8179 (1986).

Torquato, S. Predicting transport characteristics of hyperuniform porous media via rigorous microstructure-property relations. Adv. Water Resour. 140, 103565 (2020).

Avellaneda, M. & Torquato, S. Rigorous link between fluid permeability, electrical conductivity, and relaxation times for transport in porous media. Phys. Fluids A Fluid Dyn. 3, 2529–2540 (1991).

Ghanbarian, B., Hunt, A., Ewing, R. & Sahimi, M. Tortuosity in porous media: A critical review. Soil Sci. Soc. Am. J. 77, 1461–1477 (2013).

van der Linden, J., Narsilio, G. & Tordesillas, A. Machine learning framework for analysis of transport through complex networks in porous, granular media: A focus on permeability. Phys. Rev. E 94, 022904 (2016).

Stenzel, O., Pecho, O., Holzer, L., Neumann, M. & Schmidt, V. Predicting effective conductivities based on geometric microstructure characteristics. AIChE J. 62, 1834–1843 (2016).

Neumann, M., Stenzel, O., Willot, F., Holzer, L. & Schmidt, V. Quantifying the influence of microstructure on effective conductivity and permeability: virtual materials testing. Int. J. Solids Struct. (2019).

Barman, S., Rootzén, H. & Bolin, D. Prediction of diffusive transport through polymer films from characteristics of the pore geometry. AIChE J. 65, 446–457 (2019).

Kondo, R., Yamakawa, S., Masuoka, Y., Tajima, S. & Asahi, R. Microstructure recognition using convolutional neural networks for prediction of ionic conductivity in ceramics. Acta Mater. 141, 29–38 (2017).

Wu, J., Yin, X. & Xiao, H. Seeing permeability from images: Fast prediction with convolutional neural networks. Sci. Bull. 63, 1215–1222 (2018).

Sudakov, O., Burnaev, E. & Koroteev, D. Driving digital rock towards machine learning: Predicting permeability with gradient boosting and deep neural networks. Comput. Geosci. 127, 91–98 (2019).

Stenzel, O., Pecho, O., Holzer, L., Neumann, M. & Schmidt, V. Big data for microstructure-property relationships: A case study of predicting effective conductivities. AIChE J. 63, 4224–4232 (2017).

Kamrava, S., Tahmasebi, P. & Sahimi, M. Linking morphology of porous media to their macroscopic permeability by deep learning. Transport Porous Med. 131, 427–448 (2020).

Wu, H., Fang, W.-Z., Kang, Q., Tao, W.-Q. & Qiao, R. Predicting effective diffusivity of porous media from images by deep learning. Sci. Rep. 9, 20387 (2019).

Lubbers, N., Lookman, T. & Barros, K. Inferring low-dimensional microstructure representations using convolutional neural networks. Phys. Rev. E 96, 052111 (2017).

Röding, M., Ma, Z. & Torquato, S. Predicting permeability via statistical learning on higher-order microstructural information. ZENODO https://doi.org/10.5281/zenodo.3752765 (2020).

Pecho, O. et al. 3D microstructure effects in Ni-YSZ anodes: Prediction of effective transport properties and optimization of redox stability. Materials 8, 5554–5585 (2015).

Ma, Z. & Torquato, S. Precise algorithms to compute surface correlation functions of two-phase heterogeneous media and their applications. Phys. Rev. E 98, 013307 (2018).

Scholz, C. et al. Direct relations between morphology and transport in Boolean models. Phys. Rev. E 92, 043023 (2015).

Howard, M. et al. Connecting solute diffusion to morphology in triblock copolymer membranes. Macromolecules 53(7), 2336–2343 (2020).

Lang, A. & Potthoff, J. Fast simulation of gaussian random fields. Monte Carlo Methods Appl. 17, 195–214 (2011).

Matérn, B. Spatial Variation (Springer, New York, 1986).

Gebäck, T. & Heintz, A. A lattice Boltzmann method for the advection–diffusion equation with Neumann boundary conditions. Commun. Comput. Phys. 15, 487–505 (2014).

Gebäck, T., Marucci, M., Boissier, C., Arnehed, J. & Heintz, A. Investigation of the effect of the tortuous pore structure on water diffusion through a polymer film using lattice Boltzmann simulations. J. Phys. Chem. B 119, 5220–5227 (2015).

Perram, J. & Wertheim, M. Statistical mechanics of hard ellipsoids. I. Overlap algorithm and the contact function. J. Comput. Phys. 58, 409–416 (1985).

Bezanson, J., Edelman, A., Karpinski, S. & Shah, V. Julia: A fresh approach to numerical computing. SIAM Rev. 59, 65–98 (2017).

Ginzburg, I., Verhaeghe, F. & d’Humieres, D. Study of simple hydrodynamic solutions with the two-relaxation-times lattice Boltzmann scheme. Commun. Comput. Phys. 3, 519–581 (2008).

Zou, Q. & He, X. On pressure and velocity boundary conditions for the lattice Boltzmann BGK model. Phys. Fluids 9, 1591–1598 (1997).

Ma, Z. & Torquato, S. Random scalar fields and hyperuniformity. J. Appl. Phys. 121, 244904 (2017).

Röding, M., Svensson, P. & Lorén, N. Functional regression-based fluid permeability prediction in monodisperse sphere packings from isotropic two-point correlation functions. Comput. Mater. Sci. 134, 126–131 (2017).

Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. B 58, 267–288 (1996).

Kingma, D. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

Abadi, M. et al. TensorFlow: Large-scale machine learning on heterogeneous systems (2015). https://www.tensorflow.org/. Software available from tensorflow.org.

Acknowledgements

M.R. acknowledges the financial support of the Swedish Research Council (Grant Number 2016-03809) and the Swedish Research Council for Sustainable Development (Grant Number 2019-01295). Z. M. and S. T. acknowledge the support of the Air Force Office of Scientific Research Program on Mechanics of Multifunctional Materials and Microsystems under Award No. FA9550-18-1-0514. The computations were in part performed on resources at Chalmers Centre for Computational Science and Engineering (C3SE) provided by the Swedish National Infrastructure for Computing (SNIC). A GPU used for part of this research was donated by the NVIDIA Corporation. Tobias Gebäck is acknowledged for help concerning the lattice Boltzmann computations. Victor Wåhlstrand Skärström is acknowledged for producing Fig. 8.

Author information

Authors and Affiliations

Contributions

M.R., Z.M., and S.T. conceived the project and designed the study, and S.T. supervised the work. M.R. and Z.M. performed the computations and analysis. M.R., Z.M, and S.T. wrote and prepared the manuscript. M.R. and Z.M. contributed equally to this work.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Röding, M., Ma, Z. & Torquato, S. Predicting permeability via statistical learning on higher-order microstructural information. Sci Rep 10, 15239 (2020). https://doi.org/10.1038/s41598-020-72085-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-72085-5

This article is cited by

-

Investigating Microstructure–Property Relationships of Nonwovens by Model-Based Virtual Material Testing

Transport in Porous Media (2024)

-

A data-driven framework for permeability prediction of natural porous rocks via microstructural characterization and pore-scale simulation

Engineering with Computers (2023)

-

Inverse design of anisotropic spinodoid materials with prescribed diffusivity

Scientific Reports (2022)

-

Machine learning methods for estimating permeability of a reservoir

International Journal of System Assurance Engineering and Management (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.