Abstract

Quantitative characterization of root system architecture and its development is important for the assessment of a complete plant phenotype. To enable high-throughput phenotyping of plant roots, efficient solutions for automated image analysis are required. Since plants naturally grow in an opaque soil environment, automated analysis of optically heterogeneous and noisy soil-root images represents a challenging task. Here, we present a user-friendly GUI-based tool for semi-automated analysis of soil-root images that performs efficient image segmentation using a combination of adaptive thresholding and morphological filtering and derives various quantitative descriptors of the root system architecture, including total length, local width, projection area, volume, spatial distribution and orientation. The results of our semi-automated root image segmentation are in good conformity with the reference ground-truth data (mean Dice coefficient = 0.82) and compare favorably with those of IJ_Rhizo and GiA Roots. Root biomass values calculated with our tool within a few seconds show a high correlation (Pearson coefficient = 0.8) with the results obtained using conventional, purely manual segmentation approaches. Equipped with a number of adjustable parameters and optional correction tools, our software is capable of significantly accelerating quantitative analysis and phenotyping of soil-, agar- and washed-root images.


Introduction

Plant roots are key drivers of plant development and growth. They absorb water and inorganic nutrients from the soil1,2,3 and anchor the plant body4,5. Root system architecture (RSA), the spatial configuration of a root system1, is known to be an important phenotypic feature closely related to crop yield variability upon changes in environmental conditions6,7. In general, the RSA and its response to the environment depend on multiple factors, including the plant species, the plant genotype, the composition of the soil, the availability of nutrients and the environmental conditions8. The emerging discipline of plant phenomics aims to extract anatomical and physiological plant properties in order to study plant performance under given conditions1. In the case of roots, the relevant traits include descriptors of global and local root morphology (such as total length, area, volume and diameter, or lateral branching, the direction of a tangent, etc.)9,10,11,12. Monitoring these traits enables conclusions about the ability of plants to respond to variable environmental factors such as drought, cold, starvation, etc.13.

In recent years, a number of approaches to root imaging and image analysis have been suggested14. However, most of these works rely on the measurement of washed roots or roots grown in artificial, optically transparent media such as liquids or gels15,16 that allow a straightforward image analysis. Further non-destructive methods including X-ray computed tomography17,18,19,20, nuclear magnetic resonance (NMR) microscopy21, magnetic resonance imaging22,23 and laser scanning24 provide unique insights into the 3D organization of living root architecture; however, their throughput capabilities are presently rather limited. To enable 2D imaging of roots in a soil-like environment, near-infrared (NIR) imaging of roots growing along the surfaces of transparent pots or minirhizotrons was designed and tested25,26. Special long-pass filters were used to block root exposure to visible light, and the images were taken by an NIR-sensitive camera with suitable illumination. The system allows non-invasive acquisition of root images in darkness26.

To analyze a large number of root images in an automated high-throughput manner, a number of software tools are available. Most of these tools were, however, designed to extract RSA traits from specific imaging systems, e.g., images from minirhizotron25 and images of roots grown in agar27. In addition, some general tools are available for an in-depth analysis of monocot root systems regardless of root structure. These tools depend on significant user input for processing even though they can be used in a batch mode28,29,30,31. Moreover, some tools can be used as a plugin for general image processing platforms like ImageJ to perform specific tasks for the manual segmentation of roots in the image32.

The majority of software for root image analysis is tailored to artificial setups such as transparent growing media and cannot be applied to the analysis of heterogeneous and noisy soil-root images. Exceptions are software for the analysis of X-ray micro-computed tomography (μCT) images33 and Root134, which still requires extensive human-computer interaction and is suitable for 3D X-ray tomography images. In recently published works35,36,37, novel machine and deep learning approaches to automated segmentation of soil-grown root images were presented. However, the presented approaches rely on color information and require a substantial amount of ground-truth training data as well as substantial computational resources.

In this work, we present a handy GUI-based tool for semi-automated root image analysis (saRIA), which enables rapid segmentation of diverse 2D root images, including potting soil and artificial media setups, in a high-throughput manner. Based on a combination of adaptive image enhancement, adjustable thresholding and filtering as well as optional manual correction, saRIA represents a broadly applicable tool for quantitative analysis of diverse root image modalities as well as for the generation of quality ground-truth reference images for the training of advanced machine learning/deep learning algorithms.

The paper is structured as follows: The Materials and Methods section describes the methodological framework of saRIA, including data preparation, the segmentation algorithm, and root trait computation. The Results section shows the segmentation capabilities of saRIA in comparison to freely available tools and presents the results of root trait derivation from manually and saRIA-segmented root images. In the Discussion, we summarize the results of an evaluation study using the saRIA root image segmentation and give an outlook on possible future improvements.

Methods

Three different modalities for imaging of root system architecture were analyzed in this study:

- Soil-root image: This type of digital image is taken by a monochrome camera (UI-5490SE-M-GL, IDS) with LED illumination (UV, 380 nm) in a custom-made imaging box similar to our previously published setup26. In brief, plants are grown in transparent pots [77 × 77 × 97 mm (W × L × H)] filled with potting substrate (Potgrond P, Klasmann). An example of soil-root images acquired with this system is shown in Fig. 1a. Depending on the developmental stage, plant health and environmental factors (e.g., temperature, humidity), these images may exhibit diverse artifacts, including low contrast between the root architecture and heterogeneous soil, inhomogeneous scene illumination (i.e. a vertical intensity gradient) and water condensation on the pot walls, see Fig. 1b. Identification of relevant root architecture in such structurally and statistically noisy images represents a challenging task.

- Agar-root image: In this experiment, the plants were grown on 1/2 MS, 1.5% (w/v) agar medium (pH 5.6 without sugar) in Petri dishes for 5 days. The images were captured by scanning the dishes in grayscale at a resolution of 300 dots per inch using an Epson Expression 10000 XL scanner (Seiko Epson)26. An exemplary image of rapeseed roots is shown in Fig. 1c. This image has a clear contrast between roots and a homogeneous background. Nevertheless, the background pixels have some morphological artifacts, which will be discussed in the next subsection.

- Washed-roots image: Maize plants were grown for 3 weeks in transparent pots filled with a 1:1 mixture of substrate (self-made compost, IPK) and sand. This digital image is obtained by scanning the washed maize roots on an Epson Expression 10000 XL scanner (Seiko Epson), as shown in Fig. 1d. Compared to the above two types of root images, it is less noisy and the contrast between roots and background is significantly higher.

Consequently, the classification between the root and non-root pixels in these three image modalities is unequally difficult. Figure 2 shows histograms and pairwise distances (Pdist = pdist2(A, B)) between root and non-root grayscale distributions corresponding to soil-root, agar and washed roots images in Fig. 1, respectively. As expected, soil-root images exhibit a strong overlap between grayscale values of root and non-root pixels and the distance between them is the lowest among these three imaging modalities. Therefore, here we focused on soil-root image analysis only. Application of our approach to higher contrast image modalities (i.e. agar, washed roots images) is, however, trivial, and only requires inverting the grayscale values.

Histograms of root (blue) and non-root (green) intensity values of different imaging modalities shown in Fig. 1: (a) soil-root, (b) agar grown roots and (c) washed roots images. The pairwise distance (Pdist) between root and non-root histograms, which serves as a quantitative measure for separability of root and non-root image structures, indicates that soil-root images represent the most challenging modality for image segmentation.
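The separability measure described above can be illustrated with a small sketch. It is written in plain Python rather than MATLAB, and it assumes that the histograms are normalized to unit sum and compared with the Euclidean distance (the default metric of MATLAB's `pdist2`); the bin count, the toy gray values and the function names `normalized_histogram` and `histogram_distance` are hypothetical and not taken from saRIA.

```python
import math

def normalized_histogram(values, bins=256, value_range=(0, 256)):
    """Histogram of gray values whose bin heights sum to 1."""
    lo, hi = value_range
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        # Clamp the bin index so that values on the range limits stay inside
        idx = min(max(int((v - lo) / width), 0), bins - 1)
        counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * bins

def histogram_distance(hist_a, hist_b):
    """Euclidean distance between two equally binned histograms (assumed metric)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(hist_a, hist_b)))

# Toy gray values standing in for root and non-root pixels (illustrative only)
root_pixels = [200, 210, 220, 230]
soil_pixels = [40, 50, 60, 210]

h_root = normalized_histogram(root_pixels, bins=4)
h_soil = normalized_histogram(soil_pixels, bins=4)
distance = histogram_distance(h_root, h_soil)
```

Under these assumptions a larger distance indicates better separability; two identical histograms give a distance of zero, which corresponds to the strongly overlapping soil-root case.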

Image analysis

Image analysis algorithms, as well as the graphical user interface (GUI), were implemented in the MATLAB 2018b environment38. The major goal of image analysis is the segmentation of root architecture and the calculation of phenotypic features of root architecture and image intensity (i.e. color). In the case of color images, the input image is converted to a grayscale image using the rgb2gray MATLAB routine. In general, the pipeline of image analysis includes the following steps:

- Image I/O. Most standard image formats (such as *.jpg, *.png, *.bmp, *.tif) can be imported for further processing and analysis. Stepwise processing of single images as well as automated processing of large image datasets is implemented.

- Image preprocessing. Depending on the imaging modality (e.g., soil-root or agar-root images) and the presence of noisy or structural artifacts, preprocessing steps may include cropping of the region of interest (ROI), inverting of image intensity, despeckling and smoothing. In the case of agar and washed-root images, inversion of image intensity has to be performed prior to image analysis. Otherwise, the procedure of agar and washed-root image analysis is similar to soil-root images.

- Adaptive image thresholding. Preprocessed images are segmented into foreground (roots) and background using adaptive thresholding based on the Gaussian-weighted mean as suggested by39. This technique tolerates global inhomogeneity of image intensity such as the vertical intensity gradient in our soil-root images. An example of adaptive thresholding of an Arabidopsis soil-root image (Fig. 3a) is shown in Fig. 3b.

-

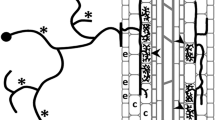

Morphological filtering. To remove white noise and small non-root blob-like structures (such as sand, gravel or water condensation artifact in Fig. 1b) morphological filtering is applied. Thereby, roots are considered to be elongated line-/curve-like structures that differ from this kind of non-root blobs with respect to their area, length, and shape (i.e. eccentricity). In the case the root region represents a single connected structure, filtering can be performed merely by applying intensity and area thresholds. If roots are represented by disconnected structures, differentiation of root from non-root structures is performed using additional shape descriptors such as length and eccentricity, i.e. a descriptor of the object’s Eigenellipse elongation, which is zero for an absolutely round and 1 for a line-object. By appropriate setting of thresholds for these three parameters, non-root blobby structures are removed. Figure 3c shows an example of morphological filtering of a preliminary segmented root image.

- Skeletonization. Root skeleton is calculated on the basis of the segmented and filtered image. In addition to the filtering steps described above, additional thinning or eroding of the binary image is applied to suppress high-frequency noise. The exemplary image for the skeleton extraction is shown in Fig. 3d.

- Root feature calculation. The distance transform of the cleaned binary image is calculated for assessment of the local root width (or diameter) measured in pixels of the root skeleton. Further root features include root length, root angles with respect to a vertical axis, branching and end points of the root skeleton, the intensity of root pixels and their standard statistical descriptors (i.e. mean, stdev values). The complete list of a total of 44 root traits can be found in Supplementary Information (Table S1). Note that all traits are extracted using pixel-wise calculation irrespective of the number of root systems in the image. In addition, the RSA traits can also be written out in mm. For this purpose, the user has to set the pixel-to-mm conversion factor in the saRIA GUI. Here, we derived the pixel-to-mm factor by measuring a reference line (white color bar) in the images as shown in Fig. 1. The pixel-to-mm conversion factor (CF) is then defined as follows:

$$\mathrm{CF}=\frac{\text{length of the reference line in mm}}{\text{length of the reference line in pixels}}$$(1)

The workflow of image analysis is also shown in Fig. 4.

- Evaluation. To examine the performance of image segmentation, a standard statistical metric, the Dice similarity coefficient (DSC)40, is used. The DSC evaluates the spatial overlap between two binary images, and its value ranges from 0 (no overlap) to 1 (perfect overlap). The DSC is defined as follows:

$$\mathrm{DSC}=\frac{2\,TP}{2\,TP+FP+FN}$$(2)

where TP, FP, and FN are true positive, false positive and false negative pixels, respectively.
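As an illustration of the evaluation step, the following minimal sketch computes the Dice similarity coefficient from pixel-wise counts of true positives, false positives and false negatives for two binary masks given as nested lists. It is plain Python, not the saRIA implementation; the function name `dice_coefficient` and the convention of returning 1.0 for two empty masks are assumptions made for this example.

```python
def dice_coefficient(predicted, reference):
    """Dice similarity coefficient of two equally sized binary masks."""
    tp = fp = fn = 0
    for row_p, row_r in zip(predicted, reference):
        for p, r in zip(row_p, row_r):
            if p and r:
                tp += 1          # root pixel in both masks
            elif p and not r:
                fp += 1          # root only in the predicted mask
            elif r and not p:
                fn += 1          # root only in the reference mask
    denominator = 2 * tp + fp + fn
    # Assumed convention: two empty masks overlap perfectly
    return 1.0 if denominator == 0 else 2 * tp / denominator

# Toy masks (illustrative only): 2 shared pixels, 1 false positive, 1 false negative
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
dsc = dice_coefficient(predicted, reference)
```

For the toy masks the counts are TP = 2, FP = 1 and FN = 1, so the coefficient evaluates to 4/6.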

Results

Semi-automated segmentation of soil-root images

To evaluate the performance of our algorithms, segmentation of 100 Arabidopsis soil-root images was performed automatically and compared with the results of fully manual segmentation. Manual segmentation was also carried out with saRIA by applying a low threshold on intensity to select all high- as well as low-intensity roots, followed by manual removal of all remaining artifacts, including solitary objects as well as noise regions attached to the root (which cannot otherwise be identified and quantified as separate objects), using the ‘clearInside’ saRIA tool. This step was done by two biologists (co-authors of this manuscript) with expertise in RSA. In contrast, for semi-automated image segmentation the user merely has to adjust the four basic algorithmic parameters (controlled by the four GUI sliders) according to the subjectively best result of visual inspection of a few test images. Once the best combination of algorithmic parameters is defined, segmentation of all remaining images can be performed in a fully unsupervised manner.

Figure 5 shows the performance of semi-automated image segmentation compared to manual segmentation of 100 soil-root images for an intensity threshold (T) of 0.12, a minimum area of 450, a minimum length of 46 and a minimum eccentricity of 0.49. The figure shows that approximately 90% of the images have DSC values greater than 0.7 and that the mean DSC value is 0.82.

The accuracy of root image detection using saRIA vs. manual segmentation (ground-truth data) in terms of the Dice similarity coefficient (DSC). The green line indicates the mean DSC value over 100 tested soil-root images. The red bars indicate a few cases of poor saRIA performance with a low DSC value, which correspond to roots in the early stage of plant development.
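How thresholds on area, length and eccentricity can separate elongated root segments from blob-like artifacts may be sketched as follows. This is a simplified plain-Python illustration, not the saRIA code: it assumes 8-connected components, derives eccentricity and length from the second central moments of the pixel coordinates (length is taken as the major axis of the equivalent ellipse, which is only one possible definition), and keeps a component only if all three descriptors reach their minimum. All function names are hypothetical.

```python
import math
from collections import deque

def connected_components(mask):
    """Return lists of (row, col) pixels for each 8-connected foreground object."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, pixels = deque([(r, c)]), []
                while queue:
                    y, x = queue.popleft()
                    pixels.append((y, x))
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = y + dy, x + dx
                            if (0 <= ny < rows and 0 <= nx < cols
                                    and mask[ny][nx] and not seen[ny][nx]):
                                seen[ny][nx] = True
                                queue.append((ny, nx))
                components.append(pixels)
    return components

def shape_descriptors(pixels):
    """Area, major-axis length and eccentricity from second central moments."""
    n = len(pixels)
    my = sum(y for y, _ in pixels) / n
    mx = sum(x for _, x in pixels) / n
    syy = sum((y - my) ** 2 for y, _ in pixels) / n
    sxx = sum((x - mx) ** 2 for _, x in pixels) / n
    sxy = sum((y - my) * (x - mx) for y, x in pixels) / n
    half_trace = (sxx + syy) / 2
    spread = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    major = half_trace + spread             # larger eigenvalue of the covariance
    minor = max(half_trace - spread, 0.0)   # smaller eigenvalue
    eccentricity = math.sqrt(1 - minor / major) if major > 0 else 0.0
    length = 4 * math.sqrt(major)           # assumed definition of object length
    return n, length, eccentricity

def filter_components(mask, min_area, min_length, min_eccentricity):
    """Keep only objects that reach all three minimum values."""
    cleaned = [[0] * len(mask[0]) for _ in mask]
    for pixels in connected_components(mask):
        area, length, ecc = shape_descriptors(pixels)
        if area >= min_area and length >= min_length and ecc >= min_eccentricity:
            for y, x in pixels:
                cleaned[y][x] = 1
    return cleaned

# Toy mask: a 10-pixel line (root-like) and a 2 x 2 blob (artifact-like)
mask = [[0] * 12 for _ in range(7)]
for x in range(1, 11):
    mask[1][x] = 1
for y in (4, 5):
    for x in (2, 3):
        mask[y][x] = 1
cleaned = filter_components(mask, min_area=5, min_length=5, min_eccentricity=0.5)
```

In the toy mask the line has eccentricity 1 and is kept, whereas the square blob has eccentricity 0 and is removed. The thresholds used here are chosen for the toy example and are unrelated to the parameter values reported above.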

To validate the robustness of the saRIA segmentation of the above 100 images, we also compared it with two other freely available tools, IJ_Rhizo41 and GiA Roots42. Table 1 shows the mean DSC value for the subjectively best possible configuration of IJ_Rhizo, of GiA Roots with three different thresholding methods, and of saRIA. The table shows that saRIA, with the combination of Gaussian adaptive thresholding and all morphological parameters (area, length, circularity), significantly outperformed IJ_Rhizo and GiA Roots. A brief discussion of the parameter configuration of IJ_Rhizo and GiA Roots can be found in Pierret et al.41 and Galkovskyi et al.42, respectively.

Evaluation of phenotypic traits vs. SmartRoot

Here, the results of phenotypic root characterization obtained with saRIA are evaluated in comparison with SmartRoot32. SmartRoot is the most widely used tool for trait quantification of disconnected RSA; each part of the root is traced manually by placing multiple landmarks that are finally interconnected to form the root skeleton. However, SmartRoot does not deliver a single segmented binary (reference) image for comparison with saRIA. Therefore, the root traits derived from such manually traced images are taken as reference ground-truth data. To quantify the (dis)similarity between saRIA and SmartRoot results, the coefficient of determination R2 and the significance level (p-value) are used. R2 measures the proportion of variance in the saRIA-calculated traits that is explained by a linear fit to the ground-truth data, and the p-value serves to validate the fitted model. Figure 6 shows the correlation between the SmartRoot (x-axis) and saRIA (y-axis) outputs for three traits, where each point denotes one particular image out of 126 Arabidopsis root images acquired with our in-house soil-root imaging system. Note that the images used for trait evaluation are different from the above segmentation evaluation data. The three traits used for evaluation are the total root length, total root surface area, and total root volume. As one can see, for all three traits the correlation between saRIA (semi-automated) and SmartRoot (manual) results exhibits R2 values higher than 0.84, 0.86 and 0.77 with p-values of 7.7e-53, 5.25e-55 and 3.35e-42, respectively.

Correlation between root traits calculated using semi-automated saRIA (y-axis) and manual SmartRoot (x-axis) image segmentation. Each point represents a trait value estimated from one of 126 soil-root images. The red color solid line and dotted lines represent a fitted curve and 95% confidence bounds, respectively. The R2 value indicates good conformity between saRIA and SmartRoot results of image segmentation and trait calculation.
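For readers who want to reproduce this kind of comparison on their own trait tables, the sketch below computes the coefficient of determination of a simple linear fit between two trait vectors in plain Python. For a straight-line fit with intercept, R2 equals the squared Pearson correlation, which is what the function uses. The function name `r_squared` and the toy numbers are hypothetical and are not values from this study; the p-value is not computed here.

```python
def r_squared(reference, estimate):
    """R^2 of a least-squares line fitted to (reference, estimate) pairs."""
    n = len(reference)
    mean_x = sum(reference) / n
    mean_y = sum(estimate) / n
    sxx = sum((x - mean_x) ** 2 for x in reference)
    syy = sum((y - mean_y) ** 2 for y in estimate)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(reference, estimate))
    # Squared Pearson correlation; undefined if either vector is constant
    return (sxy * sxy) / (sxx * syy)

# Toy trait values (illustrative only), e.g. total root length per image
manual_length = [10.0, 20.0, 30.0, 40.0]
perfect_estimate = [2.0 * v + 1.0 for v in manual_length]
noisy_estimate = [11.0, 19.0, 33.0, 38.0]

r2_perfect = r_squared(manual_length, perfect_estimate)
r2_noisy = r_squared(manual_length, noisy_estimate)
```

A perfectly linear relation gives an R2 of 1 regardless of slope and offset, so a high R2 alone does not rule out a systematic bias between the two methods.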

Visualization of root features

In addition to numerical outputs, saRIA generates images of root features (e.g., distance maps, skeletons, width distributions, etc.) for visualization purposes. Figure 7 shows example images of root width and orientation. The root width is calculated as the Euclidean distance between root skeleton and root boundary pixels. Figure 7b depicts the Euclidean distance map of the root object in Fig. 7a, where the high-intensity central pixels represent the root width. The corresponding width color map is shown in Fig. 7c. The width feature is useful for calculating the root volume and surface area for biomass estimation.

Visualization of root features: (a) binary root image, (b) corresponding Euclidean distance map, (c) overlay of the binary image with the root skeleton colored according to the local root width (gray-scale map), (d) overlay of the binary image with the root skeleton colored according to the local root tangent (black-yellow color map).
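The width calculation from the distance map can be sketched as follows. The example uses a brute-force Euclidean distance transform in plain Python, which is adequate only for small images; it assumes that the skeleton pixels are already known and reads the distance value at each of them, and it converts pixels to mm with a conversion factor defined as the reference length in mm divided by its length in pixels. The function name `distance_to_background` and the toy numbers (a 10 mm reference line spanning 100 pixels) are hypothetical.

```python
import math

def distance_to_background(mask):
    """Euclidean distance of every foreground pixel to the nearest background pixel."""
    rows, cols = len(mask), len(mask[0])
    background = [(y, x) for y in range(rows) for x in range(cols)
                  if not mask[y][x]]
    dist = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            if mask[y][x]:
                # Brute force over all background pixels; inf if there are none
                dist[y][x] = (min(math.hypot(y - by, x - bx)
                                  for by, bx in background)
                              if background else math.inf)
    return dist

# Toy image: a horizontal band of foreground, 5 pixels thick (rows 2..6)
mask = [[1 if 2 <= y <= 6 else 0 for _ in range(9)] for y in range(9)]
dist = distance_to_background(mask)

# Assumed skeleton: the center line of the band
skeleton = [(4, x) for x in range(9)]
widths_px = [dist[y][x] for y, x in skeleton]

# Pixel-to-mm conversion with hypothetical reference measurements
conversion_factor = 10.0 / 100.0     # mm per pixel
widths_mm = [w * conversion_factor for w in widths_px]
```

In this toy case every skeleton pixel lies three pixels away from the nearest background pixel. Whether this distance is reported directly or doubled to give a diameter is a convention that the sketch leaves to the user.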

Figure 7d displays the absolute orientation of each root skeleton pixel with respect to the horizontal axis in a black-yellow color-map representation. Here, a validated linear regression model was used to calculate the slope at each pixel of the skeleton image. The local slope at the i-th pixel is obtained by fitting a tangent line to the fraction of the root skeleton framed by a 15 × 15 pixel mask around the i-th pixel. An exemplary figure for the local linear fit can be found in Supplementary Information (Fig. S1). The validated regression model means that only pixels satisfying the regression model with a high confidence level (i.e. p-value < 0.05 and R2 > 0.5) were accepted. Pixels with low confidence of the linear fit, such as root branches with a non-linear distribution of skeleton pixels, were excluded from the calculation of global statistics of root orientation.
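The local tangent estimation can be illustrated with a simplified plain-Python sketch. It fits a least-squares line to the skeleton pixels inside a 15 × 15 window (half-width 7) and accepts the fit only if R2 exceeds 0.5. Two simplifications are assumptions of this example and not part of the described method: the p-value test is omitted, and exactly vertical or horizontal segments, for which the regression of y on x is degenerate, are returned directly as 90° and 0°. The function name `local_orientation` is hypothetical.

```python
import math

def local_orientation(skeleton, center, half_window=7, min_r2=0.5):
    """Absolute angle (degrees) to the horizontal axis, or None if the fit is rejected."""
    cy, cx = center
    points = [(x, y) for (y, x) in set(skeleton)
              if abs(y - cy) <= half_window and abs(x - cx) <= half_window]
    n = len(points)
    if n < 3:
        return None                      # too few pixels for a meaningful fit
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mean_x) ** 2 for p in points)
    syy = sum((p[1] - mean_y) ** 2 for p in points)
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points)
    if sxx == 0:
        return 90.0                      # exactly vertical segment
    if syy == 0:
        return 0.0                       # exactly horizontal segment
    r2 = (sxy * sxy) / (sxx * syy)
    if r2 <= min_r2:
        return None                      # e.g. branching points
    return abs(math.degrees(math.atan(sxy / sxx)))

# Toy skeletons (illustrative only)
diagonal = [(i, i) for i in range(21)]
cross = [(10, x) for x in range(3, 18)] + [(y, 10) for y in range(3, 18)]

angle_diagonal = local_orientation(diagonal, center=(10, 10))
angle_cross = local_orientation(cross, center=(10, 10))
```

The diagonal skeleton yields an angle of about 45°, whereas the crossing point of a horizontal and a vertical branch has no linear trend and is rejected, which mirrors the exclusion of branch pixels from the global orientation statistics.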

Discussion

The objectives of our GUI-based saRIA tool are to automate the time-consuming manual segmentation of structurally complex and noisy root images and to enable the calculation of RSA traits from different root imaging modalities, including soil-, agar- and washed-root images. Using this approach, root architecture can be rapidly segmented and quantified by adjusting a small set of algorithmic parameters. Segmentation with saRIA is particularly efficient when background structures differ from roots in geometrical parameters (such as shape and size) and grayscale intensity. Artifacts resembling the optical appearance of roots, e.g., scratches on the pot surface or high-intensity areas resulting from water condensation, are, in contrast, difficult to eliminate in a fully automated manner. Such artifacts can, however, be removed using a manual segmentation tool that is also available in saRIA.

The accuracy of trait estimation in saRIA depends on the quality of semi-automated image segmentation. Our solution for analyzing a large number of images is to define the best possible set of algorithmic parameters for a subset of representative root images and then to apply this configuration to all remaining images in a fully automated manner. Here, 15% of the input data covering different scenarios (images with low, medium and high root density) were used to determine the best possible configuration settings.

The segmentation quality reported in Table 1 shows that the global thresholding methods in IJ_Rhizo (bi-level threshold) and GiA Roots (single-level threshold) perform worse than adaptive thresholding methods. Global thresholding applies one or two threshold values to the complete image, which preserves high-intensity noisy objects and removes low-intensity roots in the soil-root image. GiA Roots also implements adaptive thresholding, but it lacks the Gaussian smoothing filter in the preprocessing step and the morphological constraints (i.e. length and circularity) on binary root objects, marked as x in Table 1. Therefore, the combination of adaptive thresholding and morphological filtering in saRIA yields more accurate segmentation of soil-root images.
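Why a locally adaptive threshold copes with an intensity gradient that defeats a global one can be shown with a small plain-Python sketch. It computes a Gaussian-weighted local mean with a separable kernel and replicated borders and marks a pixel as foreground if it exceeds that mean by a relative margin. The kernel radius of 3σ, the `scale` factor of 1.1, the toy image and all function names are assumptions of this example; they are not the parameters or the code of saRIA or of the MATLAB routines it builds on.

```python
import math

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian weights with a radius of 3 * sigma."""
    radius = int(3 * sigma)
    weights = [math.exp(-(d * d) / (2 * sigma * sigma))
               for d in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def gaussian_local_mean(image, sigma):
    """Separable Gaussian smoothing with replicated image borders."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    rows, cols = len(image), len(image[0])
    horizontal = [[sum(kernel[k] * row[min(max(x + k - radius, 0), cols - 1)]
                       for k in range(len(kernel)))
                   for x in range(cols)] for row in image]
    return [[sum(kernel[k] * horizontal[min(max(y + k - radius, 0), rows - 1)][x]
                 for k in range(len(kernel)))
             for x in range(cols)] for y in range(rows)]

def adaptive_threshold(image, sigma=3.0, scale=1.1):
    """Foreground where a pixel exceeds scale * Gaussian-weighted local mean."""
    local_mean = gaussian_local_mean(image, sigma)
    return [[1 if image[y][x] > scale * local_mean[y][x] else 0
             for x in range(len(image[0]))] for y in range(len(image))]

# Toy image: background brightening from top to bottom, one bright "root" column
size, root_column = 20, 10
image = [[(0.2 + 0.02 * y) * (1.5 if x == root_column else 1.0)
          for x in range(size)] for y in range(size)]

adaptive_mask = adaptive_threshold(image)

# Global threshold just low enough to keep the darkest root pixel (0.3)
global_mask = [[1 if v >= 0.3 else 0 for v in row] for row in image]
global_false_positives = sum(global_mask[y][x] for y in range(size)
                             for x in range(size) if x != root_column)
```

In this toy image the darkest root pixel at the top is darker than most of the background further down, so any global threshold that keeps the full root also admits background pixels, while the adaptive rule compares each pixel only with its own neighbourhood.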

From the summary of our automated image analysis in Fig. 5, it is evident that the accuracy of root image segmentation tends to be higher (DSC > 0.9) for images with large root architecture. Figure 8 shows example images of small and large root architecture that exhibit low and high DSC of automated segmentation vs. ground truth, respectively. The reason is that large root architecture requires a low threshold value and high values of the morphological parameters, whereas in images of small root architecture most of the disconnected root components are small. Applying the same configuration therefore removes small roots and keeps structures (both disconnected roots and heterogeneous soil) that are large. This observation confirms that the relative error in separating small root architecture (see red bars in Fig. 5) from background pixels is higher for automated segmentation than in the case of large roots. However, these artifacts can be overcome by setting low values for the morphological parameters in the segmentation configuration.

Comparison of root image segmentation for young/small vs. large/adult plants. The top row shows (a) original, (b) ground-truth and (c) high-DSC saRIA-segmented images of a large root; the bottom row (d–f) shows an example of a relatively small root at the early stage of Arabidopsis plant development with a low DSC, corresponding to red bar number 91 in Fig. 5.

The quantitative comparison of saRIA is limited to SmartRoot because other software solutions for root image analysis are either tailored to a non-interrupted representation of root architecture, like RootNav43, or restricted to high-contrast imaging modalities, e.g., agar-grown or washed roots, like GiA Roots42. The latter is, moreover, closed source, no longer under development, and did not yield more accurate segmentation, as shown in Table 1.

As mentioned earlier, saRIA is capable of calculating 44 root traits. In brief, they are grouped into 11 features: area (number of root pixels), number of disconnected root objects, total length, surface area, volume, number of branching and ending points, statistical distribution (mean, median, standard deviation, skewness, kurtosis and percentile) of root geometry in the horizontal and vertical directions, width and orientation. Among those, three features that are important for root biomass calculation are presented for the evaluation of saRIA traits.

The results of our evaluation tests in Fig. 6 show that root traits obtained using saRIA are highly correlated (R2 > 0.8, with the exception of root volume at R2 > 0.77) and significant (p-value < 0.05) with respect to manual segmentation in the SmartRoot software. Consequently, one can perform comparatively high-quality root phenotyping with saRIA 20 or more times faster than with manual annotation of root structures pixel by pixel.

The difference in trait estimates between saRIA and SmartRoot might result from the image segmentation parameters and from root thickness. First, the morphological parameters remove tiny roots that have a small area and length. Second, the local thickness of roots in saRIA is defined as the average diameter of automatically segmented roots, which include high-intensity structures originating from tiny root hairs that can be avoided in manual segmentation. This may lead to differences in average root width, length and volume assessed with saRIA vs. SmartRoot. However, saRIA allows parameters to be adjusted interactively to improve trait extraction and even to produce a set of alternative image segmentations in an automated manner, i.e. automated root tracing and trait extraction for the selected configuration.

Here, we present a user-friendly GUI-based software solution for high-throughput analysis of root images of different image modalities, including challenging soil-root images. Figure 9 shows the GUI of the saRIA software, which is freely available as a precompiled executable program from https://ag-ba.ipk-gatersleben.de/saria.html. Further examples of agar and washed-root image segmentation using saRIA are included in the Supplementary Information, see Figs. S2 and S3. The saRIA software can be applied to the analysis of single images or large image datasets to automatically detect and extract multiple root traits. This software is designed for end-users with limited technical knowledge and gives them a largely automated analysis of complex soil-root images in an intuitive and transparent manner. The saRIA segmentation, root tracing and trait calculation algorithms require, on average, 5 seconds to process and analyze a 6-megapixel (cropped) image (on an Intel(R) Core(TM) i5-4570S CPU @ 2.90 GHz, 64-bit quad-core processor with 4 GB RAM and 500 GB HDD), which is significantly faster than conventional manual segmentation, e.g., with SmartRoot. Table 2 summarizes the essential features of saRIA vs. other well-established root image analysis tools (SmartRoot, EZ-RHIZO, and WinRHIZO). The major difference between saRIA and other available tools (Plant Root: roots grown in cloth substrate in custom rhizoboxes; RootReader2D: needs high-contrast images; RootNav: supports nested root architectures) is that saRIA is capable of segmenting disconnected root architectures of varying contrast (in a semi-automated manner with automated trait extraction) in both potting soil and artificial growing media.

In addition to routine analysis of root images, saRIA can be used for the rapid generation of ground-truth segmentation data that are highly demanded for advanced machine learning/deep learning techniques.

Linking plant genotype to root phenotype requires contributions from many groups and the use of molecular, physiological and imaging techniques. In addition, the performance of phenotype analysis depends on image quality. The segmentation algorithm currently bundled in saRIA is based on the intensity gradient among the pixels. Further extensions of the saRIA segmentation pipeline, including advanced machine learning approaches and additional static and dynamic RSA traits such as topological data (number of primary and lateral roots, branching angles, lateral density), are planned for the future.

References

Lynch, J. Root architecture and plant productivity. Plant physiology 109, 7 (1995).

Casper, B. B. & Jackson, R. B. Plant competition underground. Annu. review ecology systematics 28, 545–570 (1997).

Hodge, A., Robinson, D., Griffiths, B. & Fitter, A. Why plants bother: root proliferation results in increased nitrogen capture from an organic patch when two grasses compete. Plant, Cell & Environ. 22, 811–820 (1999).

Ennos, A. The scaling of root anchorage. J. Theor. Biol. 161, 61–75 (1993).

Niklas, K. J. & Spatz, H.-C. Allometric theory and the mechanical stability of large trees: proof and conjecture. Am. journal botany 93, 824–828 (2006).

Compant, S., Van Der Heijden, M. G. & Sessitsch, A. Climate change effects on beneficial plant–microorganism interactions. FEMS microbiology ecology 73, 197–214 (2010).

Godfray, H. C. J. et al. Food security: the challenge of feeding 9 billion people. science 327, 812–818 (2010).

Malamy, J. Intrinsic and environmental response pathways that regulate root system architecture. Plant, cell & environment 28, 67–77 (2005).

Eissenstat, D. M. On the relationship between specific root length and the rate of root proliferation: a field study using citrus rootstocks. New Phytol. 118, 63–68 (1991).

de Dorlodot, S. et al. Root system architecture: opportunities and constraints for genetic improvement of crops. Trends plant science 12, 474–481 (2007).

Price, A. H. et al. Upland rice grown in soil-filled chambers and exposed to contrasting water-deficit regimes: I. root distribution, water use and plant water status. Field Crop. Res. 76, 11–24 (2002).

Qu, Y. et al. Mapping QTLs of root morphological traits at different growth stages in rice. Genetica 133, 187–200 (2008).

Rich, S. M. & Watt, M. Soil conditions and cereal root system architecture: review and considerations for linking Darwin and Weaver. J. experimental botany 64, 1193–1208 (2013).

Lobet, G., Draye, X. & Périlleux, C. An online database for plant image analysis software tools. Plant methods 9, 38 (2013).

Trachsel, S., Kaeppler, S. M., Brown, K. M. & Lynch, J. P. Shovelomics: high throughput phenotyping of maize (Zea mays L.) root architecture in the field. Plant Soil 341, 75–87 (2011).

Iyer-Pascuzzi, A. S. et al. Imaging and analysis platform for automatic phenotyping and trait ranking of plant root systems. Plant Physiol. 152, 1148–1157, https://doi.org/10.1104/pp.109.150748 (2010).

Heeraman, D. A., Hopmans, J. W. & Clausnitzer, V. Three dimensional imaging of plant roots in situ with X-ray computed tomography. Plant soil 189, 167–179 (1997).

Perret, J., Al-Belushi, M. & Deadman, M. Non-destructive visualization and quantification of roots using computed tomography. Soil Biol. Biochem. 39, 391–399 (2007).

Gregory, P. J. et al. Non-invasive imaging of roots with high resolution X-ray micro-tomography. In Roots: the dynamic interface between plants and the Earth, 351–359 (Springer, 2003).

Tracy, S. R. et al. The X-factor: visualizing undisturbed root architecture in soils using X-ray computed tomography. J. experimental botany 61, 311–313 (2010).

van der Weerd, L. et al. Quantitative NMR microscopy of osmotic stress responses in maize and pearl millet. J. Exp. Bot. 52, 2333–2343 (2001).

Van As, H. Intact plant MRI for the study of cell water relations, membrane permeability, cell-to-cell and long distance water transport. J. Exp. Bot. 58, 743–756 (2006).

Van As, H., Scheenen, T. & Vergeldt, F. J. MRI of intact plants. Photosynth. Res. 102, 213 (2009).

Fang, S., Yan, X. & Liao, H. 3D reconstruction and dynamic modeling of root architecture in situ and its application to crop phosphorus research. Plant J. 60, 1096–1108 (2009).

Zeng, G., Birchfield, S. T. & Wells, C. E. Automatic discrimination of fine roots in minirhizotron images. New Phytol. 177, 549–557 (2008).

Shi, R., Junker, A., Seiler, C. & Altmann, T. Phenotyping roots in darkness: disturbance-free root imaging with near infrared illumination. Funct. Plant Biol. 45, 400–411 (2018).

Armengaud, P. et al. EZ-Rhizo: integrated software for the fast and accurate measurement of root system architecture. Plant J. 57, 945–956 (2009).

Pace, J., Lee, N., Naik, H. S., Ganapathysubramanian, B. & Lübberstedt, T. Analysis of maize (Zea mays L.) seedling roots with the high-throughput image analysis tool ARIA (Automatic Root Image Analysis). PLoS One 9, e108255 (2014).

Leitner, D., Felderer, B., Vontobel, P. & Schnepf, A. Recovering root system traits using image analysis exemplified by two-dimensional neutron radiography images of lupine. Plant Physiol. 164, 24–35 (2014).

Le Bot, J. et al. DART: a software to analyse root system architecture and development from captured images. Plant Soil 326, 261–273 (2010).

Arsenault, J.-L., Pouleur, S., Messier, C. & Guay, R. WinRHIZO: a root-measuring system with a unique overlap correction method. HortScience 30, 906D–906 (1995).

Lobet, G., Pagès, L. & Draye, X. A novel image-analysis toolbox enabling quantitative analysis of root system architecture. Plant Physiol. 157, 29–39 (2011).

Mairhofer, S. et al. On the evaluation of methods for the recovery of plant root systems from X-ray computed tomography images. Funct. Plant Biol. 42, 460, https://doi.org/10.1071/FP14071 (2015).

Flavel, R. J., Guppy, C. N., Rabbi, S. M. R. & Young, I. M. An image processing and analysis tool for identifying and analysing complex plant root systems in 3D soil using non-destructive analysis: Root1. PLoS One 12, 1–18, https://doi.org/10.1371/journal.pone.0176433 (2017).

Wang, T. et al. SegRoot: a high throughput segmentation method for root image analysis. Comput. Electron. Agric. 162, 845–854, https://doi.org/10.1016/j.compag.2019.05.017 (2019).

Smith, A. G., Petersen, J., Selvan, R. & Ruø Rasmussen, C. Segmentation of Roots in Soil with U-Net. arXiv e-prints arXiv:1902.11050 (2019).

Yu, G. et al. Root Identification in Minirhizotron Imagery with Multiple Instance Learning. arXiv e-prints arXiv:1903.03207 (2019).

MathWorks. MATLAB and Statistics Toolbox Release 2018b (2018).

Bradley, D. & Roth, G. Adaptive thresholding using the integral image. J. Graph. Tools 12, 13–21 (2007).

Zou, K. H. et al. Statistical validation of image segmentation quality based on a spatial overlap index. Acad. Radiol. 11, 178–189 (2004).

Pierret, A., Gonkhamdee, S., Jourdan, C. & Maeght, J.-L. IJ_Rhizo: an open-source software to measure scanned images of root samples. Plant Soil 373, 531–539, https://doi.org/10.1007/s11104-013-1795-9 (2013).

Galkovskyi, T. et al. GiA Roots: software for the high throughput analysis of plant root system architecture. BMC Plant Biol. 12, 116 (2012).

Pound, M. P. et al. RootNav: navigating images of complex root architectures. Plant Physiol. 162, 1802–1814, https://doi.org/10.1104/pp.113.221531 (2013).

Acknowledgements

This work was performed within the German Plant-Phenotyping Network (DPPN), which is funded by the German Federal Ministry of Education and Research (BMBF) (project identification number: 031A053).

Author information

Contributions

N.N., M.H. and E.G. conceived, designed and performed the computational experiments, analyzed the data, wrote the paper, prepared figures and tables, and reviewed drafts of the paper. C.S., R.S. and A.J. executed the laboratory experiments, acquired image data, and reviewed drafts of the paper. T.A. co-conceptualized the project and reviewed drafts of the paper.

Ethics declarations

Competing interests

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Narisetti, N., Henke, M., Seiler, C. et al. Semi-automated Root Image Analysis (saRIA). Sci Rep 9, 19674 (2019). https://doi.org/10.1038/s41598-019-55876-3

DOI: https://doi.org/10.1038/s41598-019-55876-3
