Abstract

In this paper, we demonstrate an interactive, finger-sensitive system that enables an observer to intuitively handle electro-holographic images in real time. In this system, a motion sensor detects finger gestures (swiping and pinching) and translates them into rotation and enlargement/reduction of the holographic image, respectively. By parallelising the hologram calculation on a graphics processing unit, we realised interactive handling of the holographic image in real time. In a demonstration of the system, we used a Leap Motion sensor and a phase modulation-type spatial light modulator with 1,920 × 1,080 pixels and a pixel pitch of 8.0 µm × 8.0 µm. The constructed system was able to rotate a holographic image composed of 4,096 point light sources using a swiping gesture and enlarge or reduce it using a pinching gesture in real time. The average calculation time was 27.6 ms per hologram. Finally, we extended the constructed system to a full-colour reconstruction system that generates a more realistic three-dimensional image. The extended system successfully allowed the handling of a full-colour holographic image composed of 1,709 point light sources with a calculation time of 22.6 ms per hologram.

Introduction

Three-dimensional (3D) displays have attracted considerable attention in the digital signage1,2, entertainment3 and medical fields4,5,6. Holography7,8 is a 3D display technique that can display a natural 3D image close to an actual object and does not require scanning and synchronisation processing because it records and reconstructs light waves emitted from the object via light interference and diffraction. The interference between the light from the object and the reference light generates an interference fringe pattern called a hologram. Since the hologram is recorded on a photosensitive material, it is difficult to use classical holography to record and reconstruct motion pictures. Electro-holography9, which reconstructs 3D images using a spatial light modulator (SLM), was proposed to overcome the above shortcoming of classical holography. Thus, electro-holography can reconstruct 3D motion pictures by displaying computer-generated holograms (CGHs) obtained by simulating light propagation and interference on computers at each frame.

Although interactive 3D display systems that enable an observer to intuitively handle 3D images have been developed based on fog screens10, rotating 2D displays11 or multiple 2D displays12, these systems require many projectors, multiple displays or high-speed mechanical processing to reconstruct natural 3D images. These systems also require synchronisation between all devices and the displayed movie. Although many studies on electro-holographic displays13,14,15,16,17,18,19,20,21,22,23,24 have achieved real-time reconstruction using large-scale field-programmable gate arrays, graphics processing units (GPUs) and algorithms that speed up the calculation of CGHs, most of these studies realised only unidirectional systems that allow observation but not handling of the reconstructed images. On the other hand, interactive switching of CGHs with a motion sensor has been reported for optical tweezers25,26, which manipulate nano- and micron-sized particles without contact using a focused laser beam. Optical tweezers with CGHs (holographic optical tweezers) use CGHs to generate multiple focused spots and can realise interactive manipulation of small particles without mechanical scanning by switching the CGHs in real time. However, when using holographic optical tweezers, the operator does not observe holographically reconstructed 3D images in real space but rather observes two-dimensional (2D) images captured by a digital camera and shown on a 2D display during the manipulations. Thus, holographic optical tweezers have not realised the direct handling of holographic images.

Thus, we aimed to construct a bidirectional electro-holographic system that allows real-time interaction with the reconstructed images. Although the light-field display27,28, which can also reconstruct 3D images, has been successfully used for the interactive handling of 3D images, it has a drawback: the resolution of the reconstructed 3D images decreases when the images are reconstructed away from the display plane. This results from the loss of the phase information of the light. Because the light-field technique is based on geometrical optics, it cannot record and reconstruct the phase information of the light, which contributes significantly to the resolution of the reconstructed 3D images29. In contrast, holography can record and reconstruct phase information because it is based on wave optics. The electro-holographic images generated by Yamaguchi’s interactive system30 can be controlled by touch. However, this system cannot generate and reconstruct the electro-holographic images in real time. We have previously demonstrated an interactive electro-holography system in which the 3D image can be operated in real time using a keyboard31. However, a keyboard is a non-intuitive interface for manipulating 3D images. Hence, we have constructed an interactive system in which the observer can intuitively handle the electro-holographic image in real time through hand and finger movements, which are detected by a motion sensor (Fig. 1). This intuitive control mechanism provides a realistic, immersive environment for the observer. This type of intuitive handling of 3D images will likely be required in virtual-reality holographic displays in the future. In this paper, we demonstrate an interactive finger-sensitive system that enables an observer to handle electro-holographic images in real time using intuitive finger gestures.

Results

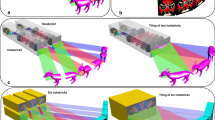

Figure 2a shows a schematic of the constructed system. First, the system detects the gestures of the observer using a motion sensor. Upon detection of a swiping gesture, the system rotates a 3D object composed of a point cloud in a clockwise (leftward swipe) or anticlockwise (rightward swipe) direction. The system performs the rotation by multiplying the coordinates of each point by a rotation matrix. When the system detects a pinching gesture, it enlarges (pinching out) or reduces (pinching in) the 3D object by multiplying the coordinates of each point by a scale coefficient. Subsequently, the system calculates a CGH of the rotated, enlarged or reduced 3D object. Finally, the system displays the CGH on the SLM and reconstructs a holographic 3D image by illuminating the SLM. The processing steps described above can be regarded as one frame of the electro-holographic image; thus, the interactive handling of electro-holographic motion pictures can be realised by repeating these processing steps. Figure 2b shows a schematic of the 3D object, a dinosaur composed of 4,096 point light sources. The 3D object was virtually placed 1 m away from the SLM when calculating the CGHs.
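The gesture-to-transform step can be sketched as follows. This is a minimal illustration and not the authors' implementation: the rotation axis (the vertical y-axis), the step angle, the zoom factor, the sign convention for "clockwise" and the gesture names are all assumptions, and the points are taken to be in object-centred coordinates before the 1 m offset is applied.

```python
import math

def rotate_y(points, angle_rad):
    """Rotate (x, y, z) points about the y-axis with a rotation matrix."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]

def scale(points, k):
    """Multiply every coordinate by the scale coefficient k."""
    return [(k * x, k * y, k * z) for x, y, z in points]

def apply_gesture(points, gesture, step_rad=math.radians(5.0), zoom=1.1):
    """Map a gesture label to a point-cloud transform.

    The labels, step angle and zoom factor are hypothetical; the sign
    chosen for 'clockwise' depends on the viewing direction.
    """
    if gesture == "swipe_left":       # clockwise rotation (assumed sign)
        return rotate_y(points, -step_rad)
    if gesture == "swipe_right":      # anticlockwise rotation
        return rotate_y(points, step_rad)
    if gesture == "pinch_out":        # enlargement
        return scale(points, zoom)
    if gesture == "pinch_in":         # reduction
        return scale(points, 1.0 / zoom)
    return points                     # no recognised gesture: unchanged
```

Applying one transform per frame and then recomputing the CGH reproduces the loop described above; a leftward swipe followed by a rightward swipe returns the object to its starting orientation.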

Figure 3 shows the experimental setup used to demonstrate the full-colour electro-holography reconstruction system. First, we used only a green laser (532 nm) and reconstructed monochromatic holographic images. A phase modulation-type SLM (Holoeye Photonics AG, ‘PLUTO’) with 1,920 × 1,080 pixels and a pixel pitch of 8.0 µm × 8.0 µm was used to display the CGHs. The SLM had 256 phase-modulation levels and a refresh rate of 60 Hz. A Leap Motion (Leap Motion Inc.)32 motion sensor was used to detect finger gestures. This sensor is designed to detect the motion of hands and fingers with high accuracy at more than 100 fps. A central processing unit (CPU; Intel Core i7-7700K at 4.2 GHz) and a GPU (NVIDIA, ‘GeForce GTX1080Ti’) parallelised the processing of point clouds and the calculation of CGHs.

Figure 3 legend: $L_R$, $L_G$, $L_B$: red, green and blue lasers, respectively. $S_R$, $S_G$, $S_B$: SLMs for red, green and blue reconstruction, respectively. M1–M4: mirrors. H1, H2: half mirrors. DB1, DB2, DR1, DR2: dichroic mirrors.

Figure 4 and supplementary movie M1 show the reconstructed images acquired using the constructed system. Figure 4a shows the reconstructed image being rotated by the observer using a swiping gesture. Figure 4b,c show the image being enlarged and reduced by the observer using pinching gestures, respectively. The average calculation time of the system was 27.6 ms per CGH, corresponding to 36.2 fps. Using only the CPU, the average calculation time was 1.39 s; thus, the parallelised system with both the GPU and CPU was 50.4 times faster than the system based on the CPU alone.
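The frame rate and speed-up quoted above follow directly from the measured times. A short check using only the figures given in this section (the function names are illustrative):

```python
def fps_from_ms(ms_per_frame):
    """Frames per second for a given per-frame time in milliseconds."""
    return 1000.0 / ms_per_frame

def speedup(slow_seconds, fast_ms):
    """Ratio of a slow time (in seconds) to a fast time (in milliseconds)."""
    return slow_seconds * 1000.0 / fast_ms

print(round(fps_from_ms(27.6), 1))    # 36.2 (GPU + CPU, frames per second)
print(round(speedup(1.39, 27.6), 1))  # 50.4 (CPU-only time / parallelised time)
```

At 27.6 ms per CGH the calculation takes longer than one refresh period of a 60 Hz SLM (about 16.7 ms), so in this configuration the CGH calculation, rather than the SLM, sets the achievable frame rate.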

As shown above, we successfully demonstrated that an observer can interactively handle the reconstructed image in real time using swiping and pinching gestures. The new interactive finger-sensitive system enables an observer to intuitively handle electro-holographic images in real time using finger gestures.

Discussion

To reconstruct more realistic 3D images close to an actual object, a system capable of full-colour reconstruction with red and blue light in addition to green light is needed. Thus, we extended the single-colour system to a full-colour reconstruction system to allow interaction with more realistic 3D images.

Figure 5a shows a schematic of the full-colour interactive image reconstruction system. The single-colour interactive system described in the Results section detected only the swiping and pinching gestures; it did not use the depth information detected by the motion sensor. We therefore implemented depth detection in the full-colour interactive image reconstruction system. This system detects finger touch gestures using a motion sensor and switches between the display and non-display of the red, green and blue components depending on the position of the finger gesture. Here, we set a virtual plane over the Leap Motion sensor and calibrated the depth position of the virtual plane as z = 0, as shown in Fig. 5b. The motion sensor detects the z-coordinate of the observer’s finger as well as the direction of its movement along the z-axis. The motion sensor regards the finger motion as a touch gesture only when the finger moves from the positive range (z > 0) to the negative range (z < 0). Additionally, the motion sensor detects the x- and y-coordinates on the virtual plane simultaneously. When the touch position is above the full-colour reconstructed image, the system switches the red reconstructed image. When the position is to the left of the image, the system switches the green reconstructed image. Finally, when the touch position is to the right, the system switches the blue reconstructed image. In all cases, only the colour corresponding to the finger touch position changes; the other colours remain the same.
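The touch logic described above can be sketched in a few lines. This is an illustrative sketch, not the authors' code: the class and function names, the image half-extents, the priority given to "above" when a touch is both above and to one side, and the handling of samples that fall exactly on z = 0 are all assumptions.

```python
class TouchDetector:
    """Reports a touch when the fingertip crosses the virtual plane
    from the positive side (z > 0) to the negative side (z < 0)."""

    def __init__(self):
        self.side = 0  # last known side: +1, -1, or 0 if unknown

    def update(self, z):
        if z == 0.0:
            return False  # exactly on the plane: keep the last known side
        side = 1 if z > 0.0 else -1
        touched = self.side == 1 and side == -1
        self.side = side
        return touched

def colour_for_touch(x, y, half_width, half_height):
    """Pick the colour component from the touch position relative to the
    image centre (hypothetical regions; 'above' is checked first)."""
    if y > half_height:
        return "red"
    if x < -half_width:
        return "green"
    if x > half_width:
        return "blue"
    return None  # touch landed on the image itself: nothing to switch

def toggle(visible, colour):
    """Return a copy of the visibility map with one component flipped."""
    updated = dict(visible)
    if colour is not None:
        updated[colour] = not updated[colour]
    return updated
```

For example, starting from `{"red": True, "green": True, "blue": True}`, a touch above the image yields `{"red": False, "green": True, "blue": True}`, leaving the other two components unchanged.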

The experimental setup used for full-colour reconstruction is shown in Fig. 3. In addition to the green laser and one SLM for the green reconstructed image, we used a red laser (650 nm), a blue laser (450 nm) and two additional SLMs to display red and blue reconstructed images. The 3D object used in the demonstration (Fig. 6) consisted of an average of 1,709 point light sources for all frames. The full-colour object was virtually placed 1 m away from the SLMs when calculating the CGHs.

Figure 7 and supplementary movie M2 show an operator switching the colour components of the full-colour image between display and non-display by touching the display. By touching the display to the top, left and right of the full-colour reconstructed image, the operator made the full-colour interactive system switch between display and non-display of the red, green and blue reconstructed images, respectively. The average calculation time of the system was 22.6 ms per CGH; thus, the system displayed the full-colour reconstructed image at more than 40 fps. The successful demonstration indicates that the developed system enables the interactive handling of full-colour reconstructed images using finger touch gestures.

In both the single-colour and full-colour reconstruction systems, it is difficult to reconstruct and interactively handle more realistic, high-definition 3D images (e.g. a 3D image comprising tens of thousands of point light sources) in real time. Hence, the CGH calculation must be accelerated in order to reconstruct 3D images composed of more point light sources. The computational complexity of a CGH is $O(L N_x N_y)$, where $L$ is the number of point light sources, $N_x$ is the number of pixels of the CGH along the horizontal direction and $N_y$ is the number of pixels along the vertical direction.
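To give a sense of scale, the operation count implied by this complexity can be evaluated for the configurations used here. The sketch below counts one point-to-pixel evaluation per (point, pixel) pair; the linear extrapolation to a larger point cloud is an assumption that follows from the stated complexity, not a measurement, and the function names are illustrative.

```python
def point_pixel_ops(num_points, nx, ny, colours=1):
    """Number of point-to-pixel evaluations for one CGH frame."""
    return num_points * nx * ny * colours

def estimated_ms(base_ms, base_points, new_points):
    """Linear extrapolation in the number of points (assumes fixed
    resolution and hardware, and time proportional to L)."""
    return base_ms * new_points / base_points

mono = point_pixel_ops(4096, 1920, 1080)               # 8,493,465,600
colour = point_pixel_ops(1709, 1920, 1080, colours=3)  # 10,631,347,200
ten_fold = estimated_ms(27.6, 4096, 40960)             # about 276 ms
```

Under this simple model, a tenfold increase in the number of points in the monochrome case would take roughly 276 ms per frame, well outside real-time operation, which is why further acceleration is needed.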

One method used to speed up the CGH calculation is to distribute the calculation load over multiple GPUs33,34. For instance, a full-colour interactive handling system with three GPUs that assigns the CGH calculation of each colour to one of the three GPUs will be able to calculate the CGH three times faster than the system demonstrated in this study with only one GPU. Using this method allows high-definition images with large numbers of point light sources to be reconstructed. Another method for speeding up the CGH calculation is to adopt a fast calculation algorithm such as wavelet shrinkage-based superposition (WASABI)35. The speed enhancement achieved by WASABI depends on the selectivity rate S of the representative wavelet coefficients. Even if S is reduced to 1%, WASABI can obtain almost the same reconstructed-image quality as that obtained without WASABI. In this case, WASABI accelerates the CGH calculation by a factor of 100 compared with the method with no fast calculation algorithm.

Methods

CGH calculation

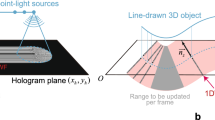

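Figure 8 shows a schematic of CGH calculation, which is expressed as follows:

$$U(x_a, y_a) = \sum_{j=1}^{L} A_j \exp\left\{ i \frac{\pi}{\lambda z_j} \left[ (x_a - x_j)^2 + (y_a - y_j)^2 \right] \right\} \quad (1)$$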

where $(x_a, y_a)$ are the coordinates on the CGH, $(x_j, y_j, z_j)$ are the coordinates of the $j$-th point light source, $L$ is the number of point light sources, $i$ is the imaginary unit, $\lambda$ is the wavelength of light, $A_j$ is the field magnitude emitted from the $j$-th point light source and $U$ is the complex amplitude calculated from the point light sources and the coordinates of the CGH. Because we used a phase modulation-type SLM, we calculated a phase modulation-type CGH pattern, $\phi(x_a, y_a)$, using the following equation:

$$\phi(x_a, y_a) = \arg\{U(x_a, y_a)\} \quad (2)$$

where arg(U) is an operator indicating the argument of a complex number.
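A direct, unoptimised evaluation of the two steps above (sum the point-source contributions, then take the argument) might look like the sketch below. It assumes the Fresnel-approximation form of the point-source sum, with phase term π[(x_a − x_j)² + (y_a − y_j)²]/(λ z_j), a hologram centred on the optical axis, and a linear mapping of phase to the SLM's 256 levels; these choices and all function names are assumptions made for illustration.

```python
import cmath
import math

def cgh_phase(points, nx, ny, pitch, wavelength):
    """Phase-only CGH for a list of (x, y, z, amplitude) points in metres.

    Returns ny rows of nx phases in (-pi, pi]. Assumes the Fresnel
    approximation for each point source (an assumption of this sketch).
    """
    phases = []
    for row in range(ny):
        ya = (row - ny / 2) * pitch
        line = []
        for col in range(nx):
            xa = (col - nx / 2) * pitch
            u = 0j  # complex amplitude U(x_a, y_a)
            for xj, yj, zj, aj in points:
                theta = math.pi / (wavelength * zj) * (
                    (xa - xj) ** 2 + (ya - yj) ** 2)
                u += aj * cmath.exp(1j * theta)
            line.append(cmath.phase(u))  # phi = arg(U)
        phases.append(line)
    return phases

def quantise(phi, levels=256):
    """Map a phase to an integer level over 0..2*pi (assumed linear)."""
    return int((phi % (2 * math.pi)) / (2 * math.pi) * levels) % levels
```

For instance, `cgh_phase([(0.0, 0.0, 1.0, 1.0)], 4, 4, 8.0e-6, 532e-9)` evaluates a 4 × 4 hologram of a single on-axis point 1 m from the SLM; the triple loop makes the $O(L N_x N_y)$ cost explicit.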

For a full-colour CGH, the wavelength in Eq. (1) is set to the red, green or blue wavelength and the CGH of each colour is calculated. Thus, the calculation time for the full-colour CGH is three times greater than that of the monochrome one. When constructing a full-colour optical system, red, green and blue CGHs can be displayed on one SLM36,37,38,39 or on three different SLMs40. For the one-SLM system, there are several methods based on time-division multiplexing36, space-division multiplexing37, superposition of the red, green and blue images on a diffuser plate38 and the use of SLMs with a high dynamic phase modulation range39. Time-division multiplexing requires a high-speed SLM, such as a digital micro-mirror device, to obtain full-colour images without the flicker caused by switching the reference light between colours. Space-division multiplexing decreases the resolution of each colour hologram because this method displays each colour hologram on a different region of the SLM plane. The superposition method has been proposed for holographic projectors41,42. This method is not suitable for the reconstruction of 3D images because it projects holographic images using a 2D diffuser plate. The last method requires a special phase-only SLM with a high dynamic phase modulation range (i.e. 0–10π). Because the SLM used herein has a phase modulation range of 0–2π and a refresh rate of 60 Hz, we adopted the method using three different SLMs.

To realise interactive handling without discomfort, the system requires real-time operation, which is difficult to achieve using only a CPU. Hence, we parallelised the processing of point clouds and the calculation of CGH by combining a CPU with a GPU.

Parallelisation of the CGH calculation

Since Eqs (1) and (2) are solved independently for each pixel, the system assigns one pixel calculation to one thread using the GPU. After the CGH calculation is complete for all pixels, the system sends the CGH result to the CPU. To calculate the full-colour CGH, the system solves Eqs (1) and (2) in the order of blue, green and red wavelengths within each thread. Finally, the system sends the full-colour CGH to the CPU.
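The one-pixel-per-thread structure can be mimicked on a CPU to show why the calculation parallelises so easily. The sketch below is only a structural analogue of the GPU scheme, not the authors' GPU code: it uses a Python thread pool (which will not give a GPU-like speed-up), assumes the Fresnel-approximation form of the point-source sum, uses the same point list for all three colours, and introduces hypothetical names throughout. The wavelengths and the blue, green, red order are taken from the text.

```python
import cmath
import math
from concurrent.futures import ThreadPoolExecutor

# Wavelengths in metres, processed in the order stated in the text.
WAVELENGTHS = (("blue", 450e-9), ("green", 532e-9), ("red", 650e-9))

def pixel_kernel(index, nx, ny, pitch, points):
    """Work of one 'thread': the blue, green and red phases of one pixel.

    Each call reads only the shared point list and writes only its own
    result, so no synchronisation between pixels is needed.
    """
    row, col = divmod(index, nx)
    xa = (col - nx / 2) * pitch
    ya = (row - ny / 2) * pitch
    out = []
    for _, lam in WAVELENGTHS:
        u = 0j
        for xj, yj, zj, aj in points:
            theta = math.pi / (lam * zj) * ((xa - xj) ** 2 + (ya - yj) ** 2)
            u += aj * cmath.exp(1j * theta)
        out.append(cmath.phase(u))
    return tuple(out)

def full_colour_cgh(nx, ny, pitch, points, workers=4):
    """Evaluate every pixel independently and gather the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(
            lambda i: pixel_kernel(i, nx, ny, pitch, points),
            range(nx * ny)))
```

Because each pixel depends only on the point list, the parallel result is identical to evaluating `pixel_kernel` in a plain loop; on a GPU the same independence lets each pixel map to its own thread.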

References

Yamamoto, H., Tomiyama, Y. & Suyama, S. Floating aerial LED signage based on aerial imaging by retro-reflection (AIRR). Opt. Express 22, 26919–26924 (2014).

Hirayama, R. et al. Design, Implementation and Characterization of a Quantum-Dot-Based Volumetric Display. Sci. Rep. 5, 8472 (2015).

Maimone, A., Georgiou, A. & Kollin, J. S. Holographic Near-Eye Displays for Virtual and Augmented Reality. ACM Trans. Graph. 36, No. 4, Article 85 (2017).

Narita, Y. et al. Usefulness of a glass-free medical three-dimensional autostereoscopic display in neurosurgery. Int. J. Comput. Assist Radiol Surg 9, 905–911 (2015).

Zhao, D., Ma, L., Ma, C., Tang, J. & Liao, H. Floating autostereoscopic 3D display with multidimensional images for telesurgical visualization. Int. J. Comput. Assist Radiol Surg 11, 207–215 (2016).

Fan, Z., Weng, Y., Chen, G. & Liao, H. 3D interactive surgical visualization system using mobile spatial information acquisition and autostereoscopic display. J. Biomed. Inform. 71, 154–164 (2017).

Gabor, D. A new microscopic principle. Nature 161, 777–778 (1948).

Dallas, W. J. In digital holography and three-dimensional display: Principles and Applications (ed. Poon, T. C.) 1–49 (Springer, 2006).

Hilaire, P. S. et al. Electronic display system for computational holography. Proc. SPIE 1212, 174–182 (1990).

Tokuda, Y., Norasikin, M. A., Subramanian, S. & Plasencia, D. M. MistForm: Adaptive shape changing fog screens. Proc. CHI’ 17, 4383–4395 (2017).

Grossman, T., Wigdor, D. & Balakrishnan, R. Multi-finger gestural interaction with 3D volumetric displays. Proc. UIST’ 04, 61–70 (2004).

Stavness, I., Lam, B. & Fels, S. pCubee: A perspective-corrected handheld cubic display. Proc. CHI’ 10, 1381–1390 (2010).

Ichihashi, Y. et al. HORN-6 special-purpose clustered computing system for electroholography. Opt. Express 17, 13895–13903 (2009).

Pan, Y. et al. Fast CGH computation using S-LUT on GPU. Opt. Express 17, 18543–18555 (2009).

Shimobaba, T., Ito, T., Masuda, N., Ichihashi, Y. & Takada, N. Fast calculation of computer-generated-hologram on AMD HD5000 series GPU and OpenCL. Opt. Express 18, 9955–9960 (2010).

Son, J.-Y., Lee, B.-R., Chernyshov, O. O., Moon, K.-A. & Lee, H. Holographic display based on a spatial DMD array. Opt. Lett. 38, 3173–3176 (2013).

Xue, G. et al. Multiplexing encoding method for full-color dynamic 3D holographic display. Opt. Express 22, 18473–18482 (2014).

Tsang, P. W. M., Chow, Y.-T. & Poon, T.-C. Generation of phase-only Fresnel hologram based on down-sampling. Opt. Express 22, 25208–25214 (2014).

Niwase, H. et al. Real-time spatiotemporal division multiplexing electroholography with a single graphics processing unit utilizing movie features. Opt. Express 22, 28052–28057 (2014).

Im, D. et al. Phase-regularized polygon computer-generated holograms. Opt. Lett. 39, 3642–3645 (2014).

Kakue, T. et al. Aerial projection of three-dimensional motion pictures by electro-holography and parabolic mirrors. Sci. Rep. 5, 11750 (2015).

Igarashi, S., Nakamura, T. & Yamaguchi, M. Fast method of calculating a photorealistic hologram based on orthographic ray-wavefront conversion. Opt. Lett. 41, 1396–1399 (2016).

Lim, Y. et al. 360-degree tabletop electronic holographic display. Opt. Express 24, 24999–25009 (2016).

Li, X., Liu, J. & Wang, Y. High resolution real-time projection display using a half-overlap-pixel method. Opt. Commun. 296, 156–159 (2017).

Tomori, Z. et al. Holographic raman tweezers controlled by hand gestures and voice commands. Opt. Photon. J. 3, 331–336 (2013).

Shaw, L., Preece, D. & Rubinsztein-Dunlop, H. Kinect the dots: 3D control of optical tweezers. J. Opt. 15, 075703 (2013).

Adhikarla, V. K., Sodnik, J., Szolgay, P. & Jakus, G. Exploring direct 3D interaction for full horizontal parallax light field displays using leap motion controller. Sensors 15, 8642–8663 (2013).

Adhikarla, V. K. et al. Freehand interaction with large-scale 3D map data. Proc. IEEE True Vis.- Capture Transmiss. Display 3D Video, 1–4 (2014).

Yamaguchi, M. Light-field and holographic three-dimensional displays [Invited]. J. Opt. Soc. Am. A 33, 2348–2364 (2016).

Yamaguchi, M. Full-parallax holographic light-field 3-D displays and interactive 3-D touch. Proceedings of the IEEE. 105, 947–959 (2017).

Shimobaba, T., Shiraki, A., Ichihashi, Y., Masuda, N. & Ito, T. Interactive color electroholography using the FPGA technology and time division switching method. IEICE Electron. Express 5, 271–277 (2008).

Leap Motion Inc. Leap Motion, https://www.leapmotion.com/ Accessed at: 28/10/2017.

Nakayama, H. et al. Real-time colour electroholography using multiple graphics processing units and multiple high-definition liquid-crystal display panels. Appl. Opt. 49, 5993–5996 (2010).

Ichihashi, Y., Oi, R., Senoh, T., Yamamoto, K. & Kurita, T. Real-time capture and reconstruction system with multiple GPUs for a 3D live scene by a generation from 4K IP images to 8K holograms. Opt. Express 20, 21645–21655 (2012).

Shimobaba, T. & Ito, T. Fast generation of computer-generated holograms using wavelet shrinkage. Opt. Express 25, 77–86 (2017).

Oikawa, M. et al. Time-division colour electroholography using one-chip RGB LED and synchronizing controller. Opt. Express 19, 12008–12013 (2011).

Makowski, M. et al. Simple holographic projection in color. Opt. Express 20, 25130–25136 (2012).

Makowski, M. et al. Experimental evaluation of a full-color compact lensless holographic display. Opt. Express 17, 20840–20846 (2009).

Jesacher, A., Bernet, S. & Ritsch-Marte, M. Colour hologram projection with an SLM by exploiting its full phase modulation range. Opt. Express 22, 20530–20541 (2014).

Sasaki, H., Yamamoto, K., Wakunami, K., Ichihashi, Y. & Senoh, T. Large size three-dimensional video by electronic holography using multiple spatial light modulators. Sci. Rep. 4, 6177 (2014).

Buckley, E. Holographic laser projection. J. Display Technol. 7, 135–140 (2010).

Shimobaba, T. et al. Lensless zoomable holographic projection using scaled Fresnel diffraction. Opt. Express 21, 25285–25290 (2013).

Acknowledgements

This work is partially supported by JSPS Grant-in-Aid No. 25240015 and the Institute for Global Prominent Research, Chiba University.

Author information

Contributions

T.K., T.S. and T.I. directed the project; S.Y. implemented the CGH calculation and finger-sensitive processing on the computer; S.Y. measured and evaluated CGH calculation time; T.K. designed and constructed the optical setup; and all authors contributed to discussions.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yamada, S., Kakue, T., Shimobaba, T. et al. Interactive Holographic Display Based on Finger Gestures. Sci Rep 8, 2010 (2018). https://doi.org/10.1038/s41598-018-20454-6

DOI: https://doi.org/10.1038/s41598-018-20454-6
