Abstract

In response to the COVID-19 pandemic, the Psychological Science Accelerator coordinated three large-scale psychological studies to examine the effects of loss-gain framing, cognitive reappraisal, and autonomy framing manipulations on behavioral intentions and affective measures. The data, collected from April to October 2020, include measures specific to each experimental study, a general questionnaire on health prevention behaviors and COVID-19 experiences, a characterization of the geographical and cultural context, and demographic information for each participant. Each participant began with the same general questions and was then randomized to complete either one longer experiment or two shorter experiments. Data were provided by 73,223 participants with varying completion rates. Participants completed the survey from 111 geopolitical regions in 44 unique languages/dialects. The anonymized dataset described here is provided in both raw and processed formats to facilitate re-use and further analyses. The dataset offers secondary analytic opportunities to explore coping, framing, and self-determination in a diverse, global sample obtained at the onset of the COVID-19 pandemic, and it can be merged with other time-sampled or geographic data.

| Measurement(s) | COVID-19 Protocols, Restrictions • Health Behaviors • Personality and Behavioral Change, CTCAE • Emotion • Message Framing • Self-Determination |
|---|---|
| Technology Type(s) | Survey • Experiment Design Type |
| Factor Type(s) | COVID-19 Protocols, Restrictions • Health Behaviors • Loss-Gain Framing • Cognitive Reappraisal • Self-Determination Messaging |
| Sample Characteristic - Organism | Homo |
| Sample Characteristic - Environment | Daily Life |
| Sample Characteristic - Location | North America • South America • Africa • Australia • Europe • United Kingdom • Asia |


Background & Summary

In 2020, the rise of the COVID-19 pandemic presented enormous challenges to people’s health and well-being. In response, in March 2020, the Psychological Science Accelerator (PSA)1 announced a call for applied studies on how to reduce the negative emotional and behavioral impacts of the pandemic: the PSA COVID-Rapid (PSACR) Project. The PSA is a global collaborative network of over 1,000 members across 80+ countries/geopolitical locations who develop large, “big-team science” projects. Three research studies were selected and paired with a general survey about health behaviors, COVID-19 experiences, and demographic information (https://osf.io/x976j). The dataset described here represents three studies on the psychology of message communication: Study 1, how gain-versus-loss framing affects health communication messages2; Study 2, cognitive reappraisal3; and Study 3, how self-determination theory can inform health messaging for social distancing uptake4. Participants completed either both Loss-Gain Framing (Study 1) and Self-Determination (Study 3) (order counterbalanced), only Self-Determination (Study 3), or only Cognitive Reappraisal (Study 2) (see Figs. 1 and 2).

Survey flow for the PSACR project. As shown in Fig. 2, participants were given one path through the study determined by the date they completed the study and randomization factors.

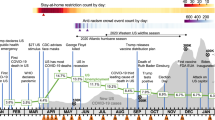

Timeline for the PSACR project in 2020. In April, only the English version of the study was available for participants. In May, Hungarian, Dutch, Polish, and Portuguese were added to the study. In June, French, Macedonian, Swedish, Spanish, Farsi, Norwegian, Russian, Turkish, Bulgarian, Urdu, Czech, Greek, Italian, Japanese, Slovak, Arabic, Hebrew, Filipino, and Korean were launched. In July, Croatian, German, Yoruba, Armenian, Chinese, Serbian, Finnish, Romanian, Uzbek, Bengali, Slovenian, and Hebrew were included. Languages were generally launched together with their dialect variants (e.g., Dutch and Dutch-Belgian).

Loss-gain framing (Study 1)

In the first study, participants read health messages that framed recommended behaviors as gains or losses, and we subsequently measured participants’ intentions to follow guidelines to prevent COVID-19 transmission, attitudes towards COVID-19 prevention policies, propensity to seek additional information about COVID-19, and anxiety (using both self-report and behavioral measures)2. This study was designed to examine how gain-versus-loss message framing influences compliance with pandemic recommendations. The gain-framed messages highlighted the benefits of complying with the recommendations (e.g., there is so much to gain. If you practice these four steps, you can protect yourself and others), whereas the loss-framed messages spotlighted the negative consequences of ignoring them (e.g., there is so much to lose. If you do not practice these four steps, you can endanger yourself and others)5. Understanding how message framing affects emotion and behavior is critical for public health communication6, and previous studies suggest that loss framing elicits negative emotion7. This study extended previous research by measuring the impact of framing on anxiety.

Summary of study findings

Loss-framed messages (compared with gain-framed messages) increased participants’ self-reported anxiety. In contrast, gain and loss framing did not differ meaningfully in their effects on (1) behavioral intentions to follow COVID-19 prevention guidelines, (2) attitudes toward COVID-19 prevention policies, or (3) whether participants chose to seek more information about COVID-19. Crucially, most of these results were relatively consistent across 84 countries.

Cognitive reappraisal (Study 2)

The second study focused on emotion regulation through cognitive reappraisal3. The COVID-19 pandemic has been shown to decrease positive emotions and psychological health and to increase negative emotions8,9,10. Emotion regulation strategies could potentially mitigate these emotional changes. Cognitive reappraisal is one such strategy: it involves changing the way one thinks about a situation in order to change the way one feels11. This study specifically used reconstrual and repurposing as reappraisal methods. Previous research has shown that cognitive reappraisal can increase resilience in a simple and effective manner12, especially in comparison with other emotion regulation strategies such as emotion suppression13. Study 2 examined the effectiveness of reappraisal strategies in reducing negative emotions and increasing positive emotions in response to COVID-19 situations. Moreover, we explored whether cognitive reappraisal influences health behavioral intentions.

Summary of study findings

The two variations of reappraisal strategies tested in this study (reconstrual and repurposing) both reduced negative emotions and increased positive emotions related to COVID-19 situations compared to control conditions. Neither strategy affected health behavior intentions related to stay-at-home behaviors and hand washing behaviors. The two strategies had similar effects to one another.

Self-determination (Study 3)

The third study examined motivation and intentions to engage in social distancing, using self-determination theory as a framework to design messages that either supported individual autonomy or pressured individuals through controlling language14,15. Autonomy-supportive communication styles involve perspective-taking, providing a rationale for changing behavior, and engaging an individual’s autonomy of choice in a scenario16. Controlling communication styles use shame and blame to induce a change in behavior17. In general, autonomy-supportive messages have been shown to increase behavior change18, whereas controlling messages often lead to behaviors opposite to those intended19,20. Study 3 examined the effects of autonomy-supportive versus controlling messages about social distancing on the quality of motivation, feelings of defiance, and behavioral intentions to engage in social distancing.

Summary of study findings

Relative to the control group, who received no message, the controlling message increased controlled motivation for social distancing, a relatively ineffective form of motivation based on avoiding shame and social consequences. The autonomy-supportive message, on the other hand, decreased feelings of defiance compared with the controlling message. Unexpectedly, neither the autonomy-supportive nor the controlling message influenced behavioral intentions. Existing motivations did, however: autonomous motivation predicted greater long-term intentions to social distance, and controlled motivation predicted weaker long-term intentions.

Here, we present the aggregated data from all studies and from a health behavior survey assessing demographics, COVID-19 experience, and local restrictions21. These data can be merged with other sources using time and/or location information, for example to track responses alongside COVID-19 rates (https://data.humdata.org/dataset/oxford-covid-19-government-response-tracker), vaccinations22, hospitalizations (https://ourworldindata.org/coronavirus-data-explorer), or other psychological variables (e.g., anxiety, depression; https://www.nih.gov/news-events/news-releases/all-us-research-program-launches-covid-19-research-initiatives). The dataset offers many untapped opportunities to study human emotion, motivation, persuasion, and other topics through secondary analyses (e.g., regional moderator analyses).
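A minimal sketch of such a merge, in Python, is shown below: it left-joins participant rows to an external country-by-day table on a shared (region code, date) key. The key names `country_code` and `date` and the column `indicator` are hypothetical placeholders, not the actual headers of participants.csv or of any external source, and a real merge would first require harmonizing region codes and date formats across files.

```python
from datetime import date


def merge_by_region_and_day(participants, daily_indicators):
    """Left-join participant records to external country-by-day data.

    All key names ('country_code', 'date') are hypothetical placeholders.
    Participants without a matching external row are kept unchanged.
    """
    # Index the external table by (region, day) for constant-time lookup.
    lookup = {(row["country_code"], row["date"]): row for row in daily_indicators}
    merged = []
    for person in participants:
        match = lookup.get((person["country_code"], person["date"]), {})
        # Copy only the indicator columns, not the join keys themselves.
        extra = {k: v for k, v in match.items() if k not in ("country_code", "date")}
        merged.append({**person, **extra})
    return merged


# Toy records; the indicator value is illustrative, not real tracker data.
people = [
    {"id": "p1", "country_code": "USA", "date": date(2020, 5, 4)},
    {"id": "p2", "country_code": "NLD", "date": date(2020, 5, 4)},
]
external = [{"country_code": "USA", "date": date(2020, 5, 4), "indicator": 50.0}]
merged = merge_by_region_and_day(people, external)
print(merged)
```

A left join keeps every participant, so coverage gaps in the external source show up as missing columns rather than silently dropped respondents.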

Methods

Survey flow

The PSACR project consisted of a general survey (i.e., health behaviors, COVID-19 information, and demographics) and the three studies described above (see the survey flow in Fig. 1). Data were collected online, and participants could complete the study from any internet-enabled device. First, participants were shown a landing page where they selected their language or a regional variant of a language, with 44 languages available as options. Participants then read an informed consent form describing the general objectives of the study and indicated whether they consented to participate (yes/no choice). The entire study was approved by the Institutional Review Board at Ashland University as the main research hub, and local approval was obtained by other research labs as required by their institutions.

The participants were then shown a general survey covering health behaviors, COVID-19 experience, and demographics, designed by the PSACR admin team (described below). Participants were then randomly assigned to complete either (a) Cognitive Reappraisal (Study 2) or (b) both the Loss-Gain Framing (Study 1) and Self-Determination (Study 3) studies in random order. However, a small portion of participants received only Study 3 (Self-Determination), as this study was deployed before the other two studies were ready (see Fig. 2 for a timeline of the project). Because Study 2 took longer to complete than Studies 1 and 3, creating two survey flows (Studies 1 and 3 versus Study 2) kept completion time for the entire survey at approximately 20–30 minutes. Data collection for Loss-Gain Framing (Study 1) and Self-Determination (Study 3) ended before that for Cognitive Reappraisal (Study 2), which continued until it reached its a priori determined sample size. The onsets and offsets of the different PSACR studies are shown in Fig. 2. After completing the studies, participants were shown a debriefing that included information about the pandemic and World Health Organization (WHO) recommendations. All translations and materials can be found at https://osf.io/gvw56/ and at https://osf.io/s4hj2/.
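The assignment logic described above can be sketched in Python as follows. This is not the formr configuration used by PSACR (that lives in psacr-pool.json); the phase labels are hypothetical, and the equal probability of the two flows is an assumption, because the text does not state the allocation ratio.

```python
import random


def assign_flow(phase, rng):
    """Return the ordered studies shown after the general survey.

    phase is a hypothetical label for the deployment period:
      'study3_only' -> before Studies 1 and 2 were ready
      'all'         -> both flows open
      'study2_only' -> after Studies 1 and 3 had closed
    The 50/50 split between flows is an assumption.
    """
    if phase == "study3_only":
        return ["study3"]
    if phase == "study2_only":
        return ["study2"]
    if rng.random() < 0.5:
        return ["study2"]  # longer Cognitive Reappraisal study on its own
    pair = ["study1", "study3"]
    rng.shuffle(pair)  # Loss-Gain Framing and Self-Determination in random order
    return pair


rng = random.Random(2020)
flows = [assign_flow("all", rng) for _ in range(1000)]
print(sum(f == ["study2"] for f in flows), "of 1000 assigned to Study 2 alone")
```

Seeding the generator makes a simulated allocation reproducible, which is useful when checking how a design like this behaves before deployment.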

Translation

The translation team consisted of one lead coordinator, two assistant coordinators, and, for each language, a language-specific coordinator and translators. The lead coordinator, along with the assistant coordinators, oversaw all languages, organizing and relaying information between the study teams, translation teams, and implementation teams. Each language coordinator worked with the individual translators for their target language, and approximately 268 individuals helped produce the translations for this study. The translation process included three stages: forward translation, backward translation, and cultural adjustment. During forward translation, at least two translators worked together to translate each study from English into the target language. Two separate translators then reversed this process, back-translating from the target language into English. Inconsistencies identified across these two stages were resolved through discussion among team members. Last, the translated materials were sent to separate individuals for cultural adjustment, which involved tweaking the wording so that it would be understood within that culture. For example, several of the educational levels were modified to match the education system in the area where the target language is generally spoken (see https://osf.io/ca3ks for a review of the translation process).

Study deployment

The study was implemented online using the formr survey software23, which enabled complex randomization and study tracking throughout the project. The study can be reproduced using the Excel files for each language and the overall survey flow file psacr-pool.json (https://osf.io/643aw/), both provided in the formr folder of the Open Science Framework (OSF) repository. formr allows researchers to import a survey flow as a .json file, and the exact questions and study design can be imported from Excel or Google Sheets files. Within each language folder, we have provided the consent form, the general survey, the Loss-Gain Framing survey (Study 1), the Cognitive Reappraisal survey (Study 2), the Self-Determination survey (Study 3), and the final WHO and debriefing information. Because every language setup uses the same survey flow, each can be recreated using the provided .json survey flow and the relevant Excel files.

General survey

The general survey was designed to gather information about participants’ experiences with COVID-19 protocols and restrictions in their lives, as well as demographic information. Participants first reported their health behaviors in response to the pandemic by indicating how many times they had left their homes in the past week, their reasons for leaving home, and their mask usage, hand washing, and coughing/sneezing behavior. The next section covered COVID-19 restrictions and government responses, including current restrictions, ability to manage restrictions, and trust in and satisfaction with government activities. Participants then indicated whether they had been tested for COVID-19 and whether they were self-isolating because of symptoms, as well as their confidence in understanding and preventing COVID-19 and their worry about their physical and emotional well-being. The last section of the general survey covered participant demographics (age, gender, education, geopolitical region, nationality, state of residence for U.S. participants, and type of community), questions about how the participant was recruited into the survey, and questions about their household (number of members, socioeconomic status, and health conditions).

Loss-gain framing (Study 1)

In this study, each participant was first presented with an overall description of the task, which was to express their opinions on various recommendations for mitigating COVID-19. Participants were randomized into one of two framing conditions describing the steps one can take to meet COVID-19 guidelines. The messages were framed from either a gain perspective (“You have so much to gain by practicing these steps”) or a loss perspective (“You have so much to lose by not following these steps”). Within each framing condition, the message was written in three different versions, and participants were randomly assigned to view one of them. Participants then rated their likelihood of complying with these recommendations while the instructions remained on the page. Next, they rated their feelings about government health policies, rated their emotions (i.e., anger, anxiety, fear) when considering these policies, and indicated whether they wanted to learn more about WHO guidelines. Finally, participants completed a manipulation check about the information they had read to ensure they had paid attention during the study.

Cognitive reappraisal (Study 2)

This study examined whether a cognitive reappraisal strategy would change emotional responses to photos related to COVID-19. First, participants rated the emotions they were feeling “right now.” For positive emotions, they indicated how hopeful, loved, peaceful, understood, and cared for they felt. For negative emotions, they indicated how annoyed, sad, angry, stressed, and left out they felt, and how much hate they felt. Positive and negative emotion questions were interspersed, and each participant began with either a positive question (i.e., positive, negative, positive, negative, etc.) or a negative one, determined at random. The order of the questions was also randomized across participants.
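One way to produce the interleaved, randomized order described above is sketched below in Python. The actual order was generated by R code embedded in the formr labels; shuffling within each valence and appending leftover items at the end are assumptions made for this illustration.

```python
import random

# Item wording follows the text above; the labels themselves are shorthand.
POSITIVE = ["hopeful", "loved", "peaceful", "understood", "cared for"]
NEGATIVE = ["annoyed", "sad", "angry", "stressed", "left out", "hate"]


def interleaved_order(rng):
    """Alternate positive and negative items, starting with a random valence.

    Items are shuffled within each valence (an assumption about how the order
    was randomized). With 5 positive and 6 negative items, a positive-first
    sequence ends with two negative items in a row.
    """
    pos, neg = POSITIVE[:], NEGATIVE[:]
    rng.shuffle(pos)
    rng.shuffle(neg)
    first, second = (pos, neg) if rng.random() < 0.5 else (neg, pos)
    order = []
    for i in range(max(len(first), len(second))):
        if i < len(first):
            order.append(first[i])
        if i < len(second):
            order.append(second[i])
    return order


order = interleaved_order(random.Random(7))
print(order)
```

Storing each participant's generated order, as the processed Cognitive Reappraisal file does, avoids having to re-run the randomization later.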

At this point in the study, participants were randomized into one of four conditions: (1) reflecting: reflecting upon your thoughts and feelings about any situation can lead to different emotional responses; (2) rethinking: different ways of interpreting or thinking about any situation can lead to different emotions; (3) refocusing: finding something good in even the most challenging situations can lead to different emotional responses; and (4) a control condition that suggested participants respond as they naturally would. Participants were given instructions on how to implement these appraisals. Next, participants completed four practice trials; on each trial, they viewed a picture, rated their current affect/mood, and wrote a few sentences about how they had implemented the appraisal with respect to the picture.

After completing the practice trials, participants rated ten COVID-19-related pictures (e.g., a person on a stretcher being taken out of an ambulance, grieving/worried relatives) by answering questions about how positive and how negative they felt while viewing them. The pictures were selected by the Study 2 lead team from major news sources across Asia, Europe, and North America. The lead team rated candidate pictures on the sadness/anxiety they evoked and on whether the pictures should be used; the final set consisted of pictures that received higher-than-average scores on both ratings (1–7 Likert scale). This section was followed by the emotion questions from the beginning of the study, presented in the same randomized order as before. These questions were then reworded to assess anticipated emotions (e.g., to what extent will you feel sad). Participants next answered questions about their positive habits, such as exercising, and negative habits, such as drinking and smoking. Last, participants were given a series of attention check questions to identify inattentive responding, along with a final question about their current emotional state relative to their emotional state at the beginning of the study.
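The picture-selection rule can be sketched as a simple filter. The text does not say what "average" refers to; this Python sketch assumes it means the mean across candidate pictures for each rating (comparing against the scale midpoint of 4 would be an equally plausible reading), and the picture IDs and ratings are toy values.

```python
from statistics import mean


def select_pictures(ratings):
    """Keep pictures rated above the pool mean on both criteria.

    ratings maps a picture id to (sadness_anxiety, should_use), each on a 1-7 scale.
    'Average' is read here as the mean across candidate pictures (an assumption).
    """
    distress_cut = mean(r[0] for r in ratings.values())
    use_cut = mean(r[1] for r in ratings.values())
    return sorted(
        pid for pid, (distress, use) in ratings.items()
        if distress > distress_cut and use > use_cut
    )


toy = {"a": (6.0, 5.5), "b": (3.0, 6.0), "c": (5.5, 2.0), "d": (5.0, 5.0)}
print(select_pictures(toy))  # ['a', 'd']
```

Requiring both criteria means a very distressing picture that the team judged unsuitable (like "c" here) is still excluded.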

Self-determination (Study 3)

This study examined messaging about the COVID-19 recommendations for social distancing across three randomly assigned conditions: (1) an autonomy-supportive message promoting personal agency and reflective choices, (2) a controlling message that was forceful and shaming, and (3) no message about social distancing. Before receiving these messages, each participant rated their current adherence to social distancing. After the messages, participants rated their motivations to engage in social distancing and how the messaging about social distancing made them feel. They then re-rated the original social distancing items for the next week (e.g., how often will you see friends in the next week) and the next six months. At the end of the study, participants completed manipulation check items assessing whether the messages were experienced as supportive or pressuring.

Data processing

The complete data processing scripts from the study, along with annotations, can be found at https://osf.io/gvw56/. During data collection, we tracked several indicators, such as current participant counts, timing, and other important factors (e.g., the number of people in each language and group). The code for this tracking is available at https://osf.io/uzqdr/, but those summary data were collected only for monitoring purposes during the study. The following processing steps were applied to the raw data, and the final output can be found in the raw_data folder of the OSF repository. Because of their large size and file storage limits, the raw data are stored separately by month in compressed zip files. The processing steps included:

1) Eliminate duplicate rows. We collected data across multiple servers, and sometimes, the server posted the same participant data twice.

2) Creation of unique identifiers for each participant. One language of the study (Swedish) briefly did not include the appropriate code to enable creating unique identifiers. Therefore, these data were matched using other information embedded in the surveys and the unique identifier was filled in for these participants.

3) Removal of pilot data. We removed pilot testing responses from the dataset. These responses were identified by the start dates and times for each language separately.

4) Participant completion codes (e.g., unique subject identifiers created for reporting completion at the end of the study) were extracted from the raw data for researchers to check if their participants had completed the study.

5) Participant personal information (i.e., information that could be used to identify the participant, information for creating completion codes, emails or phone numbers for lottery participants) was excluded before uploading data into the raw_data folder.
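Steps 1 and 3 above can be illustrated with a short Python sketch. The PSACR scripts on OSF are the authoritative implementation; here the column names `session`, `language`, and `created` and the launch timestamp are hypothetical stand-ins, chosen only to show deduplication of exact repeats followed by a per-language start-time cutoff.

```python
import csv
import io
from datetime import datetime


def clean_raw_rows(rows, launch_times):
    """Drop exact duplicate rows, then rows recorded before a language's launch.

    rows: dicts such as those produced by csv.DictReader. The 'language' and
    'created' column names are assumptions for illustration.
    launch_times: hypothetical mapping of language -> datetime of real launch.
    """
    seen = set()
    kept = []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key in seen:
            continue  # same record posted twice by a server
        seen.add(key)
        created = datetime.fromisoformat(row["created"])
        if created < launch_times.get(row["language"], datetime.min):
            continue  # pilot response logged before the language went live
        kept.append(row)
    return kept


raw = io.StringIO(
    "session,language,created\n"
    "A1,nl,2020-05-02 10:00:00\n"
    "A1,nl,2020-05-02 10:00:00\n"
    "P9,nl,2020-04-28 09:00:00\n"
)
cleaned = clean_raw_rows(list(csv.DictReader(raw)), {"nl": datetime(2020, 5, 1)})
print([row["session"] for row in cleaned])  # ['A1']
```

Deduplicating on the full row only removes true server double-posts; two distinct submissions from the same participant would both survive and need a separate rule.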

Next, we transformed the raw data set to facilitate reuse of the data. The code for this stage can be found at https://osf.io/shd9a/. The following steps were performed to create a processed dataset, and the output from this data curation can be found in the processed_data folder online.

1) The information presented on the screen to the participant was included as a new column matching each item label from the formr worksheets. These labels were added as new columns in English and the language the study was displayed in.

2) The answer choice from a participant is included in numeric format (e.g., 1 = Strongly Agree to 5 = Strongly Disagree), and therefore, we included a new column with the original labels of the answer choice for items that had text labels. These labels are in English, even if the study was presented in a different language.

3) We created an overall participant file that has relevant information for participants across the entire study. This data file is described below.

4) We separated the data from each of the embedded studies (General Survey, Loss Gain, etc. described above) into independent data files, and these files are described below.

5) For the Cognitive Reappraisal study, we added a column that identified the exact items shown to participants. The original labels contain markdown and R code that generated the random order of items for each participant. The label column includes that code but can be difficult to interpret. Therefore, we recreated the order seen by each participant and merged that information into the Cognitive Reappraisal study file only.
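Step 2 amounts to a lookup from each item's numeric answer code to its text label. The Python sketch below shows the idea on long-format rows; the keys `item_name`, `answer`, and `answer_label` and the item names are hypothetical, and only the two endpoint labels of the 1-5 agreement scale mentioned above are used.

```python
def add_answer_labels(long_rows, choice_labels):
    """Add an English text label for each numeric answer code.

    long_rows: dicts with hypothetical keys 'item_name' and 'answer'.
    choice_labels: item_name -> {answer code: English label}.
    Items without text labels (free text, sliders, notes) get None.
    """
    labelled = []
    for row in long_rows:
        labels = choice_labels.get(row["item_name"], {})
        labelled.append({**row, "answer_label": labels.get(row["answer"])})
    return labelled


# Only the endpoints of the 1-5 agreement scale named in the text are shown.
choices = {"policy_item": {"1": "Strongly Agree", "5": "Strongly Disagree"}}
rows = [
    {"item_name": "policy_item", "answer": "5"},
    {"item_name": "free_text_item", "answer": "my city"},
]
print(add_answer_labels(rows, choices))
```

Keeping the numeric code and adding the label as a separate column, rather than overwriting it, preserves the values needed for analysis while making the file readable.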

Exclusion criteria

Each of the three studies used different exclusion criteria in its analyses (see the Technical Validation section below). For the dataset described in this manuscript, no exclusion criteria were applied beyond the data curation described above. Therefore, the dataset includes all answers from anyone who opened the survey, regardless of completion time or missing data.

Data Records

In this section, we describe the contents of the processed_data folder created from the data processing steps described above. All data are stored on OSF21. The raw_data folder contains files with a similar structure, which should be interpretable from the descriptions provided below. The main difference is that the processed data include additional columns that disambiguate the information contained in each row.

Global participant data

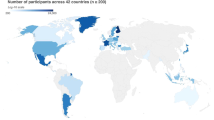

The overall participant data consist of one participant information file and two dictionary files that detail the hand-coded processing of a free text response. The participant information file (participants.csv) is a comma-separated text file that contains overall participant information and can be accessed at https://osf.io/rjgwh/. The information for each column of the participant file is found in Supplemental Table 1. The file includes the geopolitical region from which the participant indicated they had taken the survey, along with the state (for U.S. participants only). This information was collected as a free text response in the original survey. The country_dictionary.xlsx (https://osf.io/8zv42/) and state_dictionary.xlsx (https://osf.io/5xbce/) files were used to merge the coded geopolitical information from the general survey into this file. Each of these Excel files has two tabs. Each text answer was examined by two individuals and coded into geopolitical region codes and U.S. state names; in cases of disagreement, a third person arbitrated. The first tab of each file includes the final chosen answer, and the second tab includes all coder responses for transparency. Supplemental Tables 2 and 3 include the metadata for each column. Figure 3 shows the geopolitical region data and corresponding sample sizes resulting from this processing.
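The two-coder procedure with a third-person arbiter can be expressed as a small Python function. The dictionaries, the toy free-text answers, and the region codes below are hypothetical examples, not entries from country_dictionary.xlsx; the arbiter is modeled as a callable that is consulted only when the two coders disagree.

```python
def adjudicate(coder_a, coder_b, arbiter):
    """Resolve two independent codings of each free-text answer.

    coder_a, coder_b: dict raw_text -> code (assumed to cover the same texts).
    arbiter: callable(raw_text, code_a, code_b) -> final code, used only on disagreement.
    Returns the final codes and the list of texts that needed arbitration.
    """
    final, disputed = {}, []
    for text, a in coder_a.items():
        b = coder_b[text]
        if a == b:
            final[text] = a
        else:
            final[text] = arbiter(text, a, b)
            disputed.append(text)
    return final, disputed


coder_a = {"usa": "USA", "holland": "NLD", "uk": "GBR"}
coder_b = {"usa": "USA", "holland": "NLD", "uk": "GB"}
third_person = {"uk": "GBR"}  # hypothetical arbiter decisions
final, disputed = adjudicate(coder_a, coder_b, lambda text, a, b: third_person[text])
print(final, disputed)
```

Returning the disputed texts alongside the final codes mirrors the idea of keeping all coder responses visible for transparency.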

The top panel includes a map of the countries/geopolitical locations from which data were collected for the PSACR project, with corresponding sample sizes. The bottom panel includes a treemap of sample size by geopolitical region, grouped by UN subregion: Eastern Europe, Northern America, Eastern Asia, Western Europe, Southern Europe, Sub-Saharan Africa, Northern Europe, Latin America and the Caribbean, Western Asia, Australia and New Zealand, South-Eastern Asia, and Southern Asia (listed from largest to smallest). Cell size depicts each subregion’s sample size relative to the whole sample and, within each subregion, the relative sample size of each geopolitical region.

Individual study data

The data are in long format, with each line representing a single item shown to the participant, including submit buttons, notes, and hidden items. All four data files have the same format (Supplemental Table 4). The general survey data can be found at https://osf.io/37uca/ (general_data.csv.zip), the Loss-Gain Framing study at https://osf.io/ctsrk/ (study1_data.csv.zip), the Cognitive Reappraisal study at https://osf.io/bec2f/ (study2_data.csv.zip), and the Self-Determination study at https://osf.io/aqkjh/ (study3_data.csv.zip). Each file is in comma-separated text format and was compressed into a zip archive to fit within OSF space limitations. As noted in Supplemental Table 4, the Cognitive Reappraisal study file has one extra column because of the specific randomization involved in the study.
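Because each file is a zipped long-format CSV, a common first step is to read it directly from the archive and pivot it to one record per participant. The Python sketch below uses only the standard library; the column names `participant_id`, `item_name`, and `answer` are hypothetical stand-ins for the headers documented in Supplemental Table 4, and UTF-8 encoding is assumed.

```python
import csv
import io
import zipfile
from collections import defaultdict


def read_zipped_csv(source, member=None):
    """Yield dict rows from a CSV stored inside a zip archive (path or file object)."""
    with zipfile.ZipFile(source) as archive:
        name = member or archive.namelist()[0]
        with archive.open(name) as raw:
            # UTF-8 is an assumption about the file encoding.
            yield from csv.DictReader(io.TextIOWrapper(raw, encoding="utf-8", newline=""))


def long_to_wide(rows, id_col="participant_id", item_col="item_name", value_col="answer"):
    """Pivot one-row-per-item data into one dict per participant (last value wins)."""
    wide = defaultdict(dict)
    for row in rows:
        wide[row[id_col]][row[item_col]] = row[value_col]
    return dict(wide)


# Build a tiny in-memory archive that mimics the long format.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("study3_data.csv", "participant_id,item_name,answer\np1,q1,4\np1,q2,6\np2,q1,2\n")
buf.seek(0)
wide = long_to_wide(read_zipped_csv(buf))
print(wide)  # {'p1': {'q1': '4', 'q2': '6'}, 'p2': {'q1': '2'}}
```

Streaming rows from the archive avoids unpacking the large files to disk; rows for submit buttons or notes would simply become extra keys that can be dropped afterwards.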

Table 1 includes information about completion rates and demographic measures for each study. The table first reports the number of participants who started the survey. Because every participant began with the general survey, the gap between these counts shows that a large portion of participants opened the link to the study and then declined to continue (73,223 participants opened the link, while only 54,952 started the general survey). The table also reports the number of participants who completed the last item in each section. The next row presents the number of participants who completed most (>95%) of the items in each section, as a secondary indicator of the amount of usable data in each area; depending on the variables of interest in a secondary analysis, more data may be available for each study. The numbers of languages and geopolitical regions represented in each study follow, and the overall geographic distribution can be found in Fig. 3. The gender breakdown is provided for each study; most participants identified as female. Last, the table provides information for the technical validation described below.
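The >95% completion criterion can be computed per participant as the share of expected items with a non-empty answer. In this Python sketch, which items count toward the denominator (for example, whether submit buttons and notes are excluded) is an assumption, and the participants and item names are toy values.

```python
def mostly_complete(wide, expected_items, threshold=0.95):
    """Return IDs of participants who answered more than `threshold` of expected items.

    An item counts as answered when its value is neither missing nor an empty string.
    """
    n = len(expected_items)
    keep = []
    for pid, answers in wide.items():
        answered = sum(1 for item in expected_items if answers.get(item) not in (None, ""))
        if answered / n > threshold:
            keep.append(pid)
    return sorted(keep)


items = [f"q{i}" for i in range(1, 21)]  # 20 hypothetical items
wide = {
    "p1": {item: "3" for item in items},                        # 20 of 20
    "p2": {f"q{i}": "3" for i in range(1, 20)},                 # 19 of 20 (exactly 95%)
    "p3": {**{f"q{i}": "3" for i in range(1, 20)}, "q20": ""},  # blank counts as missing
}
print(mostly_complete(wide, items))  # ['p1']
```

The strict inequality matters: a participant who answered exactly 95% of items does not pass a ">95%" rule.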

Technical Validation

As participants entered the survey, they were randomized into one of two combinations of studies (Cognitive Reappraisal only, or Loss-Gain Framing and Self-Determination combined). Within each study, experimental conditions were randomly assigned, and, where noted above, study items were presented in random order. Participants were blind to their conditions within each study. The technical programmers and study lead investigators knew the study conditions, but most of the data collection teams did not. Samples were recruited from participant pools, paid participant websites, and social media. Research teams had no control over the allocation of participants to conditions. Samples were tracked through an online Shiny app (Shiny is an R package for building interactive web applications24) that displayed the total number of participants, separated by language and condition, to ensure appropriate randomization during study deployment.
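The tracking app displayed counts; as one illustrative way to turn such counts into a formal balance check, the Python sketch below runs a chi-square goodness-of-fit test of equal allocation within each language. This is not the code behind the PSACR tracking app; the equal-allocation target, the input format, and the toy counts are assumptions.

```python
from collections import Counter, defaultdict

# Upper 5% critical values of the chi-square distribution, keyed by degrees of freedom.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815}


def balance_check(assignments, conditions):
    """Chi-square goodness-of-fit test of equal allocation, run per language.

    assignments: iterable of (language, condition) pairs.
    conditions: every condition that could be assigned (2 to 4 in the PSACR studies).
    Returns {language: (chi_square_statistic, flagged_at_5_percent)}.
    """
    counts = defaultdict(Counter)
    for language, condition in assignments:
        counts[language][condition] += 1
    df = len(conditions) - 1
    report = {}
    for language, tally in counts.items():
        n = sum(tally[c] for c in conditions)
        expected = n / len(conditions)
        stat = sum((tally[c] - expected) ** 2 / expected for c in conditions)
        report[language] = (stat, stat > CRITICAL_05[df])
    return report


toy = [("en", "gain")] * 50 + [("en", "loss")] * 50 + [("nl", "gain")] * 30 + [("nl", "loss")] * 10
print(balance_check(toy, ["gain", "loss"]))  # {'en': (0.0, False), 'nl': (10.0, True)}
```

Passing the full list of conditions ensures that a condition nobody in a language received still counts as an observed zero. With dozens of languages, a few flags at the 5% level are expected by chance alone.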

As shown in Table 1, study completion times may be used to ensure data quality by excluding participants who completed the study too quickly (as defined by a secondary analysis team). Each study also included manipulation checks to assess participant attentiveness. In the Loss-Gain Framing study, each participant was asked to indicate which framing message they had seen, and the number of participants reported in Table 1 represents those who chose the correct answer for their group assignment. The Cognitive Reappraisal study had two manipulation check questions: participants had to pick a picture they had not previously seen and to indicate the instructions they had been given for appraising the pictures. The values in Table 1 represent those who answered both questions correctly.
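A secondary analysis team might combine a speed cutoff with these manipulation checks as sketched below in Python. All field names are hypothetical, the 300-second cutoff is purely illustrative (the text leaves the threshold to the analyst), and Study 3 is treated as always passing because it had no right-or-wrong check.

```python
def passes_manipulation_check(record):
    """Return True if a participant answered their study's manipulation check correctly.

    Field names are hypothetical. Study 3 had no right/wrong check, so it always passes here.
    """
    study = record["study"]
    if study == "study1":
        # The frame the participant reports seeing must match the assigned frame.
        return record["reported_frame"] == record["condition"]
    if study == "study2":
        # Both questions must be correct: the unseen picture and the given instructions.
        return bool(record["picked_unseen_picture"]) and (
            record["reported_instruction"] == record["condition"]
        )
    return True


def is_usable(record, min_seconds):
    """Combine a speed cutoff (chosen by the analyst) with the manipulation check."""
    return record["duration_s"] >= min_seconds and passes_manipulation_check(record)


records = [
    {"study": "study1", "condition": "loss", "reported_frame": "loss", "duration_s": 900},
    {"study": "study1", "condition": "gain", "reported_frame": "loss", "duration_s": 900},
    {"study": "study2", "condition": "rethinking", "picked_unseen_picture": True,
     "reported_instruction": "rethinking", "duration_s": 120},
]
print([is_usable(r, min_seconds=300) for r in records])  # [True, False, False]
```

Keeping the two filters in separate functions lets an analyst report how many exclusions come from speed versus from failed checks.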

The Self-Determination study did not use a manipulation check with a “correct” answer; instead, it examined whether the manipulation changed responses to questions presented at the end of the study (1 = Strongly Disagree to 7 = Strongly Agree). The autonomy group (M = 4.11, SD = 1.70) rated the instructions as providing more choices than the controlling message group (M = 3.57, SD = 2.01, d = 0.29) and the no message group (M = 3.75, SD = 1.82, d = 0.20), while the controlling message group rated the messages lower than the no message group (d = 0.10). A second question asked participants to rate whether the messages they saw were trying to pressure people. The controlling message group rated this item the highest (M = 3.30, SD = 2.07) in comparison with the autonomy message group (M = 2.62, SD = 1.91, d = 0.34) and the no message group (M = 2.88, SD = 1.96, d = 0.21). The no message group rated this item higher than the autonomy group, d = 0.14. Although these effect sizes are small, the pattern of responses indicates that participants responded to the manipulations presented to their group.
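The reported effect sizes can be approximately recovered from the published means and SDs. The text does not state which d formula was used; the Python sketch below assumes the common equal-n version, which divides the mean difference by the root mean square of the two SDs.

```python
from math import sqrt


def cohens_d_equal_n(m1, sd1, m2, sd2):
    """Absolute standardized mean difference using sqrt((sd1^2 + sd2^2) / 2).

    This denominator matches the usual pooled SD only when both groups have
    the same size; the formula used in the original analyses is not stated.
    """
    return abs(m1 - m2) / sqrt((sd1 ** 2 + sd2 ** 2) / 2)


# "Provides choices" item: autonomy vs controlling, autonomy vs no message.
print(round(cohens_d_equal_n(4.11, 1.70, 3.57, 2.01), 2))  # 0.29
print(round(cohens_d_equal_n(4.11, 1.70, 3.75, 1.82), 2))  # 0.2
# "Trying to pressure" item: controlling vs autonomy.
print(round(cohens_d_equal_n(3.30, 2.07, 2.62, 1.91), 2))  # 0.34
```

Applied to the two remaining comparisons, the same formula gives 0.09 and 0.13 rather than the reported 0.10 and 0.14; such small gaps could reflect rounding in the published means and SDs, unequal group sizes, or a different d variant.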

Code availability

All code can be found at https://osf.io/gvw56/.

References

Moshontz, H. et al. The Psychological Science Accelerator: Advancing Psychology Through a Distributed Collaborative Network. Adv. Methods Pract. Psychol. Sci. 1, 501–515 (2018).

Dorison, C. A. et al. In COVID-19 Health Messaging, Loss Framing Increases Anxiety with Little-to-No Concomitant Benefits: Experimental Evidence from 84 Countries. Affect. Sci. 3, 577–602 (2022).

Wang, K. et al. A multi-country test of brief reappraisal interventions on emotions during the COVID-19 pandemic. Nat. Hum. Behav. 5, 1089–1110 (2021).

Psychological Science Accelerator Self-Determination Theory Collaboration et al. A global experiment on motivating social distancing during the COVID-19 pandemic. Proc. Natl. Acad. Sci. 119, e2111091119 (2022).

Meyerowitz, B. E. & Chaiken, S. The effect of message framing on breast self-examination attitudes, intentions, and behavior. J. Pers. Soc. Psychol. 52, 500–510 (1987).

Bavel, J. J. V. et al. Using social and behavioural science to support COVID-19 pandemic response. Nat. Hum. Behav. 4, 460–471 (2020).

Nabi, R. L. et al. Can Emotions Capture the Elusive Gain-Loss Framing Effect? A Meta-Analysis. Commun. Res. 47, 1107–1130 (2020).

Hou, Z., Du, F., Jiang, H., Zhou, X. & Lin, L. Assessment of Public Attention, Risk Perception, Emotional and Behavioural Responses to the COVID-19 Outbreak: Social Media Surveillance in China. SSRN Electron. J. https://doi.org/10.2139/ssrn.3551338 (2020).

Cullen, W., Gulati, G. & Kelly, B. D. Mental health in the COVID-19 pandemic. QJM Int. J. Med. 113, 311–312 (2020).

Yarrington, J. S. et al. Impact of the COVID-19 Pandemic on Mental Health among 157,213 Americans. J. Affect. Disord. 286, 64–70 (2021).

McRae, K. & Gross, J. J. Emotion regulation. Emotion 20, 1–9 (2020).

Tugade, M. M. & Fredrickson, B. L. Regulation of Positive Emotions: Emotion Regulation Strategies that Promote Resilience. J. Happiness Stud. 8, 311–333 (2007).

Webb, T. L., Miles, E. & Sheeran, P. Dealing with feeling: A meta-analysis of the effectiveness of strategies derived from the process model of emotion regulation. Psychol. Bull. 138, 775–808 (2012).

Deci, E. L. & Ryan, R. M. Self-determination theory: A macrotheory of human motivation, development, and health. Can. Psychol. Can. 49, 182–185 (2008).

Ryan, R. M. & Deci, E. L. Self-Determination Theory: Basic Psychological Needs in Motivation, Development, and Wellness. (Guilford Publications, 2017).

Su, Y.-L. & Reeve, J. A Meta-analysis of the Effectiveness of Intervention Programs Designed to Support Autonomy. Educ. Psychol. Rev. 23, 159–188 (2011).

Bartholomew, K. J., Ntoumanis, N. & Thøgersen-Ntoumani, C. A review of controlling motivational strategies from a self-determination theory perspective: implications for sports coaches. Int. Rev. Sport Exerc. Psychol. 2, 215–233 (2009).

Sheeran, P. et al. Self-determination theory interventions for health behavior change: Meta-analysis and meta-analytic structural equation modeling of randomized controlled trials. J. Consult. Clin. Psychol. 88, 726–737 (2020).

Weinstein, N., Vansteenkiste, M. & Paulmann, S. Don’t you say it that way! Experimental evidence that controlling voices elicit defiance. J. Exp. Soc. Psychol. 88, 103949 (2020).

Legault, L., Gutsell, J. N. & Inzlicht, M. Ironic Effects of Antiprejudice Messages: How Motivational Interventions Can Reduce (but Also Increase) Prejudice. Psychol. Sci. 22, 1472–1477 (2011).

Buchanan, E. et al. Data and Scripts. Open Science Framework https://doi.org/10.17605/OSF.IO/GVW56 (2022).

Mathieu, E. et al. A global database of COVID-19 vaccinations. Nat. Hum. Behav. 5, 947–953 (2021).

Arslan, R. C., Walther, M. P. & Tata, C. S. formr: A study framework allowing for automated feedback generation and complex longitudinal experience-sampling studies using R. Behav. Res. Methods 52, 376–387 (2020).

Chang, W. et al. shiny: Web Application Framework for R. (2021).

Acknowledgements

We acknowledge the multiple funders who contributed to the success of this project (all listed below). The authors also thank the other contributors to the PSACR project, not all of whom are authors of this manuscript. A complete list of personnel can be found at https://psyarxiv.com/x976j/. Erin M. Buchanan: Amazon Web Services (AWS) Imagine Grant. Amélie Gourdon-Kanhukamwe: Internal funding from Kingston University. Hannah Moshontz: National Institute of Mental Health of the National Institutes of Health under Award Number T32MH018931. Adeyemi Adetula: PSA research grant ($285.59) for the PSACR projects data collection. Dmitrii Dubrov: The work of Dmitrii Dubrov was supported within the framework of the Basic Research Program at HSE University, RF. Marek A. Vranka: Progres Q18/Cooperatio MCOM, Charles University. Yuki Yamada: JSPS KAKENHI Grant Numbers JP18K12015 and JP20H04581. Niklas Johannes: Huo Family Foundation. Tatsunori Ishii: JSPS (The Japan Society for the Promotion of Science) KAKENHI, Grant Number 19K14370. Małgorzata Kossowska: The Institute of Psychology, Jagiellonian University. Kevin van Schie: Rubicon grant (019.183SG.007) from the Netherlands Organization for Scientific Research (NWO). Robert M. Ross: Australian Research Council (DP180102384). Dmitry Grigoryev: The Russian data was collected within the framework of the HSE University Basic Research Program. Tripat Gill: Social Science and Humanities Research Council of Canada. Anthony J. Krafnick: Dominican University Faculty Support Grant. Jaime R. Silva: FONDECYT 1221538. William Jiménez-Leal: Vicerrectoria de Investigaciones, Uniandes. Agnieszka Sorokowska: Statutory funds of the Institute of Psychology, University of Wroclaw. Adriana Julieth Olaya Torres: University of Desarrollo, Faculty of Psychology. Piotr Sorokowski: Study was funded by IDN Being Human Lab (University of Wrocław). Michal Misiak: IDN Being Human Lab (University of Wrocław). Krystian Barzykowski: was supported by the National Science Centre, Poland (2019/35/B/HS6/00528). Patrícia Arriaga: was supported by UID/PSI/03125/2019 from the Portuguese National Foundation for Science and Technology (FCT). Ivan Ropovik: PRIMUS/20/HUM/009 and NPO Systemic Risk Institute (LX22NPO5101). Andrej Findor: Agentúra na podporu výskumu a vývoja (Slovak Research and Development Agency) - APVV-17-0596. Matej Hruška: Agentúra na podporu výskumu a vývoja (Slovak Research and Development Agency) - APVV-17-0596. Matus Adamkovic: Slovak Research and Development Agency, project no. APVV-20-0319. Gabriel Baník: Slovak Research and Development Agency, project no. APVV-17-0418. Dawn L. Holford: is supported by a Horizon 2020 grant 964728 (JITSUVAX) from the European Commission and was supported by a United Kingdom Research and Innovation (UKRI) Research Fellowship grant ES/V011901/1. Rodrigo A. Cárcamo: ANID - Fondecyt 1201513. Sébastien Massoni: Program FUTURE LEADER of Lorraine Université d’Excellence within the program Investissements Avenir (ANR-15-IDEX-04-LUE) operated by the French National Research Agency. Alexandre Bran: Association Nationale de la Recherche Scientifique and Pacifica (CIFRE grant 2017/0245).

Author information

Contributions

Writing - original draft: Erin M. Buchanan, Jeffrey M. Pavlacic, Savannah C. Lewis. Writing - review & editing: All authors. Visualization: Erin M. Buchanan, Peder Mortvedt Isager. Validation: Erin M. Buchanan. Formal Analysis: Erin M. Buchanan. Data curation: Erin M. Buchanan, Bastien Paris. Conceptualization, Methodology: Patrícia Arriaga, Nicholas A. Coles, Charles A. Dorison, Amit Goldenberg, James J. Gross, Blake Heller, Michael C. Mensink, Jeremy K. Miller, Ivan Ropovik, Alexander J. Rothman, Richard M Ryan, Andrew G. Thomas, Ke Wang, Nicole Legate, Brian P Gill, Vaughan W. Rees, Nancy Gibbs, Thuy-vy Thi Nguyen. Project Administration: Flavio Azevedo, Jennifer L. Beaudry, Julie E. Beshears, Erin M. Buchanan, Christopher R. Chartier, Nicholas A. Coles, Patrick S. Forscher, Amélie Gourdon-Kanhukamwe, Hans IJzerman, Carmel A. Levitan, Savannah C. Lewis, Peter R Mallik, Shira Meir Drexler, Jeremy K. Miller, Hannah Moshontz, Bastien Paris, Maximilian A. Primbs, MohammadHasan Sharifian, Miguel Alejandro A. Silan, Jordan W. Suchow, Anna Louise Todsen. Resources: Balazs Aczel, Matus Adamkovic, Sylwia Adamus, Adeyemi Adetula, Gabriel Agboola Adetula, Arca Adıgüzel, Bamikole Emmanuel AGESIN, Lina Pernilla Ahlgren, Afroja Ahmed, Handan Akkas, Sara G. Alves, Benedict G Antazo, Jan Antfolk, Lisa Anton-Boicuk, Patrícia Arriaga, John Jamir Benzon R. Aruta, Adrian Dahl Askelund, Flavio Azevedo, Soufian Azouaghe, Ekaterina Baklanova, Gabriel Baník, Krystian Barzykowski, Jozef Bavolar, Maja Becker, Julia Beitner, Jana B. Berkessel, Michał Białek, Olga Bialobrzeska, Ahmed Bokkour, Alexandre Bran, Tara Bulut Allred, Martin Čadek, Mariagrazia Capizzi, Nicola Cellini, Zhang Chen, Faith Chiu, Hu Chuan-Peng, Noga Cohen, Sami Çoksan, Vera Cubela Adoric, Wilson Cyrus-Lai, Johanna Czamanski-Cohen, Gabriela Czarnek, Ilker Dalgar, Anna Dalla Rosa, Lisa M. DeBruine, Ikhlas Djamai, Artur Domurat, Dmitrii Dubrov, Maksim Fedotov, Katarzyna Filip, Andrej Findor, Oscar J. Galindo-Caballero, Sandra J. Geiger, Biljana Gjoneska, Amélie Gourdon-Kanhukamwe, Caterina Grano, Agata Groyecka-Bernard, Nandor Hajdu, Paul H. P. Hanel, Mikayel Harutyunyan, Karlijn Hoyer, Evgeniya Hristova, Matej Hruška, Barbora Hubena, Keiko Ihaya, Peder Mortvedt Isager, Tatsunori Ishii, Teodor Jernsäther, Xiaoming Jiang, Niklas Johannes, Ondřej Kácha, Pavol Kačmár, Lada Kaliska, Julia Arhondis Kamburidis, Gwenaêl Kaminski, Cemre Karaarslan, Alper Karababa, Angelos P. Kassianos, Ahmed KHAOUDI, Julita Kielińska, Halil Emre Kocalar, Maria Koptjevskaja-Tamm, Max Korbmacher, Tatiana Korobova, Małgorzata Kossowska, Marta Kowal, Luca Kozma, Dajana Krupić, Dino Krupić, Yoshihiko Kunisato, Jonas R. Kunst, Murathan Kurfalı, Elizaveta Kushnir, Ranran Li, Gabriel Lins de Holanda Coelho, Efisio Manunta, Gabriela-Mariana Marcu, Marcel Martončik, Sébastien Massoni, Princess Lovella G. Maturan, Irem Metin-Orta, Jasna Milošević Đorđević, Giovanna Mioni, Aviv Mokady, Renan P. Monteiro, Sara Morales-Izquierdo, Farnaz Mosannenzadeh, Fany Muchembled, Mina Nedelcheva-Datsova, Gustav Nilsonne, Raquel Oliveira, Clara Overkott, Asil Ali Özdoğru, Tamar Elise Paltrow, Myrto Pantazi, Bastien Paris, Mariola Paruzel-Czachura, Michal Parzuchowski, Ilse L. Pit, Sara Johanna Pöntinen, Razieh Pourafshari, Ekaterina Pronizius, Nikolay R. Rachev, Niv Reggev, Ulf-Dietrich Reips, Rafael R. Ribeiro, Marta Roczniewska, Ivan Ropovik, Susana Ruiz-Fernandez, Aslı Saçaklı, Dušana Dušan Šakan, Anabela Caetano Santos, Nicolas Say, Vidar Schei, Nadya-Daniela Schmidt, Jana Schrötter, Daniela Serrato Alvarez, MohammadHasan Sharifian, Miguel Alejandro A. Silan, Claudio Singh Solorzano, Karolina Staniaszek, Stefan Stieger, Eva Štrukelj, Anna Studzinska, Naoyuki Sunami, Lilian Suter, Therese E. Sverdrup, Anna Szala, Barnabas Szaszi, Christian K Tamnes, Anna Louise Todsen, Murat Tümer, Anum Urooj, Kevin van Schie, Martin R. Vasilev, Milica Vdovic, Diego Vega, Jeroen P.H. Verharen, Kevin Vezirian, Luc Vieira, Roosevelt Vilar, Jáchym Vintr, Leonhard Volz, Claudia C. von Bastian, Marek A. Vranka, Lara Warmelink, Minja Westerlund, Yuki Yamada, Ilya Zakharov, Danilo Zambrano, Janis H. Zickfeld, Andras N. Zsido, Barbara Žuro. Investigation: Christopher L. Aberson, Matus Adamkovic, Adeyemi Adetula, Arca Adıgüzel, Reza Afhami, Elena Agadullina, Lina Pernilla Ahlgren, Afroja Ahmed, Nihan Albayrak-Aydemir, Inês A. T. Almeida, Sara G. Alves, Gulnaz Anjum, Michele Anne, Benedict G Antazo, Jan Antfolk, Nwadiogo Chisom Arinze, Azuka Ikechukwu Arinze, Patrícia Arriaga, John Jamir Benzon R. Aruta, Alexios Arvanitis, Adrian Dahl Askelund, Soufian Azouaghe, Hui Bai, Busra Bahar Balci, Tonia Ballantyne, Gabriel Baník, Krystian Barzykowski, Ernest Baskin, Sanja Batić Očovaj, Carlota Batres, Jozef Bavolar, Maja Becker, Behzad Behzadnia, Anabel Belaus, Jana B. Berkessel, Michał Białek, Olga Bialobrzeska, Gijsbert Bijlstra, Ahmed Bokkour, Jordane Boudesseul, Alexandre Bran, Erin M. Buchanan, Tara Bulut Allred, Carsten Bundt, Muhammad Mussaffa Butt, Robert J Calin-Jageman, Rodrigo A. Cárcamo, Joelle Carpentier, Nicola Cellini, Abdelilah Charyate, Zhang Chen, Faith Chiu, William J. Chopik, Weilun Chou, Sami Çoksan, W. Matthew Collins, Nadia Sarai Corral-Frias, Johanna Czamanski-Cohen, Shimrit Daches, Ilker Dalgar, Anna Dalla Rosa, William E. Davis, Anabel de la Rosa-Gómez, Barnaby James Wyld Dixson, Ikhlas Djamai, Artur Domurat, Hongfei Du, Dmitrii Dubrov, Celia Esteban-Serna, Luis Eudave, Gilad Feldman, Ana Ferreira, Andrej Findor, Paul A G Forbes, Francesco Foroni, Patrick S. Forscher, Martha Frias-Armenta, Cynthia H.Y. Fu, Tripat Gill, Biljana Gjoneska, Hendrik Godbersen, Amélie Gourdon-Kanhukamwe, Caterina Grano, Dmitry Grigoryev, Agata Groyecka-Bernard, Paul H. P. Hanel, Andree Hartanto, Mikayel Harutyunyan, Dawn L Holford, Thomas J. Hostler, Monika Hricova, Evgeniya Hristova, Matej Hruška, Keiko Ihaya, Hans IJzerman, Tatsunori Ishii, Bastian Jaeger, Allison P Janak, Steve M. J. Janssen, Lisa M. Jaremka, Teodor Jernsäther, Xiaoming Jiang, William Jiménez-Leal, Jennifer A. Joy-Gaba, Pavol Kačmár, Julia Arhondis Kamburidis, Gwenaêl Kaminski, Heather Barry Kappes, Cemre Karaarslan, Alper Karababa, Maria Karekla, Angelos P. Kassianos, Ahmed KHAOUDI, Meetu Khosla, Julita Kielińska, Natalia Kiselnikova, Halil Emre Kocalar, Monica A Koehn, Maria Koptjevskaja-Tamm, Małgorzata Kossowska, Marta Kowal, Luca Kozma, Anthony J. Krafnick, Franki Y. H. Kung, Yoshihiko Kunisato, Jonas R. Kunst, Elizaveta Kushnir, Anna O. Kuzminska, Claus Lamm, Ljiljana B. Lazarevic, David M. G. Lewis, Tiago J. S. Lima, Samuel Lins, Gabriel Lins de Holanda Coelho, Elkin O. Luis, PAULO MANUEL L. MACAPAGAL, Nadyanna M. Majeed, Mathi Manavalan, Efisio Manunta, Gabriela-Mariana Marcu, Marcel Martončik, Sébastien Massoni, Randy McCarthy, Joseph P. McFall, Michael C. Mensink, Jeremy K. Miller, Jasna Milošević Đorđević, Giovanna Mioni, Michal Misiak, Aviv Mokady, Renan P. Monteiro, David Moreau, Farnaz Mosannenzadeh, Rafał Muda, Erica D. Musser, Izuchukwu L.G. Ndukaihe, Mina Nedelcheva-Datsova, Gustav Nilsonne, Nora L. Nock, Chris Noone, CHISOM ESTHER OGBONNAYA, Adriana Julieth Olaya Torres, Raquel Oliveira, Jonas K. Olofsson, Sandersan Onie, Thomas Ostermann, Asil Ali Özdoğru, Myrto Pantazi, Marietta Papadatou-Pastou, Mariola Paruzel-Czachura, Michal Parzuchowski, Jeffrey M. Pavlacic, Jennifer T Perillo, Gerit Pfuhl, Sara Johanna Pöntinen, Maximilian A. Primbs, Ekaterina Pronizius, Nikolay R. Rachev, Theda Radtke, Rima-Maria Rahal, Crystal Reeck, Niv Reggev, Ulf-Dietrich Reips, Dongning Ren, Matheus Fernando Felix Ribeiro, Marta Roczniewska, Jan Philipp Röer, Ivan Ropovik, Robert M. Ross, Susana Ruiz-Fernandez, Aslı Saçaklı, Dušana Dušan Šakan, Anabela Caetano Santos, Nicolas Say, Vidar Schei, Kathleen Schmidt, Jana Schrötter, Martin Seehuus, Daniela Serrato Alvarez, Jaime R. Silva, Claudio Singh Solorzano, Miroslav Sirota, Agnieszka Sorokowska, Piotr Sorokowski, Jose A. Soto, Daniela Sousa, Ian D Stephen, Daniel Shafik Storage, Eva Štrukelj, Anna Studzinska, Naoyuki Sunami, Clare AM Sutherland, Therese E. Sverdrup, Paulina Szwed, Srinivasan Tatachari, María del Carmen Tejada R., Andrew G. Thomas, Mónica Camila Toro Venegas, Ulrich S. Tran, Giovanni A. Travaglino, Alexa M Tullett, Murat Tümer, Jan Urban, Anum Urooj, Jim Uttley, David C. Vaidis, Zahir Vally, Natalia Van Doren, Martin R. Vasilev, Leigh Ann Vaughn, Milica Vdovic, Diego Vega, Frederick Verbruggen, Kevin Vezirian, Luc Vieira, Roosevelt Vilar, Iris Vilares, Johannes K. Vilsmeier, Leonhard Volz, Claudia C. von Bastian, Martin Voracek, Marek A. Vranka, Radoslaw B. Walczak, Minja Westerlund, Erin C. Westgate, Aaron L. Wichman, Megan L Willis, Kelly Wolfe, Qinyu Xiao, Siu Kit Yeung, Karen Yu, Ilya Zakharov, Danilo Zambrano, Przemysław Marcin Zdybek, Ignazio Ziano, Janis H. Zickfeld, Saša Zorjan, Andras N. Zsido.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Buchanan, E.M., Lewis, S.C., Paris, B. et al. The Psychological Science Accelerator’s COVID-19 rapid-response dataset. Sci Data 10, 87 (2023). https://doi.org/10.1038/s41597-022-01811-7

DOI: https://doi.org/10.1038/s41597-022-01811-7
