Abstract

Kidney pathophysiology is often complex, nonlinear and heterogeneous, which limits the utility of hypothetical-deductive reasoning and linear, statistical approaches to diagnosis and treatment. Emerging evidence suggests that artificial intelligence (AI)-enabled decision support systems, which use algorithms based on learned examples, may have an important role in nephrology. Contemporary AI applications can accurately predict the onset of acute kidney injury before notable biochemical changes occur; can identify modifiable risk factors for chronic kidney disease onset and progression; can match or exceed human accuracy in recognizing renal tumours on imaging studies; and may augment prognostication and decision-making following renal transplantation. Future AI applications have the potential to make real-time, continuous recommendations for discrete actions that yield the greatest probability of achieving optimal kidney health outcomes. Realizing the clinical integration of AI applications will require cooperative, multidisciplinary commitment to ensure algorithm fairness, overcome barriers to clinical implementation, and build an AI-competent workforce. AI-enabled decision support should preserve the pre-eminence of wisdom and augment rather than replace human decision-making. By anchoring intuition with objective predictions and classifications, this approach should favour clinician intuition when it is honed by experience.

Key points

- Hypothetical-deductive reasoning and linear, statistical approaches to diagnosis and treatment often fail to adequately represent the complex, nonlinear, and heterogeneous nature of kidney pathophysiology.

- Artificial intelligence (AI)-enabled decision support systems use algorithms that learn from examples to accurately represent complex pathophysiology, including kidney pathophysiology, offering opportunities to enhance patient-centred diagnostic, prognostic and treatment approaches.

- Contemporary AI applications can accurately predict kidney injury before the development of measurable biochemical changes, identify modifiable risk factors, and match or exceed human accuracy in recognizing kidney pathology on imaging studies.

- Advances in the past few years suggest that AI models have potential to make real-time, continuous recommendations for discrete actions that yield the greatest probability of achieving optimal kidney health outcomes.

- Optimizing the clinical integration of AI-enabled decision-support in nephrology will require multidisciplinary commitment to ensure algorithm fairness and the building of an AI-competent medical workforce.

- AI-enabled decision support should preserve the pre-eminence of human wisdom and intuition in clinical decision-making by augmenting rather than replacing interactions between patients, caregivers, clinicians and data.

References

Schwartz, W. B., Patil, R. S. & Szolovits, P. Artificial intelligence in medicine. Where do we stand? N. Engl. J. Med. 316, 685–688 (1987).

Hashimoto, D. A., Rosman, G., Rus, D. & Meireles, O. R. Artificial intelligence in surgery: promises and perils. Ann. Surg. 268, 70–76 (2018).

Millar, J. et al. Accountability in AI. Promoting greater social trust. CIFAR https://cifar.ca/cifarnews/2018/12/06/accountability-in-ai-promoting-greater-social-trust/ (2018).

Slack, W. V., Hicks, G. P., Reed, C. E. & Van Cura, L. J. A computer-based medical-history system. N. Engl. J. Med. 274, 194–198 (1966).

Beam, A. L. & Kohane, I. S. Big data and machine learning in health care. JAMA 319, 1317–1318 (2018).

Esteva, A., Chou, K. & Yeung, S. et al. Deep learning-enabled medical computer vision. npj Digital Med. 4, 5 (2021).

Titano, J. J., Badgeley, M. & Schefflein, J. et al. Automated deep-neural-network surveillance of cranial images for acute neurologic events. Nat. Med. 24, 1337–1341 (2018).

Campanella, G., Hanna, M. G. & Geneslaw, L. et al. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat. Med. 25, 1301–1309 (2019).

van der Ploeg, T., Austin, P. C. & Steyerberg, E. W. Modern modelling techniques are data hungry: a simulation study for predicting dichotomous endpoints. BMC Med. Res. Methodol. 14, 137 (2014).

Apathy, N. C., Holmgren, A. J. & Adler-Milstein, J. A decade post-HITECH: critical access hospitals have electronic health records but struggle to keep up with other advanced functions. J. Am. Med. Inf. Assoc. 28, 1947–1954 (2021).

Bycroft, C., Freeman, C. & Petkova, D. et al. The UK Biobank resource with deep phenotyping and genomic data. Nature 562, 203–209 (2018).

Pew Research Center. About one-in-five Americans use a smart watch or fitness tracker. PEW Research https://www.pewresearch.org/fact-tank/2020/01/09/about-one-in-five-americans-use-a-smart-watch-or-fitness-tracker (2020).

Christodoulou, E., Ma, J., Collins, G. S., Steyerberg, E. W., Verbakel, J. Y. & Van Calster, B. A systematic review shows no performance benefit of machine learning over logistic regression for clinical prediction models. J. Clin. Epidemiol. 110, 12–22 (2019).

Doshi-Velez, F., Ge, Y. & Kohane, I. Comorbidity clusters in autism spectrum disorders: an electronic health record time-series analysis. Pediatrics 133, e54–e63 (2014).

Singh, K., Choudhry, N. K. & Krumme, A. A. et al. A concept-wide association study to identify potential risk factors for nonadherence among prevalent users of antihypertensives. Pharmacoepidemiol. Drug. Saf. 28, 1299–1308 (2019).

Singh, K., Valley, T. S. & Tang, S. et al. Evaluating a widely implemented proprietary deterioration index model among hospitalized patients with COVID-19. Ann. Am. Thorac. Soc. 18, 1129–1137 (2021).

Escobar, G. J., Liu, V. X. & Kipnis, P. Automated identification of adults at risk for in-hospital clinical deterioration. N. Engl. J. Med. 384, 418–427 (2021).

Tarabichi, Y., Cheng, A. & Bar-Shain, D. et al. Improving timeliness of antibiotic administration using a provider and pharmacist facing sepsis early warning system in the emergency department setting: a randomized controlled quality improvement initiative. Crit. Care Med. https://doi.org/10.1097/ccm.0000000000005267 (2021).

Gargeya, R. & Leng, T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology 124, 962–969 (2017).

Gulshan, V., Peng, L. & Coram, M. et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 316, 2402–2410 (2016).

Rajpurkar, P., Irvin, J. & Ball, R. L. et al. Deep learning for chest radiograph diagnosis: a retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Med. 15, e1002686 (2018).

Liu, Y., Kohlberger, T. & Norouzi, M. et al. Artificial intelligence-based breast cancer nodal metastasis detection: insights into the black box for pathologists. Arch. Pathol. Lab. Med. 143, 859–868 (2019).

Yu, C., Liu, J., Nemati, S. & Yin, G. Reinforcement learning in healthcare: a survey. ACM Comput. Surv. 55, 1 –36 (2021).

Komorowski, M., Celi, L. A., Badawi, O., Gordon, A. C. & Faisal, A. A. The artificial intelligence clinician learns optimal treatment strategies for sepsis in intensive care. Nat. Med. 24, 1716–1720 (2018).

Gottesman, O., Johansson, F. & Komorowski, M. et al. Guidelines for reinforcement learning in healthcare. Nat. Med. 25, 16–18 (2019).

Tanakasempipat, P. Google launches Thai AI project to screen for diabetic eye disease. Reuters https://www.reuters.com/article/us-thailand-google-idUSKBN1OC1N2 (2018).

Steiner, D. F., MacDonald, R. & Liu, Y. et al. Impact of deep learning assistance on the histopathologic review of lymph nodes for metastatic breast cancer. Am. J. Surg. Pathol. 42, 1636–1646 (2018).

Horwitz, L. I., Kuznetsova, M. & Jones, S. A. Creating a learning health system through rapid-cycle, randomized testing. N. Engl. J. Med. 381, 1175–1179 (2019).

Escobar, G. J., Liu, V. X., Schuler, A., Lawson, B., Greene, J. D. & Kipnis, P. Automated identification of adults at risk for in-hospital clinical deterioration. N. Engl. J. Med. 383, 1951–1960 (2020).

Challener, D. W., Prokop, L. J. & Abu-Saleh, O. The proliferation of reports on clinical scoring systems: issues about uptake and clinical utility. JAMA 321, 2405–2406 (2019).

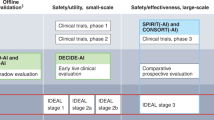

Cruz Rivera, S., Liu, X., Chan, A. W., Denniston, A. K. & Calvert, M. J. Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI extension. Lancet Digital Health 2, e549–e560 (2020).

Liu, X., Cruz Rivera, S., Moher, D., Calvert, M. J. & Denniston, A. K. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Nat. Med. 26, 1364–1374 (2020).

Chen, Y., Huang, S. & Chen, T. et al. Machine learning for prediction and risk stratification of lupus nephritis renal flare. Am. J. Nephrol. 52, 152–160 (2021).

Kashani, K. & Herasevich, V. Sniffing out acute kidney injury in the ICU: do we have the tools? Curr. Opin. Crit. Care 19, 531–536 (2013).

Rahman, M., Shad, F. & Smith, M. C. Acute kidney injury: a guide to diagnosis and management. Am. Fam. Phys. 86, 631–639 (2012).

Lewington, A. J., Cerda, J. & Mehta, R. L. Raising awareness of acute kidney injury: a global perspective of a silent killer. Kidney Int. 84, 457–467 (2013).

Cronin, R. M., VanHouten, J. P. & Siew, E. D. et al. National Veterans Health Administration inpatient risk stratification models for hospital-acquired acute kidney injury. J. Am. Med. Inf. Assoc. 22, 1054–1071 (2015).

Thottakkara, P., Ozrazgat-Baslanti, T. & Hupf, B. B. et al. Application of machine learning techniques to high-dimensional clinical data to forecast postoperative complications. PLoS ONE 11, e0155705 (2016).

Lee, H. C., Yoon, H. K. & Nam, K. et al. Derivation and validation of machine learning approaches to predict acute kidney injury after cardiac surgery. J. Clin. Med. 7, 322 (2018).

Lee, H. C., Yoon, S. B. & Yang, S. M. et al. Prediction of acute kidney injury after liver transplantation: machine learning approaches vs. logistic regression model. Clin. Med. 7, 428 (2018).

Adhikari, L., Ozrazgat-Baslanti, T. & Ruppert, M. et al. Improved predictive models for acute kidney injury with IDEA: intraoperative data embedded analytics. PLoS ONE 14, e0214904 (2019).

Park, N., Kang, E. & Park, M. et al. Predicting acute kidney injury in cancer patients using heterogeneous and irregular data. PLoS ONE 13, e0199839 (2018).

Tran, N. K., Sen, S. & Palmieri, T. L. et al. Artificial intelligence and machine learning for predicting acute kidney injury in severely burned patients: a proof of concept. Burns 45, 1350–1358 (2019).

Li, Y. et al. Early prediction of acute kidney injury in critical care setting using clinical notes. In IEEE Int. Conf. on Bioinformatics and Biomedicine (BIBM) 683–686 (IEEE, 2018).

Flechet, M., Falini, S. & Bonetti, C. et al. Machine learning versus physicians’ prediction of acute kidney injury in critically ill adults: a prospective evaluation of the AKI predictor. Crit. Care 23, 282 (2019).

Sun, M., Baron, J. & Dighe, A. et al. Early prediction of acute kidney injury in critical care setting using clinical notes and structured multivariate physiological measurements. Stud. Health Technol. Inf. 264, 368–372 (2019).

Zhang, Z., Ho, K. M. & Hong, Y. Machine learning for the prediction of volume responsiveness in patients with oliguric acute kidney injury in critical care. Crit. Care 23, 112 (2019).

Zimmerman, L. P., Reyfman, P. A. & Smith, A. D. R. et al. Early prediction of acute kidney injury following ICU admission using a multivariate panel of physiological measurements. BMC Med. Inf. Decis. Mak. 19, 16 (2019).

Chiofolo, C., Chbat, N., Ghosh, E., Eshelman, L. & Kashani, K. Automated continuous acute kidney injury prediction and surveillance: a random forest model. Mayo Clin. Proc. 94, 783–792 (2019).

Rank, N., Pfahringer, B. & Kempfert, J. et al. Deep-learning-based real-time prediction of acute kidney injury outperforms human predictive performance. npj Digital Med. 3, 139 (2020).

Mohamadlou, H., Lynn-Palevsky, A. & Barton, C. et al. Prediction of acute kidney injury with a machine learning algorithm using electronic health record data. Can. J. Kidney Health Dis. 5, 2054358118776326 (2018).

Tomasev, N., Glorot, X. & Rae, J. W. et al. A clinically applicable approach to continuous prediction of future acute kidney injury. Nature 572, 116–119 (2019).

Koyner, J. L., Carey, K. A., Edelson, D. P. & Churpek, M. M. The development of a machine learning inpatient acute kidney injury prediction model. Crit. Care Med. 46, 1070–1077 (2018).

Churpek, M. M., Carey, K. A. & Edelson, D. P. et al. Internal and external validation of a machine learning risk score for acute kidney injury. JAMA Netw. Open 3, e2012892 (2020).

Kate, R. J., Pearce, N., Mazumdar, D. & Nilakantan, V. A continual prediction model for inpatient acute kidney injury. Comput. Biol. Med. 116, 103580 (2020).

Simonov, M., Ugwuowo, U. & Moreira, E. et al. A simple real-time model for predicting acute kidney injury in hospitalized patients in the US: a descriptive modeling study. PLoS Med. 16, e1002861 (2019).

Sabanayagam, C., Xu, D. & Ting, D. S. W. et al. A deep learning algorithm to detect chronic kidney disease from retinal photographs in community-based populations. Lancet Digit. Health 2, e295–e302 (2020).

Kuo, C. C., Chang, C. M. & Liu, K. T. et al. Automation of the kidney function prediction and classification through ultrasound-based kidney imaging using deep learning. npj Digit. Med. 2, 29 (2019).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conf. on Computer Vision And Pattern Recognition 770–778 (IEEE 2016).

Segal, Z., Kalifa, D. & Radinsky, K. et al. Machine learning algorithm for early detection of end-stage renal disease. ACM Digital Library 21, 518 (2020).

Chen, T. & Guestrin, C. XGBoost: a scalable tree boosting system. In Proc. 22nd ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining (KDD’16). 785, 794 (2016).

Kang, M. W., Kim, J. & Kim, D. K. et al. Machine learning algorithm to predict mortality in patients undergoing continuous renal replacement therapy. Crit. Care 24, 42 (2020).

Makino, M., Yoshimoto, R. & Ono, M. et al. Artificial intelligence predicts the progression of diabetic kidney disease using big data machine learning. Sci. Rep. 9, 11862 (2019).

Kitamura, S., Takahashi, K., Sang, Y., Fukushima, K., Tsuji, K. & Wada, J. Deep learning could diagnose diabetic nephropathy with renal pathological immunofluorescent images. Diagnostics 10, 466 (2020).

Santoni, M., Piva, F. & Porta, C. et al. Artificial neural networks as a way to predict future kidney cancer incidence in the United States. Clin. Genitourin. Cancer 19, e84–e91 (2021).

Xi, I. L., Zhao, Y. & Wang, R. et al. Deep learning to distinguish benign from malignant renal lesions based on routine MR imaging. Clin. Cancer Res. 26, 1944–1952 (2020).

Yoo, K. D., Noh, J. & Lee, H. et al. A machine learning approach using survival statistics to predict graft survival in kidney transplant recipients: a multicenter cohort study. Sci. Rep. 7, 8904 (2017).

Loupy, A., Aubert, O. & Orandi, B. J. et al. Prediction system for risk of allograft loss in patients receiving kidney transplants: international derivation and validation study. BMJ 366, l4923 (2019).

Johnson-Mann, C. N., Loftus, T. J. & Bihorac, A. Equity and artificial intelligence in surgical care. JAMA Surg. 156, 509–510 (2021).

US Census Bureau. https://www.census.gov/data/data-tools.html (2021).

US Department of Health and Human Services. https://health.gov/healthypeople/objectives-and-data/data-sources-and-methods/data-sources (2021).

Wilkinson, M. D., Dumontier, M. & Aalbersberg, I. J. J. et al. The FAIR guiding principles for scientific data management and stewardship. Sci. Data 3, 160018–160018 (2016).

Rajkomar, A., Hardt, M., Howell, M. D., Corrado, G. & Chin, M. H. Ensuring fairness in machine learning to advance health equity. Ann. Intern. Med. 169, 866–872 (2018).

Jiang, G. Identifying and correcting label bias in machine learning. In International Conference on Artificial Intelligence and Statistics (PMLR, 2019).

Eneanya, N. D., Boulware, L. E. & Tsai, J. et al. Health inequities and the inappropriate use of race in nephrology. Nat. Rev. Nephrol. 18, 84–94 (2021).

Vyas, D. A., Eisenstein, L. G. & Jones, D. S. Hidden in plain sight — reconsidering the use of race correction in clinical algorithms. N. Engl. J. Med. 383, 874–882 (2020).

Shahian, D. M., Jacobs, J. P. & Badhwar, V. et al. The Society of Thoracic Surgeons 2018 adult cardiac surgery risk models. Part 1 — background, design considerations, and model development. Ann. Thorac. Surg. 105, 1411–1418 (2018).

Tesi, R. J., Deboisblanc, M., Saul, C., Frentz, G. & Etheredge, E. An increased incidence of rejection episodes. One of the causes of worse kidney transplantation survival in black recipients. Arch. Surg. 132, 35–39 (1997).

Rao, P. S. et al. A comprehensive risk quantification score for deceased donor kidneys: the kidney donor risk index. Transplantation 88, 231–236 (2009).

Limou, S., Nelson, G. W., Kopp, J. B. & Winkler, C. A. APOL1 kidney risk alleles: population genetics and disease associations. Adv. Chronic Kidney Dis. 21, 426–433 (2014).

Delgado, C., Baweja, M. & Burrows, N. R. et al. Reassessing the inclusion of race in diagnosing kidney diseases: an interim report from the NKF-ASN task force. Am. J. Kidney Dis. 78, 103–115 (2021).

Inker, L. A., Eneanya, N. D. & Coresh, J. et al. New creatinine- and cystatin c-based equations to estimate GFR without race. N. Engl. J. Med. 385, 1737–1749 (2021).

Delgado, C., Baweja, M. & Crews, D. C. et al. A unifying approach for GFR estimation: recommendations of the NKF-ASN task force on reassessing the inclusion of race in diagnosing kidney disease. Am. J. Kidney Dis. 79, 268–288 e1 (2022).

Hall, Y. N. Social determinants of health: addressing unmet needs in nephrology. Am. J. Kidney Dis. 72, 582–591 (2018).

Patzer, R. E., Amaral, S., Wasse, H., Volkova, N., Kleinbaum, D. & McClellan, W. M. Neighborhood poverty and racial disparities in kidney transplant waitlisting. J. Am. Soc. Nephrol. 20, 1333–1340 (2009).

Volkova, N., McClellan, W. & Klein, M. et al. Neighborhood poverty and racial differences in ESRD incidence. J. Am. Soc. Nephrol. 19, 356–364 (2008).

Grace, B. S., Clayton, P., Cass, A. & McDonald, S. P. Socio-economic status and incidence of renal replacement therapy: a registry study of Australian patients. Nephrol. Dial. Transpl. 27, 4173–4180 (2012).

Edmonds, A., Braveman, P., Arkin, E. & Jutte, D. Making the case for linking community development and health. Robert Wood Johnson Foundation http://www.buildhealthyplaces.org/content/uploads/2015/10/making_the_case_090115.pdf (2017).

Garg, A., Boynton-Jarrett, R. & Dworkin, P. H. Avoiding the unintended consequences of screening for social determinants of health. JAMA 316, 813–814 (2016).

American Medical Association. AMA passes first policy recommendations on augmented intelligence. AMA http://www.ama-assn.org/ama-passes-first-policy-recommendations-augmented-intelligence (2018).

Barocas, S., Hardt, M. & Narayanan, A. Fairness and Machine Learning Limitations and Opportunities (fairmlbooks.org, 2019).

McCradden, M. D., Joshi, S., Mazwi, M. & Anderson, J. A. Ethical limitations of algorithmic fairness solutions in health care machine learning. Lancet Digital Health 2, e221–e223 (2020).

Wolff, R. F., Moons, K. G. M. & Riley, R. D. et al. PROBAST: a tool to assess the risk of bias and applicability of prediction model studies. Ann. Intern. Med. 170, 51–58 (2019).

Peterson, E. D. Machine learning, predictive analytics, and clinical practice: can the past inform the present? JAMA 322, 2283–2284 (2019).

Loftus, T. J., Tighe, P. J. & Filiberto, A. C. et al. Artificial intelligence and surgical decision-making. JAMA Surg. 155, 148–158 (2020).

Stubbs, K., Hinds, P. J. & Wettergreen, D. Autonomy and common ground in human-robot interaction: a field study. IEEE Intell. Syst. 22, 42–50 (2007).

Linegang, M. P., Stoner, H. A. & Patterson, M. J. et al. Human–automation collaboration in dynamic mission planning: a challenge requiring an ecological approach. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 50, 2482–2486 (2006).

Rosenfeld, A., Zemel, R. & Tsotsos, J. K. The elephant in the room. Preprint at arXiv https://doi.org/10.48550/arXiv.1808.03305 (2018).

Miller, T. Explanation in artificial intelligence: insights from the social sciences. Artif. Intell. 267, 1–38 (2019).

Tonekaboni, S., Joshi, S., McCradden, M. D. & Goldenberg, A. What clinicians want: contextualizing explainable machine learning for clinical end use. PMLR https://proceedings.mlr.press/v106/tonekaboni19a.html (2021).

Esteva, A., Kuprel, B. & Novoa, R. A. et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 542, 115–118 (2017).

Gal, Y. & Ghahramani, Z. Dropout as a Bayesian approximation: representing model uncertainty in deep learning. In Proc. 33rd Int. Conf. on Machine Learning (PMLR, 2016).

Prosperi, M., Guo, Y. & Bian, J. Bagged random causal networks for interventional queries on observational biomedical datasets. J. Biomed. Inf. 115, 103689 (2021).

Castro, D. C., Walker, I. & Glocker, B. Causality matters in medical imaging. Nat. Commun. 11, 3673 (2020).

Fleuren, L. M., Thoral, P., Shillan, D., Ercole, A. & Elbers, P. W. G. (Right Data Right Now Collaborators). Machine learning in intensive care medicine: ready for take-off? Intensive Care Med. 46, 1486–1488 (2020).

Shortliffe, E. H. & Sepulveda, M. J. Clinical decision support in the era of artificial intelligence. JAMA 320, 2199–2200 (2018).

Wexler, J., Pushkarna, M., Bolukbasi, T., Wattenberg, M., Viegas, F. & Wilson, J. The what-if tool: interactive probing of machine learning models. IEEE Trans. Vis. Comput. Graph. 26, 56–65 (2020).

Birkhead, G. S., Klompas, M. & Shah, N. R. Uses of electronic health records for public health surveillance to advance public health. Annu. Rev. Public Health 36, 345–359 (2015).

Adler-Milstein, J., Holmgren, A. J., Kralovec, P., Worzala, C., Searcy, T. & Patel, V. Electronic health record adoption in US hospitals: the emergence of a digital “advanced use” divide. J. Am. Med. Inf. Assoc. 24, 1142–1148 (2017).

Stanford Medicine. Stanford Medicine 2017 Health Trends Report: harnessing the power of data in health. Stanford http://med.stanford.edu/content/dam/sm/sm-news/documents/StanfordMedicineHealthTrendsWhitePaper2017.pdf (2017).

Leeds, I. L., Rosenblum, A. J. & Wise, P. E. et al. Eye of the beholder: risk calculators and barriers to adoption in surgical trainees. Surgery 164, 1117–1123 (2018).

Morse, K. E., Bagley, S. C. & Shah, N. H. Estimate the hidden deployment cost of predictive models to improve patient care. Nat. Med. 26, 18–19 (2020).

Blumenthal-Barby, J. S. & Krieger, H. Cognitive biases and heuristics in medical decision making: a critical review using a systematic search strategy. Med. Decis. Mak. 35, 539–557 (2015).

Ludolph, R. & Schulz, P. J. Debiasing health-related judgments and decision making: a systematic review. Med. Decis. Mak. 38, 3–13 (2017).

Goldenson, R. M. The Encyclopedia of Human Behavior; Psychology, Psychiatry, and Mental Health 1st edn (Doubleday, 1970).

Classen, D. C., Pestotnik, S. L., Evans, R. S., Lloyd, J. F. & Burke, J. P. Adverse drug events in hospitalized patients. Excess length of stay, extra costs, and attributable mortality. JAMA 277, 301–306 (1997).

Bates, D. W., Cullen, D. J. & Laird, N. et al. Incidence of adverse drug events and potential adverse drug events. Implications for prevention. ADE Prevention Study Group. JAMA 274, 29–34 (1995).

Sanfey, A. G., Rilling, J. K., Aronson, J. A., Nystrom, L. E. & Cohen, J. D. The neural basis of economic decision-making in the Ultimatum Game. Science 300, 1755–1758 (2003).

Kahneman, D. Thinking, Fast and Slow 499 (Farrar, Straus & Giroux, 2013).

Van den Bruel, A., Thompson, M., Buntinx, F. & Mant, D. Clinicians’ gut feeling about serious infections in children: observational study. BMJ 345, e6144 (2012).

Van den Bruel, A., Haj-Hassan, T., Thompson, M., Buntinx, F. & Mant, D. (European Research Network on Recognising Serious Infection). Diagnostic value of clinical features at presentation to identify serious infection in children in developed countries: a systematic review. Lancet 375, 834–845 (2010).

Bechara, A., Damasio, H., Tranel, D. & Damasio, A. R. Deciding advantageously before knowing the advantageous strategy. Science 275, 1293–1295 (1997).

Theodorou, A. & Dignum, V. Towards ethical and socio-legal governance in AI. Nat. Mach. Intell. 2, 10–12 (2020).

Hamoni, R., Lin, O., Matthews, M. & Taillon, P. J. Building Canada’s future AI workforce: in the brave new (post-pandemic) world. ICTC/CTIC https://www.ictc-ctic.ca/wp-content/uploads/2021/03/ICTC_Report_Building_ENG.pdf (2021).

Caminiti, S. AT&T’s $1 billion gambit: retraining nearly half its workforce for jobs of the future. CNBC https://www.cnbc.com/2018/03/13/atts-1-billion-gambit-retraining-nearly-half-its-workforce.html (2021).

Moritz, B. Preparing everyone, everywhere, for the digital world. PriceWaterhouseCoopers https://www.pwc.com/gx/en/issues/upskilling/everyone-digital-world.html?WT.mc_id=CT1-PL50-DM2-TR1-LS40-ND30-TTA3-CN_JoeAtkinsonDigitalTransformationBlog-&eq=CT1-PL50-DM2-CN_JoeAtkinsonDigitalTransformationBlog (2021).

Amazon. Our upskilling 2025 programs. Amazon https://www.aboutamazon.com/news/workplace/our-upskilling-2025-programs (2021).

Smith, B. Microsoft launches initiative to help 25 million people worldwide acquire the digital skills needed in a COVID-19 economy. Microsoft https://blogs.microsoft.com/blog/2020/06/30/microsoft-launches-initiative-to-help-25-million-people-worldwide-acquire-the-digital-skills-needed-in-a-covid-19-economy/ (2021).

Pfeifer, C. M. A progressive three-phase innovation to medical education in the United States. Med. Educ. Online 23, 1427988 (2018).

Nasca, T. J., Philibert, I., Brigham, T. & Flynn, T. C. The next GME accreditation system–rationale and benefits. N. Engl. J. Med. 366, 1051–1056 (2012).

Wartman, S. A. & Combs, C. D. Medical education must move from the information age to the age of artificial intelligence. Acad. Med. 93, 1107–1109 (2018).

Paranjape, K., Schinkel, M., Nannan Panday, R., Car, J. & Nanayakkara, P. Introducing artificial intelligence training in medical education. JMIR Med. Educ. 5, e16048 (2019).

Wald, H. S., George, P., Reis, S. P. & Taylor, J. S. Electronic health record training in undergraduate medical education: bridging theory to practice with curricula for empowering patient- and relationship-centered care in the computerized setting. Acad. Med. 89, 380–386 (2014).

Acknowledgements

T.J.L. was supported by the National Institute of General Medical Sciences (NIGMS) of the NIH under Award Number K23 GM140268. T.O.-B. was supported by grants K01 DK120784, R01 DK123078 and R01 DK121730 from the National Institute of Diabetes and Digestive and Kidney Diseases (NIDDK), grant R01 GM110240 from NIGMS, grant R01 EB029699 from the National Institute of Biomedical Imaging and Bioengineering (NIBIB) and grant R01 NS120924 from the National Institute of Neurological Disorders and Stroke (NINDS). B.S.G. was supported by grant R01MH121923 from the National Institute of Mental Health (NIMH), grants R01AG059319 and R01AG058469 from the National Institute on Aging (NIA) and grant 1R01HG011407-01A1 from the National Human Genome Research Institute (NHGRI). G.N.N. is supported by grants R01 DK127139 from NIDDK and R01 HL155915 from the National Heart, Lung, and Blood Institute (NHLBI). L.C. was supported by grant K23 DK124645 from the NIDDK. A.B. was supported by grant R01 GM110240 from NIGMS, grants R01 EB029699 and R21 EB027344 from NIBIB, grant R01 NS120924 from NINDS and by grant R01 DK121730 from NIDDK. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Author information

Contributions

The authors contributed equally to all aspects of the article.

Ethics declarations

Competing interests

B.S.G. has received consulting fees from Anthem AI and consulting and advisory fees from Prometheus Biosciences. K.S. has received grant funding from Blue Cross Blue Shield of Michigan and Teva Pharmaceuticals for unrelated work, and serves on a scientific advisory board for Flatiron Health. G.N.N. has received consulting fees from AstraZeneca, Reata, BioVie, Siemens Healthineers and GLG Consulting; grant funding from Goldfinch Bio and Renalytix; financial compensation as a scientific board member and adviser to Renalytix; owns equity in Renalytix and Pensieve Health as a cofounder and is on the advisory board of Neurona Health. The other authors declare no competing interests.

Peer review

Peer review information

Nature Reviews Nephrology thanks Laura Barisoni and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Related links

Cassandra: https://cassandra.apache.org/_/index.html

FAIR principles: https://www.go-fair.org/fair-principles/

National Patient-Centered Clinical Research Network: https://pcornet.org

Observational Medical Outcomes Partnership: https://www.ohdsi.org/

Spark Streaming: https://spark.apache.org/streaming/

What-If Tool: https://pair-code.github.io/what-if-tool/

Glossary

- Nodes

-

Computational units in a neural network. Each node has a weight that is influenced by other nodes and affects predictions made by the neural network.

- Computer vision

-

An artificial intelligence subfield in which deep models use pixels from images and videos as inputs.

- Convolutional neural networks

-

(CNNs). A CNN is a type of neural network that assembles patterns of increasing complexity to avoid overfitting (fitting too closely on inputs), which can compromise predictive performance when the model is applied to new, previously unseen data. CNNs are frequently used in imaging applications.

- Loss function

-

A mathematical function that calculates errors. Artificial intelligence algorithms are typically designed to minimize loss as the algorithm learns associations between input variables and outcomes.

- Deep neural networks

-

Neural networks with several layers of nodes between the input layer and final output layer.

- Generative adversarial neural networks

-

Two neural networks that compete with and learn from one another, offering the ability to generate synthetic data.

- Hierarchical clustering

-

An algorithm that forms groups of elements that are similar to one another and different from others by iteratively merging points according to pairwise distances.

- Random forest

-

A type of artificial intelligence model that assembles outputs from a set of decision trees and uses the majority vote or average prediction of the individual trees to produce a final prediction.

- Gradient boosting

-

An artificial intelligence (AI) technique that iteratively improves predictive performance by ensuring that each new iteration of the AI model, when combined with the prior iterations, offers a performance improvement.

- Sensitivity

-

The true positive rate; the percentage of patients with a disease for whom a model or test predicted a positive result, also known as recall. Sensitivity indicates the ability of a model or test to identify subjects who have a condition.

- Specificity

-

The true negative rate; the percentage of patients without a disease for whom a model or test predicted a negative result. Specificity indicates the ability of a model or test to identify subjects who do not have a condition.

- Positive predictive value

-

The probability that a positive prediction made by a model or test is correct according to the gold standard or ground truth. Positive predictive value is also known as precision.

- Negative predictive value

-

The probability that a negative prediction made by a model or test is correct according to the gold standard or ground truth.
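Sensitivity, specificity, positive predictive value and negative predictive value can all be computed from the four cells of a confusion matrix. The sketch below assumes binary labels coded as 1 (condition present) and 0 (condition absent); the function names and the synthetic labels are illustrative only.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives, true negatives and false negatives."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 0 and pred == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def safe_ratio(numerator, denominator):
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None

def diagnostic_metrics(y_true, y_pred):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "sensitivity": safe_ratio(tp, tp + fn),  # true positive rate (recall)
        "specificity": safe_ratio(tn, tn + fp),  # true negative rate
        "ppv": safe_ratio(tp, tp + fp),          # positive predictive value (precision)
        "npv": safe_ratio(tn, tn + fn),          # negative predictive value
    }

# Synthetic example: 4 patients with the condition, 6 without.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(diagnostic_metrics(y_true, y_pred))
```

Sensitivity and specificity are properties of the model or test itself, whereas the predictive values also depend on how common the condition is in the population being tested.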

- k-nearest-neighbours

-

A classification algorithm that makes predictions based on feature-space similarity to k nearby labelled instances, where k is the number of neighbours to consider and is assigned by the investigator.
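A minimal sketch of this algorithm, assuming Euclidean distance and a simple majority vote among the k closest labelled instances, is shown below. The function name, the two-dimensional features and the class labels are synthetic and purely illustrative.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest labelled points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Synthetic labelled instances in a two-dimensional feature space.
train_points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (3.0, 3.2), (3.1, 2.9), (2.8, 3.0)]
train_labels = ["class A", "class A", "class A", "class B", "class B", "class B"]

print(knn_predict(train_points, train_labels, query=(1.1, 1.0), k=3))
print(knn_predict(train_points, train_labels, query=(3.0, 3.0), k=3))
```

Because predictions depend directly on distances, features are usually rescaled to comparable ranges before this kind of algorithm is applied, and an odd k is often chosen for two-class problems to avoid tied votes.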

- Residual neural network (ResNet) CNN architecture

-

A form of convolutional neural network that uses skip connections or shortcuts to jump over some layers, which simplifies the network and accelerates learning.

- F1 score

-

A measurement of accuracy calculated as the harmonic mean of precision, which is also known as positive predictive value, and recall, which is also known as sensitivity.
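The F1 score is conventionally computed as the harmonic mean of precision and recall, F1 = 2 × precision × recall / (precision + recall). The short sketch below works from raw counts of true positives, false positives and false negatives; the function name and the example counts are illustrative assumptions.

```python
def f1_score(tp, fp, fn):
    """F1 score from counts of true positives, false positives and false negatives."""
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero or undefined
    precision = tp / (tp + fp)  # positive predictive value
    recall = tp / (tp + fn)     # sensitivity
    return 2 * precision * recall / (precision + recall)

# Balanced precision and recall (both 0.75).
print(f1_score(tp=3, fp=1, fn=1))
# Higher precision (0.8) than recall (0.5): the F1 score sits nearer the lower value.
print(f1_score(tp=8, fp=2, fn=8))
```

Because the harmonic mean is pulled towards the smaller of the two values, a model cannot achieve a high F1 score by excelling at precision or recall alone.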

- Convolutional autoencoders

-

Convolutional neural network variants that learn which filters should be used to detect features of interest among model inputs, which are usually imaging data.

- Topic modelling

-

An artificial intelligence technique for detecting groups of text data that are similar to one another and different from others.

- Ensemble model

-

A model that assembles outputs from multiple algorithms to achieve predictive performance that is greater than that of individual algorithms.

- FAIR principles

-

The findability, accessibility, interoperability and reuse (FAIR) principles of digital assets for scientific investigation are intended to optimize the reuse of data.

- Prediction model Risk Of Bias ASsessment Tool

-

(PROBAST). An instrument for assessing the risk of bias associated with a prediction model that provides diagnostic or prognostic information.

- Observational Medical Outcomes Partnership

-

(OMOP). An organization that designed a common data model that standardizes the way medical information is captured across healthcare institutions and provides metadata tables describing relationships among data elements.

- National Patient-Centered Clinical Research Network

-

(PCORnet). An organization that designed a common data model that standardizes the way medical information is captured across healthcare institutions and is widely adopted by institutions participating in the Patient-Centered Outcomes Research Institute.

- Predictive Model Markup Language

-

An XML-based markup language that standardizes methods for describing prediction models, which may facilitate sharing models among investigator groups.

- Federated learning

-

A technique for generating a central artificial intelligence (AI) model that is built with information from several local AI models that train on local data. This approach has the potential advantage of training AI models on data from multiple centres without sharing data across centres, thereby promoting data security and privacy.
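The aggregation step can be sketched as follows. This simplified illustration assumes that each centre fits a small linear model on its own data and shares only the fitted parameters and its sample size, and that the central model is a sample-size-weighted average of those parameters. Practical federated learning typically repeats this exchange over many rounds and adds further privacy safeguards. All function names and data here are hypothetical.

```python
def local_fit(xs, ys):
    """Least-squares slope and intercept fitted on one centre's local data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def federated_average(site_params, site_sizes):
    """Combine local parameters into central parameters, weighted by sample size."""
    total = sum(site_sizes)
    n_params = len(site_params[0])
    return tuple(
        sum(params[i] * size for params, size in zip(site_params, site_sizes)) / total
        for i in range(n_params)
    )

# Synthetic data held separately at two centres; raw data never leave a centre.
site_a = ([0, 1, 2], [1, 3, 5])          # follows y = 2x + 1
site_b = ([0, 2, 4, 6], [2, 6, 10, 14])  # follows y = 2x + 2

params = [local_fit(*site_a), local_fit(*site_b)]
sizes = [len(site_a[0]), len(site_b[0])]
print(federated_average(params, sizes))
```

Only the parameter tuples and sample sizes cross institutional boundaries in this sketch, which is the property that makes the approach attractive for multicentre clinical data.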

Cite this article

Loftus, T.J., Shickel, B., Ozrazgat-Baslanti, T. et al. Artificial intelligence-enabled decision support in nephrology. Nat Rev Nephrol 18, 452–465 (2022). https://doi.org/10.1038/s41581-022-00562-3

DOI: https://doi.org/10.1038/s41581-022-00562-3

This article is cited by

- The dawn of multimodal artificial intelligence in nephrology. Nature Reviews Nephrology (2024)

- Predicting in-hospital outcomes of patients with acute kidney injury. Nature Communications (2023)

- A machine learning model identifies patients in need of autoimmune disease testing using electronic health records. Nature Communications (2023)