Abstract

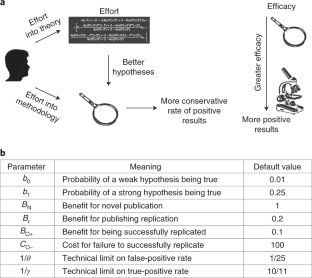

Scientists in some fields are concerned that many published results are false. Recent models predict selection for false positives as the inevitable result of pressure to publish, even when scientists are penalized for publications that fail to replicate. We model the cultural evolution of research practices when laboratories are allowed to expend effort on theory, enabling them, at a cost, to identify hypotheses that are more likely to be true, before empirical testing. Theory can restore high effort in research practice and suppress false positives to a technical minimum, even without replication. The mere ability to choose between two sets of hypotheses, one with greater prior chance of being correct, promotes better science than can be achieved with effortless access to the set of stronger hypotheses. Combining theory and replication can have synergistic effects. On the basis of our analysis, we propose four simple recommendations to promote good science.
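As a rough illustration of why testing hypotheses with a greater prior chance of being correct suppresses false positives, the standard Bayesian calculation of the share of positive results that are false can be sketched as follows. This is not the authors' evolutionary model; the function name and the values chosen for `prior`, `power` and `alpha` are assumptions made purely for illustration.

```python
def false_discovery_rate(prior: float, power: float, alpha: float) -> float:
    """Share of positive results that are false.

    prior: probability that a tested hypothesis is true (illustrative)
    power: probability of a positive result when the hypothesis is true
    alpha: probability of a positive result when the hypothesis is false
    """
    true_positives = prior * power
    false_positives = (1.0 - prior) * alpha
    return false_positives / (true_positives + false_positives)


# Hypothetical values: a weak set of hypotheses versus a stronger,
# theory-informed set, tested with the same power and alpha.
weak = false_discovery_rate(prior=0.1, power=0.8, alpha=0.05)
strong = false_discovery_rate(prior=0.5, power=0.8, alpha=0.05)

print(f"weak priors:   {weak:.3f}")
print(f"strong priors: {strong:.3f}")
```

Under these assumed numbers, raising the prior lowers the false share of positives even though the empirical test itself is unchanged; the cost of identifying the stronger hypotheses, which is central to the paper's model, is not represented here.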

Data availability

All scripts and data to reproduce the results are available at https://doi.org/10.5281/zenodo.4616768.

Code availability

All scripts necessary to reproduce the results are available at https://doi.org/10.5281/zenodo.4616768.

Acknowledgements

The authors thank P. Smaldino for constructive feedback. The authors received no specific funding for this work.

Author information

Contributions

A.J.S. and J.B.P. conceived the project and developed the model. A.J.S. ran the simulations and analysed the model with input from J.B.P. A.J.S. and J.B.P. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Human Behaviour thanks Timothy Parker, Jeffrey Schank and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Figs. 1–12 and Supplementary Discussion.

About this article

Cite this article

Stewart, A.J., Plotkin, J.B. The natural selection of good science. Nat Hum Behav 5, 1510–1518 (2021). https://doi.org/10.1038/s41562-021-01111-x

DOI: https://doi.org/10.1038/s41562-021-01111-x
