Abstract

Tensor networks permit computational and entanglement resources to be concentrated in interesting regions of Hilbert space. Implemented on NISQ machines, they allow simulation of quantum systems that are much larger than the computational machine itself. This is achieved by parallelising the quantum simulation. Here, we demonstrate this in the simplest case: an infinite, translationally invariant quantum spin chain. We provide Cirq and Qiskit code that translates infinite, translationally invariant matrix product state (iMPS) algorithms to finite-depth quantum circuit machines, allowing the representation, optimisation and evolution of arbitrary one-dimensional systems. Illustrative simulated output of these codes for achievable circuit sizes is given.

Introduction

The insight underpinning Steve White’s formulation of the density matrix renormalisation group (DMRG) is that entanglement is the correct resource to focus upon to formulate accurate, approximate descriptions of large quantum systems1. Later understood as an algorithm to optimise a matrix product state (MPS)2, this notion underpins the use of tensor networks as a variational parametrisation of wavefunctions with quantified entanglement resources. Such approaches allow one to concentrate computational resources in the appropriate region of Hilbert space and provide an effective and universal way to simulate quantum systems3,4. They also provide an effective framework to distribute entanglement resources in simulation on noisy intermediate-scale quantum (NISQ) computers.

Quantum computers such as those of Google, Rigetti, IBM and others implement finite-depth quantum circuits with controllable local two-qubit unitary gates. Innovations for quantum simulation include using these circuits as variational wavefunctions5, optimising them stochastically or by phase estimation6, and evolving them either by accurate Trotterisation of the evolution operator7,8,9 or variationally10. Currently available NISQ devices are limited by gate fidelity and the resultant restriction of available entanglement resources. Since the finite-depth quantum circuit may be equivalently described as a tensor network11, tensor networks provide a convenient framework with which to distribute entanglement to the useful regions of Hilbert space and to make efficient use of this relatively scarce resource.

We dub the implementation of a tensor network on such a NISQ device a Quantum tensor network. There are several advantages to this framework. It fits directly into a broader ecosystem of classical simulation of quantum systems. Indeed, because it is based upon the manipulation of explicitly unitary elements, the quantum circuit provides perhaps the most natural realisation of tensor networks. Canonicalisation at each step in a classical tensor network calculation amounts to reducing the tensors to isometries—a step that is not required in an explicitly unitary realisation. Moreover, the remaining elements of unitaries parametrise the tangent space of the variational manifold12,13.

Here we demonstrate that quantum tensor networks can be used to parallelise quantum simulation of systems that are much larger than available NISQ machines14,15,16. Central to this is dividing the quantum system into a number of sub-elements that are weakly entangled and can be simulated in parallel on different circuits. The influence of the different regions of the system upon one another can be summarised by an effective state on a much smaller number of quantum bits. We provide Cirq and Qiskit code for the simplest class of examples—infinite, translationally invariant quantum spin chains. This is a direct translation (mutatis mutandis) of iMPS algorithms to quantum circuit machines. The remarkably simple circuits revealed below allow the representation of an infinite quantum state, and its optimisation and real-time evolution for a given Hamiltonian.

Results

Parallel quantum simulation across weakly-entangled cuts

To parallelise our simulation on a small NISQ machine, we first identify partitions of the system where the effect of one partition upon the other can be summarised by a small amount of information. This is achieved by making Schmidt decompositions across the cut: \(\left|\psi \right\rangle =\mathop{\sum }\nolimits_{\alpha = 1}^{D}{\lambda }^{\alpha }\left|{\phi }_{L}^{\alpha }\right\rangle \left|{\phi }_{R}^{\alpha }\right\rangle ,\) where \(\left|{\phi }_{L}^{\alpha }\right\rangle\) are an orthonormal set of states to the left of the cut and \(\left|{\phi }_{R}^{\alpha }\right\rangle\) the same on the right. The \({\lambda }^{\alpha }\) are known as the Schmidt coefficients and D the Schmidt rank or bond order. Retaining \({\lambda }^{\alpha }\) only above some threshold value provides a way to compress representations of a quantum state; the MPS construction can be obtained by applying this procedure sequentially along a spin chain4.
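As an illustration of the decomposition above, the following is a minimal classical sketch, assuming a state small enough to store as a dense vector. The function name `schmidt_decompose` and its `threshold` argument are hypothetical and are not taken from the accompanying code; the sketch uses the standard fact that the singular value decomposition of the reshaped state vector gives the Schmidt coefficients.

```python
import numpy as np

def schmidt_decompose(psi, dim_left, dim_right, threshold=1e-12):
    """Schmidt-decompose a bipartite pure state, keeping coefficients above `threshold`."""
    # Reshape the state vector into a (left x right) matrix; its SVD is the Schmidt decomposition.
    matrix = np.asarray(psi, dtype=complex).reshape(dim_left, dim_right)
    left, lam, right = np.linalg.svd(matrix, full_matrices=False)
    keep = lam > threshold
    # Columns of `left` and rows of `right` are the orthonormal states on each side of the cut.
    return lam[keep], left[:, keep], right[keep, :]

# Two qubits in a Bell state: Schmidt rank D = 2, both coefficients equal to 1/sqrt(2).
bell = np.array([1, 0, 0, 1]) / np.sqrt(2)
lam_bell, left_bell, right_bell = schmidt_decompose(bell, 2, 2)

# The product state |0>|+> has Schmidt rank D = 1.
product = np.kron([1, 0], np.array([1, 1]) / np.sqrt(2))
lam_prod, _, _ = schmidt_decompose(product, 2, 2)

# Rebuilding the state from the retained terms recovers it when nothing was discarded.
rebuilt = ((left_bell * lam_bell) @ right_bell).reshape(-1)
```

Raising `threshold` discards small coefficients and so compresses the state, at the cost of a truncation error set by the discarded weight.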

If an observation is made on the right-hand-side of such a cut, the effect of the quantum state on the left upon the observation can be summarised by just D variables corresponding to the Schmidt coefficients. This same effect can be achieved by an effective state on a spin chain of length \({{\mathrm{log}}\,}_{2}D\) (see Fig. 1), which can be parametrised on the quantum circuit by an \(SU({D}^{2})\) unitary \({V}_{L}\). This encodes both the Schmidt coefficients \({\lambda }^{\alpha }\) and the orthonormal states \(\left|{\phi }_{L}^{\alpha }\right\rangle\). The latter does not contribute to observables on the right and so in principle, \({V}_{L}\) can be parametrised by just D variational parameters. The precise numerical values must be determined by solving a quantum mechanical problem on the left of the system. Similarly, for observations made to the left of the cut, the effect of the right-hand side can be summarised by a unitary \({V}_{R}\).

This weak entanglement allows parallel simulation of the two partitions of the system. The expectation of an operator located to the right of the partition can be evaluated by replacing the state on the left by a state over far fewer spins (the number determined by the entanglement across the cut). The numerical values of correlations in this smaller representation of the left are determined by quantum effects in the full left-hand system and can be computed in parallel and iterated to consistency.

This gives a prescription for parallel quantum simulation. Calculations of the quantum wave function to the left and right of the cut can be carried out on different quantum circuits or sequentially on the same circuit. The effects of the left partition upon the right partition and vice versa, through the environment unitaries \({V}_{L}\) and \({V}_{R}\), are iterated to consistency. At each stage of this iteration, measurements must be performed in order to determine \({V}_{L/R}\). The small Schmidt rank of the cut reduces the computational complexity of this process: to \({\mathcal{O}}({\rm{poly}}(D))\) if we were to do full state tomography, but with more sophisticated methods even to \({\mathcal{O}}({\mathrm{log}}\,(D))\), as in the example in the following section.

There are many physical situations in which this parallelisation might be useful. For example, large organic molecules that have localised chemical activity—this activity may be modulated or tuned by the surrounding parts of the molecule and the interplay of these effects could be calculated in parallel. In the following, inspired by iMPS tensor networks, we give quantum circuits that embody these ideas.

Parallel simulation with quantum tensor networks

The translationally invariant MPS gives an approximate representation of a translationally invariant spin 1/2 state as

\[\left|\psi \right\rangle =\sum_{\{{\sigma }_{n}\}}\sum_{\{{i}_{n}\}}\cdots {A}_{{i}_{n}{i}_{n+1}}^{{\sigma }_{n}}{A}_{{i}_{n+1}{i}_{n+2}}^{{\sigma }_{n+1}}\cdots \left|\cdots {\sigma }_{n}{\sigma }_{n+1}\cdots \right\rangle ,\]

where n labels the lattice site, \({\sigma }_{n}\) labels the spin 1/2 basis states on the nth site and \({i}_{n}\) are auxiliary tensor indices that run from 1 to the bond order D. The tensors \({A}_{ij}^{\sigma }\) are the same on every site, reflecting the translational invariance. These states can be mapped to quantum circuits by taking the MPS tensors in isometric form and identifying them with the circuit unitaries as described in the “Methods” section. A variety of techniques have been developed for the classical manipulation of these states for quantum simulation3,17,18. Here we confine ourselves to discussing the quantum circuit realisation. The Supplementary Material gives a detailed summary of the connection between the classical MPSs and their quantum circuit realisation.

Representing the state

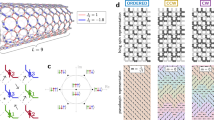

A translationally invariant, spin 1/2 MPS state of bond order \(D={2}^{N}\) can be represented by the infinite circuit shown in Fig. 2a. Expectations of local operators in this state can be evaluated by the finite circuit shown in Fig. 2b. The effect of contracting the infinite circuit to the left of the operator is trivial due to the unitarity of U ∈ SU(2D) (which automatically encodes the left canonical form of the related MPS tensor). The contraction to the right is described by the tensor \(V\in SU({D}^{2})\), which encodes an effective state over \(N={{\mathrm{log}}\,}_{2}D\) spins and their entanglement with the remaining system to the left-hand side. This unitary is determined self-consistently from U by the circuit shown in Fig. 2c. As demonstrated in ref. 4, an MPS in this form can be constructed from any state by a sequence of Schmidt decompositions running from left to right. This guarantees the existence of the isometric MPS representation and the quantum circuit realisation of it. The operation of such a circuit at D = 2 was demonstrated in ref. 19 on an IBM quantum circuit, where analytic forms were known for both U and V along a line through the phase diagram of a model with topological phase transition. In general, V ≡ V(U) is not known and must be solved following Fig. 2c.
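The self-consistent determination of the environment can be emulated classically. The sketch below is not the Cirq or Qiskit implementation; it assumes a convention in which the MPS tensor is read off from the first D columns of U, and it finds the reduced density matrix r encoded by V as the fixed point of the map \(r\mapsto {\sum }_{\sigma }{A}^{\sigma }r{({A}^{\sigma })}^{\dagger }\) by power iteration. All function names are hypothetical.

```python
import numpy as np

def random_unitary(dim, seed=0):
    """Random unitary from the QR decomposition of a complex Gaussian matrix."""
    rng = np.random.default_rng(seed)
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, _ = np.linalg.qr(g)
    return q

def mps_tensor_from_unitary(u, d=2):
    """Assumed convention: A[s] is the s-th D x D block of the first D columns of U."""
    bond = u.shape[0] // d
    return u[:, :bond].reshape(d, bond, bond)

def right_environment(a, tol=1e-12, max_iter=10000):
    """Power iteration for the fixed point r = sum_s A[s] r A[s]^dagger."""
    bond = a.shape[1]
    r = np.eye(bond, dtype=complex) / bond  # start from the maximally mixed state
    for _ in range(max_iter):
        r_new = sum(a_s @ r @ a_s.conj().T for a_s in a)
        if np.linalg.norm(r_new - r) < tol:
            return r_new
        r = r_new
    return r

# Bond order D = 2 and spin 1/2 (d = 2), so U is a 4 x 4 unitary.
a = mps_tensor_from_unitary(random_unitary(4))
r = right_environment(a)

# Unitarity of U makes the contraction to the left trivial (left canonical form).
left_check = sum(a_s.conj().T @ a_s for a_s in a)
```

Any unitary V that prepares a purification of `r` from the reference state would then serve as the environment in this convention; on hardware the same fixed point is found by measurement rather than by matrix multiplication.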

a An infinite depth and width quantum circuit representing a translationally invariant state. U ∈ SU(dD) with d the local Hilbert space dimension and \(D={2}^{N}\) the bond order. d = 2 for spin 1/2 and is used exclusively throughout this paper. In these illustrations D = 4. The circuit acts upon a reference state \(\left|000\ldots \right\rangle\) at the left of the figure with unitary operators applied sequentially reading left to right. b Local measurements on this translationally invariant state can be reproduced exactly by the finite circuit shown. The reduced form takes advantage of the unitarity of U, due to which sites to the left of the observable do not contribute. The environment unitary \(V\equiv V(U)\in SU({D}^{2})\) summarises the effect of sites to the right of the observable and describes an effective state over \(N={{\mathrm{log}}\,}_{2}D\) spins. c The environment unitary V(U) is the solution of the fixed point equation shown. This equation is to be interpreted as equality of the reduced density matrices implied by the free qubit lines. We show in the “Methods” section how to implement this using swap gates. d A shallow circuit representation of the D = 4 states following ref. 22. Such circuits have been shown to have high fidelity with states obtained in Hamiltonian evolution and are exponentially quicker to contract on a quantum circuit than classically.

Optimising the state

We can find the ground state and the corresponding energy density of translationally invariant Hamiltonians by minimising the expectation value of the energy. The algorithm mirrors the variational quantum eigensolver. The expectation of the local Hamiltonian is found by measuring the corresponding Pauli strings on the physical qubits (see Fig. 2b). The result can then be minimised as a function of the ansatz parameters. Updates must be interleaved with updates to the environment, V, such that we optimise over valid translationally invariant states.
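The quantity that the circuit of Fig. 2b estimates from Pauli-string measurements can be written down classically for small D. The following sketch assumes a left-canonical tensor `a[s, i, j]` and the transverse field Ising energy density \(\langle {\hat{\sigma }}_{n}^{z}{\hat{\sigma }}_{n+1}^{z}+\lambda {\hat{\sigma }}_{n}^{x}\rangle\); the function names and the fixed iteration count are illustrative choices, not the authors' implementation.

```python
import numpy as np

Z = np.diag([1.0, -1.0])
X = np.array([[0.0, 1.0], [1.0, 0.0]])
I2 = np.eye(2)

def right_environment(a, n_iter=2000):
    """Fixed point of r -> sum_s A[s] r A[s]^dagger, found by repeated application."""
    bond = a.shape[1]
    r = np.eye(bond, dtype=complex) / bond
    for _ in range(n_iter):
        r = sum(a_s @ r @ a_s.conj().T for a_s in a)
    return r

def energy_density(a, lam):
    """<Z_n Z_{n+1} + lam X_n> for a left-canonical iMPS tensor a[s, i, j]."""
    bond = a.shape[1]
    r = right_environment(a)
    h = np.kron(Z, Z) + lam * np.kron(X, I2)  # two-site term, rows and columns indexed by (s1 s2)
    # Two-site block M[(s1 s2)] = A[s1] @ A[s2].
    m = np.einsum('aij,bjk->abik', a, a).reshape(4, bond, bond)
    e = 0.0
    for sp in range(4):
        for s in range(4):
            # The left environment is the identity in left canonical form.
            e += h[sp, s] * np.trace(m[sp].conj().T @ m[s] @ r)
    return e.real

# Bond order D = 1 product states as simple checks.
all_up = np.array([1.0, 0.0]).reshape(2, 1, 1)                  # |000...>
all_minus = (np.array([1.0, -1.0]) / np.sqrt(2)).reshape(2, 1, 1)  # |---...>
e_up = energy_density(all_up, 0.5)        # <ZZ> = 1, <X> = 0
e_minus = energy_density(all_minus, 0.5)  # <ZZ> = 0, <X> = -1
```

In a variational loop this value would be minimised over the parameters of U, with the environment recomputed whenever U changes, which mirrors the interleaved updates described above.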

Evolving the state

Perhaps the most compelling feature of this implementation is the ease with which time-evolution can be achieved. The simple circuit shown in Fig. 3a returns the unitary \(U^{\prime} \equiv U(t+{\mathrm{dt}})\) that updates the state encoded by U(t) to a time t + dt under evolution with the Hamiltonian \({\mathcal{H}}\). The first variation of this circuit with respect to \(U^{\prime}\) returns the time-dependent variational principle for iMPS in the form first presented by Haegeman et al. in ref. 12. The equivalence uses the automatic encoding of the gauge-fixing of the state to the canonical form, as well as encoding of the tangent space and its gauge fixing (see “Methods” section and additional notes in Supplementary Materials). As in the determination of the best ground-state approximation above, the update involves two nested loops: one to find the update \(U^{\prime}\) and one to find the environment tensors \(L\equiv L(U,U^{\prime} )\) and \(R\equiv R(U,U^{\prime} )\). Both environments are required in this case, as the circuit corresponds to the overlap of two different states rather than expectations taken in a given state. We have used a slightly different way of representing these environments in Fig. 3 compared to that employed in Fig. 2.

For simplicity, we depict the above circuits for D = 2. Higher bond order cases are given in the supplementary materials. a The unitary \(U^{\prime}\) that optimises the overlap of this circuit with \(\left|000\ldots \right\rangle\) describes the time evolution of the state described by U(t) by a time interval dt under the Hamiltonian \({\mathcal{H}}\), i.e., \(U^{\prime} =U(t+dt)\). b, c The mixed environment unitaries R and L are given by the fixed point solutions of these circuit equations. As in Fig. 2, these are to be interpreted as equality of the density matrices implied by free qubit lines.

Quantum advantage

It is natural to ask whether there is any quantum advantage from using a quantum circuit in this way. Algorithms for manipulating iMPS (iDMRG, TDVP, etc.)3,17,18 are classically efficient—they have the complexity of \({\mathcal{O}}({D}^{3})\). Where then is the room for improvement by implementation on a quantum circuit? The quantum advantage comes from the potentially exponential reduction in the dependence upon the bond dimension, D.

In a quantum circuit, the multi-qubit unitaries must be compiled to the available gate set. A translationally invariant state with entanglement S can be captured by a matrix product state of bond dimension \(D \sim \exp S\). This requires a circuit depth of \({\mathcal{O}}(\exp S)\). An arbitrary \(U\in SU(2\exp S)\) unitary to implement this iMPS requires \({\mathcal{O}}(\exp S) \sim {\mathcal{O}}(D)\) gates20. However, a subset of non-trivial unitaries with entanglement S can be achieved with circuits whose depth is \({\mathcal{O}}(S) \sim {\mathcal{O}}({\mathrm{log}}\,D)\) giving an exponential speedup over the classical implementation21. This reduces the contraction time from \({\mathcal{O}}({D}^{3})\) for a typical classical implementation to \({\mathcal{O}}({\mathrm{log}}\,D)\) in a quantum case. Though these shallow circuits exist, the question remains whether they have high fidelity with the states that we are interested in. Ref. 22 identifies a subset of shallow quantum circuit MPS that have high fidelity with the states produced by Hamiltonian evolution. An example at bond order D = 4 is shown in Fig. 2d.

This demonstrates a quantum advantage for U. We must also consider whether the environment V (or R and L) have high fidelity shallow circuit representations. A priori there is no guarantee that, given a shallow circuit U, the V that satisfies the fixed point equations in Fig. 2, or the R and L that satisfy the fixed point equations in Fig. 3, are themselves shallow. However, a rigorous argument shows that accurate shallow representations can be constructed. We start by constructing an initial shallow approximation. When optimising the energy, as in Fig. 2, we can take advantage of the ability to diagonalize the reduced density matrix to the right and choose a corresponding V. A shallow circuit approximation to this allows us to set \({\mathrm{log}}\,D\) Schmidt coefficients exactly with the remainder lying on a smooth interpolation between them. In the case of time evolution, the mixed environments R and L cannot necessarily be diagonalised simultaneously (though off-diagonal elements are of order \({{\mathrm{dt}}}^{2}\)) and a richer, though still shallow, variational parametrization allowing for this is necessary. In either case, a shallow approximation for the environment can be improved exponentially for a linear cost in qubits and circuit depth by applying the transfer matrix a linear number of times, i.e., by using the power method (see the Supplementary Materials for more details). This simply corresponds to inserting further copies of the transfer matrix in the center of the circuits in Fig. 3a. These arguments establish an asymptotic quantum advantage for our algorithm. In practice, we find that the initial shallow approximations prove remarkably accurate and these corrections are unnecessary.

Numerical results

We have written Cirq and Qiskit code to implement the quantum circuits shown in Figs. 2 and 3. The results of running this code in simulation on Google’s Cirq simulator are shown in Fig. 4. We have chosen optimisation and time evolution of the transverse field Ising model23, and Poincare sections of the dynamics of the PXP Hamiltonian24 as illustrative examples. The properties of the transverse field Ising model are well understood. The Loschmidt echo (fidelity of the time-evolved wavefunction with the initial wavefunction) reveals a dynamical phase transition23 which provides a non-trivial test for our simulation. Our main findings are as follows:

The Hamiltonian \({\mathcal{H}}=\sum_{n}[{\hat{\sigma }}_{n}^{z}{\hat{\sigma }}_{n+1}^{z}+\lambda {\hat{\sigma }}_{n}^{x}]\) is studied with a bond order D = 2 quantum matrix product state. a The SU(4) unitaries U and V are compiled to the circuit as shown. The parameter p is varied to increase the accuracy. Although more efficient parametrizations exist for 2 qubit unitaries39, as well as circuits more specifically tuned to this problem, we choose a generic circuit. It is readily extensible to higher bond orders (see Supplementary Materials). b The optimum state is found using the circuits depicted in Fig. 2. The energy of this state is a better approximation to the true ground state energy as the depth of parametrization increases and converges to that obtained in a conventional MPS algorithm. In particular, we have checked that the parametrization of ref. 39 perfectly reproduces the MPS results. Note that at λ = 0 the Hamiltonian is optimised by a product state, which is captured perfectly with p = 1. c The transverse field Ising model displays dynamical phase transitions in the Loschmidt echo23. These are revealed in the simulated runs of the quantum time-dependent variational principle embodied by the circuits in Fig. 3. More accurate circuits are required to obtain good agreement. The results indicated as exact in the above are exact analytical results.

(i) When run without gate errors and complete representation of the unitaries U, V, L, and R, our code precisely reproduces the optimum iMPS and its time evolution using the time-dependent variational principle.

(ii) Factorisations of the unitaries reduce the fidelity of our results. These are systematically improved as the depth of expressibility of the ansatz is increased. Full parametrisations of the unitaries reproduce classical MPS results exactly.

(iii) Going from representing to optimising, to time-evolving states places increasing demands upon circuit depth and width. Accurate results require increasingly deep factorisation of the unitaries and suffer increasingly adverse effects of gate errors.

Optimising

The simulation results for the optimisation of the transverse field Ising model are given in Fig. 4. The unitary U is factorised using the ansatz in Fig. 4a. This ansatz shows good agreement with the exact bond dimension 2 MPS results with a low depth circuit. This factorisation is much lower depth than a full factorisation of SU(4). Alternative factorisations of the state unitary are possible for this problem. Lower depth ansatz can be used by identifying that the ground state of the transverse field Ising model is made up of real tensors (See Supplementary Material for details).

Time evolving

Using exact representations of unitary matrices we exactly reproduce the TDVP equations as expected. We demonstrate that shallow factorisations of the state unitaries are able to effectively capture dynamical phase transitions in the Loschmidt echoes. The ground state of the transverse field Ising model with g = 1 is prepared, where g is the transverse field strength (denoted λ in Fig. 4). This state is then evolved under the same Hamiltonian but with g = 0.2. At each step of the trajectory, the overlap of the current state with the original state is recorded. The log of this overlap is shown for the whole trajectory in Fig. 4c.
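For infinite translationally invariant states, the overlap recorded at each step is naturally expressed per site. A minimal classical sketch follows, using the standard iMPS result that the overlap of N sites scales as the N-th power of the dominant eigenvalue of the mixed transfer matrix. The function names and the normalisation of the rate (minus the log of the squared overlap per site) are assumptions made for illustration and may differ from the convention plotted in Fig. 4c.

```python
import numpy as np

def overlap_per_site(a, b):
    """Magnitude of the dominant eigenvalue of the mixed transfer matrix of two iMPS."""
    # E = sum_s conj(A[s]) (x) B[s]; the overlap of N sites scales as |eta|^N.
    transfer = sum(np.kron(a_s.conj(), b_s) for a_s, b_s in zip(a, b))
    return np.max(np.abs(np.linalg.eigvals(transfer)))

def loschmidt_rate(a, b):
    """Assumed convention: rate = -log |overlap per site|^2."""
    return -2.0 * np.log(overlap_per_site(a, b))

# Bond order D = 1 product states as a check: |<0|+>| = 1/sqrt(2) per site.
zero = np.array([1.0, 0.0]).reshape(2, 1, 1)
plus = (np.array([1.0, 1.0]) / np.sqrt(2)).reshape(2, 1, 1)
rate_same = loschmidt_rate(zero, zero)
rate_diff = loschmidt_rate(zero, plus)
```

Here `a` would hold the tensor of the initial state and `b` that of the evolved state; non-analytic points of the rate as a function of time signal the dynamical phase transitions discussed above.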

A low depth state is able to exactly represent the states used in ref. 24 to produce Poincare maps, which are used to study slow quantum thermalisation in the PXP Model25. The states are defined with a two-parameter circuit shown in Fig. 5. The quantum TDVP code is able to recreate the Poincare maps for a two-site translationally invariant MPS state. It is possible in this case to discard erroneous points by identifying points with energies that deviate from the known value by more than some fixed threshold. This is a form of error mitigation that may be applicable to other problems when using the quantum TDVP algorithm and may help mitigate the impact of noise from NISQ devices. The larger structures in the Poincare Map are distorted by errors but are still visible in the presence of integration and stochastic optimisation errors. The effects of noise on the quantum TDVP algorithm are outlined in the Supplementary Materials.

The Hamiltonian \({\mathcal{H}}=\sum_{n}(1-{\hat{\sigma }}_{n-1}^{z}){\hat{\sigma }}_{n}^{x}(1-{\hat{\sigma }}_{n+1}^{z})\), first posited to describe the results of quantum simulations using Rydberg atoms25, displays a curious property known as many-body scarring25, whereby from certain starting states, persistent oscillations that can be described with a low bond-order MPS are found. These are amenable to study on a NISQ machine using the quantum time-dependent variational principle of Fig. 3. a A simple set of 2-site periodic states at bond order D = 2 are parametrised by circuits with just 2 parameters per site, so 4 in total. b A partial Poincare section through the plane \({\theta }_{1}=0.9\) produced from a classical simulation using the matrix product state equations of motion presented in ref. 24. Initial conditions are chosen on a constant energy surface \(\left\langle {\mathcal{H}}\right\rangle =0\). The partial plot was produced with initial conditions along a line with spacing \(\delta {\phi }_{1}=3\times {10}^{-2}\), with \({\theta }_{1}=0.9\) and \({\theta }_{2}=5.41\). The final parameter, \({\phi }_{3}\), is chosen to fix the energy. c The same Poincare map produced simulating the quantum time-dependent variational principle in Cirq. While the figure is blurred somewhat by integration errors, the main features are still apparent.

Discussion

We have presented a way to perform quantum simulations by translating tensor network algorithms to quantum circuits. Our approach allows parallel quantum simulations of large systems on small NISQ computers. We have demonstrated this for one-dimensional translationally invariant spin chains. The translation of MPS algorithms naturally encodes fundamental features of matrix product states and the tangent space to the variational manifold that they form. In demonstrating the operation of such circuits, we have touched upon some immediate questions, including the expressiveness of shallow circuit restrictions of tensor network states and their effect upon simulation, alongside the effect of finite gate fidelity. These warrant further systematic study.

Our algorithms are readily extensible to inhomogeneous one-dimensional systems and to higher dimensions following existing methods that wrap one-dimensional states to higher dimensional systems17,18. It would be interesting to study other gauge restrictions of MPS—such as the mixed gauge of modern classical time-dependent variational principle codes—which can also be implemented in quantum circuits. Generalisations of MPS that more directly describe higher dimensional systems are also available. For example, the projected entangled pair states (PEPS) give a two-dimensional generalisation. In realising these states on a quantum circuit, they must be formed from isometric tensors. Until recently, a suitable canonical form for PEPS was not available. The isometric version of PEPS presented in ref. 26 shows great promise and ought to be possible to implement on a suitably connected quantum circuit. Other tensor networks such as the multi-scale entanglement renormalisation ansatz27 (MERA) are naturally based upon unitary operators and can be realised on a quantum circuit28. Indeed, MERA has been deployed for image classification on a small quantum circuit29 and as a quantum convolutional neural network30.

The tensor network framework also provides a convenient route to harness potential quantum advantage in simulation. The one-dimensional matrix product state ansatz is efficiently contractible. The time taken to calculate the expectation of a local operator scales in proportion to the length of the system. A quantum implementation has the advantage of a potentially exponential decrease in the prefactor to this scaling. While a classical tensor network may efficiently represent the important correlations of a quantum state in higher dimensions, its properties may not be efficiently contractible. Contraction of a PEPS state is provably ♯P hard31. However, a physically relevant subset of these states can be efficiently contractible and an isometric representation of them (isometric PEPS for which the Moses move of ref. 26 can be carried out without approximation are indeed quasi-local and finitely contractible) could confer quantum advantage from the shallow representation of the constituent unitaries32. The balance of advantage and cost in quantum algorithms can be delicate; extracting the elements of the tensors is easy classically, but quantum-mechanically requires tomography of the circuit state, which is exponentially slow in the number of spins measured. This may be the bottleneck in hybrid algorithms33. Using the tensor network framework to distribute entanglement resources over the Hilbert space appropriately can mitigate some of these costs.

This work demonstrates the utility of translating tensor network algorithms to quantum circuits and opens an unexplored direction for quantum simulation. Potentially all of the advances of classical simulation of quantum systems using tensor networks can be translated in this way. Moreover, it provides a complementary perspective on classical algorithms suggesting related benefits in purely unitary implementations.

Methods

Quantum matrix product states

The mapping from MPS to quantum circuits that we use automatically embodies much of the variational manifold and its tangent space. The parsimony of this mapping to the quantum circuit suggests that it is the natural home for MPS. The fundamental building block of the circuits depicted in Figs. 2 and 3 is the MPS tensor. A tensor of bond order D and local Hilbert space dimension d is represented by an SU(dD) matrix following refs. 13,34,35,36,37, \({A}_{ij}^{\sigma }={U}_{(1\otimes j),(\sigma \otimes i)}\), as shown in Fig. 6. This translation automatically encodes the left canonical form of the MPS tensor; \({\sum }_{\sigma }{({A}^{\sigma })}_{ij}^{\dagger }{A}_{jk}^{\sigma }={\delta }_{ik}\). This follows directly from the unitary property of U. A classical implementation of an MPS algorithm involves returning the tensors to this form after each step in an algorithm using singular value decompositions. In a quantum algorithm, such manipulation is not required.

a The translation of an MPS tensor to a quantum circuit. The auxiliary index of bond order D is created from \(N={{\mathrm{log}}\,}_{2}D\) qubits. b The canonical form implies that the effect of contracting the MPS to the left is trivial. c With our mapping of the MPS to a quantum circuit, the unitarity of U automatically puts the tensor in left canonical form.

Moreover, the remaining elements of the unitary encode the tangent space structure to the sub-manifold of states spanned by MPS. These are important in constructing the projected Hamiltonian dynamics. Adopting the notation of ref. 12, we define \({V}_{(\sigma \otimes \delta \ne 1),(i\otimes j)}={U}_{(\delta \ne 1\otimes j),(\sigma \otimes i)}\), which automatically satisfies the null or tangent gauge-fixing condition \({\sum }_{\sigma }{({A}^{\sigma })}_{ij}^{\dagger }{V}_{jk}^{\sigma ,\delta \ne 1}=0\). This structure is responsible for the very compact quantum implementation of the time-dependent variational principle shown in Fig. 3. It obviates the need to calculate the tangent space structure at each step12.
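Both the left canonical condition and the null gauge-fixing condition can be checked numerically for a random unitary. The sketch below assumes a simplified index convention, which may differ from the ordering used in the paper's figures: the first D columns of U are reshaped into the MPS tensor and the remaining columns into the tangent-space tensor. The helper `random_unitary` is hypothetical.

```python
import numpy as np

def random_unitary(dim, seed=1):
    """Random unitary from the QR decomposition of a complex Gaussian matrix."""
    rng = np.random.default_rng(seed)
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, _ = np.linalg.qr(g)
    return q

d, bond = 2, 2                      # spin 1/2, bond order D = 2
u = random_unitary(d * bond)

# Assumed convention: rows are indexed by (sigma, i); columns split into D + (d - 1) D.
a = u[:, :bond].reshape(d, bond, bond)            # MPS tensor A[sigma, i, j]
v = u[:, bond:].reshape(d, bond, (d - 1) * bond)  # tangent-space tensor V[sigma, i, k]

# Orthonormality of the columns of U gives both conditions at once.
left_canonical = sum(a_s.conj().T @ a_s for a_s in a)           # should be the identity
null_gauge = sum(a_s.conj().T @ v_s for a_s, v_s in zip(a, v))  # should vanish
```

No singular value decomposition or explicit null-space calculation is needed here, which is the classical counterpart of the statement that the unitary circuit carries this structure for free.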

The quantum time-dependent variational principle

The equivalence with the classical implementation of the time-dependent variational principle for matrix product states and its quantum version can be seen by adopting the following parametrization of the updated unitary in the form

Taking the explicit overlap of the circuit in Fig. 3a with the state \(\left|000\ldots \right\rangle\) and then calculating its derivative with respect to X recovers the time-dependent variational principle as formulated in ref. 12. The tensor X is to be compared with that in ref. 12 rescaled by the square root of the environment tensor. The quickest route to see this is to expand the circuit to quadratic order in the tensor X and bi-linear order in X and dt, before differentiating with respect to X.

Optimising quantum circuits

Our algorithms require the optimisation of expectations of observables (in Figs. 2b and 3a) and the solution of fixed-point equations (in Figs. 2c and 3b, c) to determine the environment and mixed environment. In all cases, optimisations are carried out stochastically.

We use the Rotosolve algorithm38 to speed up our stochastic searches. This utilises the fact that the dependence of expectations of a parametrised quantum circuit on any particular parameter is sinusoidal. As a result, after just three measurements one can take this parameter to its local optimum value. Extensions of this allow the variation to be calculated when several elements of the circuit depend upon the same parameter.
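The three-measurement update can be made concrete with a short sketch. It assumes the cost depends on each parameter as \(a\sin (\theta +b)+c\) and uses exact function evaluations in place of noisy measurement estimates; `toy_cost` is an invented two-parameter example with this sinusoidal structure, not one of the circuits of Figs. 2 and 3.

```python
import math

def rotosolve_step(cost, params, k):
    """Move parameter k to the minimum of its sinusoidal dependence using three evaluations."""
    def shifted(delta):
        trial = list(params)
        trial[k] += delta
        return cost(trial)

    f0, f_plus, f_minus = shifted(0.0), shifted(math.pi / 2), shifted(-math.pi / 2)
    new_params = list(params)
    # For f = a sin(theta + b) + c, the arctangent recovers theta + b (up to the sign of a).
    new_params[k] = params[k] - math.pi / 2 - math.atan2(
        2 * f0 - f_plus - f_minus, f_plus - f_minus
    )
    return new_params

def rotosolve(cost, params, sweeps=5):
    """Coordinate-wise sweeps; each sweep updates every parameter once."""
    for _ in range(sweeps):
        for k in range(len(params)):
            params = rotosolve_step(cost, params, k)
    return params

def toy_cost(t):
    # Sinusoidal in t[0] at fixed t[1], and in t[1] at fixed t[0].
    return math.cos(t[0]) + 0.5 * math.sin(t[0]) * math.cos(t[1])

best = rotosolve(toy_cost, [0.3, 0.2])
best_value = toy_cost(best)  # the global minimum of toy_cost is -sqrt(1.25)
```

With measured expectations each of the three evaluations carries shot noise, so in practice the update is only as accurate as the estimates that enter the arctangent.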

The equations illustrated in Figs. 2c and 3b, c are implicitly identities between density matrices. We solve them using a version of the swap test that amounts to a stochastic optimisation of the objective function tr [\({(\hat{r}-\hat{s})}^{\dagger }(\hat{r}-\hat{s})\)]. Details of how the swap test is implemented for the environment in Fig. 2c and for the mixed environments in Figs. 3b, c are given in the supplementary materials.
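The objective function expands as \({\rm{tr}}\,{\hat{r}}^{2}+{\rm{tr}}\,{\hat{s}}^{2}-2\,{\rm{tr}}\,\hat{r}\hat{s}\) for Hermitian \(\hat{r}\) and \(\hat{s}\), and each term is an overlap of the kind a swap test estimates. The sketch below is a classical check of this identity with dense matrices, not the circuit-level implementation; it uses the standard relation \({\rm{tr}}(\rho \sigma )={\rm{tr}}[{\rm{SWAP}}\,(\rho \otimes \sigma )]\), and all function names are hypothetical.

```python
import numpy as np

def random_density_matrix(dim, seed):
    """Random full-rank density matrix built from a complex Gaussian matrix."""
    rng = np.random.default_rng(seed)
    g = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real

def swap_operator(dim):
    """Matrix of SWAP on two dim-level systems: |i, j> -> |j, i>."""
    s = np.zeros((dim * dim, dim * dim))
    for i in range(dim):
        for j in range(dim):
            s[j * dim + i, i * dim + j] = 1.0
    return s

def swap_overlap(rho, sigma):
    """tr(rho sigma) = tr[SWAP (rho (x) sigma)]; a swap-test ancilla reads 0 with probability (1 + overlap) / 2."""
    return np.trace(swap_operator(rho.shape[0]) @ np.kron(rho, sigma)).real

def objective(r, s):
    """tr[(r - s)^dagger (r - s)] written as three swap-test overlaps."""
    return swap_overlap(r, r) + swap_overlap(s, s) - 2.0 * swap_overlap(r, s)

r = random_density_matrix(2, seed=0)
s = random_density_matrix(2, seed=1)
distance = objective(r, s)
frobenius = np.linalg.norm(r - s, 'fro') ** 2  # direct evaluation for comparison
```

The objective vanishes only when the two density matrices coincide, so driving it to zero enforces the equality of reduced density matrices that the fixed-point equations express.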

Data availability

All data generated or analysed during this study are included in this published article (and its supplementary information files).

Code availability

All code used to generate the results presented is available to download at https://github.com/fergusbarratt/qmps. We provide both Cirq code—which we have used in our simulations—and Qiskit code with the same functionality.

References

White, S. R. Density matrix formulation for quantum renormalization groups. Phys. Rev. Lett. 69, 2863–2866 (1992).

Östlund, S. & Rommer, S. Thermodynamic limit of density matrix renormalization. Phys. Rev. Lett. 75, 3537–3540 (1995).

Perez-Garcia, D., Verstraete, F., Wolf, M. M. & Cirac, J. I. Matrix product state representations. Quantum Info. Comput. 7, 401–430 (2007).

Vidal, G. Efficient classical simulation of slightly entangled quantum computations. Phys. Rev. Lett. 91, 147902 (2003).

Peruzzo, A. et al. A variational eigenvalue solver on a photonic quantum processor. Nat. Commun. 5, 4213 (2014).

Kitaev, A. Y. Quantum measurements and the abelian stabilizer problem. Preprint at arXiv https://arxiv.org/abs/quant-ph/9511026 (1995).

Trotter, H. F. On the product of semi-groups of operators. Proc. Am. Math. Soc. 10, 545–551 (1959).

Suzuki, M. Improved Trotter-like formula. Phys. Lett. A 180, 232–234 (1993).

Hastings, M. B., Wecker, D., Bauer, B. & Troyer, M. Improving quantum algorithms for quantum chemistry. Quantum Info. Comput. 15, 1–23, https://dl.acm.org/doi/10.5555/2685188.2685189 (2015).

Li, Y. & Benjamin, S. C. Efficient variational quantum simulator incorporating active error minimization. Phys. Rev. X 7, 021050 (2017).

Schön, C., Hammerer, K., Wolf, M. M., Cirac, J. I. & Solano, E. Sequential generation of matrix-product states in cavity QED. Phys. Rev. A 75, 032311 (2007).

Haegeman, J. et al. Time-dependent variational principle for quantum lattices. Phys. Rev. Lett. 107, 070601 (2011).

Green, A. G., Hooley, C. A., Keeling, J. & Simon, S. H. Feynman path integrals over entangled states. Preprint at arXiv https://arxiv.org/abs/1607.01778 (2016).

Peng, T., Harrow, A. W., Ozols, M. & Wu, X. Simulating large quantum circuits on a small quantum computer. Phys. Rev. Lett. 125, 150504 (2020).

Kim, I. H. Holographic quantum simulation. Preprint at arXiv https://arxiv.org/abs/1702.02093 (2017).

Kim, I. H. Noise-resilient preparation of quantum many-body ground states. Preprint at arXiv https://arxiv.org/abs/1703.00032 (2017).

Schollwöck, U. The density-matrix renormalization group in the age of matrix product states. Ann. Phys. 326, 96–192 (2011).

Orús, R. A practical introduction to tensor networks: matrix product states and projected entangled pair states. Ann. Phys. 349, 117–158 (2014).

Smith, A., Jobst, B., Green, A. G. & Pollmann, F. Crossing a topological phase transition with a quantum computer. Preprint at arXiv https://arxiv.org/abs/1910.05351v2 (2019).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information: 10th Anniversary Edition (Cambridge University Press, 2011).

Cerezo, M., Sone, A., Volkoff, T., Cincio, L. & Coles, P. J. Cost function dependent barren plateaus in shallow parametrized quantum circuits. Nat. Commun. 12, 1–12 (2021).

Lin, S.-H., Dilip, R., Green, A. G., Smith, A. & Pollmann, F. Real- and imaginary-time evolution with compressed quantum circuits. PRX Quantum 2, 010342 (2021).

Heyl, M., Polkovnikov, A. & Kehrein, S. Dynamical quantum phase transitions in the transverse-field Ising model. Phys. Rev. Lett. 110, 135704 (2013).

Michailidis, A., Turner, C., Papić, Z., Abanin, D. & Serbyn, M. Slow quantum thermalization and many-body revivals from mixed phase space. Phys. Rev. X 10, 011055 (2020).

Turner, C. J., Michailidis, A. A., Abanin, D. A., Serbyn, M. & Papić, Z. Weak ergodicity breaking from quantum many-body scars. Nat. Phys. 14, 745–749 (2018).

Zaletel, M. P. & Pollmann, F. Isometric tensor network states in two dimensions. Phys. Rev. Lett. 124, 037201 (2020).

Vidal, G. Class of quantum many-body states that can be efficiently simulated. Phys. Rev. Lett. 101, 110501 (2008).

Kim, I. H. & Swingle, B. Robust entanglement renormalization on a noisy quantum computer. Preprint at arXiv https://arxiv.org/abs/1711.07500 (2017).

Grant, E. et al. Hierarchical quantum classifiers. npj Quantum Inf. 4, 65 (2018).

Cong, I., Choi, S. & Lukin, M. D. Quantum convolutional neural networks. Nat. Phys. 15, 1273–1278 (2019).

Schuch, N., Wolf, M. M., Verstraete, F. & Cirac, J. I. Computational complexity of projected entangled pair states. Phys. Rev. Lett. 98, 140506 (2007).

Schwarz, M., Temme, K. & Verstraete, F. Preparing projected entangled pair states on a quantum computer. Phys. Rev. Lett. 108, 110502 (2012).

Wecker, D., Hastings, M. B. & Troyer, M. Progress towards practical quantum variational algorithms. Phys. Rev. A 92, 042303 (2015).

Schön, C., Solano, E., Verstraete, F., Cirac, J. I. & Wolf, M. M. Sequential generation of entangled multiqubit states. Phys. Rev. Lett. 95, 110503 (2005).

Huggins, W., Patil, P., Mitchell, B., Whaley, K. B. & Stoudenmire, E. M. Towards quantum machine learning with tensor networks. Quantum Sci. Technol. 4, 024001 (2019).

Gopalakrishnan, S. & Lamacraft, A. Unitary circuits of finite depth and infinite width from quantum channels. Phys. Rev. B 100, 064309 (2019).

Ran, S.-J. Encoding of matrix product states into quantum circuits of one-and two-qubit gates. Phys. Rev. A 101, 032310 (2020).

Ostaszewski, M., Grant, E. & Benedetti, M. Structure optimization for parameterized quantum circuits. Quantum 5, 391 (2021).

Vatan, F. & Williams, C. Optimal quantum circuits for general two-qubit gates. Phys. Rev. A 69, 032315 (2004).

Acknowledgements

J.D., F.B. and A.G.G. were supported by the EPSRC through grants EP/L015242/1, EP/L015854/1 and EP/S005021/1. F.P. is funded by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No. 771537). F.P. acknowledges the support of the DFG Research Unit FOR 1807 through grants no. PO 1370/2-1, TRR80, and the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy EXC-2111-390814868. We would like to acknowledge discussions with Adam Smith and Bernard Jobst, and GoogleAI for supporting the attendance of F.B. at a Cirq coding workshop.

Author information

Contributions

A.G.G. conceived the project and developed it initially with V.S. and M.B. F.B., J.D. and A.G.G. worked out the detailed mapping of these ideas to a quantum circuit, wrote the Cirq and Qiskit code and ran the simulations. F.P. made key contributions at various points in the code development. The manuscript was written by F.B., J.D. and A.G.G.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Barratt, F., Dborin, J., Bal, M. et al. Parallel quantum simulation of large systems on small NISQ computers. npj Quantum Inf 7, 79 (2021). https://doi.org/10.1038/s41534-021-00420-3

DOI: https://doi.org/10.1038/s41534-021-00420-3