Abstract

Continuous-time stochastic processes pervade everyday experience, and the simulation of models of these processes is of great utility. Classical models of systems operating in continuous-time must typically track an unbounded amount of information about past behaviour, even for relatively simple models, enforcing limits on precision due to the finite memory of the machine. However, quantum machines can require less information about the past than even their optimal classical counterparts to simulate the future of discrete-time processes, and we demonstrate that this advantage extends to the continuous-time regime. Moreover, we show that this reduction in the memory requirement can be unboundedly large, allowing for arbitrary precision even with a finite quantum memory. We provide a systematic method for finding superior quantum constructions, and a protocol for analogue simulation of continuous-time renewal processes with a quantum machine.


Introduction

Our experience of the world manifests as a series of observations. The goal of science is to provide a consistent explanation for these, and further, make predictions about future observations. That is, science aims to provide a model of Nature, to describe the processes that give rise to the observations. It is possible to devise many different models that make identical predictions, and so it is desirable to have criteria that discern the ‘best’ model. One such guiding philosophy is Occam’s razor “plurality should not be posited without necessity”, which can be interpreted as requiring that a model should be the ‘simplest’ that accurately describes our observations.

This now leaves us with the question of how to determine the simplest model. The field of computational mechanics1,2,3 seeks to answer this, defining the optimal predictive model of a process to be that which requires the least information about the past in order to predict the future, and uses this minimal memory requirement as a measure of complexity. There is motivation for preferring simpler models beyond the inclination for elegance; it allows one to make more fundamental statements about the processes themselves, due to their irreducible nature.3 More pragmatically, it also facilitates the building of simulators (devices emulating the behaviour of the system (Fig. 1))4 for the process, as simpler models require fewer resources (here, internal memory).

Models and simulators. a A system can be viewed as a black box which outputs what we observe, and our explanation of the observations forms a model. b We can build simulators to implement our models, in order to test them and make predictions about the future. Computational mechanics defines the optimal models to be those with the minimal internal memory requirement

Discrete-time processes have been well-studied within the computational mechanics framework.5,6,7,8,9,10,11,12,13,14,15,16 However, it has recently been shown that quantum machines can be constructed that in general exhibit a lower complexity, and hence a lower memory requirement, than their optimal classical counterparts.17,18,19,20,21,22,23,24 This substantiates the perhaps surprising notion that a quantum device can be more efficient than a classical system, even for the simulation of a purely classical stochastic process. This has recently been verified experimentally.25

Continuous-time processes have also recently been the focus of computational mechanics studies.26,27 While the principles of optimality for discrete-time processes can be directly exported to the continuous-time case, systematic study of the underlying architecture has only been carried out for a restricted set of processes; renewal processes.27 Renewal theory describes a generalisation of Poisson processes,28,29 where a system emits at a time drawn from a probabilistic distribution, before returning to its initial state (one can also view this as a series of events separated by probabilistic dwell times). Despite their apparent simplicity, such processes have many applications, including models of lifetimes,30 queues,31 and neural spike trains.13,32,33

Here, we show that the quantum advantage can be extended to continuous-time processes. Focussing on renewal processes, we provide a systematic construction for determining quantum machines that require less information about the past for accurate future prediction than the optimal classical models. We provide a protocol that can be used to implement such quantum machines as analogue simulators of a renewal process. We then illustrate the quantum advantage with two examples. In particular, we find that while the classical machines typically need infinite memory, a quantum machine can in some cases require only finite memory. We conclude by arguing that the finite memory requirement may be a typical property of quantum machines, suggest possible mediums for a physical realisation of the quantum machines, and discuss prospects for future work. The Methods section provides additional details on the framework used, and the derivations for the examples.

Results

Framework

Here we review the computational mechanics framework used to express our results (a more complete overview may be found elsewhere2,3). We consider continuous-time discrete-alphabet stochastic point processes. Such a process \({\cal P}\) is characterised by a sequence of observations \((x_n, t_n)\), drawn from a probability distribution \(P(X_n, T_n)\).34 Here, the \(x_n\), drawn from an alphabet \({\cal A}\), are the symbols emitted by the process, while the \(t_n\) record the times between emissions \(n - 1\) and \(n\). For shorthand, we denote the dual \({\boldsymbol{x}}_n = (x_n, t_n)\), and similarly \({\boldsymbol{X}}_n\) for the associated stochastic variable. We denote a contiguous string of observations of emitted symbols and their temporal separations by the concatenation \({\boldsymbol{x}}_{l:m} = {\boldsymbol{x}}_l{\boldsymbol{x}}_{l + 1} \ldots {\boldsymbol{x}}_{m - 1}\), and for a stationary process we mandate that \(P({\boldsymbol{X}}_{0:L}) = P({\boldsymbol{X}}_{s:s + L})\;\forall s,L \in {\Bbb Z}\). Note that the discrete-time case consists of either coarse-graining the \(t_n\), or considering processes where such dwell times are either identical or irrelevant.

We define the past of a process \(\overleftarrow {\boldsymbol{x}} = {\boldsymbol{x}}_{ - \infty :0}\left( {\emptyset ,t_{0^ + }} \right)\), where 0 is the current emission step (i.e., the next emitted symbol will be \(x_0\), and \({\emptyset}\) denotes that this symbol is currently undetermined), and \(t_{0^ + }\) is the time since the last emission, with associated random variable \(T_{0^ + }\). Analogously, defining \(t_{0^ - }\) as the time to the next emission, we can denote the future \(\overrightarrow {\boldsymbol{x}} = \left( {x_0,t_{0^ - }} \right){\boldsymbol{x}}_{1:\infty }\).27 The causal states of the process are then equivalence classes defined according to a predictive equivalence relation:1 two past sequences \(\overleftarrow {\boldsymbol{x}}\) and \(\overleftarrow {\boldsymbol{x}} ^\prime\) belong to the same causal state (i.e. \(\overleftarrow {\boldsymbol{x}} \sim _e\overleftarrow {\boldsymbol{x}} ^\prime\)) iff they satisfy

\[P\left( {\overrightarrow {\boldsymbol{X}} |\overleftarrow {\boldsymbol{X}} = \overleftarrow {\boldsymbol{x}} } \right) = P\left( {\overrightarrow {\boldsymbol{X}} |\overleftarrow {\boldsymbol{X}} = \overleftarrow {\boldsymbol{x}} ^\prime } \right).\quad (1)\]

We use the notation S j to represent the causal state labelled by some index j.

We desire models that are predictive, wherein the internal memory of a simulator implementing the model contains all (and no additional) information relevant to the future statistics that can be obtained from the entire past. The first part of this entails the simulator memory having the same predictive power as knowledge of the entire past (prescience2), while the second ensures that knowledge of the memory provides no further predictive power than observing the entire past output (information about the future accessible in this manner is referred to as oracular,35 and implies the simulator having decided aspects of its future output in advance). This notion of predictive models is stricter than the broader class of generative models, which must only be able to faithfully reproduce future statistics; internal states of models in the broader class may contain additional information that allows for better prediction of future outputs than knowledge of the past, violating the non-oracular condition. We note that while there exist generative models that can operate with lower memory than the optimal predictive models we will now introduce, as this is achieved by leveraging oracular information we do not consider such models here.

The provably optimal predictive classical models, termed ‘ε-machines’, operate on the causal states.1,2 In general the systematic structure of these models is well-understood only for discrete-time processes, though, as we later discuss, recent efforts have been made towards constructing corresponding continuous-time machines. A discrete-time ε-machine may be represented by an edge-emitting hidden Markov model, in which the hidden states are the causal states, the transitions (edges) between these states involve the emission of a symbol from the process alphabet, and the string of emitted symbols forms the process. The edges are defined by a dynamic \(T_{kj}^{(x)}\) describing the probability of transitioning from causal state \(S_j\) to \(S_k\) while emitting symbol \(x\). The \(T_{kj}^{(x)}\) are thus defined by the statistics of the process, and because they depend only on the current hidden state the model is Markovian. Further, as the predictive equivalence relation ensures that the system is always in a definite causal state defined wholly and uniquely by its past output, ε-machines are unifilar.2 This means that for a given initial causal state and subsequent emission(s), the current causal state is known with certainty.

The quantity of interest for our study is the statistical complexity \(C_\mu\), which answers the question “What is the minimal information required about the past in order to accurately predict the future?”. It is defined as the Shannon entropy36 of the steady state distribution \(\pi\) of the causal states \(S_j\):

\[C_\mu = - \sum\nolimits_j \pi \left( {S_j} \right){\mathrm{log}}_2\,\pi \left( {S_j} \right).\quad (2)\]

The use of Shannon entropy is motivated by considering the memory to be the average information stored about the past (alternatively, it can be viewed as the average information communicated in the process from the past to the future). Due to the ergodic nature of the processes considered, the time average and the ensemble average are equivalent. However, one could also consider the Hartley entropy, that is, the size of the substrate into which the memory is encoded (i.e., the logarithm of the number of states).1 It can be shown that the ε-machine also optimises this measure,2 though we shall here focus on the former measure, and implicitly consider an ensemble scenario. That is, when operating N independent simulators, the total memory required tends to NC μ as N → ∞.36 The statistical complexity is lower-bounded by the mutual information between the past and future of the process, referred to as the excess entropy \(E = I\left( {\overleftarrow {\boldsymbol{X}} ;\overrightarrow {\boldsymbol{X}} } \right)\).2

Although the predictive equivalence relation defines the optimal model for both discrete-time processes and continuous-time processes, as noted earlier, most works so far have been devoted to studying the ε-machines of discrete-time processes. It is only recently that a similar systematic causal architecture has been uncovered for a restricted set of continuous-time processes, renewal processes.27 Renewal processes form a special case of the above, where each emission occurs at an independent and identically distributed (IID) probabilistic time, and emits the same symbol. Such processes are defined entirely by this emission probability density ϕ(t), and the sequence is fully described by the emission times alone. It is useful to define the following quantities for a renewal process: the survival probability \({\mathrm{\Phi }}(t) = {\int}_t^\infty {\kern 1pt} \phi (t^{\prime})\mathrm{d}t^{\prime}\); and the mean firing rate \(\mu = \left( {{\int}_0^\infty {\kern 1pt} t\phi (t)\mathrm{d}t} \right)^{ - 1}\).
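As a rough numerical illustration of the survival probability and mean firing rate just defined, the following Python sketch evaluates both by a midpoint Riemann sum. The function names, the integration cutoff `t_max`, the number of steps, and the choice of a uniform density of width `TAU` as a test case are all assumptions made for this example.

```python
def survival(phi, t, t_max, steps=20000):
    """Approximate Phi(t), the integral of phi from t to t_max (midpoint rule)."""
    if t >= t_max:
        return 0.0
    dt = (t_max - t) / steps
    return sum(phi(t + (i + 0.5) * dt) for i in range(steps)) * dt


def mean_firing_rate(phi, t_max, steps=20000):
    """Approximate mu, the inverse of the integral of t * phi(t) from 0 to t_max."""
    dt = t_max / steps
    mean_dwell = sum((i + 0.5) * dt * phi((i + 0.5) * dt) for i in range(steps)) * dt
    return 1.0 / mean_dwell


TAU = 2.0  # hypothetical width of the uniform emission window


def uniform_phi(t):
    """Uniform emission density on [0, TAU), zero elsewhere."""
    return 1.0 / TAU if 0.0 <= t < TAU else 0.0


mu = mean_firing_rate(uniform_phi, t_max=TAU)
phi_half = survival(uniform_phi, 0.5, t_max=TAU)
print(mu, phi_half)  # should lie close to 2 / TAU and 1 - 0.5 / TAU
```

For densities with unbounded support, `t_max` would need to be chosen large enough that the neglected tail is negligible.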

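In Fig. 2 we show a generative model for such a process. Because of the IID nature of the process, the only relevant part of the past in predicting the future statistics is the time since the last emission \(t_{0^ + }\), and this assists us only in predicting the time to the next emission \(t_{0^ - }\).27 Thus, the causal equivalence relation simplifies to

\[t_{0^ + } \sim _e t_{0^ + }^\prime \; \Leftrightarrow \; P\left( {T_{0^ - }|T_{0^ + } = t_{0^ + }} \right) = P\left( {T_{0^ - }|T_{0^ + } = t_{0^ + }^\prime } \right).\quad (3)\]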

We label the causal states \(S_{t_{0^ + }}\) according to the minimum \(t_{0^ + }\) belonging to the equivalence class. Depending on the form of ϕ(t), we can determine which \(t_{0^ + }\) belong to the same causal state. Notably, if ϕ(t) is Poissonian, the time since the last emission is irrelevant (as the decay rate is constant), and hence all \(t_{0^ + }\) belong to the same causal state—the process is memoryless and has C μ = 0. All other processes involve a continuum of causal states, which may either extend indefinitely, terminate in a single state at a certain time, or eventually enter a periodic continuum (see Methods A). The steady state probability density π(S t ) of the causal states depends on this causal architecture (Methods B). We specifically highlight that states in the initial continuum have π(S t ) = μΦ(t); as we will later discuss, this is the only necessary part of the architecture once we turn to quantum causal states.

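The statistical complexity of the process can be defined in correspondence with Eq. (2), by taking the continuous limit of a discretised analogue of the process:

\[C_\mu = \mathop {\lim }\limits_{\delta t \to 0} - \sum\nolimits_{n = 0}^\infty \pi \left( {S_{n\delta t}} \right)\delta t\,{\mathrm{log}}_2\left( {\pi \left( {S_{n\delta t}} \right)\delta t} \right).\quad (4)\]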

This quantity will however either be zero (for a Poissonian emission probability density), or infinite (for all other distributions), due to the infinitesimal coarse-graining. Classically therefore, it is not the most enlightening measure of complexity, and this has motivated earlier work on this topic27 to instead consider use of the differential entropy for the statistical complexity: \(C_\mu ^{({\mathrm{DE}})} = - {\int}_0^\infty {\kern 1pt} \mathrm{d}t\,\pi \left( {S_t} \right){\mathrm{log}}_2{\kern 1pt} \pi \left( {S_t} \right)\). While this quantity allows for a comparison of the complexity of two processes, we find it lacking as an absolute measure of complexity, as it requires one to take logarithms of dimensionful quantities, and loses the original physical motivation of being the information contained within the process about its past. Instead, we will employ the true continuum limit of the Shannon entropy Eq. (4) as the measure of a process’ statistical complexity, accepting the infinities as faithfully representing that classical implementations of such models do indeed require infinite memory.
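The divergence can be seen numerically in a simple case. The sketch below assumes a uniform emission density discretised into `n_states` equal timesteps, so that the steady state weight of the k-th discretised causal state is proportional to the survival probability (n_states - k) / n_states. The function name and the particular resolutions are illustrative choices, not taken from the original analysis.

```python
import math


def classical_complexity_uniform(n_states):
    """Shannon entropy (bits) of the steady state over discretised causal states.

    Assumes a uniform emission density, so pi(S_k) is proportional to the
    survival probability Phi(k * dt) = (n_states - k) / n_states.
    """
    weights = [n_states - k for k in range(n_states)]
    total = sum(weights)
    return -sum((w / total) * math.log2(w / total) for w in weights)


# Each doubling of the resolution adds roughly one bit of classical memory.
for n in (16, 64, 256, 1024):
    print(n, round(classical_complexity_uniform(n), 4))
```

The entropy grows without bound as the timestep shrinks, which is the logarithmic divergence that the continuum limit inherits.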

Quantum causal states

It has been shown that a quantum device simulating a discrete-time process can in general require less memory than the optimal classical model.17 In order to assemble such a device, for each causal state \(S_j\) one must construct a corresponding quantum causal state \(\left| {S_j} \right\rangle = \mathop {\sum}\nolimits_{xk} {\kern 1pt} \sqrt {T_{kj}^{(x)}} \left| x \right\rangle \left| k \right\rangle\), where, as defined above, the transition dynamic \(T_{kj}^{(x)}\) is the probability that a system in \(S_j\) will transition to \(S_k\), while emitting symbol \(x\). The machine then operates by mapping the state \(\left| k \right\rangle\) with a blank ancilla to \(\left| {S_k} \right\rangle\), following which measurement of the \(\left| x \right\rangle\) subspace will produce symbol \(x\) with the correct probability, while leaving the remaining part of the system in \(\left| {S_k} \right\rangle\). The internal steady state of the machine is given by \(\rho = \mathop {\sum}\nolimits_j {\kern 1pt} \pi \left( {S_j} \right)\left| {S_j} \right\rangle \left\langle {S_j} \right|\). We refer to such constructions as q-machines, and their internal memory \(C_q\) can be described by the von Neumann entropy36 of the steady state:

\[C_q = - {\mathrm{Tr}}\left( {\rho \,{\mathrm{log}}_2\,\rho } \right).\quad (5)\]

Unlike classical causal states, the overlaps \(\left\langle {S_j|S_k} \right\rangle\) of different quantum causal states are in general non-zero, and hence C q ≤ C μ (typically the inequality is strict); thence, the q-machine has a lower internal memory requirement than the corresponding ε-machine.17 Physically, this memory saving can be understood as the lack of a need to store information that allows complete discrimination between two pasts when they have some overlap in their conditional futures. This entropy reduction acquires operational significance when one considers an ensemble of independent simulators of a process sharing a common total memory.17 As with the classical case, C q is also lower bounded by the excess entropy of the process. Note that while this quantum construction is superior to the optimal classical model, it does not necessarily provide the optimal quantum model. Indeed, for particular classes of process, constructions involving several symbol outputs are known that have even lower internal memory,19,20 and there may exist as yet unknown further optimisations beyond this. Such known improvements however are not relevant for the processes we consider.

We now seek to extend this quantum memory reduction advantage to the realm of continuous-time processes. To do so, we first define a wavefunction \(\psi (t) = \sqrt {\phi (t)}\). We can rephrase the survival probability and mean firing rate in terms of this wavefunction: \({\mathrm{\Phi }}(t) = {\int}_t^\infty \left| {\psi (t^{\prime})} \right|^2\mathrm{d}t^{\prime}\); and \(\mu = \left( {{\int}_0^\infty {\kern 1pt} t\left| {\psi (t)} \right|^2\mathrm{d}t} \right)^{ - 1}\). Inspired by the quantum construction for discrete-time processes, we wish to construct quantum causal states \(\left| {S_t} \right\rangle\) such that when a measurement is made of the state (in a predefined basis), it reports a value t′ with probability (density) \(P\left( {T_{0^ - } = t^{\prime}|T_{0^ + } = t} \right)\). We may view the quantum causal state as a continuous alphabet (representing the value of \(t_{0^ - }\)) analogue of the discrete case, with only a single causal state (S0) the system may transition to after emitting this symbol.

The probability density \(P\left( {T_{0^ - } = t^{\prime}|T_{0^ + } = t} \right)\) is given by \(\phi \left( {t + t^{\prime}} \right){\mathrm{/}}{\int}_t^\infty {\kern 1pt} \phi (t^{\prime\prime})\mathrm{d}t^{\prime\prime} = \phi (t + t^{\prime}){\mathrm{/\Phi }}(t)\). By analogy with the discrete case we construct our quantum causal states as \(\left| {S_t} \right\rangle = {\int}_0^\infty {\kern 1pt} \mathrm{d}t^{\prime}\sqrt {P\left( {T_{0^ - } = t^{\prime}|T_{0^ + } = t} \right)} \left| {t^{\prime}} \right\rangle\), and thus:

\[\left| {S_t} \right\rangle = \frac{1}{{\sqrt {{\mathrm{\Phi }}(t)} }}{\int}_0^\infty {\kern 1pt} \mathrm{d}t^{\prime}\,\psi (t + t^{\prime})\left| {t^{\prime}} \right\rangle .\quad (6)\]

We emphasise that while the wavefunction is encoding information about time in the modelled process, the q-machine used for simulation may encode it in any practicable continuous variable, such as the position of a particle. The measurement basis used to obtain the correct statistics is of course that defined by \(\left\{ {\left| t \right\rangle } \right\}\) (that is, measurement outcome t′ occurs with probability density \(\left| {\left\langle {t^{\prime}|S_t} \right\rangle } \right|^2 = \left| {\psi (t + t^{\prime})} \right|^2{\mathrm{/\Phi }}(t)\) when the system is in state \(\left| {S_t} \right\rangle\)).

When the first segment \([0,\tilde t)\) of the continuous variable in a quantum causal state is swept across, if the system is not found to be in this region the state is modified by application of the projector \({\mathrm{{\Pi}}}_{\tilde t} = {\int}_{\tilde t}^\infty {\kern 1pt} \mathrm{d}t\left| t \right\rangle \left\langle t \right|\) and appropriate renormalisation. When this projector is applied to the state \(\left| {S_t} \right\rangle\), the resulting state is simply \(\left| {S_{t + \tilde t}} \right\rangle\) displaced by \(\tilde t\); by correcting for this displacement the effect of the measurement sweep is exactly identical to the change in the internal memory of the machine if no emission is observed in a time period \(\tilde t\), and thus the quantum causal states automatically update when measurement sweeps are used to simulate the progression of time.
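The self-updating property can be checked on a discretised analogue of the quantum causal states. In the sketch below, the amplitude of bin n in the state for elapsed time k is taken to be the square root of phi_bins[k + n] divided by the survival probability; sweeping past the first m bins without a detection is modelled by discarding those amplitudes and renormalising. The bin probabilities and function names are hypothetical and chosen only for illustration.

```python
import math


def causal_state(phi_bins, k):
    """Amplitudes of the discretised quantum causal state after k elapsed bins."""
    survival = sum(phi_bins[k:])
    return [math.sqrt(p / survival) for p in phi_bins[k:]]


def sweep_update(amplitudes, m):
    """Project out the first m bins (no emission seen), shift, and renormalise."""
    remaining = amplitudes[m:]
    norm = math.sqrt(sum(a * a for a in remaining))
    return [a / norm for a in remaining]


# Hypothetical emission probabilities per timestep (they sum to one).
phi_bins = [0.1, 0.3, 0.25, 0.15, 0.1, 0.05, 0.03, 0.02]

updated = sweep_update(causal_state(phi_bins, 1), 2)
direct = causal_state(phi_bins, 3)
print(max(abs(u - d) for u, d in zip(updated, direct)))  # difference at rounding level
```

The updated state coincides with the state prepared directly for the later elapsed time, which is the discrete counterpart of the statement above.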

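The overlap of two quantum causal states can straightforwardly be calculated:

\[\left\langle {S_a|S_b} \right\rangle = \frac{1}{{\sqrt {{\mathrm{\Phi }}(a){\mathrm{\Phi }}(b)} }}{\int}_0^\infty {\kern 1pt} \mathrm{d}t\,\psi (a + t)\psi (b + t).\quad (7)\]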

By their very construction, these quantum states will automatically merge states with identical future statistics, even if we neglect the underlying causal architecture. Recall the causal equivalence relation Eq. (3). Since these probabilities wholly define the quantum states, if two quantum states have the same future statistics they are identical by definition. Due to the linearity of quantum mechanics, the steady state probabilities of the identical quantum states are added together to find the total probability for the state, much the same way as the underlying state probabilities are added together when merging states to form the classical causal states. Thus, when constructing the quantum ‘causal’ states, we are at liberty to ignore the classical causal architecture as described in Methods A, without any penalty to the information that is stored by the q-machine, and instead construct quantum states for all t ≥ 0 according to the prescription of Eq. (6). Note that the causal architecture can still be used as a calculational aid.
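To make the non-orthogonality concrete, the following sketch computes the inner product of two discretised quantum causal states, taking the amplitude of bin n in the state for elapsed time k to be the square root of phi_bins[k + n] over the survival probability. The resolution `N_BINS`, the uniform test density, and the function names are assumptions made for this example.

```python
import math


def causal_state_amplitudes(phi_bins, k):
    """Amplitudes of the discretised quantum causal state after k elapsed bins."""
    survival = sum(phi_bins[k:])
    return [math.sqrt(p / survival) for p in phi_bins[k:]]


def overlap(phi_bins, a, b):
    """Inner product of the discretised quantum causal states for a and b."""
    amp_a = causal_state_amplitudes(phi_bins, a)
    amp_b = causal_state_amplitudes(phi_bins, b)
    # zip stops at the shorter state; the longer one has no partner beyond that.
    return sum(x * y for x, y in zip(amp_a, amp_b))


N_BINS = 100  # hypothetical resolution
uniform_bins = [1.0 / N_BINS] * N_BINS
print(overlap(uniform_bins, 0, 50))  # for the uniform case this is sqrt(1/2)
```

States with very different elapsed times can therefore still overlap substantially, whereas the corresponding classical causal states are perfectly distinguishable.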

Memory of continuous-time q-machines

From Eq. (7) we see that in general the overlaps of the quantum causal states are non-zero, unlike the corresponding classical states, which are orthogonal. Because of this reduced distinguishability of the quantum causal states, the entropy of their steady state distribution is less than that of the classical causal states, and hence the amount of information that must be stored by the q-machine to accurately predict future statistics is less than that of the optimal classical machine, evincing a quantum advantage for the simulation of continuous-time stochastic processes. We will later show with our examples that this advantage can be unbounded, wherein q-machines have only a finite memory requirement for the simulation of processes for which the ε-machine requires an infinite amount of information about the past. Note that even when we consider coarse-graining the time since the last emission to a resolution of finite intervals δt we shall still see a quantum advantage due to the non-orthogonality of the quantum states. Note also that decoherence of the memory into the measurement basis destroys the quantum advantage, and will result in the classical internal memory cost C μ (see Methods C).

The density matrix describing the internal state of the q-machine is given by \(\rho = {\int}_0^\infty {\kern 1pt} \mathrm{d}t\,\pi \left( {S_t} \right)\left| {S_t} \right\rangle \left\langle {S_t} \right|.\) As discussed above, we can construct the quantum states \(\left| {S_t} \right\rangle\) for all \(t\), in which case their steady state probability density \(\pi (S_t)\) is given by \(\mu {\mathrm{\Phi }}(t)\). We thus find that the elements of the density matrix are given by \(\rho (a,b) = \mu {\int}_0^\infty {\kern 1pt} \mathrm{d}t\,\psi (t + a)\psi (t + b)\). From this, we can construct a characteristic equation to find the eigenvalues \(\lambda _n\) that diagonalise the density matrix:

\[\mu {\int}_0^\infty {\kern 1pt} \mathrm{d}b{\int}_0^\infty {\kern 1pt} \mathrm{d}t\,\psi (t + a)\psi (t + b)f_n(b) = \lambda _nf_n(a),\quad (8)\]

where \(f_n\) denotes the eigenfunction associated with \(\lambda _n\).

The information stored by the q-machine can then be expressed in terms of these eigenvalues; \(C_q = - \mathop {\sum}\nolimits_n {\kern 1pt} \lambda _n{\kern 1pt} {\mathrm{log}}_2\lambda _n\). We find that this quantity is invariant under rescaling of the time variable in the emission probability density (see Methods D for details).
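A discretised version of this calculation can be sketched as follows, assuming NumPy is available. The density matrix is assembled from discretised quantum causal states weighted by a steady state distribution proportional to the survival probability, and the entropy is taken over its eigenvalues. The function name `quantum_memory`, the 256-bin resolution, and the uniform test density are illustrative assumptions rather than the procedure used for the figures.

```python
import numpy as np


def quantum_memory(phi_bins):
    """Von Neumann entropy (bits) of the steady state of discretised causal states."""
    phi_bins = np.asarray(phi_bins, dtype=float)
    n = len(phi_bins)
    survival = np.cumsum(phi_bins[::-1])[::-1]  # Phi_k = sum of phi_bins[k:]
    pi = survival / survival.sum()              # steady state weight of each state
    rho = np.zeros((n, n))
    for k in range(n):
        if survival[k] <= 0.0:
            continue  # state is never occupied
        amp = np.zeros(n)
        amp[: n - k] = np.sqrt(phi_bins[k:] / survival[k])
        rho += pi[k] * np.outer(amp, amp)
    eigenvalues = np.linalg.eigvalsh(rho)
    eigenvalues = eigenvalues[eigenvalues > 1e-15]  # discard numerical zeros
    return float(-np.sum(eigenvalues * np.log2(eigenvalues)))


n_bins = 256  # hypothetical resolution
c_q = quantum_memory(np.full(n_bins, 1.0 / n_bins))
print(c_q)
```

Because only the bin probabilities enter, rescaling the timestep leaves the result unchanged, in line with the timescale invariance noted above.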

Building q-machine simulators of renewal processes

While we have explained in the abstract sense how one constructs the quantum causal states, it is interesting to also consider the structure of a device that would actually perform such simulations. In fact, a digital simulation of the process, that simply emits a sequence \(t_{0^ - :L}\) on demand drawn from the correct probability distribution \(P\left( {T_{0^ - :L} = t_{0^ - :L}|T_{0^ + } = t_{0^ + }} \right)\) would be very straightforward to assemble in principle: one must prepare the state \(\left| {S_{t_{0^ + }}} \right\rangle\), and L − 1 copies of \(\left| {S_0} \right\rangle\) (the states are all independent due to the renewal process emissions being IID). Measurement of the first state provides the \(t_{0^ - }\), while measurement of the others provides the t1:L. Because of the self-updating nature of the quantum causal states under partial measurement sweeps \([0,\tilde t)\), measurement over such a range can be used to simulate the effect of waiting for a time \(\tilde t\) for an emission.

However, this scheme is unsatisfactory as one must manually switch to a new state after each emission. Rather, a device that automatically begins operating on the state for the next emission after the previous state is finished would be preferable. We now describe such a construction, and even go a step further, by devising a setup that enables an analogue simulation of the process, and is thus able to provide emission times in (scaled) real time. For illustrative purposes, we first describe the protocol for discrete timesteps (that may be coarse-grained arbitrarily finely), and then discuss how it can be performed in continuous-time.

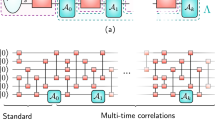

The procedure for the discrete-time case is as follows. Consider an infinite chain of qubits (two state quantum systems) labelled from 0 to ∞. Using \(\left| {1_n} \right\rangle\) to denote the state where all qubits are in state \(\left| 0 \right\rangle\) apart from the nth, which is in state \(\left| 1 \right\rangle\), we can express the discretised analogues \(\left| {\sigma _t} \right\rangle\) of the quantum causal states \(\left| {S_t} \right\rangle\) as \(\left| {\sigma _t} \right\rangle = \mathop {\sum}\nolimits_n \sqrt {P\left( {T_{0^ - } = n\mathrm{\delta} t|T_{0^ + } = t} \right)\mathrm{\delta} t} \left| {1_n} \right\rangle\), where \(P\left( {T_{0^ - } = n\mathrm{\delta} t|T_{0^ + } = t} \right) \to \phi (t + n\mathrm{\delta} t){\mathrm{/\Phi }}(t)\) as δt → 0. The location n of the qubit in state \(\left| 1 \right\rangle\) then represents the time nδt at which the emission occurs. We initialise the system in state \(\left| {\sigma _{t_{0^ + }}} \right\rangle\), according to the desired initial \(t_{0^ + }\). The chain is then processed sequentially, one qubit at a time, by performing a control gate on the qubit, which has the effect of mapping the next block of the chain to the state \(\left| {\sigma _0} \right\rangle\) if the qubit is in state \(\left| 1 \right\rangle\), and doing nothing otherwise (explicitly, the mapping required is \(\left| 0 \right\rangle \left| {1_n} \right\rangle \to \left| 0 \right\rangle \left| {1_n} \right\rangle\) \(\forall n \in {\Bbb Z}^ +\) and \(\left| 1 \right\rangle \left| 0 \right\rangle ^{ \otimes \infty } \to \left| 1 \right\rangle \left| {\sigma _0} \right\rangle\), where by construction these are the only possible input states). The qubit is then ejected from the machine (where measurement can be used to determine whether an emission event occurs at this time), and the machine then acts on the next qubit in the chain (Fig. 3a). 
This operation has the effect of preparing the chain in a state that provides the correct conditional probabilities if no emission is observed, and prepares the state with the correct distribution for the next emission step if an emission is observed.
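The measurement statistics of this sweep can be emulated classically, which is useful for checking what the protocol should output, although it reproduces none of the memory advantage. In the sketch below each qubit is found in the excited state with the conditional probability phi_bins[k] / sum(phi_bins[k:]), where k counts timesteps since the last emission, and a detection resets k in the same way that the controlled gate prepares the next block. The function names, seed, and test distribution are assumptions for this example.

```python
import random


def sweep_chain(phi_bins, n_qubits, elapsed=0, seed=0):
    """Classically sample the qubit-by-qubit measurement record of the chain."""
    rng = random.Random(seed)
    record = []
    k = elapsed  # timesteps since the last emission
    for _ in range(n_qubits):
        survival = sum(phi_bins[k:])
        emitted = rng.random() < phi_bins[k] / survival
        record.append(1 if emitted else 0)
        k = 0 if emitted else k + 1  # a detection restarts the dwell
    return record


def dwell_times(record):
    """Number of 0 outcomes preceding each 1 outcome in the record."""
    dwells, run = [], 0
    for bit in record:
        if bit:
            dwells.append(run)
            run = 0
        else:
            run += 1
    return dwells


phi_bins = [0.1] * 10  # hypothetical uniform dwell distribution over 10 timesteps
dwells = dwell_times(sweep_chain(phi_bins, n_qubits=200000))
print(sum(dwells) / len(dwells))  # sample mean dwell, in timesteps
```

The sampled dwell times should follow `phi_bins`, since the per-step conditional probabilities multiply out to the original distribution.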

q-machine simulators of renewal processes. a Analogue simulator for a discrete-time renewal process, where a continuous chain of qubits is used to encode the quantum causal state. The simulator sweeps along the chain and alters the future of the chain conditional on the current qubit, with the mappings \(\left| 0 \right\rangle \left| {1_n} \right\rangle \to \left| 0 \right\rangle \left| {1_n} \right\rangle\) and \(\left| 1 \right\rangle \left| 0 \right\rangle ^{ \otimes \infty } \to \left| 1 \right\rangle \left| {\sigma _0} \right\rangle\). Measurement of the qubit state signifies whether an emission occurs in a given timestep. b Analogue simulator for continuous-time renewal processes, where the quantum causal state is encoded into the position of a particle. The simulator sweeps along this position and generates additional particles encoding future emissions conditional on the presence of the particle. Detection of the particle signals an emission event

To operate this protocol in continuous-time, instead of encoding the state onto a discrete chain, we use a continuous degree of freedom, such as spatial position (henceforth referred to as the ‘tape’). As with the discrete case, we process sequentially along the tape, performing a unitary gate on the future of the tape, controlled on the current segment. Each emission step has its emission time encoded by the position of a particle on the tape (Fig. 3b); the first particle on the tape is initialised in \(\left| {S_t} \right\rangle = \left( {1{\mathrm{/}}\sqrt {{\mathrm{\Phi }}(t)} } \right){\int}_0^\infty {\kern 1pt} \mathrm{d}x\,\psi (t + x)\left| x \right\rangle\), where \(x\) labels the position on the tape. Since the controlled unitary operation must be performed in discrete time, on a discrete length of tape, it is designed such that it acts, controlled on the presence of a particle in the block, by placing a particle in state \(\left| {S_0} \right\rangle\), displaced to have its zero at the location of the control particle, and does nothing otherwise, akin to the discrete case above (that is, if the present particle is at position \(x\), the combined state of the old and new particle is mapped to \(\left| x \right\rangle \left| {S_{ - x}} \right\rangle\), where we clarify that \(\psi (t) = 0\) if \(t\) is negative). More formally, this can be written as the transformation \({\int}_0^\infty {\kern 1pt} \mathrm{d}t{\int}_L {\kern 1pt} \mathrm{d}x\,\psi (t)a_{x + t}^\dagger a_x^\dagger a_x\), where \(L\) is the block of tape upon which the gate acts, and \(a_x^\dagger\) creates a particle at \(x\); the new particle thus appears at position \(x + t\) with amplitude \(\psi (t)\), consistent with the displaced state \(\left| {S_{ - x}} \right\rangle\). Strictly, the gate should act in a nested fashion, by further generating an additional particle in an appropriately displaced state, when the new particle is placed within the current block. The machine then progresses to perform the same operation contiguously on the next block, while feeding out the previous block (equivalently, the tape can be fed through a static machine). 
Measurement of the positions of particles on the tape fed out then provides the simulated emission times.

Examples

We illustrate our proposal with two examples. We show for both these examples that not only is there a reduction in the memory requirement of the q-machine compared to the ε-machine, but also that the q-machine needs only a finite amount of memory, while the classical has infinite memory usage. Here we summarise the results, and the technical details may be found in Methods E and F.

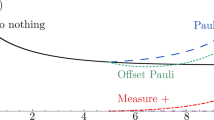

The first example is a uniform emission probability over the interval \([0, \tau)\). The corresponding emission probability density is \(\phi (t) = 1/\tau\) for \(0 \le t < \tau\), and zero elsewhere (Fig. 4a). The corresponding mean firing rate and survival probability are given by \(\mu = 2/\tau\) and \({\mathrm{\Phi }}(t) = 1 - t/\tau\) (for \(t < \tau\)) respectively. The corresponding quantum causal states are given by \(\left| {S_t} \right\rangle = {\int}_0^{\tau - t} {\kern 1pt} \mathrm{d}t^{\prime}\left( {1{\mathrm{/}}\sqrt {\tau - t} } \right)\left| {t^{\prime}} \right\rangle\), and we can solve Eq. (8) to find that \(\lambda _n = 8/(\pi (2n - 1))^2\) for \(n \in {\Bbb Z}^ +\). We can use an integral test (see Methods E) to show that \(C_q = - \mathop {\sum}\nolimits_{n = 1}^\infty {\kern 1pt} \lambda _n{\kern 1pt} {\mathrm{log}}_2\lambda _n\) is bounded, and moreover, that \(C_q \approx 1.2809\). In Fig. 4b we show how the memory required by the q-machine tends towards this value as we use an increasingly fine coarse-graining of the discretised analogue of the process to approach the continuous limit, while the memory needed by the optimal classical machine diverges logarithmically. The memory requirement exceeds the lower bound set by the excess entropy \(E = {\mathrm{log}}_2{\kern 1pt} e - 1 \approx 0.4427\).
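The quoted value can be reproduced approximately by summing the series directly. The sketch below uses the eigenvalues 8 / (π(2n − 1))² stated above and truncates the sum after `N_TERMS` terms; the truncation point and function names are arbitrary choices for this example, and the slowly decaying tail means the partial sum sits very slightly below the limit.

```python
import math


def uniform_eigenvalue(n):
    """Eigenvalue 8 / (pi * (2n - 1))^2 quoted for the uniform process."""
    return 8.0 / (math.pi * (2 * n - 1)) ** 2


def truncated_quantum_memory(n_terms):
    """Partial sum of -lambda_n * log2(lambda_n) over n = 1..n_terms."""
    total = 0.0
    for n in range(1, n_terms + 1):
        lam = uniform_eigenvalue(n)
        total -= lam * math.log2(lam)
    return total


N_TERMS = 200000  # hypothetical truncation point
norm = sum(uniform_eigenvalue(n) for n in range(1, N_TERMS + 1))
c_q = truncated_quantum_memory(N_TERMS)
print(norm, c_q)  # norm should approach 1, c_q should approach about 1.2809
```

The eigenvalues summing to one confirms that they form a valid spectrum for a density matrix.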

Uniform emission probability. a The corresponding emission probability density for a process with uniform emission probability in an interval [0,τ). b The classical memory C μ required to simulate the process diverges logarithmically as the discretisation becomes finer (N states), while the quantum memory C q converges on a finite value

For our second example, we consider a delayed Poisson process (\(\phi(t) = (1/\tau_L)\exp(-(t - \tau_R)/\tau_L)\) for \(t > \tau_R\) and 0 elsewhere), representing a process that exhibits an exponential decay with lifetime \(\tau_L\), and a rest period \(\tau_R\) between emissions (Fig. 5a), forming, for example, a very crude model of a neuron firing. For this emission distribution we find that \(\mu = (\tau_L + \tau_R)^{-1}\), and \(\Phi(t) = 1\) for \(t \le \tau_R\) and \(\exp(-(t - \tau_R)/\tau_L)\) for \(t > \tau_R\). We can then show somewhat indirectly (see Methods F) that the corresponding quantum memory requirement is bounded for finite \(\tau_R/\tau_L\) (and vanishes as this ratio tends to zero), while in contrast \(C_\mu\) is infinite whenever this is non-zero. Further, due to the timescale invariance of the quantum memory, \(C_q\) depends only on this ratio, and not the individual values of \(\tau_R\) and \(\tau_L\). Varying this ratio allows us to sweep between a simple Poisson process with lifetime \(\tau_L\), and a periodic process where the system is guaranteed to emit within an arbitrarily small interval at time \(\tau_R\) after the last emission. The quantum memory \(C_q\) correspondingly increases with this ratio as we interpolate between the two limits (Fig. 5b), with the pure Poisson process being memoryless, and a periodic process requiring increasing memory with the sharpness of the peak. We also plot the excess entropy, given by \(E = \log_2(\tau_R/\tau_L + 1) - \log_2\mathrm{e}/(\tau_L/\tau_R + 1)\), which exhibits similar qualitative behaviour.

Delayed Poisson process. a The corresponding emission probability density for a delayed Poisson process with rest period \(\tau_R\) and lifetime \(\tau_L\). b Varying the ratio \(\tau_R/\tau_L\) sweeps between a (memoryless) Poisson process and a periodic emission process, with a corresponding increase in the quantum memory \(C_q\) required and excess entropy E as the distribution becomes sharper. Here, \(C_q\) is calculated approximately from a very fine (\(2^{14} + 1\) states) discretisation

Discussion

We have shown that quantum devices can simulate models of continuous-time renewal processes with lower internal memory requirement than their corresponding optimal classical counterparts. Our examples evidence that this advantage can be arbitrarily large, compressing the need for an infinite classical memory into a finite quantum memory. Further, while we currently lack a proof, we suspect that this unbounded compression is a typical property of quantum machines. Our argument for this is as follows: consider a discretised analogue of the process, with timesteps δt. When refining the discretisation to timesteps δt/2, we introduce an additional causal state for each one already existing. Classically, all these states are orthogonal, and the classical memory requirement increases, leading to the logarithmic divergence of C μ as the timestep vanishes in the continuous limit. Contrarily, the overlap of adjacent quantum causal states \(\left| {S(t)} \right\rangle\) and \(\left| {S(t + \delta t)} \right\rangle\) is typically very large for small δt, and hence the additional interpolated states are very similar to the existing states. This overlap tends to one as the timestep vanishes, and thus as we tend to the continuum limit, the additional quantum causal states are essentially identical to those already considered, and hence the entropy increase with refinement should vanish. Thus we expect that, with the exception of pathological cases (such as when the distribution ϕ(t) involves arbitrarily sharp peaks), the quantum memory requirement will be finite.

It is prudent of course, to remark on the experimental feasibility of our proposal. Recent works have succeeded in realising quantum machines for discrete-time processes, and demonstrating their advantage over classical devices.25 Much effort has been spent on developing state-engineering protocols.37,38,39,40,41 Ultracold atoms in optical lattices42 may provide a route to realise discrete-time simulation, and of particular promise with regards to our proposal for simulating continuous-time processes, photon pulses have been shaped over distances of several hundred metres.43 This would allow for the digital simulation we propose, with the requirement of an additional control element needed to achieve the ‘real-time’ analogue simulation. The on-going development of quantum technologies in a panoply of different systems holds much promise for the future implementation of our work.

Future theoretical work in this area can progress in many different directions, including the characterisation of other information-theoretic quantities44,45 for the continuous-time quantum machines, the application to study real-world stochastic systems, and the extension of the protocol to design quantum simulators for models of more general continuous-time processes. Further, while a quantum advantage over classical simulators has been demonstrated, the general optimal construction of quantum machines is unknown, and a subject for future investigation.

Methods

A: Causal architecture for renewal processes

For the purpose of determining their causal architecture, renewal processes can be classified as one of four types.27 Here we review this classification, and describe the corresponding structure of their causal states.

The first class of processes are termed eventually Δ-Poisson. These are processes which exhibit a periodic structure under a Poissonian envelope at long times. Specifically, an eventually Δ-Poisson process is described by an emission probability of the form ϕ(t) = ϕ(τ + (t − τ)modΔ) exp(−(t − τ)/τ L ) for times t ≥ τ, for some τ, τ L , Δ ≥ 0.

The second class of process we consider, eventually Poisson, are a special case of the above, for which Δ = 0. In these processes, there is no structure at long times t > τ beyond the Poissonian decay. That is, specifically, for t > τ we have ϕ(t) = ϕ(τ) exp(−(t − τ)/τ L ).

A yet further constrained form defines our third class, the familiar Poisson process, with Δ = 0 and τ = 0. For these processes, \(\phi(t) = \exp(-t/\tau_L)/\tau_L\). We find that the conditional emission probability density of this class is time-independent.

Finally, the fourth class, not eventually Δ-Poisson, encompasses all other processes not of the above forms.

We now present the causal architecture for each of the above process classes.27 We present this architecture in a different order to that in which we presented the processes, in order to introduce their different features in order of increasing intricacy.

Recall that two times since last emission \(t_{0^ + }\) and \(t_{0^ + }^\prime\) belong to the same causal state iff they have identical conditional probability distributions \(P\left( {T_{0^ - }|T_{0^ + }} \right)\) for the time to the next emission \(t_{0^ - }\). As a result of this, and because for a Poisson process the conditional emission probability density is time independent, all \(t_{0^ + }\) belong to a single causal state (Fig. 6a) for such processes.

Causal architecture of renewal processes. The causal architecture depends on the class of the renewal process, with a a single causal state for Poisson processes, b an infinite continuum of causal states for not eventually Δ-Poisson processes, and a hybrid of the two for c eventually Poisson and d eventually Δ-Poisson processes

In stark contrast to this simplicity found for Poisson processes, it has been shown that for not eventually Δ-Poisson processes no two different \(t_{0^ + }\) belong to the same causal state.27 Instead we have an infinite continuum of causal states, which the system traverses along between emissions, with all such continuum states returning to the same initial state immediately after an emission event (see Fig. 6b).

The remaining two classes of process are hybrids of the above structures. The eventually Poisson process begins with a continuum of distinct states for times \(t_{0^ + } < \tau\), after which it terminates in a single state upon reaching the Poissonian stage (Fig. 6c), as the conditional emission probability density becomes time-independent for \(t_{0^ + } \ge \tau\).

Finally, the eventually Δ-Poisson process also begins with a continuum of distinct states for \(t_{0^ + } < \tau\), after which the system enters a periodic loop of continuum states of length Δ, mirroring the periodicity of the conditional emission probability density for such processes at times \(t_{0^ + } \ge \tau\) (see Fig. 6d). At these long times, we do not need to track exactly how long it has been since the last emission, but merely how far into the current period we are.
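The four architectures can be summarised as a map from the time since the last emission to a label for the causal state it belongs to. The sketch below is illustrative only: the function name, the class strings and the use of a real-valued label are assumptions made here, not notation from the classification itself.

```python
import math

def causal_state_label(t, process_class, tau=0.0, delta=0.0):
    """Return a label for the causal state containing time-since-emission t.

    Two times share a causal state exactly when they receive the same label.
    """
    if process_class == "poisson":
        # A single causal state for all t.
        return 0.0
    if process_class == "not_eventually_delta_poisson":
        # Every t is its own causal state.
        return t
    if process_class == "eventually_poisson":
        # Continuum for t < tau, then one terminal state.
        return min(t, tau)
    if process_class == "eventually_delta_poisson":
        # Continuum for t < tau, then a periodic loop of length delta.
        return t if t < tau else tau + math.fmod(t - tau, delta)
    raise ValueError(f"unknown process class: {process_class}")
```

For example, with τ = 1 and Δ = 0.5 an eventually Δ-Poisson process places t = 1.25 and t = 1.75 in the same causal state, since both are a quarter of the way into a period.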

B: Statistical complexity of renewal processes

We now derive expressions for the statistical complexity of the different classes of renewal process. We do so by considering a discretised analogue16 of the continuum causal states, and take the limit of infinitesimal time intervals.

Starting with the case of a Poisson process, due to the single causal state we need not track any information about the time since the last emission; we thus have C μ = 0 and hence the process is memoryless.

We next jump to the case of a not eventually Δ-Poisson process, where we have the perpetual continuum of states. Considering the discrete analogues σ t of the causal states S t , we have states at each t = nδt for \(n \in {\Bbb N}\), where δt is our discretised time interval. Consider now when the system is in causal state σ nδt . In the next time interval δt the system will either make an emission, or progress along the continuum to σ(n+1)δt. This latter event occurs with probability Φ((n + 1)δt)/Φ(nδt) (that is, the conditional probability that the system does not emit before (n + 1)δt given that it did not emit before nδt), and we can consider the system to fictitiously emit a \({\emptyset}\) (null) symbol, representing the lack of a real emission. The alternative outcome is that the system actually emits a real symbol and returns to σ0, which occurs with probability 1 − Φ((n + 1)δt)/Φ(nδt). This is illustrated in Fig. 7. Note that for a Poisson process, σ0 is the lone causal state, and the discretised analogue consists of this single state with both transitions leading back to the same state, with the probabilities as given in the general case.

Discretised analogue of a renewal process. We can construct a discretised analogue of a renewal process, where at each time step δt the system can either emit a symbol 0 and return to the initial causal state, or emit nothing (signified by a null symbol ∅) and proceed to the next state in the chain. The continuous-time scenario follows as the δt → 0 limit

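We can construct a transition matrix T that describes the evolution of the system for each timestep. This matrix evolves a state ω according to ω(t + δt) = Tω(t). The transition matrix for a not eventually Δ-Poisson renewal process is given by

\[T_{mn} = \begin{cases} 1 - \Phi((n + 1)\delta t)/\Phi(n\delta t), & m = 0 \\ \Phi((n + 1)\delta t)/\Phi(n\delta t), & m = n + 1 \\ 0, & \text{otherwise,} \end{cases}\]

where \(T_{mn}\) is the probability of moving from \(\sigma_{n\delta t}\) to \(\sigma_{m\delta t}\) in one timestep.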

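The steady state π is defined according to π = Tπ, and thus for n ≥ 1 we have \(\pi(\sigma_{n\delta t}) = (\Phi(n\delta t)/\Phi((n - 1)\delta t))\pi(\sigma_{(n - 1)\delta t})\), and hence iteratively we find \(\pi(\sigma_{n\delta t}) = \Phi(n\delta t)\pi(\sigma_0)\). By integrating over Φ(t) to find the appropriate normalisation, we have that as δt → 0, \(\pi(\sigma_0) \to \mu\delta t\), and hence \(\pi(\sigma_t) = \mu\Phi(t)\delta t\). Inserting this into the Shannon entropy Eq. (4), we have that the statistical complexity is given by

\[C_\mu = -\lim_{\delta t \to 0}\sum_{n = 0}^{\infty} \mu\Phi(n\delta t)\delta t\,\log_2\left(\mu\Phi(n\delta t)\delta t\right),\]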

which clearly diverges logarithmically as the argument of the logarithm vanishes (the number of terms in the sum grows linearly, while the prefactor to the logarithm decays linearly, thus effectively negating each other), and hence whenever such a continuum of causal states occurs (i.e., any renewal process that is not Poisson), the classical memory requirement is infinite. As noted in the main text, the self-assembly of the quantum causal states means that we can effectively treat any renewal process as not eventually Δ-Poisson in the quantum regime, and hence this steady state distribution is sufficient for our purposes. However, we will provide expressions for the steady state distributions and complexity of the eventually Poisson and eventually Δ-Poisson processes for completeness.
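The discretised chain and its logarithmic divergence can be illustrated numerically. The following sketch builds the transition matrix for the uniform emission example, checks that the steady state is proportional to the survival probability, and shows that halving the timestep adds roughly one bit of classical memory. The function names and the choice of the uniform process are assumptions made for illustration.

```python
import numpy as np

def uniform_survival(num_states):
    """Phi(n dt) = 1 - n/N on the grid n = 0..N-1 (uniform example, dt = tau/N)."""
    return 1.0 - np.arange(num_states) / num_states

def transition_matrix(survival):
    """Column-stochastic T: T[n+1, n] = Phi((n+1)dt)/Phi(n dt), T[0, n] = remainder."""
    num_states = len(survival)
    T = np.zeros((num_states, num_states))
    for n in range(num_states):
        # Survival beyond the last grid point is taken as zero (exact for the uniform case).
        next_survival = survival[n + 1] if n + 1 < num_states else 0.0
        advance = next_survival / survival[n]
        if n + 1 < num_states:
            T[n + 1, n] = advance   # null symbol: move along the chain
        T[0, n] += 1.0 - advance    # real emission: reset to sigma_0
    return T

def steady_state(survival):
    """Steady state proportional to Phi(n dt), normalised to unit total probability."""
    pi = np.asarray(survival, dtype=float)
    return pi / pi.sum()

def classical_memory(num_states):
    """Shannon entropy (in bits) of the steady state for the uniform example."""
    pi = steady_state(uniform_survival(num_states))
    return float(-np.sum(pi * np.log2(pi)))

if __name__ == "__main__":
    for num_states in (2**6, 2**8, 2**10, 2**12):
        print(num_states, classical_memory(num_states))
```

Each fourfold refinement in the printed table raises the entropy by close to two bits, which is the logarithmic growth described above.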

For eventually Poisson processes, the continuum has a finite length, after which the system resides in the final causal state until emission. The probability density for the continuum states is as for the above case, and the probability of occupation of the final state can be determined by considering the average time spent in this state, given by the lifetime of the state \(\tau_L\). Thus, the steady state occupation of this final state is \(\pi(\sigma(\tau)) = \mu\Phi(\tau)\tau_L\), and the corresponding statistical complexity of the process is

\[C_\mu = -\lim_{\delta t \to 0}\sum_{n = 0}^{N - 1} \mu\Phi(n\delta t)\delta t\,\log_2\left(\mu\Phi(n\delta t)\delta t\right) - \mu\Phi(\tau)\tau_L\log_2\left(\mu\Phi(\tau)\tau_L\right),\]

where N = τ/δt.

Finally, for eventually Δ-Poisson processes, we have two segments of continuum per emission; the initial line where each state is occupied at most once, and the periodic continuum where each state can be traversed multiple times per emission. There is a probability Φ(τ) that the system reaches this periodic part on a given emission, and a probability \(\Phi(\tau)\exp(-m\Delta/\tau_L)\) that it makes it through m circuits of this periodic component. Thus, the occupation probability in the steady state of the periodic continuum state \(\sigma_t\) (τ ≤ t < τ + Δ) is \(\pi(\sigma_t) = \Phi(t)\sum_{m = 0}^\infty \exp(-m\Delta/\tau_L) = \Phi(t)/(1 - \exp(-\Delta/\tau_L))\). This gives a statistical complexity of

where N1 = τ/δt and N2 = Δ/δt.

C: Memory of decohered model

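In the main text we claim that decoherence of the quantum causal states destroys the quantum advantage, and results in a memory cost \(C_\mu\). We now prove this by showing that the probability distribution of the decohered states is identical to that of the steady states of the classical model, and hence has the same entropy. Consider the probability of the decohered state \(S_D\) being t:

\[P(S_D = t) = \int_0^\infty \mathrm{d}t^{\prime}\,\mu\Phi(t^{\prime})\left|\left\langle t|S_{t^{\prime}}\right\rangle\right|^2 = \mu\int_0^\infty \mathrm{d}t^{\prime}\,\left|\psi(t + t^{\prime})\right|^2 = \mu\Phi(t),\]

where the final step uses the normalisation of the quantum causal states. This coincides with the steady-state distribution of the classical causal states.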

Note that the resultant model using these decohered states, while not providing any memory savings, does contain oracular information and so would not be a predictive model.

D: Timescale invariance of quantum memory

Here we show that the memory requirement of q-machines is invariant under rescaling of the time variable in the emission probability density. Consider such a rescaling t → αt = z. The wavefunction scales \(\psi (t) \to \sqrt \alpha \psi (z)\) (the factor in front due to normalisation), and the mean firing rate changes μ → αμ. Putting this into the characteristic equation (8), and using the substitution dt = dz/α, we have

where we have defined the function \(f_n^{[\alpha ]}(t) = f_n(t{\mathrm{/}}\alpha )\). Thus, after the rescaling, the characteristic equation is of the same form, solved by the same eigenvalues λ n and the rescaled (and renormalised) eigenfunctions \(f_n^{[\alpha ]}\). As the memory stored depends only on the λ n , it is hence unchanged: C q is timescale invariant.

E: Technical details for uniform emission probability example

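Here we provide details of the derivation of the boundedness of the quantum memory for the uniform emission probability case, along with the other associated quantities. Starting with the definition of the process

\[\phi(t) = \begin{cases} 1/\tau, & 0 \le t < \tau \\ 0, & \text{otherwise,} \end{cases}\]

we can straightforwardly obtain that \(\mu ^{ - 1} = {\int}_0^\tau (t{\mathrm{/}}\tau )\mathrm{d}t = \tau {\mathrm{/}}2\), and

\[\Phi(t) = \begin{cases} 1 - t/\tau, & 0 \le t < \tau \\ 0, & t \ge \tau. \end{cases}\]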

As the time between emissions is guaranteed to be less than τ, we can consider quantum causal states only within the interval [0, τ).

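The form of the quantum causal states \(\left| {S_t} \right\rangle = {\int}_0^{\tau - t} {\kern 1pt} \mathrm{d}t^{\prime}(1{\mathrm{/}}\sqrt {\tau - t} )\left| {t^{\prime}} \right\rangle\) follows directly from the definition Eq. (6), and we can construct the appropriate characteristic equation for the process:

\[\frac{2}{\tau^2}\int_0^\tau \mathrm{d}t^{\prime}\,\left(\tau - \max(t, t^{\prime})\right)f_n(t^{\prime}) = \lambda_n f_n(t).\]

Taking the second derivative of both sides of this equation, we find that

\[\frac{\mathrm{d}^2 f_n(t)}{\mathrm{d}t^2} = -\frac{2}{\lambda_n\tau^2}f_n(t),\]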

and hence the eigenfunctions are of the form \(f_n(t) = A\exp(ik_nt) + B\exp(-ik_nt)\), with \(k_n^2 = 2{\mathrm{/}}\left( {\lambda _n\tau ^2} \right)\). We must now determine the values of \(k_n\) that are valid, by substituting this solution into the original integral equation. Doing so, we find that for consistency, the following conditions must be satisfied: A = B; and \(\cos(k_n\tau) = 0\). These are satisfied by \(k_n\tau = (n - 1/2)\pi\) for \(n \in {\Bbb Z}^ +\) (zero and negative integer values of n produce solutions with the same eigenfunctions, by symmetry). Thus, we have that

\[\lambda_n = \frac{8}{\pi^2(2n - 1)^2}.\]

We now wish to find the Shannon entropy of the \(\lambda_n\). We first show that this entropy is bounded by using an integral test46 for convergence, and then use this to provide a bounded range for \(C_q\). Define the function \(\zeta (n) = - \lambda (n){\kern 1pt} {\mathrm{log}}_2\lambda (n)\), where \(\lambda(n) = 2/((n - 1/2)^2\pi^2)\), the interpolated continuous analogue of the eigenvalues \(\lambda_n\). The sum of ζ(n) over positive integers n gives the quantum memory cost, and we note that all such values of ζ(n) are finite. Further, we note that with the exception of n = 1, they satisfy ζ(n) > ζ(n + 1) at these integer values, and hence the function is monotonically decreasing for n > 2 (specifically, the continuous function is decreasing for n > 1/2 + exp(ln 2/2 + 2/π)/π). Since the integral test requires the terms to be monotonically decreasing, we can sum up the terms to some finite N > 1, and then show the remainder of the terms converge.

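Define the integral \(I(N) = {\int}_N^\infty {\kern 1pt} \zeta (x)\mathrm{d}x\). We find that

\[I(N) = \frac{2}{\pi^2(N - 1/2)}\left[2\log_2 e + \log_2\left(\frac{\pi^2(N - 1/2)^2}{2}\right)\right],\]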

which is finite for all integer N > 1, and hence the sum converges, implying a finite value for \(C_q\). We can also use this to bound the value of the sum from N to ∞ as being between I(N) and I(N) + ζ(N). For N = 2, this allows us to bound \(1.1046 \lesssim C_q \lesssim 1.4174\). By calculating additional terms in the sum prior to taking the bound, we can tighten this further; for \(N = 10^6\), we find that \(C_q \approx 1.2809\), with the additional neglected terms contributing \({\cal O}(10^{ - 5})\) as a correction.
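The bounds from the integral test can be reproduced in a few lines. This is a sketch under the assumption that the tail integral evaluates to I(N) = (2/(π²(N − 1/2)))[2 log₂e + log₂(π²(N − 1/2)²/2)], which follows from integrating ζ(x) by parts; the function names are illustrative.

```python
import math

def zeta(n):
    """zeta(n) = -lambda(n) log2(lambda(n)) with lambda(n) = 2 / ((n - 1/2)^2 pi^2)."""
    lam = 2.0 / ((n - 0.5) ** 2 * math.pi ** 2)
    return -lam * math.log2(lam)

def tail_integral(N):
    """Closed form of the integral of zeta(x) from N to infinity (assumed form, see lead-in)."""
    a = N - 0.5
    c = 2.0 / math.pi ** 2
    return c / (a * math.log(2.0)) * (2.0 + 2.0 * math.log(a) - math.log(c))

def memory_bounds(N):
    """Lower and upper bounds on C_q: explicit terms below N plus the bounded tail."""
    head = sum(zeta(n) for n in range(1, N))
    lower = head + tail_integral(N)
    return lower, lower + zeta(N)

if __name__ == "__main__":
    for N in (2, 10, 1000):
        print(N, memory_bounds(N))
```

With N = 2 the two bounds agree with the range quoted above to four decimal places, and increasing N narrows the window around the quoted value of the quantum memory.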

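As the excess entropy is a property of the process, rather than the simulator, we can use the same formula as for classical models.27 This reads

\[E = \int_0^\infty \mu t\phi(t)\log_2\left(\mu\phi(t)\right)\mathrm{d}t - 2\int_0^\infty \mu\Phi(t)\log_2\left(\mu\Phi(t)\right)\mathrm{d}t. \tag{20}\]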

Putting the appropriate expressions into this equation, we find that for the uniform emission probability renewal process that the excess entropy is given by \(E = {\mathrm{log}}_2{\kern 1pt} e - 1 \approx 0.4427\), which as expected is less than C q .

F: Technical details for delayed Poisson process example

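Here we provide the corresponding details of the derivations for the delayed Poisson process example. Recall that the process is defined by the emission probability density

\[\phi(t) = \begin{cases} 0, & t \le \tau_R \\ (1/\tau_L)\exp(-(t - \tau_R)/\tau_L), & t > \tau_R, \end{cases}\]

from which it is straightforward to calculate \(\mu^{-1} = \tau_L + \tau_R\), and

\[\Phi(t) = \begin{cases} 1, & t \le \tau_R \\ \exp(-(t - \tau_R)/\tau_L), & t > \tau_R. \end{cases}\]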

We can exploit the causal architecture discussed in Methods A, and identify this as an eventually Poisson process. We thus have a continuum of causal states for \(0 \le t_{0^ + } < \tau _R\), and a single causal state for \(t_{0^ + } \ge \tau _R\). From the mean firing rate and survival probability, we can see that the probability density for all continuum causal states in the steady state is given by \((\tau_L + \tau_R)^{-1}\), while the probability that the eventually Poisson causal state is occupied is \(\tau_L/(\tau_L + \tau_R)\).

While we can approximately determine C q by considering discretised time intervals \(\delta t \ll \tau _L,\tau _R\) and see that the quantum memory appears to converge to a finite value (see Fig. 8), it is not a simple task to find an analytical expression for the continuous time limit. Instead, we shall prove boundedness of the quantum memory by considering a less efficient encoding of the causal states, and proving that this suboptimal encoding scheme has a bounded C q . Specifically, we encode the eventually Poisson state \(\left| {S_{\tau _R}} \right\rangle\) to be orthogonal to the continuum states. The density matrix is now block-diagonal, with one block for the continuum states, and a single element for the eventually Poisson state, and thus the total entropy is the sum of the entropies of the two blocks. The eventually Poisson state block contributes a finite amount (as it is a single element), and we shall now show that the contribution from the continuum block is also finite.

Convergence of quantum memory for delayed Poisson process. a The classical memory requirement \(C_\mu\) for the delayed Poisson process diverges logarithmically with finer discretisation (N + 1 states), while the quantum memory \(C_q\) appears to converge to a finite value. b Inspection of the eigenvalues of increasingly finer discretisation of the q-machine for the delayed Poisson process shows that the eigenvalues appear to fall off with a \(1/n^2\) dependence. Plots shown for \(\tau_R/\tau_L = 1\), and β is a normalisation constant chosen such that \(\mathop {\sum}\nolimits_{n = 101}^\infty {\kern 1pt} \beta {\mathrm{/}}n^2 = \mathop {\sum}\nolimits_{n = 101}^{N + 1} {\kern 1pt} \lambda _n\) for the \(N = 2^{15}\) case (eigenvalues ranked largest to smallest)

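We can use the characteristic equation (8) to find the entropy contribution from the continuum block. We find that the overlap of two quantum causal states is given by \(\left\langle {S_a|S_b} \right\rangle = {\mathrm{exp}}\left( { - \left| {a - b} \right|{\mathrm{/}}2\tau _L} \right)\), and hence

\[\frac{1}{\tau_L + \tau_R}\int_0^{\tau_R} \mathrm{d}t^{\prime}\,\exp\left(-\frac{\left|t - t^{\prime}\right|}{2\tau_L}\right)f_n(t^{\prime}) = \lambda_n f_n(t). \tag{23}\]

Differentiating twice, we obtain

\[\frac{\mathrm{d}^2 f_n(t)}{\mathrm{d}t^2} = \left(\frac{1}{4\tau_L^2} - \frac{1}{\lambda_n\tau_L(\tau_L + \tau_R)}\right)f_n(t),\]

and so as with the previous case, the eigenfunctions are of the form \(f_n(t) = A\exp(ik_nt) + B\exp(-ik_nt)\), now with

\[k_n^2 = \frac{1}{\lambda_n\tau_L(\tau_L + \tau_R)} - \frac{1}{4\tau_L^2}.\]

Again, we substitute into the original integral equation (23), which results in the consistency equations \((1 - 2ik_n\tau_L)A = (1 + 2ik_n\tau_L)B\) and \(\mathrm{Im}\left((1 + 2ik_n\tau_L)^2\exp(ik_n\tau_R)\right) = 0\). Thus, the valid \(k_n\) satisfy

\[\tan(k_n\tau_R) = \frac{4\tau_Lk_n}{4\tau_L^2k_n^2 - 1}, \tag{26}\]

which, with one exception has one solution in each interval [mπ, (m + 1)π), \(m \in {\Bbb N}\). The exception is during the interval in which \(4\tau _L^2k_n^2 = 1\), in which case there may be two solutions. For values of \(k_n\) for which \(4\tau _L^2k_n^2 \gg 1\), this is approximately satisfied by \(k_n\tau_R = n\pi\), for \(n \in {\Bbb Z}^ +\), which leads to corresponding eigenvalues

\[\lambda_n \approx \frac{4\tau_L}{(\tau_L + \tau_R)\left(1 + 4n^2\pi^2\tau_L^2/\tau_R^2\right)}.\]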

Strictly, these approximate eigenvalues are overestimations, as the solutions to Eq. (26) for large \(k_n\) are slightly larger than \(n\pi/\tau_R\). However, as these \(\lambda _n \ll 1\), this also overestimates their contribution to the entropy. We further note that when \(4n^2\pi ^2\tau _L^2{\mathrm{/}}\tau _R^2 \gg 1\) (i.e., for sufficiently large n), the \(\lambda_n\) scale approximately as \(1/n^2\). Again, this simplification overestimates the eigenvalues, and their contribution to the entropy. We can then break up the entropy into two parts: that from the finite number of terms preceding the values of n for which the above approximations are valid (which, due to the finite number of terms, gives a finite contribution), and that from the terms with such large n. These latter terms also have a finite contribution to the entropy due to their \(1/n^2\) scaling, and hence the total entropy is finite. This completes our proof that the quantum memory requirement for the delayed Poisson process is finite, though unlike the previous example we do not have an analytical expression for this value.
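The structure of the continuum-block spectrum can be cross-checked numerically. The sketch below makes two assumptions that should be read as such: the block is governed by the kernel exp(−|t − t′|/2τ_L)/(τ_L + τ_R) on [0, τ_R), built from the overlap and steady-state density stated above, and each valid k gives an eigenvalue λ = 4τ_L/((τ_L + τ_R)(1 + 4τ_L²k²)). It finds roots of the stated consistency condition by bisection and compares them with a direct midpoint-rule diagonalisation of the kernel. All function names are illustrative.

```python
import cmath
import math
import numpy as np

def consistency(k, tau_L, tau_R):
    """Im((1 + 2 i k tau_L)^2 exp(i k tau_R)), which vanishes at valid k."""
    return ((1.0 + 2.0j * k * tau_L) ** 2 * cmath.exp(1.0j * k * tau_R)).imag

def bisect_root(f, lo, hi, iterations=200):
    """Plain bisection; assumes f changes sign exactly once on [lo, hi]."""
    f_lo = f(lo)
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_lo > 0) == (f_mid > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def eigenvalue_from_k(k, tau_L, tau_R):
    """Eigenvalue associated with wavenumber k (assumed relation, see lead-in)."""
    return 4.0 * tau_L / ((tau_L + tau_R) * (1.0 + 4.0 * tau_L**2 * k**2))

def continuum_block_eigenvalues(tau_L, tau_R, num_points=1000):
    """Eigenvalues of the discretised kernel, sorted from largest to smallest."""
    h = tau_R / num_points
    t = (np.arange(num_points) + 0.5) * h  # midpoint grid on [0, tau_R)
    kernel = np.exp(-np.abs(t[:, None] - t[None, :]) / (2.0 * tau_L))
    return np.linalg.eigvalsh(kernel * h / (tau_L + tau_R))[::-1]

if __name__ == "__main__":
    tau_L = tau_R = 1.0
    lam = continuum_block_eigenvalues(tau_L, tau_R)
    k_first = bisect_root(lambda k: consistency(k, tau_L, tau_R), 0.6, 1.5)
    print(lam[0], eigenvalue_from_k(k_first, tau_L, tau_R))
```

For τ_R/τ_L = 1 the largest numerical eigenvalue matches the root lying between 1/(2τ_L) and π/(2τ_R), and the root near 10π/τ_R sits slightly above that value, consistent with the approximate eigenvalues being overestimates.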

We can again calculate the excess entropy using Eq. (20), and after some straightforward (if somewhat tedious) integration we obtain that \(E = {\mathrm{log}}_2(\tau _R{\mathrm{/}}\tau _L + 1)\) − \({\mathrm{log}}_2{\kern 1pt} \mathrm{e}{\mathrm{/}}(\tau _L{\mathrm{/}}\tau _R + 1)\), which lies below and follows similar behaviour to the memory requirement C q .
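The closed form can be checked against direct numerical integration. The sketch assumes the renewal-process excess entropy takes the form E = ∫μtϕ(t)log₂(μϕ(t))dt − 2∫μΦ(t)log₂(μΦ(t))dt, with both integrals over t from 0 to ∞, and truncates the exponential tail at a finite cutoff; the function names, cutoff and grid size are illustrative choices.

```python
import numpy as np

def trapezoid(y, x):
    """Composite trapezoidal rule on a one-dimensional grid."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

def excess_entropy_numeric(tau_L, tau_R, cutoff=60.0, num_points=400001):
    """Numerically integrate the assumed excess entropy formula for the delayed Poisson process."""
    mu = 1.0 / (tau_L + tau_R)
    s = np.linspace(0.0, cutoff * tau_L, num_points)  # s = t - tau_R in the decay region
    t = s + tau_R
    phi = np.exp(-s / tau_L) / tau_L
    survival = np.exp(-s / tau_L)
    first = trapezoid(mu * t * phi * np.log2(mu * phi), t)
    second_decay = trapezoid(mu * survival * np.log2(mu * survival), t)
    second_rest = tau_R * mu * np.log2(mu)  # Phi = 1 throughout the rest period
    return first - 2.0 * (second_rest + second_decay)

def excess_entropy_closed(tau_L, tau_R):
    """Closed form quoted in the text."""
    return float(np.log2(tau_R / tau_L + 1.0) - np.log2(np.e) / (tau_L / tau_R + 1.0))

if __name__ == "__main__":
    for ratio in (0.1, 1.0, 10.0):
        print(ratio, excess_entropy_numeric(1.0, ratio), excess_entropy_closed(1.0, ratio))
```

The two columns agree for each ratio, and the value grows with τ_R/τ_L as the emission distribution sharpens.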

Data availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Crutchfield, J. P. & Young, K. Inferring statistical complexity. Phys. Rev. Lett. 63, 105 (1989).

Shalizi, C. R. & Crutchfield, J. P. Computational mechanics: pattern and prediction, structure and simplicity. J. Stat. Phys. 104, 817–879 (2001).

Crutchfield, J. P. Between order and chaos. Nat. Phys. 8, 17–24 (2012).

Feynman, R. P. Simulating physics with computers. Int. J. Theor. Phys. 21, 467–488 (1982).

Crutchfield, J. P. & Feldman, D. P. Statistical complexity of simple one-dimensional spin systems. Phys. Rev. E 55, R1239 (1997).

Tino, P. & Koteles, M. Extracting finite-state representations from recurrent neural networks trained on chaotic symbolic sequences. IEEE Trans. Neural Netw. 10, 284–302 (1999).

Palmer, A. J., Fairall, C. W. & Brewer, W. A. Complexity in the atmosphere. IEEE Trans. Geosci. Remote Sens. 38, 2056–2063 (2000).

Clarke, R. W., Freeman, M. P. & Watkins, N. W. Application of computational mechanics to the analysis of natural data: an example in geomagnetism. Phys. Rev. E 67, 016203 (2003).

Park, J. B., Lee, J. W., Yang, J.-S., Jo, H.-H. & Moon, H.-T. Complexity analysis of the stock market. Phys. A: Stat. Mech. Appl. 379, 179–187 (2007).

Li, C.-B., Yang, H. & Komatsuzaki, T. Multiscale complex network of protein conformational fluctuations in single-molecule time series. Proc. Natl Acad. Sci. USA 105, 536–541 (2008).

Crutchfield, J. P., Ellison, C. J. & Mahoney, J. R. Time’s barbed arrow: irreversibility, crypticity, and stored information. Phys. Rev. Lett. 103, 094101 (2009).

Löhr, W. Properties of the statistical complexity functional and partially deterministic HMMs. Entropy 11, 385–401 (2009).

Haslinger, R., Klinkner, K. L. & Shalizi, C. R. The computational structure of spike trains. Neural Comput. 22, 121–157 (2010).

Kelly, D., Dillingham, M., Hudson, A. & Wiesner, K. A new method for inferring hidden markov models from noisy time sequences. PLoS ONE 7, e29703 (2012).

Garner, A. J. P., Thompson, J., Vedral, V. & Gu, M. Thermodynamics of complexity and pattern manipulation. Phys. Rev. E 95, 042140 (2017).

Marzen, S. E. & Crutchfield, J. P. Informational and causal architecture of discrete-time renewal processes. Entropy 17, 4891–4917 (2015).

Gu, M., Wiesner, K., Rieper, E. & Vedral, V. Quantum mechanics can reduce the complexity of classical models. Nat. Commun. 3, 762 (2012).

Suen, W. Y., Thompson, J., Garner, A. J. P., Vedral, V. & Gu, M. The classical-quantum divergence of complexity in modelling spin chains. Quantum 1, 25 (2017).

Mahoney, J. R., Aghamohammadi, C. & Crutchfield, J. P. Occam’s quantum strop: synchronizing and compressing classical cryptic processes via a quantum channel. Sci. Rep. 6, 20495 (2016).

Riechers, P. M., Mahoney, J. R., Aghamohammadi, C. & Crutchfield, J. P. Minimized state complexity of quantum-encoded cryptic processes. Phys. Rev. A 93, 052317 (2016).

Aghamohammadi, C., Mahoney, J. R. & Crutchfield, J. P. The ambiguity of simplicity in quantum and classical simulation. Phys. Lett. A 381, 1223–1227 (2016).

Aghamohammadi, C., Mahoney, J. R. & Crutchfield, J. P. Extreme quantum advantage when simulating classical systems with long-range interaction. Sci. Rep. 7, 6735 (2017).

Garner, A. J. P., Liu, Q., Thompson, J., Vedral, V. & Gu, M. Provably unbounded memory advantage in stochastic simulation using quantum mechanics. New. J. Phys. 19, 103009 (2017).

Thompson, J., Garner, A. J. P., Vedral, V. & Gu, M. Using quantum theory to simplify input-output processes. npj Quantum Inf. 3, 6 (2017).

Palsson, M. S., Gu, M., Ho, J., Wiseman, H. M. & Pryde, G. J. Experimentally modeling stochastic processes with less memory by the use of a quantum processor. Sci. Adv. 3, e1601302 (2017).

Riechers, P. M. & Crutchfield, J. P. Beyond the spectral theorem: spectrally decomposing arbitrary functions of nondiagonalizable operators. Preprint at arXiv:1607.06526 (2016).

Marzen, S. & Crutchfield, J. P. Informational and causal architecture of continuous-time renewal processes. J. Stat. Phys. 168, 109 (2017).

Smith, W. L. Renewal theory and its ramifications. J. R. Stat. Soc. Series B (Methodological) 20, 243–302 (1958).

Barbu, V. S. & Limnios, N. Semi-Markov Chains and Hidden Semi-Markov Models toward Applications: Their Use in Reliability and DNA Analysis, Vol. 191 (Springer Science & Business Media, New York, 2009).

Doob, J. L. Renewal theory from the point of view of the theory of probability. Trans. Am. Math. Soc. 63, 422–438 (1948).

Kalashnikov, V. V. Mathematical Methods in Queuing Theory, Vol. 271 (Springer Science & Business Media, Netherlands, 2013).

Gerstner, W. & Kistler, W. M. Spiking Neuron Models: Single Neurons, Populations, Plasticity (Cambridge University Press, UK, 2002).

Marzen, S. E., DeWeese, M. R. & Crutchfield, J. P. Time resolution dependence of information measures for spiking neurons: scaling and universality. Front. Comput. Neurosci. 9, 105 (2015).

Khintchine, A. Korrelationstheorie der stationären stochastischen Prozesse. Math. Ann. 109, 604–615 (1934).

Crutchfield, J. P., Ellison, C. J., James, R. G. & Mahoney, J. R. Synchronization and control in intrinsic and designed computation: an information-theoretic analysis of competing models of stochastic computation. Chaos: Interdiscip. J. Nonlinear Sci. 20, 037105 (2010).

Nielsen, M. A. & Chuang, I. Quantum Computation and Quantum Information (Cambridge University Press, UK, 2000).

Verstraete, F., Wolf, M. M. & Cirac, J. I. Quantum computation and quantum-state engineering driven by dissipation. Nat. Phys. 5, 633–636 (2009).

Yi, W., Diehl, S., Daley, A. J. & Zoller, P. Driven-dissipative many-body pairing states for cold fermionic atoms in an optical lattice. New. J. Phys. 14, 055002 (2012).

Hauke, P., Sewell, R. J., Mitchell, M. W. & Lewenstein, M. Quantum control of spin correlations in ultracold lattice gases. Phys. Rev. A 87, 021601 (2013).

Pedersen, M. K., Sørensen, J. J. W. H., Tichy, M. C. & Sherson, J. F. Many-body state engineering using measurements and fixed unitary dynamics. New. J. Phys. 16, 113038 (2014).

Elliott, T. J., Kozlowski, W., Caballero-Benitez, S. F. & Mekhov, I. B. Multipartite entangled spatial modes of ultracold atoms generated and controlled by quantum measurement. Phys. Rev. Lett. 114, 113604 (2015).

Lewenstein, M., Sanpera, A. & Ahufinger, V. Ultracold Atoms in Optical Lattices: Simulating Quantum Many-Body Systems (Oxford University Press, UK, 2012).

Nisbet-Jones, P. B. R., Dilley, J., Ljunggren, D. & Kuhn, A. Highly efficient source for indistinguishable single photons of controlled shape. New. J. Phys. 13, 103036 (2011).

James, R. G., Ellison, C. J. & Crutchfield, J. P. Anatomy of a bit: information in a time series observation. Chaos 21, 037109 (2011).

Marzen, S. & Crutchfield, J. P. Information anatomy of stochastic equilibria. Entropy 16, 4713–4748 (2014).

Knopp, K. Infinite Sequences and Series (Courier Corporation, New York, 1956).

Acknowledgements

We thank Andrew Garner, Felix Binder and Suen Whei Yeap for useful discussions. This work was funded by Singapore National Research Foundation Fellowship NRF-NRFF2016-02. M.G. is also financially supported by the John Templeton Foundation Grant 53914 “Occam’s quantum mechanical razor: Can quantum theory admit the simplest understanding of reality?” and the Foundational Questions Institute. T.J.E. thanks the Centre for Quantum Technologies, National University of Singapore and the Department of Physics, University of Oxford for their hospitality.

Author information

Contributions

T.J.E. carried out the calculations and wrote the paper. Both authors contributed to the research conception and discussions.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Elliott, T.J., Gu, M. Superior memory efficiency of quantum devices for the simulation of continuous-time stochastic processes. npj Quantum Inf 4, 18 (2018). https://doi.org/10.1038/s41534-018-0064-4

DOI: https://doi.org/10.1038/s41534-018-0064-4
