Abstract

Background

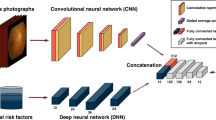

Analyzing fundus images with deep learning techniques is promising for screening systemic diseases. However, the rapidly increasing number of studies varies in quality and has not been systematically evaluated.

Objective

To systematically review all articles that aimed to predict systemic parameters and conditions using fundus images and deep learning, assess their performance, and provide suggestions that would enable translation into clinical practice.

Methods

Two major electronic databases (MEDLINE and EMBASE) were searched up to August 22, 2023, using the keywords ‘deep learning’ and ‘fundus’. Studies that used deep learning and fundus images to predict systemic parameters were included and assessed on four aspects: study characteristics, transparent reporting, risk of bias, and clinical availability. Transparent reporting was assessed with the TRIPOD statement, and risk of bias with PROBAST.

Results

The systematic search identified 4969 articles, of which 31 were included in the review. Fundus images were used to predict a variety of vascular and non-vascular diseases and systemic parameters, including diabetes and related diseases (19%), sex (22%) and age (19%). Most studies were concentrated in developed countries. According to TRIPOD, reporting was insufficient on how sample size was determined and how missing data were handled. Full access to datasets and code was also under-reported. According to PROBAST, 1/31 (3.2%) study was classified as having a low overall risk of bias, whereas 30/31 (96.8%) were classified as having a high risk of bias. Five studies (5/31, 16.1%) used prospective external validation cohorts. Only two (6.5%) described model calibration. The number of publications per year increased significantly from 2018 to 2023. However, only two models (6.5%) had been applied to a device, and no model had been applied in clinical practice.
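The proportions above all share the same denominator of 31 included studies. As a quick arithmetic check, the following minimal sketch recomputes them from the counts given in the Results; the dictionary labels and the helper name `percent` are illustrative assumptions, not terms from the review. Note that 2/31 rounds to 6.5% at one decimal place.

```python
TOTAL_STUDIES = 31  # number of included articles, as stated in the Results

# Counts as reported in the Results (labels are illustrative shorthand)
reported_counts = {
    "low overall risk of bias (PROBAST)": 1,
    "high overall risk of bias (PROBAST)": 30,
    "prospective external validation": 5,
    "calibration described": 2,
    "model applied to a device": 2,
}


def percent(count, total=TOTAL_STUDIES, digits=1):
    """Return count/total as a percentage rounded to `digits` decimals."""
    return round(100 * count / total, digits)


# Recompute every proportion against the common denominator
percentages = {label: percent(n) for label, n in reported_counts.items()}

for label, value in percentages.items():
    print(f"{label}: {reported_counts[label]}/{TOTAL_STUDIES} = {value}%")
```

The low- and high-risk counts sum to 31, so the two PROBAST categories cover every included study.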

Conclusion

Deep learning analysis of fundus images has shown great potential for predicting systemic conditions in clinical settings. Further work is needed to improve the methodology and clinical application.

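Abstract (translated from the Chinese)

Background: Analyzing fundus images with deep learning techniques can help screen for systemic diseases. However, the rapidly growing number of studies varies in quality and lacks systematic evaluation. Objective: To systematically review all literature that aimed to predict systemic parameters and conditions using fundus images and deep learning, assess its performance, and provide suggestions that promote translation into clinical practice. Methods: Two major electronic databases (MEDLINE and EMBASE) were searched up to August 22, 2023, using the keywords ‘deep learning’ and ‘fundus’. Studies that used deep learning and fundus images to predict systemic parameters were included and assessed on four aspects: study characteristics, transparent reporting, risk of bias, and clinical availability. Transparent reporting was assessed with the TRIPOD statement, and risk of bias with PROBAST. Results: The systematic search yielded 4969 articles, of which 31 were included in the review. Fundus images can predict a variety of vascular and non-vascular diseases, including diabetes and related diseases (19%), sex (22%) and age (19%). Most studies were concentrated in developed countries. According to TRIPOD, reporting on sample size determination and missing data handling was insufficient. Full access to datasets and code was also under-reported. According to PROBAST, 1/31 (3.2%) was classified as having a low overall risk of bias, whereas 30/31 (96.8%) were classified as having a high overall risk of bias. Five of 31 studies (16.1%) used prospective external validation cohorts. Only two (6.5%) described the study’s calibration. The number of publications per year increased significantly from 2018 to 2023. However, only two models (6.5%) were applied to a device, and no model was applied in clinical practice. Conclusion: Deep learning on fundus images shows great potential for predicting clinical conditions. Further effort is needed to improve the methodology and clinical application.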

Data availability

Raw data are available on request from the corresponding author.

Funding

This work was supported by the National Natural Science Foundation of China (82220108017, 82141128), the Capital Health Research and Development of Special (2020–1–2052), and the Science & Technology Project of Beijing Municipal Science & Technology Commission (Z201100005520045, Z181100001818003).

Author information

Contributions

YTL and DL conceived the study and designed the study protocol. YTL, RHZ and WBW executed the search and extracted data. YTL performed the initial analysis of data, with all authors contributing to interpretation of data. All authors contributed to critical revision of the manuscript for important intellectual content and approved the final version. WBW is the study guarantor. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, Y., Zhang, R., Dong, L. et al. Predicting systemic diseases in fundus images: systematic review of setting, reporting, bias, and models’ clinical availability in deep learning studies. Eye (2024). https://doi.org/10.1038/s41433-023-02914-0

DOI: https://doi.org/10.1038/s41433-023-02914-0