Abstract

The focal plane of a collimator used for the geometric calibration of an optical camera is a key element in the calibration process. The traditional collimator focal plane has only a single-aperture light lead-in, which results in relatively unreliable calibration accuracy. Here we demonstrate a multi-aperture micro-electro-mechanical system (MEMS) light lead-in device located at the optical focal plane of the collimator used to calibrate the geometric distortion of cameras. Without additional volume or power consumption, the multi-image matrix decreases the random errors of this calibration system. This new construction and the accompanying implementation method ensure the reliability of high-accuracy calibration of optical cameras.


Introduction

High-accuracy determination of the position of features in the field of view (positioning) is one of the most important functions of a satellite camera. Knowledge of the inner orientation parameters (IOPs) of an optical camera, such as its principal distance and principal point, is a prerequisite for image positioning1–4. Because the accuracy of camera calibration strongly influences the accuracy of image positioning, the camera IOPs must be calibrated after the camera has been developed.

Many approaches to calibrating the IOPs of optical cameras exist5–14. For remote sensing cameras, the main calibration method determines the principal distance and the principal point of the optics by measuring angles to known points15,16. In this method, an uncalibrated optical camera is placed on a precision turntable and captures the parallel rays from star points emitted by a collimator. As the turntable rotates, the camera records the star points at multiple positions in different fields of view (FOVs). The recorded rotation angles and image coordinates are used to construct equations that relate each star point to its corresponding location in the image. Analyses of camera distortion show that an optical camera has its minimum distortion at the location of its principal plane17–19. The imaging equations are then solved by least squares to obtain the principal distance and the location of the principal point. In this method, a single-hole light lead-in (SHLL) is positioned at the focal plane of the collimator, so each measurement represents only one area of the FOV, and the turntable must be rotated to reach every required point in the FOV. Multiple error sources at every rotation angle, such as turntable vibration, angle measurement errors and image positioning errors, affect each measurement, and these errors accumulate as the full FOV is scanned. As a result, the calibration accuracy is relatively unreliable.

In this study, we propose and evaluate a micro-electro-mechanical system light lead-in array device (MEMS-LLAD) composed of a porous silicon array and placed at the optical focal plane of the collimator used to calibrate an optical remote sensing camera. A single MEMS-LLAD image covers multiple points within one FOV, so fewer turntable rotations are needed to cover the full FOV, which reduces the accumulated measurement error. Moreover, the multi-aperture structure of the MEMS-LLAD can also improve the accuracy of point-position extraction.

Materials and methods

The calibration principle of using a light lead-in collimator

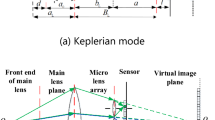

A traditional collimator typically uses a single-aperture structure, as shown in Figure 1, and calibration is performed by means of a turntable. At different turntable rotation angles, the camera records the star point at multiple positions in different FOVs.

On the basis of the distortion theory of an optical camera and the geometric imaging relationship, a least squares method can be used to calculate the principal distance and the principal point, which can be expressed as17–20:

where $(x_i, y_i)$ are the coordinates of the image of the hole in different FOVs in image space, $(x_0, y_0)$ are the coordinates of the principal point, and $\omega_x$ and $\omega_y$ are the FOV angles in the x and y directions. In Equations (1) and (2), $(x_i, y_i)$ is the measured position of the image point, and $\omega$ is determined by the turntable. The error of the turntable can be as large as , where $\rho$ is 1/3600 degrees. The calibration accuracy depends mainly on the positional accuracy of the measured image coordinates $(x_i, y_i)$. However, position measurements made with an SHLL are easily affected by multiple sources of noise, and these errors result in low calibration accuracy. To address this problem, we propose using multiple aperture arrays to calibrate remote sensing cameras. The structure of the multiple aperture array is shown in Figure 2.
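As a rough illustration of the least-squares step, the sketch below assumes the standard angle-measurement form $x_i = x_0 + f\tan\omega_{x,i}$ (and likewise for y), in which each turntable angle and its measured image coordinate give one linear equation in the unknowns $f$ and $x_0$. This is an assumed model rather than a transcription of the paper's Equations (1) and (2); the function name `fit_iops_1d` and the numerical values are hypothetical.

```python
import numpy as np

def fit_iops_1d(coords, angles_rad):
    """Fit coord_i = c0 + f * tan(angle_i) by linear least squares.

    Assumed angle-measurement model (illustrative only).
    Returns (f, c0) in the units of `coords`.
    """
    t = np.tan(np.asarray(angles_rad, dtype=float))
    # Design matrix: one column for f (tan of the field angle), one for the offset c0
    A = np.column_stack([t, np.ones_like(t)])
    (f, c0), *_ = np.linalg.lstsq(A, np.asarray(coords, dtype=float), rcond=None)
    return f, c0

# Synthetic single-aperture scan (hypothetical values): f = 2032 mm, x0 = 0.12 mm
f_true, x0_true = 2032.0, 0.12
omega_x = np.deg2rad(np.linspace(-0.08, 0.08, 9))  # turntable angles inside the FOV
x_img = x0_true + f_true * np.tan(omega_x)         # noise-free image x-coordinates (mm)
f_est, x0_est = fit_iops_1d(x_img, omega_x)
print(f"f = {f_est:.4f} mm, x0 = {x0_est:.4f} mm")
```

Fitting the y coordinates against $\tan\omega_y$ in the same way gives $y_0$. With a single aperture, every point sampled in the FOV needs its own turntable position.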

When an MEMS-LLAD is used to perform camera calibration, the image acquired at every rotation angle has multiple image points. Each image point results in a measured point in the FOV. The MEMS-LLAD can cover multiple points in the FOV at every rotation angle. In the MEMS-LLAD, each aperture is encoded and accurately positioned. On the basis of the camera model and the mapping of three-dimensional object coordinates to two-dimensional image coordinates, when ideal rays from the MEMS-LLAD are projected onto the image plane, the ideal pixel image coordinates of the i-th image point are expressed by:

where

and $[x_i, y_i]^T$ are the encoded coordinates of the i-th aperture in the MEMS-LLAD, are the ideal normalized image coordinates, $f_c$ is the focal length of the collimator, $f$ is the principal distance, $[u_0, v_0]^T$ is the principal point, and $\omega_x(t)$ and $\omega_y(t)$ are the rotation angles of the turntable in the x and y directions at time t. When lens distortion is considered, the model can be extended by adding a term that accounts for the distortion position error ε,

where

and . The final mapping of ideal points to distorted image coordinates can be expressed as:

Using a set of image points and rotation angles, we can minimize the following cost function to calculate the IOPs:

To determine the coordinates in Equation (8) accurately, we used multiple image points to calibrate the camera, correcting for optical aberrations and for errors caused by vibration, systematic effects and extraction. Figure 3 shows the principle of position extraction. First, we extracted an array template from the MEMS-LLAD image at its original angle. Second, the multiple image points at every rotation angle were used as sub-resolution features (SRFs) to invert the point spread function (PSF) within a sparse total variation framework21. The reconstructed PSF was then used to reconstruct a high-resolution image that corrects for optical aberrations22. Finally, we cross-correlated the extracted template with the images captured at the different rotation angles. The correlation operation can be expressed as:

where $P_0(i,j)$ is the extracted template image. We used a centroid method to extract the position of each image point from the correlation values.
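The template-matching and centroid steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the spots are synthetic Gaussians standing in for the aperture images, the correlation uses a zero-mean template, the centroid is taken over the part of the correlation peak above a fraction of its maximum, and the SRF-based PSF correction is omitted. All function names and parameters (`radius`, `frac`, `sigma`) are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def spot_array(shape, centers, sigma=1.5):
    """Render Gaussian spots (stand-ins for aperture images) at sub-pixel (row, col) centers."""
    rr, cc = np.indices(shape, dtype=float)
    img = np.zeros(shape)
    for r0, c0 in centers:
        img += np.exp(-((rr - r0) ** 2 + (cc - c0) ** 2) / (2 * sigma ** 2))
    return img

def correlate_valid(image, template):
    """C(u, v) = sum_ij I(u + i, v + j) * (P0(i, j) - mean(P0)) over all valid offsets."""
    t = template - template.mean()
    windows = sliding_window_view(image, template.shape)  # shape (U, V, h, w)
    return np.einsum("uvij,ij->uv", windows, t)

def centroid_peak(corr, radius=4, frac=0.25):
    """Sub-pixel peak: centroid of correlation values above frac * max, near the maximum."""
    pr, pc = np.unravel_index(np.argmax(corr), corr.shape)
    r0, r1 = max(pr - radius, 0), min(pr + radius + 1, corr.shape[0])
    c0, c1 = max(pc - radius, 0), min(pc + radius + 1, corr.shape[1])
    win = corr[r0:r1, c0:c1]
    w = np.clip(win - frac * corr[pr, pc], 0.0, None)  # keep only the upper part of the peak
    rr, cc = np.indices(win.shape)
    return r0 + (w * rr).sum() / w.sum(), c0 + (w * cc).sum() / w.sum()

# Template: a 3 x 3 aperture pattern at the original angle (hypothetical geometry)
grid = [(8 + 12 * i, 8 + 12 * j) for i in range(3) for j in range(3)]
template = spot_array((40, 40), grid)

# Image at a new turntable angle: the same pattern shifted by a sub-pixel amount
true_shift = (22.3, 18.4)
image = spot_array((80, 80), [(r + true_shift[0], c + true_shift[1]) for r, c in grid])

est_row, est_col = centroid_peak(correlate_valid(image, template))
print(f"estimated shift: ({est_row:.2f}, {est_col:.2f})")
```

Because all nine apertures add coherently at the true offset, the correlation peak is stronger than any single spot, which is the intuition behind using an aperture array rather than one hole.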

The centroid value is calculated by the following equation:

Equation (4) can be used to calculate the precise position at a given FOV angle (FOVA). On the basis of Equations (1) and (2), the principal distance and principal point can then be calculated.
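To illustrate how several apertures per turntable position feed a single fit, the following sketch pools every aperture image into one least-squares problem. It assumes, for illustration only, a separable small-FOV model in which aperture i at collimator focal-plane coordinate $p_i$ produces a beam at field angle $\arctan(p_i/f_c)$, the turntable adds $\omega_t$, and the image coordinate is $c_0 + f\tan(\cdot)$. Lens distortion, axis coupling and the sign conventions of the full model are ignored; `pooled_fit` and all numbers other than $f_c$ = 1.8 m are hypothetical.

```python
import numpy as np

F_C = 1800.0  # collimator focal length in mm (value from the Fabrication section)

def pooled_fit(coords, aperture_pos, turn_angles, f_c=F_C):
    """Fit coord = c0 + f * tan(atan(p / f_c) + omega) using all apertures and rotations.

    coords:       (T, N) measured image coordinates (mm), T turntable angles x N apertures
    aperture_pos: (N,) encoded aperture coordinates on the collimator focal plane (mm)
    turn_angles:  (T,) turntable rotation angles (rad)
    Returns (f, c0) in mm.
    """
    field = (np.arctan(np.asarray(aperture_pos, dtype=float) / f_c)[None, :]
             + np.asarray(turn_angles, dtype=float)[:, None])
    t = np.tan(field).ravel()
    A = np.column_stack([t, np.ones_like(t)])  # unknowns: f and c0
    (f, c0), *_ = np.linalg.lstsq(A, np.asarray(coords, dtype=float).ravel(), rcond=None)
    return f, c0

# Synthetic data (hypothetical): 5 apertures, only 3 turntable positions
rng = np.random.default_rng(0)
f_true, u0_true = 2032.0, 0.05
apertures = np.linspace(-2.0, 2.0, 5)      # mm on the collimator focal plane
omegas = np.deg2rad([-0.03, 0.0, 0.03])    # turntable angles
field = np.arctan(apertures / F_C)[None, :] + omegas[:, None]
u_meas = u0_true + f_true * np.tan(field) + rng.normal(0.0, 0.53e-3, field.shape)  # 0.53 um noise

f_est, u0_est = pooled_fit(u_meas, apertures, omegas)
print(f"f = {f_est:.2f} mm, u0 = {u0_est:.4f} mm")
```

Here three turntable positions already yield 15 observations; a single aperture would need 15 separate rotations to supply the same number of equations.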

Fabrication of MEMS light lead-in array device

The size of a multi-hole MEMS-LLAD is determined mainly by the size and resolution of the camera's image sensor. In our uncalibrated camera, the active area of the image sensor is 5.42 mm×6.78 mm, and the pixel size is 5.3 μm×5.3 μm. The focal lengths of the camera and the collimator are 2 m and 1.8 m, respectively. Scaling the sensor dimensions by the focal-length ratio 1.8/2 = 0.9 gives an LLAD active area of 4.878 mm×6.102 mm and a minimum hole size of 4.77 μm×4.77 μm. The LLAD pattern we designed is shown in Figure 4.
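The dimensions quoted above are consistent with imaging the collimator focal plane onto the camera sensor with magnification $f/f_c$, so that the LLAD is the sensor scaled by $f_c/f$ and a hole of that scaled pixel size images onto about one pixel. A small check of the arithmetic (the helper name `llad_dimensions` is hypothetical):

```python
def llad_dimensions(sensor_mm, pixel_um, f_camera_mm, f_collimator_mm):
    """Scale sensor-plane sizes to the collimator focal plane by f_c / f."""
    scale = f_collimator_mm / f_camera_mm
    area_mm = tuple(round(s * scale, 3) for s in sensor_mm)
    hole_um = round(pixel_um * scale, 3)  # a hole this size images onto about one pixel
    return scale, area_mm, hole_um

scale, area_mm, hole_um = llad_dimensions((5.42, 6.78), 5.3, 2000.0, 1800.0)
print(scale, area_mm, hole_um)  # 0.9 (4.878, 6.102) 4.77
```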

Figure 5 shows a schematic of the LLAD structure, which is a layered stack. From the incident surface to the exit surface, it consists of an antireflection film, a neutral-tinted glass substrate, mask layers and a secondary antireflection layer. The neutral-tinted glass serves as the coating substrate, and optical anti-radiation quartz glass is used as the glass substrate. The mask layers are composed of gold and tantalum films, and a chromium film is used as the secondary antireflection layer.

Using MEMS machining technology, we established a process to fabricate the LLAD used in this work. The fabrication process is shown in Figure 6 and can be summarized in two steps: (1) chromium, gold and tantalum are plated onto a specified glass substrate; and (2) the metal layers are photoetched to create small apertures. The LLAD includes a glass substrate, a mask layer and an antireflective layer; the mask thickness is 1.6 mm. Light passes through the apertures etched in the mask, whereas the rest of the area is blocked by the metal layers. First, a 75-nm chromium layer is plated onto the glass substrate; this layer completely attenuates the light and effectively determines the transmissivity of the optical system. Second, a 200-nm gold membrane, which serves as the mask layer, is plated onto the chromium layer. Third, a 60-nm tantalum membrane, which serves as a radiation protection layer, is plated onto the gold layer. Fourth, photoresist is deposited onto the plated substrate by spin coating; polymethylmethacrylate is used as the photoresist. Fifth, proximity lithography is performed to expose the photoresist through the photomask. Sixth, developer is applied to remove the exposed photoresist and form the pattern on the plated substrate. Seventh, a second 75-nm chromium layer is plated onto the cut substrate as the secondary antireflection layer. The number of holes in the MEMS-LLAD influences system accuracy: for random errors, the error of a system with N apertures is 1/√N that of a single-aperture system.
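For purely random errors, averaging over N apertures is expected to shrink the position error by a factor of 1/√N. That holds only if each aperture contributes an independent, zero-mean error of equal size; systematic errors do not average out. A quick Monte Carlo check under those assumptions (the function name and its parameters are hypothetical):

```python
import numpy as np

def mean_error_std(n_apertures, sigma=1.0, trials=20000, seed=0):
    """Monte Carlo std of the average of n independent, equal-variance position errors."""
    rng = np.random.default_rng(seed)
    errors = rng.normal(0.0, sigma, size=(trials, n_apertures))
    return errors.mean(axis=1).std()

single = mean_error_std(1)
for n in (4, 16, 33):
    ratio = mean_error_std(n) / single
    print(f"N={n:2d}: simulated ratio {ratio:.3f}, 1/sqrt(N) = {1 / np.sqrt(n):.3f}")
```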

Results

A customized camera was developed to verify the proposed method, and the MEMS-LLAD was used to set up the experimental system. Figure 7 shows the experimental arrangement. The system included an optical camera, an MEMS-LLAD, a collimator and a high-accuracy turntable. The MEMS-LLAD was installed at the focal plane of the collimator, which provided a target at infinity for the test system. The remote sensing camera consisted of an optical system, an image sensor and a processing circuit. The optical system was a coaxial Schmidt–Cassegrain design with a design aperture diameter of 203.2 mm and a focal length of 2032 mm, giving an F-number of 10. The image sensor was a CMOS detector with a resolution of 1280×1024 pixels. During system alignment, auxiliary equipment such as an optical theodolite and benchmark prisms was used to determine the relative positions of the remote sensing camera and the MEMS-LLAD.
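The F-number quoted above, and the sensor dimensions used in the LLAD design, can be cross-checked from the stated optical and detector parameters; the 5.3 μm pixel pitch is taken from the Fabrication section.

```python
aperture_mm, focal_mm = 203.2, 2032.0
pitch_mm = 5.3e-3          # pixel pitch (5.3 um)
cols, rows = 1280, 1024    # CMOS resolution

f_number = focal_mm / aperture_mm               # F/10
sensor_mm = (rows * pitch_mm, cols * pitch_mm)  # 5.4272 mm x 6.784 mm
print(f"F/{f_number:.1f}, sensor {sensor_mm[0]:.4f} mm x {sensor_mm[1]:.3f} mm")
```

The result agrees with the 5.42 mm×6.78 mm active area quoted in the Fabrication section to within rounding.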

The camera controller set the imaging mode. The three-axis turntable was leveled using the optical theodolite, and the collimator was also leveled. By adjusting the support tooling of the camera and using the benchmark prisms located on the camera’s optical axis, the camera’s optical axis and the collimator axis were aligned to be coaxial. The MEMS-LLAD in the collimator was imaged onto the CMOS sensor. Figure 8 shows the captured images.

As the turntable was rotated, the rotation angle was recorded along with the captured images. The processing circuitry output the star coordinates in real time. Figure 9 shows the position trajectories of the image points in one period.

Least-squares regression analysis was used to obtain the optimal estimates of the IOPs from the measured centroid positions and the rotation angles. For reference, the IOPs of the camera were also calibrated using the SHLL. Table 1 shows the results obtained with the two methods.

In our experiments, the MEMS-LLAD was used to calibrate the camera under the same conditions as the SHLL method. Vibration isolation and a constant room temperature were used to suppress environmental influences and provide a consistent imaging environment for both methods. On the basis of Equation (1), the errors can be expressed as:

where $\delta_y$ are the position measurement errors, which depend on the accuracy of the extraction algorithm, $\delta_\omega$ are the angle measurement errors, $F_y$ are the transfer parameters of the centroid displacement errors of the image points and $F_\omega$ are the transfer parameters of the angle measurement errors. In the SHLL measurement, the centroid extraction accuracy can reach 1/10 of a pixel, so $\delta_y$ = 5.3 μm/10 = 0.53 μm. With the MEMS-LLAD, we used cross-correlation to extract the point positions; the extraction accuracy of cross-correlation can generally reach 0.01–0.07 pixel23,24. Because our method also uses SRF inversion to correct optical aberrations, its position measurement errors are smaller than 0.01 pixel, that is, $\delta_y$ < 0.053 μm. In addition, because this method requires fewer turntable positions than the SHLL, $F_\omega$ and $F_y$ are smaller than for the SHLL. Therefore, the IOP errors of this method are smaller than those of the SHLL, which is consistent with the experimental results in Table 1. In summary, the MEMS-LLAD method offers advantages in both position extraction accuracy and the error transfer parameters, enabling improved calibration precision.
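To see how the extraction error $\delta_y$ propagates into the principal distance, the sketch below repeats a single-aperture least-squares fit many times with simulated centroid noise. It assumes a simplified angle-measurement model $x_i = x_0 + f\tan\omega_i$, hypothetical turntable angles, and an optional Gaussian angle noise `delta_omega_arcsec` that is left at zero so that only the $F_y\delta_y$ contribution is exercised; it does not model the change in the transfer parameters. The function `f_spread` is hypothetical.

```python
import numpy as np

PIXEL_UM = 5.3  # pixel size of the camera under test

def f_spread(delta_y_um, angles_deg, delta_omega_arcsec=0.0, f_mm=2032.0, trials=2000, seed=1):
    """Std of the fitted principal distance (mm) under simulated centroid and angle noise."""
    rng = np.random.default_rng(seed)
    omega = np.deg2rad(np.asarray(angles_deg, dtype=float))
    x_true = f_mm * np.tan(omega)
    estimates = np.empty(trials)
    for k in range(trials):
        # Recorded turntable angles and extracted image positions, each with random error
        omega_meas = omega + np.deg2rad(rng.normal(0.0, delta_omega_arcsec / 3600.0, omega.shape))
        x_meas = x_true + rng.normal(0.0, delta_y_um * 1e-3, omega.shape)
        A = np.column_stack([np.tan(omega_meas), np.ones_like(omega)])
        coef, *_ = np.linalg.lstsq(A, x_meas, rcond=None)
        estimates[k] = coef[0]
    return estimates.std()

angles = np.linspace(-0.08, 0.08, 9)
shll = f_spread(0.10 * PIXEL_UM, angles)  # 1/10-pixel centroiding: 0.53 um
llad = f_spread(0.01 * PIXEL_UM, angles)  # 1/100-pixel extraction: 0.053 um
print(f"ratio of std(f), SHLL-level vs MEMS-LLAD-level extraction: {shll / llad:.2f}")
```

With the angle noise off, the fit is linear in the centroid noise, so the spread of $f$ scales directly with $\delta_y$; absolute values depend on the hypothetical angle set and should not be compared with Table 1.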

Discussion

In the experimental set-up, the MEMS-LLAD may be affected by turntable vibrations, environmental vibrations, temperature and airflow, leading to calibration errors. The pattern of the MEMS-LLAD itself may also influence the calibration accuracy. The more apertures are used, the fewer rotations are needed and the higher the calibration accuracy that can be obtained. However, a larger number of apertures makes fabrication more difficult. With the SRF inversion algorithm, a stable PSF can be acquired when the number of apertures is larger than 20. In our experiment, the number of apertures was set to 33, although other numbers are also feasible. With 33 apertures, an experiment was performed in a constant-temperature laboratory to test the calibration accuracy of our method. In addition, the turntable was placed on an air-floating vibration isolation platform to avoid vibrational disturbances. We tested the calibration accuracy of the principal distance and the principal point over multiple measurements, processing tens of thousands of images. We determined the variation of the principal distance from images collected within a time interval of 2000 s. From these data, the mean square error was computed, and the variation of the principal distance was found to be at most 0.017 mm. The variation in the coordinates of the principal point reached 0.005 mm and 0.0049 mm in the x and y directions, respectively. The camera has an image positioning accuracy of 5 m, which requires a calibration accuracy better than 50 μm for the principal distance and the principal point. The observed variability was at the micrometer level and therefore met the camera's mapping requirements.

We also compared our method with other calibration methods, such as self-calibration25, the orientation model26, arbitrary coplanar circles27 and angle measurement, in terms of calibration accuracy, processing speed and ease of operation. We also tested the calibration precision of conventional angle-measurement methods that use a single point emitted by a collimator. The calibration precision of the principal calibration methods for remote sensing cameras has been reported in Refs. 25 and 26. Table 2 summarizes the comparison between the proposed method and the other methods; the calibration precision of our method is better than that of the other methods.

Our method uses 12 images for each calibration, whereas the bundle adjustment method uses 200 images28. For a complete calibration, the test field method and the goniometric method need ~20 images29, and the angle measurement method requires more than 100 images. Our method therefore requires the fewest images and hence the least time for each calibration. In addition, our method can perform a calibration without a reference distance, and it uses essentially the same calibration set-up as the angle measurement method. As mentioned earlier, self-calibration methods usually require several reference test fields to be viewed from different angles. Other methods, such as the 180° maneuvering method30 and the ground control point (GCP)-based method31, usually require many external reference targets. Therefore, our proposed method simplifies the calibration process, is easy to operate and requires less auxiliary equipment and computation time for calibrating remote sensing cameras.

Conclusions

In this paper, we proposed a MEMS-LLAD for the collimator used to calibrate optical cameras, with the aim of improving calibration accuracy. The traditional optical focal plane of the collimator typically uses a single-hole light lead-in, and as a result, calibration accuracy is generally unreliable. We fabricated a MEMS-LLAD for use at the optical focal plane of the collimator. Without additional volume or power consumption, the random error of this calibration system was reduced by using a multi-image matrix.

References

Figoski JW . Quickbird telescope: The reality of large high-quality commercial space optics. Proceedings of SPIE 1999; 3779: 22–30.

Han C . Recent earth imaging commercial satellites with high resolutions. Chinese Journal of Optics and Applied Optics 2010; 3: 202–208 (in Chinese).

Li J, Xing F, Chu D et al. High-accuracy self-calibration for smart, optical orbiting payloads integrated with attitude and position determination. Sensors 2016; 16: 1176.

Li J, Zhang Y, Liu S et al. Self-calibration method based on surface micromaching of light transceiver focal plane for optical camera. Remote Sensing 2016; 8: 893.

Heikkila J, Silven O . A four-step camera calibration procedure with implicit image correction. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; 17–19 Jun 1997; San Juan, Puerto Rico; 1997: 1106–1112.

Gonzalez-Aguilera D, Rodriguez-Gonzalvez P, Armesto J et al. Trimble Gx200 and Riegl LMS-Z390i sensor self-calibration. Optics Express 2011; 19: 2676–2693.

Lin PD, Sung CK . Comparing two new camera calibration methods with traditional pinhole calibrations. Optics Express 2007; 15: 3012–3022.

Wei Z, Liu X . Vanishing feature constraints calibration method for binocular vision sensor. Optics Express 2015; 23: 18897–18914.

Bauer M, Grießbach D, Hermerschmidt A et al. Geometrical camera calibration with diffractive optical elements. Optics Express 2008; 16: 20241–20248.

Yilmazturk F . Full-automatic self-calibration of color digital cameras using color targets. Optics Express 2011; 19: 18164–18174.

Ricolfe-Viala C, Sanchez-Salmeron A . Camera calibration under optimal conditions. Optics Express 2011; 19: 10769–10775.

Hong Y, Ren G, Liu E . Non-iterative method for camera calibration. Optics Express 2015; 23: 23992–24003.

Ricolfe-Viala C, Sanchez-Salmeron A, Valera A . Calibration of a trinocular system formed with wideangle lens cameras. Optics Express 2012; 20: 27691–27696.

Li J, Liu F, Liu S et al. Optical remote sensor calibration using micromachined multiplexing optical focal planes. IEEE Sensors Journal 2017; 17: 1663–1672.

Fu R, Zhang Y, Zhang J . Study on geometric measurement methods for line-array stereo mapping camera. Spacecraft Recovery & Remote Sensing 2011; 32: 62–67.

Hieronymus J . Comparison of methods for geometric camera calibration. Proceedings of the XXII ISPRS Congress International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; 25 Aug–1 Sep 2012; Melbourne, Australia; 2012: 595–599.

Yuan F, Qi WJ, Fang AP . Laboratory geometric calibration of areal digital aerial camera. IOP Conference Series: Earth and Environmental Science 2014; 17: 12196.

Chen T, Shibasaki R, Lin Z . A rigorous laboratory calibration method for interior orientation of airborne linear push-broom camera. Photogrammetric Engineering & Remote Sensing 2007; 4: 369–374.

Wu G, Han B, He X . Calibration of geometric parameters of line array CCD camera based on exact measuring angle in lab. Optics and Precision Engineering 2007; 15: 1628–1632 (in Chinese).

Yuan F, Qi W, Fang A et al. Laboratory geometric calibration of non-metric digital camera. Proceedings of SPIE 2013; 8921: 99–103.

Li J, Liu Z, Liu F . Using sub-resolution features for self-compensation of the modulation transfer function in remote sensing. Optics Express 2017; 25: 4018–4037.

Li J, Xing F, Sun T et al. Efficient assessment method of on-board modulation transfer function of optical remote sensing sensors. Optics Express 2015; 23: 6187–6208.

Ackermann F . Digital image correlation: Performance and potential application in photogrammetry. The Photogrammetric Record 1984; 11: 429–439.

Ackermann F . High precision digital image correlation. Proceedings of the 39th Photogrammetric Week; Baden-Württemberg, Germany; 1983: 231–243.

Zhang CS, Fraser CS, Liu SJ . Interior orientation error modeling and correction for precise georeferencing of satellite imagery. Proceedings of XXII ISPRS Congress, International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; 25 Aug–1 Sep 2012; Melbourne, Australia; 2012: 285–290.

Lichti DD, Kim C . A comparison of three geometric self-calibration methods for range cameras. Remote Sensing 2011; 3: 1014–1028.

Chen Q, Wu H, Wada T . Camera calibration with two arbitrary coplanar circles. Proceedings of the European Conference on Computer Vision; 11–14 May 2004; Prague, Czech Republic; 2004; 3023: 521–532.

Lipski C, Bose D, Eisemann M et al. Sparse bundle adjustment speedup strategies. Paper presented at the 18th International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision; 1–4 Feb 2010; Plzen, Czech Republic; 2010: 85–88.

Hieronymus J . Comparison of methods for geometric camera calibration. Proceedings of XXII ISPRS Congress, Remote Sensing and Spatial Information Sciences; 25 Aug to 1 Sep 2010; Melbourne, Australia; 2010: 85–88.

Fourest S, Kubik P, Lebègue L et al. Star-based methods for Pleiades HR commissioning. Proceedings of XXII ISPRS Congress, Remote Sensing and Spatial Information Sciences; 25 Aug–1 Sep 2012; Melbourne, Australia; 2012; 25: 531–536.

Greslou D, de Lussy F, Amberg V et al. Pleiades-HR 1A&1B image quality commissioning: Innovative geometric calibration methods and results. Proceedings of SPIE 2013; 8866; doi: 10.1117/12.2023877.

Acknowledgements

This work is supported by National Science Foundation of China (no. 61505093, 61505190) and the National Key Research and Development Plan (2016YFC0103600).

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Li, J., Liu, Z. Optical focal plane based on MEMS light lead-in for geometric camera calibration. Microsyst Nanoeng 3, 17058 (2017). https://doi.org/10.1038/micronano.2017.58

DOI: https://doi.org/10.1038/micronano.2017.58