Abstract

There is increasing evidence that genome-wide association (GWA) studies represent a powerful approach to the identification of genes involved in common human diseases. We describe a joint GWA study (using the Affymetrix GeneChip 500K Mapping Array Set) undertaken in the British population, which has examined ∼2,000 individuals for each of 7 major diseases and a shared set of ∼3,000 controls. Case-control comparisons identified 24 independent association signals at P < 5 × 10⁻⁷: 1 in bipolar disorder, 1 in coronary artery disease, 9 in Crohn’s disease, 3 in rheumatoid arthritis, 7 in type 1 diabetes and 3 in type 2 diabetes. On the basis of prior findings and replication studies completed thus far, almost all of these signals reflect genuine susceptibility effects. We observed association at many previously identified loci, and found compelling evidence that some loci confer risk for more than one of the diseases studied. Across all diseases, we identified a large number of further signals (including 58 loci with single-point P values between 10⁻⁵ and 5 × 10⁻⁷) likely to yield additional susceptibility loci. The importance of appropriately large samples was confirmed by the modest effect sizes observed at most loci identified. This study thus represents a thorough validation of the GWA approach. It has also demonstrated that careful use of a shared control group represents a safe and effective approach to GWA analyses of multiple disease phenotypes; has generated a genome-wide genotype database for future studies of common diseases in the British population; and has shown that, provided individuals with non-European ancestry are excluded, the extent of population stratification in the British population is generally modest. Our findings offer new avenues for exploring the pathophysiology of these important disorders.
We anticipate that our data, results and software, which will be widely available to other investigators, will provide a powerful resource for human genetics research.

Main

Despite extensive research efforts for more than a decade, the genetic basis of common human diseases remains largely unknown. Although there have been some notable successes1, linkage and candidate gene association studies have often failed to deliver definitive results. Yet the identification of the variants, genes and pathways involved in particular diseases offers a potential route to new therapies, improved diagnosis and better disease prevention. For some time it has been hoped that the advent of genome-wide association (GWA) studies would provide a successful new tool for unlocking the genetic basis of many of these common causes of human morbidity and mortality1.

Three recent advances mean that GWA studies that are powered to detect plausible effect sizes are now possible2. First, the International HapMap resource3, which documents patterns of genome-wide variation and linkage disequilibrium in four population samples, greatly facilitates both the design and analysis of association studies. Second, the availability of dense genotyping chips, containing sets of hundreds of thousands of single nucleotide polymorphisms (SNPs) that provide good coverage of much of the human genome, means that for the first time GWA studies for thousands of cases and controls are technically and financially feasible. Third, appropriately large and well-characterized clinical samples have been assembled for many common diseases.

The Wellcome Trust Case Control Consortium (WTCCC) was formed with a view to exploring the utility, design and analyses of GWA studies. It brought together over 50 research groups from the UK that are active in researching the genetics of common human diseases, with expertise ranging from clinical, through genotyping, to informatics and statistical analysis. Here we describe the main experiment of the consortium: GWA studies of 2,000 cases and 3,000 shared controls for 7 complex human diseases of major public health importance—bipolar disorder (BD), coronary artery disease (CAD), Crohn’s disease (CD), hypertension (HT), rheumatoid arthritis (RA), type 1 diabetes (T1D), and type 2 diabetes (T2D). Two further experiments undertaken by the consortium will be reported elsewhere: a GWA study for tuberculosis in 1,500 cases and 1,500 controls, sampled from The Gambia; and an association study of 1,500 common controls with 1,000 cases for each of breast cancer, multiple sclerosis, ankylosing spondylitis and autoimmune thyroid disease, all typed at around 15,000 mainly non-synonymous SNPs. By simultaneously studying seven diseases with differing aetiologies, we hoped to develop insights, not only into the specific genetic contributions to each of the diseases, but also into differences in allelic architecture across the diseases. A further major aim was to address important methodological issues of relevance to all GWA studies, such as quality control, design and analysis. In addition to our main association results, we address several of these issues below, including the choice of controls for genetic studies, the extent of population structure within Great Britain, sample sizes necessary to detect genetic effects of varying sizes, and improvements in genotype-calling algorithms and analytical methods.

Samples and experimental analyses

Individuals included in the study were living within England, Scotland and Wales (‘Great Britain’) and the vast majority had self-identified as white Europeans (153 individuals with non-Caucasian ancestry were excluded from final analysis; see below). The seven conditions selected for study are all common familial diseases of major public health importance both in the UK and globally4, and for which suitable nationally representative sample sets were available. The control individuals came from two sources: 1,500 individuals from the 1958 British Birth Cohort (58C) and 1,500 individuals selected from blood donors recruited as part of this project (UK Blood Services (UKBS) controls). See Methods and Supplementary Table 1 for sample recruitment, phenotypes and summary details for each collection.

We adopted an experimental design with 2,000 cases for each disease and 3,000 combined controls. All 17,000 samples were genotyped with the GeneChip 500K Mapping Array Set (Affymetrix chip), which comprises 500,568 SNPs, as described in Methods. The power of this study (estimated from simulations that mimic linkage disequilibrium patterns in the HapMap Caucasian sample (CEU), see Methods) averaged across SNPs with minor allele frequencies (MAFs) above 5% is estimated to be 43% for alleles with a relative risk of 1.3, increasing to 80% for a relative risk of 1.5, for a P-value threshold of 5 × 10⁻⁷ (Supplementary Table 2).
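To give a feel for how sample size, allele frequency and effect size interact at this threshold, the following is a minimal analytic sketch. It is not the simulation used in the study: it assumes the causal SNP is typed directly, treats the relative risk as a per-allele odds ratio, and uses a simple two-sided allelic z-test, so it will not reproduce the simulation-based figures quoted above. The function name and its parameters are hypothetical.

```python
from statistics import NormalDist

def allelic_power(maf, odds_ratio, n_cases=2000, n_controls=3000, alpha=5e-7):
    """Approximate power of a two-sided allelic z-test at a directly typed SNP.

    Assumption: multiplicative (per-allele) odds ratio and no tagging loss.
    """
    nd = NormalDist()
    p0 = maf
    # Allele frequency in cases implied by the per-allele odds ratio.
    p1 = p0 * odds_ratio / (p0 * odds_ratio + (1 - p0))
    # Each individual contributes two alleles to the comparison.
    se = (p0 * (1 - p0) / (2 * n_controls) + p1 * (1 - p1) / (2 * n_cases)) ** 0.5
    z_crit = nd.inv_cdf(1 - alpha / 2)
    shift = abs(p1 - p0) / se
    # Probability that the z statistic falls in either rejection tail.
    return nd.cdf(shift - z_crit) + nd.cdf(-shift - z_crit)

if __name__ == "__main__":
    for rr in (1.2, 1.3, 1.5):
        print(rr, round(allelic_power(0.2, rr), 3))
```

Under these assumptions, power rises steeply between effect sizes of 1.2 and 1.5, which is the qualitative point made in the text.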

We developed a new algorithm, CHIAMO, which we applied to simultaneously call the genotypes from all individuals (see Methods and Supplementary Information). Cross-platform comparison showed CHIAMO to outperform BRLMM (the standard Affymetrix algorithm) by having an error rate under 0.2% (Supplementary Table 3), and comparison of 108 duplicate genotypes in our study gave a discordance rate of 0.12%.

We excluded 809 samples after checks for contamination, false identity, non-Caucasian ancestry and relatedness (see Methods and Supplementary Table 4); 16,179 individuals remained in the study.

Genome-wide, 469,557 SNPs (93.8%) passed our quality control filters (described in Methods) giving an average call rate of 99.63%. Of those, 392,575 have study-wide MAFs > 1% (45,106 have MAFs < 0.1%; see also Supplementary Figs 1 and 2). Initial analyses of the polymorphic SNPs suggest that patterns of linkage disequilibrium in our samples are very similar to those in HapMap (Supplementary Fig. 3). Therefore, we expect genome coverage with the Affymetrix 500K set in this study to be similar to that estimated for the HapMap CEU panel2.
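The two per-SNP summaries quoted here, call rate and MAF, can be sketched as follows. This is only an illustration: the study's actual filters are described in its Methods and include further checks, and the thresholds in `passes_filters` are placeholder values chosen for the example, not the project's settings.

```python
def snp_summary(genotypes):
    """Return (call_rate, maf) for one SNP.

    genotypes: non-empty list of per-individual allele counts (0, 1 or 2),
    with None marking a missing call.
    """
    called = [g for g in genotypes if g is not None]
    call_rate = len(called) / len(genotypes)
    if not called:
        return call_rate, 0.0
    freq = sum(called) / (2 * len(called))
    # The minor allele is whichever of the two alleles is rarer.
    return call_rate, min(freq, 1 - freq)

def passes_filters(genotypes, min_call_rate=0.95, min_maf=0.01):
    # Both thresholds are illustrative placeholders, not the study's filters.
    call_rate, maf = snp_summary(genotypes)
    return call_rate >= min_call_rate and maf >= min_maf
```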

All SNPs passing quality control filters were used in the association analyses, although power is very low for SNPs with low MAFs (unless they have unusually large effects). On visual inspection of the cluster plots of SNPs showing apparently strong association, we removed a further 638 SNPs with poor clustering.

Control groups

Our main purpose in using two control groups was to assess possible bias in ascertaining control samples. In addition, noting that DNA sample processing differed between these groups, comparison of control groups also provides a check for effects of differential genotyping errors as a result of differences in DNA collection and preparation. Figure 1a shows the results of 1-d.f. Mantel-extension tests5 for differences in allele frequencies of SNPs between subjects from the 58C and UKBS collections, stratified by 12 broad regions of Great Britain (see Supplementary Table 5 and Supplementary Fig. 4 for region definitions). The associated quantile-quantile plot (see Methods for background) in Fig. 1b shows good agreement with the null distribution (similar results are obtained for tests that do not stratify by geography, data not shown). That we see few significant differences between these two control groups, even though they differ in population groups sampled, DNA processing and age, indicates that there would be little bias due to use of either sample as a control group for any of the case series, and justifies our combining the two control groups to form a single group of 3,000 subjects for our main analyses.
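A stratified 1-d.f. test of this kind can be sketched as below. This is a textbook form of the Mantel extension (score minus expectation, summed over strata, with hypergeometric variances), written under the assumption that genotypes are scored 0, 1, 2; the study's exact implementation is given in its Methods and may differ in detail. The function name and input layout are hypothetical.

```python
from math import erfc, sqrt

def mantel_extension_test(strata):
    """Stratified 1-d.f. trend test for two groups.

    strata: iterable of (group_a, group_b) pairs, one per stratum (e.g. region),
    where each group is a 3-tuple of genotype counts for 0, 1 and 2 allele copies.
    Returns (chi-square statistic, P value).
    """
    score_sum = 0.0
    var_sum = 0.0
    for a, b in strata:
        n_a, n_b = sum(a), sum(b)
        n = n_a + n_b
        if n < 2 or n_a == 0 or n_b == 0:
            continue  # stratum carries no information about a group difference
        totals = [a[i] + b[i] for i in range(3)]
        s1 = sum(i * totals[i] for i in range(3))
        s2 = sum(i * i * totals[i] for i in range(3))
        observed = sum(i * a[i] for i in range(3))
        # Observed allele score in group A minus its expectation under the null.
        score_sum += observed - n_a * s1 / n
        # Hypergeometric variance of that score within the stratum.
        var_sum += n_a * n_b * (n * s2 - s1 * s1) / (n * n * (n - 1))
    if var_sum == 0:
        return 0.0, 1.0
    stat = score_sum ** 2 / var_sum
    # Upper tail of a chi-square distribution with 1 d.f.
    return stat, erfc(sqrt(stat / 2))
```

Summing scores and variances before squaring is what keeps the test at 1 d.f. regardless of the number of regions.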

Figure 1. a, P values from the trend test for differences between SNP allele frequencies in the two control groups, stratified by geographical region. SNPs have been excluded on the basis of failure in a test for Hardy–Weinberg equilibrium in either control group considered separately, a low call rate, or if minor allele frequency is less than 1%, but not on the basis of a difference between control groups. Green dots indicate SNPs with a P value <1 × 10⁻⁵. b, Quantile-quantile plots of these test statistics. In this and subsequent quantile-quantile plots, the shaded region is the 95% concentration band (see Methods).

One consequence of using a shared control group (for which detailed phenotyping for all traits of interest is not available) relates to the potential for misclassification bias: a proportion of the controls is likely to have the disease of interest (and therefore might meet the criteria for inclusion as a case) and some others will develop it in the future. However, the effect this has on power is modest unless the extent of misclassification bias is substantial; for example, if 5% of controls would meet the definition of cases at the same age, the loss of power is approximately the same as that due to a reduction of the sample size by 10%6. Even for the higher prevalence conditions examined by the WTCCC (such as HT, CAD and T2D), the precise ascertainment schemes used here (which enriched for more extreme phenotypes and/or strong family history) will have limited the proportions of controls meeting case criteria to low levels (for example, to <5%). Although a study design which used ‘hypercontrols’ (that is, selection of control individuals from the lower extremity of the relevant trait distribution) would generally be the most powerful approach in a study focusing on one disease, the merits of such an approach need to be weighed against the additional costs associated with the need to phenotype and genotype each control sample.
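The 5% versus 10% correspondence can be motivated with a first-order argument, sketched below under stated assumptions (this is an illustration, not necessarily the derivation in ref. 6). If a fraction m of controls are unrecognized cases, the observed control allele frequency is a mixture, so the case-control difference shrinks by a factor (1 − m). Because the non-centrality of the association test scales with sample size times the squared difference, the loss is roughly equivalent to multiplying the sample size by (1 − m)², ignoring the small change in variance.

```python
def diluted_difference(p_case, p_control, m):
    """Case-control allele frequency difference when a fraction m of
    controls would in fact meet the case definition."""
    observed_control = (1 - m) * p_control + m * p_case
    return p_case - observed_control

def equivalent_sample_fraction(m):
    """Approximate fraction of the sample size retained, since the test's
    non-centrality scales with N times the squared frequency difference."""
    return (1 - m) ** 2

if __name__ == "__main__":
    # With m = 0.05 the retained fraction is 0.9025, i.e. roughly a 10% loss.
    print(equivalent_sample_fraction(0.05))
```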

Geographical variation and population structure

An additional cause of false positive findings is hidden population structure. Case and control samples may differ in the distribution of their ancestry, either owing to control sampling effects, as discussed above, or to confounding when different ancestries carry higher disease risk and are, as a result, over-represented in cases. Even after exclusion of individuals with evidence of recent non-European ancestry, the British population is heterogeneous, having been shaped by several waves of immigration from southern and northern Europe. Whether the differences between these incoming populations are sufficiently large to distort the findings of population-based case-control studies is an open question.

We first examined our samples for non-European ancestry, using multidimensional scaling after ‘seeding’ our data with those from the three HapMap analysis panels (see Supplementary Fig. 5 and Methods), and excluded 153 individuals on this basis. We next looked for evidence of population heterogeneity by studying allele frequency differences between the 12 broad geographical regions (defined in Supplementary Fig. 4). The results for these 11-d.f. tests and associated quantile-quantile plots are shown in Fig. 2. Widespread small differences in allele frequencies are evident as an increased slope of the line (Fig. 2b); in addition, a few loci show much larger differences (Fig. 2a and Supplementary Fig. 6).

Figure 2. a, P values for the 11-d.f. test for difference in SNP allele frequencies between geographical regions, within the 9 collections. SNPs have been excluded using the project quality control filters described in Methods. Green dots indicate SNPs with a P value <1 × 10⁻⁵. b, Quantile-quantile plots of these test statistics. SNPs at which the test statistic exceeds 100 are represented by triangles at the top of the plot, and the shaded region is the 95% concentration band (see Methods). Also shown in blue is the quantile-quantile plot resulting from removal of all SNPs in the 13 most differentiated regions (Table 1).

Thirteen genomic regions showing strong geographical variation are listed in Table 1, and Supplementary Fig. 7 shows the way in which their allele frequencies vary geographically. The predominant pattern is variation along a NW/SE axis. The most likely cause for these marked geographical differences is natural selection, most plausibly in populations ancestral to those now in the UK. Variation due to selection has previously been implicated at LCT (lactase) and major histocompatibility complex (MHC)7,8,9, and within-UK differentiation at 4p14 has been found independently10, but others seem to be new findings. All but three of the regions contain known genes. Aside from evolutionary interest, genes showing evidence of natural selection are particularly interesting for the biology of traits such as infectious diseases; possible targets for selection include NADSYN1 (NAD synthetase 1) at 11q13, which could have a role in prevention of pellagra, as well as TLR1 (toll-like receptor 1) at 4p14, for which a role in the biology of tuberculosis and leprosy has been suggested10.

There may be important population structure that is not well captured by current geographical region of residence. Present implementations of strongly model-based approaches such as STRUCTURE11,12 are impracticable for data sets of this size, and we reverted to the classical method of principal components13,14, using a subset of 197,175 SNPs chosen to reduce inter-locus linkage disequilibrium. Nevertheless, four of the first six principal components clearly picked up effects attributable to local linkage disequilibrium rather than genome-wide structure. The remaining two components show the same predominant geographical trend from NW to SE but, perhaps unsurprisingly, London is set somewhat apart (Supplementary Fig. 8).

The overall effect of population structure on our association results seems to be small, once recent migrants from outside Europe are excluded. Estimates of over-dispersion of the association trend test statistics (usually denoted λ; ref. 15) ranged from 1.03 and 1.05 for RA and T1D, respectively, to 1.08–1.11 for the remaining diseases. Some of this over-dispersion could be due to factors other than structure, and this possibility is supported by the fact that inclusion of the two ancestry informative principal components as covariates in the association tests reduced the over-dispersion estimates only slightly (Supplementary Table 6), as did stratification by geographical region. This impression is confirmed on noting that P values with and without correction for structure are similar (Supplementary Fig. 9). We conclude that, for most of the genome, population structure has at most a small confounding effect in our study, and as a consequence the analyses reported below do not correct for structure. In principle, apparent associations in the few genomic regions identified in Table 1 as showing strong geographical differentiation should be interpreted with caution, but none arose in our analyses.
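An over-dispersion factor of this kind is commonly estimated as the ratio of the median observed 1-d.f. test statistic to the median of the null chi-square distribution (about 0.4549). The sketch below uses that median-based estimator as an assumption; the estimator actually used in the study is specified in its Methods, and the function names here are hypothetical.

```python
from statistics import median

# Median of a chi-square distribution with 1 d.f. (standard constant).
CHI2_1DF_MEDIAN = 0.4549364

def genomic_inflation(chi2_stats):
    """Median-based over-dispersion estimate for 1-d.f. association statistics."""
    return median(chi2_stats) / CHI2_1DF_MEDIAN

def corrected_statistics(chi2_stats):
    """Rescale statistics by the estimated factor (never inflating them)."""
    lam = max(genomic_inflation(chi2_stats), 1.0)
    return [s / lam for s in chi2_stats]
```

A value close to 1 indicates that the bulk of the test statistics follow the null distribution; using the median keeps the estimate insensitive to the handful of true associations in the tail.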

Disease association results

We assessed evidence for association in several ways (see Methods for details), drawing on both classical and bayesian statistical approaches. For polymorphic SNPs on the Affymetrix chip, we performed trend tests (1 degree of freedom16) and general genotype tests (2 degrees of freedom16, referred to as genotypic) between each case collection and the pooled controls, and calculated analogous Bayes factors. There are examples from animal models where genetic effects act differently in males and females17, and to assess this in our data we applied a sex-differentiated test which is sensitive to associations of a different magnitude and/or direction in the two sexes.
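The two classical single-SNP tests can be sketched on a 2 × 3 table of genotype counts as follows. This is a standard formulation (Cochran–Armitage trend test with scores 0, 1, 2, and a Pearson chi-square for the genotypic test), offered as an illustration rather than the study's own code; Bayes factors and the sex-differentiated test are not covered, and the function names are hypothetical.

```python
from math import erfc, exp, sqrt

def trend_test(cases, controls):
    """Cochran-Armitage trend test (1 d.f.) with genotype scores 0, 1, 2.

    cases, controls: 3-tuples of genotype counts. Returns (statistic, P value).
    """
    r, s = sum(cases), sum(controls)
    n = r + s
    totals = [cases[i] + controls[i] for i in range(3)]
    s1 = sum(i * totals[i] for i in range(3))
    s2 = sum(i * i * totals[i] for i in range(3))
    case_score = sum(i * cases[i] for i in range(3))
    den = r * s * (n * s2 - s1 * s1)
    stat = n * (n * case_score - r * s1) ** 2 / den if den else 0.0
    return stat, erfc(sqrt(stat / 2))  # chi-square upper tail, 1 d.f.

def genotypic_test(cases, controls):
    """Pearson chi-square on the 2 x 3 table (2 d.f.).

    Assumes all three genotypes are observed; with an empty genotype
    class the 2-d.f. reference distribution no longer applies.
    """
    r, s = sum(cases), sum(controls)
    n = r + s
    stat = 0.0
    for i in range(3):
        col = cases[i] + controls[i]
        if col == 0:
            continue
        for observed, row_total in ((cases[i], r), (controls[i], s)):
            expected = row_total * col / n
            stat += (observed - expected) ** 2 / expected
    return stat, exp(-stat / 2)  # chi-square upper tail, 2 d.f.
```

The trend test is the more powerful of the two when risk changes steadily with allele count, whereas the genotypic test can pick up dominant or recessive patterns that the trend test partly misses.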

Our study also allows us to look for loci which may have an effect in more than one disease. To assess this, we compared our common controls with all cases in each of three natural groupings of diseases: CAD+HT+T2D (metabolic and cardiovascular phenotypes with potential aetiological overlap, for example, involving defects in insulin action); RA+T1D (already known to share common loci); and CD+RA+T1D (all autoimmune diseases).

To help to capture putative disease loci not on the Affymetrix chip we used a new multilocus method in which a population genetics model is applied to our genotype data and the HapMap reference samples to simulate, or impute, genotype data at 2,193,483 HapMap SNPs not on the Affymetrix chip. These imputed, or in silico, genotypes are then tested for association in the same ways as SNPs genotyped in the project.

Before detailing the principal results for each disease, we first summarize our main observations. Table 2 details the findings from the WTCCC scan for the 15 variants for which there was strong prior evidence of association with one or more of the diseases studied, based on extensive replication studies. All but two of these show associations in our study, with the magnitude of the evidence generally consistent with their effect sizes as estimated from prior studies. One of the signals for which we failed to obtain evidence of replication (APOE in CAD) is poorly tagged by the Affymetrix 500K chip. The other (INS in T1D) is represented by a single SNP that marginally failed our study-wide quality control filters (overall missingness 5.2%) but which was nonetheless strongly associated with T1D when examined. Quantile-quantile plots for the trend test for each of the seven diseases show only very minor deviations from the null distribution, except in the extreme tails which correspond to associations reported below (Fig. 3). The quantile-quantile plots and the results at positive controls (Table 2) give confidence in the quality of our data and the robustness of our analyses.
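For readers unfamiliar with quantile-quantile plots, the points plotted are simply the ordered observed statistics paired with the quantiles expected under the null distribution. A minimal sketch for 1-d.f. statistics follows; the plotting position (i + 0.5)/n is an assumption made for the example, and the 95% concentration band used in the figures is not reproduced here.

```python
from statistics import NormalDist

def qq_points(chi2_stats):
    """Pair each ordered observed 1-d.f. statistic with its expected null quantile.

    Returns a list of (expected, observed) tuples in increasing order.
    """
    nd = NormalDist()
    n = len(chi2_stats)
    observed = sorted(chi2_stats)
    # The chi-square(1) quantile at probability q is the square of the
    # standard normal quantile at probability (1 + q) / 2.
    expected = [nd.inv_cdf((1 + (i + 0.5) / n) / 2) ** 2 for i in range(n)]
    return list(zip(expected, observed))
```

Points lying along the diagonal indicate agreement with the null; a uniformly steeper slope suggests over-dispersion, while departures confined to the upper tail are what true associations produce.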

Figure 3. For each of the seven disease collections, a quantile-quantile plot of the results of the trend test is shown in black for all SNPs that pass the standard project filters, have a minor allele frequency >1% and missing data rate <1%. SNPs that were visually inspected and revealed genotype calling problems were excluded. These filters were chosen to minimize the influence of genotype-calling artefacts. Each quantile-quantile plot shown in black involves around 360,000 SNPs. SNPs at which the test statistic exceeds 30 are represented by triangles. Additional quantile-quantile plots, which also exclude all SNPs located in the regions of association listed in Table 3, are superimposed in blue (for BD, the exclusion of these SNPs has no visible effect on the plot, and for HT there are no such SNPs). The blue quantile-quantile plots show that departures in the extreme tail of the distribution of test statistics are due to regions with a strong signal for association.

Our genome-wide results for the trend test are illustrated in Fig. 4. The single-disease trend and genotypic tests for SNPs on the chip identified 21 signals across the 7 diseases with P values below a threshold of 5 × 10⁻⁷ (Table 3). For each of these SNPs (except those within the MHC), cluster plots are shown in Supplementary Fig. 10 and ‘signal plots’ in Fig. 5. These signal plots estimate the likely demarcation of the hit region and show the signal at genotyped and imputed SNPs together with local genomic context. Four further strong (with P < 5 × 10⁻⁷) associations were revealed by the other primary analyses described (Table 3). One locus (in RA) was revealed by the sex-differentiated analysis, two through multilocus approaches (both for T1D) and one through an analysis which combined cases from more than one autoimmune disease (signal plots in Supplementary Figs 11, 12 and 13, respectively).

Figure 4. For each of the seven diseases, -log10 of the trend test P value is plotted against position on each chromosome for quality-control-positive SNPs, excluding those removed in each disease for poor clustering after visual inspection. Chromosomes are shown in alternating colours for clarity, with P values <1 × 10⁻⁵ highlighted in green. All panels are truncated at -log10(P value) = 15, although some markers (for example, in the MHC in T1D and RA) exceed this value.

Figure 5. Characteristics of genomic regions 1.25 Mb to either side of ‘hit SNPs’ (the SNPs with the lowest P values). Region boundaries (vertical dotted lines) were chosen to coincide with locations where test statistics returned to background levels and, where possible, recombination hotspots. Upper panel, -log10(P values) for the test (trend or genotypic) with the smallest P value at the hit SNP. Black points represent SNPs typed in the study, and grey points represent SNPs whose genotypes were imputed. SNPs imputed with higher confidence are shown in darker grey. Middle panel, fine-scale recombination rate (centimorgans per Mb) estimated from Phase II HapMap. The purple line shows the cumulative genetic distance (in cM) from the hit SNP. Lower panel, known genes, and sequence conservation in 17 vertebrates. Known genes (orange) in the hit region are listed in the upper right part of each plot in chromosomal order, starting at the left edge of the region. The top track shows plus-strand genes and the middle track shows minus-strand genes. Sequence conservation (bottom track) scores are based on the phylogenetic hidden Markov model phastCons. Highly conserved regions (phastCons score ≥600) are shown in blue. Information in middle and lower panels is taken from the UCSC Genome Browser. Positions are in NCBI build-35 coordinates. See Supplementary Information on ‘signal plots’.

All of these signals were subjected to visual inspection of cluster plots, and in all cases (with one exception noted below) nearby correlated SNPs also showed a strong signal (see signal plots). Thus, genotyping artefacts are unlikely to be responsible for these associations. Indeed, at the time of writing, 12 of these 25 strong signals represent replications of previously reported findings (only those with extensive prior replication are reported in Table 2). Of the remainder, follow-up studies (reported elsewhere) have confirmed all but one of the loci (ten in total) for which replication has been attempted10,19,20,21,22,23,24. The other replication study gave equivocal results. Of the 18 loci implicated in autoimmune diseases, 5 show associations (P < 0.001) to more than 1 condition, leading to a number of further potential new associations, at least one of which has also been replicated10.

It is likely that further susceptibility genes will be identified through follow-up of other signals for which the evidence from our scan is less conclusive (see below for some specific examples). For example, there are 58 further signals with single-point P values between 10⁻⁵ and 5 × 10⁻⁷ for which inspection of cluster plots verifies CHIAMO calls (Table 4). As described below, analyses which make use of selected case samples to expand the reference group should also provide a useful route to the prioritization of such putative signals for further analysis. For convenience, the strongest association results are presented separately for each disease in Supplementary Table 7.

Several general points are relevant to interpretation of these disease-association data. First, replication studies are required to confirm associations from GWAs. For the reasons given in the box, we regard very low P values (say P < 5 × 10⁻⁷) in our comparatively large sample size as strong evidence for association, and indeed all or most of the loci we find at this level are either already known or have now been confirmed by subsequent replication. Such replication studies are also the substrate for efforts to determine the range of associated phenotypes and to identify and characterize pathologically relevant variation.

Second, failure to detect a prominent association signal in the present study cannot provide conclusive exclusion of any given gene. This is the consequence of several factors including: less-than-complete coverage of common variation genome-wide on the Affymetrix chip; poor coverage (by design) of rare variants, including many structural variants (thereby reducing power to detect rare, penetrant, alleles)25; difficulties with defining the full genomic extent of the gene of interest; and, despite the sample size, relatively low power to detect, at levels of significance appropriate for genome-wide analysis, variants with modest effect sizes (odds ratio (OR) < 1.2).

Third, whereas the association signals detected can help to define regions of interest, they cannot provide unambiguous identification of the causal genes. Nevertheless, assessments on the basis of positional candidacy carry considerable weight, and, as we show, these already allow us, for selected diseases, to highlight pathways and mechanisms of particular interest. Naturally, extensive resequencing and fine-mapping work, followed by functional studies will be required before such inferences can be translated into robust statements about the molecular and physiological mechanisms involved.

We turn now to a discussion of the main findings for each disease, focusing here only on the most significant and interesting results from the analyses described above, and consideration of an expanded reference group, described below.

Bipolar disorder (BD)

Bipolar disorder (BD; manic depressive illness26) refers to an episodic recurrent pathological disturbance in mood (affect) ranging from extreme elation or mania to severe depression and usually accompanied by disturbances in thinking and behaviour: psychotic features (delusions and hallucinations) often occur. Pathogenesis is poorly understood but there is robust evidence for a substantial genetic contribution to risk27,28. The estimated sibling recurrence risk (λs) is 7–10 and heritability 80–90%27,28. The definition of BD phenotype is based solely on clinical features because, as yet, psychiatry lacks validating diagnostic tests such as those available for many physical illnesses. Indeed, a major goal of molecular genetics approaches to psychiatric illness is an improvement in diagnostic classification that will follow identification of the biological systems that underpin the clinical syndromes. The phenotype definition that we have used includes individuals that have suffered one or more episodes of pathologically elevated mood (see Methods), a criterion that captures the clinical spectrum of bipolar mood variation that shows familial aggregation29.

Several genomic regions have been implicated in linkage studies30 and, recently, replicated evidence implicating specific genes has been reported. Increasing evidence suggests an overlap in genetic susceptibility with schizophrenia, a psychotic disorder with many similarities to BD. In particular association findings have been reported with both disorders at DAOA (D-amino acid oxidase activator), DISC1 (disrupted in schizophrenia 1), NRG1 (neuregulin1) and DTNBP1 (dystrobrevin binding protein 1)31.

The strongest signal in BD was with rs420259 at chromosome 16p12 (genotypic test P = 6.3 × 10⁻⁸; Table 3) and the best-fitting genetic model was recessive (Supplementary Table 8). Although recognizing that this signal was not additionally supported by the expanded reference group analysis (see below and Supplementary Table 9) and that independent replication is essential, we note that several genes at this locus could have pathological relevance to BD (Fig. 5). These include PALB2 (partner and localizer of BRCA2), which is involved in stability of key nuclear structures including chromatin and the nuclear matrix; NDUFAB1 (NADH dehydrogenase (ubiquinone) 1, alpha/beta subcomplex, 1), which encodes a subunit of complex I of the mitochondrial respiratory chain; and DCTN5 (dynactin 5), which encodes a protein involved in intracellular transport that is known to interact with the gene ‘disrupted in schizophrenia 1’ (DISC1)32, the latter having been implicated in susceptibility to bipolar disorder as well as schizophrenia33.

Of the four regions showing association at P < 5 × 10⁻⁷ in the expanded reference group analysis (Supplementary Table 9), it is of interest that the closest gene to the signal at rs1526805 (P = 2.2 × 10⁻⁷) is KCNC2 which encodes the Shaw-related voltage-gated potassium channel. Ion channelopathies are well-recognized as causes of episodic central nervous system disease, including seizures, ataxias and paralyses34. It is possible that this may extend to episodic disturbances of mood and behaviour.

Amongst the other higher ranked signals in the BD data set (Supplementary Table 7), there is support for the previously suggested importance of GABA neurotransmission (rs7680321 (P = 6.2 × 10⁻⁵) in GABRB1 encoding a ligand-gated ion channel (GABA A receptor, beta 1))35, glutamate neurotransmission (rs1485171 (P = 9.7 × 10⁻⁵) in GRM7 (glutamate receptor, metabotropic 7))35 and synaptic function (rs11089599 (P = 7.2 × 10⁻⁵) in SYN3 (synapsin III)36).

We note that a broad range of genetic and non-genetic data point to the importance of analyses that use alternative approaches to phenotype definition, including symptom dimensions31. Although beyond the scope of the current paper, such analyses will be required to maximize the potential of the current BD data set.

Coronary artery disease (CAD)

Coronary artery disease (coronary atherosclerosis) is a chronic degenerative condition in which lipid and fibrous matrix is deposited in the walls of the coronary arteries to form atheromatous plaques37. It may be clinically silent or present with angina pectoris or acute myocardial infarction. Pathogenesis is complex, with endothelial dysfunction, oxidative stress and inflammation contributing to development and instability of the atherosclerotic plaque37.

In addition to lifestyle and environmental factors, genes are important in the aetiology of CAD38. For early myocardial infarction, estimates of λs range from ∼2 to ∼7 (ref. 39). Genetic variation is thought likely to influence risk of CAD both directly and through effects on known CAD risk factors including hypertension, diabetes and hypercholesterolaemia. Genome-wide linkage studies have mapped several loci that may affect susceptibility to CAD/myocardial infarction40 although for only two of these has the likely gene been identified (ALOX5AP (arachidonate 5-lipoxygenase-activating protein) and LTA4H (leukotriene A4 hydrolase))41,42. Association studies have identified several plausible genetic variants affecting lipids, thrombosis, inflammation or vascular biology but for most the evidence is not yet conclusive40. We did not find evidence for strong association at any of these genes within our study (Table 2 and Supplementary Table 10).

The most notable new finding for CAD is the powerful association on chromosome 9p21.3 (Table 3; Fig. 5). Although the strongest signal is seen at rs1333049 (P = 1.8 × 10-14), associations are seen for SNPs across > 100 kilobases. This region has not been highlighted in previous studies of CAD or myocardial infarction40,43. The region of interest contains the coding sequences of genes for two cyclin dependent kinase inhibitors, CDKN2A (encoding p16INK4a) and CDKN2B (p15INK4b), although the most closely associated SNP is some distance removed. Both genes have multiple isoforms, have an important role in the regulation of the cell cycle and are widely expressed44, with CDKN2B known to be expressed in the macrophages but not the smooth muscle cells of fibrofatty lesions45,46. It is of interest that expression of CDKN2B is induced by transforming growth factor beta (TGF-β) and that the TGF-β signalling system is implicated in the pathogenesis of human atherosclerosis45,46. Besides CDKN2A and CDKN2B, the only other known gene nearby is MTAP which encodes methylthioadenosine phosphorylase, an enzyme that contributes to polyamine metabolism and is important for the salvage of both adenine and methionine. MTAP is ubiquitously expressed, including in the cardiovascular system47. Further work is required to determine whether the CAD association at this locus is mediated through CDKN2A/B, MTAP or some other mechanism. The same region also shows replicated evidence of association to T2D in the WTCCC and other data sets19,21,22, though different SNPs seem to be involved.

None of the loci showing more modest associations with CAD (Table 4) includes genes hitherto strongly implicated in the pathogenesis of CAD. A potentially interesting association is at rs6922269 (P = 6.3 × 10-6), an intronic SNP in MTHFD1L, which encodes methylenetetrahydrofolate dehydrogenase (NADP+-dependent) 1-like, the mitochondrial isozyme of C1-tetrahydrofolate (THF) synthase48,49. C1-THF synthases interconvert the one carbon units carried by the biologically active form of folic acid, C1-tetrahydrofolate. These are used in a variety of cellular processes including purine and methionine synthesis48. Another enzyme in the same pathway, methylene THF reductase (encoded by MTHFR) is subject to a common mutation which influences plasma homocysteine level50 and has been associated with increased risk of coronary and other atherosclerotic disease51. The possibility of a link between variants in MTHFD1L and CAD risk is supported by evidence that MTHFD1L activity also contributes to plasma homocysteine52 and that defects in the MTHFD1L pathway may increase plasma homocysteine level48,53.

An intronic SNP in ADAMTS17 (a disintegrin and metalloproteinase with thrombospondin motifs 17), which showed modest association (rs1994016; P = 1.1 × 10-4) in our primary analysis, showed a much stronger association in the expanded reference group analysis (see below and Supplementary Table 9). Although the specific function of ADAMTS17 has not been determined, other members of the ADAMTS family have been implicated in vascular extracellular matrix degradation, vascular remodelling and atherosclerosis54,55.

Crohn’s disease (CD)

Crohn’s disease is a common form of chronic inflammatory bowel disease56. The pathogenic mechanisms are poorly understood, but probably involve a dysregulated immune response to commensal intestinal bacteria and possibly defects in mucosal barrier function or bacterial clearance57. Genetic predisposition to CD is suggested by a λs of 17–35 and by twin studies that contrast monozygotic concordance rates of 50% with only 10% in dizygotic pairs58,59.

A number of CD-susceptibility loci have previously been defined, and all of these generate strong signals in our data (Table 2). In 2001, positional cloning identified CARD15 (caspase recruitment domain family, member 15; NOD2) as the first confirmed CD-susceptibility gene60,61. In the present study, this locus is represented by rs17221417 (P = 9.4 × 10-12). A second association, on chromosome 5q31 (ref. 62) has been widely replicated, although the identity of the causative gene is disputed owing to extensive regional linkage disequilibrium63. Here, the previously described risk haplotype is tagged by rs6596075 (P = 5.4 × 10-7).

More recent studies have identified four further CD-susceptibility loci, all of which are strongly replicated in the present study. The association between CD and SNPs within IL23R (interleukin 23 receptor)63 is here represented by a cluster of associated SNPs, including rs11805303 (P = 6.5 × 10-13). The strongest signal for CD in the present scan (at rs10210302; P = 7.1 × 10-14) maps to the ATG16L1 (ATG16 autophagy related 16-like 1) gene and is in strong linkage disequilibrium (r2 = 0.97) with a non-synonymous SNP (T300A, rs2241880) associated with CD in a German non-synonymous SNP scan64. The third is a locus at chromosome 10q21 around rs10761659 (P = 2.7 × 10-7) and represents a non-coding intergenic SNP mapping 14-kb telomeric to gene ZNF365 and 55-kb centromeric to the pseudogene antiquitin-like 4—a recently detected signal65. Finally, strong association with a cluster of SNPs around rs17234657 (P = 2.1 × 10-13) within a 1.2 Mb gene desert on chromosome 5p13.1, recapitulates the finding of a recent GWA study66.

The current study identifies four further new strong association signals in CD, located on chromosomes 3p21, 5q33, 10q24 and 18p11 (Table 3; Fig. 5). Successful replication for all four loci is reported elsewhere23.

The first of these includes several SNPs around IRGM (immunity-related guanosine triphosphatase; the human homologue of the mouse Irgm/Lrg47), the strongest signal being at rs1000113 (P = 5.1 × 10-8). IRGM encodes a GTP-binding protein which induces autophagy and is involved in elimination of intracellular bacteria, including Mycobacterium tuberculosis67. Reduced function and/or activity of this gene would be expected to lead to persistence of intracellular bacteria, consistent with existing models of CD pathogenesis57 and the recent ATG16L1 association64 (see above).

The second novel CD association is seen at rs9858542 (P = 7.7 × 10-7), a synonymous coding SNP within the BSN (bassoon) gene on chromosome 3p21. BSN is thought to encode a scaffold protein expressed in brain and involved in neurotransmitter release; a more plausible regional candidate is MST1 (macrophage stimulating 1), which encodes a protein influencing motile activity and phagocytosis by resident peritoneal macrophages68.

The third novel association involves a cluster of SNPs around rs10883365 (P = 1.4 × 10-8) on chromosome 10q24.2. The most credible candidate here is the NKX2-3 (NK2 transcription factor related, locus 3) gene, a member of the NKX family of homeodomain-containing transcription factors. Targeted disruption of the murine homologue of NKX2-3 results in defective development of the intestine and secondary lymphoid organs69. Abnormal expression of NKX2-3 may alter gut migration of antigen-responsive lymphocytes and influence the intestinal inflammatory response.

The final novel association, at rs2542151 (P = 4.6 × 10-8) maps 5.5-kb upstream of PTPN2 (protein tyrosine phosphatase, non-receptor type 2) on chromosome 18p11. PTPN2 encodes the T cell protein tyrosine phosphatase TCPTP, a key negative regulator of inflammatory responses. The same locus also shows strong association with T1D susceptibility (trend test P = 1.9 × 10-6) and a consistent, though weaker, association with RA (P = 1.9 × 10-2), supporting the existence of overlapping pathways in the pathogenesis of very distinct inflammatory phenotypes (combined trend test P value for all three diseases = 9 × 10-8) (Table 3; ref. 10).

Several further loci generating less strong evidence for association are of interest on the basis of their biological candidacy (Table 4). For example, rs9469220 (P = 8.7 × 10-7), mapping to the human leukocyte antigen (HLA) system class II region, was detected in the ‘second tier’ of associations (Table 4). This suggests a significant contribution of HLA to CD-susceptibility, though less marked than seen in classical autoimmune conditions such as RA and T1D. Another interesting candidate flagged in Table 4 is TNFAIP3 (TNFα induced protein 3), the closest gene to rs7753394 on chromosome 6q23. The protein product inhibits TNFα-induced NFκB-dependent gene expression by interfering with RIP- or TRAF-2-mediated transactivation signals, and hence interacts with the same pathway as CARD15 (NOD2). Markers with lower levels of significance include rs6478108 (P = 9.0 × 10-5) within TNFSF15 (tumour necrosis factor super family, member 15), previously reported to be associated with CD (ref. 70); and rs3816769 (P = 3.1 × 10-5), which maps within STAT3 (signal transducers and activator of transcription, member 3). On the X chromosome, rs2807261 (P = 1.3 × 10-7) maps 50-kb from the gene CD40LG (CD40 ligand, previously known as TNF superfamily, member 5), implicated in the regulation of B-cell proliferation, adhesion and immunoglobulin class switching71. As described in the section on T1D, a modest association between CD and SNPs in the vicinity of the PTPN11 gene on chromosome 12q24 (P = 1.5 × 10-3) probably reflects a locus influencing general autoimmune predisposition.

An emerging theme from molecular genetic studies of CD is the importance of defects in autophagy and the processing of phagocytosed bacteria. A number of other specific components within innate and adaptive immune pathways are also highlighted.

Hypertension (HT)

Hypertension refers to a clinically significant increase in blood pressure and constitutes an important risk factor for cardiovascular disease (http://www.who.int/whr/2002/en/; ref. 72). Lifestyle exposures that elevate blood pressure, including sodium intake, alcohol and excess weight73, are well-described risk factors. Genetic factors are also important74,75. Estimates of λs are approximately 2.5–3.5.

Experimental models have highlighted a number of quantitative trait loci but these have yet to translate into insights into human hypertension76. Linkage studies are consistent with susceptibility genes of modest effect size77 and well-replicated findings have yet to emerge from association approaches.

None of the variants previously associated with HT showed evidence for association in our study, although we note that some, such as those in the promoter of the WNK1 (WNK lysine deficient protein kinase 1) gene78,79, are not well tagged by the Affymetrix chip.

For HT there were no SNPs with significance below 5 × 10-7 (Table 3) but the number and distribution of association signals in the range 10-4 to 10-7 was similar to that of the other diseases studied (Table 4 and Supplementary Table 7). There are several possible explanations. First, HT may have fewer common risk alleles of larger effect sizes than some of the other complex phenotypes. If so, then identification of susceptibility variants for HT is likely to be reliant on the synthesis of findings from multiple large-scale studies. Second, the present study may have failed to detect genuine common susceptibility variants of large effect size because they happened to be poorly tagged by the set of SNPs genotyped in the current study. If so, further rounds of genotyping using resources that offer increased density (or complementary SNP sets), and/or improved analytical methods (for example, imputation-based) should facilitate their discovery. Third, study of HT may be more susceptible than other phenotypes to the diluting effects of misclassification bias due to the presence of hypertensive individuals within the control samples. If so, power can be improved in future studies by use of controls specifically screened to exclude individuals with elevated blood pressure.
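The third explanation can be made concrete with a small numerical sketch. The function below is illustrative only: it assumes a single biallelic SNP, a hypothetical true allelic odds ratio and control allele frequency, and a hypothetical fraction of unscreened controls who are in fact hypertensive. None of these values are taken from the study.

```python
def observed_odds_ratio(true_or, control_freq, misclassified_fraction):
    """Allelic odds ratio seen when a fraction of 'controls' are in fact cases.

    All inputs are hypothetical illustration values, not study estimates.
    """
    true_odds = control_freq / (1 - control_freq)
    case_odds = true_or * true_odds
    case_freq = case_odds / (1 + case_odds)
    # Allele frequency in a control group contaminated with undiagnosed cases.
    mixed_freq = ((1 - misclassified_fraction) * control_freq
                  + misclassified_fraction * case_freq)
    return case_odds / (mixed_freq / (1 - mixed_freq))


# A true odds ratio of 1.3 at a risk-allele frequency of 0.30,
# with 1% versus 25% of controls carrying the phenotype.
print(round(observed_odds_ratio(1.3, 0.30, 0.01), 3))
print(round(observed_odds_ratio(1.3, 0.30, 0.25), 3))
```

Under these assumptions the attenuation is negligible for a rare phenotype but noticeable for one as common as hypertension, which is the motivation for screened controls.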

The most strongly associated SNPs (Table 4) do not identify genes from physiological systems previously implicated by clinical or genetic studies in hypertension. The strongest signal overall is with rs2820037 on 1q43 (genotypic test, P = 7.7 × 10-7). The closest genes are RYR2 (encoding the ryanodine receptor 2), mutations in which are associated with stress-induced polymorphic ventricular tachycardia and arrhythmogenic right ventricular dysplasia80,81; CHRM3, encoding the cholinergic receptor muscarinic 3, a member of the G protein-coupled receptor family32; and ZP4, the product of which is zona pellucida glycoprotein 4 (ref. 81). The strong association signals on the X chromosome using an expanded reference group (see below and Supplementary Table 9) are of substantial interest but they do not identify known genes of obvious relevance to HT.

Rheumatoid arthritis (RA)

Rheumatoid arthritis is a chronic inflammatory disease characterized by destruction of the synovial joints resulting in severe disability, particularly in patients who remain refractory to available therapies82. Susceptibility to, and severity of, RA are determined by both genetic and environmental factors, with λs estimates ranging from 5 to 10 (ref. 83).

An association between RA and alleles of the HLA-DRB1 locus has long been established84. Despite extensive linkage85,86,87 and association studies, only one other RA susceptibility locus has been convincingly identified in Caucasians. In common with several autoimmune diseases including T1D, carriage of the T allele of the rs2476601 SNP in the PTPN22 (protein tyrosine phosphatase, non-receptor type 22) gene has been reproducibly associated with RA, conferring a genetic relative risk of approximately 1.8 (refs 88, 89). These known associations with HLA-DRB1 and PTPN22 explain around 50% of the familial aggregation of RA.

Both these previous associations emerge strongly here (Table 2). The most associated marker within PTPN22 (rs6679677: chromosome 1p13) is perfectly correlated (HapMap CEU data r2 = 1) with the functionally relevant SNP (rs2476601) described previously, and the effect size is consistent with previous estimates89. Amongst other putative RA susceptibility genes, two SNPs mapping to CTLA-4 (cytotoxic T-lymphocyte associated 4) rs3087243 and rs11571300 were only nominally significant (P = 0.085 and P = 0.034, respectively) (Supplementary Table 10).

RA was the sole disease for which the sex-differentiated analysis generated a strong signal due to different genetic effects in males and females. The SNP rs11761231 (chromosome 7) generates a P value of 3.9 × 10-7 for the 2-degrees of freedom (d.f.) sex-differentiated test which combines trend tests in males and females (Table 3). (The trend test ignoring the sex of the individuals has a P value of 1.7 × 10-6.) This genotype has no effect on disease status in males, but a strong apparently additive effect in females (P value in a logistic regression model with additive log-odds is 0.68 in males and 6.8 × 10-8 in females, additive OR for females 1.32), and may represent one of the first sex-differentiated effects in human diseases. Cluster plots for this SNP seem good, but it is surrounded by recombination hotspots and has no other SNPs on the Affymetrix chip with r2 > 0.1 (Supplementary Fig. 11). Some caution is therefore required, but this represents a potentially interesting finding which warrants further investigation, particularly given the sex-related prevalence difference characteristic of this condition.
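The logic of a 2-d.f. sex-differentiated test can be sketched as follows. This is a rough consistency check rather than a reproduction of the analysis: it assumes that the two sexes contribute independent 1-d.f. statistics whose sum is referred to a chi-square distribution with 2 d.f., and it uses the per-sex logistic regression P values quoted above as stand-ins for the per-sex trend tests. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def chi2_from_p(p_two_sided: float) -> float:
    """1-d.f. chi-square statistic implied by a two-sided P value."""
    z = NormalDist().inv_cdf(p_two_sided / 2)  # lower-tail quantile (negative)
    return z * z


def sex_differentiated_p(chi2_male: float, chi2_female: float) -> float:
    """P value for the sum of two independent 1-d.f. statistics (2 d.f.).

    For 2 d.f. the chi-square survival function is exactly exp(-x / 2).
    """
    return math.exp(-(chi2_male + chi2_female) / 2)


# Per-sex P values quoted in the text for rs11761231 (assumed stand-ins).
p_combined = sex_differentiated_p(chi2_from_p(0.68), chi2_from_p(6.8e-8))
print(f"{p_combined:.1e}")
```

Under these assumptions the combined P value is of the same order as the reported 3.9 × 10-7, with almost all of the signal coming from the female stratum.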

None of the 9 SNPs with nominal P values in the range 10-5 to 5 × 10-7 (Table 4) maps to loci previously associated with RA. Of particular interest is the association of SNPs mapping close to the genes encoding both the alpha and beta chains of the IL2 receptor (rs2104286 in the case of IL2RA; rs743777 in the case of IL2RB). The IL2 receptor mediates IL2 stimulation of T lymphocytes and is thereby thought to have an important role in preventing autoimmunity. A rare 4-base-pair deletion of IL2RA has been associated with development of severe autoimmune disease90, and there is evidence (from previous data91, and from this study and its follow-up) that SNPs within the IL2RA gene region are associated with T1D (see also T1D section).

Several of the SNPs with nominal significance in the range 10-4 to 10-5 (Supplementary Table 7) map to genes with plausible biological relevance. Examples include SNPs within genes implicated in the TNF pathway (for example, rs2771369 in TNFAIP2 (tumour necrosis factor, alpha-induced protein 2)) or in the regulation of T-cell function (rs854350 in GZMB (granzyme B) and rs4750316 in PRKCQ (protein kinase C, theta)). The association with rs10786617 in KAZALD1 (Kazal-type serine protease inhibitor domain-containing protein 1 precursor), a gene whose product is known to have a role in bone regeneration after injury, may be relevant to the development of bone erosions in RA.

RA and T1D were already known to have two disease susceptibility genes in common: at the MHC, and at PTPN22. As detailed elsewhere, our study provides data indicating that this list can be extended to include variants around IL2RA (chromosome 10p15), PTPN2 (chromosome 18p11) and the chromosome 12q24 region (Supplementary Table 11), all apparently novel in RA.

Type 1 diabetes (T1D)

Type 1 diabetes is a chronic autoimmune disorder with onset usually in childhood92. The λs for T1D is ∼15 and twin data suggest that over 85% of the phenotypic variance is due to genetic factors93. There are six genes/regions for which there is strong pre-existing statistical support for a role in T1D-susceptibility: these are the major histocompatibility complex (MHC), the genes encoding insulin, CTLA-4 (cytotoxic T-lymphocyte associated 4) and PTPN22 (protein tyrosine phosphatase, non-receptor type 22), and the regions around the interleukin 2 receptor alpha (IL2RA/CD25) and interferon-induced helicase 1 genes (IFIH1/MDA5)94. However, these signals can explain only part of the familial aggregation of T1D. Five of these previously identified associations were detected in this scan (P ≤ 0.001) (Table 2 and Supplementary Table 10), the exception being the INS gene discussed above.

In this study, single-point analyses revealed three novel regions (on chromosomes 12q13, 12q24 and 16p13) showing strong evidence of association (P < 5 × 10-7; Table 3). Four further regions attained similar levels of significance either through multilocus analyses (chromosomes 4q27 and 12p13: Table 3, Supplementary Fig. 12), or through the combined analysis of autoimmune cases (chromosomes 18p11 and the 10p15 CD25 region: Table 3, Supplementary Fig. 13). The associations with T1D for chromosomes 12q13, 12q24, 16p13 and 18p11 have been confirmed in independent and multiple populations10.

The two signals on chromosome 12 (at 12q13 and 12q24) map to regions of extensive linkage disequilibrium covering more than ten genes (Fig. 5). Several of these represent functional candidates because of their presumed roles in immune signalling, considered to be a major feature of T1D-susceptibility. These include ERBB3 (receptor tyrosine-protein kinase erbB-3 precursor) at 12q13 and SH2B3/LNK (SH2B adaptor protein 3), TRAFD1 (TRAF-type zinc finger domain containing 1) and PTPN11 (protein tyrosine phosphatase, non-receptor type 11) at 12q24. For these signal regions in particular, extensive resequencing, further genotyping and targeted functional studies will be essential steps in identifying which gene, or genes, are causal95. Of those listed, PTPN11 is a particularly attractive candidate given a major role in insulin and immune signalling96. It is also a member of the same family of regulatory phosphatases as PTPN22, already established as an important susceptibility gene for T1D and other autoimmune diseases94,97. Indeed, the 12q24 variant most associated with T1D also features in both the CD and RA scans, generating a combined signal for all autoimmune cases of 9.3 × 10-10 (Supplementary Table 11).

In contrast, available annotations suggest that the 16p13 region contains only two genes of unknown function, KIAA0350 and dexamethasone-induced transcript (Fig. 5). Also, the region of association identified on 18p11 (Supplementary Fig. 14), which seems to confer susceptibility to all three autoimmune conditions studied (combined trend test P = 9 × 10-8, P = 4.6 × 10-8 for CD, 1.9 × 10-2 for RA, and 1.9 × 10-6 for T1D: Supplementary Table 11), maps to a single gene, PTPN2 (protein tyrosine phosphatase, non-receptor type 2), a member of the same family as PTPN22 and PTPN11 and involved in immune regulation96.

Our scan found associations with SNPs within the chromosome 10p15 region containing CD25, encoding the high-affinity receptor for IL-2. This is consistent with a previous report of associations of this region with T1D (ref. 91). The CD25 region has previously been shown to be associated with Graves’ disease98 and the present study also provides evidence of association with RA (combined trend test P = 5 × 10-8, P = ∼7 × 10-6 for RA and T1D separately, Supplementary Table 11). This finding has clear biological connections to the evidence of association between T1D and a region of 4q27 revealed by the multilocus analysis (Supplementary Table 12, Supplementary Fig. 12). This region contains the genes encoding both IL-2 and IL-21. Together with studies in the NOD (nonobese diabetic) mouse model of T1D, which have shown that a major non-MHC locus (Idd3) reflects regulatory variation of the Il2 gene99, our results point to the primary importance of the IL-2 pathway in T1D and other autoimmune diseases.

One further region deserves comment. In the multilocus analysis, there was increased support for a region on chromosome 12p13 containing several candidate genes, including CD69 (CD69 antigen (p60, early T-cell activation antigen)) and multiple CLEC (C-type lectin domain family) genes. In contrast to the chromosome 4 region where the effect of imputation is to tip an already-strong signal (5.01 × 10-7 for typed rs17388568, trend test) over the arbitrary threshold of 5 × 10-7, the 12p13 locus involves a more marked change between imputed and actual (7.2 × 10-7 for rs11052552, general test). Replication studies of this imputed SNP to date have produced equivocal results (for details see ref. 10).

Type 2 diabetes (T2D)

Type 2 diabetes is a chronic metabolic disorder typically first diagnosed in the middle to late adult years100. Strongly associated with obesity, the condition features defects in both the secretion and peripheral actions of insulin101. The appreciable familial aggregation of T2D (an estimated λs of ∼3.0 in European individuals)73 reflects both shared family environment and genetic predisposition. Heritability values vary widely with most estimates between 30 and 70%101.

To date, robust, widely replicated associations in non-isolate populations are limited to variants in three genes: PPARG (encoding the peroxisomal proliferative activated receptor gamma; P12A (ref. 102)), KCNJ11 (the inwardly-rectifying Kir6.2 component of the pancreatic beta-cell KATP channel; E23K (ref. 103)) and TCF7L2 (transcription factor 7-like 2; rs7903146 (refs 104, 105)).

All three of these signals are detected here with effect-sizes consistent with previous reports (Table 2). A cluster of SNPs on chromosome 10q, within TCF7L2, represented by rs4506565 (trend test, OR 1.36, P = 5.7 × 10-13) generates the strongest association signal for T2D (Table 3, Fig. 5). Rs4506565 is in tight linkage disequilibrium (r2 of 0.92 in the CEU component of HapMap) with rs7903146, the variant with the strongest aetiological claims104,106. In fact, our imputation analysis confirms that rs7903146, though unrepresented on the chip, is responsible for the strongest association effect in this region (Fig. 5). TCF7L2 acts within the WNT-signalling pathway, and effects on diabetes risk seem to be mediated predominantly through beta-cell dysfunction107.

As expected, given existing effect-size estimates, the signals associated with variants within the other established T2D-susceptibility genes, KCNJ11 (rs5215, r2 of 0.9 with rs5219, E23K) and PPARG (rs17036328, r2 of 1 with rs1801282, P12A) are less dramatic (trend test, OR 1.15 and 1.23 respectively, both P = ∼0.001). These examples illustrate how genuine disease-susceptibility variants can generate association signals which would not attract immediate attention for follow-up in the genomewide context.

Apart from TCF7L2, the scan reveals two signals for T2D with P values less than 5 × 10-7 (Table 3, Fig. 5). The first of these maps within the FTO (fat-mass and obesity-associated) gene on chromosome 16q. Several adjacent SNPs (including rs9939609, rs7193144 and rs8050136) generate signals characterized by a per-allele OR for T2D of ∼1.25 and a risk-allele frequency of ∼40% in controls. As recently described in follow-up studies prompted by this finding, the effect of these variants on T2D-risk has been replicated and is mediated entirely by their marked effect on adiposity24.

The second of these, and the third T2D association signal overall (chromosome 6p22), features a cluster of highly associated SNPs (including rs9465871) with risk-allele frequencies between 18 and 35%, mapping to intron 5 of the CDKAL1 (CDK5 regulatory subunit associated protein 1-like 1) gene. Although the function of CDKAL1 is not known, it shares homology at the protein domain level with CDK5 regulatory subunit associated protein 1 (CDK5RAP1). CDK5RAP1 is known to inhibit the activation of CDK5, a cyclin-dependent kinase which has been implicated in the maintenance of normal beta-cell function108. Our own follow-up studies, and scans by other groups, have shown strong replication of this finding19,20,21,22. The effect of this variant on T2D-risk shows significant departures from additivity (Supplementary Table 8).

One notable inclusion amongst the variants with more modest association signals is a cluster of SNPs on chromosome 10 including rs10748582 and rs7923866, which generate trend test P values between 10-4 and 10-5. This cluster maps in the vicinity of the HHEX (homeobox, hematopoietically expressed) and IDE (insulin-degrading enzyme) genes, in a region recently highlighted in a GWA scan for T2D performed in 1363 subjects of French origin109. The SNPs showing association in our data are proxies for those reported in the French study and generate similar effect-size estimates for T2D.

Of the three other regions highlighted by the French scan109, none can be confirmed by our data. The SNP in SLC30A8 associated with T2D in the French report (rs13266634) is poorly correlated with SNPs on the Affymetrix chip (r2 < 0.01), and extensive recombination events in the region limit the value of data-imputation methods. Coverage of the LOC387761 and EXT2 signals is considerably better, but, for these, neither genotyped nor imputed SNPs show evidence for association with T2D.

WTCCC data contributed to identification of two additional robustly replicating T2D signals, mapping to the IGF2BP2 gene and CDKN2A/CDKN2B regions19,21,22, although neither generated impressive P values on the primary scan analysis (neither single-point P was <10-4). The latter signal maps to the same region as the CAD signal on chromosome 9 though different SNPs are involved. The other SNPs in Table 4 do not map to genes or regions previously implicated in T2D pathogenesis, and replication efforts to date have not identified any confirmed signals19.

Expanded reference group analyses

For a fixed number of cases, power of a case-control study can be increased by enlarging the reference group. Our main analyses used a control:case ratio of 1.5:1 for each disease. The availability of the other 6 disease data sets gave us the opportunity to expand the reference group up to a ratio of ∼7.5:1, with potential reciprocal benefits for the analysis of each disease. For BD and T2D the expanded reference group comprised the 58C and UKBS controls supplemented by the other 6 disease sets; for CAD and HT this expanded reference group was reduced to exclude HT and CAD respectively; for CD, RA and T1D, the reference group was augmented only by the cases from the non-autoimmune diseases.
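The ratios quoted above follow directly from the approximate sample sizes given in the abstract (about 2,000 cases per disease and about 3,000 shared controls). The sketch below reproduces that arithmetic; the function names are hypothetical, the counts are rounded rather than post-quality-control figures, and the "effective size" measure is a common rough approximation (the inverse of 1/cases + 1/reference), not a quantity reported in the study.

```python
# Approximate sample sizes from the abstract; post-QC counts differ slightly.
CASES_PER_DISEASE = 2000
SHARED_CONTROLS = 3000


def reference_ratio(n_extra_case_sets: int) -> float:
    """Reference:case ratio when the shared controls are supplemented by
    the case sets of `n_extra_case_sets` other diseases."""
    reference = SHARED_CONTROLS + n_extra_case_sets * CASES_PER_DISEASE
    return reference / CASES_PER_DISEASE


def effective_size(n_cases: int, n_reference: int) -> float:
    """Rough information measure: inverse of (1/n_cases + 1/n_reference)."""
    return 1.0 / (1.0 / n_cases + 1.0 / n_reference)


print(reference_ratio(0))  # primary analysis -> 1.5
print(reference_ratio(6))  # BD, T2D: all six other disease sets -> 7.5
print(reference_ratio(5))  # CAD, HT: five other disease sets -> 6.5
print(reference_ratio(4))  # CD, RA, T1D: four non-autoimmune sets -> 5.5

gain = effective_size(2000, 15000) / effective_size(2000, 3000)
print(round(gain, 2))  # -> 1.47
```

On this approximation, moving from a 1.5:1 to a 7.5:1 ratio is worth roughly as much as a 47% increase in a balanced sample, which is why the gain is useful but bounded for a fixed number of cases.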

The utility of the expanded reference group approach was demonstrated by increased evidence for association at most of the loci that received strongest support from our primary analysis, including many of the signals at loci known to show robust association in T1D, T2D and CD (Supplementary Table 9). Additionally, this analysis elevated several loci with modest levels of statistical significance in the primary analysis, to the top tier of statistical significance (P < 5 × 10-7).

Our data indicate that this approach may be a useful adjunct to conventional analysis and that loci identified as highly significant should be considered for follow up. There are two important caveats. First, susceptibility genes that influence both the test disease and one or more of the diseases included in the reference group will cause loss of power. Second, a ‘mirror-image’ effect could occur whereby a strong association within the expanded reference sample (for example, HLA in autoimmune diseases) causes spurious association with the opposite allele in the test disease. Thus, a positive association using an expanded reference group must be interpreted within the context of association findings in the diseases included within the reference group.
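The ‘mirror-image’ caveat can be illustrated numerically. The sketch below uses hypothetical allele frequencies and rounded group sizes: an allele with frequency 0.20 in true controls and in the test disease (that is, no real effect), but with frequency 0.40 in the cases of another disease that has been added to the reference group.

```python
def allelic_odds_ratio(case_freq, reference_freq):
    """Allelic odds ratio of cases versus a reference group."""
    return (case_freq / (1 - case_freq)) / (reference_freq / (1 - reference_freq))


def pooled_frequency(groups):
    """Allele frequency in a pooled group; groups = [(n_individuals, freq), ...]."""
    total = sum(n for n, _ in groups)
    return sum(n * f for n, f in groups) / total


# Hypothetical values: 3,000 controls at 0.20 plus 2,000 cases of another
# disease in which the allele is strongly enriched (0.40).
reference_freq = pooled_frequency([(3000, 0.20), (2000, 0.40)])
spurious_or = allelic_odds_ratio(0.20, reference_freq)

print(round(reference_freq, 2))  # -> 0.28
print(round(spurious_or, 3))     # -> 0.643
```

Although the test disease has no true association at this locus, the allele appears protective (odds ratio below 1), which is equivalent to a spurious risk effect of the opposite allele.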

Disease models

It is of interest to consider which statistical models best describe the data at and between loci that are strongly associated with disease status. Biological interpretation of these statistical models is not straightforward but they can help in choosing more powerful statistical tools for detecting associations.

First, consider separately each of the 19 non-MHC SNPs showing strong evidence for association on either the trend or genotypic test in Table 3. For four of these 19, the P value on the 2-d.f. genotypic test was smaller than that on the 1-d.f. trend test (Table 3). When comparing disease models, these were also the four SNPs with evidence for departure from a simple model in which odds of disease increase multiplicatively with the number of copies of the risk allele (Supplementary Table 8). This supports our view that the genotypic test should be carried out in addition to the trend test, although it should perhaps be viewed more cautiously for two reasons: it is more susceptible to genotyping errors; and (on the basis of our findings) experience does not favour strong dominance effects.
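The contrast between the two tests can be sketched on a single 2 × 3 genotype table. The code below is a minimal illustration, assuming a Cochran-Armitage trend test with additive scores (0, 1, 2) and a Pearson chi-square for the genotypic test; the genotype counts are hypothetical and chosen to show a recessive-like pattern, and the study's own implementation may differ in detail.

```python
import math


def trend_test(cases, controls, scores=(0, 1, 2)):
    """Cochran-Armitage trend test (1 d.f.) on genotype counts (AA, AB, BB)."""
    totals = [r + s for r, s in zip(cases, controls)]
    n_cases, n_all = sum(cases), sum(totals)
    n_controls = n_all - n_cases
    sum_xr = sum(x * r for x, r in zip(scores, cases))
    sum_xn = sum(x * n for x, n in zip(scores, totals))
    sum_x2n = sum(x * x * n for x, n in zip(scores, totals))
    chi2 = (n_all * (n_all * sum_xr - n_cases * sum_xn) ** 2
            / (n_cases * n_controls * (n_all * sum_x2n - sum_xn ** 2)))
    return chi2, math.erfc(math.sqrt(chi2 / 2))  # chi-square tail, 1 d.f.


def genotypic_test(cases, controls):
    """Pearson chi-square (2 d.f.) on the 2 x 3 genotype table."""
    totals = [r + s for r, s in zip(cases, controls)]
    n_all = sum(totals)
    chi2 = 0.0
    for row in (cases, controls):
        n_row = sum(row)
        for observed, n_col in zip(row, totals):
            expected = n_row * n_col / n_all
            chi2 += (observed - expected) ** 2 / expected
    return chi2, math.exp(-chi2 / 2)  # chi-square tail, 2 d.f.


# Hypothetical counts (AA, AB, BB): excess risk confined to BB homozygotes.
cases, controls = (900, 800, 300), (1400, 1300, 300)
trend_chi2, trend_p = trend_test(cases, controls)
geno_chi2, geno_p = genotypic_test(cases, controls)
print(f"trend: chi2={trend_chi2:.2f}, P={trend_p:.1e}")
print(f"genotypic: chi2={geno_chi2:.2f}, P={geno_p:.1e}")
```

For these counts the genotypic test gives the smaller P value despite its extra degree of freedom, because the trend statistic captures only the additive component of the departure from the null.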

A separate question relates to the best models for the way in which different loci combine to affect susceptibility to a disease and, as a consequence, to the extent to which methods explicitly allowing interactions between loci should be employed to detect associations110. None of the analyses reported here includes such interactions, so we are not well placed to address the general question. Nonetheless, within each collection with multiple associated regions (CD, T1D and T2D) we considered all pairs of non-MHC SNPs in Table 3 and looked for a departure from the model in which the two loci combine to increase log-odds in an additive fashion. We found suggestive evidence of a departure from multilocus additivity between rs1000113 and rs10761659 in CD (unadjusted P value = 0.002) and between rs9465871 and rs4506565 in T2D (unadjusted P value = 0.004). Further investigation of this question, preferably on unbiased sets of disease loci found through the application of single locus and interaction-based approaches, would seem warranted.
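What a departure from additivity on the log-odds scale means can be shown with a deliberately simplified sketch. It assumes each locus is reduced to binary carrier status (0 or 1), so that the data form a 2 × 2 × 2 table, and it uses a Wald test on the interaction contrast. This is a stand-in for illustration, not the model fitted in the study, and the counts are hypothetical.

```python
import math


def additivity_departure(cases, controls):
    """Wald test for departure from additive log-odds at two binary loci.

    cases / controls: dicts keyed by (locus1, locus2) in {0, 1} x {0, 1}.
    Returns (interaction contrast, z statistic, two-sided P value).
    """
    log_odds = {k: math.log(cases[k] / controls[k]) for k in cases}
    # Zero when the two loci combine additively on the log-odds scale.
    delta = (log_odds[(1, 1)] - log_odds[(1, 0)]
             - log_odds[(0, 1)] + log_odds[(0, 0)])
    se = math.sqrt(sum(1 / cases[k] + 1 / controls[k] for k in cases))
    z = delta / se
    return delta, z, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical counts. Odds of 0.1, 0.2, 0.3 and 0.6 are exactly additive
# on the log scale (odds ratios 2 and 3 combine to 6).
controls = {(0, 0): 1000, (1, 0): 1000, (0, 1): 1000, (1, 1): 1000}
additive_cases = {(0, 0): 100, (1, 0): 200, (0, 1): 300, (1, 1): 600}
excess_cases = {(0, 0): 100, (1, 0): 200, (0, 1): 300, (1, 1): 900}

print(additivity_departure(additive_cases, controls))
print(additivity_departure(excess_cases, controls))
```

In the first table the contrast is zero to within rounding; in the second, carriers at both loci have higher odds than the additive model predicts and the contrast is positive.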

Discussion

We have studied seven common familial diseases by genome-wide association analysis in 16,179 individuals. Our findings inform understanding of the genetic basis of the diseases concerned and provide methodological insights relevant to the pursuit of GWA studies in general.

A simple but important observation is that GWA analysis provides a highly effective approach for exploring the genetic underpinnings of common familial diseases. Our yield of novel, highly significant association findings is comparable to, or exceeds, the number of those hitherto generated by candidate gene or positional cloning efforts. For many of the compelling signals, replication has already been obtained, including regions on chromosomes 3p21, 5q33, 10q24 and 18p11 for CD (ref. 23); 12q13, 12q24, 16p13 and 18p11 for T1D (ref. 10); and 6p22 and 16q12 for T2D (refs 19, 20, 21, 22, 24). For others, replication is required to establish a definitive relationship with disease. Additional findings of particular interest include the identification of several loci that seem to influence susceptibility to multiple autoimmune diseases, and the suggestion of a novel locus for RA which shows sex-specific effects.

Our study enables us to make several general recommendations relevant to GWA studies. The first relates to the importance of careful quality control. In such large data sets, small systematic differences can readily produce effects capable of obscuring the true associations being sought111,112. We implemented extensive quality control checks to minimize differences in sample DNA concentration, quality and handling procedures and combined a new genotype-calling algorithm (CHIAMO) with a set of filtering heuristics to select SNPs for further analysis. Given that infallible detection of incorrect genotype calls is not yet possible, the criteria used for SNP exclusion need to strike a compromise between stringency (which may discard true signals or generate spurious positives through differential missingness) and leniency (with the danger that true signals are swamped by spurious findings due to poor genotype calling). As such, systematic visual inspection of cluster plots for SNPs of interest remains an integral part of the quality control process.

The potential for population structure to undermine inferences in case-control association studies has long been debated113 but limited empirical data have been available to assess the issue. Our study highlighted several loci, some known and some new, which demonstrate substantial geographical variation in allele frequencies across Britain (Table 1), most probably due to natural selection in ancestral populations. Outside these loci, the effects of population structure are relatively minor, and do not represent a major source of confounding, provided that individuals with appreciable non-European ancestry are excluded. Although these conclusions may not generalize to studies in other locations, this finding reinforces the logistical and economic benefits of the case-control design over alternatives (such as family-based association studies).

Our study allowed us to address another important methodological issue: the adequacy, or otherwise, of using a common set of controls, rather than a sample recruited explicitly for use with a defined disease sample. It is often assumed that failure to match cases and controls for socio-demographic variables will lead to substantial inflation of the type I error rate. Our study demonstrates that, within the context of large-scale genetic association studies, for British populations at least, this concern has been overstated. A related argument against use of population controls relates to the perceived impact of misclassification bias when a proportion of controls meet the criteria used to define cases. However, the consequent loss of power is modest unless the trait of interest is very common6. Given the above, the present study provides a compelling case for both the suitability and efficiency of the common control design in Britain and warrants its serious consideration elsewhere. Further benefits can be expected from use of this common control genotype data set in future GWA studies in Britain. Finally, in failing to detect significant differences in performance between the epidemiological sample (58C) and that derived from blood donors (UKBS), we validate the use of the latter samples for cost-effective, large-scale control DNA provision.
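The arithmetic behind the misclassification argument can be sketched as follows. This is a rough approximation and not a calculation from the study. It assumes that unscreened controls are a random population sample in which a fraction equal to the disease prevalence are in fact cases. The case-control allele frequency difference is then attenuated by (1 − prevalence), and the sample size needed for unchanged power grows by roughly 1/(1 − prevalence)². Function names and example values are hypothetical.

```python
def unscreened_control_freq(p_case, p_unaffected, prevalence):
    """Allele frequency in population controls, a fraction `prevalence` of whom are cases."""
    return prevalence * p_case + (1.0 - prevalence) * p_unaffected

def sample_size_inflation(prevalence):
    """Approximate factor by which sample size must grow to offset the attenuation.

    The frequency difference shrinks by (1 - prevalence), so the non-centrality of
    a 1-d.f. test shrinks by roughly (1 - prevalence) squared. The change in the
    variance of the control frequency is ignored in this approximation.
    """
    return 1.0 / (1.0 - prevalence) ** 2

# Hypothetical prevalences, for illustration only
for k in (0.001, 0.01, 0.05, 0.20):
    print(f"prevalence {k:.3f}: sample size factor {sample_size_inflation(k):.3f}")
```

Under these assumptions the penalty stays within a few per cent for prevalences up to about 1%, and becomes substantial only for very common traits.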

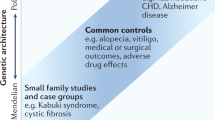

In terms of general biological insights, the most profound relate to inferences about the allelic architecture of common traits. The novel variants we have uncovered are characterized by modest effect size (that is, per-allele ORs between 1.2 and 1.5) and even these estimates are likely to be inflated114. We identified no additional common variants of very large effect (akin to HLA in T1D: Supplementary Fig. 15). The observed distribution of effect sizes is consistent with models based on theoretical considerations and empirical data from animal models87,115,116 that suggest that, for any given trait, there will be few (if any) large effects, a handful of modest effects and a substantial number of genes generating small or very small increases in disease risk.

There are several important corollaries. Notwithstanding the incomplete coverage afforded by the genotyping reagents employed, most of the susceptibility effects yet to be uncovered for these diseases (at least those attributable to, or tagged by, common SNPs) are likely to have effects of similar or smaller magnitude to those we have highlighted. Beyond the signals with the strongest evidence for association, most of which are likely to be real (and many of which have already been confirmed), there will be many additional susceptibility variants for which the WTCCC provides some evidence, but for which extensive replication will be required to establish validity. PPARG and KCNJ11 provide examples of proven susceptibility genes (for T2D) that generated only modest evidence for association within the WTCCC, and which would only have been revealed by such replication efforts. Given the likely preponderance of susceptibility variants of small effect, the potential for identifying further loci is limited only by the clinical resources available for replication (assuming suitable study design, accurate genotyping and appropriate analysis and inference). Provided the attribution of a causal relationship with the trait of interest is robust, even variants of very small effect can offer fundamental biological insights.

The patterns of allelic architecture uncovered mean that replication efforts will need to feature comparably large sample sizes: even if one accepts more relaxed significance thresholds given the prior evidence, one has to consider the inflation in effect-size estimates in the primary study. Caution is required in reaching negative conclusions on the basis of a single failed attempt at replication, or any set of replication attempts that are inadequately powered.

One of our major design considerations was sample size. We set out to include samples larger than those previously examined for genome-wide association, and our results suggest that such large sample sizes were necessary. Even with 2,000 cases and 3,000 controls, adequate power is restricted to common variants of relatively large effect (see Supplementary Table 2). We carried out an experiment to see which SNPs showing strong evidence of association in the full data (that is, signals outside MHC with trend test P < 5 × 10⁻⁷), would have been detected at that same threshold in only a subset of our data (Fig. 6). Because it focuses on a particular but arbitrary P-value threshold, some care is needed in interpreting the figure. Nonetheless, for subsamples of 1,000 cases and 1,000 controls, of the 16 loci detected in the full study, we would have been certain of seeing only 2, with an expectation of about 6; for subsamples of 1,500 cases and 1,500 controls, we could expect to have seen about 9. These figures provide stark evidence that the larger the study sample, the more loci can be expected to reach threshold significance values. Indeed, given the likely distribution of effect sizes for most complex traits (see above), there are strong grounds for the prosecution of GWA studies on an even larger scale than ours, and, wherever possible, combining the results from existing GWA scans performed for the same trait. To assist such efforts, individual level data from this study will be widely available through the Consortium’s Data Access Committee (follow links from http://www.wtccc.org.uk).

Figure 6. For the 16 SNPs in Table 3 (outside the MHC) with P values for the trend test below 5 × 10⁻⁷, we randomly generated 1,000 subsets of our full data set corresponding to case-control studies with different numbers of cases, and the same number of controls (x axis). The y axis gives the proportion of subsamples of a given size in which that SNP achieved a P value for the trend test below 5 × 10⁻⁷. SNPs are numbered according to the row in which they occur in Table 3 (so that, for example, the CAD hit is numbered 2, and the TCF7L2 hit on chromosome 10 for T2D is numbered 20).
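The subsampling experiment can be sketched for a single SNP as follows. This is a minimal illustration and not the code used in the study. It assumes genotypes are held as lists of 0/1/2 allele counts, that subsets are drawn without replacement, and that the trend test is the Cochran-Armitage test with scores 0, 1 and 2. The function names are hypothetical.

```python
import math
import random

def trend_test(case_counts, control_counts):
    """Cochran-Armitage trend test (1 d.f.) with genotype scores 0, 1, 2.

    Each argument is a triple of genotype counts. Returns (chi2, P value).
    """
    R, S = sum(case_counts), sum(control_counts)
    N = R + S
    totals = [r + s for r, s in zip(case_counts, control_counts)]
    sum_wr = sum(w * r for w, r in enumerate(case_counts))
    sum_wn = sum(w * n for w, n in enumerate(totals))
    sum_w2n = sum(w * w * n for w, n in enumerate(totals))
    denom = R * S * (N * sum_w2n - sum_wn ** 2)
    if denom == 0:  # monomorphic SNP or an empty group: no evidence either way
        return 0.0, 1.0
    chi2 = N * (N * sum_wr - R * sum_wn) ** 2 / denom
    # Upper tail of a chi-squared distribution with 1 d.f.
    return chi2, math.erfc(math.sqrt(chi2 / 2.0))

def genotype_counts(genotypes):
    """Tabulate a list of 0/1/2 genotypes into counts [n0, n1, n2]."""
    return [genotypes.count(g) for g in (0, 1, 2)]

def detection_rate(case_genos, control_genos, n_cases, n_controls,
                   reps=1000, threshold=5e-7, rng=None):
    """Proportion of random subsamples in which the trend-test P value is below `threshold`."""
    rng = rng or random.Random()
    hits = 0
    for _ in range(reps):
        cases = rng.sample(case_genos, n_cases)
        controls = rng.sample(control_genos, n_controls)
        _, p = trend_test(genotype_counts(cases), genotype_counts(controls))
        hits += p < threshold
    return hits / reps
```

Calling `detection_rate` over a grid of subsample sizes, with equal numbers of cases and controls, would give one curve of the kind plotted in the figure.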

In our study, T1D and CD, the conditions showing strongest familial aggregation (as quantified by their sibling relative risks, λs), generated the largest number of highly significant associations. This relationship was not sustained in comparisons between the other five diseases. It is important to recognize that the association signals so far identified account for only a small proportion of overall familiality. There is a disparity in scale between the modest locus-specific λs effects attributable to the identified associations (for instance, the prominent TCF7L2 signal for T2D translates into a λs of only 1.03) and the estimates of overall familiality that reflect the combined effects of all genes and shared family environment. These estimates demonstrate the limited potential of the variants thus far identified (singly or in combination) to provide clinically useful prediction of disease117,118.
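To illustrate the scale of a locus-specific λs, the sketch below evaluates the standard expression for a single biallelic locus under a multiplicative model, in which each copy of the risk allele multiplies disease risk by a constant factor. The model, the function name and the input values are assumptions made for illustration. They are not the estimates behind the figure quoted above, and a per-allele odds ratio only approximates the relative risk.

```python
def locus_lambda_s(risk_allele_freq, allele_relative_risk):
    """Sibling relative risk attributable to one locus under a multiplicative model.

    With risk allele frequency p, q = 1 - p and per-allele relative risk g:
        w = p * q * (g - 1)**2 / (p * g + q)**2
        lambda_s = (1 + w / 2)**2
    """
    p = risk_allele_freq
    q = 1.0 - p
    g = allele_relative_risk
    w = p * q * (g - 1.0) ** 2 / (p * g + q) ** 2
    return (1.0 + w / 2.0) ** 2

# Hypothetical inputs: a common allele with a modest per-allele effect
print(round(locus_lambda_s(0.30, 1.37), 4))
```

Under this model, the locus-specific λs values of independent loci multiply. A handful of loci, each with λs only slightly above 1, therefore still combine to a value far below the overall sibling relative risks observed for these diseases.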

The identification and characterization of the aetiological variants that underlie replicated associations will necessitate extensive fine-mapping and functional validation. We view the WTCCC study and data set as an important first step towards harnessing the powerful molecular genomic tools now available to dissect the biological basis of common disease and translating those findings into improvements in human health.

Methods summary

A detailed description of materials and methods is given in Methods. The workflow and organization of the project are given in Supplementary Fig. 16. Case series came from previously established collections with nationally representative recruitment: 2,000 samples were genotyped for each. The control samples came from two sources: half from the 1958 Birth Cohort and the remainder from a new UK Blood Service sample. The latter collection was established specifically for this study and is a UK national repository of anonymized DNA samples from 3,622 consenting blood donors. The vast majority of subjects were self-reported as of European Caucasian ancestry. All DNA samples were requantified and tested for degradation and PCR amplification. Genotyping was performed using GeneChip 500K arrays at the Affymetrix Services Lab (California): arrays not passing the 93% call rate threshold at P = 0.33 with the Dynamic Model algorithm were repeated. CEL (cell intensity) files were transferred to WTCCC for quantile normalization, and genotypes called using a new genotyping algorithm, CHIAMO, developed for this project. QC/QA measures included sample call rate, overall heterozygosity and evidence of non-European ancestry (809 samples excluded; 16,179 retained for analysis). SNPs were excluded from analysis because of missing data rates, departures from Hardy–Weinberg equilibrium and other metrics (31,011 excluded; 469,557 retained). Standard 1-d.f. and 2-d.f. tests of case-control association were supplemented with bayesian approaches, multilocus methods (data imputation) and analyses with combined data sets, either as additional cases (to detect variants influencing multiple phenotypes) or as an expanded reference group (to increase power). Results for each SNP for all analyses reported will be available from http://www.wtccc.org.uk, as will details allowing other researchers to apply for access to WTCCC genotype data. 
Software packages developed within the WTCCC are available on request (see Methods for details).
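SNP-level exclusions of the kind summarized above, on missing data rate and on departure from Hardy–Weinberg equilibrium, can be sketched as follows. This is a minimal illustration and not the WTCCC pipeline. The thresholds are placeholders rather than the study's criteria, the function names are hypothetical, and a chi-squared approximation is used where an exact test may be preferable for rare alleles.

```python
import math

def hwe_test(n_aa, n_ab, n_bb):
    """Chi-squared (1 d.f.) test of Hardy-Weinberg equilibrium. Returns (chi2, P value)."""
    n = n_aa + n_ab + n_bb
    if n == 0:
        return 0.0, 1.0
    p = (2 * n_aa + n_ab) / (2.0 * n)  # frequency of allele A
    q = 1.0 - p
    if p == 0.0 or q == 0.0:  # monomorphic SNP: the test is uninformative
        return 0.0, 1.0
    expected = (n * p * p, 2.0 * n * p * q, n * q * q)
    observed = (n_aa, n_ab, n_bb)
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return chi2, math.erfc(math.sqrt(chi2 / 2.0))

def keep_snp(genotype_counts, n_missing, max_missing=0.05, min_hwe_p=1e-6):
    """Return True if a SNP passes both filters.

    genotype_counts: (n_aa, n_ab, n_bb) among called samples
    n_missing:       number of samples without a genotype call
    max_missing, min_hwe_p: illustrative placeholder thresholds (assumptions)
    """
    n_called = sum(genotype_counts)
    total = n_called + n_missing
    if total == 0 or n_missing / total > max_missing:
        return False
    _, p_value = hwe_test(*genotype_counts)
    return p_value >= min_hwe_p
```

Because true disease associations can themselves distort genotype proportions among cases, filters of this kind are often applied to control genotypes. Which samples to test is a design choice left open in this sketch.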

Online Methods

BD phenotype description

BD cases were all over the age of 16 yr, living in mainland UK and of European descent. Recruitment was undertaken throughout the UK by teams based in Aberdeen (8% of cases), Birmingham (35% of cases), Cardiff (33% of cases), London (15% of cases) and Newcastle (9% of cases). Individuals who had been in contact with mental health services were recruited if they suffered from a major mood disorder in which clinically significant episodes of elevated mood had occurred. This was defined as a lifetime diagnosis of a bipolar mood disorder according to Research Diagnostic Criteria119 and included the bipolar subtypes that have been shown in family studies to co-aggregate (for example, ref. 29): bipolar I disorder (71% of cases), schizoaffective disorder bipolar type (15% of cases), bipolar II disorder (9% of cases) and manic disorder (5% of cases). After providing written informed consent, all subjects were interviewed by a trained psychologist or psychiatrist using a semi-structured lifetime diagnostic psychiatric interview (in most cases the Schedules for Clinical Assessment in Neuropsychiatry120), and available psychiatric medical records were reviewed. Using all available data, best-estimate ratings were made for a set of key phenotypic measures on the basis of the OPCRIT checklist (which covers both psychopathology and course of illness)121,122 and lifetime psychiatric diagnoses were assigned according to the Research Diagnostic Criteria119. The reliability of these methods has been shown to be high119,123,124. Further details of clinical methodology can be found in Green, 2005 (ref. 123) and Green, 2006 (ref. 124).

CAD phenotype description

CAD cases had a validated history of either myocardial infarction or coronary revascularization (coronary artery bypass surgery or percutaneous coronary angioplasty) before their 66th birthday. Verification of the history of CAD was required either from hospital records or the primary care physician. Recruitment was carried out on a national basis in the UK through a direct approach to the public via (1) the media and (2) mailing all general practices (family physicians) with information about the study, as previously described125. In an initial pilot phase, potential participants were also identified and approached through local CAD databases in the two lead centres (Leeds and Leicester). Although the majority of subjects had at least one further sib also affected with premature CAD, only one subject from each family was included in the present study.

CD phenotype description