Abstract

In this study, we demonstrate the use of a quality framework for reviewing and improving the quality and safety of patient care in a health care department. We focus on assessing the performance of a health care service in which the data are typically heterogeneous because patients' health conditions differ. A support vector machine (SVM) regression model is used to adjust for the risk factors attached to each patient. An exponentially weighted moving average (EWMA) control chart is then designed on the residuals obtained from the SVM regression model. The approach is applied to real cardiac surgery patient data, with the SVM model used to account for patient condition. The resulting SVM-EWMA chart showed better shift detection than the risk-adjusted EWMA control chart.

Introduction

Statistical Process Control (SPC) is a fundamental methodology in quality management, providing a systematic framework for monitoring, analyzing, and optimizing processes. It leverages statistical techniques to ensure consistency, stability, and quality across manufacturing and service-oriented operations. The advent of Machine Learning (ML) has introduced transformative capabilities to SPC, enabling computers to learn and predict outcomes without explicit programming. ML algorithms, adept at handling large datasets, excel in identifying complex patterns and detecting subtle anomalies in real-time, augmenting traditional statistical methods. By leveraging historical and real-time data, ML algorithms forecast potential deviations, equipment failures, or defects, enabling proactive intervention. ML-driven SPC applications include predictive maintenance, anomaly detection, fault diagnosis, and process optimization, continually refining accuracy through ongoing learning. Integrating ML with SPC introduces adaptability, enhancing process monitoring and quality maintenance, thereby driving efficiency, productivity, waste reduction, and superior product quality in manufacturing and process management domains.

Walter A. Shewhart introduced control charts in the 1920s for monitoring industrial production, and their use has since spread to many other fields. The standard Cumulative Sum (CUSUM) procedure is commonly used for quality monitoring but may signal changes that are due to variations in patient mix rather than to changes in surgical performance. Steiner et al.1 pioneered the risk-adjusted control chart and introduced a new CUSUM procedure that adjusts for pre-operative patient risk, making it suitable for settings with diverse patient populations. In healthcare, adjusting for patient factors, for example through the Parsonnet scoring system, helps in evaluating surgical risk, as in Asadayyobi and Niaki2. To curb false alarms, statistical studies rely on risk-adjusted control charts, which are crucial for accurate monitoring across diverse risk profiles. Neuburger et al.3 highlighted the limited adoption of statistical control charts despite clinical teams' use of time series charts for performance monitoring. Their study compared four control charts for detecting changes in rates of binary clinical data, revealing the strengths of the Shewhart, EWMA and CUSUM charts for different rate changes, and emphasizing the effectiveness of the CUSUM chart in swiftly identifying patient safety issues that increase adverse event rates. Zeng4 noted an increased emphasis on healthcare quality, highlighting the significance of monitoring care providers' performance. Continuous measures such as clinical outcomes, service utilization and cost enable prompt detection of performance changes, which is crucial for preventing problems and improving care quality. Ding et al.5 introduce a novel EWMA control chart for continuous surgical outcome monitoring that integrates actual survival time and predicted mortality. Simulation studies demonstrate its superior efficiency compared to existing methods, such as risk-adjusted survival time cumulative sum charts. 
The implementation involves individual surgeon performance monitoring based on varying patient risk levels, exemplified through a real case study. Tighkhorshid et al.6 utilize post-cardiac surgery survival time as a continuous quality measure, introducing a risk-adjusted EWMA control chart. Phase II evaluation using average run length criteria demonstrates improved process deviation detection, notably after integrating surgeon group effects in the regression model. Lai et al.7 proposed an EWMA chart for monitoring average surgical risk and variance shifts efficiently, outperforming existing cumulative sum methods in detecting variance changes and slight shifts in surgical risk. Applied to Hong Kong's Surgical Outcome Monitoring and Improvement Program data, it highlighted improvements in hospital outcomes. Asif et al.8 focus on the RAMA-EWMA control chart for identifying survival time after cardiac surgery, assessing its performance using a two-year dataset. Extensive simulations demonstrate its superior shift diagnostic ability compared to the control chart studied, evaluated through average run length properties (Table 1).

Asif and Noor-ul-Amin21 introduce an adaptive risk-adjusted EWMA (ARAEWMA) control chart, combining AFT regression with an adaptive EWMA (AEWMA) concept. Utilizing cardiac surgery patient data assessed via the Parsonnet score method, the ARAEWMA chart demonstrates superior shift detection and efficiency compared to the risk-adjusted EWMA chart. Aslam et al.23 introduce upper and lower-sided improved adaptive EWMA control charts for exponential distribution-modeled data. The upper-sided chart detects upward shifts, while the lower-sided chart identifies downward shifts. Monte Carlo simulations demonstrate their superior performance over existing control charts, validated using hospital stay time data for male traumatic brain injury patients. Lai et al.24 introduce a GLR-based control chart for monitoring risk-adjusted ZIP processes with EWMA, demonstrating superior performance in detecting parameter shifts compared to existing methods. Application to influenza and flight delay datasets underscores its effectiveness. Rasouli et al.25 introduce a risk-adjusted time-variant linear state space model, applied with a group multivariate EWMA (GMEWMA) control chart to monitor multistage therapeutic processes, validated through simulation and thyroid cancer surgery, demonstrating effective real-world performance. Sogandi et al.26 proposed control charts, based on a Bernoulli state space model, incorporating categorical covariates and utilizing an expectation–maximization algorithm for parameter estimation, demonstrating competitive performance against Hotelling’s chart in shift detection and superiority in outlier identification, validated through simulation and real case study. Kazemi et al.27 proposed the RA-MTCUSUM control chart, combining AFT regression, Tukey's control chart, and multivariate CUSUM, showing robustness in simulation experiments across various distributions and real sepsis patient datasets from a Tehran hospital compared to traditional control charts. 
Tang and Gan28 develop and study a risk-adjusted EWMA charting method based on multiple outcomes, demonstrating its performance and comparability with the risk-adjusted CUSUM using real surgical data. The study emphasizes the attractiveness of the risk-adjusted EWMA procedure owing to its performance and interpretability. Yeganeh et al.22 present an ANN-based control chart for monitoring binary surgical outcomes that outperforms existing methods under the ARL criterion. The study explores machine learning in health-care monitoring, offers real-life applications, and assesses robustness by incorporating a Beta distribution for mortality rates. Rafiei and Asadzadeh29 develop a risk-adjusted CUSUM chart for detecting downward shifts in post-surgery patient survival times. Designed through a multi-objective economic-statistical model and validated in a cardiac surgery center, it surpasses alternative designs in both statistical and economic terms.

Upon reviewing the related literature, it was found that all existing risk-adjusted control charts for monitoring binary surgical outcomes rely heavily on statistical assumptions. To our knowledge, no studies have employed machine learning techniques within a control chart framework for this purpose. However, the use of machine learning schemes such as support vector regression, SVM, and artificial neural network (ANN) is well-established in other process monitoring situations. To address this gap in healthcare applications, this paper introduces an SVM-based control chart (SVM-EWMA) to monitor the performance of binary surgical outcomes in Phase II. The "Risk adjusted EWMA control chart" section presents the risk-adjusted EWMA chart and discusses the principles of machine learning and SVM, while the "Proposed SVM based risk adjusted control chart" section outlines the development process and structure of the SVM-EWMA control chart. The performance of the proposed chart, assessed through run-length profiles, is presented in the same section. The "Main findings" section summarizes the main findings for the suggested control charts, including their strengths and limitations. The "Conclusion" section gives concluding remarks and the overall implications of the study's findings.

Risk adjusted EWMA control chart

In this section, we present the RAEWMA control chart. The first step in designing the control chart is to fit the AFT model given in Eq. (1).

where \(P_{t}\) is the Parsonnet score of the tth patient, \(\beta_{0}\) and \(\beta_{1}\) are the regression coefficients, σ is the standard deviation and \(\varepsilon_{t}\) is the random error term of the survival time distribution. The values \(\beta_{0}\) = 5.07026, \(\beta_{1}\) = − 0.03348 and σ = 0.57 are estimated from the in-control data set, as reported by Asif and Noor-ul-Amin21.
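As a rough illustration of how the reported coefficients are used, the sketch below assumes the usual log-linear AFT form, log(T_t) = β0 + β1·P_t + σ·ε_t, which is an assumption here because Eq. (1) is not reproduced in the text. It computes the standardized residual for a single patient and ignores censoring. The function name and the example patient (Parsonnet score 20, survival time 30 days) are hypothetical and are not taken from the data set.

```python
import math

# Coefficients reported in the text (in-control estimates).
BETA0 = 5.07026
BETA1 = -0.03348
SIGMA = 0.57


def standardized_residual(survival_time, parsonnet_score):
    """Standardized residual under an ASSUMED log-linear AFT form:
    log(T) = BETA0 + BETA1 * P + SIGMA * eps, so eps = (log(T) - mu) / SIGMA.
    Censoring is ignored in this sketch.
    """
    if survival_time <= 0:
        raise ValueError("survival_time must be positive")
    mu = BETA0 + BETA1 * parsonnet_score  # predicted log survival time
    return (math.log(survival_time) - mu) / SIGMA


# Hypothetical patient: Parsonnet score 20, observed survival time 30 days.
w = standardized_residual(30.0, 20.0)
print(round(w, 3))
```

A negative value means the observed survival time is shorter than the model predicts for that risk level, which is the direction the one-sided chart is meant to detect.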

From the study of Tighkhorshid et al.6, the statistic of RAEWMA control chart is constructed by using the standardized residual (SR-AFT) from the AFT regression model. The SR-AFT is denoted by \(w_{t}\) and calculated as

where t is the sample number, λ is a smoothing constant in the range 0 < λ ≤ 1, and \(z_{0}\) is the initial value. To improve the process, it is important to detect any decrease in survival time, so a one-sided statistic is used for the RAEWMA control chart, given by

\(\xi\) indicates the mean of SR-AFT and λ is taken as 0.2. The variance of this statistic is given as

and the lower control limit is given by

where L is the control coefficient. The constant λ determines the rate at which the weights decay, so the two parameters L and λ together define the in-control ARL used to evaluate the performance of the RAEWMA chart. A similar design is adopted by Asif and Noor-ul-Amin21 to propose the ARAEWMA control chart.
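The displayed equations for this design are not reproduced above. For orientation, a lower-sided EWMA chart of this kind is commonly written as follows. The starting value \(z_{0} = \xi\), the symbol \(\sigma_{w}\) (standard deviation of SR-AFT) and the asymptotic form of the variance are assumptions based on the standard EWMA construction, not a transcription of the original equations.

```latex
\begin{align*}
z_t &= \min\{\xi,\ \lambda w_t + (1-\lambda)\, z_{t-1}\}, \qquad z_0 = \xi, \\
\operatorname{Var}(z_t) &\approx \frac{\lambda}{2-\lambda}\,\sigma_w^{2}, \\
\mathrm{LCL} &= \xi - L\,\sigma_w \sqrt{\frac{\lambda}{2-\lambda}}.
\end{align*}
```

Taking the minimum with \(\xi\) resets the statistic whenever it drifts above the in-control mean, so only decreases in survival time accumulate toward the lower limit.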

Machine learning and SVM

Machine learning, a groundbreaking facet of artificial intelligence, empowers computers to learn autonomously without explicit programming. It hinges on crafting algorithms enabling systems to learn from data, making predictions or decisions. Three core types exist: supervised learning, training models on labeled data to predict new outputs; unsupervised learning, detecting patterns in unlabeled data; and reinforcement learning, where agents learn via trial and error. Widely applicable across fields like finance, healthcare, and autonomous vehicles, ML extracts insights, identifies patterns, and drives efficiency. Its implementation involves data collection, preprocessing, model training, evaluation, and deployment. With technological strides, ML continues evolving, enabling computers to handle intricate tasks, shaping the landscape of intelligent systems.

SVMs are robust supervised learning tools that handle both classification and regression by constructing optimal hyperplanes that separate data points or predict continuous outcomes. In classification, the model maximizes the margin between distinct classes; the boundary is a line in two dimensions or a hyperplane in higher dimensions. The support vectors, the points closest to this boundary, determine its placement and orientation. SVMs handle both linear and non-linear data through kernel functions that transform the input space so that linear separation becomes possible. Their strength lies in generalizing well to new data and in managing high-dimensional spaces, but their performance can be sensitive to the choice of kernel and parameters, and computational cost can become a challenge for larger datasets. Nonetheless, SVMs are widely used in text classification, image recognition and biology because they can represent intricate decision boundaries. Introduced by Vapnik and Smola30, SVMs rely on the structural risk minimization principle rather than the empirical risk minimization used by traditional neural networks. Initially designed for classification, SVMs have since been extended to regression problems.

Consider the challenge of distinguishing between observations within a dataset that fall into two distinct categories. These categories are identified by labels of either − 1 or + 1. Essentially,

Consider a hyperplane described as

The observations are optimally separated by the hyperplane when they are separated without error and the distance from the hyperplane to the closest vectors is maximal. Equation (7) is transformed into the canonical form of Eq. (8), which constrains the parameters w and b accordingly.

In simpler terms, the norm of the weight vector must equal the inverse of the distance from the nearest point in the dataset to the hyperplane, as noted by Gunn31. The canonical form of the separating hyperplane must satisfy this constraint.

The distance d(w, b; x) of a point x from the hyperplane (w, b) is

Maximizing the margin \(\rho(w, b)\) subject to the constraints in Eq. (10) yields the optimal hyperplane. This margin, defined in Eq. (4), determines the objective.

Given Eq. (12), it is evident that the optimal hyperplane is the one that minimizes.

Minimizing Eq. (12) is equivalent to applying the principle of structural risk minimization (SRM). To implement SRM, it is assumed that a bound holds, namely ||w|| < A. Then, from Eqs. (10) and (11), we obtain:

From the equations mentioned earlier, it's clear that the hyperplanes cannot be closer than 1/A to any data point, thereby narrowing down the feasible hyperplanes. A solution to the optimization problem in Eq. (13) under the constraint in Eq. (10) can be determined by identifying the saddle point of the Lagrange functional, as proposed by Minoux32.

Here \(\alpha_{i}\) are the Lagrange multipliers. The Lagrangian must be minimized with respect to w and b and maximized with respect to \(\alpha\) (where \(\alpha_{i}\) ≥ 0). Solving the dual problem is the more straightforward approach.

The minimum in Eq. (16) is obtained by solving the following two equations.

Replacing these equations, the solution to the problem is given by,

By solving these equations while accounting for the mentioned constraints, one can ascertain the Lagrange multipliers. These multipliers serve as the basis for deriving the separating hyperplane.

where \(x_{r}\) and \(x_{s}\) are support vectors from each class.
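The displayed equations of this derivation are not reproduced above. As a reading aid, the standard hard-margin formulation that the text describes can be summarized as follows. This is a sketch of the textbook derivation, with notation chosen here for illustration (\(\mathcal{D}\), n, p, \(\Phi\), \(\mathcal{L}\)), and it is not numbered to match the original equations.

```latex
\begin{align*}
&\mathcal{D} = \{(x_1, y_1), \dots, (x_n, y_n)\}, \quad x_i \in \mathbb{R}^{p},\ y_i \in \{-1, +1\} \\
&\langle w, x \rangle + b = 0 && \text{(separating hyperplane)} \\
&\min_i \lvert \langle w, x_i \rangle + b \rvert = 1 && \text{(canonical form)} \\
&y_i \left( \langle w, x_i \rangle + b \right) \ge 1, \quad i = 1, \dots, n && \text{(separation constraint)} \\
&d(w, b; x) = \frac{\lvert \langle w, x \rangle + b \rvert}{\lVert w \rVert}, \qquad \rho(w, b) = \frac{2}{\lVert w \rVert} && \text{(distance and margin)} \\
&\Phi(w) = \tfrac{1}{2} \lVert w \rVert^{2} && \text{(objective to minimize)} \\
&\mathcal{L}(w, b, \alpha) = \tfrac{1}{2} \lVert w \rVert^{2} - \sum_{i=1}^{n} \alpha_i \left[ y_i \left( \langle w, x_i \rangle + b \right) - 1 \right], \quad \alpha_i \ge 0 \\
&\frac{\partial \mathcal{L}}{\partial b} = 0 \Rightarrow \sum_{i} \alpha_i y_i = 0, \qquad \frac{\partial \mathcal{L}}{\partial w} = 0 \Rightarrow w = \sum_{i} \alpha_i y_i x_i \\
&\max_{\alpha}\ \sum_{i} \alpha_i - \tfrac{1}{2} \sum_{i} \sum_{j} \alpha_i \alpha_j y_i y_j \langle x_i, x_j \rangle \quad \text{s.t. } \alpha_i \ge 0,\ \sum_i \alpha_i y_i = 0 \\
&w^{*} = \sum_{i} \alpha_i^{*} y_i x_i, \qquad b^{*} = -\tfrac{1}{2} \langle w^{*}, x_r + x_s \rangle
\end{align*}
```

The expression for \(b^{*}\) follows from adding the two active constraints \(\langle w^{*}, x_r \rangle + b^{*} = 1\) and \(\langle w^{*}, x_s \rangle + b^{*} = -1\) for one support vector from each class.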

Proposed SVM based risk adjusted control chart

In this section, we introduce the proposed risk-adjusted control chart, termed RAEWMA-SVM, which is based on SVM. While Steiner et al.1 used a logistic model for their risk-adjusted control chart, the chart detailed in the "Risk adjusted EWMA control chart" section is based on residuals from the Accelerated Failure Time (AFT) regression model of Tighkhorshid et al.6. In our proposed design, these residuals are instead obtained from an SVM regression model. Following the methodology of Tighkhorshid et al.6, the statistic for the RAEWMA control chart is constructed from the standardized residuals of the SVM regression model, denoted SR-SVM. The RAEWMA statistic based on SR-SVM is expressed as follows:

where t represents the sample number, λ denotes the smoothing constant in the range 0 < λ ≤ 1, and \(E_{0}\) is the initial value. To improve process monitoring, it is crucial to detect any decrease in survival time. Consequently, a one-sided statistic is employed for the RAEWMA-SVM control chart, expressed as

\(\xi\) indicates the mean of SR-SVM and λ is taken as 0.1 and 0.25. In the RAEWMA-SVM control chart, if the plotting statistic satisfies \(\left|{F}_{t}\right|\) < L, the chart gives an out-of-control signal.
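To make the recursion concrete, the sketch below applies a one-sided (lower) EWMA to a short sequence of standardized residuals. It assumes the common form F_t = min(ξ, λ·e_t + (1 − λ)·F_{t−1}) with F_0 = ξ, and it assumes that a signal is raised when the statistic falls below a lower limit. The function names, the residual values and the limit of −1.0 are hypothetical choices for illustration, not quantities from the study.

```python
def lower_ewma(residuals, lam=0.10, xi=0.0):
    """One-sided (lower) EWMA path, ASSUMED form:
    F_t = min(xi, lam * e_t + (1 - lam) * F_{t-1}), with F_0 = xi.
    """
    if not 0.0 < lam <= 1.0:
        raise ValueError("lam must satisfy 0 < lam <= 1")
    f_prev = xi
    path = []
    for e in residuals:
        # The min() resets the statistic whenever it rises above xi.
        f_prev = min(xi, lam * e + (1.0 - lam) * f_prev)
        path.append(f_prev)
    return path


def first_signal(path, lcl):
    """Return the 1-based index of the first point below lcl, or None."""
    for t, f in enumerate(path, start=1):
        if f < lcl:
            return t
    return None


# Hypothetical standardized residuals drifting downward.
residuals = [0.5, -1.0, -2.0, -1.5, -3.0]
path = lower_ewma(residuals, lam=0.25)
print(path)
print(first_signal(path, lcl=-1.0))
```

With these inputs the first positive residual leaves the statistic at ξ = 0, and the later negative residuals pull it steadily downward until it crosses the illustrative limit at the fifth sample.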

The dataset used in this study is taken from Steiner et al.1 and concerns patients undergoing cardiac surgery. The analysis focuses on survival time after cardiac surgery, which serves as the key quality characteristic for assessing patient outcomes. Each patient is followed for 30 days after surgery. If a patient survives beyond this interval, or if no death is recorded within it, the observation is treated as right-censored. Simulation studies are conducted to evaluate the proposed approach, taking into account both patient health states and surgeon groups. A crucial risk factor in the model is the Parsonnet score, which indicates the patient's health state before cardiac surgery. The score combines patient attributes such as age, gender and diabetes status to quantify the overall risk profile. It ranges from 0 to 100, with higher scores indicating a higher risk of mortality after surgery. The Parsonnet score enters the model as the key covariate, which allows its effect on patient outcomes to be evaluated. With this dataset, and accounting for patient health states and surgeon groups, the study assesses how effectively the proposed approach monitors survival outcomes following cardiac surgery. Control charts play a pivotal role in quality control by evaluating process performance. Their effectiveness is gauged through run-length profiles, chiefly the average run length (ARL) and the standard deviation of the run length (SDRL); in this study we also use the percentiles of the run length. The ARL is the average number of samples plotted before the chart gives its first signal, while the SDRL measures the variability of that number. 
For an out-of-control process, lower values of both metrics indicate better chart performance, that is, quicker detection of a shift. Several methods for computing ARLs and SDRLs exist in the literature, including the Markov chain approach, the integral equation approach and Monte Carlo simulation. In this research, we used Monte Carlo simulation, implemented in the R language, to examine the run-length profiles. The in-control ARL (ARL0) is fixed at 370 and 500, and the run-length profiles are then computed across a range of shift sizes, which shows how the magnitude of the shift influences the chart's sensitivity and overall performance. The following steps are used to compute the run-length profiles in the form of ARLs and SDRLs.

- Step 1::

-

Computing the values of residuals

Data selection:

-

i.

We collected data from cardiac surgery patients, as reported in the study by Steiner et al.19.

In-control dataset selection:

-

i.

We partitioned the dataset into two segments, using the first two years' data as the in-control dataset.

-

ii.

This approach aligns with the methodology outlined by Tighkhorshid et al.6, who also utilized the same dataset in their study.

Residual computation:

-

i.

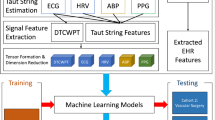

To obtain the value of et, utilized SVM model with the in-control data set for computation. The reader may consult the supplementary Appendix A for the detailed steps of SVM model fitting. The flowchart in Fig. 1 is also helpful to understand this step.

-

ii.

Utilizing the trained SVM model, we obtained the residual values for further analysis.

-

i.

- Step 2::

-

Setting up control limits

-

i.

Select a sample of size one from the residuals obtained in Step 1.

-

ii.

Using the Eqs. (19) and (20), we computed the statistic for the newly proposed control chart. This allowed us to evaluate the process's performance based on the chart's design.

-

iii.

We iterated the previous two steps until the process was confirmed to be within control.

-

iv.

If the process was found to be out of control during any iteration, we recorded the count of in-control occurrences as the run length.

-

v.

To calculate the in-control ARL (ARL0), we repeated steps (i-iii) for a total of 50,000 iterations.

-

vi.

If the target ARL0 was not achieved, we revisited the preceding steps (i-iv), this time using a different value for the control limit parameter, ℎ. This adjustment aimed to bring the process closer to the desired ARL0 value.

-

i.

- Step 3::

-

For the out-of-control ARLs

-

i.

To assess the robustness of the proposed method, the out-of-control performance is examined under various shifts in the residuals at different change points. In this analysis, it is assumed that the survival time, Parsonnet score, and surgeon groups are known for each patient. Different shifts are applied to the residuals (i.e. \(e_{t} + \delta\)) of the SVM model, allowing for the evaluation of the method's performance under varying scenarios.

-

ii.

Compute the value of ARL and SDRL by repeating the process 50,000 times.

-

i.

The in-control ARL (ARL0) is fixed at 370 and 500, and the ARL, SDRL and run-length percentiles are reported for each setting. The shift is denoted by \(\delta\), with values 0.0, 0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10, 0.40, 1.00 and 2.00. The smoothing constant is set to \(\lambda\) = 0.10 and 0.25, and the outcomes are displayed in Tables 2, 3, 4, 5. Comparative results of the proposed SVM-EWMA and the risk-adjusted EWMA control chart at various shifts, for fixed ARL0 = 200 and 370, are given in Table 6.
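The simulation procedure in Steps 1 to 3 can be sketched as follows. The study used R and resampled residuals from the fitted SVM model; this illustration is written in Python and, as a stand-in, draws residuals from a standard normal distribution. The function names, the lower limit of −0.5, the reduced number of replications and the negative shift direction are all assumptions made to keep the example small and fast, so the printed values will not match the tables.

```python
import random
import statistics


def run_length(rng, lam, lcl, shift=0.0, xi=0.0, max_len=100_000):
    """Samples plotted until the lower EWMA statistic first falls below lcl."""
    f = xi
    for t in range(1, max_len + 1):
        # Stand-in for one resampled SVM residual, shifted as e_t + delta.
        e = rng.gauss(0.0, 1.0) + shift
        f = min(xi, lam * e + (1.0 - lam) * f)
        if f < lcl:
            return t
    return max_len  # truncated run; only reached for very wide limits


def run_length_profile(lam, lcl, shift=0.0, reps=2000, seed=1):
    """Monte Carlo estimates of ARL and SDRL over `reps` simulated runs."""
    rng = random.Random(seed)
    runs = [run_length(rng, lam, lcl, shift) for _ in range(reps)]
    return statistics.mean(runs), statistics.stdev(runs)


# In-control profile (no shift) and out-of-control profile (downward shift).
arl0, sdrl0 = run_length_profile(lam=0.10, lcl=-0.5)
arl1, sdrl1 = run_length_profile(lam=0.10, lcl=-0.5, shift=-0.5)
print(f"in-control:     ARL={arl0:.1f}, SDRL={sdrl0:.1f}")
print(f"out-of-control: ARL={arl1:.1f}, SDRL={sdrl1:.1f}")
```

In practice the limit would be tuned, for example by bisection, until the in-control ARL reaches the target of 370 or 500, and the number of replications would be raised to the 50,000 used in the study.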

Table 2 presents the results of the proposed SVM-EWMA control chart for ARL0 = 370 and smoothing constant \(\lambda\) = 0.10 across the shifts considered. The ARL decreases as the shift increases, which indicates that the chart is ARL-unbiased. The ARL values for the successive shifts are 370.24, 250.11, 157.92, 101.13, 75.90, 65.07, 57.62, 47.69, 40.64, 35.26, 30.77, 2.92, 1.00, and 1.00. Figure 1 shows the flow chart for the proposed SVM-EWMA control chart. Table 3 shows a similar pattern for \(\lambda\) = 0.25 at ARL0 = 370, where the ARL values for the successive shifts are 370.76, 195.62, 135.28, 121.95, 104.12, 84.76, 75.74, 70.03, 63.86, 64.76, 61.28, 59.00, 1.00, and 1.00, following a similar overall trend. Tables 4 and 5 give the corresponding results for ARL0 = 500, applying the recommended SVM-EWMA design with the two smoothing constants \(\lambda\) = 0.10 and 0.25 across various shifts. Table 6 reports the ARL and SDRL values for both the risk-adjusted EWMA and the proposed SVM-EWMA control charts at ARL0 = 370, again for smoothing constants of 0.10 and 0.25 at various shifts. The results indicate that the smaller smoothing constant yields the smaller ARL. 
The proposed SVM-EWMA chart is compared with the RAEWMA control chart in Table 6. The comparison is made at ARL0 = 370 for smoothing constants of 0.10 and 0.25 and for various shifts. Table 6 shows that the ARL values of the proposed SVM-EWMA control chart are consistently smaller than those of the RAEWMA control chart across the shift values. For instance, at shift 0.03 with ARL0 = 370 and a smoothing constant of 0.10, the proposed control chart yields an ARL of 102.39, while the RAEWMA control chart yields 204.31. Similarly, at shift 0.07 for ARL0 = 370, the ARL of the proposed control chart is 47.49, whereas that of the existing control chart is 104.15. Figure 2 compares the performance of the proposed SVM-EWMA control chart with that of the RAEWMA control chart. From Fig. 2, the differences between the existing and the proposed method are minor at low and high shifts, while the proposed method performs efficiently at moderate shifts. These outcomes indicate that the proposed SVM-EWMA control chart is more efficient than the RAEWMA control chart across the shift scenarios considered. Note that the residuals are derived from the in-control dataset using the SVM model. To create a shifted dataset, the value of the shift is added to the residuals. These shifted residuals are then used to assess the performance of the control charts. A similar procedure is applied for the AFT model, as outlined in Table 6.
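The size of the improvement quoted above can be expressed as a relative reduction in ARL. The short sketch below does this arithmetic for the two pairs of values given in the text for ARL0 = 370 and a smoothing constant of 0.10. The function name and the choice of a percentage summary are illustrative assumptions, and no values beyond those quoted are used.

```python
# (shift, ARL of SVM-EWMA, ARL of RAEWMA) as quoted in the text
# for ARL0 = 370 and smoothing constant 0.10.
quoted = [
    (0.03, 102.39, 204.31),
    (0.07, 47.49, 104.15),
]


def relative_reduction(arl_new, arl_old):
    """Percentage by which arl_new is shorter than arl_old."""
    return 100.0 * (arl_old - arl_new) / arl_old


for shift, svm_arl, ra_arl in quoted:
    pct = relative_reduction(svm_arl, ra_arl)
    print(f"shift={shift:.2f}: ARL shorter by {pct:.1f}%")
```

For these two shifts the proposed chart signals after roughly half as many samples, on average, as the RAEWMA chart.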

Main findings

This investigation shows that the proposed SVM-EWMA chart identifies small process shifts more quickly than the established RAEWMA control chart. The computational results in Tables 2, 3, 4, 5, 6 make this evident, while Table 1 summarizes existing studies. ARL and SDRL values were calculated for ARL0 = 370 and 500 across a range of shifts with smoothing constants \(\lambda\) = 0.10 and 0.25, and the main findings are as follows:

- Table 2 displays the results of the proposed SVM-EWMA control chart for ARL0 = 370 and a smoothing constant of \(\lambda\) = 0.1 across varying shifts. The ARL values decrease as the shift increases, which indicates that the chart is ARL-unbiased. The ARL values corresponding to the successive shifts are 370.24, 250.11, 157.92, 101.13, 75.90, 65.07, 57.62, 47.69, 40.64, 35.26, 30.77, 2.92, 1.00, and 1.00. Table 3 shows a similar pattern for a smoothing constant of \(\lambda\) = 0.25 at ARL0 = 370, where the sequence of ARL values across the shifts is 370.01, 195.62, 135.28, 121.95, 104.12, 84.76, 75.74, 70.03, 63.86, 61.76, 59.00, and 1.00. Higher shift values again correspond to smaller ARL values, reaffirming the trend observed in Table 2.

- Tables 4 and 5 give the results for ARL0 = 500. These tables apply the suggested design of the SVM-EWMA control chart with two smoothing constant values, 0.10 and 0.25, across various shifts. The smaller smoothing constant consistently yields the smaller ARL values, regardless of the shift applied in the analysis.

- Table 6 compares the proposed SVM-EWMA control chart with the corresponding risk-adjusted EWMA control chart. Both charts are assessed using smoothing constants of \(\lambda\) = 0.10 and 0.25 for ARL0 = 370 across different shifts. The findings in Table 6 show that the proposed SVM-EWMA control chart yields smaller ARL values than the risk-adjusted EWMA control chart. For example, at ARL0 = 370 with a smoothing constant of 0.25 and shift 0.03, the ARL values for the proposed SVM-EWMA and the risk-adjusted EWMA control chart are 121.95 and 292.93, respectively. A similar result holds at ARL0 = 370 with a shift of 0.08, with values of 63.86 for the proposed SVM-EWMA and 196.40 for the existing chart. These observations indicate that the proposed SVM-EWMA control chart consistently outperforms the RAEWMA control chart considered.

Conclusion

The application of statistical process monitoring tools extends far beyond the industrial sector and finds utility in diverse fields like healthcare. However, in healthcare, adjusting for risk factors is crucial prior to employing control charts. This study focuses on cardiac surgical data and emphasizes the necessity of adjusting patients' risk factors using an SVM model before implementing control charts. The newly proposed control chart in this study adapts the smoothing constant's value based on estimated shifts. The resulting ARL values across various shifts and different smoothing constants are meticulously presented in tables, underscoring the effectiveness of our proposed chart. This research highlights that our proposed SVM-EWMA control chart exhibits superior efficiency compared to its counterpart in monitoring healthcare processes. This emphasizes the importance of adapting statistical tools like control charts to suit the specific needs and nuances of healthcare contexts, particularly in assessing and managing risks associated with cardiac surgeries.

Data availability

The datasets used or analyzed in the ongoing study are held by the corresponding author, who can provide access to interested parties upon request. This procedure allows individuals seeking the data for further examination or validation to contact the corresponding author for access.

Acknowledgements

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University, Saudi Arabia for funding this work through Large Groups Project under grant number R.G.P2/186/44.

Author information

Contributions

M.N.A. and I.K. contributed to the manuscript by performing mathematical analyses and numerical simulations. A.A.A. and B.A. conceived the main concept, conducted data analysis, and assisted in restructuring the manuscript. A.R.R.A. and I.K. rigorously validated the results, revised the manuscript, and secured funding. Additionally, I.K. and A.A.A. enhanced the language of the manuscript and conducted additional numerical simulations. The final version of the manuscript, prepared for submission, represents a consensus reached by all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Noor-ul-Amin, M., Khan, I., Alzahrani, A.R.R. et al. Risk adjusted EWMA control chart based on support vector machine with application to cardiac surgery data. Sci Rep 14, 9633 (2024). https://doi.org/10.1038/s41598-024-60285-2

DOI: https://doi.org/10.1038/s41598-024-60285-2
