Abstract

Graph domain adaptation (GDA) addresses the scarcity of labeled data in the target graph domain. Existing methods such as UDAGCN, GRADE, DEAL, and COCO, which are designed for tasks at different levels (node-level or graph-level), differ in how they extract domain features, and most rely solely on representation alignment to transfer label information from a labeled source domain to an unlabeled target domain. However, representation alignment can be influenced by irrelevant information and usually ignores the conditional shift of the downstream predictor. To address these issues, we introduce a target-oriented unsupervised graph domain adaptation framework called TO-UGDA. Specifically, domain-invariant feature representations are extracted using the graph information bottleneck, and the discrepancy between the two domains is minimized with an adversarial alignment strategy to obtain a unified feature distribution. In addition, meta pseudo-labels are introduced to enhance downstream adaptation and improve the model’s generalizability. Extensive experiments on real-world graph datasets show that the proposed framework achieves strong performance across various node-level and graph-level adaptation tasks.

Introduction

Graph neural networks (GNNs) typically rely on end-to-end supervision for training, which often demands a large amount of labeled data1,2. Manual labeling of graph data3,4, especially in the case of protein-protein interaction (PPI) networks5, is time-consuming. Furthermore, labels are often absent in newly formed graph domains such as subway and aviation networks6,7. A natural remedy for sparse labels in the target domain is to train models on labeled graph data from a relevant or similar domain. However, recent research demonstrates that the performance of graph neural networks degrades when models are trained solely on labeled source data, because the data used for training (labeled source data) and inference (unlabeled target data) originate from distinct distributions8,9. Consequently, training a well-generalized graph neural network when only the source domain is labeled presents a significant challenge.

To deal with this challenge, many scholars10,11,12,13 adopt a joint learning framework to reduce the difference between the representation distributions of the two domains. Joint learning can effectively improve accuracy on unlabeled target domain data, but several critical problems remain:

(1) The adaptive performance of representation alignment is limited by irrelevant feature interference14,15,16. For instance, in social networks, the distributions of users’ friendships (the input) and their activity patterns (the label) are significantly influenced by the time and location of data collection17. In financial networks18, the flows of payments between transactions (the input) and the emergence of illicit transactions (the label) exhibit a strong correlation with external contextual factors such as the time of day or market conditions. These external factors can act as confounding variables, hindering the effectiveness of representation alignment methods.

(2) Alignment strategies for domain features vary across graph tasks at different levels19,20,21. For example, in graph-level biomolecular tasks, enhancing the feature representation of the functional-group subgraphs that give a molecule certain properties may provide insights to guide further experiments22. In protein-protein interaction (PPI) networks5, pairs of protein nodes are often used for domain feature extraction to explore the interaction principles between two proteins.

(3) Ignoring the semantic distribution shift of the target domain23,24, such as feature scarcity, varying noise, and temporal evolution, can lead to suboptimal performance in graph adaptation tasks. For instance, in citation networks, the distribution of citations and subject areas changes over time25, reflecting the evolving nature of academic research. To address this, it is crucial to incorporate techniques that adapt to such shifts, enabling models to capture the current state of the network more accurately.

In this report, TO-UGDA addresses the challenge of irrelevant feature interference by leveraging the Graph Information Bottleneck (GIB)26,27. This innovative approach effectively filters out superfluous information, focusing solely on the most pertinent features for domain adaptation. This is achieved by learning a compressed representation of the graph structure, which captures the crucial patterns for task performance while excluding noise and irrelevant features. Furthermore, TO-UGDA offers a flexible framework that can seamlessly adapt to varying levels of graph tasks. By establishing a specific sub-graph i.i.d. assumption28 and incorporating GIB-based adversarial adaptation training, our framework ensures robust alignment of domain features across diverse graph structures and tasks. This ranges from micro-level information in cross-network node classification to macro-level topology in cross-domain graph classification. Additionally, TO-UGDA incorporates meta pseudo-labels29, enabling the model to adapt to semantic distribution shifts in the target domain. By extracting self-semantic information from the target domain data, the model becomes more resilient to feature noise and time evolution, leading to enhanced adaptation and generalization capabilities. Experimental results demonstrate the effectiveness of our proposed method, achieving exceptional performance in two different-level graph tasks while exhibiting remarkable stability.

In summary, this report makes the following contributions:

1. From the perspective of the joint probability distribution, we define and explain the adaptation error bounds of the encoder and predictor.

2. We introduce a novel Target-Oriented Unsupervised Graph Domain Adaptation framework (TO-UGDA) that adopts a GIB-based adversarial strategy to align invariant graph feature representations and incorporates meta pseudo-labeling to bridge the gap in downstream semantic conditional adaptation, resulting in a more generalizable model.

3. TO-UGDA outperforms the baseline in adaptation of micro information in cross-network node classification tasks and macro topology information in cross-domain graph classification tasks.

Related works

Unsupervised domain adaptation

Unsupervised domain adaptation (UDA), a crucial branch of transfer learning30, aims to address the problem of different distributions by minimizing the distribution discrepancy and transferring label knowledge of source domain31,32.

In recent years, UDA has attracted sustained attention, with representative methods including MMD33, DANN34, CDAN35 and TLDA36. In this report, we mainly discuss the adversarial-based domain adaptation methods35,37 used in our framework. The main idea is to train the encoder to minimize the distance between the source and target domain representations so that the confusion of the domain discriminator is maximized, which forces the graph encoder to share relevant label knowledge and align the feature distributions. The pioneering work DANN34 uses the adversarial training idea of GAN38 to align the two domains. MADA39 and CDAN35 take the downstream classification probability as additional conditioning information to relieve the problem of downstream conditional shift.

However, the assumption that representation samples are independent and identically distributed (i.i.d.), which holds in classic research fields like computer vision40,41, natural language processing42, and signal processing43, does not directly apply to graph domain adaptation. In the graph domain, graph representations depend on neighboring nodes and edges44, making it challenging to satisfy the i.i.d. assumption.

Graph domain adaptation

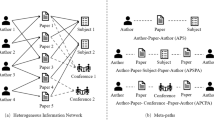

In recent years, many researchers in the graph field have proposed graph adaptive learning methods to resolve the alignment challenge under non-i.i.d. assumptions. These methods can be divided into two levels: node-level adaptation and graph-level adaptation.

Node-level adaptation, which can also be considered as a cross-network task involving the alignment of source and target entire connected networks, has been explored in recent years. UDAGCN10 introduces the gradient reversal layer to align cross-network node embedding and develops a dual GCN component to ensure the local and global representation consistency of each node and reduce the irrelevant domain feature dependence. GRADE45 proposes a novel graph subtree discrepancy to measure the graph distribution shift between source and target networks, reduces irrelevant domain feature messages passing through graph subtrees and establishes constrained generalized error boundaries.

Graph-level adaptation gives rise to an interesting phenomenon: the macro topology representation of a graph satisfies the i.i.d. assumption within a domain (between graphs), but the node embeddings within a single graph (between nodes in the same graph) do not46,47. Therefore, it is crucial to strike a balance in the multi-player game among graph node representation, intra-domain topology information, and the reduction of inter-domain discrepancy. To tackle this challenge, DEAL24 combines data augmentation with contrastive learning to balance these competing objectives. Furthermore, it leverages the encoding features of shallow graph neural networks as clustering information, enabling a clear differentiation between labels in the target domain. This approach enhances the extraction of domain topology information and makes the balance among the competing objectives more robust. COCO48 proposes a coupled graph representation learning approach that extracts invariant domain topology information and reduces the domain discrepancy with two different feature encoding modules, incorporating graph representations learned from complementary views for a better understanding of domain topology information.

These methods effectively relieve the problem of unsupervised graph domain adaptation. Below we briefly introduce the two main techniques used in this report.

Graph Information Bottleneck (GIB)26,27 is a principle used in graph neural networks to balance the complexity and robustness of learned representations. It ensures that the representation captures enough information to perform the task while avoiding irrelevant information that could lead to overfitting and alignment interference.

Meta Pseudo-Labels29 is a knowledge distillation technique in which the model generating pseudo labels for unlabeled data adjusts its predictions based on the performance of another model trained with these labels. This feedback loop refines the pseudo labels, leading to better model performance over time.

Problem definition and analysis

This section defines two graph adaptation tasks and analyzes the adaptive error bound of the encoder and predictor from the perspective of the joint probability distribution.

Problem statement

Inspired by previous works on graph domain adaptation10,45,48,49, we formally define two different problems of graph domain adaptation in detail.

Problem Formulation 1

(Cross-Network Node Adaptation) Given an unlabeled target single network \(G^{t}\) and a labeled source network \((G^{s},Y)\), cross-network node adaptation aims to improve the prediction performance of Node-Level task in the target network by using knowledge from the source network.

Problem Formulation 2

(Cross-Domain Graph Adaptation) Given an unlabeled target domain dataset \(D^{t}\) and a labeled source domain dataset \((D^{s},Y)\), the purpose of cross-domain graph adaptation is to improve the accuracy of Graph-Level property prediction in the target domain dataset by using knowledge from the source domain.

Adaptation error bound of encoder and predictor

In graph tasks, it is common to use a classic architecture consisting of a GNN encoder, which models P(X), and a classifier, which models P(Y|X), to model the joint distribution P(X, Y) between data and labels50:

\[P(X, Y) = P(Y \mid X)\, P(X) \qquad (1)\]

Aligning the joint distribution requires two steps, as shown in Fig. 1. The first step is to align the representations of the source domain and target domain data as closely as possible, and the second step is to fine-tune the source domain classifier by extracting the conditional information from the target domain itself.

Following the two main steps in Fig. 1, we define the adaptation objectives from the perspectives of marginal distribution alignment and conditional distribution alignment.

Adaptation Objective 1

(Marginal Distribution Adaptation) Given the source and target graph representations \(\{H^{s}, H^{t}\}\) obtained using the same GNN module with parameter \(\theta _f\), marginal distribution adaptation refers to minimizing the discrepancy \(d\big (P_{s}(x), P_{t}(x)\big )\) between the distributions of \(H^{s}\) and \(H^{t}\), which can be defined as \(\underset{\theta _f}{{\text {argmin}}} \Delta _{d}=\underset{\theta _f}{{\text {argmin}}} \int _{-{\infty }}^{+{\infty }} d\big (P_s({x}), P_t({x})\big )dx\).

Adaptation Objective 2

(Conditional Distribution Adaptation) Given source and target graph classifiers with parameters \(\{\theta _c^{s},\theta _c^{t}\}\), assume that the conditional distributions \(\{P_{s}(y|x),P_{t}(y|x)\}\) of the two classifiers are applied to a shared representation distribution P(x). Conditional (semantic) distribution adaptation can then be defined as \(\underset{\theta _c^{s},\theta _c^{t}}{{\text {argmin}}} \Delta _{d}=\underset{\theta _c^{s},\theta _c^{t}}{{\text {argmin}}} \int _{-{\infty }}^{+{\infty }} d\big (P_{s}(y|x),P_{t}(y|x)\big )P(x)dxdy\).

Methodology

Framework

To optimize these two objectives, there are three key steps in the TO-UGDA training process: (1) Joint pre-training of source and target domain data; (2) GIB-based domain adaptation; (3) Unsupervised meta pseudo-label learning. The model architecture is depicted in Fig. 2.

Joint pre-training initialization based on contrastive learning

Contrastive pre-training initialization has been proven to be beneficial for various graph tasks51,52. By combining data from two domains and applying self-supervised contrastive learning, the GNN encoder \(Z=F(x)\) is capable of learning generalized feature embedding and unifying the representation space.

For a given original sample \(x_i\), multiple perturbed samples \(\{x_j\}\) that remain similar to it form the positive pairs, and other samples that are far from the given original sample form the negative pairs. The initialization GNN encoder is trained using a contrastive learning loss function:

where \(sim(\cdot , \cdot )\) denotes cosine similarity between two vectors and \(\tau\) is a temperature parameter. This loss function encourages the embeddings of the positive pair \((x_i, x_j^+)\) to be close to each other while pushing the embeddings of the negative pair \((x_i, x_j^-)\) further apart.
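The contrastive objective described above can be sketched with a standard InfoNCE-style loss. This is a minimal illustration under stated assumptions, not necessarily the exact form of the paper's loss: the function names `cosine_similarity_matrix` and `info_nce_loss`, the default temperature, and the choice to treat every non-matching row in the batch as a negative are all hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(a, b):
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a_n = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_n = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a_n @ b_n.T


def info_nce_loss(anchors, positives, tau=0.5):
    """InfoNCE-style contrastive loss (assumed form).

    anchors[i] and positives[i] are embeddings of a positive pair; every
    other row positives[j], j != i, serves as a negative for anchors[i].
    tau is the temperature parameter.
    """
    logits = cosine_similarity_matrix(anchors, positives) / tau
    # Subtract the row maximum for numerical stability of the softmax.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # The positive pair for row i sits on the diagonal.
    return float(-np.mean(np.diag(log_prob)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    z = rng.normal(size=(8, 16))
    z_aug = z + 0.05 * rng.normal(size=z.shape)  # lightly perturbed views
    print("loss on matched views:", info_nce_loss(z, z_aug))
```

In this sketch, embeddings of matched views yield a lower loss than embeddings paired at random, which is the behavior the loss is meant to encourage.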

GIB-based invariant representation domain adaptation

Graph embedding violates the i.i.d. assumption, posing an alignment challenge for acquiring invariant information due to the node representation dependence on their neighboring nodes. Therefore, TO-UGDA needs to design a special encoder to extract invariant information, and then build GIB-based domain adaptation.

Invariant graph representation

As assumed by information theory26,28, node representations can be locally dependent on their important neighboring structures. Therefore, we establish a specific i.i.d. assumption that local neighborhood structure can represent each node in the graph, which enables it to adopt representation learning based on mutual information to extract invariant features.

In this report, we extract crucial neighborhood structural information from the original graph structure, denoted as \(A_v^{(l)}\) and described by a Bernoulli distribution with parameter \(\alpha _{v}^{(l)} \in [0, 1]\). This information is obtained to update the node representation \(Z_v^{(l)}\in R^{n}\) using the l-th layer GNN with parameter \(W^{(l)}\), as detailed below:

where \(N_v\) denotes the number of nodes in the neighborhood structure \(G_v\) of node v. Furthermore, for graph-level tasks, a \({\text {Readout}}(\cdot )\) function produces the representation of each graph in the dataset, which is defined as:

where \(Z_G\in R^{n}\) is the n-dimensional invariant feature representation of input samples.
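As a rough illustration of the description above, the following sketch samples a neighborhood mask from Bernoulli parameters, aggregates over the retained neighbors, and applies a readout. It is an assumption-laden sketch rather than the paper's layer: the mean aggregation, the always-kept self-loop, the ReLU, the mean readout, and the names `masked_gnn_layer` and `readout` are all hypothetical choices.

```python
import numpy as np


def masked_gnn_layer(adj, z, weight, alpha, rng):
    """One message-passing layer over a Bernoulli-sampled neighborhood.

    adj    : (n, n) 0/1 adjacency matrix
    z      : (n, d_in) node representations from the previous layer
    weight : (d_in, d_out) layer parameters, playing the role of W^(l)
    alpha  : (n, n) keep probabilities in [0, 1], playing the role of alpha_v^(l)
    """
    # Keep the edge from v to u with probability alpha[v, u].
    mask = rng.random(adj.shape) < alpha
    sub_adj = adj * mask + np.eye(adj.shape[0])  # self-loop kept (assumption)
    n_v = sub_adj.sum(axis=1, keepdims=True)     # size of each retained neighborhood
    aggregated = (sub_adj @ z) / n_v             # mean over retained neighbors
    return np.maximum(aggregated @ weight, 0.0)  # ReLU nonlinearity (assumption)


def readout(z):
    """Graph-level representation as the mean of node representations (assumption)."""
    return z.mean(axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
    z0 = rng.normal(size=(3, 4))
    w = rng.normal(size=(4, 2))
    alpha = np.full_like(adj, 0.7)
    z1 = masked_gnn_layer(adj, z0, w, alpha, rng)
    print("graph representation shape:", readout(z1).shape)
```

Setting every entry of `alpha` to 1 recovers plain mean aggregation over the full neighborhood, while setting it to 0 reduces the layer to a per-node transformation.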

GIB-based domain adaptation

Inspired by information bottleneck theory26,53, the adaptation module of TO-UGDA is encouraged to maximize the mutual information between the source domain representation \(Z_s\) and the label \(Y_s\) to enhance prediction accuracy on the labeled source data \((X_s,Y_s)\), and to maximize the mutual information between the representations of the two domains \((Z_s,Z_t)\) to align the domain distributions. Finally, the graph information bottleneck avoids the interference of excessive irrelevant information. Therefore, the multi-objective optimization can be defined as:

where \(\Omega\) is the search space of the optimal representation model \(\mathbb {P} (Z|X)\), \(I(\cdot ;\cdot )\) denotes mutual information, and \(I^{S}(X_s; Z_s) \le \gamma\) and \(I^{T}(X_t; Z_t) \le \gamma\) act as a double GIB constraint that limits, in both domains, the information propagated from the original input samples X to their invariant feature representations Z.

Because mutual information is expensive to compute exactly in the constraint terms, the variational upper bound \(I^{up}(X; Z)\) is used to implement the double GIB constraints on \(I^{S}(X_s; Z_s)\) and \(I^{T}(X_t; Z_t)\); the proof is detailed in Supplementary Appendix A.

The first objective term \(\max I\left( Z_s; Y_s\right)\) in Eq. (5) can be equivalently achieved by minimizing the classification loss \(\mathcal {L}_{cla}\left( F, C ; \theta _{f, c}\right)\) on the representation Z of the graph data G via the invariant sub-information \(G_{sub}\), as follows:

where \(\mathbb {P}_{\theta }\left( Y \mid G_{sub}\right)\) is a variational approximation of the intractable \(\mathbb {P}\left( Y \mid G_{sub}\right)\); the proof is detailed in Supplementary Appendices B1 and B2.

Meanwhile, the second objective term \(\max I\left( Z_s; Z_t\right)\) in Eq. (5) can be equivalently achieved through the adversarial loss \(\mathcal {L}_{adv}\left( F, D ; \theta _{f, d}\right)\) of the discriminator D, which maximizes the Donsker-Varadhan lower bound54,55, as follows:

where the discriminator \(D_{\theta }\) used in \(\mathcal {L}_{adv}\left( F, D ; \theta _{f, d}\right)\) is an instance of a class \(\mathcal {F}\) of functions \(T:\Omega \rightarrow \mathbb {R}\) that satisfy the integrability constraints of the Donsker-Varadhan representation. It is implemented by a deep neural network with parameters \(\theta \in \Theta\) to obtain the lower bound \(I_{\theta }^{DV} \left( Z_s; Z_t\right)\); the proof is detailed in Supplementary Appendix B3.
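For readers unfamiliar with the Donsker-Varadhan representation, the bound has the generic form \(I(A;B) \ge \mathbb{E}_{P_{AB}}[T] - \log \mathbb{E}_{P_A P_B}[e^{T}]\) for any admissible critic T. The sketch below evaluates that expression from critic scores. It is only a generic estimator: how the discriminator scores of TO-UGDA map onto the two expectations is given in the paper's appendix, and the function name `dv_lower_bound` and its arguments are hypothetical.

```python
import numpy as np


def dv_lower_bound(t_joint, t_marginal):
    """Donsker-Varadhan lower bound on mutual information.

    t_joint    : critic scores T(a, b) on samples drawn from the joint
    t_marginal : critic scores T(a, b') on samples drawn from the product
                 of the marginals (for example, b shuffled across the batch)
    Returns mean(t_joint) - log(mean(exp(t_marginal))).
    """
    t_joint = np.asarray(t_joint, dtype=float)
    t_marginal = np.asarray(t_marginal, dtype=float)
    # Log-mean-exp computed stably by factoring out the maximum score.
    m = t_marginal.max()
    log_mean_exp = m + np.log(np.mean(np.exp(t_marginal - m)))
    return float(np.mean(t_joint) - log_mean_exp)


if __name__ == "__main__":
    # A critic that cannot tell the two sample sets apart yields a bound of 0.
    print(dv_lower_bound([0.3, 0.3, 0.3], [0.3, 0.3, 0.3]))
```

Training the critic to increase this quantity tightens the bound, which is why a discriminator loss can stand in for the mutual information term.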

Since each mutual information term in Eq. (5) can be efficiently computed, the final adaptation optimization loss function is:

where \(\beta\) is the weight factor of the invariant representation term. Eq. (8) is derived from Eq. (5) using the GIB paradigm26 and the Lagrange multiplier approach; the proof is detailed in Supplementary Appendix B4.
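To make the Lagrangian combination concrete, here is a minimal sketch of how the three ingredients could be assembled. Two assumptions are made loudly: the variational upper bound is instantiated as the KL divergence between a diagonal Gaussian encoder and a standard normal prior (the common variational information bottleneck choice, which may differ from the bound in the paper's appendix), and \(\beta\) is attached to the two compression terms only. Where exactly \(\beta\) enters Eq. (8) cannot be recovered from the text here, and the function names are hypothetical.

```python
import numpy as np


def gaussian_kl_upper_bound(mu, log_var):
    """Batch mean of KL(N(mu, diag(exp(log_var))) || N(0, I)).

    With a Gaussian encoder and a standard normal prior, this quantity is a
    variational upper bound on I(X; Z) (assumed instantiation of I^up).
    """
    kl = 0.5 * np.sum(mu ** 2 + np.exp(log_var) - log_var - 1.0, axis=1)
    return float(np.mean(kl))


def adaptation_loss(loss_cla, loss_adv, kl_source, kl_target, beta=0.01):
    """Assumed composition: classification + adversarial + beta * compression."""
    return loss_cla + loss_adv + beta * (kl_source + kl_target)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mu_s, lv_s = rng.normal(size=(5, 3)), np.zeros((5, 3))
    mu_t, lv_t = rng.normal(size=(5, 3)), np.zeros((5, 3))
    total = adaptation_loss(
        loss_cla=0.9,
        loss_adv=0.7,
        kl_source=gaussian_kl_upper_bound(mu_s, lv_s),
        kl_target=gaussian_kl_upper_bound(mu_t, lv_t),
    )
    print("total adaptation loss:", total)
```

The default `beta=0.01` is an arbitrary illustrative value.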

The GIB-based domain adaptation ensures that only the invariant features of two domains can be aligned to the same representation distribution and transfer the label information Y.

Unsupervised meta pseudo-label distillation

Inspired by meta pseudo-label knowledge distillation29, the teacher model actively participates in a Boundary Bargaining Game (a term referring to the process of refining decision boundaries) and in knowledge propagation on unlabeled data. Meanwhile, the student’s performance, measured on labeled data after pseudo-label distillation from the teacher model, is fed back to influence the direction and weight of the boundary game in the teacher model’s next step. This balance ensures both generalization on unlabeled data and fitting of labeled data.

In our work, we treat the target domain as the most crucial aspect: using the student’s test performance as feedback, the approach reduces the conditional distribution discrepancy \(\int _{-{\infty }}^{+{\infty }} d\big (P_{s}(y|x),P_{t}(y|x)\big )P(x)dxdy\) between the self-semantic information of the target domain and the transferred knowledge of the source domain. Furthermore, it alleviates a limitation of current graph adaptation methods, which often overemphasize source-labeled data and neglect the semantic conditional information of the target domain. The most crucial step is how the teacher model is updated based on the performance of the student model.

Let T and S denote the teacher model and the student model, respectively, parameterized by \(\theta _T\) and \(\theta _S\). The ultimate training objective of TO-UGDA is to reach the Nash equilibrium of the bargaining game between the self-semantic information of the target domain and the transferred knowledge of the source domain, by quantifying the classification loss of the student \(\theta _S\) on unseen source domain data with true labels:

where \(\theta _S(\theta _T)\) expresses that the student \(\theta _S\) relies on the pseudo-labels generated by the teacher \(\theta _T\).

During the bargaining distillation process, the student model is trained using pseudo labels generated by the teacher model in the target domain, and the teacher model is updated based on the student’s test performance on unseen true labeled source domain data. However, it is a challenge to directly update the teacher model’s parameters and achieve the Nash equilibrium of the bargaining distillation process by the performance of the student model. This process involves three key steps, as follows:

(1) Training the teacher model using the labeled source domain dataset and the unlabeled target domain dataset, where the optimization objective for \(\theta _T\) is defined as:

where \(\mathcal {L}_{\text{adapt}}\) is the adaptation loss function presented in Eq. (8).

(2) Training the student model \(\theta _S\) using the pseudo labels \((x_t, {\hat{y}}_t)\) generated by the teacher model \(\theta _T\), the optimization objective is:

where student model \(\theta _S\) is initialized based on unsupervised semantic clustering.

(3) Obtaining the bargaining distillation loss \(\mathcal {L}_{\text{distill}}(T,S;[x_s, x_t, y_s])\), which is used to correct the updating direction of the teacher model based on the performance of the student model’s parameters, as follows:

where \(\mathcal {L}_{\text{distill}}\) is expressed as the product of two derivatives (the student’s test performance under the pseudo-labels and the teacher’s adaptation to the soft pseudo-labels); the details of the proof are described in Supplementary Appendix C1. Therefore, the updated adaptation loss function of the teacher model is:

In the learning process, the student model can improve the adaptive ability of the overall model in the target domain, by leveraging target domain self-semantic information to limit the parameter search space of the teacher model. This method is helpful to improve the accuracy and efficiency of target domain prediction. Specifically, we describe the training algorithm of TO-UGDA in the Supplementary Appendix C2.
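The three-step teacher and student loop can be sketched on a toy problem. The code below follows the general Meta Pseudo-Labels recipe with linear softmax classifiers and plain gradient steps. It is a simplified illustration, not the TO-UGDA training algorithm: the teacher's adaptation loss is replaced by a source cross-entropy, hard pseudo-labels are used, and `mpl_step`, the learning rates, and the feedback coefficient `h` are hypothetical names and values.

```python
import numpy as np


def softmax(logits):
    logits = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(logits)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(w, x, y_onehot):
    """Mean cross-entropy of the linear softmax model x @ w."""
    p = softmax(x @ w)
    return float(-np.mean(np.sum(y_onehot * np.log(p + 1e-12), axis=1)))


def ce_grad(w, x, y_onehot):
    """Gradient of the mean cross-entropy with respect to w."""
    p = softmax(x @ w)
    return x.T @ (p - y_onehot) / x.shape[0]


def mpl_step(w_teacher, w_student, x_src, y_src, x_tgt, lr_t=0.02, lr_s=0.001):
    """One teacher/student round in the style of Meta Pseudo-Labels."""
    n_classes = w_teacher.shape[1]
    # Teacher produces hard pseudo-labels on the unlabeled target data.
    pseudo = np.eye(n_classes)[np.argmax(x_tgt @ w_teacher, axis=1)]
    # Student takes one gradient step on the pseudo-labeled target data.
    g_student = ce_grad(w_student, x_tgt, pseudo)
    w_student_new = w_student - lr_s * g_student
    # Feedback: inner product of the updated student's gradient on labeled
    # source data with the gradient it just followed (product of two derivatives).
    g_src_new = ce_grad(w_student_new, x_src, y_src)
    h = lr_s * float(np.sum(g_src_new * g_student))
    # Teacher update: supervised source term (stand-in for the adaptation
    # loss) plus the feedback-weighted pseudo-label term.
    g_teacher = ce_grad(w_teacher, x_src, y_src) + h * ce_grad(w_teacher, x_tgt, pseudo)
    w_teacher_new = w_teacher - lr_t * g_teacher
    return w_teacher_new, w_student_new, h


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x_src = rng.normal(size=(20, 4))
    y_src = np.eye(3)[rng.integers(0, 3, size=20)]
    x_tgt = rng.normal(size=(15, 4))
    w_t, w_s = 0.1 * rng.normal(size=(4, 3)), np.zeros((4, 3))
    w_t, w_s, h = mpl_step(w_t, w_s, x_src, y_src, x_tgt)
    print("feedback coefficient h:", h)
```

To first order, `h` equals the drop in the student's labeled-source loss caused by its pseudo-label step, so a positive value tells the teacher that its pseudo-labels helped and a negative value tells it they hurt.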

Experiments and analysis

Datasets

The effectiveness of the method is evaluated on multiple adaptation datasets whose distribution shifts arise from different causes, demonstrating its general adaptability. Detailed statistics are presented in Tables 1 and 2. We report experiments on cross-network (node-level) and cross-domain (graph-level) adaptation tasks.

Cross-network

1. Air-Traffic Network56: The dataset comprises air traffic networks in the United States, Europe, and Brazil, where each node represents an airport and an edge indicates the presence of commercial flights. The categories of airports are determined based on building size and aircraft activity.

2. Citation Networks57: The citation networks DBLPv8 and ACMv9 are two paper citation networks. Each edge in these networks represents the citation relationship between two papers, where each node represents a paper and the class label indicates the research topic.

Cross-domain

In our experiment, we utilized various real-world datasets from different research areas and backgrounds in TUDataset58 to compare the performance of different baselines.

1. Mutagenicity: The mutagenicity dataset comprises molecular structures and Ames test data. We divide the molecular structures into four different distribution sub-datasets (namely M0, M1, M2, and M3) based on the edge density of these structures.

2. Letter-Drawings: This dataset consists of distorted letter drawings, as well as their variations at different intensity levels (low, medium, and high). For each class, multiple prototype drawings are manually constructed by using undirected edges and nodes to represent the handwritten alphabet.

3. NCI: A biological dataset for the classification of anticancer activities. In this dataset, each graph represents a chemical compound, where nodes and edges represent atoms and chemical bonds, respectively. NCI1 is an activity screening for non-small cell lung cancer cells, and NCI109 is an activity screening for ovarian cancer cells.
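The edge-density split used for Mutagenicity can be illustrated with a short sketch. The text states only that the graphs are divided into four sub-datasets by edge density, so the density formula for simple undirected graphs, the quartile thresholds, and the function names below are assumptions made for illustration.

```python
import numpy as np


def edge_density(num_nodes, num_edges):
    """Density of a simple undirected graph: 2|E| / (|V| (|V| - 1))."""
    if num_nodes < 2:
        return 0.0
    return 2.0 * num_edges / (num_nodes * (num_nodes - 1))


def split_by_density(graphs, n_bins=4):
    """Partition graphs into n_bins groups of increasing edge density.

    graphs : list of (num_nodes, num_edges) tuples
    Returns a list of index lists, one per sub-dataset (M0, M1, ...).
    Thresholds are the empirical quantiles of the densities (assumption).
    """
    dens = np.array([edge_density(n, m) for n, m in graphs])
    cuts = np.quantile(dens, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    # Bin index = number of thresholds that the density meets or exceeds.
    bins = np.searchsorted(cuts, dens, side="right")
    return [np.flatnonzero(bins == b).tolist() for b in range(n_bins)]


if __name__ == "__main__":
    toy_graphs = [(10, m) for m in (5, 10, 15, 20, 25, 30, 35, 40)]
    for name, idx in zip(("M0", "M1", "M2", "M3"), split_by_density(toy_graphs)):
        print(name, idx)
```

Quantile thresholds give sub-datasets of roughly equal size; fixed density thresholds would be an equally plausible reading of the text.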

Baselines

Three types of baseline models are used for cross-network node classification adaptation: (1) Source-Only: GCN59, SGC60, GCNII61; (2) Node Feature-Only adaptation: CDAN35, DANN62, MDD63; (3) Cross-network adaptation: AdaGCN64, UDAGCN10, EGI49, and GRADE45. For cross-domain graph classification adaptation, the following three types of baselines are compared: (1) Source-Only: GCN59, SGC60, GIN44; (2) Traditional domain adaptation methods: CDAN35, ToAlign65 and MetaAlign66, whose feature encoders are replaced with GCN; (3) Graph cross-domain adaptation: DEAL24 and COCO48, frameworks customized for graph classification adaptation tasks.

Implementation details

We adopt a two-hidden-layer graph convolutional network as the feature extractor, together with a single fully connected layer. The teacher model and the student model are optimized using the SGD and Adam optimizers, with learning rates of 0.02 and 0.001, respectively. Each reported result is the mean over three repetitions of 200 epochs each. We use accuracy (ACC) as the classification metric.
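For orientation, the forward pass of a two-hidden-layer graph convolutional network followed by one fully connected layer can be sketched as follows. The symmetric normalization with self-loops follows the standard GCN formulation; the hidden sizes, the ReLU activations, the absence of bias terms, and the function names are assumptions, since the text does not specify them.

```python
import numpy as np


def normalize_adjacency(adj):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2} used by standard GCN layers."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_forward(adj, x, w1, w2, w_out):
    """Two graph-convolution layers followed by a fully connected output layer.

    Returns per-node class logits of shape (num_nodes, num_classes).
    """
    a_norm = normalize_adjacency(adj)
    h1 = np.maximum(a_norm @ x @ w1, 0.0)   # first hidden layer with ReLU
    h2 = np.maximum(a_norm @ h1 @ w2, 0.0)  # second hidden layer with ReLU
    return h2 @ w_out                       # fully connected layer (no bias)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
    x = rng.normal(size=(3, 5))
    logits = gcn_forward(
        adj,
        x,
        w1=rng.normal(size=(5, 8)),
        w2=rng.normal(size=(8, 8)),
        w_out=rng.normal(size=(8, 4)),
    )
    print("logits shape:", logits.shape)
```

For graph-level tasks, a readout over the rows of the second hidden layer would be applied before the output layer.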

Performance comparison

Cross-network

We conducted extensive experiments on the Air-Traffic Networks and Citation Networks. The experiment results of all methods in node classification are shown in Tables 3 and 4. From Tables 3 and 4, it can be seen that (1) Node Feature-Only adaptation performs worse than the Source-Only method in Citation Network. This can be attributed to the presence of an obvious community structure and topic citation style in Citation Network. (2) Cross-network adaptation achieves the best performance in node adaptation classification compared to other baselines. The reason is that the cross-network adaptation method can simultaneously align node features and topological structure information, effectively enhancing the model’s adaptability to graph-structured data. (3) TO-UGDA outperforms all other methods in node adaptation classification. Specifically, TO-UGDA achieves improvements of 3.8% to 14.5%, and 6.5% to 25.3% on the Air-Traffic and Citation networks, respectively.

Cross-domain

Cross-domain adaptation is a multi-graph alignment problem, resulting in diverse application scenarios for adaptation. We conducted experiments on three representative datasets: Mutagenicity (for edge density adaptation), Letter-Drawings (for noise interference adaptation), and NCI (for label application adaptation).

The experimental results of all methods in graph classification are shown in Tables 5, 6, and 7. Several observations need to be highlighted: (1) Traditional domain adaptation methods show no performance improvement over the Source-Only methods on the three graph domain datasets. This is because cross-domain adaptation is susceptible to noise affecting the graph structure and to irrelevant feature information from neighboring nodes. (2) Graph cross-domain adaptation outperforms the other baselines by incorporating shallow representation semantic information and topological structure alignment. (3) TO-UGDA outperforms all compared methods in graph adaptation classification. Specifically, TO-UGDA achieves improvements of 0.2% to 18.9%, 3.8% to 18.92%, and 3.26% to 11.06% on the Mutagenicity, Letter-Drawings and NCI datasets, respectively. These gains can be attributed to the incorporation of GIB-based domain adversarial learning and pseudo-label knowledge distillation, which make our model more generalized and adaptable to diverse adaptation scenarios.

Ablation study

We conduct an ablation study and analysis using citation networks as an example. In this study, we selectively remove components of TO-UGDA: pre-training with joint contrastive learning (Pre-Training), GIB-based domain adversarial adaptation (GIBDA), and pseudo-label knowledge distillation (Distill). This process results in six different variant models (A-F).

The ablation results are shown in Fig. 3, and we can obtain several observations. (1) The complete TO-UGDA outperforms all other variant models, which validates the importance of each module in unsupervised graph adaptation. (2) The score of variant C rapidly drops by 10.9% and 9.2% when the GIBDA component is removed, empirically validating the significance of invariant feature alignment. (3) Compared to variant D, the removal of the Distill component caused a decrease of 4.5% and 5.1%. This demonstrates that pseudo-label knowledge distillation effectively mines latent target-oriented information. (4) Comparing variants C and E, after removing GIBDA, the existence of the Distill module still reduces the accuracy by 2.5% and 1.2%, indicating that the latent information mining of Distill relies on the invariant feature filter and alignment.

Training stability evaluation and adaptation weight analysis

Models with weak adaptive ability are prone to exhibiting significant fluctuations in target domain accuracy, both before and after each round of parameter updates. Furthermore, our method exhibits superior convergence performance compared to other methods, even in early training iterations, as depicted in Fig. 4. This demonstrates the effectiveness of our approach, which benefits from the initialization of joint pre-training, enabling faster convergence and reduced training costs.

Additionally, in Fig. 5, we observed that the performance of TO-UGDA initially increases and then decreases as the adaptation weight parameter \(\beta\) varies from 0 to 0.05. This phenomenon occurs because a small weight for GIBDA fails to provide sufficient graph adaptation and invariant feature representation ability, while a large weight misleads the objective function, neglecting the learning of source domain features and labels.

t-SNE visualization

The graph representations learned by our method and other baselines are visualized in Fig. 6. We observe that (1) the traditional adversarial domain adaptation method CDAN focuses excessively on aligning the complete feature distribution, which causes significant alignment interference from irrelevant features, as shown in Figs. 6b and 6f. (2) The representation distribution of TO-UGDA exhibits better local clustering and global separation in classification than GIN and DEAL, as shown in Fig. 6d, and the source and target domain data distributions are well aligned in Fig. 6h.

Conclusions and future work

In conclusion, we have defined the adaptive error bounds of the encoder and predictor and explained them from the perspective of joint distribution probability. Drawing on this analysis, we propose TO-UGDA, a novel graph domain adaptation framework that leverages invariant feature alignment to extract essential information while discarding irrelevant details, thereby addressing the challenges of target-oriented unsupervised graph domain adaptation. Extensive experiments demonstrate the superior performance of TO-UGDA over all baselines. The results show that, by aligning invariant features, our model effectively captures the features shared between the source and target domains. Because these features remain consistent across domains, the model can adapt to previously unseen data, which enhances its generalization capability. Emphasizing invariant features also reduces the negative transfer that often arises from domain discrepancies. Additionally, incorporating semantic information into the target domain helps the model relate the transferred knowledge to the inherent structure and meaning of the target domain data. Consequently, the model captures semantic information within the target domain more precisely while learning label knowledge from the source domain, ultimately leading to improved performance in the target domain.

In future work, we will explore how graph information bottleneck theory can guide the selection of efficient compression strategies in graph adaptation tasks and avoid overfitting to the source domain, and how to prevent the impact of adversarial attacks on meta pseudo-labels from being amplified over multiple distillation rounds. We also plan to conduct experiments on the domain adaptation task of node-link prediction. Finally, we aim to pursue interpretability research to identify the invariant features in the source and target domains and to uncover the semantic information captured in the target domain. This deeper understanding and analysis of graph adaptation tasks will facilitate further advancements in the field.

Data availability

The data supporting the findings of this study are available within the manuscript or supplementary information files.

References

Liu, Z., Nguyen, T.-K. & Fang, Y. Tail-gnn: Tail-node graph neural networks. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 1109–1119 (2021).

Dai, E., Jin, W., Liu, H. & Wang, S. Towards robust graph neural networks for noisy graphs with sparse labels. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining, 181–191 (2022).

Suresh, S., Li, P., Hao, C. & Neville, J. Adversarial graph augmentation to improve graph contrastive learning. In Proceedings of the International Conference on Neural Information Processing Systems (2021).

Xu, D., Cheng, W., Luo, D., Chen, H. & Zhang, X. Infogcl: Information-aware graph contrastive learning. In Proceedings of the International Conference on Neural Information Processing Systems (2021).

Jiang, B., Kloster, K., Gleich, D. F. & Gribskov, M. Aptrank: an adaptive pagerank model for protein function prediction on bi-relational graphs. Bioinformatics 33, 1829–1836 (2017).

Ali, A., Zhu, Y. & Zakarya, M. Exploiting dynamic spatio-temporal graph convolutional neural networks for citywide traffic flows prediction. Neural Netw. 145, 233–247 (2022).

Zhang, J., Cao, J., Huang, W., Shi, X. & Zhou, X. Rutting prediction and analysis of influence factors based on multivariate transfer entropy and graph neural networks. Neural Netw. 157, 26–38 (2023).

Koh, P. W. et al. Wilds: A benchmark of in-the-wild distribution shifts. In International Conference on Machine Learning, 5637–5664 (PMLR, 2021).

Yehudai, G., Fetaya, E., Meirom, E., Chechik, G. & Maron, H. From local structures to size generalization in graph neural networks. In International Conference on Machine Learning, 11975–11986 (PMLR, 2021).

Wu, M., Pan, S., Zhou, C., Chang, X. & Zhu, X. Unsupervised domain adaptive graph convolutional networks. In Proceedings of the Web Conference, 1457–1467 (2020).

Wu, M., Pan, S. & Zhu, X. Attraction and repulsion: Unsupervised domain adaptive graph contrastive learning network. IEEE Trans. Emerg. Top. Comput. Intell. 6, 1079–1091 (2022).

Dai, Y., Zhu, H., Yang, S. & Zhang, H. Gcl-osda: Uncertainty prediction-based graph collaborative learning for open-set domain adaptation. Knowl.-Based Syst. 256, 109850 (2022).

Ding, K., Shu, K., Shan, X., Li, J. & Liu, H. Cross-domain graph anomaly detection. IEEE Trans. Neural Netw. Learn. Syst. 33, 2406–2415 (2021).

Bui, M.-H., Tran, T., Tran, A. T. & Phung, D. Exploiting domain-specific features to enhance domain generalization. In Proceedings of the International Conference on Neural Information Processing Systems (2021).

Yan, S., Zhang, Y., Xie, M., Zhang, D. & Yu, Z. Cross-domain person re-identification with pose-invariant feature decomposition and hypergraph structure alignment. Neurocomputing 467, 229–241 (2022).

Jiang, F., Li, Q., Liu, P., Zhou, X. & Sun, Z. Adversarial learning domain-invariant conditional features for robust face anti-spoofing. Int. J. Comput. Vision 131, 1680–1703 (2023).

Fakhraei, S., Foulds, J., Shashanka, M. & Getoor, L. Collective spammer detection in evolving multi-relational social networks. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’15, 1769–1778 (2015).

Pareja, A. et al. Evolvegcn: Evolving graph convolutional networks for dynamic graphs. In Proceedings of the AAAI Conference on Artificial Intelligence, 5363–5370 (2020).

Liu, B. & Chen, C.-H. An adaptive multi-hop branch ensemble-based graph adaptation framework with edge-cloud orchestration for condition monitoring. IEEE Transactions on Industrial Informatics (2023).

Xiong, H., Yan, J. & Pan, L. Contrastive multi-view multiplex network embedding with applications to robust network alignment. In Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining, 1913–1923 (2021).

He, Z. et al. A novel unsupervised domain adaptation framework based on graph convolutional network and multi-level feature alignment for inter-subject ecg classification. Expert Syst. Appl. 221, 119711 (2023).

Wencel-Delord, J. & Glorius, F. C–H bond activation enables the rapid construction and late-stage diversification of functional molecules. Nat. Chem. 5, 369–375 (2013).

Yu, J., Liang, J. & He, R. Mind the label shift of augmentation-based graph ood generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11620–11630 (2023).

Yin, N. et al. Deal: An unsupervised domain adaptive framework for graph-level classification. In Proceedings of the 30th ACM International Conference on Multimedia, 3470–3479 (2022).

Hu, W. et al. Open graph benchmark: datasets for machine learning on graphs. In Proceedings of the International Conference on Neural Information Processing Systems (2020).

Wu, T., Ren, H., Li, P. & Leskovec, J. Graph information bottleneck. In Proceedings of the International Conference on Neural Information Processing Systems (2020).

Yu, J. et al. Recognizing predictive substructures with subgraph information bottleneck. IEEE Trans. Pattern Anal. Mach. Intell. 46(3), 1650–1663 (2021).

Miao, S., Liu, M. & Li, P. Interpretable and generalizable graph learning via stochastic attention mechanism. In International Conference on Machine Learning (2022).

Pham, H., Dai, Z., Xie, Q. & Le, Q. V. Meta pseudo labels. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 11557–11568 (2021).

Zhuang, F. et al. A comprehensive survey on transfer learning. Proc. IEEE 109, 43–76 (2020).

Tan, C. et al. A survey on deep transfer learning. In Artificial Neural Networks and Machine Learning–ICANN 2018: 27th International Conference on Artificial Neural Networks (2018).

Wang, M. & Deng, W. Deep visual domain adaptation: A survey. Neurocomputing 312, 135–153 (2018).

Gretton, A., Borgwardt, K. M., Rasch, M. J., Schölkopf, B. & Smola, A. A kernel two-sample test. J. Mach. Learn. Res. 13, 723–773 (2012).

Long, M., Zhu, H., Wang, J. & Jordan, M. I. Unsupervised domain adaptation with residual transfer networks. In Proceedings of the International Conference on Neural Information Processing Systems (2016).

Long, M., Cao, Z., Wang, J. & Jordan, M. I. Conditional adversarial domain adaptation. In Proceedings of the International Conference on Neural Information Processing Systems (2018).

Zhuang, F., Cheng, X., Luo, P., Pan, S. J. & He, Q. Supervised representation learning: Transfer learning with deep autoencoders. In Twenty-fourth international joint conference on artificial intelligence (2015).

Motiian, S., Jones, Q., Iranmanesh, S. M. & Doretto, G. Few-shot adversarial domain adaptation. In Proceedings of the International Conference on Neural Information Processing Systems, 6671–6681 (2017).

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM 63, 139–144 (2020).

Pei, Z., Cao, Z., Long, M. & Wang, J. Multi-adversarial domain adaptation. In 32nd AAAI Conference on Artificial Intelligence, AAAI 2018, 3934–3941 (New Orleans, LA, United States, 2018).

He, T., Shen, L., Guo, Y., Ding, G. & Guo, Z. Secret: Self-consistent pseudo label refinement for unsupervised domain adaptive person re-identification. In Proceedings of the AAAI conference on artificial intelligence, 879–887 (2022).

Huang, J., Guan, D., Xiao, A., Lu, S. & Shao, L. Category contrast for unsupervised domain adaptation in visual tasks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 1203–1214 (2022).

Gururangan, S. et al. Don’t stop pretraining: Adapt language models to domains and tasks. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, 8342–8360 (2020).

Willmore, B. D. & King, A. J. Adaptation in auditory processing. Physiol. Rev. 103, 1025–1058 (2023).

Xu, K., Hu, W., Leskovec, J. & Jegelka, S. How powerful are graph neural networks? In International Conference on Learning Representations (2018).

Wu, J., He, J. & Ainsworth, E. Non-iid transfer learning on graphs. In Proceedings of the AAAI Conference on Artificial Intelligence, 10342–10350 (2023).

Han, X., Huang, Z., An, B. & Bai, J. Adaptive transfer learning on graph neural networks. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 565–574 (2021).

Liu, W. et al. Unified cross-domain classification via geometric and statistical adaptations. Pattern Recogn. 110, 107658 (2021).

Yin, N. et al. Coco: A coupled contrastive framework for unsupervised domain adaptive graph classification. In Proceedings of the 40th International Conference on Machine Learning, 40040–40053 (2023).

Zhu, Q. et al. Transfer learning of graph neural networks with ego-graph information maximization. In Proceedings of the International Conference on Neural Information Processing Systems (2021).

Li, A., Boyd, A., Smyth, P. & Mandt, S. Detecting and adapting to irregular distribution shifts in bayesian online learning. In Proceedings of the International Conference on Neural Information Processing Systems (2021).

Akkas, S. & Azad, A. Jgcl: Joint self-supervised and supervised graph contrastive learning. In Companion Proceedings of the Web Conference 2022, 1099–1105 (2022).

Zeng, J. & Xie, P. Contrastive self-supervised learning for graph classification. In 35th AAAI Conference on Artificial Intelligence, AAAI 2021, vol. 12B, 10824–10832 (2021).

Alemi, A. A., Fischer, I., Dillon, J. V. & Murphy, K. Deep variational information bottleneck. In International Conference on Learning Representations (2017).

Donsker, M. D. & Varadhan, S. R. S. Asymptotics for the polaron. Commun. Pure Appl. Math. 36, 505–528 (1983).

Belghazi, M. I. et al. Mutual information neural estimation. In International conference on machine learning, 531–540 (PMLR, 2018).

Ribeiro, L. F., Saverese, P. H. & Figueiredo, D. R. struc2vec: Learning node representations from structural identity. In Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, 385–394 (2017). https://doi.org/10.1145/3097983.3098061

Tang, J. et al. Arnetminer: extraction and mining of academic social networks. In Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining, 990–998 (2008). https://doi.org/10.1145/1401890.1402008

Morris, C. et al. Tudataset: A collection of benchmark datasets for learning with graphs. arXiv (2020). https://doi.org/10.48550/arXiv.2007.08663

Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. In International Conference on Learning Representations (2017).

Wu, F. et al. Simplifying graph convolutional networks. In International conference on machine learning, 6861–6871 (PMLR, 2019).

Chen, M., Wei, Z., Huang, Z., Ding, B. & Li, Y. Simple and deep graph convolutional networks. In International conference on machine learning, 1725–1735 (PMLR, 2020).

Ganin, Y. et al. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 17, 1–35 (2016).

Zhang, Y., Liu, T., Long, M. & Jordan, M. Bridging theory and algorithm for domain adaptation. In International conference on machine learning, 7404–7413 (PMLR, 2019).

Dai, Q., Wu, X.-M., Xiao, J., Shen, X. & Wang, D. Graph transfer learning via adversarial domain adaptation with graph convolution. IEEE Trans. Knowl. Data Eng. 35, 4908–4922 (2022).

Wei, G., Lan, C., Zeng, W., Zhang, Z. & Chen, Z. Toalign: Task-oriented alignment for unsupervised domain adaptation. In Proceedings of the International Conference on Neural Information Processing Systems (2021).

Wei, G., Lan, C., Zeng, W. & Chen, Z. Metaalign: Coordinating domain alignment and classification for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 16643–16653 (2021).

Acknowledgements

This work was supported by the Major Program of the National Natural Science Foundation of China (Grant No. T2293771), by the Innovation Research Group Project of the Natural Science Foundation of Sichuan (Grant No. 24NSFTD0129), and by the Key Research and Development Project of Sichuan (Grant No. 24ZDYF0004).

Author information

Contributions

Z. Z. developed the theoretical framework, conducted the majority of the experiments, and wrote the initial draft of the manuscript. J. X. and Z. Y. contributed to the initial brainstorming sessions, offering ideas and assisting with some experiments. T. M. provided analysis and advice on the research problems. D. C. provided valuable guidance, funding support, and essential computational resources for the research, and revised the manuscript. All authors have read and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zeng, Z., Xie, J., Yang, Z. et al. TO-UGDA: target-oriented unsupervised graph domain adaptation. Sci Rep 14, 9165 (2024). https://doi.org/10.1038/s41598-024-59890-y

DOI: https://doi.org/10.1038/s41598-024-59890-y
