Abstract

Tucker decomposition is widely used for image representation, data reconstruction, and machine learning tasks, but the calculation cost for updating the Tucker core is high. Bilevel form of triple decomposition (TriD) overcomes this issue by decomposing the Tucker core into three low-dimensional third-order factor tensors and plays an important role in the dimension reduction of data representation. TriD, on the other hand, is incapable of precisely encoding similarity relationships for tensor data with a complex manifold structure. To address this shortcoming, we take advantage of hypergraph learning and propose a novel hypergraph regularized nonnegative triple decomposition for multiway data analysis that employs the hypergraph to model the complex relationships among the raw data. Furthermore, we develop a multiplicative update algorithm to solve our optimization problem and theoretically prove its convergence. Finally, we perform extensive numerical tests on six real-world datasets, and the results show that our proposed algorithm outperforms some state-of-the-art methods.

Similar content being viewed by others

Introduction

A massive amount of high-dimensional data has been accumulated in social networks, neural networks, data mining, computer vision, and other domains as data extraction technology has advanced. A number of issues arise when analyzing and processing high-dimensional data, such as the need for long computation times and large memory spaces. As a result, dimensionality reduction is commonly conducted prior to further processing and analysis of these data. High-dimensional data is often vectorized to form a larger matrix. Matrix-based methods, such as principal component analysis (PCA)1, singular value decomposition (SVD)2, multiway extensions of the SVD3, and linear discriminant analysis (LDA)4, are then used for dimensionality reduction. However, the matrix-based dimensionality reduction methods ignore the internal structure of the data. Therefore, tensor decomposition techniques are used to gain a better understanding of data features. There are some widely used tensor decomposition methods, such as Eckart-Young decomposition5, CANDECOMP/PARAFAC (CP) decomposition6, Tucker decomposition (TD)7, and the family of principal component decomposition models related to TD8,9,10,11,12. TD is the decomposition of a tensor into the product of the core tensor and some factor matrices in different directions. When the core tensor in TD is taken to be the unit tensor, it degenerates to CP decomposition. Different from CP, multiway versions of principal component decompositions related to TD focus on underlining different numbers of main influence components for various multiway data via feature extraction along different modes of models.

TD has been successfully applied in the fields of pattern recognition, cluster analysis, image denoising, and image complementation. Due to the powerful data representation capabilities of TD, many TD variants have been developed in recent years based on reasonable assumptions such as sparsity13, smoothness14, and convolution15. However, TD faces some challenges when dealing with high-dimensional data: (i) The size of the core tensor in TD grows rapidly as the order of the data increases, which may result in a high cost of calculation and estimation complexity; (ii) TD does not consider the variability in each direction. This variability is widespread in some real data, such as traffic and internet data, where the three modes of the third-order tensor have strong temporal, spatial, and periodic significance16. To remedy these shortcomings, Qi et al.17 proposed a bilevel form of triple decomposition (TriD). The triple decomposition for third-order tensors transforms a third-order tensor into a product of three third-order factor tensors. Each factor tensor represents a different meaning and is of lower dimension in two directions. TriD performs TD on a tensor and triple decomposes the Tucker core at the same time. The number of parameters in TriD is less than that of TD in substantial cases. Therefore, TriD is less costly than TD.

Although TriD has achieved better results in tensor data recovery experiments, it does not take into account the geometrical manifold structure of the data. In the past decade, manifold learning has been widely adopted to preserve the geometric information of original data. Cai et al.18 explored the geometrical information by constructing a k-nearest neighbor graph and proposed the graph regularized nonnegative matrix factorization (GNMF), which demonstrated promising performance in clustering analysis. To improve the robustness of GNMF, some variants of GNMF have been proposed as described in the literatiures19,20,21,22,23,24. Li et al.25 introduced a manifold regularization term on the core tensor and proposed a manifold regularization nonnegative Tucker decomposition (MR-NTD) method. Qiu et al.26 proposed a graph regularized nonnegaitve Tucker decomposition (GNTD) method by applying Laplacian regularization to the last nonnegative factor matrix. Liu et al.27 presented a technique known as graph regularized \(L_p\) smooth NTD (GSNTD) via embedding graph regularization and \(L_p\) smooth constraint into the original model of NTD. Subsequently, Wu et al.28 proposed a manifold regularization nonnegative triple decomposition (MRNTriD) of tensor sets that takes advantage of tensor geometry information. These graph-based manifold learning methods perform well in clustering. They, however, only consider the pairwise relationship between samples and ignore the high-order relationship among samples. Hypergraph learning is a good candidate for solving this problem.

Using a hypergraph to model the high-order relationship between samples will improve classification performance. There are numerous significant methods combined with hypergraphs that work well in clustering tasks: Zeng et al.29 presented a hypergraph regularized nonnegative matrix factorization (HNMF) method. Wang et al.30 introduced a hypergraph regularization to \(L_{1/2}\)-NMF (HSNMF) for exploiting spectral-spatial joint structure of hypespectral images. Huang et al.31 constructed a sparse hypergraph for better clustering and proposed a sparse hypergraph regularized NMF (SHNMF) method. Yin et al.32 proposed a hypergraph regularized nonnegative tensor factorization (HyperNTF) method by incorporating hypergraph into nonnegative tensor decomposition. Zhao et al.33 introduced a hypergraph regularized term into the framework of the nonnegative tensor ring decomposition and proposed a hypergraph regularized nonnegative tensor ring decomposition (HGNTR). To reduce computational complexity and suppress noise, they applied the low-rank approximation trick to accelerate HGNTR (LraHGNTR)33. Huang et al.34 designed a method to dynamically update the hypergraph and proposed a dynamic hypergraph regularized nonnegative Tucker decomposition (DHNTD) method.

To the best of our knowledge, there is no method to consider higher-order relationships among data sample points in TriD. Inspired by the advantages of hypergraph learning and TriD, in this paper, we present a hypergraph regularized nonnegative triple decomposition (HNTriD) model. HNTriD can explore low-dimensional parts-based representations while preserving detailed complex geometrical information from high-dimensional tensor data. Then, we develop an iterative multiplicative updating algorithm to solve the HNTriD model. The following are the main contributions of this paper:

-

HNTriD is a novel dimensionality reduction method by incorporating hypergraph learning into TriD. It is good at dealing with the clustering tasks for tensor data, and the computation cost and containment resources could be greatly reduced.

-

HNTriD embraces the merit of the complex connections of observed samples while retaining raw data structural information in dimensionality reduction. We attribute this excellent performance to the hypergraph regularized term’s ability which can successfully approximate the inner relationships of original data.

-

HNTriD makes sense for some practical applications, such as clustering tasks, because it performs well at multiway data learning and can successfully preserve the important characteristics in dimensionality reduction. Experimental results in some popular datasets, including COIL20, GEORGIA, MNIST, ORL, PIE, and USPS, show that HNTriD outperforms existing rival approaches in cluster analysis.

The remainder of this paper is organized as follows: Section 2 goes over some fundamental concepts, such as NTD, TriD, and hypergraph learning, that will be used in the subsequent sections. The objective function of the HNTriD model is proposed in Section 3, and we discuss the HNTriD optimization algorithm in detail, including the updating rules for the parameters of the model, the convergence analysis of the proposed method, and the computation complexity analysis of HNTriD. In Section 4, we present some experimental results that can be used to validate the efficacy and accuracy of our proposed method. The last section is the conclusion.

Related work

In this section, we briefly overview some basic definitions, including NTD32,34,35, TriD17,25, hypergraph learning36,37,38. The notations used in this paper are listed in Table 1.

Nonnegative tensor decomposition (NTD)

TD is a popular class of methods for dimensionality reduction of high-dimensional data7. The data collected in real life are usually nonnegative, so it makes more physical sense to add nonnegative constraints to all factors in TD. Therefore, we focus on the nonnegative tensor decomposition (NTD). In fact, NTD is a multiway extension of nonnegative matrix factorization (NMF)39, which imposes nonnegative constraints to the TD model35, and it preserves the multilinear structure of data. Given a nonnegative third-order tensor \(\mathcal {X}\in \mathbb {R}_+^{n_1\times n_2\times n_3}\), NTD can be expressed as a core nonnegative tensor \(\hat{\mathcal {X}}\in \mathbb {R}_+^{r_1\times r_2\times r_3}\) multiplied by three nonnegative factor matrices \(\textbf{U}\in \mathbb {R}_+^{n_1\times r_1}\), \(\textbf{V}\in \mathbb {R}_+^{n_2\times r_2}\), and \(\textbf{W}\in \mathbb {R}_+^{n_3\times r_3}\), and it can be formulated as

If the smallest integers \(r_1, r_2, r_3\) such that (1) holds, then we call the vector \((r_1, r_2, r_3)\) the Tucker rank. In the process of solving the optimal solution, we usually use its transformation of the mode-n matricization, and (1) can be expressed in the following equivalent forms

where \(\textbf{X}_{(n)}\) denotes the mode-n matricization of the tensor \(\mathcal {X}\), “\(\otimes\)” denotes the Kronecker product of two matrices.

Bilevel form of triple decomposition (TriD)

In the TD and NTD methods, the size of the core tensor grows rapidly as the order of data increases, which may result in a high cost of calculation. To overcome this shortcoming, Qi et al.17 recently proposed a new form of triple decomposition for third-order tensors, which reduces a third-order tensor to the product of three third-order factor tensors.

Definition 1

17 Let \(\hat{\mathcal {X}}=(\hat{x}_{ijl})\in \mathbb {R}^{r_1\times r_2\times r_3}\) be a nonzero tensor. We say that \(\hat{\mathcal {X}}\) is the triple product of three third-order square tensors \(\mathcal {A}\in \mathbb {R}^{r_1\times r\times r}\), \(\mathcal {B}\in \mathbb {R}^{r\times r_2 \times r}\), and \(\mathcal {C}\in \mathbb {R}^{r\times r\times r_3}\), triple product of the tensors is denoted by

where \(\mathcal {A}\), \(\mathcal {B}\), and \(\mathcal {C}\) are named horizontally square tensor, laterally square tensor, and frontally square tensor, respectively. For \(i=1,2,\ldots ,r_1, j=1,2,\ldots ,r_2\), \(l=1,2,\ldots ,r_3\), the elementwise definition of the triple product can be illustrated as

If

where “\(mid\{\cdot \}\)” denotes the median, we call (3) is a low rank triple decomposition of \(\hat{\mathcal {X}}\). \(\mathcal {A}, \mathcal {B}\), and \(\mathcal {C}\) are the factor tensors of \(\hat{\mathcal {X}}\). The smallest value of r such that (4) holds is known as the triple rank of \(\hat{\mathcal {X}}\), which is denoted as TriRank(\(\hat{\mathcal {X}}\))=r. The triple rank of a zero tensor is defined as zero.

If a third-order tensor is decomposed by TD, and its Tucker core is triple decomposed into three tensors simultaneously. Then we get a bilevel form of the triple decomposition, that is shown below.

Definition 2

17 Based on the definition of NTD shown in (1), if the core tensor \(\hat{\mathcal {X}}\) has a triple decomposition \(\hat{\mathcal {X}}=\llbracket {\mathcal {A}\mathcal {B}\mathcal {C}}\rrbracket\), where TriRank(\(\hat{\mathcal {X}}\))=r, \(\mathcal {A}\in \mathbb {R}_{+}^{r_1\times r\times r}\), \(\mathcal {B}\in \mathbb {R}_{+}^{r\times r_2 \times r}\), and \(\mathcal {C}\in \mathbb {R}_{+}^{r\times r \times r_3}\). Then \(\mathcal {X}\) can be represented as

We call (5) a bilevel form of the triple decomposition of \(\mathcal {X}\), which is always referred as TriD. \(\mathcal {A}\), \(\mathcal {B}\), and \(\mathcal {C}\) are the inner factor tensors.

From (1), the minimum number in parameters of NTD of the third-order tensor \(\mathcal {X}\) is \(n_1r_1+n_2r_2+n_3r_3+r_1r_2r_3\), where \((r_1, r_2, r_3)\) is the Tucker rank of \(\mathcal {X}\). On the other hand, the number of parameters of TriD is \(n_1r_1+n_2r_2+n_3r_3+(r_1+r_2+ r_3)r^2\), where r is the triple rank of \(\hat{\mathcal {X}}\). Generally, the triple rank of \(\hat{\mathcal {X}}\) is far less than each of the Tucker rank’s components of the original tensor \(\mathcal {X}\). Then there are substantial cases where the number of parameters of TriD is strictly less than that of the TD.

NTD and TriD are linear dimensionality reduction techniques that may miss the essential nonlinear data structure. Manifold learning, on the other hand, is an effective technique for discovering geometric structure in multiway data, and hypergraph learning is a promising manifold learning method.

Hypergraph learning

To improve clustering performance, it is necessary to maintain the internal hidden geometry structure information, which can be detailed by the hypergraph learning. Given \(n_3\) grayscale image \(\{\textbf{X}_1,\textbf{X}_2,\ldots , \textbf{X}_{n_3}\}\), each grayscale image can be viewed as a matrix of size \(n_1\times n_2\). These \(n_3\) matrices are stacked to form a tensor \(\mathcal {X}\) of size \(n_1\times n_2 \times n_3\). The i-th frontal slice \(\mathcal {X}(:,:,i)\) of the tensor \(\mathcal {X}\) is exactly the matrix \(\textbf{X}_i\). In addition, we can build a hypergraph \((\mathbb {V},\mathbb {E};S)\) to encode the geometrical structure of raw data40. Each node \(v_i\in \mathbb {V}\) represents a related data \(\textbf{X}_i\) and every hyperedge \(e_{i}\in \mathbb {E}\) consists of several nodes that are clustered by some constraints. For each vertex \(v_i\), we form a hyperedge \(e_i\) of \(v_i\) and the k-neighbours of \(v_i\). For each hyperedge \(e_i\) with a weight \(s(e_i)\) which is used to measure the similarity of the contained image nodes. The weight \(s(e_i)\) can be calculated as follows:

where \(\sigma =\frac{1}{k n_3}\sum _{i=1}^{n_3}\sum _{j\in e_i}\Vert \textbf{X}_i-\textbf{X}_j\Vert _F\) denotes the mean distance among all vertices in hyperedge \(e_i\). In particular, we can construct an incidence matrix \(\textbf{H}\) as follows:

The degrees of a node \(v_i\) and a hyperedge \(e_q\) can be expressed as

and

respectively. We use \(\textbf{D}_v, \textbf{D}_e\), and \(\textbf{S}_e\) to denote diagonal matrices whose elements are \(d(v_i)\), \(d(e_q)\), and \(s(e_q)\), respectively.

To make the hypergraph more visual, we show the spatial structure in Figure 1. Herein every \(v_j (j=1, 2, \dots , 9)\) represents a node, and each \(e_i (i=1, 2, \dots , 5)\) denotes a hyperedge.

If two matrix data \(\textbf{X}_i\) and \(\textbf{X}_j\) are similar in the original raw observation, it is reasonable to assume that their low-dimensional representations \(\textbf{w}_i\) and \(\textbf{w}_j\) are adjacent to each other. Combined with practical application and theoretical analysis of hypergraph31,32,33, we can assume that \(\textbf{w}_i\) and \(\textbf{w}_j\) are the corresponding vectors that are related to the nodes \(v_i\) and \(v_j\). Then, the following expression can be used to calculate the clustering similarity of the original data \(\textbf{X}_i\) and \(\textbf{X}_j\) in the low-dimensional approximation.

where \(\textbf{L}=\textbf{D}_v-\bar{\textbf{S}}\) is a hypergraph Laplacian matrix that characterizes the data manifold, and \(\bar{\textbf{S}}=\textbf{H}\mathbf{{S}}_e\textbf{D}_{e} ^{-1}\textbf{H}^\top\).

Hypergraph regularized nonnegative triple decomposition (HNTriD)

TriD is a significant tensor data dimensional reduction algorithm, but it ignores higher-order relationships between the inner parts of raw data and does not consider nonnegative constraints, which may result in a big gap in data clustering performance. Modeling the high-order relationship among samples will help to improve performance. Hypergraph learning is an effective tool for illustrating the inner complex connections of multiway data. By incorporating the hypergraph Laplacian regularized term into the bilevel form of triple decomposition, we get a new method named HNTriD, as shown in the following subsection.

Objective function of HNTriD

Suppose \(\mathcal {X}\in \mathbb {R}_{+}^{n_1\times n_2 \times n_3}\) be a third-order nonnegative tensor which we stack the samples that are represented by \(n_3\) second-order data \(\textbf{X}_i\in \mathbb {R}_{+}^{n_1\times n_2} (i=1,2,\ldots ,n_3)\) as the elements of the third mode, each \(\textbf{X}_i\) represents an original data sample of the raw data. Note that \(\textbf{X}_{(3)}=[\textrm{vec}{(\textbf{X}_1)},\textrm{vec}{(\textbf{X}_2)},\ldots ,\textrm{vec}{(\textbf{X}_3)}]^\top\), unfolding \(\mathcal {X}\) along the third mode we can simplify (1) into its matricization form that equals to the third equation of (2), which can be written as

Let \(\textbf{W}=[\textbf{w}_1,\textbf{w}_2,\ldots ,\textbf{w}_{n_3}]^\top\), each \(\textbf{w}_k\in \mathbb {R}_+^{n_3}\) can be regarded as a low-dimensional representation for the data \(\textbf{X}_k\) under the basis of \((\textbf{V}\otimes \textbf{U})\hat{\textbf{X}}_{(3)}^\top\).

To improve the multiway data representation ability and brush up operational efficiency, we propose the following HNTriD model, which incorporates the hypergraph constraint into the TriD model. For a given nonnegative tensor, \(\mathcal {X}\in \mathbb {R}_{+}^{n_1\times n_2\times n_3}\), HNTriD aims to find three nonnegative tensors \(\mathcal {A}\in \mathbb {R}_{+}^{r_1\times r\times r}\), \(\mathcal {B}\in \mathbb {R}_{+}^{r\times r_2 \times r}\), and \(\mathcal {C}\in \mathbb {R}_{+}^{r\times r \times r_3}\) and three nonnegative factor matrices \(\textbf{U}\in \mathbb {R}_{+}^{n_1\times r_1}\), \(\textbf{V}\in \mathbb {R}_{+}^{n_2\times r_2}\), and \(\textbf{W}\in \mathbb {R}_{+}^{n_3\times r_3}\) such that

The first and second parts of (7) are the reconstruction error term and the hypergraph regularized term, respectively. The reconstruction error term in (7) can be seen as a deep nonnegative tensor decomposition with two layers. The first layer is a TD in the following form

where \(\mathcal {Y}\times _1\textbf{U}\times _2\textbf{V}\) denotes the set of multilinear bases of the original data \(\mathcal {X}\) and \(\textbf{W}\) denotes the encoding matrix of \(\mathcal {X}\) under this set of multilinear bases. The second layer is the triple decomposition, which takes the following form

where each factor tensor represents a different meaning in different application problems. For example, in social networks and transportation data, different characteristics such as temporal stability, spatial correlation, and traffic periodicity may be reflected in each of these three factors. This two-layer decomposition not only reduces the computation required to update the core tensor, but also takes into account the respective advantages of the TD and the triple decomposition. The variable \(\alpha\) is an adjustment parameter that is used to measure the importance of the hypergraph regularization term. The hypergraph regularization term preserves the multilateral relationships among the data, so we establish model (7).

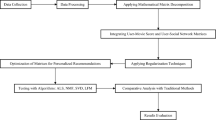

HNTriD is used to represent high-dimensional data in a low-dimensional form. To better show the implications of HNTriD, we draw a flowchart to provide a concise overview of the implementation procedure in Figure 2.

Optimization algorithm

When the parameters \(\mathcal {A}, \mathcal {B}, \mathcal {C}, \textbf{U}, \textbf{V}\), and \(\textbf{W}\) are considered simultaneously, the objective function \(f_{HNTriD}\) of HNTriD in (7) is not convex. Therefore, obtaining the global optimal solution is difficult. To deal with it, we introduce an iterative algorithm that achieves a local minimum. To simplify the process of solving the optimal algorithm, we show two important lemmas that will be frequently used.

Lemma 1

17Let \(\hat{\mathcal {X}}=\llbracket {\mathcal {A}\mathcal {B}\mathcal {C}}\rrbracket\), we define three third-order tensors \(\mathcal {F}\in \mathbb {R}_{+}^{r^2\times r_2\times r_3}\), \(\mathcal {G}\in \mathbb {R}_{+}^{r_1\times r^2 \times r_3}\), and \(\mathcal {H}\in \mathbb {R}_{+}^{r_1\times r_2\times r^2}\) with entries

where \(k=q+(s-1)r\), \(l=p+(s-1)r\), and \(m=p+(q-1)r\), respectively. Then

Lemma 2

Let \(\textbf{M}\in \mathbb {R}^{m\times n}\), \(\textbf{N}\in \mathbb {R}^{n\times p}\), \(\textbf{P}\in \mathbb {R}^{p\times q}\), and \(\textbf{Q}\in \mathbb {R}^{m\times q}\). Then

Proof

According to

one has

Combining it with

and

yields

Therefore, \(\frac{\partial \Vert \textbf{M}\textbf{N}\textbf{P}-\textbf{Q}\Vert _F^2}{\partial \textbf{N}}=2 \textbf{M}^\top (\textbf{M}\textbf{N}\textbf{P}-\textbf{Q})\textbf{P}^\top\) is obtained. This completes the proof. \(\square\)

Solutions of inner factor tensors

When the variables \(\mathcal {B}, \mathcal {C}, \textbf{U}, \textbf{V}\), and \(\textbf{W}\) are fixed, then the objective function of HNTriD is equivalent to

The Lagrange function of the above optimization problem (10) is

The matricization form of (11) that along the mode-1 is

where \(\textbf{F}_{(1)}\) is the unfolding form of \(\mathcal {F}\) that defined as (8). By Lemma 2, the gradient of \(L_{\mathcal {A}}\) with respect to \(\textbf{A}_{(1)}\) is given by

According to41, we can take advantage of the Karush-Kuhn-Tucker (KKT) conditions \(\frac{\partial L_{\mathcal {A}}}{\partial \textbf{A}_{(1)}}=\textbf{0}\) and \(\Phi _1 \circledast \textbf{A}_{(1)}=\textbf{0}\), then the following equation is satisfied,

Based on the above equation, we obtain the following updating rule for \(\mathcal {A}\), and

Using the same technique, updating rules for inner factor tensors \(\mathcal {B}\) and \(\mathcal {C}\) are obtained, which can be expressed as

and

respectively.

Solutions of factor matrices

When the variables \(\mathcal {A}, \mathcal {B}, \mathcal {C}, \textbf{U}\), and \(\textbf{V}\) are fixed, then the objective function of HNTriD is equivalent to

The Lagrange function of the optimization problem (15) is

By using a transformation of the mode-3 matricization of the tensor \(\mathcal {X}\) and \(\hat{\mathcal {X}}\), (16) is obtained as follows

By Lemma 2, the gradient of \(L_{\textbf{W}}\) with respect to \(\textbf{W}\) is given by

Using the Karush-Kuhn-Tucker (KKT) conditions \(\frac{\partial L_{\textbf{W}}}{\partial \textbf{W}}=\textbf{0}\) and \(\Psi _3 \circledast \textbf{W}=\textbf{0}\), the following equation is satisfied,

Based on the above equation, we obtain the following updating rule for \(\textbf{W}\), and

Using the same technique, updating rules for the inner factor matrices \(\textbf{U}\) and \(\textbf{V}\) are obtained, which can presented as

and

respectively.

Convergence analysis theorically

In this subsection, the convergence of the iterative updating algorithm is investigated. Our proof will make use of an auxiliary function that is defined as below.

Definition 3

42 \(\mathbb {G}(x,\tilde{x})\) is an auxiliary function for \(\mathbb {F}(x)\) if the conditions

are satisfied.

The auxiliary function is of great help due to the key property that is shown as follows:

Lemma 3

42 If \(\mathbb {G}(x,\tilde{x})\) is an auxiliary function of \(\mathbb {F}(x)\), then \(\mathbb {F}(x)\) is non-increasing under the update

Now, we are going to show that the update rule for \(\textbf{A}_{(1)}\) shown in (12) is exactly the same as that shown in (20) with a proper auxiliary function. Considering the ith row and jth column entry \([\textbf{A}_{(1)}]_{ij}\) in \(\textbf{A}_{(1)}\), we use \(\mathbb {F}_{ij}\) to denote the part of the objective function (7) that is relevant only to \([\textbf{A}_{(1)}]_{ij}\). The first and second derivatives of \(\mathbb {F}_{ij}\) are

and

respectively.

Lemma 4

The function

is an auxiliary function for \(\mathbb {F}_{ij}\), which is only relevant to \([\textbf{A}_{(1)}]_{ij}\).

Proof

Since \(\mathbb {G}(x,x)=\mathbb {F}_{ij}(x)\) is obvious, we only need to show that the condition \(\mathbb {G}(x,[\textbf{A}_{(1)}]_{ij}^t)\ge \mathbb {F}_{ij}(x)\) holds. To achieve this, we take into consideration the Taylor series expansion of \(\mathbb {F}_{ij}(x)\) which can be formalized as

Comparing (21) with (22), we can get that \(\mathbb {G}(x,[\textbf{A}_{(1)}]_{ij}^t)\ge \mathbb {F}_{ij}(x)\) is satisfied as long as

holds, which can be expressed as

Since

which implies (23) holds, then \(\mathbb {G}(x,[\textbf{A}_{(1)}]_{ij}^t)\ge \mathbb {F}_{ij}(x)\) is satisfied. This completes the proof. \(\square\)

Theorem 1

The objective function of the HNTriD model (7) is non-increasing under the updating rule \(\textbf{A}_{(1)}\) represented as (12).

Proof

Replacing the auxiliary function \(\mathbb {G}(x,x^t)\) of (20) with (21) yields

According to

we have

Then we can see that (24) agrees with (12), and the Lemma 4 guarantees that (21) is an auxiliary function of \(\mathbb {F}_{ij}\). Based on this, in conjunction with Lemma 3, we can get that \(f_{HNTriD}\) is non-increasing under the update rule of (12). The proof is then finished. \(\square\)

We are going to state that the update for \(\textbf{W}\) expressed as (17) is equal to the update (20) with an appropriate auxiliary function. Considering the ith row and jth column entry \(\textbf{W}_{ij}\) in \(\textbf{W}\), we use \(\hat{\mathbb {F}}_{ij}\) to denote the part of the objective function (7) that is only relevant to \(\textbf{W}_{ij}\). The first and second derivatives of \(\hat{\mathbb {F}}_{ij}\) are shown below

and

respectively.

Lemma 5

The function

is an auxiliary function for \(\hat{\mathbb {F}}_{ij}\), which is only relevant to \(\textbf{W}_{ij}\).

Proof

Since \(\hat{\mathbb {G}}(x,x)=\hat{\mathbb {F}}_{ij}(x)\) is obvious, we only need to illustrate that the condition \(\hat{\mathbb {G}}(x,x)\ge \hat{\mathbb {F}}_{ij}(x)\) holds. To achieve this, we take into consideration the Taylor series expansion of \(\hat{\mathbb {F}}_{ij}(x)\) which can be expressed as follows

Combing (25) with (26) we can find that \(\hat{\mathbb {G}}(x,\textbf{W}_{ij}^t)\ge \hat{\mathbb {F}}_{ij}(x)\) is equivalent to

And the above equation can be rewritten as

Since

which implies (27) holds, and \(\hat{\mathbb {G}}(x,\textbf{W}_{ij}^t)\ge \hat{\mathbb {F}}_{ij}(x)\) is satisfied. This completes the proof. \(\square\)

Theorem 2

The objective function of the HNTriD model (7) is non-increasing under the updating rule \(\textbf{W}\) represented as (17).

Proof

Using (25) to replace the \(\mathbb {G}(x,x^t)\) that lies in (20), we obtain

According to

we have

It is worth noting that (28) is consistent with (17). Lemma 5 ensures that (25) is an auxiliary function of \(\hat{\mathbb {F}}_{ij}\), which combined with Lemma 3 results in \(f_{HNTriD}\) being non-increasing under the update rule (17). This brings the proof to a close. \(\square\)

Applying the same techniques to parameters \(\mathcal {B}, \mathcal {C}, \textbf{U}\), and \(\textbf{V}\) to check the convergence of HNTriD. To summarize, we can obtain that \(f_{HNTriD}\) is non-increasing under each of the update rules for inner factor tensors and matrices \(\mathcal {A}, \mathcal {B}, \mathcal {C}, \textbf{U}, \textbf{V}\), and \(\textbf{W}\) while fixing the others. Before imposing our algorithm on real-world datasets for clustering tasks, it is necessary to simplify the calculation formulas of the parameters \(\mathcal {A}, \mathcal {B}, \mathcal {C}, \textbf{U}, \textbf{V}\), and \(\textbf{W}\), as in the following Remark.

Remark 1

From the form of updating rules of \(\mathcal {A},\mathcal {B},\mathcal {C},\textbf{U}, \textbf{V}\), and \(\textbf{W}\), it is a fact that each update needs to calculate the Kronecker products which requires costly storage resources. To simplify the produce of updating for mentioned parameters, we take advantages of the tensor property of the mode-n unfolding. Then, we get

and

which means that (12) and (18) can be transformed as

and

respectively. According to

and

(13) and (19) can be calculated as

and

Similarly, (14) and (17) can be further rewritten as

and

respectively.

Hence, the learning rules for the objective function are obtained via the multiplicative update methods described as above. Specifically, we randomly initialize the tensors and factor matrices \(\mathcal {A}\), \(\mathcal {B}\), \(\mathcal {C}\), \(\textbf{U},\textbf{V}\), and \(\textbf{W}\), then iterate them by (29), (31), (33), (30), (32), and (34). Each iteration ends when the stopping criterion is met. After completing all iterations, we record the operations of the model and examine the convergence at the end of each iteration. The pseudo-code for HNTriD is given in Algorithm 1.

Computational complexity analysis

In this subsection, we analyze the computational complexity of the proposed HNTriD model. First, we consider the calculation cost for the tensor-tensor product in (8). In the process of computation tensors \(\mathcal {F}, \mathcal {G}\), and \(\mathcal {H}\) takes \(\mathcal {O}(r^3r_2r_3)\), \(\mathcal {O}(r^3r_1r_3)\), and \(\mathcal {O}(r^3r_1r_2)\) operations, respectively. It requires \(\mathcal {O}(n_1r_1^2+n_2r_2^2+n_3r_3^2)\) operations to calculate symmetric matrices \(\textbf{U}^\top \textbf{U}\), \(\textbf{V}^\top \textbf{V}\), and \(\textbf{W}^\top \textbf{W}\). It takes \(\mathcal {O}(n_1n_2n_3r_2+n_1n_3r_2r_3+n_1r^2r_2r_3)\) operations for calculating \((\mathcal {X}\times _2 \textbf{V}^\top \times _3 \textbf{W}^\top )_{(1)}\textbf{F}_{(1)}^\top\). It takes \(\mathcal {O}(r^2r_2^2r_3+r^2r_2r_3^2+r^4r_2r_3+r^4r_1+n_1r^2r_1)\) operations to calculate \(\textbf{U}\textbf{A}_{(1)}(\mathcal {F}\times _2 \textbf{V}^\top \textbf{V}\times _3 \textbf{W}^\top \textbf{W})_{(1)}\textbf{F}_{(1)}^\top\). Since \((\mathcal {X}\times _2 \textbf{V}^\top \times _3 \textbf{W}^\top )_{(1)}\textbf{F}_{(1)}^\top\) and \(\textbf{U}\textbf{A}_{(1)}(\mathcal {F}\times _2 \textbf{V}^\top \textbf{V}\times _3 \textbf{W}^\top \textbf{W})_{(1)}\textbf{F}_{(1)}^\top\) are available, the computational cost for each term, including \(\textbf{U}^\top (\mathcal {X}\times _2 \textbf{V}^\top \times _3 \textbf{W}^\top )_{(1)}\textbf{F}_{(1)}^\top\), \(\textbf{U}^\top \textbf{U}\textbf{A}_{(1)}(\mathcal {F}\times _2 \textbf{V}^\top \textbf{V}\times _3 \textbf{W}^\top \textbf{W})_{(1)}\textbf{F}_{(1)}^\top\), \((\mathcal {X}\times _2 \textbf{V}^\top \times _3 \textbf{W}^\top )_{(1)}\textbf{F}_{(1)}^\top \textbf{A}_{(1)}^\top\), and \(\textbf{U}\textbf{A}_{(1)}(\mathcal {F}\times _2 \textbf{V}^\top \textbf{V}\times _3 \textbf{W}^\top \textbf{W})_{(1)}\textbf{F}_{(1)}^\top \textbf{A}_{(1)}^\top\), is equal to \(\mathcal {O}(n_1r^2r_1)\). Then, the cost of computing the update rules of \(\mathcal {A}\) in (29) is about \(\mathcal {O}(r^2r_1)\). Assume that integers \(r_1, r_2, r_3\), and r are of the same order of magnitude and they are much smaller than \(n_1,n_2\), and \(n_3\). We claim that the total computational cost of computing the update rule of \(\mathcal {A}\) in (29) and \(\textbf{U}\) in (30) is approximately

Similarly, the total computational cost of computing updating rules for \(\mathcal {B}\) in (31) and \(\textbf{V}\) in (32) is about

The total computational cost of updating the rules for \(\mathcal {C}\) in (33) and \(\textbf{W}\) in (34) is approximately

Therefore, we can get the total calculation cost of the HNTriD algorithm approximately as

Experiments

To check the validation of our proposed HNTriD algorithm for clustering data with dimensionality reduction, we run experiments on six popular datasets and compare the results of (7) with that of the related state-of-the-art methods, including NMF42, GNMF18, HNMF29, HSNMF31, SHNMF43, HGNTR33, LraHGNTR33, HyperNTF32, and TriD17. All the simulations will be performed on a desktop computer equipped with an Intel (R) Core (TM) i5-10400F CPU at 2.90 GHz and 16 GB of memory, running MATLAB 2015a in Windows 10.

Datasets

The clustering performance is evaluated on six widely used datasets, including COIL20, GEORGIA, MNIST, ORL, PIE, and USPS. The general statistical information of the datasets is summarized in Table 2, including the samples, sizes, and categories that were used in the numerical modeling tests of this paper. A brief overview of the mentioned datasets is presented below.

-

COIL20 (https://www.cs.columbia.edu/CAVE/software/softlib/coil-20.php): It is a grayscale image dataset comprised of photographs taken from 20 different individuals, and each person was photographed 72 pieces of images from different angles. After resizing each image to \(32\times 32\), we can get a third-order tensor \(\mathcal {Y}\in \mathbb {R}_{+}^{32\times 32 \times 1,440}\).

-

GEORGIA (http://www.anefian.com/research/face_reco.htm): It is a colored JPG image dataset, every image was drawn from 50 people and each person was photographed 15 pieces of images with cluttered backgrounds. The images used in this paper have been converted to grayscale and resized to \(32\times 32\). We can obtain a tensor of third order, which defined as \(\mathcal {Y}\in \mathbb {R}_{+}^{32\times 32 \times 750}\).

-

MNIST (http://yann.lecun.com/exdb/mnist/): It is a handwritten digit image dataset, and each image is \(28\times 28\) in size. More than 60,000 digit images were collected in the MNIST dataset range from “0” to “9”. In the numerical tests of this paper, we chose 100 images randomly for each single digit. Thus, the chosen images can be presented as a third-order tensor \(\mathcal {Y}\in \mathbb {R}_{+}^{28\times 28\times 1,000}\).

-

ORL (https://github.com/saeid436/Face-Recognition-MLP/tree/main/ORL): It is a dataset that includes 400 grayscale face images of 40 different people collected from different facial expressions, various facial details, and varying lighting, and each image is in size of \(112\times 92\). A third-order tensor can be defined as \(\mathcal {Y}\in \mathbb {R}_{+}^{112\times 92 \times 400}\).

-

PIE (http://www.ri.cmu.edu/projects/project_418.html): It is a dataset containing over 40,000 facial images collected from 68 different individuals. These images were taken in a variety of poses, lighting conditions, and expressions. We randomly selected 53 people with 22 different facial images for our numerical tests. We converted them to gray-level and resize them to \(32\times 32\). Then the selected images can be expressed as a third-order tensor \(\mathcal {Y}\in \mathbb {R}_{+}^{32\times 32 \times 1,166}\).

-

USPS (https://www.csie.ntu.edu.tw/cjlin/libsvmtools/datasets/multiclass.html#usps): It is a dataset that includes 11,000 grayscale handwritten digits (from “0” to “9”) that are \(16 \times 16\) in size. In the simulation tests of this paper, we chose 100 images at random for each digit. On this basis, we can build a third-order tensor \(\mathcal {Y}\in \mathbb {R}_{+}^{16\times 16 \times 1,000}\).

Evaluation metrics

Clustering analysis groups samples only according to the sample data itself and its aim is to group different objects into different groups according to the controlled conditions. The way to evaluate the efficiency of the clustering methods is that objects within groups are similar to each other, while objects differ from group to group. The greater the similarity within the group, the greater the difference between the groups, the better the clustering effect. As we know, the ACC, NMI, and PUR are widely used assessment criteria44,45 of clustering algorithm. The accuracy (ACC) can be defined as

where n is the number of samples in datasets, \(\bar{x_i}\) and \(x_i\) denote the cluster sample and the original sample, respectively. The symbol \(map(\cdot )\) indicates the matchup relationship mapping function, which is responsible for matching the cluster samples and original samples. The symbol \(\delta (\cdot ,\cdot )\) is the delta function shown as follows

In general, the agreement between two clusters can be measured with the mutual information (\(\text {MI}\)), which is widely used in clustering applications. Given two discrete random variables \(\bar{X}\) and X which stand for the cluster label sets and true label sets, \(\bar{x}\) and x are selected arbitrarily from \(\bar{X}\) and X, respectively. Then, the \(\text {MI}\) can be measured by

where \(p(\bar{x})\) and p(x) are the edge probability distribution function which denote the probabilities of the samples. The \(p(\bar{x},x)\) denotes the joint probability distribution function of \(\bar{X}\) and X which means that the object belongs to category \(\bar{X}\) and category X at the same time. To force the score to have an upper bound, we take the \(\text {NMI}\) as one of the evaluation criterion, and the definition is

where \(\text {T}(\bar{X})\) and \(\text {T}(X)\) are the entropy of the cluster label set \(\bar{X}\) and the entropy of the true label set X. In this way, the score ranges of \(\text {NMI}(\bar{X},X)\) is from 0 to 1.

The purity (\(\text {PUR}\)) of a clustering algorithm is a simple assessment format which only have to calculate the proportion of the correct clustering to the total. In other words, the \(\text {PUR}\) is to scale the degree of correctness of measurement, the \(\text {PUR}\) score of a cluster is observed by a weighted sum of the \(\text {PUR}\) values of the respective clusters, which is denoted by

where \(\bar{X}=(\bar{x}_1,\bar{x}_2,\ldots ,\bar{x}_k)\) is the cluster category set, the \(\bar{x}_i\) denotes the ith cluster set. \(X=(x_1,x_2,\ldots ,x_k)\) is the original datasets that need to be clustered, \(x_i\) represents the ith original object. The total number of the objects is n that need to be clustered and the function \(|\cdot |\) denotes the cardinality of a set.

Algorithms for comparison

To ensure the clustering performance, we compare the proposed HNTriD model with the following state-of-the-art clustering algorithms.

-

NMF42: It incorporates nonnegative constraint into two factor matrices decomposed from the original matrix.

-

GNMF18: It imposes the graph constraint to the coefficient matrix of the NMF method.

-

HNMF29: It incorporates the hypergraph constraint into the coefficient matrix of the NMF method.

-

HSNMF30: It imposes the hypergraph constraint on the coefficient matrix based on the \(L_{1/2}\)-NMF method.

-

SHNMF31: It takes the sparse hypergraph as a regularization and adds it to the NMF framework.

-

HGNTR33: It includes the hypergraph constraint on the last TR core tensor and a nonnegative constraint on TR factor tensors.

-

LraHGNTR33: It is the low-rank approximation of HGNTR.

-

HyperNTF32: It imposes a hypergraph constraint on the last factor matrix of the CP model and limits all factor matrices to be nonnegative.

-

TriD17: It is a bilevel form of the triple decomposition of a third-order tensor.

Parameters selection

To achieve the best performance, some critical parameters in the experimental simulations needed to be adjusted. In all tests, let \(\epsilon =10^{-5}\) and the maximum number of iterations be 1000 unless otherwise specified. We set the regularized term \(\alpha\) at the grid of \(\{10^{-3}, 10^{-2}, 10^{-1}, 1, 10, 100, 1000\}\), and the k-nearest neighbors are chosen from \(\{3, 4, 5, 6, 7\}\). The parameters \(r_1\) and \(r_2\) are integers empirically chosen from \(\{3, 4, \dots , 32\}\), and the integer r is chosen from \(\{2, 3, \dots , 20\}\). Furthermore, we choose the third mode, \(r_3\), as the number of categories in the related datasets, as shown in Table 2. In our experiments, we let one of the parameters \(r_1,r_2,r,k,\alpha\) varies in the grid given above, and the rest of the parameters were fixed, and the parameters corresponding to the maximum values of NMI in the experiments were recorded. The optimal parameters corresponding to each dataset are given in Table 3.

In Figure 3, we show the effect of the parameters \(\alpha\) and k on the three indicators ACC, NMI, and PUR on different datasets. In subplots (a), (c), and (e) of Figure 3, the remaining parameters except \(\alpha\) are taken as in Table 3. In subplots (b), (d), and (f) of Figure 3, the remaining parameters except k are taken as in Table 3.

From Figure 3, we can conclude that when the parameter \(\alpha\) is set to \(10^{3}, 10^{-2}, 10, 10^{-2}, 10^{-2}\), and \(10^2\), the ACC, NMI, and PUR all perform better in clustering tasks on COIL20, GEORGIA, MNIST, ORL, PIE, and USPS datasets. The parameter k is set to 4, 3, 5, 5, 6, and 4, the ACC, NMI, and PUR achieve better results on COIL20, GEORGIA, MNIST, ORL, PIE, and USPS datasets, respectively.

Convergence study experimentally

In Section 3.3, we demonstrated that our HNTriD algorithm is non-increasing in theory. Here, we validate it using six HNTriD convergence curves tested from six related datasets, which are shown in Figure 4. There are two important key features that can be identified from Figure 4. First, as the number of iterations increases, the objective function value of HNTriD decreases. Second, the convergence report states that the curve declines rapidly and reaches a relatively stable state within thirty iterations. To summarize, HNTriD experiments show that our method works well on the six datasets mentioned above.

Experimental comparison

To validate the effectiveness of the proposed HNTriD method, we compare it to some state-of-the-art methods under the assessment criteria ACC, NMI, and PUR. For HNTriD, the parameters are selected as in Table 3. For each method embodied with manifold learning, we set the regularized term \(\alpha\) at the grid of \(\{10^{-3},10^{-2},10^{-1},1,10,100,1000\}\), and the k-nearest neighbors are chosen from {3, 4, 5, 6, 7}. For methods based on TD, such as TriD and HyperNTF, we take the dimension of the third direction of the core tensor to be the class number of the original data. For methods based on tensor ring decomposition, such as HGNTR and LraHGNTR, we take the product of the first order and the third order to be the class number of the original data. The remaining parameters in the comparison algorithm are adjusted on the grid taken by HNTriD. First, we run a number of numerical tests to compare the clustering effect across different datasets. Second, statistical significance comparison is performed on COIL20 and MNIST using the t-test. Third, we present 2-D visualizations of different methods for clustering results on the COIL20 dataset and then complete the comparison tests by means of the t-SNE technique46. Finally, we compare the amount of time they took to finish clustering tasks on six related real-world datasets.

Numerical comparison results

All experiments are run on the same sub-raw datasets, which are chosen at random from the corresponding database. Each experimental result is obtained only after the process has been repeated 100 times. The numerical tests, in particular, are performed in two steps. The first step is to choose a group of objects at random from the raw data and then decompose them into corresponding sub-raw data based on the parametric form of the model. To ensure that the experimental results are as accurate as possible to the real-world data clustering situation. We repeat the first step 10 times to obtain 10 groups of sub-raw data. In the second step, we use the K-means method to compute the evaluation index value for each group of sub-raw data. As before, we repeat the second step 10 times to obtain 10 evaluation values for each group of sub-raw data. Throughout the experiment, we can receive 100 evaluation index values and calculate the average value as the performance result for each method. Finally, we report the average performance in Tables 4, 5, and 6. Simultaneously, we record the time spent by each method performing clustering tasks on each dataset, and the results are shown in Figure 6.

Tables 4, 5, and 6, present experimental results demonstrating correlation algorithm clustering performance on six datasets. The advanced clustering method can be found in the tables under the quantitative clustering rules. For ease of observation, we highlight the best data in bold and the second ones in a slight underline. The experimental results presented above lead us to the following conclusion: (i) In terms of clustering performance, as measured by ACC, NMI, and PUR, our proposed method outperforms others in the majority of cases, and our experimental results are second-best, if not the best. (ii) The best experimental results in the mass are located in tensor-based methods. Because tensor methods consider more information from the raw data. (iii) The HNTriD method outperforms other tensor-based decomposition methods in most cases. Because it inherits the previous algorithm’s excellent characteristics, including TriD, and preserves the data’s multi-linear structure. Experiments show that the proposed HNTriD algorithm performs well in clustering tasks.

Statistical significance comparison

A t-test is a statistical technique used to determine if there is a significant difference between two groups of data. It functions as an important tool in hypothesis testing and aids researchers in determining whether two groups are genuinely distinct47,48. Subsequently, we examine the statistical significance of the disparity between HNTriD and some typical approaches using t-test. Similar to49, we take the significance level of \(p < 0.05\) in the t-test to draw the difference. If our approach outperforms a compared method in a comparison test and the difference is statistically significant (t-test, \(p < 0.05\)), we record it as significant better or worse for one time. If the difference between our approach and a compared method is not statistically significant, then we say that they are comparable. We use (a, b, c) to display comparison results. Three integers inside the brackets respectively correspond to the number of times that the performance of our method is significantly better than, comparable to, significantly worse than a related method. We compare 10 algorithms (including HNTriD) on both the COIL20 and MNIST datasets. In each comparison, we run all the compared algorithms 10 times, and we repeat each group of comparison experiments 10 times. Specifically, the statistical test results are presented in Tables 7 and 8.

According to comparison tests show in Table 7, our method is significantly superior to the compared methods on the COIL20 dataset. The experimental results on MNIST demonstrate a clear decline in performance compared to the experimental findings on COIL20. However, from the overview of all metrics’ evaluation, the results still demonstrate a high level of performance when compared to other approaches. Based on the information provided in Tables 7 and 8, it is evident that our method demonstrates significant statistical advancements compared to the listed methods in most cases. The statistical test findings indicate that our method has a significantly bigger advantage over the other compared ones.

Visualization on clustering tasks

In order to visually demonstrate the clustering performance of HNTriD, we present cluster visualizations of several comparable approaches to assess the data learning capability of HNTriD. In this experiment, we choose the COIL20 dataset as a representative example to conduct comparative tests on clustering tasks. We specifically select 10 categories of data for analysis. The data analysis is shown in a two-dimensional space using t-SNE, and the cluster results are displayed in Figure 5 for visual comparison.

Figure 5 demonstrates that the HNTriD method, when applied to the mulitiway dataset, is capable of effectively discerning the differences between data samples. HNTriD outperforms other approaches in visually separating sample clusters in the COIL20 dataset, while some methods fail to completely separate samples from other clusters. This strategy enhances the reliability of the clustering data comparison experiment mentioned above and confirms that the inclusion of HNTriD improves the learning capability of multiway data.

Running time comparison

From the previous experimental results (including numerical experiments, statistical significance comparison, and visualization on clustering tasks), the HNTriD model shows better data analysis performance. However, it is important to take into account the time cost when applying mathematical models in real-life situations. This means that if we can improve the efficiency of calculations while preserving the quality of data analysis, the mathematical model will be more effective in practical applications. Based on this background, we figure out the time cost and use Figure 6 to record the running time of clustering tasks for each method on six related datasets. On each dataset, we compare the computational time required by each method to complete the same numerical tests described in Subsection 4.6. Each bar in the Figure 6 represents the total time needed for a method to complete the cluster analysis of a dataset, and different colors represent different algorithms. For example, for each dataset, the time cost of HNTriD is represented in yellow.

We can deduce the following statements from the bar graph: (i) Matrix-based decomposition methods are almost always faster than tensor-based ones. Matrix-based methods have an obvious advantage in terms of running speed for there are few factors that needed to be updated due to their special arithmetic expression. (ii) When compared to general methods, manifold learning ones take longer to complete clustering tasks in most cases. This occurs because manifold learning algorithms require updating more parameters in clustering data. (iii) When compared to matrix-based algorithms, the HNTriD algorithm takes longer to cluster tasks. Given the computational complexity of the algorithm, the experimental results are consistent with our expectations. The increase in computational time is due to the construction of the hypergraph and the depiction of raw data. (iv) Among the tensor-based methods, the HNTriD algorithm’s computation speed does not fall behind while maintaining its superior performance.

Conclusions

In this paper, the proposed HNTriD method performs well in multiway data learning because it combines the advantages of hypergraph learning and TriD. By constructing hypergraphs, it can reveal the complex structural information of more complex variables hidden among raw data. When combined with the TriD model, it can retain the multi-linear structure of high-order data while mining the potential information within the data and has strong data clustering abilities. Furthermore, we use the multiplicative update method to optimize the proposed HNTriD model, and experiments show that the new algorithm is convergent. The proposed algorithm is applied to six real-world datasets for clustering analysis, including COIL20, GEORGIA, MNIST, ORL, PIE, and USPS, and the data clustering results are compared to those of several existing algorithms. The experimental results demonstrate that the proposed HNTriD method is efficient and saves time in data analysis. In our current work, our hypergraph does not change once it is generated, which may result in a less-than-ideal hypergraph learned in some data with unexpected noise. The solution to this problem, however, is outside the scope of our current work, and we hope to improve it in the future.

Data availability statements

The open datasets used in this manuscript have been linked in related parts, and the datasets generated during the current study are available from the corresponding author on reasonable request.

References

Wold, S., Esbensen, K. & Geladi, P. Principal component analysis. Chemometr. Intell. Lab. 2(1–3), 37–52 (1987).

Stewart, G. W. On the early history of the singular value decomposition. SIAM Rev. 35(4), 551–566 (1993).

Beh, E. J., & Lombardo, R. Multiple and Multiway Correspondence Analysis. Wiley Interdiscip. Rev. Comput. Stat. 11 e1464. MR3999531, (2019). https://doi.org/10.1002/wics.1464

Martinez, A. M. & Kak, A. C. PCA versus LDA. IEEE Trans. Pattern Anal. Mach. Intell. 23(2), 228–233 (2001).

Carroll, J. D. & Chang, J. J. Analysis of individual differences in multidimensional scaling via an n-way generalization of “eckart-young’’ decomposition. Psych. 35(3), 283–319 (1970).

Domanov, I. & Lathauwer, L. D. Canonical polyadic decomposition of third-order tensors: Reduction to generalized eigenvalue decomposition. SIAM J. Matrix Anal. App. 35(2), 636–660 (2014).

Kolda, T. G. & Bader, B. W. Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009).

Ceulemans, E. & Kiers, H. A. Selecting among three-mode principal component models of different types and complexities: A numerical convex hull based method. Br. J. Math. Stat. Psychol. 59(1), 133–150 (2006).

Kroonenberg, P. M. Applied Multiway Data Analysis. Wiley Series in Probability and Statistics, Wiley Interscience, Hoboken, NJ. MR2378349 (2008). https://doi.org/10.1002/9780470238004

Kiers, H. A. L. Three-way methods for the analysis of qualitative and quantitative two-way data (DSWO Press, Leiden, NL, 1989).

Kroonenberg, P. M. Multiway extensions of the SVD. Advanced studies in behaviormetrics and data science T. Imaizumi, A. Nakayama, S. Yokoyama, (eds.) 141–157 (2020)

Lombardo, R., Velden, M. & Beh, E. J. Three-way correspondence analysis in R. R J. 15(2), 237–262 (2023).

Xu, Y. Y. Alternating proximal gradient method for sparse nonnegative Tucker decomposition. Math. Program. Comput. 7, 39–70 (2015).

Yokota, T., Zdunek, R., Cichocki, A. & Yamashita, Y. Smooth nonnegative matrix and tensor factorizations for robust multi-way data analysis. Signal Process. 113, 234–249 (2015).

Wu, Q., Zhang, L. Q. & Cichocki, A. Multifactor sparse feature extraction using convolutive nonnegative Tucker decomposition. Neurocomputing 129, 17–24 (2014).

Tan, H. C., Yang, Z. X., Feng, G., Wang, W. H. & Ran, B. Correlation analysis for tensor-based traffic data imputation method. Procedia Soc. Behav. Sci. 96, 2611–2620 (2013).

Qi, L. Q., Chen, Y. N., Bakshi, M. & Zhang, X. Z. Triple decomposition and tensor recovery of third order tensors. SIAM J. Matrix Anal. Appl. 42(1), 299–329 (2021).

Cai, D., He, X. F., Han, J. W. & Huang, T. S. Graph regularized nonnegative matrix factorization for data representation. IEEE Trans. Pattern Anal. Mach. Intell. 33(8), 1548–1560 (2011).

Chen, K. Y., Che, H. J., Li, X. Q. & Leung, M. F. Graph non-negative matrix factorization with alternative smoothed l 0 regularizations. Neural Comput. Appl. 35(14), 9995–10009 (2023).

Deng, P. et al. Tri-regularized nonnegative matrix tri-factorization for co-clustering. Knowl-Based Syst. 226, 107101 (2021).

Li, C. L., Che, H. J., Leung, M. F., Liu, C. & Yan, Z. Robust multi-view non-negative matrix factorization with adaptive graph and diversity constraints. Inf. Sci. 634, 587–607 (2023).

Lv, L. S., Bardou, D., Hu, P., Liu, Y. Q. & Yu, G. H. Graph regularized nonnegative matrix factorization for link prediction in directed temporal networks using pagerank centrality. Chaos Solitons Fractals 159, 112107 (2022).

Nasiri, E., Berahmand, K. & Li, Y. F. Robust graph regularization nonnegative matrix factorization for link prediction in attributed networks. Multimed. Tools Appl. 82(3), 3745–3768 (2023).

Wang, Q., He, X., Jiang, X. & Li, X. L. Robust bi-stochastic graph regularized matrix factorization for data clustering. IEEE Trans. Pattern Anal. Mach. Intell. 44(1), 390–403 (2020).

Li, X. T., Ng, M. K., Cong, G., Ye, Y. M. & Wu, Q. Y. MR-NTD: Manifold regularization nonnegative Tucker decomposition for tensor data dimension reduction and representation. IEEE Trans. Neural Netw. Learn. Syst. 28(8), 1787–1800 (2016).

Qiu, Y. N., Zhou, G. X., Wang, Y. J., Zhang, Y. & Xie, S. L. A generalized graph regularized non-negative Tucker decomposition framework for tensor data representation. IEEE T. Cybern. 52(1), 594–607 (2020).

Liu, Q., Lu, L. & Chen, Z. Non-negative Tucker decomposition with graph regularization and smooth constraint for clustering. Pattern Recognit. 148, 110207 (2024).

Wu, F. S., Li, C. Q. & Li, Y. T. Manifold regularization nonnegative triple decomposition of tensor sets for image compression and representation. J. Optimiz. Theory App. 192(3), 979–1000 (2022).

Zeng, K., Yu, J., Li, C. H., You, J. & Jin, T. Image clustering by hyper-graph regularized non-negative matrix factorization. Neurocomputing 138, 209–217 (2014).

Wang, W. H., Qian, Y. T. & Tang, Y. Y. Hypergraph-regularized sparse NMF for hyperspectral unmixing. IEEE J. Sel. Topics Appl. Earth Obs. Remote Sens. 9(2), 681–694 (2016).

Huang, S. et al. Improved hypergraph regularized nonnegative matrix factorization with sparse representation. Pattern Recognit. Lett. 102, 8–14 (2018).

Yin, W. G., Qu, Y. Z., Ma, Z. M. & Liu, Q. Y. HyperNTF: A hypergraph regularized nonnegative tensor factorization for dimensionality reduction. Neurocomputing 512, 190–202 (2022).

Zhao, X. H., Yu, Y. Y., Zhou, G. X., Zhao, Q. B. & Sun, W. J. Fast hypergraph regularized nonnegative tensor ring decomposition based on low-rank approximation. Appl. Intell. 52(15), 17684–17707 (2022).

Huang, Z. H., Zhou, G. X., Qiu, Y. N., Yu, Y. Y. & Dai, H. A dynamic hypergraph regularized non-negative Tucker decomposition framework for multiway data analysis. Int. J. Mach. Learn. Cybern. 13(12), 3691–3710 (2022).

Kim, Y. D., & Choi, S. Nonnegative Tucker decomposition. In IEEE Comput. Vis. Pattern Recognit., pp. 1–8 (2007). IEEE

Gao, Y. et al. Hypergraph learning: Methods and practices. IEEE Trans. Pattern Anal. Mach. Intell. 44(5), 2548–2566 (2020).

Bretto, A. Hypergraph Theory (Springer, New York, 2013).

Zhang, Z. H., Bai, L., Liang, Y. H. & Hancock, E. Joint hypergraph learning and sparse regression for feature selection. Pattern Recognit. 63, 291–309 (2017).

Lee, D. D. & Seung, H. S. Learning the parts of objects by non-negative matrix factorization. Nature 401(6755), 788–791 (1999).

Zhou, D. Y., Huang, J. Y., & Schölkopf, B. Learning with hypergraphs: Clustering, classification, and embedding. Adv. Neural Inf. Process. Syst. 19 (2006)

Boyd, S., Boyd, S. P. & Vandenberghe, L. Convex Optimization (Cambridge Univ. Press, Cambridge, 2004).

Lee, D. D. & Seung, H. S. Algorithms for non-negative matrix factorization. Proc. Adv. Neural Inf. Process. Syst. 1, 556–562 (2001).

Wang, C. Y. et al. Dual hyper-graph regularized supervised NMF for selecting differentially expressed genes and tumor classification. IEEE ACM Trans. Comput. Biol. Bioinf. 18(6), 2375–2383 (2020).

Razak, F. A. The derivation of mutual information and covariance function using centered random variables. In AIP Conference Proceedings, vol. 1635, pp. 883–889 (2014). AIP

Yin, M., Gao, J. B., Xie, S. L. & Guo, Y. Multiview subspace clustering via tensorial t-product representation. IEEE Trans. Neural Netw. Learn. Syst. 30(3), 851–864 (2018).

Li, S., Li, W., Lu, H. & Li, Y. Semi-supervised non-negative matrix tri-factorization with adaptive neighbors and block-diagonal learning. Eng. Appl. Artif. Intell. 121, 106043 (2023).

Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 7, 1–30 (2006).

Huang, D., Wang, C. D. & Lai, J. H. Locally weighted ensemble clustering. IEEE Trans. Cybern. 48(5), 1460–1473 (2017).

Zhang, G. Y., Zhou, Y. R., He, X. Y., Wang, C. D. & Huang, D. One-step kernel multi-view subspace clustering. Knowl. Based Syst. 189, 105126 (2020).

Acknowledgements

Fatimah Abdul Razak is supported by Universiti Kebangsaan Malaysia under Grant GUP-2021-046. Qingshui Liao is supported by Scientific Research Foundation of Higher Education Institutions for Young Talents of Department of Education of Guizhou Province under Grant QJJ[2022]129. Qilong Liu is supported by Guizhou Provincial Basic Research Program (Natural Science) under Grant QKHJC-ZK[2023]YB245.

Author information

Authors and Affiliations

Contributions

The idea of this article is proposed by Fatimah Abdul Razak, the exact algorithms are provided by Qingshui Liao, the numerical tests are conducted by Qingshui Liao, the English writing is finished by Qingshui Liao and polished by Qilong Liu and Fatimah Abdul Razak.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liao, Q., Liu, Q. & Razak, F.A. Hypergraph regularized nonnegative triple decomposition for multiway data analysis. Sci Rep 14, 9098 (2024). https://doi.org/10.1038/s41598-024-59300-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-59300-3

Keywords

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.