Abstract

Abnormal voice may identify those at risk of post-stroke aspiration. This study was aimed to determine whether machine learning algorithms with voice recorded via a mobile device can accurately classify those with dysphagia at risk of tube feeding and post-stroke aspiration pneumonia and be used as digital biomarkers. Voice samples from patients referred for swallowing disturbance in a university-affiliated hospital were collected prospectively using a mobile device. Subjects that required tube feeding were further classified to high risk of respiratory complication, based on the voluntary cough strength and abnormal chest x-ray images. A total of 449 samples were obtained, with 234 requiring tube feeding and 113 showing high risk of respiratory complications. The eXtreme gradient boosting multimodal models that included abnormal acoustic features and clinical variables showed high sensitivity levels of 88.7% (95% CI 82.6–94.7) and 84.5% (95% CI 76.9–92.1) in the classification of those at risk of tube feeding and at high risk of respiratory complications; respectively. In both cases, voice features proved to be the strongest contributing factors in these models. Voice features may be considered as viable digital biomarkers in those at risk of respiratory complications related to post-stroke dysphagia.

Similar content being viewed by others

Introduction

Disturbed swallowing, or oropharyngeal dysphagia, commonly occurs after cerebrovascular disease, and may result in malnutrition, dehydration, and aspiration pneumonia1. These respiratory complications occur in approximately one-third of the post-stroke dysphagia population and are associated with high mortality and morbidity2. Early screening and prevention of these respiratory events may also affect prognosis; a recent study found that any previous episode of aspiration pneumonia resulted in poor stroke outcomes3.

Consequently, efforts have been made to develop a screening test that can safely and quickly predict aspiration pneumonia. Impaired gag reflex, dysphonia, weak cough, and choking after swallowing are known predictive factors4. Among these clinical signs, voice change is associated with aspiration and penetration5. A study has demonstrated that 90% of aspirators exhibit dysphonic vocal quality6, and specific voice patterns may indicate aspiration7,8,9. While speech-language pathologists and other experts can reliably detect pathological voice changes, non-experts can be inaccurate10 or miss them5. An acoustic analysis can increase the sensitivity of detecting voice changes objectively and help in clinical evaluation11,12.

Recently, machine learning (ML) and deep learning methods have been used to better predict voice disorders, achieving accuracy levels as high as 90% by using the acoustic parameters of jitter, shimmer, and noise-to-harmonic ratio (NHR)13. Another study using Gaussian mixture model system reported discriminating vocal fold disorders with 99% accuracy14. In addition, studies advocated using vocal biomarkers recorded via smartphones to classify patients with coronary artery disease and pulmonary hypertension15,16,17,18. By contrast, no study has used machine learning algorithms to identify those at risk of dysphagia and subsequent respiratory complications using vocal biomarkers. A previous study demonstrated that phonation among critically ill and intensive care unit patients helps screen aspiration, but objective acoustic features from the phonation data were not collected19. Also, despite the widespread use of mobile devices to integrate vocal biomarkers in making a patient’s diagnosis, no study has yet used these devices to analyze the voice features in these patients.

An automated system that identifies vocal biomarkers from those at risk of aspiration and thus require tube feeding in a non-invasive and easy manner using a mobile device has high potentials to be used at the bedside or in remote settings via telemedicine. Therefore, we aimed to determine if incorporating these digital voice signals, recorded via iPad tablets, into multimodal ML algorithms can accurately classify those at risk of aspiration. Because severe stroke can lead to aspiration pneumonia, we also sought to introduce the best model that classifies those at high risk of respiratory complications.

Methods

Study design and participants

This study included patients referred for swallowing disturbance for at least seven days at a university-affiliated hospital from September 2019 to June 2021. The inclusion criteria were participants with suspected swallowing disorder who were referred for swallowing assessment attributable to a brain lesion including stroke, and the ability to understand the instructions and participate in the phonation task. Participants who were unable to perform phonation or had not undergone the instrumental swallowing tests or other swallowing assessments were excluded from the study. Those with severe cognitive dysfunction that would not allow participation in the voice recording or spirometry assessment were excluded. Those with neurodegenerative disorders such as Parkinson’s disease, Alzheimer’s disease was also excluded. The Institutional Review Board (HC19EESE0060) of the Catholic University of Korea, Bucheon St. Mary’s hospital approved the use of pertinent clinical information relevant to swallowing, neurological deficit at the time of encounter, and medical record preceding the assessment to confirm for any respiratory events, including aspiration pneumonia for analysis. All the subjects’ identifying information was removed. Explanation of the study was provided to all participants verbally, with all pertinent information including the process of the voice recording. Voice recording was performed with patient’s consent during the routine swallowing assessment. After the data collection was completed, all personal information was de-identified. Any conversational component that would identify the participant were not recorded. Database was locked, and only researchers who were responsible for the software and data curation had authorization to access the data. Because this study presented no harm to subjects, took less than 2 min to complete and ensured participant privacy, the institutional review board approved the study. Informed consent was given by all participants for data collection.

Datasets from voice recording

Voice recording was performed at enrollment with a blinded assessment, where the participants underwent clinical evaluation with chief complaints of dysphagia. Contrary to previous studies, no solid or liquid boluses were provided prior to voice recording. Voice was recorded with no oral bolus swallowing. Phonation were recorded using an iPad (Apple, Cupertino, CA, USA) through an embedded microphone. A voice recorder application by Apple was used, and the sound sampling frequency was 44,100 Hz. The digitized phonation signals were band-pass filtered between 20 and 8000 Hz to use data from the entire frequency band gathered by the iPad. In each case, the smart device was positioned 20 cm from the patient’s face20. Estimation accuracy is unaffected if the microphone is positioned within 30 cm from the participant’s mouth. Acoustic signals were obtained in a quiet room to eliminate ambient sounds20. No additional equipment was used. Under the examiner’s instructions, the patient was asked to phonate a single syllable for at least 5 s with comfortable pitch and loudness. To ensure uniformity in pitch variation a single instructor provided guidance so that the participants produced phonation under a comfortable pitch and loudness. An easy-to-follow single vowel phonation was chosen in this study in consideration that some participants manifested severe neurological deficits. The vowel /e/ vowel requires an unrounded lip position and a mid-tongue position and can be performed even in those with facial palsy or tongue deviations. A minimum of three attempts was recorded. The examiner was blinded to the patient’s neurological information and did not participate in the clinical evaluation of swallowing.

Clinical parameters

Based on the findings from the instrumental swallowing test, enrolled participants were classified into two groups: (1) mild or minimal evidence of aspiration or dysphagia, who were deemed safe to undergo oral feeding, or (2) severe dysphagia with risk of aspiration that required tube feeding. The latter group was then further classified into two subgroups according to the risk of respiratory complications. This risk was stratified according to peak cough flow (PCF)21 evaluated using spirometry performed within the same day and abnormal chest x-ray images. Voluntary PCF was measured with forceful cough produced by the participants. Before the PCF measurement, the clinician provided verbal instructions to explain the method of cough production by command. The clinician then provided a live demonstration of coughing on the portable spirometer. Those with poor understanding could practice several times before a formal assessment. Voluntary PCF was measured on the peak flow meter (Micro-Plus Spirometer; Carefusion Corp.), in adherence to the guidelines recommended by the American Thoracic Society/European Respiratory Society22. The values were presented as the mean of the three highest values from the five attempts23. For voluntary PCF, cutoff values of less than 80 L/min were classified as high risk of respiratory complications24. Description on other clinical parameters is shown on Supplementary Methods S1.

Preprocessing data

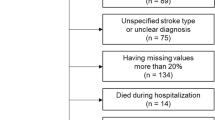

The study used an Intel i9 X-Series processor and GeForce RTX 3090 (24 GB). A two-step wise model was developed using various ML algorithms that would classify (1) the presence of severe dysphagia requiring tube feeding and (2) high risk of respiratory complications with Fig. 1 delineating the preprocessing and model developmental process.

Algorithm development. Raw data from voice signals were preprocessed after normalization. Clinical data were concatenated to the Praat features in the machine learning models. A two step-process was then used to first classify those with oral feeding versus tube feeding (algorithm 1) and, among the latter, classify those at high risk of respiratory complications (algorithm 2). ML machine learning, SVM support vector machine, GMM Gaussian mixture model, XGBoost extreme gradient boosting.

The preprocessing steps of data splitting, transformation and performance evaluation are presented in the Supplementary Methods S2.

Feature extraction

The following features were extracted using the Praat software. Jitter and shimmer values can be measured using different parameters, namely local, absolute, Relative Average Perturbation (RAP), five point-Period Perturbation Quotient (PPQ5), and ddp for jitter; and local, localdbShimmer, Amplitude Perturbation Quotient (APQ)3, APQ5, APQ11, and Dda for shimmer values, and finally the cepstral peak prominence (CPP) values. Description of these features and feature selections are described in the Supplementary Methods S3.

Multimodal model development

Age and severity of neurological deficit may affect voice features. To adjust these differences, clinical data were concatenated to the acoustic features in the ML algorithms to produce multimodal models. Among the various functional parameters, confounding variables were controlled according to the VIF, and those with high multicollinearity were excluded from the final models. To evaluate the effect of the clinical factors on the ML algorithms, we compared the addition of a clinical factor to the absence of one. The machine learning algorithms performed in this study are described in detail at the Supplementary Methods S4. All methods were carried out in accordance with relevant guidelines and regulation in model development and validation.

Statistical analysis

Because participants were assessed at the time of enrollment, no missing data required the imputation method. The Shapiro–Wilk test for normality was used to evaluate the distribution of continuous variables. Between-group analyses were conducted using the Student’s t-test, Mann–Whitney test, or chi-square test. Continuous variables were expressed as mean and standard deviation, and categorical variables were expressed as numbers with percentages. All statistical analyses were performed using R Statistical Software (version 2.15.3; R Foundation for Statistical Computing, Vienna, Austria), which can be downloaded for free from the Internet (https://www.r-project.org/). For the ML algorithms, the performance of the prediction models was evaluated by computing the AUC, sensitivity, specificity, positive predictive value, and negative predictive value using the Scikit-Learn package in Python.

Results

Demographic features

A total of 449 participants were enrolled, and based on the instrumental swallowing tests, 215 participants (47.9%) were classified as having only mild dysphagia with mild aspiration that allowed full oral feeding, while 234 participants (52.1%) were classified as having severe dysphagia and aspiration and required tube feeding. As shown in Table 1, those with tube feeding exhibited severe neurological deficits and poor swallowing function, confirmed by the instrumental swallowing tests.

Among those with tube feeding, 113 participants (48.3%) in the high risk (higher risk for respiratory complications) showed abnormal chest x-ray findings with significantly low voluntary cough strength (52.4 ± 20.0 L/min) compared to those with low risk (lower risk for respiratory complications) (175.1 ± 85.2 L/min) (Table 2). Among these patients, 93% (n = 105) had been linked to a respiratory disorder, such as confirmed aspiration pneumonia (n = 98) or pleural effusion or bronchitis (n = 7) after dysphagia onset. The SNR of the voice files were in the range 55–70 dB, which indicate that the background noise was minimal. The high-risk group showed higher values of standard deviation of the fundamental frequency, frequency and amplitude perturbation, and noise parameters than the low-risk group. Figure 1 shows how clinical data were concatenated to the acoustic features in the ML algorithms to produce multimodal models. Figure 2 shows the relationship between the voice features and clinical parameters.

Correlation analysis between the Praat features and the clinical parameters. The correlation graph shows that nearly all the voice features showed significant association with the clinical parameters, especially with those related to swallowing, and peak cough flow values. An exception was observed with the fundamental frequencies, which failed to show any association with the clinical parameters. *p < 0.05; **p < 0.01; ***p < 0.001. HNR harmonic to noise ratio, F0 fundamental frequency, MBI modified barthel index, NIHSS national institutes of health stroke scale. F0 fundamental frequency, SD standard deviation, APQ amplitude perturbation quotient, PPQ period perturbation quotient, RAP relative average perturbation, PAS penetration-aspiration scale, NIHSS national institutes of health stroke scale, HNR harmonic to noise ratio, MASA mann assessment of swallowing ability, FOIS functional oral intake scale, PCF peak cough flow, MMSE mini-mental state examination, MBI modified barthel index.

Severity of dysphagia, tube feeding risk classification (algorithm 1)

Table 3 shows that among the various ML models, the XGBoost model showed the highest sensitivity (72.7%; 95% CI 64.3–81.0%) and AUC (0.78; 95% CI 0.73–0.82) levels with the selected Praat features. After testing for multicollinearity, age, weight, and NIHSS score were selected to evaluate the performance of the models with the voice parameters. The multimodal models showed improved diagnostic properties, with the XGBoost model again showing the highest sensitivity (88.7%; 95% CI 82.6–94.7%) and AUC (0.85; 95% CI 0.82–0.89) values (Fig. 3a).

AUC-ROC curve of the XGBoost model for classifying tube feeding and risk of respiratory complications. AUC-ROC curves show that multimodal models that combine phonation and clinical data demonstrate high levels of AUC in classifying (a) risk of tube feeding and (b) respiratory complications. AUC area under curve, ROC receiver operating characteristic.

Respiratory risk classification (algorithm 2)

Table 4 shows the performance of the ML algorithms for the risk classification of respiratory complications with Praat features. The XGBoost model's algorithms showed the highest sensitivity (76.5%; 95% CI 68.2%-84.8%) and AUC (0.74; 95% CI 0.66–0.81) levels. MBI was selected as a clinical feature to evaluate the risk of aspiration pneumonia, while other factors, including MMSE, were not used in this algorithm because of multicollinearity with other variances. With the inclusion of MBI, age and weight in the models, the sensitivity of the XGBoost model increased to 84.5% (95% CI 76.9–92.1%), and the AUC increased to 0.84 (95% CI 0.81–0.87) (Fig. 3b).

Feature contribution

Feature scoring is frequently used to interpret ML algorithms. The XGBoost algorithm counts out the importance by gain, frequency, and cover. Gain is the measured value of the contribution to each tree of an ensemble model. Cover is the relatively measured value of the observed value through the leaf node of each tree in the model. Frequency is the measured value as to how frequently each independent variable is used decisively in the model. We choose gain to calculate the feature importance score. Figure 4 shows how Praat and clinical features contributed to the classification in the XGBoost model. Among these, the RAP and APQ11Shimmer were major features, even after the inclusion of other clinical features to the model.

Feature importance analysis. Feature importance analysis from the XGBoost with plots demonstrating that APQ11Shimmer and RAP values are the major features even after including the clinical variables in (a) classifying those with tube feeding and (b) at risk of respiratory complications. XGBoost extreme gradient boosting, RAP relative average perturbation, APQ amplitude perturbation quotient, HNR harmonic to noise ratio, F0 fundamental frequency, MBI modified barthel index, NIHSS national institutes of health stroke scale.

Discussion

This study demonstrated that the acoustic parameters recorded via a mobile device may help distinguish post-stroke patients at high risk of respiratory complications. Among various ML models, the XGBoost multimodal model that included acoustic parameters, age, weight, and NIHSS score, showed an AUC of 0.85 and high sensitivity levels of 88.7% in the classification of those with tube feeding and high risk of aspiration. A second model showed an AUC of 0.84 and high sensitivity levels of 84.5% in the classification of those at risk of respiratory complications. Among these parameters, APQ11shimmer and RAP proved to be the strongest contributing factors. Our results are consistent with recent work advocating for the use of vocal biomarkers for various medical15,16 and neurological disorders. These algorithms could facilitate the early identification of those at risk of aspiration and help prevent respiratory complications in an automated and objective manner.

Comparative analysis of different data input types shows that a multimodal combination approach with clinical factors can improve model performance. Previous studies have advocated the combination of clinical and demographic data with voice signals to distinguish different voice pathologies25. Such multimodal learning models have proven successful in the classifying pathological voice disorders such as neoplasms, phonotrauma, and vocal palsy26. Considering that post-stroke pneumonia is multifactorial27, it may be too far-fetched to conclude that simple vowel phonation could classify aspiration risk, necessitating the development of multimodal algorithms. Following these previous studies, the multimodal models in this study showed the highest sensitivity level up to 88.7%. These were promising results considering that subjective methods in swallowing investigation can only detect 40% of aspiration occurrences28. The clinical factors used in this study were already known to predict the risk of aspiration pneumonia, with NIHSS known to be a useful parameter for predicting dysphagia in stroke patients29,30 and sub-score of MBI or levels of disability to be associated with aspiration pneumonia27,31.

One of the key characteristics of this study was to classify the risk of respiratory complications based on the spirometry findings. In contrast, previous studies have attempted to use acoustic features to classify the presence/absence of aspiration per se from instrumental assessments, which have shown inconsistent diagnostic properties11,12. As in phonation, complete glottic closure is an essential mechanism for generating a strong cough32. Smith-Hammond and Goldstein reported that objective measurements of voluntary cough could be used to help identify at-risk of aspiration in stroke patients8. Not all patients with abnormal swallow aspirate, with only one-third showing aspiration on VFSS2,33,34. Similarly, not all who aspirate develop aspiration pneumonia; only one-third do. Therefore, in this study, the risk of respiratory complication was not classified based on presence of aspiration but the strength of the voluntary cough and abnormal chest images. 93% of those in the high-risk group had confirmed aspiration pneumonia proving the validity of these measures to assess those at risk of respiratory complications.

Another important characteristic is that we incorporated acoustic features into the ML algorithms. Among these models, XGBoost showed the best accuracy and had higher learning potential than the other models, which was expected because of the structure that this technique is a nonlinear model with larger dimensions and has shown excellent performance in other clinical studies35,36. From these XGBoost algorithms, the APQ11shimmer and RAP were shown to be the two major Praat features even after controlling other clinical factors that may increase respiratory complications, indicating that these two features may be used as potential biomarkers to distinguish those at high-risk respiratory complications.

Past studies on which acoustic parameters can best predict aspiration11 have shown conflicting results12.

Our results showed consistent findings that the RAP and APQ11shimmer to be the most vital contributing factors in classifying those with tube feeding and those at high risk of respiratory complications, though some differences were observed in the rank of these features in each model. For example, the RAP was the most crucial contributor to classifying those with severe dysphagia that would require tube feeding, who also showed a more severe grade of an aspiration than those with mild dysphagia. RAP is one of the best jitter parameters that reflects the perceptual pitch and increases when the glottis is in contact with material or secretion5 and is therefore very likely to be the most significant contributor in classifying those at high risk of aspiration. Our results correspond to previous studies that have shown the RAP to show significant changes after aspiration12 with high sensitivity levels11.

By contrast, the APQ11 shimmer was the most critical contributor in classifying those at risk of respiratory complications. Our findings agree with the fact that among the amplitude perturbation parameters, APQ11shimmer is known to reflect better the decreased glottic control than the APQ3 or APQ5. Proper glottic closure is related to the cough force, and its impairment can lead to improper secretion clearance and aspiration pneumonia32. Our results support the role of APQ11shimmer as a marker that reflects glottic dysfunction that plays a most significant role in the model to classify those at high risk of respiratory complications. This strong association of APQ11shimmer with glottic dysfunction was further proven in a previous machine learning study classifying glottic cancer37. A final point of interest was while CPP has been pointed to provide a solid basis to quantitatively approach dysphonia severity, this was not observed in this study. The CPP showed high VIF values and therefore were not eligible to be included in the ML algorithms38.

In this study, voice recordings were easily attained with no adverse effects or technical difficulties. Recording with mobile devices eliminates the potential risk of bolus aspiration and does not require the use of special equipment, such as a sensor or accelerometer39,40. The methods proposed in this study differed from past studies in that the voice recording was performed without the introduction of additional bolus material and posed no additional threat of aspiration and thus can be safely performed even on those at risk of aspiration pneumonia. Also, the main objective of our algorithms differed from past studies; we attempted to classify those with severe dysphagia or risk of respiratory complications, while past studies attempted only to detect the presence of aspiration per se via voice changes after a bolus swallow. Additionally, voice recordings can easily be performed on severely ill patients to obtain objective voice biomarkers. In a broader context, this novel method to assess patients admitted to the intensive care unit or in the emergency setting, which require an easy but noninvasive bedside evaluation to screen for the risk of dysphagia and aspiration pneumonia19 before referral for instrumental tests.

Our study has some limitations. First, only those with brain lesions were included in the study; and those with any degree of dysphagia referred for assessment were used for model development with no comparison to a healthy normal population. However, our ongoing studies on deep learning models include acoustic analysis data from a healthy population and attempt to distinguish dysphagic voices from normal ones. Second, while those undergoing FEES underwent phonation recording simultaneously, there was a lapse in those who underwent VFSS with a median interval of four days. Future studies that assess voice change 24 h after stroke onset upon hospital arrival would better reflect early voice change in predicting dysphagia and aspiration risk. Third, a single vowel phonation of /e/ was evaluated in this study. Previous studies have shown that the corner vowels /i/, /o/, or /u/ have differences in the acoustic parameters, and the logarithmic energy during vowel phonation was also proven to show differences41. The acoustic parameters from this vowel showed good AUC values, but whether multiple vowels can help increase the accuracy is a topic that warrants more future studies. Finally, despite the high AUC, the sensitivity levels for the second algorithm showed wide confidence intervals. Future studies that combine voice signals with other patient-generated health data to further increase these diagnostic properties are warranted.

In conclusion, voice parameters obtained via a mobile device under controlled settings can help to classify those at risk of respiratory complications with high sensitivity and accuracy levels. Whether our novel algorithms using mobile devices can help identify those at a high risk of respiratory complications, allowing for early referral to respiratory experts and subsequently reducing aspiration pneumonia is a topic that needs to be explored in future large-scale multi-center prospective studies.

Data availability

Data may be available upon special request to the corresponding authors. Scripts, including the method of feature extract, the preprocessing method, and ML algorithms, on GitHub: https://github.com/ruaeh/Dysphagia-ML.

Change history

16 December 2022

A Correction to this paper has been published: https://doi.org/10.1038/s41598-022-26224-9

Abbreviations

- APQ:

-

Amplitude perturbation quotient

- AUC:

-

Area under the curve

- CI:

-

Confidence interval

- CPP:

-

Cepstral peak prominence

- DT:

-

Decision tree

- F0:

-

Fundamental frequency

- FEES:

-

Fiberoptic endoscopic evaluation of swallowing

- GMM:

-

Gaussian mixture model

- HNR:

-

Harmonic-to-noise ratio

- LR:

-

Logistic regression

- ML:

-

Machine learning

- MBI:

-

Modified Barthel index

- MMSE:

-

Mini-mental state examination

- NIHSS:

-

National Institutes of Health Stroke Scale

- NHR:

-

Noise-to-harmonic ratio

- RAP:

-

Relative average perturbation

- RF:

-

Random forest

- PCF:

-

Peak cough flow

- PPQ5:

-

Five point-period perturbation quotient

- SNR:

-

Signal-to-noise ratio

- SVM:

-

Support vector machine

- VFSS:

-

Videofluoroscopic swallowing study

- VIF:

-

Variance inflation factor

- XGBoost:

-

EXtreme gradient boost

References

Warnecke, T. et al. Neurogenic dysphagia: Systematic review and proposal of a classification system. Neurology 96, e876–e889 (2021).

Armstrong, J. R. & Mosher, B. D. Aspiration pneumonia after stroke: Intervention and prevention. Neurohospitalist 1, 85–93 (2011).

Park, H. Y. et al. Potential Prognostic Impact of Dopamine Receptor D1 (rs4532) polymorphism in post-stroke outcome in the elderly. Front. Neurol. 12, 675060 (2021).

Daniels, S. K., Ballo, L. A., Mahoney, M. C. & Foundas, A. L. Clinical predictors of dysphagia and aspiration risk: outcome measures in acute stroke patients. Arch. Phys. Med. Rehabil. 81, 1030–1033 (2000).

Groves-Wright, K. J., Boyce, S. & Kelchner, L. Perception of wet vocal quality in identifying penetration/aspiration during swallowing. J. Speech. Lang. Hear. Res. 53, 620–632 (2010).

Homer, J., Massey, E. W., Riski, J. E., Lathrop, D. L. & Chase, K. N. Aspiration following stroke: Clinical correlates and outcome. Neurology 38, 1359–1359 (1988).

McCullough, G. H., Wertz, R. T. & Rosenbek, J. C. Sensitivity and specificity of clinical/bedside examination signs for detecting aspiration in adults subsequent to stroke. J. Commun. Disord. 34, 55–72 (2001).

Smith Hammond, C. A. et al. Predicting aspiration in patients with ischemic stroke: comparison of clinical signs and aerodynamic measures of voluntary cough. Chest 135, 769–777 (2009).

Warms, T. & Richards, J. “Wet Voice” as a predictor of penetration and aspiration in oropharyngeal dysphagia. Dysphagia 15, 84–88 (2000).

Groves-Wright, K. J. Acoustics and Perception of Wet Vocal Quality in Identifying Penetration/Aspiration During Swallowing (University of Cincinnati, 2007).

Ryu, J. S., Park, S. R. & Choi, K. H. Prediction of laryngeal aspiration using voice analysis. Am. J. Phys. Med. Rehabil. 83, 753–757 (2004).

Kang, Y. A., Kim, J., Jee, S. J., Jo, C. W. & Koo, B. S. Detection of voice changes due to aspiration via acoustic voice analysis. Auris Nasus Larynx 45, 801–806 (2018).

Dankovičová, Z., Sovák, D., Drotár, P. & Vokorokos, L. Machine learning approach to dysphonia detection. Appl. Sci. 8, 1927 (2018).

Ali, Z., Hossain, M. S., Muhammad, G. & Sangaiah, A. K. An intelligent healthcare system for detection and classification to discriminate vocal fold disorders. Future Gener. Comput. Syst. 85, 19–28 (2018).

Maor, E. et al. Voice signal characteristics are independently associated with coronary artery disease. Mayo Clin. Proc. 93, 840–847 (2018).

Sara, J. D. S. et al. Non-invasive vocal biomarker is associated with pulmonary hypertension. PLoS ONE 15, e0231441 (2020).

Manfredi, C. et al. Smartphones offer new opportunities in clinical voice research. J. Voice 31(111), 111-e111-112 (2017).

Petrizzo, D. & Popolo, P. S. Smartphone use in clinical voice recording and acoustic analysis: a literature review. J. Voice 35, 499 e423-499 e428 (2021).

Festic, E. et al. Novel bedside phonetic evaluation to identify dysphagia and aspiration risk. Chest 149, 649–659 (2016).

Umayahara, Y. et al. A mobile cough strength evaluation device using cough sounds. Sensors (Basel) 18, 3810 (2018).

Kulnik, S. T. et al. Higher cough flow is associated with lower risk of pneumonia in acute stroke. Thorax 71, 474–475 (2016).

American Thoracic Society/European Respiratory, S. ATS/ERS Statement on respiratory muscle testing. Am. J. Respir. Crit. Care Med. 166, 518–624 (2002).

Park, G. Y. et al. Decreased diaphragm excursion in stroke patients with dysphagia as assessed by M-mode sonography. Arch. Phys. Med. Rehabil. 96, 114–121 (2015).

Sohn, D. et al. Determining peak cough flow cutoff values to predict aspiration pneumonia among patients with dysphagia using the citric acid reflexive cough test. Arch. Phys. Med. Rehabil. 99, 2532-2539 e2531 (2018).

Fang, S.-H., Wang, C.-T., Chen, J.-Y., Tsao, Y. & Lin, F.-C. Combining acoustic signals and medical records to improve pathological voice classification. APSIPA Trans. Signal Inf. Process. 8, e14 (2019).

Mroueh, Y., Marcheret, E. & Goel, V. Deep multimodal learning for audio-visual speech recognition. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2130–2134 (2015).

Mann, G., Hankey, G. J. & Cameron, D. Swallowing function after stroke: Prognosis and prognostic factors at 6 months. Stroke 30, 744–748 (1999).

Splaingard, M. L., Hutchins, B., Sulton, L. D. & Chaudhuri, G. Aspiration in rehabilitation patients: Videofluoroscopy vs bedside clinical assessment. Arch. Phys. Med. Rehabil. 69, 637–640 (1988).

Henke, C., Foerch, C. & Lapa, S. Early screening parameters for dysphagia in acute ischemic stroke. Cerebrovasc. Dis. 44, 285–290 (2017).

Jeyaseelan, R. D., Vargo, M. M. & Chae, J. National Institutes of Health Stroke Scale (NIHSS) as an early predictor of poststroke dysphagia. PM R 7, 593–598 (2015).

Yu, K. J. & Park, D. Clinical characteristics of dysphagic stroke patients with salivary aspiration: A STROBE-compliant retrospective study. Medicine (Baltimore) 98, e14977 (2019).

Han, Y. J., Jang, Y. J., Park, G. Y., Joo, Y. H. & Im, S. Role of injection laryngoplasty in preventing post-stroke aspiration pneumonia, case series report. Medicine (Baltimore) 99, 19220 (2020).

Hammond, C. A. S. & Goldstein, L. B. Cough and aspiration of food and liquids due to oral-pharyngeal dysphagia: ACCP evidence-based clinical practice guidelines. Chest 129, 154S-168S (2006).

McCullough, G. H. et al. Utility of clinical swallowing examination measures for detecting aspiration post-stroke. J. Speech. Lang. Hear. Res. 48, 1280–1293 (2005).

Xu, Y. et al. Extreme gradient boosting model has a better performance in predicting the risk of 90-day readmissions in patients with ischaemic stroke. J. Stroke Cerebrovasc. Dis. 28, 104441 (2019).

Li, X. et al. Using machine learning to predict stroke-associated pneumonia in Chinese acute ischaemic stroke patients. Eur. J. Neurol. 27, 1656–1663 (2020).

Kim, H. et al. Convolutional neural network classifies pathological voice change in laryngeal cancer with high accuracy. J. Clin. Med. 9, 3415 (2020).

Maryn, Y., Roy, N., De Bodt, M., Van Cauwenberge, P. & Corthals, P. Acoustic measurement of overall voice quality: a meta-analysis. J. Acoust. Soc. Am. 126, 2619–2634 (2009).

Dudik, J. M., Kurosu, A., Coyle, J. L. & Sejdic, E. Dysphagia and its effects on swallowing sounds and vibrations in adults. Biomed. Eng. Online 17, 69 (2018).

Khalifa, Y., Coyle, J. L. & Sejdic, E. Non-invasive identification of swallows via deep learning in high resolution cervical auscultation recordings. Sci. Rep. 10, 8704 (2020).

Roldan-Vasco, S., Orozco-Duque, A., Suarez-Escudero, J. C. & Orozco-Arroyave, J. R. Machine learning based analysis of speech dimensions in functional oropharyngeal dysphagia. Comput. Methods Programs Biomed. 208, 106248 (2021).

Acknowledgements

We would like to thank Ms Sun Min Kim (MA, SLP) for her assistance with the acoustic analysis.

Funding

This work was supported by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (no. 2020R1F1A106581413), by the Po-Ca Networking Groups funded by The Postech-Catholic Biomedical Engineering Institute (No. 5-2020-B0001-00050) and by the Priority Research Centers Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2020R1A6A1A03047902).

Author information

Authors and Affiliations

Contributions

Concept and design: S.L. and S.I. Acquisition, analysis, or interpretation of data: D.P. and S.I. Drafting of the manuscript: D.P., H-Y.P., and S.I. Critical revision of the manuscript for important intellectual content: S.L and H.S.K. Statistical analysis: D.P. and S.I. Obtained funding: S.L. and S.I. Administrative, technical, or material support: D.P. and S.I. Supervision: S.L. and S.I.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article contained an error in the Author Information section. “These authors contributed equally: Hae-Yeon Park, DoGyeom Park, Seungchul Lee and Sun Im.” now reads: “These authors contributed equally: Hae-Yeon Park and DoGyeom Park. These authors jointly supervised this work: Seungchul Lee and Sun Im.” In addition, the Article contained an error in the Funding section. It now reads: “This work was supported by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (no. 2020R1F1A106581413), by the Po-Ca Networking Groups funded by The Postech-Catholic Biomedical Engineering Institute (No. 5-2020-B0001-00050) and by the Priority Research Centers Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2020R1A6A1A03047902).”

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Park, HY., Park, D., Kang, H.S. et al. Post-stroke respiratory complications using machine learning with voice features from mobile devices. Sci Rep 12, 16682 (2022). https://doi.org/10.1038/s41598-022-20348-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-20348-8

This article is cited by

-

Prediction of dysphagia aspiration through machine learning-based analysis of patients’ postprandial voices

Journal of NeuroEngineering and Rehabilitation (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.