Abstract

Guilt is a negative emotion elicited by realizing one has caused actual or perceived harm to another person. One of guilt’s primary functions is to signal that one is aware of the harm that was caused and regrets it, an indication that the harm will not be repeated. Verbal expressions of guilt are often deemed insufficient by observers when not accompanied by nonverbal signals such as facial expression, gesture, posture, or gaze. Some research has investigated isolated nonverbal expressions in guilt; however, none to date has explored multiple nonverbal channels simultaneously. This study explored facial expression, gesture, posture, and gaze during the real-time experience of guilt when response demands are minimal. Healthy adults completed a novel task involving watching videos designed to elicit guilt, as well as comparison emotions. During the video task, participants were continuously recorded to capture nonverbal behaviour, which was then analyzed via automated facial expression software. We found that while feeling guilt, individuals engaged less in several nonverbal behaviours than they did while experiencing the comparison emotions. This may reflect the highly social aspect of guilt, suggesting that an audience is required to prompt a guilt display, or may suggest that guilt does not have clear nonverbal correlates.

Introduction

Guilt is the emotional consequence of realizing that through one’s action or inaction one is or could be responsible for an actual harm occurring to another person1,2. Guilt serves two social functions: to discourage antisocial behaviour and encourage prosocial behaviours3. It is the prosocial action of apology and acknowledging guilt that necessitates guilt’s successful, genuine conveyance to observers4,5. Conveyance of sincere feelings of guilt has often been found to involve nonverbal expressions, such as kneeling or crying6. However, to date little research has established the specific ways in which guilt may be conveyed by the face and body.

Nonverbal expressions of emotion

Emotion is often conveyed by language and non-language qualities of the voice, but the expression of emotion is enhanced by nonverbal behaviours, which are universal in humans, though precise expressions are culturally bound7,8,9. Patterns of nonverbal activity that signify different emotional states have attracted a great deal of scrutiny not only from the scientific community, but also from law enforcement, the media, public relations, and many others10,11. Four channels of nonverbal behaviour have been particularly studied in this area. The most frequently studied is facial expression, the movement of face muscles alone and in concert to create emotionally meaningful positions, such as a smile or furrowed brows12. Gestures involve the action of parts of the body alone or together to create or enhance emotional messages to the observer (e.g., giving a thumbs up to indicate that all is well)13. Closely related is posture, the position of the body or part of the body, which can convey gross emotional states, as in leaning the upper body and head away from an observer to convey discomfort14,15. Finally, gaze, the direction of the eyes at or away from an observer or the stimulus, can signal approach or avoidance intention16,17. Together, these nonverbal signals can produce a display of a person’s thoughts and emotions better than any single nonverbal signal alone16,18.

Nonverbal expressions of guilt

Guilt drives reparative behaviour, that is, actions that seek to fix the harm caused, and one of the primary reparative actions is apology19,20. For an apology to successfully repair a harm, it must be perceived by the recipient as sincere and driven by genuine intentions to right the wrong and to not behave similarly in future20,21. Successfully conveying that one feels guilt has been shown to be effective in convincing the observer that the apology is authentic and backed by the intention to not cause harm again6,22. Observers of apologies often look to extraverbal signifiers to affirm that the guilt expressed is sincere. The failure to convey guilt or remorse nonverbally has been associated with perceptions of insincerity and manipulativeness23,24. Conversely, behaviours such as tears, negative facial expressions, and postural slumping have been associated with increased judgements of emotional sincerity, and more positive evaluations of the transgressor5,6.

Existing studies of the nonverbal expression of guilt are rare. Of the two studies that have investigated facial expression of guilt, one did not identify a unique facial expression of guilt25. The other identified lowering of the brow as important both for expressors of guilt and for observers to read guilt in expressors, while stretching of the lips was observed in expressors but not fundamental to observers26. Julle-Danière et al.26 also identified three gestures as key to guilt expression: touching the neck with one hand, nodding, and turning the head away. No studies have investigated posture specific to guilt; however, studies of the two related emotions of embarrassment and shame have suggested a collapsed, diminished posture, with shoulders pulled down and towards the midline of the chest and head tilted downwards27,28,29. Gaze aversion has been associated with guilt in some studies, though results are mixed30,31,32.

The present study

Though expressing guilt is a fundamental aspect of its social purpose, no studies to date have investigated the combination of nonverbal behaviours (e.g. facial expression, gaze, gesture, and posture) associated with feeling guilty. The present study sought to fill this gap by identifying whether there is a distinct nonverbal expression associated with the real-time experience of guilt in healthy adults. We hypothesized that there would be a unique nonverbal signature of guilt that was distinct from other emotions. Based on the existing literature around guilt, as well as shame and embarrassment, we predicted that the pattern of behaviours elicited during the experience of guilt would convey submission and contrition to an observer. That is, the face would display a negative aspect, gestures would involve touching the head or neck and aversion of the head, posture would be slumped and diminished, and gaze would be averted. To this end, healthy adults took part in a video task designed to elicit guilt and comparison emotions while continuous discreet recordings were made of their faces and upper bodies. These were then analysed via automated analysis software and trained behavioural coders.

Method

Participant characteristics and enrollment

The sample comprised healthy adults recruited in London, Ontario, Canada between late 2017 and early 2020 as described in Stewart et al. (2023)33. Participants were recruited through word of mouth, as well as advertisements placed throughout the community that invited participants to take part in research on emotion. Inclusion criteria included: age 18 to 80, normal or corrected to normal vision, normal or corrected to normal hearing, and fluency in English. Exclusion criteria included current major neurological or psychological disorder, including movement disorders. All study procedures were approved by the University of Western Ontario Research Ethics Board. Participants provided written informed consent prior to undertaking study procedures and were compensated for their time.

Sample size calculations

Using linear regression procedures, a targeted sample size of N = 79 was retrospectively identified as sufficient to maintain a minimum power (1 − β) of 0.95 and detect a medium effect size between 0.30 and 0.36 with alpha = 0.05. Power calculations were performed using G*Power 3.1.9.7 (ref. 34) with 1 group and 10 response variables. The power calculation was based upon estimates from a similar study which detected significant group effects with effect size between f² = 0.30 and f² = 0.36 when investigating the postural and gestural expression of shame relative to pride29.

Stimuli

Opinions and behaviour questionnaire

Participants completed a 103-item computer questionnaire on topics such as charity involvement, conservation of the environment, and identification with national identity. This questionnaire was developed by the authors based on similar questionnaires created by Statistics Canada35. Depending on the question, answers were given using yes/no, a scale from 1 (not at all) to 5 (very much), multiple choice, or free answer (see “supplemental material” for examples). Prior to the questionnaire, participants were informed that they would receive feedback about themselves in comparison to others based on their responses (see below).

Feedback statements

A short statement which purported to be derived from the above questionnaire was presented before each video. Every participant received standard feedback statements regardless of their responses to these questions (see “supplemental material”). Before undertaking the video task, participants were instructed that they would see the feedback statements, which would provide accurate feedback about themselves and their behaviours based on comparisons to prior participants and Statistics Canada. Feedback statements were developed to connect the content of the video with the past and present actions and opinions of the participant; on their own, statements did not induce their associated emotion, but supported it in combination with the video content. For example, before a video about food wastage, a participant would see “You waste much more food than average,” while a video about charitable donation would be preceded by, “You donate less than the average Canadian.” Feedback statements were either broad statements that an average person could not easily falsify about themselves, such as “You could do more to help people in Canada,” or put the subject in comparison with an average other, with the assumption that most people could not accurately know their relative place, such as “You think peacekeeping is as important as most Canadians.”

Video clips

Forty short video clips drawn from advertising campaigns, online home videos, films, and television shows were chosen to elicit the emotions of guilt, amusement, disgust, neutral, pride, and sadness (see “supplemental material”). Comparison emotions were selected to contrast guilt with closely related emotions (disgust and sadness), a distinct emotion (amusement), a social emotion (pride), and a baseline unemotional state (neutral). We selected 10 videos to elicit guilt, while 6 videos were chosen for each of the comparison emotions. Each clip was selected by the authors and 14 individuals (8 women, 6 men) took part in a pilot study to ensure that each clip reliably elicited its target emotion, as well as to ensure that intensity, arousal, and valence ratings were consistent across emotions (see “supplemental material”). Time windows in each video during which the emotion occurred the most strongly were also identified during the pilot study using CARMA video rating software, a continuous affective rating system similar to an affective rating dial36. Only these peak emotion windows were used in later analysis37. Video clip durations were between 20 s and 2 min, with an average duration of 1 min.

Procedure

Following informed consent procedures and the collection of demographic information, participants were seated in a comfortable chair in front of a computer monitor and instructed to complete the opinions and behaviours questionnaire independently. Following questionnaire completion, the webcam was turned on and adjusted to ensure that the participant’s entire head and upper chest were visible in frame, and the participant received the full task instructions. During data collection a researcher was positioned behind a standing screen to respond to questions, concerns, or distress during the study while reducing distraction for the participant.

Video task

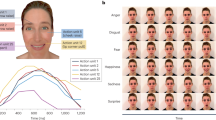

The task was programmed and run in E-Prime version 3.0 (Psychology Software Tools, Pittsburgh, PA). Participants were presented with a single feedback statement in the centre of the screen, which would remain until the participant clicked to acknowledge it. The linked video clip would then play. After the conclusion of the video, a black screen would appear and last for ten seconds, during which the participant was instructed to think about the video contents and how it made them feel. Participants were then presented with a list of 12 emotion words and asked to select the primary emotion experienced during the video clip (Table 1); only one word could be selected, and participants were instructed to choose the strongest emotion felt (endorsed emotion). This list contained the six target emotions as well as additional words potentially related to guilt (anger, contempt, embarrassment, shame), and the remaining basic emotions (fear, happiness).

After endorsing their primary emotion, participants were presented with the same list and instructed to select any additional emotions that they felt during the video clip; participants were allowed to choose as many words as they needed to describe their emotional experience, or none. This was followed by a rest period denoted by a white screen lasting 20 s. This procedure was repeated in an individually randomized order until all 40 videos had been watched (Fig. 1). There were no explicit requirements for an apology or reparative action.
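
The trial sequence described above can be sketched in Python for illustration. This is not the E-Prime script used in the study: the names `CLIPS_PER_EMOTION`, `TRIAL_PHASES`, and `build_trial_order`, and the use of a per-participant seed, are hypothetical. Only the clip counts and the 10 s and 20 s screen durations are taken from the text.

```python
import random

# Clip counts stated in the text: 10 guilt clips, 6 for each comparison emotion.
CLIPS_PER_EMOTION = {
    "guilt": 10, "amusement": 6, "disgust": 6,
    "neutral": 6, "pride": 6, "sadness": 6,
}

# Phases of a single trial, in order; durations in seconds where the text gives one.
TRIAL_PHASES = [
    ("feedback_statement", None),        # remains until the participant clicks
    ("video_clip", None),                # 20 s to 2 min depending on the clip
    ("black_screen_reflection", 10),
    ("primary_emotion_choice", None),    # exactly one of 12 emotion words
    ("additional_emotions_choice", None),  # any number of words, or none
    ("white_screen_rest", 20),
]

def build_trial_order(participant_seed):
    """Return an individually randomized list of (emotion, clip_index) pairs."""
    clips = [(emotion, i)
             for emotion, n in CLIPS_PER_EMOTION.items()
             for i in range(n)]
    rng = random.Random(participant_seed)  # hypothetical per-participant seed
    rng.shuffle(clips)
    return clips

order = build_trial_order(participant_seed=1)
print(len(order))  # 40
```

Each participant sees the same 40 clips; only the order differs between participants.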

Debrief

Following the conclusion of the video task, a deception check was carried out. Participants rated whether they believed that on average the feedback statements they received were accurate and applied to them on a scale from 1 (agree strongly) to 5 (disagree strongly). Participants were then debriefed about the deception in use during the course of the study and given the opportunity to withdraw their consent to be included in the final analysis.

Video recording, data cleaning, and analysis

The entire task was filmed with an MWay 720p webcam mounted unobtrusively atop the computer monitor on which stimuli were displayed. The recording was captured using Biopac’s AcqKnowledge linked media recording function (Biopac Systems Inc., Goleta, CA). Participants were instructed to maintain a frontward facing attitude to ensure minimal rotation and good quality recordings of facial movements. The section of peak emotion identified by the pilot study for each video (see video clips section) was clipped from the total recording using OpenShot Video Editor v2.5.1 (ref. 38) and precise timepoints provided by AcqKnowledge.

Action unit analysis

Recordings with a resolution of 640 × 480 at 33 frames per second were saved as MP4 files and analyzed frame by frame in FaceReader 8.1 (Noldus Information Technology, Wageningen, The Netherlands), automated facial expression analysis software that has been shown to be a reliable and valid method for analyzing facial expression data39,40. FaceReader was set to detect each of the twenty most common facial Action Units (AUs), which were reported on a scale between 0 (not present) and 1 (maximally present). Frame by frame AU data was averaged across the entire analysis window, and scores for individual videos were averaged across all videos of the same emotion as endorsed by the participants to create a composite score for each AU for each emotion.
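
The two-stage averaging described above (frames within a window, then videos within an endorsed emotion) can be sketched as follows. This is a minimal illustration rather than the authors' pipeline; the function name `au_composites`, the input layout, and the example intensities are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def au_composites(videos):
    """Composite score for one AU and one participant.

    videos: list of dicts with 'endorsed_emotion' (str) and 'frames'
    (frame-level AU intensities between 0 and 1 within the analysis window).
    """
    per_emotion = defaultdict(list)
    for video in videos:
        # Stage 1: average frame-level intensity across the analysis window.
        per_emotion[video["endorsed_emotion"]].append(mean(video["frames"]))
    # Stage 2: average the per-video means within each endorsed emotion.
    return {emotion: mean(scores) for emotion, scores in per_emotion.items()}

# Hypothetical intensities for a single AU
example = [
    {"endorsed_emotion": "guilt", "frames": [0.0, 0.2, 0.4]},
    {"endorsed_emotion": "guilt", "frames": [0.1, 0.1]},
    {"endorsed_emotion": "disgust", "frames": [0.5, 0.7]},
]
print(au_composites(example))
```

Because videos are grouped by the emotion the participant endorsed, the number of videos contributing to each composite can differ between participants.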

From the 20 available AUs produced by FaceReader, six were selected a priori based on existing studies that identified facial expressions of emotion in embarrassment, shame, and guilt, as well as related emotions such as anxiety (Table 2). AUs 12-Lip Corner Puller, 14-Dimpler, 15-Lip Corner Depressor, and 24-Lip Pressor were selected as all have been associated with the display of embarrassment and shame30,41. AU 4-Brow Lowerer and AU 20-Lip Stretcher are the only facial AUs that have been previously associated with the expression of guilt specifically26.

Postural and gestural analysis

Postural and gestural data were scored manually using the Body Action and Posture Coding System43. Six body postures and gestures were chosen a priori from the 141 available postures and gestures from the Body Action and Posture Coding System based on existing research (Table 2). Lowering or tilting downwards of the head; slumping, diminishment or collapsing of the upper body; and touching of the face have all been associated with shame and embarrassment27,28,29,30,42. Turning of the head, nodding, and touching of the neck have been specifically associated with guilt26. Gestures and postures were scored by three independent raters blinded to the emotional condition of each video. Following rating of the initial 15 participants, Cohen’s kappa confirmed inter-rater reliability (κ = 0.78). After retraining and consensus scoring on points of discrepancy, each rater completed postural and gestural ratings for a subset of participants so that all participant videos were completely coded. All postures and gestures were scored in 5 s increments. Postures were coded as present or absent in each increment and summarized as the percentage of time spent in the posture, while gestures were coded and summarized as the number of times that they occurred.
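
As an illustration of the scoring rule for 5 s increments, the sketch below converts present/absent posture codes into a percentage of time and sums gesture occurrences. The function names and example codes are hypothetical, and the sketch assumes every increment in the window was coded.

```python
def score_posture(increments):
    """increments: one boolean per 5 s increment (posture present or absent).

    Returns the percentage of increments in which the posture was present.
    """
    if not increments:
        raise ValueError("no increments coded")
    return 100.0 * sum(increments) / len(increments)

def score_gesture(counts):
    """counts: occurrences of the gesture in each 5 s increment.

    Returns the total number of occurrences in the analysis window.
    """
    return sum(counts)

# Hypothetical 20 s analysis window (four 5 s increments)
head_tilt_down = [True, False, False, True]
face_touch = [0, 2, 0, 1]
print(score_posture(head_tilt_down))  # 50.0
print(score_gesture(face_touch))      # 3
```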

Gaze analysis

FaceReader recorded gaze direction as forward, left, or right in relation to the screen for each video frame. Aversion of gaze has been associated in many studies with shame, embarrassment, and guilt26,30,32,41. Forward gaze was coded as 1 while left and right were both coded as 0. The codes were averaged across the analysis window to create a percentage of time spent looking at the screen between 0 and 100 for each video, and this percentage was averaged across all videos of the same emotion as endorsed by the participants to create a composite score for percentage of time that gaze was directed at the screen for each emotion.
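
The gaze coding above reduces to a binary recode followed by two averages. A minimal sketch, assuming frame labels are available as the strings "forward", "left", and "right" (the label strings, function names, and example data are hypothetical, not FaceReader's export format):

```python
from collections import defaultdict
from statistics import mean

def percent_forward(frame_labels):
    """Code forward gaze as 1 and left/right as 0; return the percentage of frames coded 1."""
    codes = [1 if label == "forward" else 0 for label in frame_labels]
    return 100.0 * sum(codes) / len(codes)

def gaze_composites(videos):
    """videos: list of (endorsed_emotion, frame_labels) pairs for one participant."""
    by_emotion = defaultdict(list)
    for emotion, labels in videos:
        by_emotion[emotion].append(percent_forward(labels))
    # Average the per-video percentages within each endorsed emotion.
    return {emotion: mean(values) for emotion, values in by_emotion.items()}

# Hypothetical frame labels
videos = [
    ("guilt", ["forward", "forward", "left", "forward"]),
    ("guilt", ["forward", "forward"]),
    ("sadness", ["right", "forward"]),
]
print(gaze_composites(videos))  # {'guilt': 87.5, 'sadness': 50.0}
```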

Analytic approach

All data analysis was carried out in RStudio v1.3.959 (refs. 44,45). Data for individuals who were missing single data points due to recording glitches or failures, but for whom the rest of the data was usable, were imputed using multivariate imputation by chained equations via the mice function in the mice package v3.11.0 (ref. 46). All AU, gaze, postural, and gestural measures were transformed into percent of maximum possible (POMP) to account for individual variation and enable comparison between participants47.
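
For readers unfamiliar with POMP scoring, the sketch below shows the commonly used form of the transformation, in which a raw score is rescaled to the 0 to 100 range defined by the minimum and maximum possible scores. The text does not state which minimum and maximum were used for each measure, so the bounds in the example are assumptions, and the function name `pomp` is hypothetical.

```python
def pomp(score, minimum, maximum):
    """Percent of maximum possible: 100 * (score - minimum) / (maximum - minimum)."""
    if maximum <= minimum:
        raise ValueError("maximum must be greater than minimum")
    return 100.0 * (score - minimum) / (maximum - minimum)

# An AU intensity of 0.25 on FaceReader's 0-to-1 scale (bounds assumed here)
print(pomp(0.25, 0.0, 1.0))  # 25.0
```

Placing every measure on the same 0 to 100 scale allows AU intensities, gaze percentages, posture percentages, and gesture counts to be compared in a single model.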

To identify the variables that contributed to the distinction between emotion categories with guilt as the reference group, available AUs, gaze direction, gestures, and postures were entered into a linear mixed effects model as predictor variables using the lmer function in the lme4 package v1.1-27.1 (ref. 48). To account for the repeated measures nature of the data, participant ID was entered into the model as a random effect, while gender and age were entered as fixed effects. Confidence intervals for the model were calculated using the confint function in the stats package v4.1.0 (ref. 49). Planned contrasts were carried out to investigate the specific differences between guilt and the comparison emotions for each postural, gestural, and facial variable using Quade’s ANCOVA via the aov and the summary.lm functions in the stats package v4.1.0 (ref. 49). All graphs were made using the ggplot2 package v3.3.5, and in-graph calculations were performed using the ggpubr package v0.4.0 (refs. 50,51).

Ethics approval

Ethics approval was awarded by the University of Western Ontario Research Ethics Board and the study was conducted in accordance with the ethical standards established in the 1964 Declaration of Helsinki and its later amendments.

Consent to participate and publish

Informed consent was obtained from all individual participants included in the study. They specifically gave informed consent for their data to be published in peer-reviewed journals.

Results

Participant demographics

A total of 108 participants ranging in age from 18 to 77 (M = 39, Med = 31) participated in the study. Participants reported attending between 6 and 23 years of formal education (M = 15.963, Med = 16). Participants were excluded from the main analysis for failure to endorse feeling guilt as a primary emotion during the video task (7); technical errors in recording or analysis of video data such as videos that were too dark for the face to be clearly seen, videos in which the participant moved their face out of the frame after recording began, videos which recorded at too low a frame rate to be analyzed, or video files which were corrupted during data transfer (20); and incomplete or absent video recordings due to power or equipment failure (4). Thus, 77 participants (36 women, 41 men) were included in the final video data analysis.

Task debrief and deception check

No participants requested removal of their data after being debriefed. The mode and median response to the deception check of whether the participants believed the feedback statements given to them were accurate and applied to them was 2, or “Agree somewhat.”

Endorsed emotion results

Nonverbal signal composite scores were created based on an individual’s endorsed emotion rather than the emotion targeted; thus, on an individual basis videos were reclassified to the emotion endorsed by the participant and used as part of that emotion’s composite score. Mean, range, standard deviation, and target accuracy of videos included in the composite score are reported in Table 3. Videos intended to elicit pride and guilt were most likely to trigger other emotions, while sadness and disgust were most likely to elicit their target emotion (Table 3). See “supplemental material” for further description of emotion endorsement results.

Given the duration of the task and possibility of reduced attention that may have affected nonverbal behaviour, we analyzed the concordance between the target emotion and emotion endorsed during the first (M = 11.766, SD = 2.432) and second half (M = 12.052, SD = 3.096) of the study and did not find that they significantly differed, t(76) = −0.903, p = 0.369.
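
The comparison above is consistent with a paired-samples t-test, since the reported degrees of freedom (76) equal the number of included participants (77) minus one. The sketch below shows how such a statistic is computed under that assumption; the function name `paired_t` and the example counts are hypothetical and are not study data.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(first, second):
    """Paired-samples t statistic and degrees of freedom.

    first, second: per-participant concordance counts for each half of the task.
    Assumes the paired differences are not all identical (nonzero variance).
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(first, second)]
    standard_error = stdev(diffs) / sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1

# Hypothetical counts for four participants
t_value, df = paired_t([12, 11, 13, 10], [13, 11, 12, 12])
print(round(t_value, 3), df)  # -0.775 3
```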

Video data results

Linear mixed effect model

Of the variables of interest, slumping or collapsing of the upper body, touching of the neck, and nodding occurred so infrequently that they could not be included in the ethogram. As such, AUs 4-Brow Lowerer, 12-Lip Corner Puller, 14-Dimpler, 15-Lip Corner Depressor, 20-Lip Stretcher, 24-Lip Pressor, tilt down of the head, turning of the head, touching of the face, and gaze direction were entered into a linear mixed effects model with both age and gender included as fixed effects in the model (Table 4). Overall, this model was statistically significant, χ2(10) = 36.251, p < 0.001.

Planned contrasts

To investigate the specific relationships between these variables, planned contrasts were carried out between guilt and all comparison emotions for each of the variables identified as significant in the mixed effects model (Table 5).

These contrasts identified head tilt down as the most significantly different posture or gesture (p < 0.001 for all emotion comparisons). Participants were less likely to tilt their heads down during the guilt condition relative to any other emotion (Fig. 2). Turning of the head separated guilt from amusement, disgust, and sadness; participants were less likely to turn their heads in guilt relative to those emotions (Fig. 3). AU 4-Brow Lowerer (Fig. 4) was less frequent in guilt than in disgust, while AU 12-Lip Corner Puller (Fig. 5) was less frequent in guilt than in amusement, disgust, or sadness.

Comparison of presence of downwards head tilt across emotions. During guilt (M = 0.52), participants were less likely to tilt their heads down than in amusement (M = 11.6), p < 0.001, disgust (M = 21.2), p < 0.001, neutral (M = 9.3), p < 0.001, pride (M = 15.0), p < 0.001, or sadness (M = 11.2), p < 0.001.

General discussion

Emotions are commonly displayed on the face and by the body. While the expression of social emotions is often more complicated than that of basic emotions, there is clear evidence that social emotions can be read through the face and the body29,52. Though there have been some limited studies of the facial expression of guilt and related emotions, little research to date has explored the embodiment of guilt26,30,53. We sought to investigate the facial and bodily expressions of guilt in healthy adults, and to delineate the features that are key to the nonverbal expression of guilt. This study has provided the first combined exploration of postures, gestures, gaze, and facial expression in guilt without direct social interaction.

We identified differences between guilt and the comparison emotions on several facial, postural, and gestural variables. Head tilt down, turning the head, Lip Corner Puller, and Brow Lowerer were found to be significantly different in guilt relative to at least one other emotion. While touching of the face was identified by the omnibus test as a potential feature of guilt, these differences did not survive in the follow-up contrasts. The other facial expression variables under consideration, AUs Dimpler, Lip Corner Depressor, Lip Stretcher, and Lip Pressor, did not contribute significantly to the ethogram. Direction of gaze also did not contribute significantly.

Both Brow Lowerer and Lip Corner Puller were less commonly observed in guilt than several comparison emotions. Brow Lowerer initiates a furrowed brow common in negative emotions like disgust, sadness, or frustration54,55. This AU was engaged during guilt about the same proportion of time as it was during all other emotions except disgust, where it was engaged more. This difference may indicate a difference in the expression of disgust, or the intensity of disgust felt, rather than a particular difference with guilt, which is borne out by the comparison of Brow Lowerer to other emotions (see “supplemental material”). Lip Corner Puller pulls the corners of the lips up and out, and is often seen as an aspect of the appeasement display when an embarrassed individual attempts to lighten the experience via an embarrassed smile30,53,56. This facial display was less common in guilt compared to amusement, disgust, and sadness. In amusement, this is likely reflective of smiling or laughter, while in disgust this relative increase in the activation of Lip Corner Puller compared to guilt may represent grimacing, nervous laughter or smiling, genuine amusement at disgusting stimuli, or an attempt to distance the self from distressing feelings57,58,59. This is likely again to be reflective of a difference of those emotions, rather than a distinguishing feature of guilt. However, it is interesting to note that individuals did not engage in these potentially coping-related behaviours in the negative experience of guilt while they did in disgust.

Participants were less likely to tilt their heads down in guilt than in any other emotion. This finding was unexpected and in contrast to previous studies, as downwards-facing head posture has been found to be one of the most common aspects of the display of embarrassment and shame; this may reflect the absence of an audience30. Similarly, turning the head away was significantly less common in guilt compared to amusement, disgust, and sadness, with similar trends in comparison to neutral and pride, despite being previously identified as key for guilt in a feigned wrongdoing scenario26. For amusement, turning away may be secondary to movement of the head during laughter, though the exact reason for this difference is unclear. In disgust and sadness, this gesture may reflect evasion, as participants were allowed to turn away from stimuli that distressed them, which is a common reaction to objects of disgust or sadness60. Again, it is notable that this evasive move was not observed in guilt.

There are a few possible explanations for the unexpected findings in this study. One possibility is that these findings are reflective of the social nature of guilt. Both tilting and turning of the head were less common in guilt relative to other emotions, while aversion of gaze was consistent between guilt and the comparison emotions. These gestures have previously been strongly associated with the display of guilt in tasks that involved in-person interaction26,32,53. The absence of these gestures in this study may indicate that the commission of these movements is a purely social gesture, that is, they make up an appeasement or evasion display intended to enhance an apology, offer submission or remorse, evade the emotional consequences of looking into a victim’s eyes, or some other social motive. Absent a victim or observer who is aware of the performer’s guilt, as in this task, the drive to immediately enact these behaviours disappears. This may also hold true for the lack of engagement of facial AUs. While it is difficult to avoid crumpling one’s face when disgusted, to control a smile while laughing, or to control the raising of the brow during surprise, our results suggest there may be no instinctual drive to make a guilty face in the absence of an observer to whom one can acknowledge, and attempt to ameliorate, one’s guilt.

Another possibility is that the relatively level, forward facing, direct posture of the head and direct gaze reflects attentional capture by the guilt-inducing stimuli61. Often, attention is captured by arousing stimuli, even if the arousing stimulus is negative in nature62. The guilt stimuli were also rendered personal by the feedback statements presented before each video, which directly related the content of the video to the participant. While this was common across all film clips, the guilt film clips in particular were the most self-focused, as they were intended to directly appeal to the viewer’s actions, behaviours, or opinions. Thus, while some videos in other emotion categories addressed themselves to the viewer (to appeal to the individual to purchase something, to avoid certain behaviours, etc.), a majority of the guilt videos specifically addressed the participant and invited them to reflect upon themselves. Previous research has consistently found that attention is easily captured and held by self-focused stimuli63,64. As the guilt videos remained consistently personal throughout, they may have directed attention more strongly to the video than the videos for other emotions did.

Limitations

One potential limitation of this study is that emotional intensity and arousal were not measured on a per-video basis. Therefore, it is not possible to know whether the observed changes in the facial, postural, gestural, or gaze variables reflect a difference in emotional expression, in emotional intensity, in general arousal level, or some combination thereof. It is also not possible to explore whether there is a parametric correlation between the intensity of the emotional experience and the intensity of nonverbal expressions. This is especially important as past research has suggested that guilt expressions particularly depend on the intensity of the underlying guilt feelings26. Arousal and intensity measurements should be collected during or after each individual video to better characterize the emotional experience underlying the observed expressions.

Another limitation is that the arithmetic mean of each variable was taken over the entire analysis window and then averaged across all videos of that emotion type. This creates a conservative estimate of many nonverbal variables, as the predominant emotion, along with its consistency and intensity, may vary considerably over the course of even a relatively short video65. More granular epochs, or more continuous examination of the nonverbal signals, may enable the capture of subtle expressive elements that would otherwise be lost to averaging.

Another possible limitation is the lack of a specific exclusion criterion to prevent individuals with facial muscle disorders from taking part in the study, which could have affected the facial expression results. Although no participants with obvious facial weakness were observed, stricter exclusion criteria would prevent a similar issue in future studies.

A further limitation is the lack of an explicit social aspect to this study. As previous studies have shown that having an audience can impact nonverbal displays of emotion, the lack of an audience may have limited the usefulness of this paradigm for eliciting nonverbal guilt. Future studies could include a social observation aspect to account for this.

The relatively low rate of endorsement of guilt for each stimulus reflects a challenge of this method of guilt elicitation. The videos were a standard set that did not specifically target areas of guilt for individuals, and instead relied on broader appeals to typical areas of guilt (e.g. climate change, charity). While attempts were made to personalize the content with feedback statements, more personalized stimuli would likely elicit guilt more consistently. To achieve higher rates of guilt endorsement, future studies could consider tailoring stimuli to participants’ interests or using another method to elicit guilt, such as feigning wrongdoing.

There are also potential weaknesses in the use of the Facial Action Coding System (FACS), particularly in automated systems such as the one used in this study. Because an algorithm generates the AUs, elements of the videos themselves, such as quality, lighting, colour, and angle, as well as of the individual in the video, such as hair length or position, glasses, piercings, or head scarves, can all greatly impact the ability of the system to correctly classify AUs66,67. Similarly, studies have found that the underlying algorithm has not been well trained on non-Western faces, meaning that the system performs more poorly when attempting to analyze racialized groups68,69. All efforts were made in the present study to ensure that video quality was sufficient and that participants were well placed with minimal facial obstructions, leading to a fairly high rate of rejection of videos for analysis. Nonetheless, it is possible that video or participant qualities may have affected the video analysis, and thus the results of this study must be interpreted with caution. Future studies should take care to ensure that video and participant qualities are sufficient to be coded using an automated system, and should use systems that are trained on more diverse training sets.
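
The averaging limitation described above can be illustrated with a minimal sketch. The code below is not the analysis pipeline used in this study; the function names, the epoch length, and the trace are hypothetical and the values are synthetic. It simply contrasts a single whole-window mean with means taken over shorter, non-overlapping epochs, showing how a brief burst of activity is diluted by whole-window averaging.

```python
from statistics import fmean


def window_mean(samples):
    """Single arithmetic mean over the whole analysis window."""
    return fmean(samples)


def epoch_means(samples, epoch_len):
    """Mean of each consecutive, non-overlapping epoch of `epoch_len` samples.

    A shorter trailing epoch is kept rather than dropped.
    """
    if epoch_len <= 0:
        raise ValueError("epoch_len must be positive")
    return [
        fmean(samples[start:start + epoch_len])
        for start in range(0, len(samples), epoch_len)
    ]


# Synthetic, illustrative trace only (NOT study data): a brief burst of
# activity embedded in an otherwise flat signal of 20 samples.
trace = [0.0] * 8 + [1.0] * 2 + [0.0] * 10

overall = window_mean(trace)        # burst diluted across all 20 samples
per_epoch = epoch_means(trace, 5)   # burst remains visible in one epoch

print("whole-window mean:", overall)
print("per-epoch means:", per_epoch)
```

In this toy case the epoch containing the burst has a mean several times larger than the whole-window mean, which is the sense in which whole-window averaging yields a conservative estimate. The appropriate epoch length for real recordings is an open design choice and would depend on the sampling rate and the expected duration of the expressions of interest.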

Conclusion

This study sought to address the current gap in the literature around the nonverbal expression of guilt in healthy adults. We found an unexpected pattern of under-reactivity to guilt relative to other emotions that is potentially reflective of guilt’s social nature, the absence of reflexivity or a hardwired expression for guilt, its capacity to capture and hold attention, or some combination thereof. These findings suggest directions for future studies to address the lack of knowledge surrounding the nonverbal expression of guilt. In particular, future studies may add a social aspect to the paradigm, such as including an audience for some participants but not others, or having an observer say the feedback statements to participants, to explore the possibility that guilt expression is bound to social pressures. It would be particularly valuable to investigate the downwards head tilt in a social version of the paradigm, as it has previously been identified as a relevant signal but was much less likely to be observed during guilt in this study. Additional future directions include explorations of nonverbal guilt expression in children who are developing guilt, or in populations outside of the North American context who might have different conceptualizations and experiences of guilt, or culturally bound expressions of guilt.

Data availability

Data and supplementary information are uploaded on the Open Science Framework, an open science platform. Please use this view-only link to access this material on OSF: https://osf.io/kvb3y/?view_only=d9a0993561244217aff5a072c70505ff. This study’s design and its analysis were not pre-registered.

References

Huhmann, B. A. & Brotherton, T. P. A content analysis of guilt appeals in popular magazine advertisements. J. Advert. 26(2), 35–45. https://doi.org/10.1080/00913367.1997.10673521 (1997).

Zeelenberg, M. & Breugelmans, S. M. The role of interpersonal harm in distinguishing regret from guilt. Emotion 8(5), 589–596. https://doi.org/10.1037/a0012894 (2008).

Tangney, J. P., Stuewig, J. & Mashek, D. J. Moral emotions and moral behavior. Ann. Rev. Psychol. 58, 345–372. https://doi.org/10.1146/annurev.psych.56.091103.070145 (2007).

Eisenberg, T., Garvey, S. P. & Wells, M. T. But was he sorry? The role of remorse in capital sentencing. Cornell L. Rev. 83, 1599 (1997).

MacLin, M. K., Downs, C., MacLin, O. H. & Caspers, H. M. The effect of defendant facial expression on mock juror decision-making: The power of remorse. North Am. J. Psychol. 11(2), 323–332 (2009).

Hornsey, M. J. et al. Embodied remorse: Physical displays of remorse increase positive responses to public apologies, but have negligible effects on forgiveness. J. Personal. Soc. Psychol. 119(2), 367–389. https://doi.org/10.1037/pspi0000208 (2020).

Archer, D. Unspoken diversity: Cultural differences in gestures. Qual. Sociol. 20(1), 79–105 (1997).

Jack, R. E., Garrod, O. G. B., Yu, H., Caldara, R. & Schyns, P. G. Facial expressions of emotion are not culturally universal. Proc. Natl. Acad. Sci. USA 109(19), 7241–7244. https://doi.org/10.1073/pnas.1200155109 (2012).

Matsumoto, D. & Hwang, H. C. Cultural similarities and differences in emblematic gestures. J. Nonverbal Behav. 37(1), 1–27. https://doi.org/10.1007/s10919-012-0143-8 (2013).

Granhag, P. A. & Strömwall, L. A. Repeated interrogations: verbal and non-verbal cues to deception. Appl. Cognit. Psychol. 16(3), 243–257. https://doi.org/10.1002/acp.784 (2002).

Stephens, K. K., Waller, M. J. & Sohrab, S. G. Over-emoting and perceptions of sincerity: Effects of nuanced displays of emotions and chosen words on credibility perceptions during a crisis. Public Relat. Rev. 45(5), 101841. https://doi.org/10.1016/j.pubrev.2019.101841 (2019).

Ekman, P. & Oster, H. Facial expressions of emotion. Ann. Rev. Psychol. 30(1), 527–554. https://doi.org/10.1146/annurev.ps.30.020179.002523 (1979).

Kipp, M. & Martin, J. C. Gesture and emotion: Can basic gestural form features discriminate emotions? In 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, 1–8. https://doi.org/10.1109/ACII.2009.5349544 (2009).

Gregersen, T. S. Nonverbal cues: Clues to the detection of foreign language anxiety. Foreign Lang. Ann. 38(3), 388–400. https://doi.org/10.1111/j.1944-9720.2005.tb02225.x (2005).

Mondloch, C. J., Nelson, N. L. & Horner, M. Asymmetries of influence: differential effects of body postures on perceptions of emotional facial expressions. PLoS One 8(9), e73605. https://doi.org/10.1371/journal.pone.0073605 (2013).

Adams, R. B. & Kleck, R. E. Perceived gaze direction and the processing of facial displays of emotion. Psychol. Sci. 14(6), 644–647. https://doi.org/10.1046/j.0956-7976.2003.psci_1479.x (2003).

Bayliss, A. P., Frischen, A., Fenske, M. J. & Tipper, S. P. Affective evaluations of objects are influenced by observed gaze direction and emotional expression. Cognition 104(3), 644–653. https://doi.org/10.1016/j.cognition.2006.07.012 (2007).

Castellano, G., Kessous, L. & Caridakis, G. Emotion Recognition through Multiple Modalities: Face, Body Gesture, Speech. In Affect and Emotion in Human-Computer Interaction (eds Peter, C. & Beale, R.) 92–103 (Springer, Berlin Heidelberg, 2008).

Howell, A. J., Turowski, J. B. & Buro, K. Guilt, empathy, and apology. Personal. Individ. Differ. 53(7), 917–922. https://doi.org/10.1016/j.paid.2012.06.021 (2012).

Rosenstock, S. & O’Connor, C. When it’s good to feel bad: An evolutionary model of guilt and apology. Front. Robot. AI 5. https://doi.org/10.3389/frobt.2018.00009 (2018).

Shore, D. M. & Parkinson, B. Interpersonal effects of strategic and spontaneous guilt communication in trust games. Cognit. Emot. 32(6), 1382–1390. https://doi.org/10.1080/02699931.2017.1395728 (2018).

Hareli, S. & Eisikovits, Z. The role of communicating social emotions accompanying apologies in forgiveness. Motivat. Emot. 30(3), 189–197. https://doi.org/10.1007/s11031-006-9025-x (2006).

Sandlin, J. K. & Gracyalny, M. L. Seeking sincerity, finding forgiveness: YouTube apologies as image repair. Public Relat. Rev. 44(3), 393–406. https://doi.org/10.1016/j.pubrev.2018.04.007 (2018).

ten Brinke, L. & Adams, G. S. Saving face? When emotion displays during public apologies mitigate damage to organizational performance. Organ. Behav. Hum. Decis. Process. 130, 1–12 (2015).

Keltner, D. & Buswell, B. N. Embarrassment: Its distinct form and appeasement functions. Psychol. Bull. 122(3), 250–270. https://doi.org/10.1037/0033-2909.122.3.250 (1997).

Julle-Danière, E. et al. Are there non-verbal signals of guilt? PLoS One 15(4), e0231756. https://doi.org/10.1371/journal.pone.0231756 (2020).

Haidt, J. & Keltner, D. Culture and facial expression: Open-ended methods find more expressions and a gradient of recognition. Cognit. Emot. 13(3), 225–266. https://doi.org/10.1080/026999399379267 (1999).

Keltner, D. & Anderson, C. Saving face for Darwin: The functions and uses of embarrassment. Curr. Direct. Psychol. Sci. 9(6), 187–192. https://doi.org/10.1111/1467-8721.00091 (2000).

Tracy, J. L. & Matsumoto, D. The spontaneous expression of pride and shame: Evidence for biologically innate nonverbal displays. Proc. Natl. Acad. Sci. USA 105(33), 11655–11660. https://doi.org/10.1073/pnas.0802686105 (2008).

Keltner, D. Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement, and shame. J. Personal. Soc. Psychol. 68(3), 441–454. https://doi.org/10.1037/0022-3514.68.3.441 (1995).

Pivetti, M., Camodeca, M. & Rapino, M. Shame, guilt, and anger: Their cognitive, physiological, and behavioral correlates. Curr. Psychol. 35(4), 690–699. https://doi.org/10.1007/s12144-015-9339-5 (2016).

Yu, H., Duan, Y. & Zhou, X. Guilt in the eyes: Eye movement and physiological evidence for guilt-induced social avoidance. J. Exp. Soc. Psychol. 71, 128–137. https://doi.org/10.1016/j.jesp.2017.03.007 (2017).

Stewart, C. A. et al. The psychophysiology of guilt in healthy adults. Cognit. Affect. Behav. Neurosci. 23, 1192–1209 (2023).

Faul, F., Erdfelder, E., Lang, A. G. & Buchner, A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39(2), 175–191 (2007).

Statistics Canada. Questionnaire design. www150.statcan.gc.ca/n1/edu/power-pouvoir/ch2/questionnaires/5214775-eng.htm (2021). Accessed 13 June 2021.

Ruef, A. M. & Levenson, R. W. Continuous Measurement of Emotion: The Affect Rating Dial. In Handbook of Emotion Elicitation and Assessment (eds Coan, J. A. & Allen, J. J. B.) 286–297 (Oxford University Press, 2007). https://doi.org/10.1093/oso/9780195169157.003.0018.

Girard, J. M. CARMA: Software for continuous affect rating and media annotation. J. Open Res. Softw. 2(1), e5. https://doi.org/10.5334/jors.ar (2014).

Thomas, J., Finch, A., McCall, H., Girard, O., Karlinux, T. J. & Lavault, M. OpenShot (version 2.5.1). http://www.openshot.org/ (2021).

Borsos, Z., Jakab, Z., Stefanik, K., Bogdán, B. & Gyori, M. Test-retest reliability in automated emotional facial expression analysis: Exploring FaceReader 8.0 on data from typically developing children and children with autism. Appl. Sci. 12(15), 7759 (2022).

Skiendziel, T., Rösch, A. G. & Schultheiss, O. C. Assessing the convergent validity between the automated emotion recognition software Noldus FaceReader 7 and Facial Action Coding System Scoring. PLoS One 14(10), e0223905 (2019).

Velusamy, S., Kannan, H., Anand, B., Sharma, A. & Navathe, B. A method to infer emotions from facial Action Units. In 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2028–2031. https://doi.org/10.1109/ICASSP.2011.5946910 (2011).

Tracy, J. L., Robins, R. W. & Schriber, R. A. Development of a FACS-verified set of basic and self-conscious emotion expressions. Emotion 9(4), 554–559. https://doi.org/10.1037/a0015766 (2009).

Dael, N., Mortillaro, M. & Scherer, K. R. The body action and posture coding system (BAP): Development and reliability. J. Nonverbal Behav. 36(2), 97–121. https://doi.org/10.1007/s10919-012-0130-0 (2012).

R Core Team. R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, Vienna, Austria, 2018). https://www.R-project.org/.

RStudio Team. RStudio: Integrated Development Environment for R (RStudio, Inc., Boston, MA, 2016). http://www.rstudio.com/.

Van Buuren, S. & Groothuis-Oudshoorn, K. mice: Multivariate imputation by chained equations in R. J. Statist. Softw. 45(1), 1–67 (2011).

Cohen, P., Cohen, J., Aiken, L. S. & West, S. G. The problem of units and the circumstance for POMP. Multivar. Behav. Res. 34(3), 315–346. https://doi.org/10.1207/S15327906MBR3403_2 (1999).

Bates, D., Mächler, M., Bolker, B. & Walker, S. Fitting linear mixed-effects models using lme4. J. Statist. Softw. 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01 (2015).

Venables, W. N. & Ripley, B. D. Modern Applied Statistics with S 4th edn (Springer, New York, 2002). ISBN 0-387-95457-0. http://www.stats.ox.ac.uk/pub/MASS4.

Kassambara, A. ggpubr: 'ggplot2' Based Publication Ready Plots. R package version 0.4.0. https://CRAN.R-project.org/package=ggpubr (2020).

Wickham, H. ggplot2: Elegant Graphics for Data Analysis (Springer-Verlag, New York, 2016). ISBN 978-3-319-24277-4. https://ggplot2.tidyverse.org.

App, B., McIntosh, D. N., Reed, C. L. & Hertenstein, M. J. Nonverbal channel use in communication of emotion: How may depend on why. Emotion 11(3), 603–617. https://doi.org/10.1037/a0023164 (2011).

Keltner, D. & Buswell, B. Evidence for the distinctness of embarrassment, shame, and guilt: A study of recalled antecedents and facial expressions of emotion. Cognit. Emot. 10(2), 155–172. https://doi.org/10.1080/026999396380312 (1996).

Kohler, C. G. et al. Differences in facial expressions of four universal emotions. Psychiatr. Res. 128(3), 235–244. https://doi.org/10.1016/j.psychres.2004.07.003 (2004).

Grafsgaard, J., Wiggins, J. B., Boyer, K. E., Wiebe, E. N. & Lester, J. Automatically recognizing facial expression: Predicting engagement and frustration. In Educational Data Mining 2013 (2013).

Ambadar, Z., Cohn, J. F. & Reed, L. I. All smiles are not created equal: Morphology and timing of smiles perceived as amused, polite, and embarrassed/nervous. J. Nonverbal Behav. 33(1), 17–34. https://doi.org/10.1007/s10919-008-0059-5 (2009).

Ansfield, M. E. Smiling when distressed: When a smile is a frown turned upside down. Personal. Soc. Psychol. Bull. 33(6), 763–775. https://doi.org/10.1177/0146167206297398 (2007).

Hemenover, S. H. & Schimmack, U. That’s disgusting! …, but very amusing: Mixed feelings of amusement and disgust. Cognit. Emot. 21(5), 1102–1113. https://doi.org/10.1080/02699930601057037 (2007).

Keltner, D. & Bonanno, G. A. A study of laughter and dissociation: Distinct correlates of laughter and smiling during bereavement. J. Personal. Soc. Psychol. 73(4), 687–702. https://doi.org/10.1037/0022-3514.73.4.687 (1997).

Hanich, J. Dis/liking disgust: the revulsion experience at the movies. New Rev. Film Telev. Stud. 7(3), 293–309. https://doi.org/10.1080/17400300903047052 (2009).

Langton, S. R., Watt, R. J. & Bruce, V. Do the eyes have it? Cues to the direction of social attention. Trends Cognit. Sci. 4(2), 50–59 (2000).

Strauss, G. P. & Allen, D. N. Positive and negative emotions uniquely capture attention. Appl. Neuropsychol. 16(2), 144–149. https://doi.org/10.1080/09084280802636413 (2009).

Alexopoulos, T., Muller, D., Ric, F. & Marendaz, C. I, me, mine: Automatic attentional capture by self-related stimuli. Eur. J. Soc. Psychol. 42(6), 770–779. https://doi.org/10.1002/ejsp.1882 (2012).

Schäfer, S., Wesslein, A.-K., Spence, C., Wentura, D. & Frings, C. Self-prioritization in vision, audition, and touch. Exp. Brain Res. 234(8), 2141–2150. https://doi.org/10.1007/s00221-016-4616-6 (2016).

Davydov, D. M., Zech, E. & Luminet, O. Affective context of sadness and physiological response patterns. J. Psychophysiol. 25(2), 67–80. https://doi.org/10.1027/0269-8803/a000031 (2011).

Cross, M. P., Hunter, J. F., Smith, J. R., Twidwell, R. E. & Pressman, S. D. Comparing, differentiating, and applying affective facial coding techniques for the assessment of positive emotion. J. Pos. Psychol. 18(3), 420–438 (2023).

Landmann, E. I can see how you feel—Methodological considerations and handling of Noldus’s FaceReader software for emotion measurement. Technol. Forecast. Soc. Change 197, 122889 (2023).

Li, Y. T., Yeh, S. L. & Huang, T. R. The cross-race effect in automatic facial expression recognition violates measurement invariance. Front. Psychol. 14, 1201145 (2023).

Xu, N., Guo, G., Lai, H. & Chen, H. Usability study of two in-vehicle information systems using finger tracking and facial expression recognition technology. Int. J. Hum.–Comput. Interact. 34(11), 1032–1044 (2018).

Funding

This research was supported in part by the Lawson Internal Research Fund (7761670) awarded to CAS and EF.

Author information

Contributions

Conceptualisation: C.A.S., E.F.; Methodology: C.A.S., E.F.; Data collection and investigation: C.A.S., E.F.; Formal Analysis: C.A.S., E.F.; Writing – Original Draft: C.A.S., E.F.; Writing – Review & Editing: C.A.S., E.F., D.G.V.M., P.T., P.M., S.P.; Supervision: E.F.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Stewart, C.A., Mitchell, D.G.V., MacDonald, P.A. et al. The nonverbal expression of guilt in healthy adults. Sci Rep 14, 10607 (2024). https://doi.org/10.1038/s41598-024-60980-0

DOI: https://doi.org/10.1038/s41598-024-60980-0
