Abstract

In many social and biological networks, the collective dynamics of the entire system can be shaped by a small set of influential units through a global cascading process, manifested by an abrupt first-order transition in dynamical behaviors. Despite its importance in applications, efficient identification of multiple influential spreaders in cascading processes still remains a challenging task for large-scale networks. Here we address this issue by exploring the collective influence in general threshold models of cascading process. Our analysis reveals that the importance of spreaders is fixed by the subcritical paths along which cascades propagate: the number of subcritical paths attached to each spreader determines its contribution to global cascades. The concept of subcritical path allows us to introduce a scalable algorithm for massively large-scale networks. Results in both synthetic random graphs and real networks show that the proposed method can achieve larger collective influence given the same number of seeds compared with other scalable heuristic approaches.

Similar content being viewed by others

Introduction

Cascading process lies at the heart of an array of complex phenomena in social and biological systems, including failure propagation in infrastructure1, adoption of new behaviors2, diffusion of innovations in social networks3 and cascading failures in brain networks4, etc. In these cascading processes, a small number of influential units, or influencers, arise as a consequence of the structural diversity of the underlying interacting networks5. In different fields, it has been accepted that the initial activation of a small set of such “superspreaders”, who usually hold prominent locations in networks, is capable of shaping the collective dynamics of large populations6,7,8. In practice, identification of superspreaders can help to control the entire network’s dynamics with a low cost, e.g., a company can boost product popularity by targeted advertisement on influencers in viral marketing, or we can maintain the robustness of infrastructure systems by protecting structurally pivotal units. Given its great practical values in a wide range of important applications, the problem of locating superspreaders has attracted much attention in various disciplines9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28.

In the simple case of finding single influential spreaders, centrality-based heuristic measures such as degree29, Betweenness30, PageRank31 and K-core32 are routinely adopted. Beyond this non-interacting problem of finding single spreaders, it becomes more complicated when trying to select a group of spreaders, due to the collective effects of multiple agents. In fact, searching for the optimal set of influencers in cascading dynamics is an NP-hard problem and remains to be a challenging conundrum in network science9. To address the many-body problem, several approaches designed for the influence maximization in different models have been proposed. In the case of percolation model, a framework for optimal percolation based on the stability analysis of zero solution was developed15,16. More recently, message passing algorithms for optimal decycling in statistical physics further pushed the critical point toward its optimal value20,21. For susceptible-infected-recovered (SIR) model, the problem of finding influential spreaders is also explored using the percolation theory in recent works24,25. In the above models, cascading processes can be transformed to the percolation model with a continuous phase transition. While optimal percolation theory15,16 applies only to systems with second order phase transitions, here we treat the case of cascading models which present first order discontinuous transitions. Such transitions cannot be treated with the stability analysis methods based on the non-backtracking matrix as done in15,16 for models with continuous transitions, so a new approach is needed.

In a large variety of contexts, the cascading process is properly described by the Linear Threshold Model (LTM) in which the states of nodes are determined by a threshold rule33,34,35,36,37,38,39,40. That is, a node will become active only after a certain number of its neighbors have been activated. The choice of threshold mi = ki − 1 in LTM guarantees a continuous phase transition, where ki is the degree of node i. In this case, the cascading dynamics of LTM can be mapped to the classical percolation process15, for which the influence maximization problem can be solved by various algorithms designed for optimal percolation. Nevertheless, for other choices of threshold, LTM exhibits a first-order (i.e., discontinuous) phase transition. In fact, influence maximization in LTM corresponds to finding nontypical trajectories of cascading processes that deviate from the average ones17. Altarelli et al.17 analyzed the statistics of large deviations of LTM dynamics with a belief propagation algorithm, and further developed a Max-Sum (MS) algorithm to explicitly find the optimal set of seeds in terms of a predefined energy function18. Guggiola and Semerjian19 obtained the theoretical limit of the size of minimal contagious sets for random regular graphs, and used a survey propagation like algorithm to locate the minimal set of seeds. Given these recent progresses in searching for optimal influencers in LTM, it is a challenging task to apply these methods to massively large-scale networks with tens of millions nodes encountered in modern big-data analysis. Therefore, the problem of developing an efficient scalable algorithm of influence maximization in cascading models with discontinuous transitions that is feasible in real-world applications still needs to be further explored.

Here, we examine the collective influence in general LTM, and develop a scalable algorithm for influence maximization. By analyzing the message passing equations of LTM, we formulate the form of interactions between spreaders and provide an analytical expression of their contributions to cascading process. Each seed’s contribution, defined as the collective influence in threshold model (CI-TM), is determined by the number of subcritical paths emanating from it. Since the subcritical paths are such routes along which cascades can propagate, CI-TM can be considered as a reliable estimation of seeds’ structural importance in LTM. CI-TM is the generalization of the CI algorithm of optimal percolation for second order transitions treated in15,16 to the present case of first order transitions. To apply our method to large-scale networks, we present an efficient adaptive selection procedure to achieve collective influence maximization. Compared with other competing heuristics, our results on both synthetic and realistic large-scale networks reveal that the proposed mechanism-based algorithm can produce a larger cascading process given the same number of seeds.

Results

Collective influence in threshold models: CI-TM

We present a theoretical framework to analyze the collective influence of individuals in general LTM. For a network with N nodes and M links, the topology is represented by the adjacency matrix {Aij}N×N, where Aij = 1 if i and j are connected, and Aij = 0 otherwise. The vector n = (n1, n2, …, nN) records whether a node i is chosen as a seed (ni = 1) or not (ni = 0). The total fraction of seeds is therefore q = ∑ini/N. During the spreading, the state of each node falls into the category of either active or inactive. The spreading starts from a q fraction of active seeds and evolves following a threshold rule: a node i becomes active when mi neighbors get activated. This process terminates when there are no more newly activated nodes. We introduce νi as node i’s indicator in active (νi = 1) or inactive (νi = 0) state at the final stage, and denote Q(q) as the size of the giant connected component of active population.

For a directed link i → j, we introduce νi→j as the indicator of i being in an active state assuming node j is disconnected from the network. If ni = 1, then νi→j = 1. Otherwise, νi→j = 1 only when there are at least mi active neighbors excluding j. Since there exist many possible choices of these mi neighbors, we define  as the set of all combinations of mi nodes selected from ∂i\j, where ∂i\j is the set of nearest neighbors of i excluding j. Clearly, if i has ki connections emanating from it, there are

as the set of all combinations of mi nodes selected from ∂i\j, where ∂i\j is the set of nearest neighbors of i excluding j. Clearly, if i has ki connections emanating from it, there are  combinations, so the set

combinations, so the set  contains

contains  elements, denoted by Ph,

elements, denoted by Ph,  . Each element Ph has the form

. Each element Ph has the form  where

where  are the mi nodes in the hth combination. Figure 1a illustrates all three combinations P1, P2 and P3 corresponding to νi→j for node i with a threshold mi = 2. Should at least one combination is fully activated, we have νi→j = 1.

are the mi nodes in the hth combination. Figure 1a illustrates all three combinations P1, P2 and P3 corresponding to νi→j for node i with a threshold mi = 2. Should at least one combination is fully activated, we have νi→j = 1.

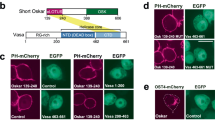

(a), Three combinations of neighbors P1, P2 and P3 corresponding to νi→j in message passing equation. Node i has a threshold mi = 2. The full activation of at least one combination will lead to νi→j = 1. (b), For link i → j with an active neighbor k1 and inactive ones k2 and k3,  since i has 0 (<mi − 1) active neighbor excluding k1 and j, while

since i has 0 (<mi − 1) active neighbor excluding k1 and j, while  because i has 1 (=mi − 1) active neighbor k1 excluding k2 and j. (c), Illustrations of subcritical paths ending with link i → j for L = 0, 1, 2. Red dots stand for seeds, while squares represent m − 1 active neighbors attached to subcritical nodes. Subcritical paths are highlighted by thick links. (d), The contribution of seed i to

because i has 1 (=mi − 1) active neighbor k1 excluding k2 and j. (c), Illustrations of subcritical paths ending with link i → j for L = 0, 1, 2. Red dots stand for seeds, while squares represent m − 1 active neighbors attached to subcritical nodes. Subcritical paths are highlighted by thick links. (d), The contribution of seed i to  exerted through subcritical paths of length L = 0, 1, 2. (e), Calculation method of CI − TML(i). Subcritical paths starting from i with length

exerted through subcritical paths of length L = 0, 1, 2. (e), Calculation method of CI − TML(i). Subcritical paths starting from i with length  are displayed by different colors. (f), An example of subcritical cluster. Assuming a uniform threshold m = 3, nodes inside the circle are subcritical since they all have 2 active neighbors, represented by blue nodes. Activation of the red node will trigger a cascade covering all subcritical nodes.

are displayed by different colors. (f), An example of subcritical cluster. Assuming a uniform threshold m = 3, nodes inside the circle are subcritical since they all have 2 active neighbors, represented by blue nodes. Activation of the red node will trigger a cascade covering all subcritical nodes.

Generally, for locally tree-like networks, we have the following message passing equation:

The final state of i is given by

The above equations Eq. (1-2) describe the general cases of LTM. For the special choice of threshold mi = ki − 1, there is only one combination in  , and the transition becomes continuous and then it can be treated with the stability methods of optimal percolation as done in15,16. We note that, Eq. (1-2) are only valid under the locally tree-like assumption. For synthetic random networks, this assumption holds since short loops appear with a probability of order O(1/N)15. Nevertheless, a considerable number of short loops may exist in real-world networks. For those networks with clustering, many prior works have confirmed that results obtained for tree-like networks apply quite well also for loopy graphs. For instance, Melnik et al. found that, for a series of problems, the tree-like approximation worked well for clustered networks as long as the mean intervertex distance was sufficiently small41. As most of real-world networks are small-world, the approximation of Eq. (1-2) should be reasonable provided the density of loops is not excessively large.

, and the transition becomes continuous and then it can be treated with the stability methods of optimal percolation as done in15,16. We note that, Eq. (1-2) are only valid under the locally tree-like assumption. For synthetic random networks, this assumption holds since short loops appear with a probability of order O(1/N)15. Nevertheless, a considerable number of short loops may exist in real-world networks. For those networks with clustering, many prior works have confirmed that results obtained for tree-like networks apply quite well also for loopy graphs. For instance, Melnik et al. found that, for a series of problems, the tree-like approximation worked well for clustered networks as long as the mean intervertex distance was sufficiently small41. As most of real-world networks are small-world, the approximation of Eq. (1-2) should be reasonable provided the density of loops is not excessively large.

For all the 2M directed links i → j, Eq. (1) is a nonlinear function of  :

:

In Eq. (3),  in which ni→j = ni for link i → j, and

in which ni→j = ni for link i → j, and  where Gi→j is the nonlinear function of vector ν→ for link i → j. Given the initial configuration of seeds n, the final state of ν→ is fully determined by the self-consistent Eq. (3). Unfortunately, it cannot be solved directly due to the exponentially growing number of combinations in

where Gi→j is the nonlinear function of vector ν→ for link i → j. Given the initial configuration of seeds n, the final state of ν→ is fully determined by the self-consistent Eq. (3). Unfortunately, it cannot be solved directly due to the exponentially growing number of combinations in  . Therefore, for a small number of seeds, we adopt the iterative method to estimate the solution. In this point of view, Eq. (3) can be treated as a discrete dynamical system

. Therefore, for a small number of seeds, we adopt the iterative method to estimate the solution. In this point of view, Eq. (3) can be treated as a discrete dynamical system

with the initial condition  .

.

To simplify the calculation, we approximate the nonlinear function Gi→j by linearization. Define  . By Eq. (1), we know that

. By Eq. (1), we know that  for

for  . While in the case of

. While in the case of  and k ≠ j, we have

and k ≠ j, we have

Although Eq. (5) has a complex form, in fact it is only determined by a simple quantity  , which is interpreted as the number of i’s active neighbors excluding k and j when i is absent from the network. On one hand, if ak→i,i→j ≥ mi, at least one term of

, which is interpreted as the number of i’s active neighbors excluding k and j when i is absent from the network. On one hand, if ak→i,i→j ≥ mi, at least one term of  equals one, since we are selecting mi elements from a set containing at least mi elements of value 1. Under such condition,

equals one, since we are selecting mi elements from a set containing at least mi elements of value 1. Under such condition,  . On the other hand, if ak→i,i→j ≤ mi − 2, all the terms

. On the other hand, if ak→i,i→j ≤ mi − 2, all the terms  are zeros because we are selecting mi − 1 elements from a set containing at most mi − 2 nonzero elements, which also leads to

are zeros because we are selecting mi − 1 elements from a set containing at most mi − 2 nonzero elements, which also leads to  . When ak→i,i→j = mi − 1, all the terms

. When ak→i,i→j = mi − 1, all the terms  and

and  are zeros, and only the exact combination of these mi − 1 nonzero elements would lead to

are zeros, and only the exact combination of these mi − 1 nonzero elements would lead to  . Therefore, we have

. Therefore, we have  . Based on the above reasoning, we define a quantity

. Based on the above reasoning, we define a quantity  for links

for links  and i → j as follows:

and i → j as follows:

The definition of  is reminiscent of the Hashimoto non-backtracking (NB) matrix

is reminiscent of the Hashimoto non-backtracking (NB) matrix  15,42,43. In the case of mi = ki − 1, our quantity

15,42,43. In the case of mi = ki − 1, our quantity  can be transformed to the corresponding element of NB matrix

can be transformed to the corresponding element of NB matrix  . In fact,

. In fact,  is closely related to the concept of subcritical nodes. Recall that a node i is subcritical if it has mi − 1 active neighbors38,39,40. This implies that one more activation of its neighbors will cause i activated. From Eq. (6) we know that

is closely related to the concept of subcritical nodes. Recall that a node i is subcritical if it has mi − 1 active neighbors38,39,40. This implies that one more activation of its neighbors will cause i activated. From Eq. (6) we know that  only if the links

only if the links  and i → j are connected, non-backtracking, and additionally, node i is subcritical in the absence of node k and j. In Fig. 1b, node i has an active neighbor k1 and two inactive ones k2 and k3. By definition, for a threshold mi = 2, we conclude

and i → j are connected, non-backtracking, and additionally, node i is subcritical in the absence of node k and j. In Fig. 1b, node i has an active neighbor k1 and two inactive ones k2 and k3. By definition, for a threshold mi = 2, we conclude  since i has no active neighbor excluding k1 and j, while

since i has no active neighbor excluding k1 and j, while  because i has 1 (=mi − 1) active neighbor excluding k2 and j.

because i has 1 (=mi − 1) active neighbor excluding k2 and j.

For a small ν→, a standard linearization around origin 0 gives  . However this will cause degeneracy since Eq. (5) constantly gives

. However this will cause degeneracy since Eq. (5) constantly gives  . Therefore, we approximate Gi→j(ν→) by

. Therefore, we approximate Gi→j(ν→) by  given ν→ is close to 0. In Methods, we prove that this linearization has an approximation accuracy of O(|ν→|2) (| ⋅ | is the vector norm), same as the linear Taylor expansion. To account for the increasing network size as N → ∞, we define the vector norm as

given ν→ is close to 0. In Methods, we prove that this linearization has an approximation accuracy of O(|ν→|2) (| ⋅ | is the vector norm), same as the linear Taylor expansion. To account for the increasing network size as N → ∞, we define the vector norm as  (2M is the number of directed links), so that |ν→| is always bounded below 1 for all network sizes. The linear approximation is valid when the number of links attached to initial seeds is small compared with all directed links. As we will see, the fraction of seeds at the discontinuous transition is small for both synthetic and realistic networks. Therefore, the linear approximation before the critical point should be valid. In Methods, we compare |ν→| calculated by linear approximation with its real value on a scale-free network assuming LTM is initialed by one single seed. The approximation results agree well with their true values. See the detailed discussion in Methods.

(2M is the number of directed links), so that |ν→| is always bounded below 1 for all network sizes. The linear approximation is valid when the number of links attached to initial seeds is small compared with all directed links. As we will see, the fraction of seeds at the discontinuous transition is small for both synthetic and realistic networks. Therefore, the linear approximation before the critical point should be valid. In Methods, we compare |ν→| calculated by linear approximation with its real value on a scale-free network assuming LTM is initialed by one single seed. The approximation results agree well with their true values. See the detailed discussion in Methods.

Combining all direct links, Eq. (4) can be approximated by a linear equation

where  is a 2M × 2M matrix defined on the directed links

is a 2M × 2M matrix defined on the directed links  , i → j with elements

, i → j with elements

With the notion of  , we can write

, we can write  as:

as:

Now we update the state of  following Eq. (7). In the following calculation, we simplify

following Eq. (7). In the following calculation, we simplify  and

and  to

to  and

and  respectively for notation convenience. We put the matrix

respectively for notation convenience. We put the matrix  in a higher-dimensional space15:

in a higher-dimensional space15:

where function  is 1 if

is 1 if  , and 0 otherwise. The indices

, and 0 otherwise. The indices  run from 1 to N. Starting from

run from 1 to N. Starting from  ,

,  gives

gives

The physical meaning of Eq. (11) can be interpreted as follows. If node i is a seed,  . Otherwise,

. Otherwise,  is nonzero if i is subcritical (

is nonzero if i is subcritical ( ) and at least one of its corresponding neighbors k is a seed (nk = 1). Supposing i is not a seed, the contribution of a neighboring seed k is conveyed by the directed path k → i → j that satisfies nk = 1, ni = 0 and

) and at least one of its corresponding neighbors k is a seed (nk = 1). Supposing i is not a seed, the contribution of a neighboring seed k is conveyed by the directed path k → i → j that satisfies nk = 1, ni = 0 and  , which is shown in the second panel of Fig. 1c.

, which is shown in the second panel of Fig. 1c.

For t = 2, we have  . Therefore,

. Therefore,

The last term in Eq. (12) is actually the contribution of node i’s 2-step neighbors s to  . The contribution of a seed s is conducted through a directed path s → k → i → j that satisfies ns = 1, nk = 0, ni = 0 and

. The contribution of a seed s is conducted through a directed path s → k → i → j that satisfies ns = 1, nk = 0, ni = 0 and  (See Fig. 1c).

(See Fig. 1c).

Inspired by Eq. (12), we define the concept of subcritical paths. For a directed link i → j, the path  is a subcritical path of length L if

is a subcritical path of length L if  ,

,  , and any two consecutive links are non-backtracking. If i1 = i, we set the path’s length L = 0. The subcritical paths of length L = 0, 1 and 2 are displayed in Fig. 1c. Notice that, the calculation of L-length subcritical paths is in fact implemented by the multiplication of

, and any two consecutive links are non-backtracking. If i1 = i, we set the path’s length L = 0. The subcritical paths of length L = 0, 1 and 2 are displayed in Fig. 1c. Notice that, the calculation of L-length subcritical paths is in fact implemented by the multiplication of  . In fact, the concept of subcritical path has a clear physical meaning. A subcritical path is composed of connected subcritical nodes. So once the node i1 at the beginning of the subcritical path is activated, the cascade of activation will propagate along the path and lead to νi→j = 1 for the link i → j at the tail. Therefore, the long-range interaction between node i1 and node i is realized through the subcritical path connecting them. Following this idea, we can generalize Eq. (12) to

. In fact, the concept of subcritical path has a clear physical meaning. A subcritical path is composed of connected subcritical nodes. So once the node i1 at the beginning of the subcritical path is activated, the cascade of activation will propagate along the path and lead to νi→j = 1 for the link i → j at the tail. Therefore, the long-range interaction between node i1 and node i is realized through the subcritical path connecting them. Following this idea, we can generalize Eq. (12) to  at a given time T. The exact formula for

at a given time T. The exact formula for  is

is

where k−1 = j, k0 = i and  runs from 1 to N for

runs from 1 to N for  . Notice that the form of

. Notice that the form of  is nothing but ni plus the contribution of seeds connected to i through subcritical paths with length L ≤ T when ni = 0.

is nothing but ni plus the contribution of seeds connected to i through subcritical paths with length L ≤ T when ni = 0.

CI-TM Algorithm

To quantify the active population in LTM, we define  , where 2M is the total number of directed links. Starting from

, where 2M is the total number of directed links. Starting from  when no seed is selected,

when no seed is selected,  increases as more seeds are activated. Therefore, we expect that the collective influence of a given number of seeds can be optimized by maximizing

increases as more seeds are activated. Therefore, we expect that the collective influence of a given number of seeds can be optimized by maximizing  .

.

Based on the form of each element in ν→, we learn that the contribution of a seed i to  is composed of all its collective contributions to every potential element, exerted through the subcritical paths attached to i. Therefore, we employ a seed’s contribution to

is composed of all its collective contributions to every potential element, exerted through the subcritical paths attached to i. Therefore, we employ a seed’s contribution to  to define its Collective Influence in Threshold Model (CI-TM) to find the best influencers. For the trivial case of subcritical paths with length L = 0, we define CI − TM0(i) = ki, where ki is the degree of node i. Thus, at the zero-order approximation we recover the high-degree heuristic. The first panel of Fig. 1d illustrates CI − TM0(i) = 3 for node i. For L ≥ 1, subcritical paths are involved in the definition of CI-TM. For L = 1,

to define its Collective Influence in Threshold Model (CI-TM) to find the best influencers. For the trivial case of subcritical paths with length L = 0, we define CI − TM0(i) = ki, where ki is the degree of node i. Thus, at the zero-order approximation we recover the high-degree heuristic. The first panel of Fig. 1d illustrates CI − TM0(i) = 3 for node i. For L ≥ 1, subcritical paths are involved in the definition of CI-TM. For L = 1,

As shown in Fig. 1(d), the contribution of node i to  through subcritical paths of length L = 1 is 2. Therefore, we have CI − TM1(i) = 5. For L = 2,

through subcritical paths of length L = 1 is 2. Therefore, we have CI − TM1(i) = 5. For L = 2,

In Fig. 1d, the additional 2-length subcritical paths also contribute to CI − TM2(i), leading to CI − TM2(i) = 7. Moreover, in Fig. 1d, we can observe that for the tree structure, the activation of node j in the first-step update will not affect  in the second-step update, which means

in the second-step update, which means  . More generally,

. More generally,  is not affected by the activation of k’s any precedent nodes on the subcritical path. Therefore, we will leave out the superscript t in the definition of CI-TM for locally tree-like networks. We can generalize the above CI-TM calculation to any given L. In summary, the definition of node i’s influence CI-TM in an area of length L is:

is not affected by the activation of k’s any precedent nodes on the subcritical path. Therefore, we will leave out the superscript t in the definition of CI-TM for locally tree-like networks. We can generalize the above CI-TM calculation to any given L. In summary, the definition of node i’s influence CI-TM in an area of length L is:

Figure 1e illustrates the calculation of node i’s CI-TM for L = 2, in which subcritical paths with length  are distinguished by colors.

are distinguished by colors.

For a given fraction q of seeds, our goal is to maximize  . As we have explained, the CI-TM value of a seed depends on the choice of other seeds. Therefore, it is hard to obtain the optimal configuration {n|∑ini/N = q} without turning to extremely time-consuming algorithms. To compromise and obtain a scalable algorithm, we propose an adaptive CI-TM algorithm following a greedy approach. Define C(i, L) as the set of node i plus subcritical vertices belonging to all subcritical paths originating from i with length

. As we have explained, the CI-TM value of a seed depends on the choice of other seeds. Therefore, it is hard to obtain the optimal configuration {n|∑ini/N = q} without turning to extremely time-consuming algorithms. To compromise and obtain a scalable algorithm, we propose an adaptive CI-TM algorithm following a greedy approach. Define C(i, L) as the set of node i plus subcritical vertices belonging to all subcritical paths originating from i with length  . Beginning with an empty seed set S, we remove the top CI-TM nodes as follow. The calculation proceeds following the CI-TM algorithm.

. Beginning with an empty seed set S, we remove the top CI-TM nodes as follow. The calculation proceeds following the CI-TM algorithm.

Algorithm 1 CI-TM algorithm

1: Initialize S = ∅

2: Calculate CI − TML for all nodes

3: for l = 1 to qN do

4: Select i with the largest CI − TML

5: S = S ∪ {i}

6: Remove C(i, L), and decrease the degree and threshold of C(i, L)’s existing neighbors by 1

7: Update CI − TML for nodes within L + 1 steps from C(i, L)

8: end for

9: Output S

In the above algorithm, we remove C(i, L) once i is added to the seed set. The reason lies in that it is unnecessary to select nodes in C(i, L) as seeds in later calculation, because the activation of i will definitely active C(i, L) (See Fig. 1f). Besides, C(i, L) can be identified during the computation of CI − TML without additional cost. In traditional centrality-based methods, seeds may have significant overlap in their influenced population. It has been reported that the performance of these methods, such as K-core, suffers a lot from this phenomenon12. On the contrary, in our algorithm, this problem is alleviated by the removal of subcritical nodes in C(i, L), which successfully reduces the overlap and improves the efficacy of each seed. Although such greedy strategy is not guaranteed to give the exact optimal spreaders, we expect a good performance in comparison with other heuristic methods in large-scale networks. For extreme sparse networks with large numbers of fragmented subcritical clusters, a simple modified algorithm can find a smaller set of influencers (See Methods).

More importantly, the CI-TM algorithm is scalable for large networks with a computational complexity O(NlogN) as N → ∞. On one hand, computing CI − TML is equivalent to iteratively visiting subcritical neighbors of each node layer by layer within L radius. Because of the finite search radius, computing CI − TML for each node takes O(1) time. Initially, we have to calculate CI − TML for all nodes. However, during later adaptive calculation, there is no need to update CI − TML for all nodes. We only have to recalculate for nodes within L + 1 steps from the removed vertices, which scales as O(1) compared to the network size as N → ∞ as shown in ref. 16. On the other hand, selecting the node with maximal CI-TM can be realized by making use of the data structure of heap that takes O(logN) time16. Therefore, the overall complexity of ranking N nodes is O(NlogN) even when we remove the top CI-TM nodes one by one. In addition, considering the relative small number of subcritical neighbors, the cost of searching for subcritical paths is far less than that when scanning all neighbors. This permits the efficient computation of CI-TM for considerably large L. In our later experiments on finite-size networks, we do not put a limit on L so as to calculate CI-TM thoroughly. But remember that we can always truncate L to speed up CI-TM algorithm for extremely large-scale networks.

Test of CI-TM Algorithm

We first simulate LTM dynamics on synthetic random networks, including  -Rényi (ER) and scale-free (SF) networks. In the models, we adopt a fractional threshold rule

-Rényi (ER) and scale-free (SF) networks. In the models, we adopt a fractional threshold rule  , which means that a node will be activated once t fraction of its neighbors are active (

, which means that a node will be activated once t fraction of its neighbors are active ( is the ceiling function). Here we choose this special form of threshold setting. But we note that the algorithm can apply to other more general choices of threshold in LTM. In order to verify the efficacy of CI-TM algorithm, we compare its performance against several widely-used ranking methods, including high degree (HD)29, high degree adaptive (HDA), PageRank (PR)31 and K-core adaptive (KsA)32. As a reference, we also display the result of random selection of seeds, as well as the size of optimal seed set identified by Max-Sum algorithm (MS)18. Details about these strategies are explained in Methods.

is the ceiling function). Here we choose this special form of threshold setting. But we note that the algorithm can apply to other more general choices of threshold in LTM. In order to verify the efficacy of CI-TM algorithm, we compare its performance against several widely-used ranking methods, including high degree (HD)29, high degree adaptive (HDA), PageRank (PR)31 and K-core adaptive (KsA)32. As a reference, we also display the result of random selection of seeds, as well as the size of optimal seed set identified by Max-Sum algorithm (MS)18. Details about these strategies are explained in Methods.

Figure 2a presents Q(q) versus q on ER networks (t = 0.5, N = 2 × 105, 〈k〉 = 6). Similar to bootstrap percolation on homogeneous networks, Q(q) first undergoes a continuous transition from Q(q) = 0 to nonzero, and then a first-order transition at a higher value of qc37. Remarkably, compared with competing heuristics, CI-TM algorithm achieves a larger active population for a given number of seeds. It not only brings about an earlier continuous transition, but also activates the total population with a smaller seed set. Among all the strategies, random selection represents the average behavior of cascade initiated by randomly chosen seeds, with a critical value  . Although the original K-core ranking has an unsatisfactory performance for multi-source spreading12, the adaptive version KsA gives a better result similar to HDA. For different threshold t, the critical values qc for CI-TM and other heuristic methods are shown in Table 1. We also provide the first-order critical value qc for CI-TM and HDA on ER networks with different average degree 〈k〉 in the inset of Fig. 2a. With the growth of 〈k〉, qc increases and so does the difference between CI-TM and HDA. In some cases, qc can be further improved by a simple modification on CI-TM (See Methods).

. Although the original K-core ranking has an unsatisfactory performance for multi-source spreading12, the adaptive version KsA gives a better result similar to HDA. For different threshold t, the critical values qc for CI-TM and other heuristic methods are shown in Table 1. We also provide the first-order critical value qc for CI-TM and HDA on ER networks with different average degree 〈k〉 in the inset of Fig. 2a. With the growth of 〈k〉, qc increases and so does the difference between CI-TM and HDA. In some cases, qc can be further improved by a simple modification on CI-TM (See Methods).

(a), Size of active giant component Q(q) versus the fraction of seeds q for ER networks with size N = 2 × 105 and mean degree 〈k〉 = 6. Different methods are distinguished by distinct markers and colors. Threshold is set as fractional t = 0.5. The CI-TM algorithm is run without limitation on L. MS is implemented by using T = 40 and a reinforcement parameter r = 1 × 10−4. For CI-TM, the identified critical value is  while for Random selection

while for Random selection  . Inset presents the critical values qc identified by HDA and CI-TM for different mean degree 〈k〉. (b), Comparison for scale-free networks with size N = 2 × 105, power-law exponent γ = 3, minimal degree kmin = 2 and maximal degree kmax = 1000. The fractional threshold of LTM is also set as t = 0.5. Inset shows the critical values qc for different exponents γ. All the results are averaged over 50 realizations.

. Inset presents the critical values qc identified by HDA and CI-TM for different mean degree 〈k〉. (b), Comparison for scale-free networks with size N = 2 × 105, power-law exponent γ = 3, minimal degree kmin = 2 and maximal degree kmax = 1000. The fractional threshold of LTM is also set as t = 0.5. Inset shows the critical values qc for different exponents γ. All the results are averaged over 50 realizations.

We then examine CI-TM’s performance on SF networks with power-law degree distributions  in Fig. 2b. We generate SF networks of size N = 2 × 105 and power-law exponent γ = 3 following the configuration model44. It can be seen that the critical value of first-order transition becomes rather small for SF networks, due to the existence of highly connected hubs. Still, CI-TM algorithm outperforms other heuristic approaches by producing a larger active component Q(q) for a given fraction of seed q. Since most nodes have a quite small number of connections in SF networks, the cascade triggered by randomly selected seeds is limited to a local scale, even with a relatively large number of seeds, as shown by the grey line at the bottom of Fig. 2b. This implies, compared with homogeneous networks, the deviations of the optimized trajectories from typical ones are much more extreme in heterogeneous networks. Moreover, as SF networks become more heterogeneous with a smaller power-law exponent γ of the degree distribution, the minimal number of seeds required for global cascade decreases accordingly, as shown in the inset of Fig. 2b.

in Fig. 2b. We generate SF networks of size N = 2 × 105 and power-law exponent γ = 3 following the configuration model44. It can be seen that the critical value of first-order transition becomes rather small for SF networks, due to the existence of highly connected hubs. Still, CI-TM algorithm outperforms other heuristic approaches by producing a larger active component Q(q) for a given fraction of seed q. Since most nodes have a quite small number of connections in SF networks, the cascade triggered by randomly selected seeds is limited to a local scale, even with a relatively large number of seeds, as shown by the grey line at the bottom of Fig. 2b. This implies, compared with homogeneous networks, the deviations of the optimized trajectories from typical ones are much more extreme in heterogeneous networks. Moreover, as SF networks become more heterogeneous with a smaller power-law exponent γ of the degree distribution, the minimal number of seeds required for global cascade decreases accordingly, as shown in the inset of Fig. 2b.

In applications, we are frequently faced with large-scale networks which exhibit more complicated topological characteristics than random graphs. Thus, it is more necessary and challenging to find a feasible strategy to efficiently approximate the optimal spreaders for those networks. Next, we explore CI-TM algorithm’s performance for real networks. We examine two representative datasets: Youtube friendship network (N = 3, 223, 589, M = 9, 375, 374, c = 0.00138, l = 5.29)45 and Internet autonomous system network (N = 1, 464, 020, M = 10, 863, 640, c = 0.00539, l = 5.04)46. Here N is the network size, M is the number of undirected links, c is the average clustering coefficient, and l is the average shortest path length. Youtube network represents the undirected friend relations between users in the famous video sharing website Youtube. The Internet network records the communications between routers in different autonomous systems. The links between routers are constructed from the Border Gateway Protocol logs in an interval of 785 days. This provides an example of infrastructure network on which malicious attack and failure propagation may occur. Both networks are treated as undirected in the analysis.

Figure 3a displays Q(q) for different numbers of seeds |S| for Youtube network. CI-TM is able to trigger the global cascade with a smaller group of seeds, whose size is quite small compared to the entire network due to the heavy-tailed degree distribution. We also discover that, in the setting of first-order transitions, some nodes with moderate numbers of connections play a crucial role in the collective influence of LTM. As shown in the inset figure, we present the percentage of influencers predicted by CI-TM that HDA and HD have identified, with the vertical dash line indicating CI-TM’s qc. At qc, HDA and HD locate nearly 80% overlapping seeds with CI-TM algorithm, most of which are tagged as hubs. However, due to the collective nature of LTM, seeding the set of privileged nodes in the non-interacting view does not guarantee the maximization of collective influence. The other proportion of spreaders with lower degree, although may be inefficient as single spreaders, are responsible for bridging the collective influence of hubs. With the help of both hubs and bridging low degree nodes, CI-TM can expand the collective influence with a smaller number of seeds. The Internet network also exhibits a similar phenomenon in Fig. 3b. In this case, HDA and HD can only find 80% influencers at the first-order transition of CI-TM algorithm, missing a substantial amount of nodes with lower degree but indispensable in integrating the collective influence of high-degree seeds.

(a), The relationship between the size of active giant component Q(q) and the number of seeds |S| for Youtube friendship network, calculated by different methods. Inset displays the percentage of influencers predicted by CI-TM that HDA and HD have identified.The vertical dash line indicates the critical point of CI-TM. (b), Same analysis for Internet network.

Although the performance of K-core can be improved by adaptive calculation in Fig. 2, for large-scale real networks, we do not display the result of KsA due to its O(N2) computational complexity and only show the curve of K-core. One cause for the unsatisfactory result of K-core is that it is not designed as a multiple spreaders finder since high K-core nodes tend to form densely connected clusters in the same shell, which prevents the expanding of information cascade.

In Methods, we further compare CI-TM algorithm with other methods, including Betweenness Centrality (BC)30, Closeness Centrality (CC)47 and Greedy Algorithm (GA)9. Results from ER and SF networks suggest that CI-TM algorithm also outperforms computationally expensive BC, CC and GA.

Analysis of subcritical paths

With the CI-TM algorithm, we present an analysis of subcritical paths in cascading process. In Fig. 4a, we first display the evolution of the number of subcritical paths during the sequential activation process based on CI-TM ranking. We run LTM model for t = 0.5 on an ER network with size N = 104 and average degree 〈k〉 = 6. Nodes are activated sequentially according to their ranks in CI-TM algorithm. At the time of each activation, the number of subcritical paths attached to the node is calculated. After the activation, nodes on the subcritical paths are activated automatically, as we did in the CI-TM algorithm. In Fig. 4a, the evolution of subcritical path number for CI-TM (L = 5, 10, 20) is displayed. For all L values, the number of subcritical paths peaks at the critical point, where the first-order transition occurs. In addition, as L increases, the peak time of CI-TM is slightly shifted forward, while the peak value increases dramatically. As large numbers of subcritical paths imply a heavy computational burden, the majority of computation is concentrated around the critical point. Therefore, if we want to optimize the influence before the discontinuous transition, which is common in real-world applications, CI-TM algorithm becomes much more efficient since it avoids counting extremely long subcritical paths near the critical point.

(a) Comparison of the number of subcritical paths attached to each node when it is activated sequentially according to CI-TM ranking. Results for CI-TM with L = 5, L = 10 and L = 20 are displayed. LTM for t = 0.5 runs on an ER network with size N = 104 and average degree 〈k〉 = 6. (b) Distribution of activation time in the global cascading for CI-TM (L = 5, 10, 20) and Random strategy at qc. Inset shows the length of subcritical paths for each node when activated in CI-TM ranking. The curve for Random seed selection is averaged over 1,000 independent LTM realizations.

We also examine the distribution of nodes’ activation time in a global cascading. In Fig. 4b, we report the distribution of activation time at the critical point for CI-TM (L = 5, 10, 20) and random selection of seeds. All the curves first decrease and then develop a second peak. Compared with the distribution of random selection, CI-TM has a much larger number of nodes getting activated at the second peak. More importantly, the increase of L in CI-TM algorithm will postpone the arrival of the second peak, which is similar to the previous finding on regular networks17. In CI-TM algorithm with a larger L, longer subcritical paths are allowed during the calculation, as shown in the inset of Fig. 4b. Considering the size of optimal seeds in Fig. 4a and the distribution of activation time in Fig. 4b, a smaller L in CI-TM algorithm can expedite the global cascading, but at the expense of a few more seeds.

Discussion

Identification of superspreaders in LTM has great practical implications in a wide range of dynamical processes. However, the complicated interactions among multiple spreaders prevent us from accurately locating the pivotal influencers in LTM. In this work, we propose a framework to analyze the collective influence of individuals in general LTM. By iteratively solving the linearized message passing equations, we decompose  into separate components, each of which corresponds to the contribution made by a single seed. Particularly, we find that the contribution of a seed is largely determined by its interplay with other nodes through subcritical paths. In order to maximize the active population, we develop a scalable CI-TM algorithm that is feasible for large-scale networks. Results show that the proposed CI-TM algorithm outperforms other ranking strategies in synthetic random graphs and real-world networks. Our CI-TM algorithm can be employed in relevant applications such as viral marketing and information spreading in big-data analysis.

into separate components, each of which corresponds to the contribution made by a single seed. Particularly, we find that the contribution of a seed is largely determined by its interplay with other nodes through subcritical paths. In order to maximize the active population, we develop a scalable CI-TM algorithm that is feasible for large-scale networks. Results show that the proposed CI-TM algorithm outperforms other ranking strategies in synthetic random graphs and real-world networks. Our CI-TM algorithm can be employed in relevant applications such as viral marketing and information spreading in big-data analysis.

Methods

Linearization of Gi→j

The conventional method to linearize the nonlinear function Gi→j(ν→) is Taylor expansion around the fixed point 0:  . However, for our specific function Gi→j, the gradient

. However, for our specific function Gi→j, the gradient  is constantly 0 according to Eq. (5). To avoid the degeneracy, other linear approximation method should be applied.

is constantly 0 according to Eq. (5). To avoid the degeneracy, other linear approximation method should be applied.

For a differentiable function  and x,

and x,  , the mean value theorem guarantees that there exists a real number c ∈ (0, 1) such that f(y) − f(x) = ∇f((1 − c)x + c y) ⋅ (y − x). Here ∇ is the gradient and ⋅ denotes the dot product. Set f = Gi→j, x = 0, and y = ν→, we have

, the mean value theorem guarantees that there exists a real number c ∈ (0, 1) such that f(y) − f(x) = ∇f((1 − c)x + c y) ⋅ (y − x). Here ∇ is the gradient and ⋅ denotes the dot product. Set f = Gi→j, x = 0, and y = ν→, we have  . Notice that, if we set c = 0, the above equation becomes the classical linear Taylor expansion:

. Notice that, if we set c = 0, the above equation becomes the classical linear Taylor expansion:  , where the approximation accuracy is O(|ν→|2) (| ⋅ | is the norm of vectors). In a network with N nodes and M undirected links, we define the norm as |ν→| ≡ ∑ijνi → j/2M (2M is the number of directed links) so that |ν→| is bounded below 1 for network size N → ∞.

, where the approximation accuracy is O(|ν→|2) (| ⋅ | is the norm of vectors). In a network with N nodes and M undirected links, we define the norm as |ν→| ≡ ∑ijνi → j/2M (2M is the number of directed links) so that |ν→| is bounded below 1 for network size N → ∞.

To deal with the degeneracy of  , we approximate Gi→j(ν→) by setting c = 1 for small ν→:

, we approximate Gi→j(ν→) by setting c = 1 for small ν→:  . The approximation error can be calculated by

. The approximation error can be calculated by  . Recall that

. Recall that  . In a finite-size network, for a small ν→ with elements |νi→j| ≤ 1, the gradient of each element

. In a finite-size network, for a small ν→ with elements |νi→j| ≤ 1, the gradient of each element  is bounded according to Eq. (5). For all 2M elements, there exists a uniform upper bound for all the gradients

is bounded according to Eq. (5). For all 2M elements, there exists a uniform upper bound for all the gradients  . Applying the mean value theorem to the differentiable function

. Applying the mean value theorem to the differentiable function  , there should be a constant L such that

, there should be a constant L such that  for all the elements of

for all the elements of  . Summing up all the elements in

. Summing up all the elements in  , we conclude that

, we conclude that  . Therefore, the approximation error

. Therefore, the approximation error  . This proves that the accuracy of the linear approximation is O(|ν→|2), which is same as the linear Taylor expansion.

. This proves that the accuracy of the linear approximation is O(|ν→|2), which is same as the linear Taylor expansion.

In the CI-TM algorithm, we only select one seed at each time step. Here we directly examine the accuracy of the linear approximation when LTM is initiated by one single seed. Specifically, we run LTM dynamics with threshold t = 0.5 from each node in a scale-free network (N = 5,000, M = 7753, γ = 3). For each of these realizations, we plot the realistic |ν→| value and its approximation in Fig. 5. As expected, the approximation is generally lower than the real value since loops are neglected. The correlation between real values and approximations is 0.9118, and a higher correlation 0.9437 is obtained in the logarithmic scale. Therefore, the linear approximation in each step of CI-TM algorithm is accurate. As more seeds are considered, the approximation accuracy will decrease gradually. The decreasing rate should be related to the number of short loops existing in the network. How the density of short loops affects the approximation accuracy will be further explored in future works.

More comparisons with competing methods

A growing number of methods aimed to rank nodes’ influence in networks have been proposed in previous studies. Here we introduce some of the most widely used competing methods and perform a thorough comparison with CI-TM algorithm.

High degree (HD)

Degree, defined as the number of connections attached to a node, is the most widely-used measure of influence29. In HD method, we rank nodes according to their degrees in a descending order, and sequentially select them as information sources. For HD method, the selected hubs intend to link with each other due to the assortative mixing property, making their influence areas overlap significantly. In this case, the selected seeds could rarely be optimal. High degree adaptive (HDA) is the adaptive version of HD method. After each removal, the degree of each node is recalculated. Such adaptive procedure can usually mitigate the overlapping and improve the performance of HD.

K-core (Ks)

Through a k-shell decomposition process, K-core method assigns each node a kS value to distinguish whether it locates in the core region or peripheral area32. In k-shell decomposition, nodes are iteratively removed from the network according to their current degrees. During the removal, all the nodes are classified into different k-shells. The K-core method selects nodes within high k-shells as the spreaders. In practice, single influential spreaders can be identified effectively by K-core ranking, which has been confirmed by both simulations and real-world data12,13,14. However, K-core ranking has the disadvantage of severe overlap of seeds’ influence areas, and therefore performs poorly for multiple node selection. This limitation can be alleviated with an adaptive scheme where we recompute the K-core after each removal. Since there exists many nodes in the same k-shell, we select the node with the largest degree to further distinguish nodes within the highest k-shell. Such K-core adaptive (KsA) method can effectively enhance the performance of K-core.

PageRank (PR)

PageRank is a popular ranking algorithm of webpages which was developed and used by search engine Google31. Over the years, PageRank has been adopted in many practical ranking problems. Generally speaking, PageRank measures a webpage’s stationary visiting probability by a random walker following the hyperlinks in the network. As a special case of eigenvector centralities, PageRank evaluates the score of a node by taking into account its neighbors’ scores. Even though such score-propagating mechanism works well for some purposes such as webpage ranking, an unfavorable consequence may be a heavy accumulation of scores near the high-degree nodes, specially for scale-free networks43.

Greedy algorithm (GA)

In GA, starting from an empty set of seeds, nodes with the maximal marginal gain are sequentially added to the seed set. Kempe et al. have proven that for a class of LTM with the attribute of submodularity, GA has a performance guarantee of 1 − 1/e ≈ 63%, which means it could achieve at least 63% of real maximal influence9. This result relies on the submodular property defined by a diminishing returns effect: the marginal gain from adding a node to the seed set S decreases with the size of S. It has been proven that several classes of LTM have submodular property, such as a random choice of thresholds. However, for LTM with a fixed threshold, it is not generally submodular. As a consequence, GA is not guaranteed to provide a such approximation of the optimal spreaders for general LTM. Furthermore, the greedy search of GA requires massive simulations, which makes GA unscalable and thus limits its application in large-scale social networks.

Betweenness centrality (BC)

BC quantifies the importance of node i in terms of the number of shortest paths cross through it30. Therefore, nodes with large BC usually occupy the pivotal positions in the shortest pathways connecting large numbers of nodes. In BC method, we select nodes with high BC scores as seeds. Although BC has been widely applied in social network analysis, its relatively high computational complexity makes BC prohibitive for large-scale networks. A typical BC algorithm takes O(MN) to calculate for a network with N nodes and M links48, which is still not applicable to modern social networks with millions of users.

Closeness centrality (CC)

Closeness centrality quantifies how close a node to other nodes in the network47. Formally, CC is defined as the reciprocal of the average shortest distance of a node to others in a network. Thus, nodes with high CC values tend to locate at the center of network clusters or communities. In CC method, we pick the seeds according to nodes’ CC scores. Same as BC, CC also requires the heavy task of calculating all possible shortest paths. Thus the high computational cost of CC makes it infeasible for large-scale networks.

Max-Sum (MS)

F. Altarelli et al. developed a Max-Sum algorithm aimed to find the initial configurations that maximized the final number of active nodes in threshold models. Precisely, the trajectory of nodes’ states is parametrized by a configuration t = {ti, 1 ≤ i ≤ N} where  is the activation time of node i (ti = ∞ if inactive). By mapping the optimization onto a constraint satisfaction problem, an energy-minimizing algorithm based on the cavity method of statistical physics is proposed. In the algorithm, a convolution process is employed to compute the Max-Sum updates. The technical details of the derivation and implementation of the MS algorithm can be found in ref. 18. In some cases MS algorithm does not converge, then a reinforcement strategy is implemented18. By imposing an external field slowly increasing over time with a growth rate r, the system is forced to converge to a higher energy, which increases with r. In addition, it requires O(r−1) iterations to reach convergence. For a node of degree k and threshold mi, each update takes

is the activation time of node i (ti = ∞ if inactive). By mapping the optimization onto a constraint satisfaction problem, an energy-minimizing algorithm based on the cavity method of statistical physics is proposed. In the algorithm, a convolution process is employed to compute the Max-Sum updates. The technical details of the derivation and implementation of the MS algorithm can be found in ref. 18. In some cases MS algorithm does not converge, then a reinforcement strategy is implemented18. By imposing an external field slowly increasing over time with a growth rate r, the system is forced to converge to a higher energy, which increases with r. In addition, it requires O(r−1) iterations to reach convergence. For a node of degree k and threshold mi, each update takes  operations18. Pre-computing the convolution can further save a factor of k − 1. Considering the updates of all N nodes for O(r−1) iterations, the overall complexity of MS is

operations18. Pre-computing the convolution can further save a factor of k − 1. Considering the updates of all N nodes for O(r−1) iterations, the overall complexity of MS is  . Therefore, the time complexity of MS depends on both the degree distribution of networks and the choice of threshold.

. Therefore, the time complexity of MS depends on both the degree distribution of networks and the choice of threshold.

In Fig. 6, we provide the thorough comparisons of different methods on ER and SF networks (N = 104), including computationally expensive methods GA, BC and CC. We set T = 40 and a reinforcement parameter r = 1 × 10−4 in MS algorithm. For both homogeneous and heterogeneous networks, CI-TM shows a larger active population for a given fraction of seed q compared with other heuristic ranking strategies. We display the scaling of run time of CI-TM algorithm for ER networks with 〈k〉 = 6 and threshold t = 0.5 as a function of N in the inset of Fig. 6a. CI-TM algorithm with L = 3 is feasible for networks with size up to N = O(108) within run time of O(105) seconds, which can be applied to the modern large-scale online social networks.

Performance of different methods for (a) ER network (N = 104, 〈k〉 = 6, t = 0.5) and (b) scale-free network (N = 104, γ = 3, t = 0.5). In addition to the methods we have examined in the main text, we also display the results of Greedy Algorithm (GA), Betweenness Centrality (BC), Closeness Centrality (CC) and Max-Sum (MS). We set the parameter T = 40 in MS and implement reinforcement with parameter r = 10−4. The inset displays the run time of CI-TM (L = 3) on ER networks (〈k〉 = 6, t = 0.5). The dashed line is power-law fitting.

Modified CI-TM algorithm

The CI-TM algorithm is essentially a greedy approach based on CI-TM values. The success of CI-TM algorithm depends on whether the currently selected seed has potentials to create more subcritical nodes that are helpful for the early formation of giant subcritical cluster. In LTM, there exists a special case of subcritical nodes with threshold m = 1, which is defined as vulnerable vertices in previous literature36. Different from general subcritical nodes, vulnerable vertices are naturally subcritical since they have threshold m = 1 and do not rely on the states of others. During the calculation, a node of degree k becomes vulnerable when its m − 1 neighbors are removed, leaving k − m + 1 links in the network. For ER networks with a low average degree, the limited number of remaining links of vulnerable vertices could only form fragmented clusters. In this case, CI-TM would bias to nodes connected to large numbers of small vulnerable clusters, such as a peripheral hub linked to numerous leaf nodes. Unfortunately, the activation of such small clusters provides little help to the formation of giant subcritical cluster. Because the scattered vulnerable clusters have very few links connected to existing non-subcritical nodes, their activations are not effective in producing subsequent subcritical nodes. Moreover, once global cascade appears, these fragmented vulnerable clusters will be activated subsequently, without additional seeds. In this case, we heuristically propose a modified CI-TM (CI-TMm) algorithm by excluding vulnerable nodes in the calculation of CI-TM value. The performance of CI-TMm algorithm is displayed in Fig. 7 for ER networks with an average degree 〈k〉 = 4. The critical value qc for CI-TMm is advanced compared to CI-TM algorithm. However, before the first-order transition, CI-TMm cannot optimize the spreading and has substantially lower Q(q) than CI-TM. We should note that, CI-TMm presents a lower qc only in the situation of fragmented vulnerable clusters. For ER networks with higher average degrees (e.g., 〈k〉 = 6) where relatively large vulnerable clusters emerge, CI-TM still predicts earlier first-order transition.

For ER networks (N = 2 × 105, 〈k〉 = 4, t = 0.5), the performance of CI-TMm surpasses CI-TM by excluding the fragmented vulnerable clusters in the adaptive calculation. Although Q(q) for CI-TMm is lower at first, it exceeds other methods near the critical point and achieves the earliest first-order transition.

Additional Information

How to cite this article: Pei, S. et al. Efficient collective influence maximization in cascading processes with first-order transitions. Sci. Rep. 7, 45240; doi: 10.1038/srep45240 (2017).

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

Buldyrev, S. V., Parshani, R., Paul, G., Stanley, H. E. & Havlin, S. Catastrophic cascade of failures in interdependent networks. Nature 464, 1025–1028 (2010).

Watts, D. J. & Dodds, P. S. Influentials, networks, and public opinion formation. J. Consum. Res. 34, 441–458 (2007).

Rogers, E. M. Diffusion of Innovation(Free Press, New York, 1995).

Reis, S. D. et al. Avoiding catastrophic failure in correlated networks of networks. Nature Phys. 10, 762–767 (2014).

Kleinberg, J. Algorithmic Game Theory (Cascading Behavior in Networks: Algorithmic and Economic Issues)chapter 24, 613–632 (Cambridge University Press, Cambridge, 2007).

Domingos, P. & Richardson, M. Mining the network value of customers. In Proc. 7th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining 57–66 (ACM, 2001).

Valente, T. W. & Davis, R. L. Accelerating the diffusion of innovations using opinion leaders. Ann. Am. Acad. Polit. Soc. Sci. 556, 55–67 (1999).

Galeotti, A. & Goyal, S. Influencing the influencers: a theory of strategic diffusion. The RAND J. Econ. 40, 509–532 (2009).

Kempe, D., Kleinberg, J. & Tardos, É. Maximizing the spread of influence through a social network. In Proc. 9th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining. 137–146 (ACM, 2003).

Leskovec, J., Krause, A., Guestrin, C., Faloutsos, C., VanBriesen, J. & Glance, N. Cost-effective outbreak detection in networks. In Proc. 13th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining. 420–429 (ACM, 2007).

Chen, W., Wang, Y. & Yang, S. Efficient influence maximization in social networks. In Proc. 15th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining. 199–208 (ACM, 2009).

Kitsak, M. et al. Identification of influential spreaders in complex networks. Nature Phys. 6, 888–893 (2010).

Pei, S. & Makse, H. A. Spreading dynamics in complex networks. J. Stat. Mech. 12, P12002 (2013).

Pei, S., Muchnik, L., Andrade Jr, J. S., Zheng, Z. & Makse, H. A. Searching for superspreaders of information in real-world social media. Sci. Rep. 4, 5547 (2014).

Morone, F. & Makse, H. A. Influence maximization in complex networks through optimal percolation. Nature 524, 65–68 (2015).

Morone, F., Min, B., Bo, L., Mari, R. & Makse, H. A. Collective Influence Algorithm to find influencers via optimal percolation in massively large social media. Sci. Rep. 6, 30062 (2016).

Altarelli, F., Braunstein, A., Dall’Asta, L. & Zecchina, R. Large deviations of cascade processes on graphs. Phys. Rev. E 87, 062115 (2013).

Altarelli, F., Braunstein, A., Dall’Asta, L. & Zecchina, R. Optimizing spread dynamics on graphs by message passing. J. Stat. Mech. 9, P09011 (2013).

Guggiola, A. & Semerjian, G. Minimal contagious sets in random regular graphs. J. Stat. Phys. 158, 300–358 (2015).

Mugisha, S. & Zhou, H. J. Identifying optimal targets of network attack by belief propagation. Phys. Rev. E 94, 012305 (2016).

Braunstein, A., Dall’Asta, L., Semerjian, G. & Zdeborová, L. Network dismantling. Proc. Natl. Acad. Sci. USA 113, 12368–12373 (2016).

Teng, X., Pei, S., Morone, F. & Makse, H. A. Collective influence of multiple spreaders evaluated by tracing real information flow in large-scale social networks. Sci. Rep. 6, 36043 (2016).

Pei, S., Muchnik, L., Tang, S., Zheng, Z. & Makse, H. A. Exploring the complex pattern of information spreading in online blog communities. PLoS ONE 10, e0126894 (2015).

Radicchi, F. & Castellano, C. Leveraging percolation theory to single out influential spreaders in networks. Phys. Rev. E 93, 062314 (2016).

Hu, Y., Ji, S., Feng, L. & Jin, Y. Quantify and maximise global viral influence through local network information. arXiv preprint arXiv:1509.03484 (2015).

Lawyer, G. Understanding the influence of all nodes in a network. Sci. Rep. 5, 8665 (2015).

Quax, R., Apolloni, A. & Sloot, P. M. The diminishing role of hubs in dynamical processes on complex networks. J. R. Soc. Interface 10, 20130568 (2013).

Tang, S., Teng, X., Pei, S., Yan, S. & Zheng, Z. Identification of highly susceptible individuals in complex networks. Physica A 432, 363–372 (2015).

Albert, R., Jeong, H. & Barabási, A. L. Error and attack tolerance of complex networks. Nature 406, 378–382 (2000).

Freeman, L. C. Centrality in social networks conceptual clarification. Soc. Netw. 1, 215–239 (1978).

Brin, S. & Page, L. Reprint of: The anatomy of a large-scale hypertextual web search engine. Computer networks 56, 3825–3833 (2012).

Seidman, S. B. Network structure and minimum degree. Soc. Netw. 5, 269–287 (1983).

Granovetter, M. Threshold models of collective behavior. Am. J. Sociol. 83, 1420–1443 (1978).

Schelling, T. C. Micromotives and macrobehavior(Norton, New York, 1978).

Valente, T. W. Network Models of the Diffusion of Innovations(Hampton Press, Cresskill, NJ, 1995).

Watts, D. J. A simple model of global cascades on random networks. Proc. Natl. Acad. Sci. USA 99, 5766–5771 (2002).

Baxter, G. J., Dorogovtsev, S. N., Goltsev, A. V. & Mendes, J. F. F. Bootstrap percolation on complex networks. Phys. Rev. E 82, 011103 (2010).

Goltsev, A. V., Dorogovtsev, S. N. & Mendes, J. F. F. k-core (bootstrap) percolation on complex networks: Critical phenomena and nonlocal effects. Phys. Rev. E 73, 056101 (2006).

Dorogovtsev, S. N., Goltsev, A. V. & Mendes, J. F. F. k-core architecture and k-core percolation on complex networks. Physica D 224, 7–19 (2006).

Schwarz, J. M., Liu, A. J. & Chayes, L. Q. The onset of jamming as the sudden emergence of an infinite k-core cluster. Europhys. Lett. 73, 560 (2006).

Melnik, S., Hackett, A., Porter, M. A., Mucha, P. J. & Gleeson, J. P. The unreasonable effectiveness of tree-based theory for networks with clustering. Phys. Rev. E 83, 036112 (2011).

Hashimoto, K. Zeta functions of finite graphs and representations of p-adic groups. Adv. Stud. Pure Math. 15, 211 (1989).

Martin, T., Zhang, X. & Newman, M. E. J. Localization and centrality in networks. Phys. Rev. E 90, 052808 (2014).

Molloy, M. & Reed, B. A critical point for random graphs with a given degree sequence. Random Structures & Algorithms 6, 161–180 (1995).

Mislove, A. Online Social Networks: Measurement, Analysis, and Applications to Distributed Information Systems. PhD thesis, Rice University (2009).

Leskovec, J., Kleinberg, J. & Faloutsos, C. Graphs over time: densification laws, shrinking diameters and possible explanations. In Proc. 11th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining. 177–187 (ACM, 2005).

Bavelas, A. Communication patterns in tasks oriented groups. J. Acoust. Soc. Am. 22, 725–730 (1950).

Brandes, U. A faster algorithm for betweenness centrality. J. Math. Sociol. 25, 163–177 (2001).

Acknowledgements

This work was supported by NIH-NIBIB 1R01EB022720, NIH-NCI U54CA137788/ U54CA132378, NSF-PoLS PHY-1305476, NSF-IIS 1515022, and ARL Cooperative Agreement Number W911NF-09-2-0053, the ARL Network Science CTA (to H.A.M.), as well as US NIH grant GM110748 and the Defense Threat Reduction Agency contract HDTRA1-15-C-0018 (to J.S.).

Author information

Authors and Affiliations

Contributions

S.P., X.T, J.S., F.M. and H.A.M. designed research, performed study, analyzed data, and wrote the paper. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Pei, S., Teng, X., Shaman, J. et al. Efficient collective influence maximization in cascading processes with first-order transitions. Sci Rep 7, 45240 (2017). https://doi.org/10.1038/srep45240

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/srep45240

This article is cited by

-

Leveraging neighborhood and path information for influential spreaders recognition in complex networks

Journal of Intelligent Information Systems (2023)

-

Hierarchical Cycle-Tree Packing Model for Optimal K-Core Attack

Journal of Statistical Physics (2023)

-

Multi-source detection based on neighborhood entropy in social networks

Scientific Reports (2022)

-

Cycle-tree guided attack of random K-core: Spin glass model and efficient message-passing algorithm

Science China Physics, Mechanics & Astronomy (2022)

-

Social network node influence maximization method combined with degree discount and local node optimization

Social Network Analysis and Mining (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.