Abstract

Bayesian inference provides a principled way of estimating the parameters of a stochastic process that is observed discretely in time. The overdamped Brownian motion of a particle confined in an optical trap is generally modelled by the Ornstein-Uhlenbeck process and can be observed directly in experiment. Here we present Bayesian methods for inferring the parameters of this process, the trap stiffness and the particle diffusion coefficient, that use exact likelihoods and sufficient statistics to arrive at simple expressions for the maximum a posteriori estimates. This obviates the need for Monte Carlo sampling and yields methods that are both fast and accurate. We apply these to experimental data and demonstrate their advantage over commonly used non-Bayesian fitting methods.

Similar content being viewed by others

Introduction

Since the seminal contributions of Rayleigh, Einstein, Smoluchowski, Langevin and others1, stochastic processes have been used to model physical phenomena in which fluctuations play an essential role. Examples include the Brownian motion of a particle, the fluctuation of current in a resistor, and the radioactive decay of subatomic particles2. A central problem is to infer the parameters of the process from partially observed sample paths, for instance, the diffusion constant from a time series of positions, or the resistance from a time series of current measurements, and so on. Bayesian inference provides a principled solution to this inverse problem3, making optimal use of the information contained in the partially observed sample path4.

The motion of a Brownian particle harmonically trapped in optical tweezers in a volume of a viscous fluid far away from walls is usually modelled by the Ornstein-Uhlenbeck stochastic process2,5. The stiffness k of the harmonic potential, the friction γ of the particle, and the temperature kBT of the fluid are the three parameters of the stochastic dynamics. For spherical particles Stokes’ law γ = 6πηa relates the friction to the particle radius a and the fluid viscosity η, while the Einstein relation6, which holds generally, relates the particle diffusion coefficient D to the temperature and friction through D = kBTγ−1. Of these several physical parameters, any two may be chosen independently, and it is conventional to choose the ratio k/γ and D to be independent as they relate, respectively, to the mean regression rate λ and the volatility σ of the Ornstein-Uhlenbeck process (see below).

Reliable estimation of the stiffness is a necessary first step in using tweezers for force measurements. An estimation of the friction, for a particle of known size, provides an indirect measure of the viscosity of the medium. This microscopic method of viscometry is of great utility when sample volumes are in the nanoliter range and conventional viscometric methods cannot be used. Conversely, an estimate of the friction in a fluid of known viscosity provides a method for estimating the particle size. In both these cases, an estimate of the diffusion coefficient provides, by virtue of the Einstein relation, identical information.

Extant protocols for estimating these parameters from discrete observations of the position of the Brownian particle can be divided into “fluctuation” and “response” categories. In the fluctuational methods, the fluctuating position of the particle is recorded and the known forms of the static and dynamic correlation functions are fitted to the data. In response methods, external perturbations are applied to the particle and the known forms of the average response is fitted to the data. Considerable care is needed in these fitting procedures to obtain reliable estimates7.

In recent work8, Bayesian inference has been applied to the optical tweezer parameter estimation problem. The posterior probability distribution of the stiffness and diffusion coefficient is estimated for a time series of positions, making it a method of the “fluctuation” category. Monte Carlo sampling is needed to compute the posterior distribution and estimation from a time series of 10,000 points requires few tens of seconds. The advantages of the Bayesian method over conventional calibration methods have been discussed at length in this work.

In this paper, we present two Bayesian methods, of the fluctuational category, which do not require Monte Carlo sampling and, consequently, are extremely fast. For example, they estimate the trap stiffness and diffusion coefficient from time series containing a million points in less than a millisecond. The first method extracts information exploiting the Markov property of the sample path and jointly estimates the mean regression rate k/γ and the diffusion coefficient D. The second method extracts information from the equal-time fluctuations of the position, which, in equilibrium, cannot depend on the friction coefficient, and is, then, a function of the stiffness k alone. In essence, this is a recasting of the “equipartition” method in the language of Bayesian inference.

The first method, in addition to inheriting the generic advantages of Bayesian inference that have already been pointed out in ref. 8, has several specific advantages. First, it uses the exact expression for the likelihood, which is valid for any Δt, the interval at which the position is observed. Therefore, it works reliably with data acquired at low frequencies. Second, the exact likelihood is expressed in terms of four sufficient statistics, which are quadratic functions of the positions. Their use greatly reduces the computation needed to evaluate the posterior distribution, as four numbers, rather than a large time series now represents the entire information relevant to inference. Finally, we are able to obtain exact maximum a posteriori (MAP) estimates of the mean regression and diffusion coefficients, and their error bars, in terms of the four sufficient statistics. This obviates the need for the Monte Carlo sampling or numerical minimization steps usually required in Bayesian inference. Bayesian credible regions are easily calculated from the analytically obtained error bars. The second method is different from the conventional equipartition method in that it provides a Bayesian error bar, representing a Bayesian credible interval, rather than a frequentist confidence interval9. The combined use of exact likelihoods, sufficient statistics and analytical MAP estimates yields both speed and accuracy in parameter estimation.

We apply both methods to experimental data and obtain MAP estimates and error bars that are in excellent agreement with each other. These estimates are found to be in good agreement with the commonly used power spectral density (PSD) calibration method7. The Bayesian methods of this paper are implemented in a well-documented, open-source software freely available on GitHub10.

The remainder of the paper is organized as follows. In the next section we recall several key properties of the sample paths and distributions of the Ornstein-Uhlenbeck process. We, then, present the Bayesian methods and describe the experimental setup. The Bayesian procedures are then applied to the experimental data. We conclude with a discussion of future directions in the application of Bayesian inference to optical tweezer experiments and advocate its use as a complement to standard non-Bayesian methods.

Ornstein-Uhlenbeck process

The Langevin equation for a Brownian particle confined in a potential U is given by

where ξ(t) is a zero-mean Gaussian white noise with variance 〈ξ(t)ξ(t′)〉 = 2kBTγδ(t − t′) as required by the fluctuation-dissipation theorem. In the limit of vanishing inertia and a harmonic potential,  , we obtain the overdamped Langevin equation

, we obtain the overdamped Langevin equation

where ζ(t) is now a zero-mean Gaussian white noise with unit variance. This describes the Ornstein-Uhlenbeck process, whose sample paths obey the Ito stochastic differential equation

where λ is the mean-regression rate, σ is the volatility and W(t) is the Wiener process5.

For Brownian motion, the mean regression rate λ = k/γ is the ratio of the stiffness and the friction while the square of the volatility σ2 = 2D is twice the diffusion coefficient D. The latter follows by comparing the Langevin and Ito forms of the path equation and recalling the Einstein relation D = kBTγ−1 between the diffusion and friction coefficients of a Brownian particle. In problems involving Brownian motion, it is convenient to work with the diffusion coefficient, rather than the volatility.

The ratio of λ and D provides the stiffness

in units of kBT. We note that, in general, there is no relation between the mean regression rate and volatility of the Ornstein-Uhlenbeck process and the preceding identity is a consequence of additional physical constraints, namely the fluctuation-dissipation and Einstein relations6.

The transition probability density P1|1(x′t′|xt), the probability of a displacement from x at time t to x′ at time t′, obeys the Fokker-Planck equation  , where the Fokker-Planck operator is

, where the Fokker-Planck operator is

The solution is a normal distribution,

where  is the univariate normal distribution with mean a and variance b. This solution is exact and holds for arbitrary values of |t − t′|. The correlation function decays exponentially,

is the univariate normal distribution with mean a and variance b. This solution is exact and holds for arbitrary values of |t − t′|. The correlation function decays exponentially,

a property guaranteed by Doob’s theorem for any Gauss-Markov process2. The Fourier transform of the correlation function gives the power spectral density

which is Lorentzian in the angular frequency ω. The corner frequency fc = λ/2π is proportional to the mean regression rate.

The stationary distribution P1(x) obeys the steady state Fokker-Plank equation  and the solution is, again, a normal distribution,

and the solution is, again, a normal distribution,

Comparing the forms of P1|1 and P1 it is clear that former tends to the latter for |t − t′| → ∞, as it should.

The transition probability density yields the Bayesian method for jointly estimating λ and D (and hence k), while the stationary distribution yields the Bayesian method for directly estimating k alone. We now describe these two methods.

Bayesian inference

Consider, now, the time series X ≡ (x1, x2, …, xN) consisting of observations of the sample path x(t) at the discrete times t = nΔt with n = 1, …, N. Then, using the Markov property of the Ornstein-Uhlenbeck process, the probability of the sample path is given by11

The probability P(λ, D|X) of the parameters, given the sample path, can now be computed using Bayes theorem, as

The denominator P(X) is an unimportant normalization, independent of the parameters, that we henceforth ignore. Since both k and γ must be positive, for stability and positivity of entropy production respectively, we use informative priors for λ and D, P(λ, D) = H(λ)H(D), where H is the Heaviside step function. This assigns zero probability weight for negative values of the parameters and equal probability weight for all positive values. The logarithm of the posterior probability, after using the explicit forms of P1|1 and P1, is

where we have defined the two quantities

The maximum a posteriori (MAP) estimate (λ*, D*) solves the stationary conditions ∂lnP(λ, D|X)/∂λ = 0 and ∂lnP(λ, D|X)/∂D = 0, while the error bars of this estimate are obtained from the Hessian matrix of second derivatives evaluated at the maximum3,12,13. The analytical solution of the stationary conditions, derived in the Supplementary Information, yields the MAP estimate to be

where both I2 and Δn are now evaluated at λ = λ* and the sum runs from n = 1, …, N − 1. These provide direct estimates of the parameters without the need for fitting, minimization, or Monte Carlo sampling.

The error bars are obtained from a Taylor expansion of the log posterior to quadratic order about the MAP value,

where Δλ = λ − λ* and ΔD = D − D* and ∑−1 is the matrix of second derivatives of the log posterior evaluated at the maximum. The elements  ,

,  ,

,  of the covariance matrix ∑ are the Bayesian error bars; they determine the size and shape of the Bayesian credible region around the maximum13. Their unwieldy expressions are provided in the Appendix and are made use of when computing credible regions around the MAP estimates. We refer to this Bayesian estimation procedure as “Bayes I” below.

of the covariance matrix ∑ are the Bayesian error bars; they determine the size and shape of the Bayesian credible region around the maximum13. Their unwieldy expressions are provided in the Appendix and are made use of when computing credible regions around the MAP estimates. We refer to this Bayesian estimation procedure as “Bayes I” below.

A second Bayesian procedure for directly estimating the trap stiffness results when X is interpreted not as a time series but as an exchangeable sequence, or, in physical terms, as repeated independent observations of the stationary distribution P1(x)12. In that case, the posterior probability, assuming an informative prior that constrains k to positive values, is

The MAP estimate and its error bar follow straightforwardly from the posterior distribution as

and, not unexpectedly, the standard error decreases as the number of observations increases. This procedure is independent of Δt and is equivalent to the equipartition method when the Heaviside prior is used for k. We refer to this procedure as “Bayes II” below.

The posterior probabilities in both methods can be written in terms of four functions of the data

which, therefore, are the sufficient statistics of the problem. The entire information in the time series X relevant to estimation is contained in these four statistics12. Their use reduces computational expense greatly, as only four numbers, rather than the entire time series, is needed for evaluating the posterior distributions.

The posterior distributions obtained above are for flat priors. Other choice of priors are possible. In particular, since both D and k are scale parameters a non-informative Jeffreys prior is appropriate3. Jeffreys has observed, however, that “An accurate statement of the prior probability is not necessary in a pure problem of estimation when the number of observations is large”3. The number of observations are in the tens of thousands in time series we study here and the posterior is dominated by the likelihoood rather than the prior. The prior, then, has an insignificant contribution to the posterior.

We note that the error bars obtained in both Bayes I and Bayes II refer to Bayesian credible intervals, which are relevant to the uncertainty in the parameter estimates, given the data set X. In contrast, conventional error bars refer to frequentist confidence intervals, which are relevant to the outcomes of hypothetical repetitions of measurement. In general, Bayesian credible intervals and frequentist confidence intervals are not identical and should not be compared as they provide answers to separate questions9.

A comparison of the estimates for the trap stiffness obtained from these independent procedures provides a check on the validity of the Ornstein-Uhlenbeck process as a data model. Any significant disagreement between the estimates from the two methods signals a breakdown of the applicability of the model and the assumptions implicit in it: overdamped dynamics, constant friction, harmonicity of the potential, and thermal equilibrium. This completes our description of the Bayesian procedures for estimating λ, D, and k.

Experimental setup and data acquisition

We collect position fluctuation data of an optically trapped Brownian particle using a standard optical tweezers setup that is described in detail in ref. 14. Here we provide a brief overview. The optical tweezers system is constructed around a Zeiss inverted microscope (Axiovert.A1) with a 100x 1.4 numerical aperture (NA) objective lens tightly focusing laser light at 1064 nm from a semiconductor laser (Lasever, maximum power 500 mW) into the sample. The back aperture of the objective is slightly overfilled to maximize the trapping intensity. The sample consists of a dilute suspension (volume fraction ϕ = 0.01) of polystyrene spheres of diameter 3 μm in 10% NaCl-water solution, around 20 μl of which is pipetted on a standard glass cover slip. The total power available at the trapping plane is around 15 mW. A single particle is trapped at an axial distance greater than several times its radius to avoid any wall-effects in the effective drag force due to the water, and it’s motion is observed by back-focal plane interferometry using the back-scattered intensity of a detection laser at 671 nm that co-propagates with the trapping laser. The detection laser power is maintained at much lower levels than that required to trap a particle. The back-scattered signal from the trapped particle is measured using a balanced detection scheme, schematically illustrated in Fig. 1. The back-scattered light beam is incident on an edge mirror which divides it equally into two halves that are focused using two lenses of equal focal length on photodiodes PD1 and PD2 (Thorlabs PDA100A-EC Si-photodiodes of bandwidth 2.4 MHz). The voltage outputs A and B, of PD1 and PD2 respectively, are then combined as (A − B)/(A + B) to give the normalized value of the x coordinate of motion at any instant of time. The advantage of such balanced detection is that the intensity fluctuations of the laser are present in both beams simultaneously and are thus canceled out when the difference is taken. Note that the direction of the edge mirror decides whether the x or y coordinate of motion is being measured. The mirror is rotated by 90 degrees to select between the coordinates. The fast response of the photodiodes, with a rise time of 50 ns at highest gain, ensures that spurious correlations are kept to a minimum and the data filtering necessary with slower commercial quadrant photodetectors is avoided entirely. The data from the photodiodes is logged into a computer using a National Instruments DAQ system and Labview at sampling rates between 2–5 kHz. For calibrating the motion, i.e. converting the voltage into physical distance which is necessary for measuring the diffusion constant, we employ an acousto-optic modulator that is placed in the back-focal plane of the microscope objective and scan the trapped bead by distances which are determined from the pixel calibration of images taken by the camera attached to the microscope14. The balanced detection output is simultaneously measured to yield the voltage-distance calibration of the detection system. The detection signal amplitude for Brownian motion data for 3 μm diameter spheres in water is around 1.5 V/μm and the noise floor is around 5 mV, which implies that we have a sensitivity of around 7 nm (considering signal/noise = 2) for this case. However, since scattering from spheres depends on diameter (generally increasing with diameter) as well as the refractive index of the ambient medium, this value changes when we change spheres or the medium. Typically, the particle localization is within 2–7 nm in our experiments. For the viscosity measurement, we add glycerol to water in fixed proportions to create 5 samples of different viscosity. The viscosity of each sample is then measured by a commercial rheometer (Brookfield DB3TLVCJ0) to match with the experimental results. The voltage-distance calibration is performed every time we change the particle or the ambient medium.

We note that the measured data is a result of a transformation by the detection apparatus of the physical sample paths. The Bayesian modeling of the detection apparatus and the transformations it induces on the physical sample paths is not pursued here. Therefore, we have a problem of pure estimation and there is no attempt to compare between alternative models of the data generation process.

Results and Discussions

We now present our results. In Fig. (2) we show a typical sample path of one component of motion in the plane of the trap, together with its histogram, autocorrelation function and spectral density. The histogram shows that the distribution of positions is stationary and very well-approximated by a Gaussian. The variance 〈x2〉 is used in the conventional “equipartition” method to estimate the spring constant k, while the fitting of the autocorrelation to the exponential in Eq. (7) or of the spectral density to the Lorentzian in Eq. (8) is used to estimate the spring constant when the friction constant is given. For estimation of the stiffness from the PSD, we employ the procedures suggested in ref. 7, including “blocking” the data with a bin size of 100 points, and setting the frequency range for fitting in order to avoid systematic errors due to reliability issues at both low and high frequencies7. However, there exist issues in estimating stiffness from both the equipartition method - where the presence of any additive noise leads to an increase in the variance that leads to over-estimation of the trap stiffness, and the PSD - where the standard systematics related to fitting can be minimized at best but not removed.

Panel (a) shows discrete observations of one coordinate of the sample path, (b) the histogram of the position coordinate, (c) the autocorrelation function and (d) is the spectral density. Panel (e) and (f) are the spectral density for 5 and 10 μm diameter polystyrene spheres. The fits of λ from the both the autocorrelation and the spectral density depend, respectively, on the number of lags and the number of frequencies used. The guidelines in ref. 7 and 18 are followed in obtaining the fits.

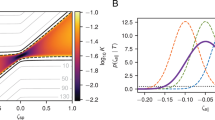

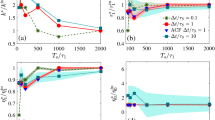

The results of Bayesian inference are shown in Fig. (3). In the top panel we show filled contours of the posterior distribution in the λ − D plane, together with contours of equal probability, for the “Bayes I” method. There is a single maximum at (λ*, D*) whose numerically computed value is in excellent agreement with the analytical MAP estimates of Eq. (12). In the bottom panel we show the Bayesian posterior distribution for the stiffness for the “Bayes II” method. There is remarkably good agreement between the two Bayesian estimates and the fit to the power spectral density as shown in Fig. (4) and Table. (1). This consistency between three conceptually and procedurally independent methods is evidence for the appropriateness of the data model. The agreement with the spectral density method, shown in the third column of the table, is within 2–3% in all cases, other than the first case where the inherent low stiffness of the trap due to low trapping power led to larger systematics due to the increased influence of the ambient low frequency noise, as we shall discuss later. The typical length of our time series is N ∼ 30000 and this gives a Bayesian error bar that is less than  % of the mean. These are well below the systematic errors and the approximately 1.5–2% variability of the estimates obtained from the fitting procedure. The 1σ standard errors in the mean are indicated in parenthesis next to each k value in Table. (1). Note that for inference of the absolute values of k and D*, we have used a temperature of 300 K which is the same as the lab environment temperature - this assumption being based on studies in literature15 where the effects of laser heating in water has shown to be well below 1 K at the power levels we employ in the trap. However, the fact that the results from Bayes I and Bayes II are extremely close to each other demonstrates that our estimate of temperature is trustworthy. Next, in order to determine the robustness of our experimental techniques, we perform experiments on two other sizes of polystyrene spheres - of diameter 5 and 10 μm, respectively. Representative values of measured stiffness and diffusion constant at a laser power of 43.8 mW are shown in Table 2. It is clear that the remarkable consistency in the values of k and D* given by the Bayes I and II methods are preserved, as is the close agreement with the values obtained from the PSD analysis. Note that the stiffness values are not really related to bead size as is well known in literature - the dependence of k on bead diameter being rather non-linear16. Our next set of measurements are directed towards determining the extent of systematic errors in our measurements. Systematic errors in our experimental apparatus may arise due to various issues including slow drifts of the laser power, beam pointing of the laser, drifts in ambient temperature, coupling with ambient low frequency (typically acoustic) noise sources, and possibly other unidentified reasons. The coupling with ambient low frequency noise sources would manifest themselves at low trapping stiffness, where sudden perturbations could affect the Brownian motion of the bead since the restoring force is less. On the other hand, the affect of slow drifts of experimental parameters would be observed at time series data of longer length. Thus, we attempt to understand the effect of systematics in our experiments using two different approaches: a) by comparing the mean and standard deviation of measured trapping parameters over 3 sets of independent time series data collected at low and high trapping laser powers for the same particle (diameter 3 μm), and b) by comparing the trapping parameters measured on time series data of different length obtained at the same laser power for the same particle. The results are shown in Table 3(a) and (b). In Table 3(a), we observe that at a low laser power of 18.5 mW, we have a standard deviation of around 6.5% in k and 4.5% in D*, while at the higher power of 43.8 mW, the standard deviation is only around 1.5% for both k and D*. This demonstrates that the effect of ambient noise does increase at lower trapping powers. In Table 3(b), we show the results of measurements of k and D* for time series data of different lengths for the same particle trapped at a laser power of 48 mW (we choose a high laser power since the coupling with the ambient noise is lesser in that case). It is clear that for time series of lengths 5, 10, and 20 s, the variation in the mean of both k and D* is only around 1.5%, but there is a large change in the mean values between 7–24% for time series data of 40 and 60 s. In addition, we check that the estimates of k and D* over different non-overlapping segments of up to 20 s length in a single data set are within the Bayesian error bars for all the time series; however, for 40 and 60 s, the mean values of different non-overlapping sets of 20 s data differ from each other significantly. Thus, it is clear that systematics due to slow drifts in different experimental apparatus occur at time scales longer than 20 s. Note that we perform our experiments on an optical table with active vibration isolation, so that there is no coupling with ambient vibrations, whereas vibrations from the table itself are at much higher frequencies than our region of interest due to the large table mass and are also damped out very fast by the presence of active dampers (we also take care not to place vibrating objects such as power supplies, etc on the table).

% of the mean. These are well below the systematic errors and the approximately 1.5–2% variability of the estimates obtained from the fitting procedure. The 1σ standard errors in the mean are indicated in parenthesis next to each k value in Table. (1). Note that for inference of the absolute values of k and D*, we have used a temperature of 300 K which is the same as the lab environment temperature - this assumption being based on studies in literature15 where the effects of laser heating in water has shown to be well below 1 K at the power levels we employ in the trap. However, the fact that the results from Bayes I and Bayes II are extremely close to each other demonstrates that our estimate of temperature is trustworthy. Next, in order to determine the robustness of our experimental techniques, we perform experiments on two other sizes of polystyrene spheres - of diameter 5 and 10 μm, respectively. Representative values of measured stiffness and diffusion constant at a laser power of 43.8 mW are shown in Table 2. It is clear that the remarkable consistency in the values of k and D* given by the Bayes I and II methods are preserved, as is the close agreement with the values obtained from the PSD analysis. Note that the stiffness values are not really related to bead size as is well known in literature - the dependence of k on bead diameter being rather non-linear16. Our next set of measurements are directed towards determining the extent of systematic errors in our measurements. Systematic errors in our experimental apparatus may arise due to various issues including slow drifts of the laser power, beam pointing of the laser, drifts in ambient temperature, coupling with ambient low frequency (typically acoustic) noise sources, and possibly other unidentified reasons. The coupling with ambient low frequency noise sources would manifest themselves at low trapping stiffness, where sudden perturbations could affect the Brownian motion of the bead since the restoring force is less. On the other hand, the affect of slow drifts of experimental parameters would be observed at time series data of longer length. Thus, we attempt to understand the effect of systematics in our experiments using two different approaches: a) by comparing the mean and standard deviation of measured trapping parameters over 3 sets of independent time series data collected at low and high trapping laser powers for the same particle (diameter 3 μm), and b) by comparing the trapping parameters measured on time series data of different length obtained at the same laser power for the same particle. The results are shown in Table 3(a) and (b). In Table 3(a), we observe that at a low laser power of 18.5 mW, we have a standard deviation of around 6.5% in k and 4.5% in D*, while at the higher power of 43.8 mW, the standard deviation is only around 1.5% for both k and D*. This demonstrates that the effect of ambient noise does increase at lower trapping powers. In Table 3(b), we show the results of measurements of k and D* for time series data of different lengths for the same particle trapped at a laser power of 48 mW (we choose a high laser power since the coupling with the ambient noise is lesser in that case). It is clear that for time series of lengths 5, 10, and 20 s, the variation in the mean of both k and D* is only around 1.5%, but there is a large change in the mean values between 7–24% for time series data of 40 and 60 s. In addition, we check that the estimates of k and D* over different non-overlapping segments of up to 20 s length in a single data set are within the Bayesian error bars for all the time series; however, for 40 and 60 s, the mean values of different non-overlapping sets of 20 s data differ from each other significantly. Thus, it is clear that systematics due to slow drifts in different experimental apparatus occur at time scales longer than 20 s. Note that we perform our experiments on an optical table with active vibration isolation, so that there is no coupling with ambient vibrations, whereas vibrations from the table itself are at much higher frequencies than our region of interest due to the large table mass and are also damped out very fast by the presence of active dampers (we also take care not to place vibrating objects such as power supplies, etc on the table).

The left panel shows filled contours of Eq. (11). The MAP estimate, Eq. (12), is marked by the filled dot and contours enclosing 70%, 90% and 99% of the probability are labeled. The middle panel shows Eq. (14). The MAP estimate, Eq. (15), is marked by the filled dot and intervals enclosing 70%, 90% and 99% of the probability are shaded. The two estimates for k agree to three decimal places. The analysis is for polystyrene bead of radius a = 3 μm.

To compare the Bayesian estimate for the diffusion coefficient we repeat the experiment for different solvent viscosities keeping both the laser power (corresponding to k ~ 6 pN) and the particle radius (a = 3μm) fixed. The Stokes-Einstein relation then provides an estimate of the diffusion coefficient. We compare this estimate with the MAP estimate D* in Table 4 to find agreement to within 10% in all cases. The Stokes-Einstein relation can be used “in reverse” to obtain a MAP estimate of the viscosity, η*, which agrees very well with the known viscosity of the mixture. The experiments were performed for five sets of data for each viscosity sample and the mean value of D* has been reported with the corresponding 1σ error in parenthesis. The error bars, which are higher than that for the k measurement, chiefly reflect the systematic errors in our experimental apparatus that have been described previously and occur at time scales longer than 20s, which is the duration over which a single data set is collected. As mentioned earlier, we have checked that the estimates of D*over different non-overlapping segments of a single data set are within the Bayesian error bars, which again confirms that the errors we observe are due to systematic shifts in the operating conditions of the experiment. The agreement in the viscosity values for that measured in the rheometer and by the Bayesian estimate of D* is within 5% for all cases with the exception of the last, where the enhanced friction caused a shift in λ towards lower values, once again increasing the effects of systematics due to ambient low frequency noise sources. Also, since this value corresponded to the highest concentration of glycerol in the water + glycerol mixture, the effects of laser heating could have been more significant15, leading to a slight increase of temperature at the trap focal volume which we have not considered in the Bayesian estimate. This “fluctuation” method of estimating the viscosity does not require the application of external fields and is so guaranteed to yield the linear response of the system while the Bayesian analysis extracts, optimally, all information relevant to this estimation problem. The viscosity of nanoliter samples can be estimated by this method, making it an attractive alternative to “response” methods that impose an external shear flow.

Conclusion

In this work, we have presented an exact Bayesian method for jointly estimating the mean regression rate and the diffusion coefficient of an optically trapped Brownian particle. The trap stiffness in temperature units is obtained as a ratio of the mean regression rate and the diffusion coefficient. We have also rephrased the standard “equipartition” method of directly estimating the trap stiffness as a problem in Bayesian inference. We have assumed that the Ornstein-Uhlenbeck process is the data generating model. More general models, which include the position dependence of the particle friction (as would be the case in the proximity to walls) or the non-Markovian character of the trajectories (as would be the case when momentum diffusion is not slow compared to the time scales of interest) can, with additional effort, be incorporated in the Bayesian framework. Exact analytical solutions will no longer be available and one has to resort to approximations of the likelihood, such as short-time expansions of the Fokker-Planck propagator or numerical solutions of the equivalent stochastic differential equations. These introduce discretization errors which must be carefully evaluated. In contrast, the method presented here is exact and can serve as an useful “null hypothesis” when comparing between different models for the data. In future work, we shall present Bayesian methods for more complex models and provide a fully Bayesian procedure, embodying Ockham’s razor12, for the problem of model selection.

Bayesian analysis is generally applicable in studying the dynamics (Brownian or otherwise) of a vast range of mesoscopic particles in diverse trapping environments. While we have focussed on spherical particles here, the method is not restricted thus, and can be applied to non-spherical particles. The rotational and translation motions are typically coupled in such cases, making the analysis more difficult in detail but no different in principle. The capability of modeling the apparatus itself could also be extremely helpful in understanding and improving the experimental techniques employed in optical trapping with the possibility of studying different systematic effects that may influence the trajectory of trapped particles, thus enhancing the capabilities and sphere of influence of optical tweezers.

Bayesian methods for data analysis are not widespread in soft matter, despite of their advantages and demonstrated success in other areas of physics. To quote a popular textbook17: “Increasingly, researchers in many branches of science are coming into contact with Bayesian statistics or Bayesian probability theory. By encompassing both inductive and deductive logic, Bayesian analysis can improve model parameter estimates by many orders of magnitude. It provides a simple and unified approach to all data analysis problems, allowing the experimenter to assign probabilities to competing hypotheses of interest, on the basis of the current state of knowledge.” In future, we hope to see many more applications of this “elegant and powerful approach to scientific inference”17 to problems in soft matter.

Additional Information

How to cite this article: Bera, S. et al. Fast Bayesian inference of optical trap stiffness and particle diffusion. Sci. Rep. 7, 41638; doi: 10.1038/srep41638 (2017).

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

Chandrasekhar, S. Stochastic problems in physics and astronomy. Reviews of modern physics 15, 1 (1943).

Van Kampen, N. G. Stochastic processes in physics and chemistry, vol. 1 (Elsevier, 1992).

Jeffreys, H. The theory of probability (OUP Oxford, 1998).

Zellner, A. Optimal information processing and bayes’s theorem. The American Statistician 42, 278–280 (1988).

Gardiner, C. W. et al. Handbook of stochastic methods, vol. 3 (Springer: Berlin,, 1985).

Kubo, R. The fluctuation-dissipation theorem. Reports on progress in physics 29, 255 (1966).

Berg-Sørensen, K. & Flyvbjerg, H. Power spectrum analysis for optical tweezers. Review of Scientific Instruments 75, 594–612 (2004).

Richly, M. U. et al. Calibrating optical tweezers with bayesian inference. Optics express 21, 31578–31590 (2013).

Jaynes, E. T. & Kempthorne, O. Confidence intervals vs bayesian intervals. In Foundations of probability theory, statistical inference, and statistical theories of science, 175–257 (Springer, 1976).

Singh, R. & Adhikari, R. Pybisp. URL https://github.com/ronojoy/pybisp.

Wang, M. C. & Uhlenbeck, G. E. On the theory of the brownian motion ii. Reviews of Modern Physics 17, 323 (1945).

Jaynes, E. T. Probability theory: The logic of science (Cambridge university press, 2003).

Sivia, D. & Skilling, J. Data analysis: a Bayesian tutorial (OUP Oxford, 2006).

Pal, S. B., Haldar, A., Roy, B. & Banerjee, A. Measurement of probe displacement to the thermal resolution limit in photonic force microscopy using a miniature quadrant photodetector. Review of Scientific Instruments 83, 023108 (2012).

Peterman, E. J. G., Gittes, F. & Schmidt, C. F. On the theory of the brownian motion ii. Biophysical Journal 84, 1308 (2003).

Simmons, R. M., Finer, J. T., Chu, S. & Spudich, J. A. Quantitative measurements of force and displacement using an optical trap. Biophysical Journal 70, 1813 (1996).

Gregory, P. Bayesian Logical Data Analysis for the Physical Sciences: A Comparative Approach with Mathematica® Support (Cambridge University Press, 2005).

Tassieri, M., Evans, R., Warren, R. L., Bailey, N. J. & Cooper, J. M. Microrheology with optical tweezers: data analysis. New Journal of Physics 14, 115032 (2012).

Acknowledgements

R.A. expresses his gratitude, long overdue, to Professor M. E. Cates for introducing him to Bayesianism.

Author information

Authors and Affiliations

Contributions

S.B. and S.P. contributed equally to this work – S.B. worked on the theoretical analysis and S.P. in experiments. R.S. and R.A. developed PyBISP. D.G. helped in the data analysis and A.K. in experiments. R.A. supervised the development of the Bayesian framework and the theoretical analysis. The experiments were performed at A.B.’s lab. R.A. and A.B. designed the research and wrote the paper.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Supplementary information

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Bera, S., Paul, S., Singh, R. et al. Fast Bayesian inference of optical trap stiffness and particle diffusion. Sci Rep 7, 41638 (2017). https://doi.org/10.1038/srep41638

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/srep41638

This article is cited by

-

Quantitative analysis of non-equilibrium systems from short-time experimental data

Communications Physics (2021)

-

Bayesian inference of the viscoelastic properties of a Jeffrey’s fluid using optical tweezers

Scientific Reports (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.