Abstract

Social reward, as a significant mechanism explaining the evolution of cooperation, has attracted great attention both theoretically and experimentally. In this paper, we study the evolution of cooperation by proposing a reward model in networked populations, where a third strategy, reward, is introduced into 2-person evolutionary games as an independent yet particular type of cooperation. Specifically, a new role corresponding to the reward strategy, the reward agent, is defined, whose aim is to increase the income of cooperators by applying a social reward to them. Results from numerical simulations show that social reward greatly promotes the evolution of cooperation, which is confirmed for different network topologies and two evolutionary games. Moreover, we explore the microscopic mechanisms behind the promotion of cooperation in the three-strategy model. As expected, the reward agents play a vital role in the formation of cooperative clusters, thus resisting the aggression of defectors. Our research might provide valuable insights for further exploring the nature of cooperation in the real world.

Introduction

Although competition and natural selection among species drive their evolution and theoretically bring more benefit to defection, cooperative behavior among agents is still ubiquitous in the real world, ranging from biological systems to economic and social systems1,2,3,4,5. Therefore, explaining this widespread cooperative behavior has become an open challenge and has attracted the attention of researchers in a myriad of fields including physics, mathematics, biology and behavioral science6,7,8,9,10. In addition to being a good tool for decision problems11,12,13,14, evolutionary game theory has been generally accepted as the common framework to tackle the issue. In particular, the prisoner’s dilemma game (PDG), the snowdrift game (SDG) and the stag-hunt game (SHG) are often employed as metaphors for social dilemmas15,16,17,18,19,20,21.

Irrespective of which game applies, agents can choose either to cooperate or to defect in each round of the game. They both receive R under mutual cooperation and P under mutual defection. A defector obtains the temptation T when facing a cooperator, while the cooperator gains the so-called sucker’s payoff S. As a standard practice, in the PDG, the payoffs must be ordered as T > R > P > S so that defection is the best strategy irrespective of the opponent’s decision22,23,24,25. In the SDG and the SHG, players interact in a similar way, but the payoff ranking is T > R > S > P for the SDG and R > T > P > S for the SHG. This minor distinction induces a significant change in the game dynamics26.
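The three payoff rankings can be checked mechanically. The following is a minimal sketch; the function name `classify_game` and the numerical payoffs are illustrative assumptions, not values used in this paper.

```python
def classify_game(T, R, P, S):
    """Return the dilemma implied by a strict payoff ranking, or None."""
    if T > R > P > S:
        return "PDG"  # prisoner's dilemma: defection is the best reply to either move
    if T > R > S > P:
        return "SDG"  # snowdrift: the best reply is the opposite of the opponent's move
    if R > T > P > S:
        return "SHG"  # stag hunt: the best reply is to match the opponent's move
    return None       # boundary or unrelated ranking


print(classify_game(T=1.5, R=1.0, P=0.1, S=0.0))  # PDG
print(classify_game(T=1.5, R=1.0, P=0.0, S=0.5))  # SDG
print(classify_game(T=0.8, R=1.0, P=0.2, S=0.0))  # SHG
```

Note that a weak parameterization with P = S sits on the boundary of the strict PDG ranking, so this strict test returns None for it.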

Over the past decades, a variety of scenarios have been proposed to offset the above unfavorable outcome and to enhance cooperation in the population. These mechanisms can be classified into five categories: kin selection, direct reciprocity, indirect reciprocity, group selection and network reciprocity7. In particular, network reciprocity, where agents are arranged on a spatially structured topology and interact only with their direct neighbors, has attracted a great deal of attention23,27,28. Accordingly, the evolution of cooperation has been extensively explored in a variety of topologies such as regular square lattices, small-world networks, Erdős-Rényi (ER) graphs, Barabási-Albert scale-free (SF) networks and so on29,30,31,32. Meanwhile, different factors have also been considered within the same network structures to explore their impact on the evolution of cooperation. For instance, different evolutionary dynamics33, different rewiring mechanisms34, a high clustering coefficient35, and randomness of different nature or a large set of strategies36 all allow the emergence and prosperity of cooperation, even if the temptation to defect reaches a high value37,38,39.

To date, many realistic scenarios have been introduced into evolutionary games, such as tit-for-tat, win-stay lose-shift, memory effects, age structure, and different teaching capabilities40,41,42,43,44. Besides, there is another situation of particular relevance that has received a lot of attention45,46,47,48,49,50. This is the case of social reward, where cooperators can get additional benefits for their selfless behavior. In most existing works on the reward mechanism, cooperators receive rewards as a second-stage behavior51, and these papers are almost entirely limited to public goods games52,53,54. Inspired by these findings, we ask how cooperation fares when reward is considered as an independent strategy in pairwise interaction games.

In this work, we explore the impact of social reward on the evolution of cooperation in 2-person games in which interactions are driven by complex topologies. Specifically, we introduce a third strategy (reward) into the traditional PDG and SDG and study how the resulting ‘reward agents’ affect the evolution of cooperation in several topological settings.

Results

A third strategy, reward, is introduced into the traditional PDG and SDG models as an independent yet particular kind of cooperation strategy, to explore the influence of social reward on the emergence of cooperative behavior. When a reward agent encounters a cooperator, the former rewards the latter with an amount γ (the degree of reward) at a small cost β, increasing the effective payoff gained by the latter. The simulation results (see Supplementary Fig. S1 and Fig. S2) show that the reward mechanism promotes the evolution of cooperation only when β is smaller than γ. When γ is fixed, the smaller the value of β, the more obvious the promoting effect. In order to obtain a clear enhancement, we fix β = 0.01 and vary γ from 0.1 to 0.9 throughout this work. The details of the interactions between agents and their corresponding payoffs are summarized in Table 1 in the Methods section.

First, we concentrate on the impact of the introduced mechanism on the sustainability of cooperation by measuring the average fraction of cooperative agents <ρ>, defined as the total fraction of cooperators and reward agents present at the equilibrium state. The results for the PDG on three types of networks (see Methods) are shown in Fig. 1. In the standard settings of the games, the PDG on a regular square lattice provides lower levels of cooperation than the other two networks. Thus, the case of the PDG on a regular square lattice is considered first, as shown in Fig. 1(a). For the traditional PDG (i.e., no social reward), the fraction of cooperators decreases rapidly and reaches zero at a very small value of the temptation b, as indicated by the black dotted line in Fig. 1(a). Interestingly, even if a small reward (γ = 0.1 or 0.3) is introduced, the dynamics of the system change radically: cooperation can survive and even become the dominant strategy for some values of b. The simulation results for the ER and the SF networks are presented in Fig. 1(b) and Fig. 1(c), respectively. It is observed that the larger the value of γ, the higher the critical value of b for the extinction of cooperators, which indicates that the reward mechanism facilitates the evolution of cooperation on these two networks as well.

The generality of this newly introduced mechanism in promoting cooperation is also tested for the SDG. The fraction of cooperative agents <ρ> as a function of the cost-to-benefit ratio r for the three topologies is presented in Fig. 2. It is observed that cooperation survives over a wider range of r values for larger γ, implying that the reward mechanism boosts the evolution of cooperation. The results of numerical simulations in the PDG and the SDG suggest that the social reward mechanism is generally effective in sustaining cooperation, irrespective of the underlying interaction network and the type of evolutionary game.

(a) Square lattice, (b) ER graph and (c) SF network. Other parameters are consistent with Fig. 1.

To explore the mechanisms that allow social reward to favor cooperation, we analyze the typical spatial configurations of the three types of agents for the PDG on a regular square lattice in Fig. 3. The parameter b is held constant across the four patterns (b = 1.2). In the traditional case, the defectors occupy the whole lattice after 10^5 MCS (at equilibrium) as shown in Fig. 3(a), which suggests that network reciprocity alone is not enough to sustain cooperation at this temptation value. However, when social reward is introduced into the game, different evolutionary phenomena can be observed. Unlike the classic case, a few cooperative agents (reward agents) survive in the equilibrium state, as shown in Fig. 3(b) (γ = 0.1). This is because the cooperators in contact with defectors first become reward agents and form clusters that prevent the invasion of defectors. It is this protection mechanism that allows the cooperation strategy to survive at this temptation value. When the level of reward becomes higher (say γ = 0.3 or 0.5), the cooperative agents not only survive but also become the dominant strategy. As illustrated in Fig. 3(c) (γ = 0.3), the clusters of reward agents become larger and more compact, which leaves less space for the defectors. A few cooperator islands survive in the interior of the giant reward clusters, which protect them from intrusion by defectors. When the value of γ is further increased (γ = 0.5), the cooperators’ profits from interacting with reward agents become larger. Therefore, some reward agents turn into cooperators and form a series of compact C + Re clusters, which make the cooperative agents more and more powerful. Finally, the cooperators and the reward agents tend to replace the defectors and occupy the whole population, as illustrated in Fig. 3(d) (γ = 0.5). These illustrative snapshots demonstrate that when the reward mechanism is incorporated into the standard PDG, the sustainability of cooperation can be improved via strategic adjustment (say, from the cooperation strategy to the reward strategy) to form compact hybrid cooperative clusters.

Note that the reward mechanism enables the formation of extremely compact cooperative clusters. It is therefore worthwhile to elucidate the underlying dynamics for the PDG on the square lattice. The time evolution of the fraction of cooperative agents <ρ> for different values of the parameter γ is analyzed in Fig. 4. For the traditional version, as shown by the black solid line in Fig. 4, the decline of the cooperators does not halt and cooperative behavior soon dies out. When the reward mechanism is introduced, the role of the reward begins to take effect as the evolution continues. It is observed that exploitation by the defectors is effectively restrained and the spreading of cooperative behavior is promoted. As shown in the figure, the larger the value of γ, the more evident the reversal of cooperation. For a large value of γ (γ = 0.3 or 0.5), the initial decay of cooperation is restrained earlier, and cooperation finally reaches a higher level (more than 99%). Some of the cooperators turn into reward agents and form a few compact cooperative clusters that counter the invasion of the defectors, and the fraction of cooperative agents begins to rise. The cooperative agents situated at the boundaries of clusters become robust to defector attacks and even induce the weakened defectors to join them, which ultimately leads to the undisputed dominance of cooperation. It is worth mentioning that the reward agents, as a newly defined kind of cooperative agent, have the same probability of populating the grid as the traditional cooperators and defectors in the initial conditions. That is why the initial fraction of cooperative agents under the newly introduced mechanism is higher than the initial fraction of cooperators in the standard game model.

It is then of particular interest to confirm the above analysis of the evolution processes. To this end, we investigate in Fig. 5 the fractions of the three independent strategies (cooperation, defection and reward) on the three network topologies. The numerical simulation results of the evolutionary PDG on the square lattice, the ER network and the SF network are presented in Fig. 5(a), Fig. 5(b) and Fig. 5(c), respectively. The value of γ is fixed at 0.1 in all three panels. The trends of the three strategies on the regular lattice (Fig. 5(a)) and the ER network (Fig. 5(b)) are extremely similar. When b is low, there are few defectors on the networks, and cooperative agents occupy most of the nodes. As b increases, cooperators gradually convert to reward agents to resist the aggression of the defectors, until the number of reward agents reaches its maximum (all the cooperators have turned into reward agents). The value of b corresponding to the maximum number of reward agents is 1.04 for the square lattice and 1.26 for the ER network, which also indicates that the ER network supports the evolution of cooperation better than the square lattice. Once all the cooperators have become reward agents, these strategists have no other way to stop the spreading of defectors, and the invasion of defectors becomes overwhelming. Essentially, because the interaction between reward agents yields lower benefits than the interaction between a defector and a reward agent, a small increase in b induces a transition from the reward strategy to the defection strategy, which breaks the reward-agent clusters into smaller ones until all the reward agents die out. Ultimately, the defectors occupy the entire network.

The results for the SF network, presented in Fig. 5(c), are radically different from those for the ER network and the square lattice. The fractions of both reward agents and cooperators decrease monotonically as b increases. Meanwhile, the number of defectors increases monotonically, which implies that the mechanism promoting the evolution of cooperation on the SF network may differ from that on the two previously discussed networks. From the trends of the fractions of the three strategists, we can speculate that when the value of b is low, the system is composed of numerous islands of cooperators surrounded by reward agents. As the temptation to defect b gradually becomes larger, the profits from the interaction between reward agents become lower than those from the interaction between a defector and a cooperator. Therefore, the reward agents situated on the vertices between the cooperators and the defectors are the first to convert to defectors. The cooperators that were protected by those reward agents then become defectors as well, which induces the gradual growth in the number of defection clusters, as Fig. 5(c) shows.

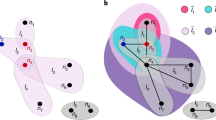

The local distributions of the cooperators provide important clues for analyzing the system at the microscopic scale. We therefore define a pure strategist as an agent that keeps its strategy unchanged in all generations after a transient period. Since we are mainly interested in cooperative behavior, we define three kinds of pure strategists: pure cooperators, pure reward agents, and pure cooperators and reward agents (defined as the agents that alternately spend some time as a cooperator or as a reward agent). To further confirm the above analysis of the evolutionary mechanism for the PDG on SF topologies, we focus on three types of clusters of pure strategists: clusters formed by pure cooperators (C clusters), clusters formed by pure reward agents (Re clusters) and clusters formed by pure cooperators and reward agents together (C + Re clusters). In particular, a C + Re cluster may contain several C clusters or Re clusters. The number of pure cooperative clusters (NC) and the number of cooperative agents in the largest such cluster (LC), as functions of the temptation to defect b, are presented in Fig. 6(a) and Fig. 6(b). In the standard PDG on SF graphs, hubs are usually occupied by cooperators, and a giant cluster of cooperators grows around them until the entire network forms a single cluster. Increasing b reduces the size of this cooperation cluster, which does not collapse until a higher b is reached. As inferred before, the number of cooperation and reward clusters decreases monotonically, and only one cooperation and reward cluster remains in the system until it dies out at higher b values, as shown in Fig. 6(a). This is in agreement with the standard formulation of the PDG on SF topologies. Furthermore, a comparison between the traditional results and the simulation results under the reward mechanism in Fig. 6 clearly demonstrates that social reward improves the evolution of cooperation. The results imply that in the presence of social reward, the heterogeneity of the topology strongly affects the structure and evolution of cooperation.
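The cluster statistics described above amount to counting connected components among cooperative nodes. The following is a minimal sketch under stated assumptions: the function name, the dictionary-based graph representation and the toy path graph are illustrative, and the caller is assumed to have already restricted `strategy` to pure strategists.

```python
from collections import deque


def cooperative_clusters(adjacency, strategy, members=("C", "Re")):
    """Return (number of clusters, size of the largest cluster).

    adjacency: dict mapping node -> list of neighbor nodes
    strategy:  dict mapping node -> "C", "D" or "Re"
    A cluster is a connected set of nodes whose strategy is in `members`.
    """
    seen = set()
    sizes = []
    for start in adjacency:
        if start in seen or strategy[start] not in members:
            continue
        # Breadth-first search restricted to nodes playing a strategy in `members`.
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:
            node = queue.popleft()
            size += 1
            for nb in adjacency[node]:
                if nb not in seen and strategy[nb] in members:
                    seen.add(nb)
                    queue.append(nb)
        sizes.append(size)
    return len(sizes), max(sizes, default=0)


# Toy path graph 0-1-2-3-4 (hypothetical data).
adjacency = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
strategy = {0: "C", 1: "Re", 2: "D", 3: "C", 4: "C"}
print(cooperative_clusters(adjacency, strategy))                   # C + Re clusters
print(cooperative_clusters(adjacency, strategy, members=("Re",)))  # Re clusters only
```

Passing `members=("C",)` or `members=("Re",)` gives the C-cluster and Re-cluster counts, while the default gives the C + Re clusters.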

Finally, we have also explored how the three strategists are distributed by degree class. The distributions of cooperators, defectors and reward agents at equilibrium by degree class on the SF networks are shown in Fig. 7(a) and Fig. 7(b), which correspond to the traditional PDG (b = 2.25) and the evolutionary PDG (b = 2.4) under the newly introduced mechanism (γ = 0.1), respectively. The values of b are chosen so that the cooperation level is equal in the two models, with the fraction of cooperation approximately equal to 0.5. The ratios of cooperators (C) and of the mix of cooperators and reward agents (C + Re) as functions of node degree in Fig. 7(a) and Fig. 7(b) imply that the pure cooperators of the traditional model are mainly replaced by the mix of ‘C’ and ‘Re’ players, and that only very few new nodes with different degrees emerge. As the figure shows, the cooperators and reward agents tend to dominate in moderately and highly connected nodes, which is also more conducive to the formation of large clusters that resist the invasion of defectors. That is why the SF network has more advantages in promoting the evolution of cooperation than the other two network topologies (the regular lattice and ER networks), as illustrated in Fig. 1 and Fig. 2.
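A distribution by degree class can be tabulated as follows. This is a minimal sketch: the function name and the star-graph data are hypothetical, and normalizing within each degree class is an assumption about how the ratios are defined.

```python
from collections import Counter, defaultdict


def strategy_share_by_degree(adjacency, strategy):
    """Map each degree k to the fraction of degree-k nodes holding each strategy.

    Assumption: shares are normalized within each degree class.
    """
    counts = defaultdict(Counter)
    for node, neighbors in adjacency.items():
        counts[len(neighbors)][strategy[node]] += 1
    shares = {}
    for k, c in counts.items():
        total = sum(c.values())
        shares[k] = {s: c[s] / total for s in ("C", "D", "Re")}
    return shares


# Toy star graph: hub 0 with four leaves (hypothetical data).
adjacency = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}
strategy = {0: "Re", 1: "C", 2: "C", 3: "D", 4: "D"}
shares = strategy_share_by_degree(adjacency, strategy)
print(shares[4])  # the hub's degree class
print(shares[1])  # the leaves' degree class
```

The C + Re share for a degree class is then simply the sum of the "C" and "Re" entries.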

Discussion

The reward scenario is extremely common in the real world, ranging from biological systems to economic and social systems. In this context, we have explored the impact of social reward on the evolution of cooperation in the prisoner’s dilemma game and the snowdrift game. Numerical simulations show that when social reward is introduced, cooperation is greatly promoted in both the PDG and the SDG.

The analysis of the system at the microscopic level for the PDG helps us to understand the mechanisms that favor the evolution of cooperation. As described in many classical works55,56,57,58, the formation of cooperator clusters is an essential way to resist the invasion of defectors. In the three-strategy model, the newly defined cooperative players (reward agents) make individuals more inclined to choose cooperation when b is small, which can be expected to be conducive to the formation of cooperative clusters. As the temptation to defect increases, the cooperators first turn into reward agents, and then the giant cluster of reward agents breaks down into several smaller ones until all the reward agents die out. Finally, defection becomes the dominant strategy. In heterogeneous graphs, by contrast, cooperation is driven by nodes that can be either cooperators or reward agents. As b continues to increase, the benefit from the cooperative strategies falls below that from the defection strategy. Therefore, the cooperative agents situated on the vertices between cooperators and defectors are the first to convert to defectors. By eroding the single cluster of cooperators, the defectors gradually occupy the whole network.

In reality, individual behavior can be guided in a certain direction by social reward, just as in the model studied here. Our results suggest that the reward strategy has a beneficial impact on the evolution of cooperative behavior, which might be particularly essential for animal and human societies.

Methods

As mentioned in the Introduction, the three typical social dilemmas involving pairwise interactions are the prisoner’s dilemma game, the snowdrift game and the stag-hunt game. Here, we focus on the prisoner’s dilemma game (PDG) and the snowdrift game (SDG). We introduce social reward into the PDG and the SDG models on three network structures. In this work, reward is presented as an additional third strategy corresponding to a novel kind of cooperative agent, defined as the reward agent. When playing with a cooperator, the reward agent rewards the cooperator with γ at a small cost β, while in interaction with a defector, the reward agent acts as a cooperator. Therefore, the reward agents are defined as particular cooperators. Note, however, that they can exist independently, in contrast to the second-stage behavior51. The simulation results (see Supplementary Fig. S1 and Fig. S2) show that the reward mechanism promotes the evolution of cooperation only when β is smaller than γ. When γ is fixed, the smaller the value of β, the more obvious the promoting effect. In the model, we fix β at 0.01 and vary γ from 0.1 to 0.9, ensuring that an obvious promoting effect can be observed. Following previous seminal works, we consider the evolutionary PDG characterized by the temptation to defect T = b (the highest payoff, received by a defector when playing against a cooperator), the reward for mutual cooperation R = 1, and both the punishment for mutual defection P and the sucker’s payoff S (the lowest payoff, received by a cooperator when playing against a defector) equal to 0. It should be pointed out that although we choose a simple and weak version (namely, S = 0), the conclusions are robust and can be observed in the fully parameterized space59. For the SDG, we choose a similar scheme with T = 1 + r, R = 1, S = 1 − r and P = 0, where 0 ≤ r ≤ 1 represents the cost-to-benefit ratio (satisfying the ranking T > R > S > P). The interactions between the agents and the corresponding payoffs are presented in Table 1.
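The pairwise payoffs described in the text can be written as a small function. This is a sketch of one reading of that description, not a reproduction of Table 1: a cooperator meeting a reward agent is taken to receive R + γ while the reward agent receives R − β, and the payoff between two reward agents is assumed to be R with no reward and no cost. The function names are illustrative.

```python
def payoff(me, other, T, R, P, S, gamma, beta=0.01):
    """Payoff to `me` from one pairwise interaction.

    Strategies: "C" (cooperate), "D" (defect), "Re" (reward).
    Assumption: Re vs Re yields R to each, with no reward and no cost.
    """
    if me == "D":
        return P if other == "D" else T  # D earns T against both C and Re
    if other == "D":
        return S                         # C and Re both act as cooperators against D
    if me == "C" and other == "Re":
        return R + gamma                 # cooperator receives the reward
    if me == "Re" and other == "C":
        return R - beta                  # reward agent pays the cost
    return R                             # C vs C, and (assumed) Re vs Re


def pdg(b):
    """Weak PDG from the text: T = b, R = 1, P = S = 0."""
    return dict(T=b, R=1.0, P=0.0, S=0.0)


def sdg(r):
    """SDG from the text: T = 1 + r, R = 1, S = 1 - r, P = 0."""
    return dict(T=1.0 + r, R=1.0, P=0.0, S=1.0 - r)


print(payoff("C", "Re", gamma=0.3, **pdg(1.2)))   # cooperator rewarded by a reward agent
print(payoff("Re", "C", gamma=0.3, **pdg(1.2)))   # reward agent paying the cost
```

Under this reading, a node's total payoff Pi is the sum of `payoff` over all of its neighbors.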

The evolutionary games are conducted on regular square lattices with periodic boundary conditions, Erdős-Rényi (ER) graphs, and Barabási-Albert scale-free (SF) networks with the same size (N = 10^4 nodes) and the same average degree, i.e., <k> = 4. Initially, the three strategies of cooperation (C), defection (D), and reward (Re) are randomly distributed among the individuals with equal probability. Then, at each Monte Carlo time step, each node i in the network obtains a payoff Pi by adding up the payoffs obtained from playing with all its neighbors. Next, all the agents synchronously update their strategies, each comparing its payoff with that of a randomly selected neighbor, say j. If Pi > Pj, player i keeps its strategy unchanged in the next step. On the contrary, if Pi < Pj, player i adopts the strategy of player j with a probability proportional to the payoff difference:

where ki and kj represent the degrees of players i and j respectively, and Pmax stands for the maximum possible payoff difference between the two agents. For the classical version (without the reward mechanism), Pmax equals b for the PDG and 1 + r for the SDG. However, under the newly introduced mechanism, the value of Pmax depends on which of b (1 + r for the SDG) and 1 + γ is larger. From equation (1), it is worth noting that an individual can change its strategy to any other strategy if the payoff of the chosen neighbor is higher than its own. For instance, a cooperator can become a reward agent or a defector, but this is not a consequence of any second-stage behavior in the evolutionary dynamics.
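A form of the update rule consistent with these definitions is W = (Pj − Pi) / (max(ki, kj) · Pmax). The normalization by the larger of the two degrees is an assumption borrowed from common practice in this literature, and taking Pmax = max(b, 1 + γ) (or max(1 + r, 1 + γ) for the SDG) under the reward mechanism is likewise an assumed reading of the text. A minimal sketch, with illustrative function names:

```python
def p_max(temptation, gamma=None):
    """Largest possible payoff difference per link.

    temptation: b for the PDG, or 1 + r for the SDG.
    Assumption: with reward, P_max = max(temptation, 1 + gamma).
    """
    if gamma is None:
        return temptation  # classical version without reward
    return max(temptation, 1.0 + gamma)


def imitation_probability(P_i, P_j, k_i, k_j, pmax):
    """Probability that player i copies neighbor j (assumed form of the rule)."""
    if P_j <= P_i:
        return 0.0  # i keeps its strategy when it does at least as well as j
    return (P_j - P_i) / (max(k_i, k_j) * pmax)


# Example: b = 1.2, gamma = 0.3, both players of degree 4 (hypothetical payoffs).
w = imitation_probability(P_i=2.0, P_j=3.2, k_i=4, k_j=4, pmax=p_max(1.2, 0.3))
print(round(w, 4))
```

Dividing by max(ki, kj) · Pmax keeps the result within [0, 1], since with non-negative payoffs no node can out-earn a neighbor by more than the larger degree times the largest per-link difference.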

The simulation results are obtained by averaging over the last 10^4 Monte Carlo time steps of the total 10^5. As a double check, we further analyze the size of the fluctuations in <ρ>. If it is smaller than 10^−2, we assume that the stationary state has been reached; otherwise we wait for another 10^4 time steps and redo the check. The system reached the stationary state in all the simulations, and no additional time steps were needed.
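The averaging and stationarity check can be sketched as below. The text does not say how the size of the fluctuations is measured, so the population standard deviation over the averaging window is used here as an assumption; the function name and the toy series are illustrative.

```python
from statistics import mean, pstdev


def stationary_average(rho_series, window=10_000, tol=1e-2):
    """Average rho over the last `window` steps and test whether it has settled.

    Assumption: the size of the fluctuations is taken to be the population
    standard deviation of rho within the window.
    Returns (average, is_stationary).
    """
    tail = rho_series[-window:]
    fluctuation = pstdev(tail)
    return mean(tail), fluctuation < tol


# Toy series: a decaying transient followed by a flat plateau (hypothetical data).
series = [1.0 - 0.001 * t for t in range(100)] + [0.9] * 50
avg, settled = stationary_average(series, window=50)
print(avg, settled)
```

If `settled` is false, the simulation would be extended by another window of steps and the check repeated, as described above.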

Additional Information

How to cite this article: Wu, Y. et al. Impact of Social Reward on the Evolution of the Cooperation Behavior in Complex Networks. Sci. Rep. 7, 41076; doi: 10.1038/srep41076 (2017).

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

Bone, J. E., Wallace, B., Bshary, R. & Raihani, N. J. Power asymmetries and punishment in a prisoner’s dilemma with variable cooperative investment. PLoS One 11, e0155773 (2016).

Deng, X., Zhang, Q., Deng, Y. & Wang, Z. A novel framework of classical and quantum prisoner’s dilemma games on coupled networks. Sci. Rep. 6, 23024 (2016).

Hilbe, C., Röhl, T. & Milinski, M. Extortion subdues human players but is finally punished in the prisoner’s dilemma. Nat. Commun. 5, 3976 (2014).

Gould, S. J. Darwinism and the expansion of evolutionary theory. Science 216, 380–387 (1982).

Wang, Z. et al. Statistical physics of vaccination. Phys. Rep. 664, 1–113 (2016).

Nowak, M. A., Sasaki, A., Taylor, C. & Fudenberg, D. Emergence of cooperation and evolutionary stability in finite populations. Nature 428, 646–650 (2004).

Nowak, M. A. Five rules for the evolution of cooperation. Science 314, 1560–1563 (2006).

Pennisi, E. How did cooperative behavior evolve? Science 309, 93 (2005).

Gómez-Gardeñes, J., Reinares, I., Arenas, A. & Floría, L. M. Evolution of cooperation in multiplex networks. Sci. Rep. 2, 620 (2012).

Wang, Z., Szolnoki, A. & Perc, M. Self-organization towards optimally interdependent networks by means of coevolution. New J. Phys. 16, 033041 (2014).

Huang, K., Zheng, X. & Su, Y. Effect of heterogeneous sub-populations on the evolution of cooperation. Appl. Math. Comput. 270, 681–687 (2015).

Azevedo, A. & Paxson, D. Developing real option game models. Eur. J. Oper. Res. 237, 909–920 (2014).

Huang, K., Zheng, X., Yang, Y. & Wang, T. Behavioral evolution in evacuation crowd based on heterogeneous rationality of small groups. Appl. Math. Comput. 266, 501–506 (2015).

Huang, K., Zheng, X., Li, Z. & Yang, Y. Understanding cooperative behavior based on the coevolution of game strategy and link weight. Sci. Rep. 5, 14783 (2015).

Wu, Z. X., Xu, X. J., Huang, Z. G., Wang, S. J. & Wang, Y. H. Evolutionary prisoner’s dilemma game with dynamic preferential selection. Phys. Rev. E 74, 021107 (2006).

Vukov, J., Szabó, G. & Szolnoki, A. Cooperation in the noisy case: Prisoner’s dilemma game on two types of regular random graphs. Phys. Rev. E 73, 067103 (2006).

Liu, Y. et al. Aspiration-based learning promotes cooperation in spatial prisoner’s dilemma games. EPL 94, 60002 (2011).

Doebeli, M. & Hauert, C. Models of cooperation based on the prisoner’s dilemma and the snowdrift game. Ecol. Lett. 8, 748–766 (2005).

Zhong, L. X., Zheng, D. F., Zheng, B., Xu, C. & Hui, P. M. Networking effects on cooperation in evolutionary snowdrift game. EPL 76, 724–730 (2006).

Santos, F. C. & Pacheco, J. M. Scale-free networks provide a unifying framework for the emergence of cooperation. Phys. Rev. Lett. 95, 098104 (2005).

Perc, M. & Szolnoki, A. Coevolutionary games–a mini review. BioSystems 99, 109–125 (2010).

Zhang, H. F., Jin, Z. & Wang, Z. Cooperation and popularity in spatial games. Physica A 414, 86–94 (2014).

Szabó, G. & Fáth, G. Evolutionary games on graphs. Phys. Rep. 446, 97–216 (2007).

Perc, M. & Grigolini, P. Collective behavior and evolutionary games – an introduction. Chaos, Solitons & Fractals 56, 1–5 (2013).

Ohtsuki, H., Nowak, M. A. & Pacheco, J. M. Breaking the symmetry between interaction and replacement in evolutionary dynamics on graphs. Phys. Rev. Lett. 98, 108106 (2007).

Wang, W. X., Ren, J., Chen, G. & Wang, B. H. Memory-based snowdrift game on networks. Phys. Rev. E 74, 056113 (2006).

Perc, M., Szolnoki, A., Floría, L. M. & Moreno, Y. Evolutionary dynamics of group interactions on structured populations: a review. J. R. Soc. Interface 10, 20120997 (2013).

Wang, Z., Kokubo, S., Tanimoto, J., Fukuda, E. & Shigaki, K. Insight into the so-called spatial reciprocity. Phys. Rev. E 88, 042145 (2013).

Szabó, G., Vukov, J. & Szolnoki, A. Phase diagrams for prisoner’s dilemma game on two-dimensional lattices. Phys. Rev. E 72, 047107 (2005).

Sánchez, A. D., López, J. M. & Rodríguez, M. A. Nonequilibrium phase transitions in directed small-world networks. Phys. Rev. Lett. 88, 048701 (2002).

Watts, D. J. & Strogatz, S. H. Collective dynamics of small-world networks. Nature 393, 440–442 (1998).

Wu, Z. X., Guan, J. Y., Xu, X. J. & Wang, Y. H. Evolutionary prisoner’s dilemma game on Barabási–Albert scale-free networks. Physica A 379, 672–680 (2007).

Xia, C. et al. Role of update dynamics in the collective cooperation on the spatial snowdrift games: Beyond unconditional imitation and replicator dynamics. Chaos, Solitons & Fractals 45, 1239–1245 (2012).

Poncela, J., Gómez-Gardeñes, J., Floría, L. M., Sánchez, A. & Moreno, Y. Complex cooperative networks from evolutionary preferential attachment. PLoS One 3, e2449 (2008).

Assenza, S., Gómez-Gardeñes, J. & Latora, V. Enhancement of cooperation in highly clustered scale-free networks. Phys. Rev. E 78, 017101 (2008).

Hauert, C. & Schuster, H. G. Extending the iterated prisoner’s dilemma without synchrony. J. Theor. Biol. 192, 155–166 (1998).

Xu, B., Shi, H., Wang, J. & Huang, Y. Effective seeding strategy in evolutionary prisoner’s dilemma games on online social networks. Mod. Phys. Lett. B 29, 1550027 (2015).

Wang, Z., Xia, C. Y., Meloni, S., Zhou, C. S. & Moreno, Y. Impact of social punishment on cooperative behavior in complex networks. Sci. Rep. 3, 3055 (2013).

Li, H., Dai, Q., Cheng, H. & Yang, J. Effects of inter-connections between two communities on cooperation in the spatial prisoner’s dilemma game. New J. Phys. 12, 093048 (2010).

Liu, R. R., Jia, C. X. & Wang, B. H. Effects of heritability on evolutionary cooperation in spatial prisoner’s dilemma games. Phys. Proc. 3, 1853–1858 (2010).

Nowak, M. & Sigmund, K. A strategy of win-stay, lose-shift that outperforms tit-for-tat in the prisoner’s dilemma game. Nature 364, 56–58 (1993).

Qin, S. M., Chen, Y., Zhao, X. Y. & Shi, J. Effect of memory on the prisoner’s dilemma game in a square lattice. Phys. Rev. E 78, 041129 (2008).

Wang, Z., Wang, Z., Zhu, X. & Arenzon, J. J. Cooperation and age structure in spatial games. Phys. Rev. E 85, 011149 (2012).

Szolnoki, A. & Perc, M. Coevolution of teaching activity promotes cooperation. New J. Phys. 10, 043036 (2008).

Sigmund, K., Hauert, C. & Nowak, M. A. Reward and punishment. Proc. Natl. Acad. Sci. USA 98, 10757–10762 (2001).

Andreoni, J., Harbaugh, W. & Vesterlund, L. The carrot or the stick: Rewards, punishment and cooperation. Am. Econ. Rev. 93, 893–902 (2003).

Szolnoki, A. & Perc, M. Correlation of positive and negative reciprocity fails to confer an evolutionary advantage: Phase transitions to elementary strategies. Phys. Rev. X 3, 041021 (2013).

Szolnoki, A. & Perc, M. Decelerated invasion and waning-moon patterns in public goods games with delayed distribution. Phys. Rev. E 87, 054801 (2013).

Sasaki, T. & Uchida, S. Rewards and the evolution of cooperation in public good games. Biol. Lett. 10, 20130903 (2014).

Szolnoki, A. & Perc, M. Antisocial pool rewarding does not deter public cooperation. Proc. R. Soc. B 282, 20151975 (2015).

Jiménez, R., Lugo, H., Cuesta, J. A. & Sánchez, A. Emergence and resilience of cooperation in the spatial prisoner’s dilemma via a reward mechanism. J. Theor. Biol. 250, 475–483 (2008).

Szolnoki, A. & Perc, M. Reward and cooperation in the spatial public goods game. EPL 92, 38003 (2010).

Milinski, M., Semmann, D. & Krambeck, H. J. Reputation helps solve the ‘tragedy of the commons’. Nature 415, 424–426 (2002).

Hauert, C. Replicator dynamics of reward & reputation in public goods games. J. Theor. Biol. 267, 22–28 (2010).

Szolnoki, A., Perc, M. & Danku, Z. Towards effective payoffs in the prisoner’s dilemma game on scale-free networks. Physica A 387, 2075–2082 (2008).

Vukov, J., Szabó, G. & Szolnoki, A. Evolutionary prisoner’s dilemma game on Newman-Watts networks. Phys. Rev. E 77, 026109 (2008).

Szolnoki, A., Perc, M. & Danku, Z. Making new connections towards cooperation in the prisoner’s dilemma game. EPL 84, 50007 (2008).

Bagrow, J. P. & Brockmann, D. Natural emergence of clusters and bursts in network evolution. Phys. Rev. X 3, 021016 (2013).

Tanimoto, J. & Sagara, H. Relationship between dilemma occurrence and the existence of a weakly dominant strategy in a two-player symmetric game. Biosystems 90, 105–114 (2007).

Acknowledgements

This project was supported in part by the National Basic Research Program (2012CB955804), the Major Research Plan of the National Natural Science Foundation of China (91430108), the National Natural Science Foundation of China (11171251), and the Major Program of Tianjin University of Finance and Economics (ZD1302).

Author information

Contributions

Y.W. initiated the idea. Y.W. and S.C. built the model. Y.W., S.Z., Z.Z. and Z.D. performed analysis. All authors contributed to the scientific discussion and revision of the article.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Wu, Y., Chang, S., Zhang, Z. et al. Impact of Social Reward on the Evolution of the Cooperation Behavior in Complex Networks. Sci Rep 7, 41076 (2017). https://doi.org/10.1038/srep41076

DOI: https://doi.org/10.1038/srep41076
