Abstract

The transfer entropy is a well-established measure of information flow, which quantifies directed influence between two stochastic time series and has been shown to be useful in a variety of fields of science. Here we introduce the transfer entropy of the backward time series, called the backward transfer entropy, and show that the backward transfer entropy quantifies how far the dynamics are from a hidden Markov model. Furthermore, we discuss physical interpretations of the backward transfer entropy in two completely different settings: thermodynamics of information processing and gambling with side information. In both settings, the backward transfer entropy characterizes a possible loss of some benefit, whereas the conventional transfer entropy characterizes a possible benefit. Our result implies a deep connection between thermodynamics and gambling in the presence of information flow, and suggests that the backward transfer entropy would be useful as a novel measure of information flow in nonequilibrium thermodynamics, biochemical sciences, economics and statistics.

Introduction

In many scientific problems, we consider directed influence between two component parts of a complex system. To extract meaningful influence between component parts, the methods of time series analysis have been widely used [1,2,3]. In particular, time series analysis based on information theory [4] provides useful methods for detecting the directed influence between component parts. For example, the transfer entropy (TE) [5,6,7] is one of the most influential informational methods to detect directed influence between two stochastic time series. The main idea behind TE is that, by conditioning on the history of one time series, an informational measure of correlation between two time series represents the information flow that is actually transferred at the present time. Transfer entropy has been widely adopted in a variety of research areas such as economics [8], neural networks [9,10,11], biochemical physics [12,13,14] and statistical physics [15,16,17,18,19]. Several efforts to improve the measure of TE have also been made [20,21,22].

In a variety of fields, concepts similar to TE have been discussed for a long time. In economics, the statistical hypothesis test called the Granger causality (GC) has been used to detect the causal relationship between two time series [23,24]. Indeed, for Gaussian variables, the statement of GC is equivalent to TE [25]. In information theory, nearly the same informational measure of information flow, called the directed information (DI) [26,27], has been discussed as a fundamental bound of noisy channel coding under a causal feedback loop. As in the case of GC, DI can be applied to an economic situation [28,29], namely gambling with side information [4,30].

In recent studies of thermodynamic models implementing Maxwell’s demon [31,32], which reduces the entropy change in a small subsystem by using information, TE has attracted much attention [13,14,15,18,33,34,35,36,37,38]. In this context, TE from a small subsystem to other systems generally gives a lower bound of the entropy change in the subsystem [15,18,33]. As a tighter bound of the entropy change for Markov jump processes, another directed informational measure called the dynamic information flow (DIF) [34] has also been discussed [33,34,35,36,37,38,39,40,41,42,43].

In this article, we provide a unified perspective on different measures of information flow, i.e., TE, DI, and DIF. By introducing TE for backward time series [13,38], called the backward transfer entropy (BTE), we clarify the relationship between these informational measures. By considering BTE, we also obtain a tighter bound of the entropy change in a small subsystem even for non-Markov processes. In the context of time series analysis, BTE has a proper meaning: it is an informational measure for detecting a hidden Markov model. From the viewpoint of the statistical hypothesis test, BTE quantifies an anti-causal prediction. These facts imply that BTE would be a useful directed measure of information flow, as TE is.

Furthermore, we also discuss the analogy between thermodynamics for a small system [32,44,45] and gambling with side information [4,30]. Considering this analogy, we find that TE and BTE play similar roles in both settings: BTE quantifies a loss of some benefit, while TE quantifies some benefit. Our result reveals a deep connection between two different fields of science, thermodynamics and gambling.

Results

Setting

We consider stochastic dynamics of interacting systems $\mathcal{X}$ and $\mathcal{Y}$, which are not necessarily Markov processes. We consider a discrete time $k$ $(= 1, \dots, N)$, and write the state of $\mathcal{X}$ ($\mathcal{Y}$) at time $k$ as $x_k$ ($y_k$). Let $\mathcal{X}_k^{(l)} := \{x_{k-l+1}, \dots, x_k\}$ ($\mathcal{Y}_k^{(l)} := \{y_{k-l+1}, \dots, y_k\}$) be the path of system $\mathcal{X}$ ($\mathcal{Y}$) from time $k - l + 1$ to $k$, where $l \geq 1$ is the length of the path. The probability distribution of the composite system at time $k$ is represented by $p(X_k = x_k, Y_k = y_k)$, and that of paths is represented by $p(X_k^{(l)} = \mathcal{X}_k^{(l)}, Y_k^{(l)} = \mathcal{Y}_k^{(l)})$, where capital letters (e.g., $X_k$) represent random variables of the corresponding states (e.g., $x_k$).

The dynamics of the composite system are characterized by the conditional probability $p(X_{k+1} = x_{k+1}, Y_{k+1} = y_{k+1} \mid X_k^{(k)} = \mathcal{X}_k^{(k)}, Y_k^{(k)} = \mathcal{Y}_k^{(k)})$ such that

$$p(X_{k+1}^{(k+1)} = \mathcal{X}_{k+1}^{(k+1)}, Y_{k+1}^{(k+1)} = \mathcal{Y}_{k+1}^{(k+1)}) = p(X_{k+1} = x_{k+1}, Y_{k+1} = y_{k+1} \mid X_k^{(k)} = \mathcal{X}_k^{(k)}, Y_k^{(k)} = \mathcal{Y}_k^{(k)})\, p(X_k^{(k)} = \mathcal{X}_k^{(k)}, Y_k^{(k)} = \mathcal{Y}_k^{(k)}), \tag{1}$$

where $p(A = a \mid B = b) := p(A = a, B = b)/p(B = b)$ is the conditional probability of $a$ under the condition of $b$.

Transfer entropy

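Here, we introduce the conventional TE as a measure of directed information flow, which is defined as the conditional mutual information [4] between two time series under the condition of one’s own past. The mutual information characterizes the static correlation between two systems. The mutual information between X and Y at time $k$ is defined as

$$I(X_k; Y_k) := \sum_{x_k, y_k} p(X_k = x_k, Y_k = y_k) \ln \frac{p(X_k = x_k, Y_k = y_k)}{p(X_k = x_k)\, p(Y_k = y_k)}. \tag{2}$$

This mutual information is a nonnegative quantity, and vanishes if and only if $x_k$ and $y_k$ are statistically independent (i.e., $p(X_k = x_k, Y_k = y_k) = p(X_k = x_k)\,p(Y_k = y_k)$) [4]. It quantifies how much information the state $y_k$ includes about $x_k$, or equivalently how much information the state $x_k$ includes about $y_k$. In the same way, the mutual information between two paths $\mathcal{X}_k^{(l)}$ and $\mathcal{Y}_{k'}^{(l')}$ is also defined as

$$I(X_k^{(l)}; Y_{k'}^{(l')}) := \sum_{\mathcal{X}_k^{(l)}, \mathcal{Y}_{k'}^{(l')}} p(X_k^{(l)} = \mathcal{X}_k^{(l)}, Y_{k'}^{(l')} = \mathcal{Y}_{k'}^{(l')}) \ln \frac{p(X_k^{(l)} = \mathcal{X}_k^{(l)}, Y_{k'}^{(l')} = \mathcal{Y}_{k'}^{(l')})}{p(X_k^{(l)} = \mathcal{X}_k^{(l)})\, p(Y_{k'}^{(l')} = \mathcal{Y}_{k'}^{(l')})}. \tag{3}$$

While the mutual information is very useful in a variety of fields of science [4], it only represents statistical correlation between two systems in a symmetric way. In order to characterize the directed information flow from X to Y, Schreiber [5] introduced TE defined as

$$T_{X_k^{(l)} \to Y_{k'+1}^{(l')}} := I(X_k^{(l)}; Y_{k'+1}^{(l'+1)}) - I(X_k^{(l)}; Y_{k'}^{(l')}) \tag{4}$$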

with $k \leq k'$. Equation (4) implies that TE $T_{X_k^{(l)} \to Y_{k'+1}^{(l')}}$ is an informational difference about the path of the system $\mathcal{X}$ that is newly obtained by the path of the system $\mathcal{Y}$ from time $k'$ to $k' + 1$. Thus, TE $T_{X_k^{(l)} \to Y_{k'+1}^{(l')}}$ can be regarded as a directed information flow from $\mathcal{X}$ to $\mathcal{Y}$ at time $k'$. This TE can be rewritten as the conditional mutual information [4] between the paths of $\mathcal{X}$ and the state of $\mathcal{Y}$ under the condition of the history of $\mathcal{Y}$:

$$T_{X_k^{(l)} \to Y_{k'+1}^{(l')}} = I(X_k^{(l)}; Y_{k'+1} \mid Y_{k'}^{(l')}) = \sum_{\mathcal{X}_k^{(l)}, \mathcal{Y}_{k'+1}^{(l'+1)}} p(X_k^{(l)} = \mathcal{X}_k^{(l)}, Y_{k'+1}^{(l'+1)} = \mathcal{Y}_{k'+1}^{(l'+1)}) \ln \frac{p(Y_{k'+1} = y_{k'+1} \mid X_k^{(l)} = \mathcal{X}_k^{(l)}, Y_{k'}^{(l')} = \mathcal{Y}_{k'}^{(l')})}{p(Y_{k'+1} = y_{k'+1} \mid Y_{k'}^{(l')} = \mathcal{Y}_{k'}^{(l')})}, \tag{5}$$

which implies that TE is a nonnegative quantity, and vanishes if and only if the transition probability in $\mathcal{Y}$ from $\mathcal{Y}_{k'}^{(l')}$ to $y_{k'+1}$ does not depend on the time series $\mathcal{X}_k^{(l)}$, i.e., $p(Y_{k'+1} = y_{k'+1} \mid X_k^{(l)} = \mathcal{X}_k^{(l)}, Y_{k'}^{(l')} = \mathcal{Y}_{k'}^{(l')}) = p(Y_{k'+1} = y_{k'+1} \mid Y_{k'}^{(l')} = \mathcal{Y}_{k'}^{(l')})$ [see also Fig. 1(a)].
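As a rough numerical illustration of TE as a conditional mutual information, the following Python sketch estimates TE for the simplest case $l = l' = 1$, i.e., $I(X_k; Y_{k+1} \mid Y_k)$, by plugging empirical frequencies of two discrete time series into the definition. The function names and the toy data (fair coin flips for $x$, with $y_{k+1}$ simply copying $x_k$) are assumptions made for this example only, and the plug-in estimator is one simple choice among several.

```python
import random
from collections import Counter
from math import log


def conditional_mutual_information(samples):
    """Plug-in estimate of I(A; B | C) in nats from a list of (a, b, c) samples."""
    n = len(samples)
    n_abc = Counter(samples)
    n_ac = Counter((a, c) for a, _, c in samples)
    n_bc = Counter((b, c) for _, b, c in samples)
    n_c = Counter(c for _, _, c in samples)
    total = 0.0
    for (a, b, c), count in n_abc.items():
        # p(a,b,c) * ln[ p(a,b,c) p(c) / (p(a,c) p(b,c)) ] with empirical frequencies
        total += (count / n) * log(count * n_c[c] / (n_ac[(a, c)] * n_bc[(b, c)]))
    return total


def transfer_entropy(source, target):
    """TE from source to target with l = l' = 1: I(S_k; T_{k+1} | T_k)."""
    triples = list(zip(source[:-1], target[1:], target[:-1]))
    return conditional_mutual_information(triples)


# Toy data (assumption): x is a sequence of fair coin flips,
# and y copies x with a one-step delay, y_{k+1} = x_k.
rng = random.Random(0)
x = [rng.randint(0, 1) for _ in range(5000)]
y = [0] + x[:-1]

te_x_to_y = transfer_entropy(x, y)  # close to ln 2, because y_{k+1} is fully set by x_k
te_y_to_x = transfer_entropy(y, x)  # close to 0, because x is not driven by y
```

In this toy example the estimated flow is strongly asymmetric, which is the directedness that the symmetric mutual information cannot express.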

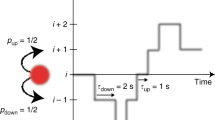

Schematics of TE and BTE.

Two graphs (a) and (b) are the Bayesian networks corresponding to the joint probabilities $p(X_k = x_k, Y_k = y_k, Y_{k+1} = y_{k+1}) = p(X_k = x_k)\,p(Y_k = y_k \mid X_k = x_k)\,p(Y_{k+1} = y_{k+1} \mid X_k = x_k, Y_k = y_k)$ and $p(X_{m+1} = x_{m+1}, Y_m = y_m, Y_{m+1} = y_{m+1}) = p(Y_m = y_m)\,p(Y_{m+1} = y_{m+1} \mid Y_m = y_m)\,p(X_{m+1} = x_{m+1} \mid Y_{m+1} = y_{m+1}, Y_m = y_m)$, respectively (see also refs 15, 37 and 60). (a) Transfer entropy $I(X_k; Y_{k+1} \mid Y_k)$ corresponds to the edge from $X_k$ to $Y_{k+1}$ on the Bayesian network. If this TE is zero, the edge from $X_k$ to $Y_{k+1}$ vanishes, i.e., $p(X_k = x_k, Y_k = y_k, Y_{k+1} = y_{k+1}) = p(X_k = x_k)\,p(Y_k = y_k \mid X_k = x_k)\,p(Y_{k+1} = y_{k+1} \mid Y_k = y_k)$. (b) Backward transfer entropy $I(X_{m+1}; Y_m \mid Y_{m+1})$ corresponds to the edge from $Y_m$ to $X_{m+1}$ on the Bayesian network. If this BTE is zero, the edge from $Y_m$ to $X_{m+1}$ vanishes, i.e., $p(X_{m+1} = x_{m+1}, Y_m = y_m, Y_{m+1} = y_{m+1}) = p(Y_m = y_m)\,p(Y_{m+1} = y_{m+1} \mid Y_m = y_m)\,p(X_{m+1} = x_{m+1} \mid Y_{m+1} = y_{m+1})$.

Backward transfer entropy

Here, we introduce BTE as a novel usage of TE for the backward paths. We first consider the backward path of the system $\mathcal{X}$ ($\mathcal{Y}$): $\mathcal{X}_k^{\dagger(l)} := \{x_{N-k+l}, \dots, x_{N-k+1}\}$ ($\mathcal{Y}_k^{\dagger(l)} := \{y_{N-k+l}, \dots, y_{N-k+1}\}$), which is the time-reversed trajectory of the system $\mathcal{X}$ ($\mathcal{Y}$) from time $N - k + l$ to $N - k + 1$. We now introduce the concept of BTE, defined as TE for the backward paths,

$$T_{X_k^{\dagger(l)} \to Y_{k'+1}^{\dagger(l')}} := I(X_k^{\dagger(l)}; Y_{k'+1}^{\dagger} \mid Y_{k'}^{\dagger(l')}) = I(X_{m+l}^{(l)}; Y_{m'} \mid Y_{m'+l'}^{(l')}) \tag{6}$$

with $m = N - k$, $m' = N - k'$ and $k \leq k'$. In this sense, BTE may represent “the time-reversed directed information flow from the future to the past.” Although BTE is well defined as a conditional mutual information, it is nontrivial whether such a concept makes any sense information-theoretically or physically, because the stochastic dynamics of the composite system itself do not necessarily have time-reversal symmetry.

To clarify the proper meaning of BTE, we compare the BTE $I(X_{m+1}; Y_m \mid Y_{m+1})$ with the TE $I(X_k; Y_{k+1} \mid Y_k)$ [see Fig. 1]. Transfer entropy quantifies the dependence on $X_k$ of the transition from $Y_k$ to $Y_{k+1}$ [see Fig. 1(a)]. In the same way, BTE quantifies the dependence on $Y_m$ of the correlation between $X_{m+1}$ and $Y_{m+1}$ [see Fig. 1(b)]. Thus, BTE indicates whether $X_{m+1}$ depends on $Y_{m+1}$ without any dependence on the past state $Y_m$. In other words, this BTE is nonnegative and vanishes if and only if a Markov chain $Y_m \to Y_{m+1} \to X_{m+1}$ exists, which implies that the dynamics of X are given by a hidden Markov model. In general, BTE $I(X_{m+l}^{(l)}; Y_{m'} \mid Y_{m'+l'}^{(l')})$ is nonnegative and vanishes if and only if a Markov chain

$$Y_{m'} \to Y_{m'+l'}^{(l')} \to X_{m+l}^{(l)} \tag{7}$$

exists. Therefore, BTE from $\mathcal{X}$ to $\mathcal{Y}$ quantifies how far the composite dynamics of $\mathcal{X}$ and $\mathcal{Y}$ are from a hidden Markov model in $\mathcal{X}$.
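The hidden Markov criterion can be checked on a small exactly solvable case. The Python sketch below computes the single-step BTE $I(X_{m+1}; Y_m \mid Y_{m+1})$ from an exact joint distribution for two binary toy models: one in which $X_{m+1}$ is a noisy copy of $Y_{m+1}$ only (a hidden Markov model), and one in which $X_{m+1}$ is a noisy copy of the past state $Y_m$. All numerical values, function names and the two emission rules are assumptions chosen for illustration.

```python
from math import log


def conditional_mutual_information(joint):
    """I(A; B | C) in nats from an exact joint pmf given as {(a, b, c): probability}."""
    p_ac, p_bc, p_c = {}, {}, {}
    for (a, b, c), p in joint.items():
        p_ac[(a, c)] = p_ac.get((a, c), 0.0) + p
        p_bc[(b, c)] = p_bc.get((b, c), 0.0) + p
        p_c[c] = p_c.get(c, 0.0) + p
    return sum(
        p * log(p * p_c[c] / (p_ac[(a, c)] * p_bc[(b, c)]))
        for (a, b, c), p in joint.items()
        if p > 0.0
    )


def backward_transfer_entropy(p_y, p_trans, p_x_given):
    """BTE for l = l' = 1: I(X_{m+1}; Y_m | Y_{m+1}).

    p_y[y]                  : p(Y_m = y)
    p_trans[y][y_next]      : p(Y_{m+1} = y_next | Y_m = y)
    p_x_given(x, y_next, y) : p(X_{m+1} = x | Y_{m+1} = y_next, Y_m = y)
    """
    joint = {}
    for y in (0, 1):
        for y_next in (0, 1):
            for x in (0, 1):
                # key order (a, b, c) = (x_{m+1}, y_m, y_{m+1})
                joint[(x, y, y_next)] = p_y[y] * p_trans[y][y_next] * p_x_given(x, y_next, y)
    return conditional_mutual_information(joint)


p_y = [0.5, 0.5]                    # stationary distribution of Y (assumed)
p_trans = [[0.8, 0.2], [0.2, 0.8]]  # Y keeps its state with probability 0.8 (assumed)


def emission_hmm(x, y_next, y):
    # Hidden Markov model: X_{m+1} is a noisy copy of Y_{m+1} only.
    return 0.9 if x == y_next else 0.1


def emission_delayed(x, y_next, y):
    # Not a hidden Markov model: X_{m+1} is a noisy copy of the past state Y_m.
    return 0.9 if x == y else 0.1


bte_hmm = backward_transfer_entropy(p_y, p_trans, emission_hmm)          # zero up to rounding
bte_delayed = backward_transfer_entropy(p_y, p_trans, emission_delayed)  # strictly positive
```

The first model satisfies the Markov chain $Y_m \to Y_{m+1} \to X_{m+1}$ and gives a vanishing BTE, while the second violates it and gives a positive BTE.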

Thermodynamics of information

We next discuss a thermodynamic meaning of BTE. To clarify the interpretation of BTE in nonequilibrium stochastic thermodynamics, we consider the following non-Markovian interacting dynamics

where a nonnegative integer $n$ represents the time delay between $\mathcal{X}$ and $\mathcal{Y}$. The stochastic entropy change in the heat bath attached to the system $\mathcal{X}$ from time 1 to $N$ in the presence of $\mathcal{Y}$ [15] is defined as

where $p_B$ is the transition probability of backward dynamics, which satisfies the normalization of the probability. For example, if the systems $\mathcal{X}$ and $\mathcal{Y}$ do not include any odd variable that changes its sign under the time-reversal transformation, the backward probability is given by $p_B(X_k = x_k \mid X_{k+1} = x_{k+1}, Y_{k-n} = y_{k-n}) = p(X_{k+1} = x_k \mid X_k = x_{k+1}, Y_{k-n} = y_{k-n})$ with $k \geq n + 1$ ($p_B(X_k = x_k \mid X_{k+1} = x_{k+1}, Y_1 = y_1) = p(X_{k+1} = x_k \mid X_k = x_{k+1}, Y_1 = y_1)$ with $k \leq n$). This definition of the entropy change in the heat bath, Eq. (9), is well known as the local detailed balance or the detailed fluctuation theorem [45]. We define the entropy change in $\mathcal{X}$ and the heat bath as the sum of this bath entropy change and the stochastic Shannon entropy change in $\mathcal{X}$.

For the non-Markovian interacting dynamics Eq. (8), we have the following inequality (see Method):

We add that one of the terms in this inequality vanishes for the Markovian interacting dynamics ($n = 0$).

These results [Eqs (12) and (13)] can be interpreted as a generalized second law of thermodynamics for the subsystem $\mathcal{X}$ in the presence of information flow from $\mathcal{X}$ to $\mathcal{Y}$. If there is no interaction between $\mathcal{X}$ and $\mathcal{Y}$, all informational terms vanish, including $I(X_N; Y_N) = 0$ and $I(X_1; Y_1) = 0$. Thus these results reproduce the conventional second law of thermodynamics, which indicates the nonnegativity of the entropy change in $\mathcal{X}$ and the bath [45]. If there is some interaction between $\mathcal{X}$ and $\mathcal{Y}$, this entropy change can be negative, and its lower bound is given by the sum of TE from X to Y and the mutual information between $\mathcal{X}$ and $\mathcal{Y}$ at the initial time;

which is a nonnegative quantity. In information theory, this quantity is known as DI from $\mathcal{X}$ to $\mathcal{Y}$ [27]. Intuitively speaking, the entropy change in $\mathcal{X}$ and the bath quantifies a kind of thermodynamic benefit, because its negativity is related to the work extraction in $\mathcal{X}$ in the presence of $\mathcal{Y}$ [32]. Thus, the weaker bound (13) implies that the sum of TE quantifies a possible thermodynamic benefit of $\mathcal{X}$ in the presence of $\mathcal{Y}$.

We next consider the sum of TE for the time-reversed trajectories;

which is given by the sum of BTE and the mutual information between $\mathcal{X}$ and $\mathcal{Y}$ at the final time. The tighter bound (12) can be rewritten as the difference between the sum of TE and the sum of BTE;

This result implies that a possible benefit should be reduced by up to the sum of BTE. Thus, the sum of BTE means a loss of thermodynamic benefit. We add that the tighter bound is not necessarily nonnegative, while the weaker bound is nonnegative.

We here consider the case of Markovian interacting dynamics ($n = 0$). For Markovian interacting dynamics, we have the following additivity for the tighter bound [see Supplementary Information (SI)]

where the sum of TE and the sum of BTE are each evaluated for a single time step. This additivity implies that the tighter bound for multiple time steps is equivalent to the sum of the tighter bounds for single time steps. We stress that the tighter bound for a single time step has been derived in ref. 13. We next consider the continuous limit $x_k = x(t = k\Delta t)$, $y_k = y(t = k\Delta t)$, and $N = O(\Delta t^{-1})$, where $t$ denotes continuous time, $\Delta t \ll 1$ is an infinitesimal time interval and the symbol $O$ is the Landau notation. Here we clarify the relationship between the tighter bound (16) and DIF [34] (or the learning rate [18]). For the bipartite Markov jump process [18] or two-dimensional Langevin dynamics without any correlation between thermal noises in X and Y [15], we have the following relationship [see also SI]

Thus a bound by TE and BTE is equivalent to a bound by DIF for such systems in the continuous limit.

Gambling with side information

In classical information theory, the formalism of gambling with side information is well known as another perspective on information theory, based on data compression over a noisy communication channel [4,30]. In gambling with side information, the mutual information between the result of the gamble and the side information gives a bound on the gambler’s benefit.

This formalism of gambling is similar to the above-mentioned result in thermodynamics of information. In thermodynamics, a thermodynamic benefit (e.g., work extraction) can be obtained by using information. Likewise, the gambler obtains a benefit by using side information. We here clarify the analogy between gambling and thermodynamics in the presence of information flow. In this analogy, BTE plays as crucial a role as TE.

We introduce the basic concept of gambling with side information given by the horse race [4,30]. Let $y_k$ be the horse that won the $k$-th horse race. Let $f_k \geq 0$ and $o_k \geq 0$ be the bet fraction and the odds on the $k$-th race, respectively. Let $m_k$ be the gambler’s wealth before the $k$-th race. Let $s_k$ be the side information at time $k$. We consider the set of side information $x_{k-1} = \{s_1, \dots, s_{k-1}\}$, which the gambler can access before the $k$-th race. The bet fraction $f_k$ is given by the function $f_k(y_k \mid \mathcal{Y}_{k-1}^{(k-1)}, x_{k-1})$ with $k \geq 2$, and $f_1(y_1 \mid x_1)$. The conditional dependence on $\{\mathcal{Y}_{k-1}^{(k-1)}, x_{k-1}\}$ ($\{x_1\}$) of $f_k$ ($f_1$) implies that the gambler can decide the bet fraction $f_k$ ($f_1$) by considering the past information $\{\mathcal{Y}_{k-1}^{(k-1)}, x_{k-1}\}$ ($\{x_1\}$). We assume normalizations of the bet fractions, $\sum_{y_k} f_k(y_k \mid \mathcal{Y}_{k-1}^{(k-1)}, x_{k-1}) = 1$ and $\sum_{y_1} f_1(y_1 \mid x_1) = 1$, which mean that the gambler bets all of his or her money in every race. We also assume that $\sum_{y_k} 1/o_k(y_k) = 1$. This condition is satisfied if the odds in every race are fair, i.e., $1/o_k(y_k)$ is given by a probability of $Y_k$.

The stochastic gambler’s wealth growth rate at the $k$-th race is given by

$$g_k := \ln \frac{m_{k+1}}{m_k} = \ln\left[f_k(y_k \mid \mathcal{Y}_{k-1}^{(k-1)}, x_{k-1})\, o_k(y_k)\right] \tag{19}$$

with $k \geq 2$ [$g_1 := \ln[f_1(y_1 \mid x_1)\, o_1(y_1)]$], which implies that the gambler’s wealth stochastically changes due to the bet fraction and the odds. The information theory of gambling with side information indicates that the ensemble average of the total wealth growth $G$ is bounded by the sum of TE (or DI) from X to Y [28,29] (see Method);

where $\langle \cdots \rangle$ indicates the ensemble average, and $H$ denotes the Shannon entropy. This result (21) implies that the sum of TE can be interpreted as a possible benefit of the gambler.
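To make the role of side information concrete, the following Python sketch treats only a single race with fair odds $1/o(y) = p(Y = y)$, where the bound on the average growth reduces to the mutual information between the side information and the winning horse. The joint distribution, the three betting strategies and all variable names are toy assumptions for illustration; the multi-race bounds by TE discussed above are not reproduced here.

```python
from math import log

# Joint distribution p(x, y) of side information x and winning horse y (assumed toy numbers).
p_xy = {
    (0, 0): 0.4, (0, 1): 0.1,
    (1, 0): 0.1, (1, 1): 0.4,
}
p_x = {x: sum(p for (a, _), p in p_xy.items() if a == x) for x in (0, 1)}
p_y = {y: sum(p for (_, b), p in p_xy.items() if b == y) for y in (0, 1)}

# Fair odds: 1 / o(y) is a probability distribution of Y, so sum_y 1 / o(y) = 1.
odds = {y: 1.0 / p_y[y] for y in (0, 1)}


def expected_growth(bet):
    """Ensemble average of ln[f(y|x) o(y)], where bet[(y, x)] = f(y|x)."""
    return sum(p * log(bet[(y, x)] * odds[y]) for (x, y), p in p_xy.items())


mutual_information = sum(
    p * log(p / (p_x[x] * p_y[y])) for (x, y), p in p_xy.items()
)

# Three betting strategies, each normalized so that sum_y f(y|x) = 1.
bet_proportional = {(y, x): p_xy[(x, y)] / p_x[x] for (x, y) in p_xy}      # f(y|x) = p(y|x)
bet_blind = {(y, x): p_y[y] for (x, y) in p_xy}                            # ignores x
bet_overconfident = {(y, x): 0.95 if y == x else 0.05 for (x, y) in p_xy}  # trusts x too much

growth_proportional = expected_growth(bet_proportional)    # attains I(X; Y)
growth_blind = expected_growth(bet_blind)                  # no growth without side information
growth_overconfident = expected_growth(bet_overconfident)  # stays below I(X; Y)
```

Betting in proportion to the conditional probability attains the information bound, whereas ignoring or over-trusting the side information yields a smaller average growth.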

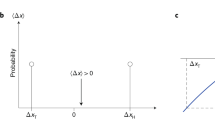

Schematic of the special case of the horse race.

The gambler can only access the past side information $x_{k-1}$ and the past races, and decides the bet fraction $f_k$ on the $k$-th race. The bookmaker cheats by accessing the future side information $x_{k+1}$ and the future races, and decides the odds on the $k$-th race.

We discuss the analogy between thermodynamics of information and gambling with side information. A weaker bound in gambling with side information (21) is similar to a weaker bound in thermodynamics of information (16), where the negative entropy change corresponds to the total wealth growth $G$. On the other hand, a tighter bound in gambling with side information (20) is rather different from a tighter bound by the sum of BTE in thermodynamics of information (16). We show that a tighter bound in gambling is also given by the sum of BTE if we consider the special case in which the bookmaker who decides the odds $o_k$ cheats in the horse race: the odds $o_k$ can be decided using the inaccessible side information $x_{k+1}$ and information about the future races [see also Fig. 2]. In this special case, the fair odds of the $k$-th race $o_k$ can be given by a conditional probability conditioned on the future information with $k \leq N - 1$, and $1/o_N(y_N) = p(Y_N = y_N \mid X_N = x_N)$. The inequality (20) can be rewritten as

which implies that the sum of BTE represents a loss of the gambler’s benefit because of the cheating by the bookmaker, who can access the future information with anti-causality. We stress that Eq. (22) has the same form as the thermodynamic inequality (16) for Markovian interacting dynamics ($n = 0$). This fact implies that thermodynamics of information can be interpreted as a special case of gambling with side information: the gambler uses the past information and the bookmaker uses the future information. If we regard thermodynamic dynamics as gambling, anti-causal effects should be considered.

Causality

We here show that BTE itself is related to anti-causality without considering gambling. From the viewpoint of the statistical hypothesis test, TE is equivalent to GC for Gaussian variables [25]. Therefore, it is naturally expected that BTE can be interpreted as a kind of causality test.

Suppose that we consider two linear regression models

$$y_{k'+1} = \alpha + A \cdot (\mathcal{X}_k^{(l)} \oplus \mathcal{Y}_{k'}^{(l')}) + \epsilon, \tag{23}$$

$$y_{k'+1} = \alpha' + A' \cdot \mathcal{Y}_{k'}^{(l')} + \epsilon', \tag{24}$$

where $\alpha$ ($\alpha'$) is a constant term, $A$ ($A'$) is the vector of regression coefficients, $\oplus$ denotes concatenation of vectors, and $\epsilon$ ($\epsilon'$) is an error term. The Granger causality of $\mathcal{X}$ to $\mathcal{Y}$ quantifies how the past time series of $\mathcal{X}$ in the first model reduces the prediction error of $\mathcal{Y}$ compared to the error in the second model. Performing ordinary least squares to find the regression coefficients $A$ ($A'$) and $\alpha$ ($\alpha'$) that minimize the variance of $\epsilon$ ($\epsilon'$), the standard measure of GC is given by

$$G_{\mathcal{X} \to \mathcal{Y}} := \ln \frac{\mathrm{var}(\epsilon')}{\mathrm{var}(\epsilon)}, \tag{25}$$

where $\mathrm{var}(\epsilon)$ denotes the variance of $\epsilon$. Here we assume that the joint probability of $X_k^{(l)}$, $Y_{k'}^{(l')}$ and $Y_{k'+1}$ is Gaussian. Under the Gaussian assumption, TE and GC are equivalent up to a factor of 2,

$$G_{\mathcal{X} \to \mathcal{Y}} = 2\, T_{X_k^{(l)} \to Y_{k'+1}^{(l')}}. \tag{26}$$

In the same way, we discuss BTE from the viewpoint of GC. Here we assume that the joint probability of $X_{m+l}^{(l)}$, $Y_{m'+l'}^{(l')}$ and $Y_{m'}$ is Gaussian. Suppose that we consider two linear regression models

$$y_{m'} = \alpha^{\dagger} + A^{\dagger} \cdot (\mathcal{X}_{m+l}^{(l)} \oplus \mathcal{Y}_{m'+l'}^{(l')}) + \epsilon^{\dagger}, \tag{27}$$

$$y_{m'} = \alpha'^{\dagger} + A'^{\dagger} \cdot \mathcal{Y}_{m'+l'}^{(l')} + \epsilon'^{\dagger}, \tag{28}$$

where $\alpha^{\dagger}$ ($\alpha'^{\dagger}$) is a constant term, $A^{\dagger}$ ($A'^{\dagger}$) is the vector of regression coefficients and $\epsilon^{\dagger}$ ($\epsilon'^{\dagger}$) is an error term. These linear regression models give a prediction of the past state of $\mathcal{Y}$ using the future time series of $\mathcal{X}$ and $\mathcal{Y}$. Intuitively speaking, we consider GC of $\mathcal{X}$ to $\mathcal{Y}$ for a rewound video of the composite dynamics of $\mathcal{X}$ and $\mathcal{Y}$. We call this causality test the Granger anti-causality of $\mathcal{X}$ to $\mathcal{Y}$. Performing ordinary least squares to find $A^{\dagger}$ ($A'^{\dagger}$) and $\alpha^{\dagger}$ ($\alpha'^{\dagger}$) that minimize $\mathrm{var}(\epsilon^{\dagger})$ ($\mathrm{var}(\epsilon'^{\dagger})$), we define a measure of the Granger anti-causality of $\mathcal{X}$ to $\mathcal{Y}$ as $G^{\dagger}_{\mathcal{X} \to \mathcal{Y}} := \ln[\mathrm{var}(\epsilon'^{\dagger})/\mathrm{var}(\epsilon^{\dagger})]$. The backward transfer entropy is equivalent to the Granger anti-causality up to a factor of 2,

$$G^{\dagger}_{\mathcal{X} \to \mathcal{Y}} = 2\, I(X_{m+l}^{(l)}; Y_{m'} \mid Y_{m'+l'}^{(l')}). \tag{29}$$

This fact implies that BTE can be interpreted as a kind of anti-causality test. We stress that the composite dynamics of $\mathcal{X}$ and $\mathcal{Y}$ are not necessarily driven with anti-causality even if the measure of the Granger anti-causality has a nonzero value. Just as GC finds only the predictive causality [23,24], the Granger anti-causality also finds only the predictive causality for the backward time series.
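The two regression-based measures can be sketched numerically for the simplest case $l = l' = 1$. The Python example below fits the full and restricted linear models by ordinary least squares (written out in closed form for one or two regressors), takes the log ratio of residual variances as the GC measure, and applies the same function to the time-reversed series to obtain the Granger anti-causality. The linear Gaussian toy dynamics, the coupling constants and the helper names are assumptions for illustration only.

```python
import random
from math import log


def covariance(u, v):
    """Sample covariance (normalized by len(u)) of two equally long sequences."""
    mean_u, mean_v = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v)) / len(u)


def residual_variance(target, regressors):
    """Residual variance of an OLS fit (with constant term) on one or two regressors."""
    var_t = covariance(target, target)
    if len(regressors) == 1:
        (r,) = regressors
        return var_t - covariance(target, r) ** 2 / covariance(r, r)
    r1, r2 = regressors
    s11, s22, s12 = covariance(r1, r1), covariance(r2, r2), covariance(r1, r2)
    c1, c2 = covariance(target, r1), covariance(target, r2)
    # explained variance c^T S^{-1} c, with the 2x2 inverse written out explicitly
    explained = (c1 * c1 * s22 - 2.0 * c1 * c2 * s12 + c2 * c2 * s11) / (s11 * s22 - s12 * s12)
    return var_t - explained


def granger_causality(source, target):
    """GC of source to target with l = l' = 1: ln[var(eps') / var(eps)]."""
    future, past_target, past_source = target[1:], target[:-1], source[:-1]
    var_full = residual_variance(future, [past_source, past_target])
    var_restricted = residual_variance(future, [past_target])
    return log(var_restricted / var_full)


def granger_anti_causality(source, target):
    """GC evaluated on the time-reversed (rewound) series."""
    return granger_causality(source[::-1], target[::-1])


# Toy linear Gaussian dynamics (assumption): x drives y, but y does not drive x.
rng = random.Random(0)
n_steps = 20000
x, y = [0.0], [0.0]
for _ in range(n_steps - 1):
    x_next = 0.5 * x[-1] + rng.gauss(0.0, 1.0)
    y_next = 0.5 * y[-1] + 0.8 * x[-1] + rng.gauss(0.0, 1.0)
    x.append(x_next)
    y.append(y_next)

gc_x_to_y = granger_causality(x, y)            # clearly positive
gc_y_to_x = granger_causality(y, x)            # close to zero
te_x_to_y = 0.5 * gc_x_to_y                    # Gaussian TE estimate via G = 2T
anti_gc_x_to_y = granger_anti_causality(x, y)  # corresponds to twice the Gaussian BTE
```

Because the restricted model is nested in the full model, both measures are nonnegative in sample, and a nonzero anti-causality value here reflects predictive structure in the reversed series rather than any anti-causal driving.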

Discussion

We proposed a directed measure of information called BTE, which is possibly useful to detect a hidden Markov model (7) and predictive anti-causality (29). In both settings of thermodynamics and gambling, the measurement of BTE has a practical meaning: the detection of a loss of a possible benefit in the inequalities (16) and (22).

The concept of BTE can provide a clear perspective in the studies of the biochemical sensor and thermodynamics of information, because the difference between TE and DIF has attracted attention recently in these fields [14,35]. In ref. 14, Hartich et al. have proposed a novel informational measure for the biochemical sensor called the sensory capacity. The sensory capacity is defined as the ratio between TE and DIF. Because DIF can be rewritten by TE and BTE [Eq. (18)] for Markovian interacting dynamics, we have the following expression for the sensory capacity in a stationary state,

where we used $I(X_{k+1}; Y_{k+1}) = I(X_k; Y_k)$ in a stationary state. This fact indicates that the ratio between TE and BTE could be useful to quantify the performance of the biochemical sensor. By using this expression, we show that the maximum value of the sensory capacity $C = 1$ can be achieved if a Markov chain of a hidden Markov model $Y_k \to Y_{k+1} \to X_{k+1}$ exists. In ref. 35, Horowitz and Sandberg have shown a comparison between two thermodynamic bounds by TE and DIF for two-dimensional Langevin dynamics. For the Kalman-Bucy filter, which is the optimal controller, they have found that DIF is equivalent to TE in a stationary state. This finding can be clarified by the concept of BTE. Because the Kalman-Bucy filter can be interpreted as a hidden Markov model, BTE should be zero, and DIF is equivalent to TE in a stationary state.

Our results can be interpreted as a generalization of previous works in thermodynamics of information [46,47,48]. In refs 46 and 47, S. Still et al. discuss prediction in thermodynamics for Markovian interacting dynamics. In our results, we show the connection between thermodynamics of information and the predictive causality from the viewpoint of GC. Thus, our results give a new insight into these works on prediction in thermodynamics. In ref. 48, G. Diana and M. Esposito have introduced the time-reversed mutual information for Markovian interacting dynamics. In our results, we introduce BTE, which is TE in the time-reversed way. Thus, our result provides a similar description of thermodynamics by introducing BTE, even for non-Markovian interacting dynamics.

We point out the time symmetry in the generalized second law (12). For Markovian interacting dynamics, the equality in Eq. (12) holds if the dynamics of $\mathcal{X}$ have a local reversibility (see SI). Here we consider a time-reversed transformation, and assume a local reversibility such that the backward probability $p_B(A = a \mid B = b)$ equals the original probability $p(A = a \mid B = b)$ for any random variables $A$ and $B$. Under this transformation, the generalized second law Eq. (12) changes its sign. Thus, the generalized second law (12) has the same time symmetry as the conventional second law for the entropy change in total systems $\Delta S_{\rm tot}$, even for non-Markovian interacting dynamics. In other words, the generalized second law (12) provides the arrow of time, as the conventional second law does. This fact may indicate that BTE is as useful as TE in physical situations where the time symmetry plays a crucial role in physical laws.

We also point out that this paper clarifies the analogy between thermodynamics of information and gambling. An analogy between gambling and thermodynamics has been proposed in ref. 49; however, the analogy between Eqs (16) and (22) is different from the one in ref. 49. In ref. 49, D. A. Vinkler et al. discuss the particular case of work extraction in the Szilard engine, and regard this work extraction as gambling. In contrast, our result provides an analogy between the general law of thermodynamics of information and gambling. Building on this analogy, we may apply the theory of gambling, for example portfolio theory50,51, to thermodynamic situations in general. We also stress that gambling with side information is directly connected with data compression in information theory4. Therefore, the generalized second law of thermodynamics may also be directly connected with data compression in information theory. In such applications, BTE would play a tricky role in the theory of gambling, where the odds should be decided with anti-causality.

Finally, we discuss the usage of BTE in time series analysis. In principle, we prepare the backward time series data from the original time series data, and calculate BTE as the TE of the backward series. By calculating BTE, we can estimate how far the dynamics of two time series are from a hidden Markov model, or detect predictive causality for the backward time series. In physical situations, we can also assess thermodynamic performance by comparing BTE with TE. If the sum of BTE from the target system to the other systems is larger than the sum of TE from the target system to the other systems, the target system could seem to violate the second law of thermodynamics because of the inequality (16), where the other systems play a role similar to that of Maxwell’s demon. Therefore, BTE could be useful to detect phenomena of Maxwell’s demon in several settings such as Brownian particles52,53, electric devices54,55, and biochemical networks13,56,57,58,59,60.
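The procedure described above (reverse both series, then compute TE) can be sketched in a few lines of Python. This is a minimal illustration and not the author's implementation: it assumes discrete-valued series, a history length of one, and a simple plug-in (frequency-count) estimator, and the function names `transfer_entropy` and `backward_transfer_entropy` are hypothetical.

```python
from collections import Counter
from math import log


def transfer_entropy(source, target):
    """Plug-in estimate of I(source_k; target_{k+1} | target_k), in nats.

    Assumes discrete symbols and history length 1 (illustrative choices).
    """
    if len(source) != len(target):
        raise ValueError("source and target must have the same length")
    # Each sample is (x_k, y_k, y_{k+1}).
    triples = list(zip(source[:-1], target[:-1], target[1:]))
    n = len(triples)
    c_xyz = Counter(triples)
    c_xy = Counter((x, y) for x, y, _ in triples)
    c_yz = Counter((y, z) for _, y, z in triples)
    c_y = Counter(y for _, y, _ in triples)
    te = 0.0
    for (x, y, z), c in c_xyz.items():
        # p(x,y,z) * ln[ p(x,y,z) p(y) / (p(x,y) p(y,z)) ] written with counts
        te += (c / n) * log(c * c_y[y] / (c_xy[(x, y)] * c_yz[(y, z)]))
    return te


def backward_transfer_entropy(source, target):
    """TE of the time-reversed series, i.e. I(source_{k+1}; target_k | target_{k+1})."""
    return transfer_entropy(source[::-1], target[::-1])


# Toy data: the target copies the source with a one-step delay.
src = [0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 0]
tgt = [0] + src[:-1]
print("TE  source -> target:", transfer_entropy(src, tgt))
print("BTE source -> target:", backward_transfer_entropy(src, tgt))
```

For short series the plug-in estimator is biased upward, so in practice a comparison of BTE with TE would need longer data or a bias-corrected estimator; that choice is outside the scope of this sketch.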

Method

The outline of the derivation of inequality (12)

We here show the outline of the derivation of the generalized second law (12) [see also SI for details]. In SI, we show that the quantity [equation] can be rewritten as the Kullback-Leibler divergence4 [equation], where [equation] and [equation] are nonnegative functions that satisfy the normalizations [equation] and [equation]. Due to the nonnegativity of the Kullback-Leibler divergence, we obtain the inequality (12), i.e., [equation]. We add that the integrated fluctuation theorem corresponding to the inequality (12) is also valid, i.e., [equation].
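The nonnegativity step can be written out for generic normalized functions. In the sketch below, ρ and π stand for any two nonnegative normalized functions; the argument label z and the sum over z are notational assumptions made here, not the specific variables of the paper, and we assume ρ(z) > 0 wherever π(z) > 0.

```latex
% Kullback-Leibler divergence of two normalized nonnegative functions
D_{\mathrm{KL}}(\rho \,\|\, \pi) = \sum_{z} \rho(z) \ln \frac{\rho(z)}{\pi(z)} .

% Nonnegativity from \ln t \le t - 1:
-D_{\mathrm{KL}}(\rho \,\|\, \pi)
  = \sum_{z} \rho(z) \ln \frac{\pi(z)}{\rho(z)}
  \le \sum_{z} \rho(z) \left( \frac{\pi(z)}{\rho(z)} - 1 \right)
  = \sum_{z} \pi(z) - \sum_{z} \rho(z) = 0 .

% Integral-fluctuation-theorem form and Jensen's inequality:
\left\langle \frac{\pi}{\rho} \right\rangle_{\rho}
  = \sum_{z} \rho(z) \frac{\pi(z)}{\rho(z)} = 1 ,
\qquad
\left\langle \ln \frac{\pi}{\rho} \right\rangle_{\rho}
  \le \ln \left\langle \frac{\pi}{\rho} \right\rangle_{\rho} = 0 .
```

Both routes give the same inequality; the second also shows why an identity of the integrated-fluctuation-theorem type accompanies any bound obtained from a Kullback-Leibler divergence.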

The outline of the derivation of inequality (20)

We here show the outline of the derivation of the gambling inequality (20) [see also SI for details]. The quantity [equation] can be rewritten as the Kullback-Leibler divergence DKL(ρ || π), where [equation] and [equation] are nonnegative functions that satisfy the normalizations [equation] and [equation]. Due to the nonnegativity of the Kullback-Leibler divergence, we have the inequality (20), i.e., [equation].

Additional Information

How to cite this article: Ito, S. Backward transfer entropy: Informational measure for detecting hidden Markov models and its interpretations in thermodynamics, gambling and causality. Sci. Rep. 6, 36831; doi: 10.1038/srep36831 (2016).

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

Hamilton, J. D. “Time Series Analysis” (Princeton University Press, Princeton, 1994).

Gao, Z. K. & Jin, N. D. A directed weighted complex network for characterizing chaotic dynamics from time series. Nonlinear Analysis: Real World Applications, 13, 947–952 (2012).

Ahmed, M. U. & Mandic, D. P. Multivariate multiscale entropy analysis. IEEE Signal Processing Letters, 19, 91–94 (2012).

Cover, T. M. & Thomas, J. A. “Elements of Information Theory” (John Wiley and Sons, New York, 1991).

Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 85, 461 (2000).

Kaiser, A. & Schreiber, T. Information transfer in continuous processes. Physica D 166, 43–62 (2002).

Hlaváčková-Schindler, K., Paluš, M., Vejmelka, M. & Bhattacharya, J. Causality detection based on information-theoretic approaches in time series analysis. Phys. Rep. 441, 1–46 (2007).

Marschinski, R. & Kantz, H. Analyzing the information flow between financial time series. Eur. Phys. J. B 30, 275 (2002).

Lungarella, M. & Sporns, O. Mapping information flow in sensorimotor networks. PLoS Comput. Biol. 2, e144 (2006).

Vicente, R., Wibral, M., Lindner, M. & Pipa, G. Transfer entropy - a model-free measure of effective connectivity for the neurosciences. J. Comput. Neurosci. 30, 45–67 (2011).

Wibral, M. et al. Measuring information-transfer delays. PLoS ONE 8, e55809 (2013).

Bauer, M., Cox, J. W., Caveness, M. H., Downs, J. J. & Thornhill, N. F. Finding the direction of disturbance propagation in a chemical process using transfer entropy. IEEE Trans. Control Syst. Techn. 15, 12–21 (2007).

Ito, S. & Sagawa, T. Maxwell’s demon in biochemical signal transduction with feedback loop. Nat. Commun. 6, 7498 (2015).

Hartich, D., Barato, A. C. & Seifert, U. Sensory capacity: An information theoretical measure of the performance of a sensor. Phys. Rev. E 93, 022116 (2016).

Ito, S. & Sagawa, T. Information thermodynamics on causal networks. Phys. Rev. Lett. 111, 180603 (2013).

Prokopenko, M., Lizier, J. T. & Price, D. C. On thermodynamic interpretation of transfer entropy. Entropy 15, 524–543 (2013).

Barnett, L., Lizier, J. T., Harré, M., Seth, A. K. & Bossomaier, T. Information flow in a kinetic Ising model peaks in the disordered phase. Phys. Rev. Lett. 111, 177203 (2013).

Hartich, D., Barato, A. C. & Seifert, U. Stochastic thermodynamics of bipartite systems: transfer entropy inequalities and a Maxwell’s demon interpretation. J. Stat. Mech. P02016 (2014).

Prokopenko, M. & Einav, I. Information thermodynamics of near-equilibrium computation. Phys. Rev. E 91, 062143 (2015).

Wibral, M., Vicente, R. & Lizier, J. T. (Eds.) Directed Information Measures in Neuroscience (Springer, Heidelberg, 2014).

Staniek, M. & Lehnertz, K. Symbolic transfer entropy. Phys. Rev. Lett. 100, 158101 (2008).

Williams, P. L. & Beer, R. D. “Generalized measures of information transfer.” arXiv preprint arXiv:1102.1507 (2011).

Granger, C. W., Ghysels, E., Swanson, N. R. & Watson, M. W. “Essays in econometrics: collected papers of Clive WJ Granger” (Cambridge University Press 2001).

Granger, C. W. Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37, 424–438 (1969).

Barnett, L., Barrett, A. B. & Seth, A. K. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett. 103, 238701 (2009).

Marko, H. The bidirectional communication theory - a generalization of information theory. IEEE Trans. Commun. 21, 1345 (1973).

Massey, J. Causality, feedback and directed information. In Proc. Int. Symp. Inf. Theory Applic. 303–305 (1990).

Hirono, Y. & Hidaka, Y. Jarzynski-type equalities in gambling: role of information in capital growth. J. Stat. Phys. 161, 721 (2015).

Permuter, H. H., Kim, Y. H. & Weissman, T. On directed information and gambling. In Proc. International Symposium on Information Theory (ISIT), 1403 (2008).

Kelly, J. A new interpretation of information rate. Bell Syst. Tech. J. 35, 917–926 (1956).

Sagawa, T. & Ueda, M. Generalized Jarzynski equality under nonequilibrium feedback control. Phys. Rev. Lett. 104, 090602 (2010).

Parrondo, J. M., Horowitz, J. M. & Sagawa, T. Thermodynamics of information. Nat. Phys. 11, 131–139 (2015).

Allahverdyan, A. E., Janzing, D. & Mahler, G. Thermodynamic efficiency of information and heat flow. J. Stat. Mech. P09011 (2009).

Horowitz, J. M. & Esposito, M. Thermodynamics with continuous information flow. Phys. Rev. X, 4, 031015 (2014).

Horowitz, J. M. & Sandberg, H. Second-law-like inequalities with information and their interpretations. New J. Phys. 16, 125007 (2014).

Horowitz, J. M. Multipartite information flow for multiple Maxwell demons. J. Stat. Mech. P03006 (2015).

Ito, S. & Sagawa, T. “Information flow and entropy production on Bayesian networks” arXiv: 1506.08519 (2015); a book chapter in M. Dehmer, F. Emmert-Streib, Z. Chen & Y. Shi (Eds.) “Mathematical Foundations and Applications of Graph Entropy” (Wiley-VCH Verlag, Weinheim, 2016).

Ito, S. Information thermodynamics on causal networks and its application to biochemical signal transduction (Springer Japan, 2016).

Shiraishi, N. & Sagawa, T. Fluctuation theorem for partially masked nonequilibrium dynamics. Phys. Rev. E. 91, 012130 (2015).

Shiraishi, N., Ito, S., Kawaguchi, K. & Sagawa, T. Role of measurement-feedback separation in autonomous Maxwell’s demons. New J. Phys. 17, 045012 (2015).

Rosinberg, M. L., Munakata, T. & Tarjus, G. Stochastic thermodynamics of Langevin systems under time-delayed feedback control: Second-law-like inequalities. Phys. Rev. E 91, 042114 (2015).

Cafaro, C., Ali, S. A. & Giffin, A. Thermodynamic aspects of information transfer in complex dynamical systems. Phys. Rev. E, 93, 022114 (2016).

Yamamoto, S., Ito, S., Shiraishi, N. & Sagawa, T. Linear Irreversible Thermodynamics and Onsager Reciprocity for Information-driven Engines. arXiv:1604.07988 (2016).

Sekimoto, K. Stochastic Energetics (Springer, 2010).

Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (2012).

Still, S., Sivak, D. A., Bell, A. J. & Crooks, G. E. Thermodynamics of prediction. Phys. Rev. Lett. 109, 120604 (2012).

Still, S. Information bottleneck approach to predictive inference. Entropy, 16, 968–989 (2014).

Diana, G. & Esposito, M. Mutual entropy production in bipartite systems. Journal of Statistical Mechanics: Theory and Experiment, P04010 (2014).

Vinkler, D. A., Permuter, H. H. & Merhav, N. Analogy between gambling and measurement-based work extraction. J. Stat. Mech. P043403 (2016).

Cover, T. M. & Ordentlich, E. Universal portfolios with side information. IEEE Trans. Inform. Theory 42, 348–363 (1996).

Permuter, H. H., Kim, Y. H. & Weissman, T. Interpretations of directed information in portfolio theory, data compression, and hypothesis testing. IEEE Trans. Inform. Theory 57, 3248–3259 (2011).

Toyabe, S., Sagawa, T., Ueda, M., Muneyuki, E. & Sano, M. Experimental demonstration of information-to-energy conversion and validation of the generalized Jarzynski equality. Nat. Phys. 6, 988 (2010).

Bérut, A., Arakelyan, A., Petrosyan, A., Ciliberto, S., Dillenschneider, R. & Lutz, E. Experimental verification of Landauer’s principle linking information and thermodynamics. Nature 483, 187 (2012).

Koski, J. V., Maisi, V. F., Sagawa, T. & Pekola, J. P. Experimental observation of the role of mutual information in the nonequilibrium dynamics of a Maxwell demon. Phys. Rev. Lett. 113, 030601 (2014).

Kutvonen, A., Koski, J. & Ala-Nissila, T. Thermodynamics and efficiency of an autonomous on-chip Maxwell’s demon. Sci. Rep. 6, 21126 (2016).

Sartori, P., Granger, L., Lee, C. F. & Horowitz, J. M. Thermodynamic costs of information processing in sensory adaptation. PLoS Comput. Biol. 10, e1003974 (2014).

Barato, A. C., Hartich, D. & Seifert, U. Efficiency of cellular information processing. New J. Phys. 16, 103024 (2014).

Bo, S., Del Giudice, M. & Celani, A. Thermodynamic limits to information harvesting by sensory systems. J. Stat. Mech. P01014 (2015).

Ouldridge, T. E., Govern, C. C. & Wolde, P. R. T. The thermodynamics of computational copying in biochemical systems. arXiv:1503.00909 (2015).

McGrath, T., Jones, N. S., Wolde, P. R. T. & Ouldridge, T. E. A biochemical machine for the interconversion of mutual information and work. arXiv:1604.05474 (2016).

Acknowledgements

We are grateful to Takumi Matsumoto, Takahiro Sagawa, Naoto Shiraishi and Shumpei Yamamoto for the fruitful discussion on a range of issues of thermodynamics. This work was supported by JSPS KAKENHI Grant Numbers JP16K17780 and JP15J07404.

Author information

Contributions

S.I. constructed the theory, carried out the analytical calculations, and wrote the paper.

Ethics declarations

Competing interests

The author declares no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/
