Abstract

Camera calibration based on point features is the dominant approach in vision-based measurement systems, in both fundamental research and applications. However, the calibration results are affected by the precision with which the feature points are extracted from the camera images. Because point features are sensitive to noise, line features, which are more robust to noise, are better suited to a stable calibration. We propose a calibration method that uses the perpendicularity of the lines on a 2D target. The objective function of the camera intrinsic parameters is constructed theoretically from the reverse projections, into the world coordinate system, of the image lines of the 2D target. We experimentally evaluate the perpendicularity method and compare it with point-feature methods at different distances. By exploiting the perpendicularity and the noise immunity of the lines, the method achieves a higher calibration precision than the point-based methods in our experiments.

Introduction

The camera is an important instrument in research on three-dimensional reconstruction and computer vision1,2,3,4,5. The purpose of camera calibration is to obtain the transform parameters between the 2D image and the 3D space, which is crucial for applications in biology6, materials7, image processing8,9,10,11, photon imaging12,13,14,15,16, physical measurement17,18,19,20, object detection21 and sensors22,23. A camera calibration system normally includes a CCD camera that captures the 2D image and a calibration target placed in the field of view of the camera in 3D space. The measurement precision of the camera depends on the calibrated parameters, so it is important to calibrate the camera with a precise approach.

Camera calibration has been widely studied in recent years. The methods fall into three main categories: 3D cube-based, 2D plane-based and 1D bar-based calibration. The 3D calibration methods were initially developed by Abdel-Aziz24. A 3D cubic target is established to provide the coordinates of the 3D points, and the direct linear transform is used to determine the transform matrix of the camera. Xu25 presented a three-DOF (degree of freedom) global calibration system that accurately moves and rotates the 3D calibration board; a three-DOF global calibration model is constructed to calibrate binocular systems at different positions. The 2D plane-based methods26,27,28,29,30 were then introduced to make on-site calibration more convenient and to simplify target fabrication. Ying31 proposed a calibration method based on geometric invariants, in which the camera parameters are solved from the projections of two lines and three spheres. Shishir32 presented a method to calibrate a fish-eye lens camera by defining a mapping between the points in the world coordinate system and the corresponding point locations in the image plane. Bell33 proposed a method that calibrates the camera by using a digital display to generate fringe patterns that encode feature points into the carrier phase; these feature points are accurately recovered even if the fringe patterns are substantially blurred. Zhang34 outlined a camera calibration method based on a 1D target with feature balls. The calibration is solvable if one point is fixed, and a solution is obtained if six or more images of the 1D target are observed. Later, Miyagawa35 presented a simple camera calibration method from a single image using five points on two orthogonal 1D targets, with a bundle adjustment technique to optimize the camera parameters.

On the whole, although accurate camera calibration is achieved with 3D calibration targets, a precise 3D target is difficult to fabricate and carry, and its volume makes it hard to use in many fields. The 2D methods provide a more convenient calibration than the 3D methods. A 2D calibration target supplies sufficient geometrical feature information to calibrate the camera accurately36,37,38; it is also moderate in size and easy to fabricate. Although the 1D target has the simplest geometric model, the 1D calibration method yields less information per image than the 2D calibration method.

A reliable method based on a 2D calibration target is outlined in this paper. Whereas point features are normally adopted in 2D calibration methods, here the transform matrix is calculated from the relationship between the 2D projective lines in the image and the lines in the 3D space. The reprojection errors of the lines are then studied to verify the validity of the method. The point-based calibration methods are compared with the line-based method to evaluate the accuracy and the noise immunity of the calibration process. Finally, the lines on the target are reconstructed from the projective lines and the transform matrix with the camera parameters, and the objective function is built and optimized using the perpendicularity of the reconstructed lines. The perpendicularity method is compared with the original methods to evaluate the performance of the approaches.

Results

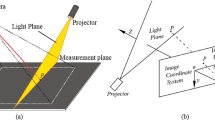

In camera calibration, it is important to establish the relationship between the coordinates of the 2D image and those of the 3D space. A camera calibration method based on the perpendicularity of 2D lines is proposed, as shown in Fig. 1. A 2D target with a checkerboard pattern is adopted as the calibration object to construct the perpendicularity of 2D lines. The world coordinate system Ow-XwYwZw is attached to the 2D calibration board, with its origin Ow located at the upper left corner of the board. The axes OwXw and OwYw are defined along two perpendicular sides of the target. The 2D calibration board is placed arbitrarily in the field of view of the camera. Oc-XcYcZc indicates the camera coordinate system, whose origin Oc is located at the optical center of the camera. OcXc and OcYc are parallel to OpXp and OpYp of the image coordinate system, respectively. OcZc is the optical axis, which is perpendicular to the image plane. ai and bi are two perpendicular lines generated from the checkerboard pattern on the 2D target. As the world coordinate system is attached to the 2D target, ai and bi are defined in the world coordinate system. a′i and b′i are the lines in the image coordinate system corresponding to the two perpendicular lines ai and bi in the world coordinate system. The transformation from the world coordinate system to the image coordinate system is a typical projective transformation; therefore, the line a′i is not usually perpendicular to the line b′i in the image. The vectors of the two lines a′i and b′i can be solved from the observations of the camera.

Figure 1: The method to calibrate a camera using the perpendicularity of dual 2D lines in observations.

Ow-XwYwZw, Op-XpYp and Oc-XcYcZc indicate the world coordinate system, the image coordinate system and the camera coordinate system, respectively. Ha is the homography matrix from the world coordinate system to the image coordinate system. ai and bi are two perpendicular lines in the world coordinate system. a′i and b′i are the two projective lines of ai and bi in the image coordinate system.

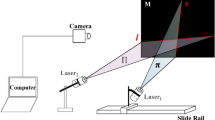

In the calibration method, the transform matrix Ha is first generated from the 2D lines in the world coordinate system and the 2D projective lines in the image coordinate system. The initial solutions of the intrinsic parameters of the camera are then obtained from the transform matrices of the observations. Finally, the optimal solutions are obtained by minimizing the objective function. To verify the validity of the method based on the perpendicularity of 2D lines, the experiments are accordingly divided into two parts: the initial solution experiments and the optimal solution experiments. The point-based methods proposed by Zhang37 and Tsai39, both of which are 2D plane-based calibrations, are adopted as the comparative methods. An A4 sheet printed with the checkerboard pattern covers the 2D target. Each square measures 10 mm × 10 mm. Four capture distances, 400 mm, 500 mm, 600 mm and 800 mm, are chosen to study the effect of distance. For each distance, ten images with a resolution of 1024 × 768 are captured to calibrate the camera. In the calibration process, 32 lines are defined by the checkerboard in the world coordinate system. Figure 2(a–d) shows the original images observed at the distances of 400 mm, 500 mm, 600 mm and 800 mm in the first group of images, respectively. Similarly, Fig. 2(e–h) shows the second group of images at the same four distances.

Figure 2: Two groups of experimental images of the target with the checkerboard pattern at different distances.

(a–d) The images at the distances of 400 mm, 500 mm, 600 mm and 800 mm, respectively, in the first group of experiments. (e–h) The images at the distances of 400 mm, 500 mm, 600 mm and 800 mm, respectively, in the second group of experiments.

The coordinates of the 2D projective lines in the images are extracted by the Hough transform40,41,42. The Hough transform finds a straight line in the parameter space, where it is less affected by noise, so the line extraction is more stable than the point extraction. Figure 3 shows the results of the Hough transform in the polar coordinate system. Each sinusoidal curve corresponds to a 2D point in the Cartesian coordinate system. The blue crosses mark the radial coordinates ρ and the angular coordinates θ of the 32 lines in the polar coordinate system. The extracted lines are illustrated in Fig. 4(a–h), which shows that the Hough transform accurately detects the lines on the calibration target.

Figure 3: The results of the Hough transform in the polar coordinate system.

The sinusoidal curves relate to the 2D points in the world coordinate system. The blue crosses represent the radial coordinates ρ and the angular coordinates θ of the 32 lines in the polar coordinate system. (a–h) correspond to Fig. 2(a–h), respectively.

Figure 4: The recognition results of the 2D lines in the Cartesian coordinate system.

The coordinates of the lines are derived from the radial coordinates ρ and the angular coordinates θ in the polar coordinate system. (a–h) correspond to Fig. 2(a–h), respectively.

The line-based method is compared with the point-based methods to verify the calibration validity and noise immunity. The transform matrix Ha of the line projection is obtained experimentally from the coordinates of the lines in the image coordinate system and the coordinates of the lines in the world coordinate system. The reprojection of a line aai in the world coordinate system is then obtained by applying the transform matrix Ha to aai43. The transform matrices of Zhang’s method and Tsai’s method are denoted by Hm and Ht, respectively; each maps a point Mi in the world coordinate system to a point mi in the image coordinate system43. The image points mi reprojected by Hm and by Ht are fitted to straight lines by the least squares method.

The errors of the three methods are defined with respect to the image line generated by the Hough transform: Δaai, the error of the line-based method, is taken against the reprojection of the line aai by the transform matrix Ha; Δami, the error of Zhang’s point-based method with the 2D plane target, is taken against the fitted line derived from the transform matrix Hm and the reprojections of points; and Δati, the error of Tsai’s point-based method with the 2D plane target, is taken against the fitted line derived from the transform matrix Ht and the reprojections of points.
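As an illustration of the step in which the reprojected image points are fitted to a straight line, the sketch below returns a homogeneous line for a set of 2D points. It is only a minimal example under stated assumptions: the function name `fit_line` is hypothetical, and the fit is an orthogonal (total) least-squares fit computed with the singular value decomposition, since the text does not say which least-squares variant is used.

```python
import numpy as np

def fit_line(points):
    """Fit a homogeneous line [a, b, c] with a*x + b*y + c = 0 to 2D points.

    Assumption: orthogonal least squares, so vertical lines are handled too.
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The line normal is the direction of least variance of the centred points.
    _, _, vt = np.linalg.svd(pts - centroid)
    a, b = vt[-1]
    # The fitted line passes through the centroid of the points.
    return np.array([a, b, -(a * centroid[0] + b * centroid[1])])
```

The returned vector is defined up to scale and sign, so two fitted lines are best compared after normalizing them in the same way.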

The errors of the line-based method, Zhang’s method and Tsai’s method are shown in Fig. 5(a–h). The first group of experiments corresponds to Fig. 5(a–d), with the mean errors and the variances listed in Table 1; the second group corresponds to Fig. 5(e–h), with the mean errors and the variances listed in Table 2. In both groups, the errors in the X direction, the errors in the Y direction and the root-mean-square errors of the line-based method are all far smaller than those of the point-based methods. The errors of the line-based method change little as the distance increases, whereas the errors of the point-based methods tend to increase with the distance. The error variances show the same pattern: those of the line-based method are smaller and fluctuate within a small range as the distance increases, while those of the point-based methods jump significantly. The results reveal that the line-based method is less affected by the capture distance than the point-based methods and, together with the analyses above, that it contributes higher accuracy to the camera calibration process.

Figure 5: The errors of the reprojected lines for the line-based calibration method and Zhang’s and Tsai’s point-based methods in the X direction, in the Y direction and as root-mean-square errors.

(a–h) correspond to Fig. 2(a–h), respectively. The red data are the experimental errors of the line-based calibration method, the blue data those of Zhang’s point-based calibration method and the orange data those of Tsai’s point-based calibration method. The red data lie below the blue and orange data, which indicates that the line-based calibration method contributes the higher accuracy.

The calibration accuracy of the three methods is further analyzed by adding different levels of Gaussian noise to the original images. The variances of the added noise are 0.0001, 0.0002, 0.0005, 0.001, 0.002, 0.005, 0.01, 0.02 and 0.05. The average errors are measured by the root-mean-square errors of the lines. The line-based method and Zhang’s and Tsai’s point-based methods are compared in Fig. 6, where the noise levels are plotted as base-10 logarithms and the average errors as natural logarithms for ease of observation. Figure 6(a–d) shows the relationship between the average errors and the noise levels at the distances of 400 mm, 500 mm, 600 mm and 800 mm, respectively. The errors of all three methods increase with increasing noise. At the distance of 400 mm, as the noise varies from 0.0001 to 0.05, the average root-mean-square error of the ten images using the line-based method increases from 9.06 × 10⁻⁵ to 4.10 × 10⁻², whereas the errors of Zhang’s and Tsai’s methods increase from 3.02 × 10⁻² to 2.62 × 10⁻¹ and from 5.14 × 10⁻² to 3.15 × 10⁻¹, respectively. At 500 mm, the error of the line-based method grows from 8.58 × 10⁻⁵ to 3.80 × 10⁻², and the errors of Zhang’s and Tsai’s methods grow from 2.81 × 10⁻² to 2.52 × 10⁻¹ and from 5.53 × 10⁻² to 3.84 × 10⁻¹, respectively. At 600 mm, the error of the line-based method increases from 9.88 × 10⁻⁵ to 4.08 × 10⁻², and the errors of Zhang’s and Tsai’s methods increase from 3.09 × 10⁻² to 2.61 × 10⁻¹ and from 5.54 × 10⁻² to 4.20 × 10⁻¹, respectively. At 800 mm, the error of the line-based method grows from 9.13 × 10⁻⁵ to 3.93 × 10⁻², and the errors of Zhang’s and Tsai’s methods grow from 3.21 × 10⁻² to 2.57 × 10⁻¹ and from 6.07 × 10⁻² to 3.85 × 10⁻¹, respectively. The average errors of the line-based method increase steadily as the noise rises, but they grow more slowly than the errors of the point-based methods across the nine noise levels. This indicates that the line-based method provides better noise immunity than the point-based calibration methods.
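The noise experiment can be mimicked with a few lines of Python. The sketch assumes that the image intensities are normalized to [0, 1] and that the listed variances refer to zero-mean Gaussian noise on that scale; neither detail is stated in the text, and the function name `add_gaussian_noise` is hypothetical.

```python
import numpy as np

# The nine noise variances listed in the text.
NOISE_VARIANCES = [0.0001, 0.0002, 0.0005, 0.001, 0.002, 0.005, 0.01, 0.02, 0.05]

def add_gaussian_noise(image, variance, rng=None):
    """Add zero-mean Gaussian noise of the given variance to an image in [0, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    image = np.asarray(image, dtype=float)
    noisy = image + rng.normal(0.0, np.sqrt(variance), image.shape)
    # Keep the result a valid normalized image (assumption: intensities in [0, 1]).
    return np.clip(noisy, 0.0, 1.0)

# Noise levels on a base-10 logarithmic scale, as used for the horizontal axis.
log10_noise = np.log10(NOISE_VARIANCES)
```

Each noisy image would then be passed through the same line or point extraction as the original image before the root-mean-square errors are compared.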

Figure 6: Average logarithmic errors of the line-based calibration and Zhang’s and Tsai’s point-based calibrations related to the logarithms of noises and the serial number of lines.

(a–d) The comparison of the errors using the line-based method and the point-based methods at the distances of 400 mm, 500 mm, 600 mm and 800 mm, respectively.

The calibration results are affected by noise in the camera calibration process. Therefore, the initial solution of the intrinsic parameters should be optimized to approach the real values of the parameters. The perpendicularity method is proposed to solve the optimal value of the intrinsic parameter matrix. The elements u0 and v0 give the principal point in pixels. As the principal point should theoretically coincide with the center of the image, u0 and v0 are chosen to evaluate the validity of the proposed optimization method. Figure 7 presents the initial and optimal values of the two elements u0 and v0 of the intrinsic parameter matrix. The dotted lines in Fig. 7 mark the coordinates of the image center in the image coordinate system. Comparative experiments are performed on the perpendicularity method, Zhang’s method and Tsai’s method at the different distances.

Figure 7: The calibration results of the principal point (u0, v0) generated from the perpendicularity method, Zhang’s method and Tsai’s method.

The red, blue and orange marks show how the initial solutions and the optimal solutions of the perpendicularity method, Zhang’s method and Tsai’s method, respectively, vary with the number of images. (a,b) The initial and the optimal values of u0 and v0, respectively, of the three methods at the distance of 400 mm. (c,d) The initial and the optimal values of u0 and v0, respectively, at the distance of 500 mm. (e,f) The initial and the optimal values of u0 and v0, respectively, at the distance of 600 mm. (g,h) The initial and the optimal values of u0 and v0, respectively, at the distance of 800 mm.

According to Fig. 7, the initial values of u0 and v0 from the perpendicularity method approach the coordinates of the image center as the number of images rises. The first few points are far from the dotted lines, but the optimal values of the perpendicularity method are all near the dotted lines. The initial values of u0 and v0 from Zhang’s and Tsai’s methods vary considerably as the number of images increases, while their optimal values also lie near the dotted lines as the number of images rises.

The means and the variances of the initial and optimal values of u0 and v0 are listed in Table 3. The calibrated intrinsic parameters of the camera at the different distances are shown in Table 4. According to these data, the mean values of the initial u0 and v0 from the perpendicularity method are closer to the coordinates of the image center than those from Zhang’s and Tsai’s methods. The optimal values of u0 and v0 of the perpendicularity method show an obviously decreasing trend, and their means are close to the coordinates of the image center. The optimal values of u0 and v0 from Zhang’s and Tsai’s optimization methods are also close to the coordinates of the image center, but the values of the perpendicularity method decrease faster. Moreover, the variances of the optimal values of u0 and v0 from Zhang’s and Tsai’s methods are larger than those of the perpendicularity method, with the following exceptions: the variances of the initial and optimal values of u0 at the distance of 400 mm, the optimal value of v0 at 500 mm, the initial value of u0 and the initial and optimal values of v0 at 600 mm, and the initial value of u0 at 800 mm.

Discussion

In the experimental results, the line-based calibration method provides an initial solution with higher accuracy, and the perpendicularity method provides a better optimization approach. In the camera calibration process, the coordinates of the geometrical features significantly affect the accuracy of the calibration. In the perpendicularity method, the Hough transform is employed to extract the coordinates of the lines. As the Hough transform has higher noise immunity than the point extraction method, the coordinates of lines are more accurate than the coordinates of points as geometrical features. Furthermore, the 2D lines are stable features with respect to the varying distance, whereas the 2D points identified in a close observation become smaller in a far observation. As the noise affects the images equally, a point feature tends to be located at different positions for different distances. Finally, as the lines pass through the feature points, the objective function adopts the perpendicularity of the lines as the optimization target, which includes more geometrical information than the superposition of feature points.

Methods

Previous methods adopt points as the calibration features, and the Harris corner detector38 is often chosen to extract the point coordinates in the image. In this paper, the coordinates of the lines in the image coordinate system are generated by the Hough transform40,41. An arbitrary 2D point in the Cartesian coordinate system corresponds to a sinusoidal curve in the polar parameter space spanned by the radial coordinate ρ and the angular coordinate θ. A line in the Cartesian coordinate system is therefore transferred by the Hough transform to a series of sinusoidal curves, one for each point on the line. The polar coordinates of the crossing point of these sinusoidal curves give the coordinates of the line in the Cartesian coordinate system. Thus, the Hough transform extracts a line in the image by solving for the optimal values of the radial coordinate ρ and the angular coordinate θ in the parameter space and transferring them to the Cartesian coordinate system.
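The voting idea behind the Hough transform can be sketched in a few lines of NumPy. This is a minimal illustration rather than the extraction pipeline used in the experiments: the function name `hough_line`, the one-degree angular step and the one-pixel radial step are assumptions, and only the single strongest line is returned.

```python
import numpy as np

def hough_line(points, n_theta=180, rho_res=1.0):
    """Return (rho, theta) of the strongest line through a set of 2D points."""
    pts = np.asarray(points, dtype=float)
    thetas = np.deg2rad(np.arange(n_theta))  # 0, 1, ..., n_theta - 1 degrees
    # Each point (x, y) traces the sinusoid rho = x*cos(theta) + y*sin(theta).
    rhos = pts[:, [0]] * np.cos(thetas) + pts[:, [1]] * np.sin(thetas)
    rho_max = np.abs(rhos).max()
    n_rho = int(np.ceil(2 * rho_max / rho_res)) + 1
    # Quantize rho and let every point vote along its sinusoid.
    rho_idx = np.round((rhos + rho_max) / rho_res).astype(int)
    theta_idx = np.broadcast_to(np.arange(n_theta), rho_idx.shape)
    acc = np.zeros((n_rho, n_theta), dtype=int)
    np.add.at(acc, (rho_idx, theta_idx), 1)
    # The crossing point of the sinusoids is the accumulator cell with most votes.
    r, t = np.unravel_index(acc.argmax(), acc.shape)
    return r * rho_res - rho_max, thetas[t]
```

The homogeneous line in the Cartesian coordinate system then follows from the returned pair as [cos θ, sin θ, −ρ].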

The calibration method includes two procedures, the initial solution and the optimal solution. The initial solutions of the homography matrix Ha and the camera parameters are solved first, in a similar way to the point-based calibration method37. The line transform from the 2D world coordinate system to the image coordinate system is represented as43

$$\mathbf{a}'_i = \mathbf{H}_a \mathbf{a}_i \qquad (7)$$

where ai = [ai, bi, ci]T, a′i = [a′i, b′i, c′i]T, Ha = [h1 h2 h3]T, hjT is the jth row of Ha, and Ha is the 3 × 3 transfer matrix of the camera.

As a′i and Haai represent the same line, their cross product is the zero vector 0, so a 2D projective line a′i in the image coordinate system and the 2D line ai in the world coordinate system satisfy

$$\mathbf{a}'_i \times \mathbf{H}_a \mathbf{a}_i = \mathbf{0} \qquad (8)$$

Equation (8) is rewritten as

$$\mathbf{G}\mathbf{h} = \mathbf{0} \qquad (9)$$

where G is the coefficient matrix assembled from the coordinates of the lines ai and a′i, and h = [h1T, h2T, h3T]T is the vector of the elements of Ha.

The singular value decomposition of the matrix G is expressed by44

$$\mathbf{G} = \mathbf{B}_1 \boldsymbol{\Lambda}_1 \mathbf{C}_1^{T} \qquad (10)$$

where B1 and C1 are orthogonal matrices, Λ1 is a diagonal matrix composed of the descending singular values.

From the right orthogonal matrix C1, we have44

$$\mathbf{h} = \mathbf{c}_9 \qquad (11)$$

where c9 is the column vector of C1 related to the smallest singular value in Λ1. Ha is obtained by arranging the vector h.
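The linear solution for Ha amounts to a direct linear transform on line coordinates. A minimal NumPy sketch is given below, assuming that the line correspondences are supplied as homogeneous 3-vectors; the function name `line_homography` is hypothetical, and writing out the three components of the cross product for each correspondence is one possible way to assemble G, not necessarily the arrangement used by the authors.

```python
import numpy as np

def line_homography(world_lines, image_lines):
    """Estimate H_a (up to scale) such that a'_i x (H_a a_i) = 0 for all lines.

    world_lines, image_lines: (n, 3) arrays of homogeneous lines, n >= 4.
    """
    rows = []
    zero = np.zeros(3)
    for a, ap in zip(np.asarray(world_lines, float), np.asarray(image_lines, float)):
        # Components of a' x (H_a a), linear in h = [h1; h2; h3] (rows of H_a).
        rows.append(np.concatenate([zero, -ap[2] * a, ap[1] * a]))
        rows.append(np.concatenate([ap[2] * a, zero, -ap[0] * a]))
        rows.append(np.concatenate([-ap[1] * a, ap[0] * a, zero]))
    G = np.vstack(rows)
    # h is the right singular vector of the smallest singular value of G.
    _, _, vt = np.linalg.svd(G)
    return vt[-1].reshape(3, 3)
```

Because the solution is a unit-norm null vector, the recovered matrix agrees with the true one only up to scale and sign.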

The transform matrix Ha stands for the projection between 2D world lines and 2D image lines, whereas the camera parameters are calculated from the point-based transform matrix Hm. Therefore, Ha should be converted to the point-based matrix Hm. Although the relationship between Ha and Hm is given in ref. 43, we derive it in another way as follows.

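Two 2D points x1 and x2 are projected to the image points x′1 and x′2 by the point-based transform matrix Hm as follows43

$$\mathbf{x}'_1 = \mathbf{H}_m \mathbf{x}_1 \qquad (12)$$

$$\mathbf{x}'_2 = \mathbf{H}_m \mathbf{x}_2 \qquad (13)$$

The 2D line l determined by the 2D points x1 and x2 is

$$\mathbf{l} = \mathbf{x}_1 \times \mathbf{x}_2 \qquad (14)$$

The projective line l′ determined by the image points x′1 and x′2 is

$$\mathbf{l}' = \mathbf{x}'_1 \times \mathbf{x}'_2 \qquad (15)$$

From equations (12), (13) and (15), the projective line l′ is

$$\mathbf{l}' = (\mathbf{H}_m \mathbf{x}_1) \times (\mathbf{H}_m \mathbf{x}_2) \qquad (16)$$

The right-hand side of equation (16) is transferred to

$$(\mathbf{H}_m \mathbf{x}_1) \times (\mathbf{H}_m \mathbf{x}_2) = \mathbf{H}_m^{*} (\mathbf{x}_1 \times \mathbf{x}_2) \qquad (17)$$

where H*m is the adjoint (cofactor) matrix of Hm.

From equations (14), (16) and (17), we have

$$\mathbf{l}' = \mathbf{H}_m^{*} \mathbf{l} \qquad (18)$$

For a non-singular projective matrix Hm, it is well known that

$$\mathbf{H}_m^{*} = \det(\mathbf{H}_m)\, \mathbf{H}_m^{-T} \qquad (19)$$

Stacking equations (7), (18) and (19), we obtain

$$\mathbf{H}_a = s\, \mathbf{H}_m^{-T} \qquad (20)$$

where s = det(Hm) is a scale factor.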
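The relationship between the line-based and the point-based matrices can be checked numerically. The sketch below assumes the standard identity that a non-singular point homography Hm acts on lines through det(Hm)·Hm^(−T), so that Hm is recovered from Ha, up to scale, as the inverse transpose of Ha; both function names are hypothetical.

```python
import numpy as np

def line_from_point_homography(H_m):
    """Line homography induced by a point homography: det(H_m) * H_m^{-T}."""
    return np.linalg.det(H_m) * np.linalg.inv(H_m).T

def point_from_line_homography(H_a):
    """Point homography recovered from a line homography, up to scale."""
    return np.linalg.inv(H_a).T
```

Since homographies are only defined up to a nonzero scale, the factor det(Hm) does not affect the camera parameters extracted afterwards.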

A projective matrix Hm is decomposed to37

$$\mathbf{H}_m = \mathbf{A}\,[\mathbf{r}_1\ \ \mathbf{r}_2\ \ \mathbf{t}] \qquad (21)$$

where Hm = [h1 h2 h3], hi is the ith column of Hm, A is the intrinsic parameter matrix of the camera, and (r1 r2 t) are the extrinsic parameters that relate the position and posture of the camera in the world coordinate system.

The intrinsic parameters of the camera can be solved by37

$$\mathbf{Q}\mathbf{x} = \mathbf{0} \qquad (22)$$

where Q = [q12, q11 − q22]T, qij = [hi1hj1, hi1hj2 + hi2hj1, hi2hj2, hi3hj1 + hi1hj3, hi3hj2 + hi2hj3, hi3hj3]T, x = [x1, x2, x3, x4, x5, x6].

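The singular value decomposition of the matrix Q, which is derived from several homography matrices Hm, is expressed by44

$$\mathbf{Q} = \mathbf{B}_2 \boldsymbol{\Lambda}_2 \mathbf{C}_2^{T} \qquad (23)$$

where B2 and C2 are orthogonal matrices, Λ2 is a diagonal matrix with the descending singular values. From the orthogonal matrix C2, we have44

$$\mathbf{x} = \mathbf{c}_6 \qquad (24)$$

where c6 is the column vector of C2 related to the smallest singular value in Λ2.

According to equation (24), the intrinsic parameters can be determined by37

$$\begin{aligned}
v_0 &= \frac{x_2 x_4 - x_1 x_5}{x_1 x_3 - x_2^2}, &
\lambda &= x_6 - \frac{x_4^2 + v_0 (x_2 x_4 - x_1 x_5)}{x_1}, \\
\alpha &= \sqrt{\lambda / x_1}, &
\beta &= \sqrt{\frac{\lambda x_1}{x_1 x_3 - x_2^2}}, \\
\gamma &= -\frac{x_2 \alpha^2 \beta}{\lambda}, &
u_0 &= \frac{\gamma v_0}{\beta} - \frac{x_4 \alpha^2}{\lambda}
\end{aligned} \qquad (25)$$

where α, β are the scale factors of the image, γ is the skew parameter of the image axes, λ is a scalar, (u0, v0) is the principal point with pixel dimensions.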
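For illustration, the closed-form recovery of the intrinsic parameters from the vector x can be written as a short function. The sketch follows the well-known closed form of Zhang's plane-based method (ref. 37) and assumes that x is proportional to the entries (B11, B12, B22, B13, B23, B33) of the symmetric matrix B = A^(−T)A^(−1), which matches the ordering of qij given above; the function name `intrinsics_from_x` is hypothetical.

```python
import numpy as np

def intrinsics_from_x(x):
    """Recover the intrinsic matrix A from x ~ (B11, B12, B22, B13, B23, B33).

    Assumption: B = lambda * A^{-T} A^{-1} for an unknown nonzero scalar lambda.
    """
    x1, x2, x3, x4, x5, x6 = x
    d = x1 * x3 - x2 ** 2
    v0 = (x2 * x4 - x1 * x5) / d
    lam = x6 - (x4 ** 2 + v0 * (x2 * x4 - x1 * x5)) / x1
    alpha = np.sqrt(lam / x1)
    beta = np.sqrt(lam * x1 / d)
    gamma = -x2 * alpha ** 2 * beta / lam
    u0 = gamma * v0 / beta - x4 * alpha ** 2 / lam
    return np.array([[alpha, gamma, u0],
                     [0.0, beta, v0],
                     [0.0, 0.0, 1.0]])
```

The result does not depend on the scale or the sign of x, which is convenient because a singular vector is only determined up to sign.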

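The extrinsic parameters r1, r2 and t are obtained from equations (21) and (25)37: with A known, r1, r2 and t are the products of the inverse of A with the first, second and third columns of Hm, respectively, scaled so that r1 is a unit vector.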

The intrinsic parameters in the matrix A are taken as the initial solutions of the camera. The image information is affected by noise, illumination, capture distance and other factors. For this reason, an objective function is constructed to solve for the optimal camera parameters. The perpendicularity of lines is not preserved under perspective imaging, but it does hold for the lines reconstructed in the world coordinate system. The parameterized lines in the world coordinate system are reconstructed from the lines in the image coordinate system and the camera parameters. The objective function is then established as the sum of the dot products among the perpendicular reconstructed lines. Finally, the camera parameters are obtained by minimizing the objective function.

According to equations (7) and (21), the relationship between the coordinates of the lines in the world coordinate system and the coordinates of the lines in the image coordinate system can be represented as43

$$\mathbf{a}_i = \mathbf{H}_{mi}^{T}\, \mathbf{a}'_i \qquad (27)$$

$$\mathbf{b}_i = \mathbf{H}_{mi}^{T}\, \mathbf{b}'_i \qquad (28)$$

where a′i, b′i are the coordinates of the image lines in the ith image, HmiT is the transpose of the point-based homography matrix of the ith image, ai, bi are the reconstructed lines in the world coordinate system.

The sum of the dot products among the perpendicular reconstructed lines ai and bi should theoretically be zero, where ai and bi indicate a vertical line and a horizontal line in the world coordinate system, respectively. This zero-sum condition is equation (29).

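From equation (21), Hmi is written as the product of the intrinsic matrix and the extrinsic matrix of the ith image as

$$\mathbf{H}_{mi} = \mathbf{A}\,[\mathbf{r}_{1i}\ \ \mathbf{r}_{2i}\ \ \mathbf{t}_i] \qquad (30)$$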

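Stacking equations (27)-(30), the objective function f considering the perpendicularity of the reconstructed lines is given by the sum, over the images, of the dot products of the reconstructed perpendicular lines, in which each reconstructed line is expressed through the intrinsic matrix A, the extrinsic parameters and the corresponding image line.

The optimal elements of the intrinsic parameters of the camera are obtained by minimizing the objective function, that is, as the arguments at which the function f(u0, v0, α, β, γ,) attains its minimum.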
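A perpendicularity cost of this kind can be sketched as follows. The sketch makes several assumptions that the text does not fix: the dot product is taken between the unit normals of the reconstructed lines (their first two components), the dot products are squared before summation so that the cost is non-negative, and the extrinsic parameters are held fixed while the intrinsic matrix is varied. The function names `perpendicularity_cost` and `homography` are hypothetical.

```python
import numpy as np

def perpendicularity_cost(H_list, lines_a_img, lines_b_img):
    """Sum of squared dot products between reconstructed line normals.

    H_list      : point-based homographies, one 3x3 array per image
    lines_a_img : per image, an (n, 3) array of image lines a'
    lines_b_img : per image, an (m, 3) array of image lines b'
    """
    cost = 0.0
    for H, La, Lb in zip(H_list, lines_a_img, lines_b_img):
        # Reconstruct world lines a = H^T a' (written row-wise as a'^T H).
        a_w = np.asarray(La, float) @ H
        b_w = np.asarray(Lb, float) @ H
        # Unit normals of the reconstructed lines (first two components).
        na = a_w[:, :2] / np.linalg.norm(a_w[:, :2], axis=1, keepdims=True)
        nb = b_w[:, :2] / np.linalg.norm(b_w[:, :2], axis=1, keepdims=True)
        # Perpendicular lines have orthogonal normals, so each dot product should vanish.
        cost += float(np.sum((na @ nb.T) ** 2))
    return cost

def homography(A, r1, r2, t):
    """Point-based homography A [r1 r2 t] of a planar target."""
    return A @ np.column_stack([r1, r2, t])
```

In an optimization loop, the intrinsic parameters would be unpacked into A, the homographies rebuilt with `homography`, and the cost passed to a general-purpose minimizer; with the correct parameters the cost is zero for noise-free lines.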

Additional Information

How to cite this article: Xu, G. et al. A method to calibrate a camera using perpendicularity of 2D lines in the target observations. Sci. Rep. 6, 34951; doi: 10.1038/srep34951 (2016).

References

Poulin-Girard, A. S., Thibault, S. & Laurendeau, D. Influence of camera calibration conditions on the accuracy of 3D reconstruction. Opt. Express 24, 2678–2686 (2016).

Velten, A. et al. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun. 3, 745 (2012).

Brida, G., Degiovanni, I. P., Genovese, M., Rastello, M. L. & Ruo-Berchera, I. Detection of multimode spatial correlation in PDC and application to the absolute calibration of a CCD camera. Opt. Express 18, 20572–20584 (2010).

Juarezsalazar, R., Guerrerosanchez, F., Robledosanchez, C. & Gonzálezgarcía, J. Camera calibration by multiplexed phase encoding of coordinate information. Appl. Optics 54, 4895–4906 (2015).

Rodríguez, J. A. M. & Alanís, F. C. M. Binocular self-calibration performed via adaptive genetic algorithm based on laser line imaging. J. Mod. Optic. 26, 1–14 (2016).

Feld, M. S., Yaqoob, Z., Psaltis, D. & Yang, C. Optical phase conjugation for turbidity suppression in biological samples. Nat. Photon. 2, 110–115 (2008).

Popoff, S., Lerosey, G., Fink, M., Boccara, A. C. & Gigan, S. Image transmission through an opaque material. Nat. Commun. 1, 1–5 (2010).

Charbal, A. et al. Integrated digital image correlation considering gray level and blur variations: application to distortion measurements of IR camera. Opt. Laser. Eng. 78, 75–85 (2016).

Vellekoop, I. M., Lagendijk, A. & Mosk, A. P. Exploiting disorder for perfect focusing. Nat. Photon. 4, 320–322 (2010).

Wang, L., Ho, P. P., Liu, C., Zhang, G. & Alfano, R. R. Ballistic 2-D imaging through scattering walls using an ultrafast optical Kerr gate. Science 253, 769–771 (1991).

Katz, O., Small, E., Bromberg, Y. & Silberberg, Y. Focusing and compression of ultrashort pulses through scattering media. Nat. Photon. 5, 372–377 (2011).

Avella, A., Ruo-Berchera, I., Degiovanni, I. P., Brida, G. & Genovese, M. Absolute calibration of an EMCCD camera by quantum correlation, linking photon counting to the analog regime. Opt. Lett. 41, 1841–1844 (2016).

Morris, P. A., Aspden, R. S., Bell, J. E., Boyd, R. W. & Padgett, M. J. Imaging with a small number of photons. Nat. Commun. 6, 5913–5913 (2015).

Schwarz, B. Mapping the world in 3D. Nat. Photon. 4, 429–430 (2010).

Katz, O., Small, E. & Silberberg, Y. Looking around corners and through thin turbid layers in real time with scattered incoherent light. Nat. Photon. 6, 549–553 (2012).

Gariepy, G. et al. Single-photon sensitive light-in-fight imaging. Nat. Commun. 6, 6021–6021 (2015).

Lee, J., Kim, Y. J., Lee, K., Lee, S. & Kim, S. W. Time-of-flight measurement with femtosecond light pulses. Nat. Photon. 4, 716–720 (2010).

Bizjan, B., Širok, B., Drnovšek, J. & Pušnik, I. Temperature measurement of mineral melt by means of a high-speed camera. Appl. Optics 54, 7978–7984 (2015).

Houssineau, J., Clark, D. E., Ivekovic, S. & Lee, C. S. A unified approach for multi-object triangulation, tracking and camera calibration. IEEE Trans. Signal Proces. 64, 2934–2948 (2014).

Marques, M. J., Rivet, S., Bradu, A. & Podoleanu, A. Polarization-sensitive optical coherence tomography system tolerant to fiber disturbances using a line camera. Opt. Lett. 40, 3858–3861 (2015).

Lind, S., Aßmann, S., Zigan, L. & Will, S. Fluorescence characteristics of the fuel tracers triethylamine and trimethylamine for the investigation of fuel distribution in internal combustion engines. Appl. Optics 55, 710–715 (2016).

McCarthy, A. et al. Long-range time-of-flight scanning sensor based on high-speed time-correlated single-photon counting. Appl. Optics 48, 6241–6251 (2009).

Vargas, J., Gonzálezfernandez, L., Quiroga, J. A. & Belenguer, T. Calibration of a Shack–Hartmann wavefront sensor as an orthographic camera. Opt. Lett. 35, 1762–1764 (2010).

Abdel-Aziz, Y. I., Karara, H. M. & Hauck, M. Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Photogramm. Eng. Rem. S. 81, 103–107 (2015).

Xu, G. et al. Three degrees of freedom global calibration method for measurement systems with binocular vision. J. Opt. Soc. Korea 20, 107–117 (2016).

Huang, L., Chua, P. S. K. & Asundi, A. Least-squares calibration method for fringe projection profilometry considering camera lens distortion. Appl. Optics 49, 1539–1548 (2010).

Liu, M. et al. Generic precise augmented reality guiding system and its calibration method based on 3d virtual model. Opt. Express 24, 12026–12042 (2016).

Xu, G. et al. An optimization solution of a laser plane in vision measurement with the distance object between global origin and calibration points. Sci. Rep-UK 5, 11928–11944 (2015).

Xu, G., Zhang, X., Su, J., Li, X. & Zheng, A. Solution approach of a laser plane based on Plücker matrices of the projective lines on a flexible 2D target. Appl. Optics 55, 2653–2656 (2016).

Lv, F., Zhao, T. & Nevatia, R. Camera calibration from video of a walking human. IEEE Trans. Pattern Anal. 28, 1513–1518 (2006).

Ying, X. & Hu, Z. Catadioptric camera calibration using geometric invariants. IEEE Trans. Pattern Anal. 26, 1260–1271 (2004).

Shah, S. & Aggarwal, J. K. Intrinsic parameter calibration procedure for a (high-distortion) fish-eye lens camera with distortion model and accuracy estimation. Pattern Recogn. 29, 1775–1788 (1996).

Bell, T., Xu, J. & Zhang, S. Method for out-of-focus camera calibration. Appl. Optics 55, 2346–2352 (2016).

Zhang, Z. Camera calibration with one-dimensional objects. IEEE Trans. Pattern Anal. 26, 892–899 (2004).

Miyagawa, I., Arai, H. & Koike, H. Simple camera calibration from a single image using five points on two orthogonal 1-d objects. IEEE Trans. Image Process. 19, 1528–1538 (2010).

Chen, R. et al. Accurate calibration method for camera and projector in fringe patterns measurement system. Appl. Optics 55, 4293–4300 (2016).

Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. 22, 1330–1334 (2000).

Harris, C. & Stephens, M. A combined corner and edge detector. Alvey Vision Conference 147–151 (1988).

Tsai, R. A versatile camera calibration technique for high-accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE J. Robot. Autom. 3, 323–344 (1987).

O’Gorman, F. & Clowes, M. B. Finding picture edges through collinearity of feature points. IEEE Trans. Comput. 25, 449–456 (1976).

Hart, P. E. How the Hough transform was invented. IEEE Signal Proc. Mag. 26, 18–22 (2009).

Fernandes, L. A. F. & Oliveira, M. M. Real-time line detection through an improved Hough transform voting scheme. Pattern Recogn. 41, 299–314 (2008).

Hartley, R. & Zisserman, A. Multiple View Geometry in Computer Vision (Cambridge University Press, Cambridge, 2003).

Golub, G. H. & Van Loan, C. F. Matrix Computations (Johns Hopkins University Press, Baltimore, 2012).

Acknowledgements

This work was funded by the National Natural Science Foundation of China under Grant Nos. 51478204 and 51205164, the Natural Science Foundation of Jilin Province under Grant No. 20150101027JC, and the China Postdoctoral Science Special Foundation under Grant No. 2014T70284.

Author information

Contributions

G.X., A.Z. and X.L. wrote the main manuscript text. G.X. and A.Z. carried out the experiments, the data analysis and the calibration algorithm. X.L. and J.S. prepared all the figures. All authors contributed to the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Xu, G., Zheng, A., Li, X. et al. A method to calibrate a camera using perpendicularity of 2D lines in the target observations. Sci Rep 6, 34951 (2016). https://doi.org/10.1038/srep34951
