Abstract

Gene regulatory networks can be successfully modeled as Boolean networks. A much discussed hypothesis says that such model networks reproduce empirical findings the best if they are tuned to operate at criticality, i.e. at the borderline between their ordered and disordered phases. Critical networks have been argued to lead to a number of functional advantages such as maximal dynamical range, maximal sensitivity to environmental changes, as well as to an excellent tradeoff between stability and flexibility. Here, we study the effect of noise within the context of Boolean networks trained to learn complex tasks under supervision. We verify that quasi-critical networks are the ones learning in the fastest possible way –even for asynchronous updating rules– and that the larger the task complexity the smaller the distance to criticality. On the other hand, when additional sources of intrinsic noise in the network states and/or in its wiring pattern are introduced, the optimally performing networks become clearly subcritical. These results suggest that in order to compensate for inherent stochasticity, regulatory and other type of biological networks might become subcritical rather than being critical, all the most if the task to be performed has limited complexity.

Similar content being viewed by others

Introduction

The central dogma of molecular biology is that each single gene is transcribed into RNA, which in turn is translated into a protein, which –usually in cooperation with different proteins– can regulate the expression of other genes, giving rise to a complex network of regulatory interactions and different possible patterns of gene expression1. Genetic regulation, protein-protein interactions, as well as cell metabolic and signaling pathways are essential biological processes that can all be represented as networks2. The network picture encapsulates the complexity of cellular processes and provides us a natural framework for a systems-perspective approach to extremely complicated biological problems. As a matter of fact, the study of information processing in living systems has shifted from the analysis of single pathways to increasingly complex regulatory networks, allowing for a visualization of the collective effects of a host of units acting at unison. Since the pioneering work of Kauffman3,4,5,6,7, genetic regulatory systems have been modeled as Boolean networks, in which the expression level of each gene is represented by a binary (on/off) variable and where mutual regulatory interactions are described as arbitrary random Boolean functions operating synchronously at discrete time steps. Even if admittedly simplistic and limited in a number of ways (e.g. continuous levels of gene expression might be essential to understand some cellular processes), such a binary description is particularly useful when dealing with large networks because it simplifies the overwhelming complexity of the real problem reducing it to a logical one. In particular, the Boolean approach has shed light on important conceptual problems such as the possibility of diverse (phenotypic) states emerging from a unique given genetic network, as well as the possibility of transitions among them (as happens in cell differentiation and reprogramming), and the emergence of cycles in cell states. The trajectory of the segment polarity network in the fly Drosophila melanogaster8 and the yeast cell cycle9 are two specific examples in which the most relevant features of gene expression have been fully elucidated on the basis of Boolean models10 (for more details we refer to the literature4,5,6,11,12).

Random Boolean networks (RBNs) can operate in different regimes including ordered and chaotic phases as well as a critical point (or line or surface) separating them in parameter space. Ordered or frozen phases (typically obtained for small network connectivities) are characterized by a small set of stable attractors which are largely robust to perturbations, while in the disordered or chaotic phase (typically obtained for densely connected networks) perturbations rapidly propagate all through the network hindering the existence of truly stable states. As formalized mathematically by Derrida and Pomeau, separating these two phases there is a critical line (that used to be called the “edge of chaos”) at which perturbations propagate marginally13. It was conjectured some time ago that critical RBNs might be optimal to represent actual biological networks; the underlying idea is that operating at criticality might provide such systems with an optimal tradeoff between being exceedingly ordered/stable (thus, barely responsive to environmental changes, signals, and clues) and being too disordered/noisy (thus enormously sensitive to the effects of noise, lacking the required robustness and accuracy that biological machinery demands14). The criticality hypothesis states that the marginal situation between these two impractical tendencies constitutes an excellent compromise. This conjecture (which was developed in the machine-learning and neural-network community15,16,17), proposes that –by operating nearby criticality– networks exhibit an optimal tradeoff between stability to perturbations and sensitivity/responsiveness to signals. Similarly, at larger timescales, it also provides an excellent compromise between robustness and evolvability18,19. Moreover, it entails optimization of information storage and transmission20,21, response and sensitivity, computational capabilities, and a number of other functional advantages14,18,19,21,22,23,24,25,26.

In parallel, the development of powerful experimental high-throughput technologies in molecular biology has paved the way to experimental analyses of gene-expression patterns in large regulatory networks. Recent empirical results, analyzing hundreds of microarray experiments to infer regulatory interactions among genes and implementing these data into Boolean models, seem to support the hypothesis that regulatory networks of Saccharomyces cerevisiae, Escherichia coli, Bacillus subtilis, the murine macrophage, as well as some subnetworks of Drosophila melanogaster and Arabidopsis thaliana are indeed very close to criticality (in the sense of marginal propagation of perturbations)27,28, while some other empirical analyses leave the door open to regulatory networks being ordered/subcritical14,29.

Recent work, aimed at rationalizing why and how criticality might come about in living systems, relies on adaptive/evolutionary models, in which communities of agents –each of them modeled as a Boolean network– are selected for if they succeed at performing some complex tasks which may change in time. For instance, Hidalgo et al.30 showed –by employing an information-theoretic approach– that critical networks may emerge as optimal solutions in such a setting (however, the networks employed as a specific example in ref. 30 are fully connected and thus lack the structural richness of usual RBNs). Similarly, Goudarzi et al.31 considered an ensemble of RBN’s able to experience “mutations” in their topological structure and employed a genetic algorithm to select for those able to perform a given computational task (see Fig. 1); i.e. networks which have learnt have a larger fitness than those that have not. Under these conditions the ensemble converges to a state in which all networks operate close to criticality. In other words, critical networks emerge as the optimal solution out of the combined selective pressures of having to learn different tasks (i.e. having to produce different outcomes/attractors) and being able to readily shift among them following changes in the inputs in real time.

Sketch of the network architecture.

(A) Dynamical phases in general random Boolean networks (RBNs) in the case for which KC ≈ 2 (p = 1/2). (B) Constrained network architecture: we impose some (3) input nodes (colored in green, blue and red) to receive information from the environment and some output/readout ones (1; violet color) to produce a response. The overall computational task to be learned can be summarized in a predefined truth table  = F(i1, i2, i3) where

= F(i1, i2, i3) where  is the output state and i1,2,3 the input ones. (C) During the network dynamics and adaptive evolution, there can be noise sources (internal or external) disturbing the network states as well as its topological structure. (D) The aim is to find the optimal connectivity to learn and perform successfully the computational tasks either in the absence of additional stochasticity as well as in the presence of noise.

is the output state and i1,2,3 the input ones. (C) During the network dynamics and adaptive evolution, there can be noise sources (internal or external) disturbing the network states as well as its topological structure. (D) The aim is to find the optimal connectivity to learn and perform successfully the computational tasks either in the absence of additional stochasticity as well as in the presence of noise.

Given that living cells typically possess very low copy numbers of important regulatory molecules (e.g. for the 80% of genes in Escherichia coli genome the copy number of their associated proteins is less than 100)32 stochastic effects are unavoidable and ubiquitous in gene regulatory networks33. Even if noise is usually assumed to be detrimental to reliable information transfer and, more in general, to cell functioning, stochastic effects can lead to beneficial outcomes; for instance, noise accounts for the observed (phenotypic) variability in identical (isogenic) populations34 and can help cells to adapt to fluctuating environments35,36,37,38. Within the framework of RBN the role of stochasticity and noise has been addressed in a number of works39,40,41.

In this paper, we further delve in the problem of investigating the mechanisms and the conditions under which networks may become critical (or not), focusing on the role played by noise, and ask the question whether–in the presence of strongly noisy conditions– regulatory networks, modeled as RBNs having to perform some complex computational task, operate in ordered, critical or supercritical regimes (see Fig. 1). In other words: what is the role of noise in the emergence of criticality? Does it foster or hinder critical behavior? In order to gauge the effect of noise on the dynamics of RBNs having to perform a complex task we consider a setting very similar to that of Goudarzi et al.31, but including different additional sources of stochasticity. In particular, our approach differs from the previous one in three main aspects: (i) we consider asynchronous updating42,43,44 rather than the usual deterministic one, thus introducing the effect of stochasticity in the updating timings, (ii) both the structure and the dynamics of the networks are subjected to noise (be it intrinsic or external), and (iii) we do not consider an evolutionary algorithm to search for the best possible network connectivity, but rather we work in a constant-connectivity ensemble and explore how the network performance depends on the network connectivity, i.e. on the network dynamical state.

As we shall illustrate, criticality emerges as the solution providing the fastest route to learning complex tasks but, on the other hand, once additional sources of stochasticity are explicitly taken into account, ordered dynamical states perform better than critical ones. That is, networks need to compensate the excess of noise by becoming progressively more subcritical.

Model and Training Protocol

As said above, we consider a setting (similar to that of previous approaches31) to train Random Boolean networks to perform a computational task, but we introduce a number of changes –to be detailed in this section– devoted mostly to implement stochasticity in various ways.

Network architecture and dynamics

Random Boolean networks (RBNs) consist of random Erdős-Rényi (ER) structures with N nodes, L directed links, and averaged connectivity or degree K = L/N. Self-loops and multiple edges with the same directionality linking two nodes are explicitly excluded, and otherwise the wiring pattern is randomly set. Each node i represents a gene, whose dynamical state is characterized by a Boolean variable σi = {0, 1}; 1 for the “on” state and 0 for the “off” one. The node is updated according to a random Boolean function, fi, which depends on the state of the Kin(i) neighbor nodes regulating it (restricted to a maximum value of 8 for computational convenience), and it contributes to regulating the state of Kout(i) out-neighbors (seeTable I in Methods for an example of random Boolean functions). The averaged fraction of 1’s in the outputs of the random Boolean function, p, can be fixed a priori and taken as a control parameter, determining the bias toward “on” or “off” states (here, we consider the unbiased case p = 1/2 in all analyses). In contrast with most studies of RBNs and in order to implement a first source of stochasticity, nodes are updated in an asynchronous way42,43,44, i.e. a given node is randomly selected with homogeneous probability, its state is updated according to:

where  identifies the j − th neighbor of node i, time is incremented in Δt = 1/N units, and the process is iterated. A time step of the dynamics corresponds to one update per node on average. In order to implement computational tasks or learning rules in RBNs we consider a slight variation of the just-described general architecture, in which some pre-defined input and output nodes are included (see Fig. 1B). By construction, input nodes are imposed to have Kin = 0, so that they are not influenced by others and Kout > 0, so that they are not isolated, while–on the contrary– output nodes have Kout = 0 and Kin ≥ 1 (in particular, we take ninput = 3 input nodes and one single output or readout node (noutput = 1 as in Fig. 1B). The set of N − ninput non-input nodes is called the network core.

identifies the j − th neighbor of node i, time is incremented in Δt = 1/N units, and the process is iterated. A time step of the dynamics corresponds to one update per node on average. In order to implement computational tasks or learning rules in RBNs we consider a slight variation of the just-described general architecture, in which some pre-defined input and output nodes are included (see Fig. 1B). By construction, input nodes are imposed to have Kin = 0, so that they are not influenced by others and Kout > 0, so that they are not isolated, while–on the contrary– output nodes have Kout = 0 and Kin ≥ 1 (in particular, we take ninput = 3 input nodes and one single output or readout node (noutput = 1 as in Fig. 1B). The set of N − ninput non-input nodes is called the network core.

Assessing the network dynamical state

In the infinite size limit, synchronous RBNs are known to exhibit a critical point –in the sense of marginal propagation of perturbations5,13– at a value of the connectivity  , being ordered/subcritical for K < KC(p) and disordered/supercritical otherwise. In particular, in the unbiased case, p = 1/2, KC = 2 (see Fig. 1A) which is often quoted as “the” critical connectivity for RBNs. However, these results hold only for infinite networks; for finite ones, critical values are shifted toward slightly larger connectivity values by corrections of order

, being ordered/subcritical for K < KC(p) and disordered/supercritical otherwise. In particular, in the unbiased case, p = 1/2, KC = 2 (see Fig. 1A) which is often quoted as “the” critical connectivity for RBNs. However, these results hold only for infinite networks; for finite ones, critical values are shifted toward slightly larger connectivity values by corrections of order  . Here, instead of calculating such critical values analytically, and thus to quantify possible deviations from criticality, we explicitly compute in numerical simulations the dynamical state of any given finite-size network. For this, we determine whether individual site perturbations do grow or shrink on average; i.e. we measure the branching parameter, B, defined as the averaged Hamming distance –after one timestep– between the original and all possible network-states differing from the original one at just a single (flipped) site (see Methods). Branching parameters B > 1 (resp. B < 1) reflect supercritical (resp. subcritical) networks while the marginal case B = 1 is the trademark of criticality5,13.

. Here, instead of calculating such critical values analytically, and thus to quantify possible deviations from criticality, we explicitly compute in numerical simulations the dynamical state of any given finite-size network. For this, we determine whether individual site perturbations do grow or shrink on average; i.e. we measure the branching parameter, B, defined as the averaged Hamming distance –after one timestep– between the original and all possible network-states differing from the original one at just a single (flipped) site (see Methods). Branching parameters B > 1 (resp. B < 1) reflect supercritical (resp. subcritical) networks while the marginal case B = 1 is the trademark of criticality5,13.

Computational tasks

The task to be learned can be codified in a “truth table”, i.e. for each specific input configuration (out of a total of  ) there is an output value to be reproduced. A given truth table defines a specific computational task. An example is the odd-even classifier (rule R150 in the Wolfram’s classification of cellular automata45), which assigns a Boolean variable to each input accounting for its parity. Other examples that we consider are rules number R51 and R60 in Wolfram’s classification. These rules can be categorized accordingly to their “complexity”, understanding as such, the number of nodes in the input that do change the output state when altered (and how often they do so for different values of the remaining nodes). In particular, out of the three rules that we study here, the most complex one is the odd-even classifier (R150) whose output obviously depends on all input nodes, R60 is an intermediate case, while the less complex one is R51 whose output is the opposite of one particular input unit, being insensitive to the other two. A more precise definition on how to quantify task complexity –unnecessary for our purposes here– has been discussed by Goudarzi et al.31.

) there is an output value to be reproduced. A given truth table defines a specific computational task. An example is the odd-even classifier (rule R150 in the Wolfram’s classification of cellular automata45), which assigns a Boolean variable to each input accounting for its parity. Other examples that we consider are rules number R51 and R60 in Wolfram’s classification. These rules can be categorized accordingly to their “complexity”, understanding as such, the number of nodes in the input that do change the output state when altered (and how often they do so for different values of the remaining nodes). In particular, out of the three rules that we study here, the most complex one is the odd-even classifier (R150) whose output obviously depends on all input nodes, R60 is an intermediate case, while the less complex one is R51 whose output is the opposite of one particular input unit, being insensitive to the other two. A more precise definition on how to quantify task complexity –unnecessary for our purposes here– has been discussed by Goudarzi et al.31.

Network fitness

The goal of the trained networks is to produce –for each specific input configuration i– a time-averaged value of the output state, 〈σoutput(i)〉, which is as close as possible to the desired output in the task truth table,  ; the difference between these two values,

; the difference between these two values,  , –which is a real number–is a measure of the network performance for a fixed input configuration. The overall network fitness is defined as one minus the average of such difference for

, –which is a real number–is a measure of the network performance for a fixed input configuration. The overall network fitness is defined as one minus the average of such difference for  randomly chosen input configurations:

randomly chosen input configurations:

The network is trained to “learn” to produce –as fast as possible– the correct output when exposed to each of the  specific input states; i.e. the network learns the computational task as defined by a given truth table. To implement this, we sequentially expose the network to I randomly chosen inputs. The resulting random order of inputs can be viewed as a form of stochasticity, mimicking environmental variability. Moreover, the environment is assumed to change rapidly so that, in order to cope with that, networks are trained to reach the correct output within just tmax (usually fixed to 10) timesteps, after which the input is changed (while the network state is left unaltered). The first half of this time interval allows for the network to adapt to the new input configuration, while in the second half we measure the average state of the output node 〈σoutput〉 and compute the value of the network fitness, F.

specific input states; i.e. the network learns the computational task as defined by a given truth table. To implement this, we sequentially expose the network to I randomly chosen inputs. The resulting random order of inputs can be viewed as a form of stochasticity, mimicking environmental variability. Moreover, the environment is assumed to change rapidly so that, in order to cope with that, networks are trained to reach the correct output within just tmax (usually fixed to 10) timesteps, after which the input is changed (while the network state is left unaltered). The first half of this time interval allows for the network to adapt to the new input configuration, while in the second half we measure the average state of the output node 〈σoutput〉 and compute the value of the network fitness, F.

Network mutations

Having established the fitness of a given network, M, we now allow it to “mutate” by rewiring some existing links –thus preserving its overall connectivity K– and generate a slightly modified network M′. The technicalities of how the mutation process is implemented are deferred to the Methods section.

Network evolution and convergence

The network with the largest fitness value, between M and its mutated counterpart M′, is selected (while the original one is kept if the two fitnesses coincide). This mutation and selection process defines an evolutionary time step (to be distinguished from a time step of the dynamics; there is a factor tmaxI between both). The evolutionary process is iterated until F reaches its maximal possible value F = 1. Observe, however, that as the I inputs are randomly chosen at each evolutionary step, observation of F = 1 at a given step does not necessarily imply F = 1 at successive time. Therefore, in order to impose that the network robustly “learns” the computational task, we continue to measure its fitness, when exposing it to a much large number of randomly chosen inputs (100I, instead of just I as in the fitness-computation Eq. (2)); if F = 1 all accros this long checking time window, the network is classified as having learned. Otherwise, the mutation/selection process is restarted until an optimally performing network is found. The final number of evolutionary steps required to reach an optimal network is called convergence time, T.

Ensemble averages

Keeping fixed specific values of the network size N and connectivity K, the previous evolutionary process is iterated a large number of times (typically from 103 to 5 ⋅ 105) giving rise to an ensemble of trained networks. The ensemble averaged convergence time,  , is a proxy for the network performance: the best network ensemble is the one with the smallest

, is a proxy for the network performance: the best network ensemble is the one with the smallest  . In this set of networks –once they have been trained– we also measured the ensemble-average of the branching parameter,

. In this set of networks –once they have been trained– we also measured the ensemble-average of the branching parameter,  . In the approach of Goudarzi et al.31, K is allowed to change during the evolutionary process; thus the fastest learning networks are selected for; instead, we explore different fixed-K ensembles and determine a posteriori which is the optimal one. Both approaches are obviously equivalent to determine the optimal connectivity K.

. In the approach of Goudarzi et al.31, K is allowed to change during the evolutionary process; thus the fastest learning networks are selected for; instead, we explore different fixed-K ensembles and determine a posteriori which is the optimal one. Both approaches are obviously equivalent to determine the optimal connectivity K.

Dynamics under noisy conditions

To investigate the effect of fluctuations in the system dynamics, we allow the dynamics to be exposed to noise. In particular, we consider that either (i) with a small probability, η, nodes can invert their state every time they are updated (accounting for errors/fluctuations in gene expression levels) or (ii) with some small probability, ξ, (which is proportional to the network connectivity) the network topology experiences a mutation process at each evolutionary step, and the mutated network is kept/selected regardless of its fitness value (this describes physical damage in the network produced, for example, by the lack or excess of some regulatory factors). For the sake of simplicity, we refer to the first possibility as “dynamical” noise and to the second one as “structural” noise.

Results

Convergence times and dynamical phases of learning networks

Even in the absence of explicit noise sources, the dynamics based on asynchronous updating –which is the one we adopt here– has a stochastic component (i.e. nodes are updated in a random order), which could be more adequate to represent real genetic networks than synchronously updated RBNs as it avoids spurious effects associated with perfectly synchronous updating43.

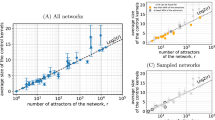

We consider a complex computational task –the odd-even classifier– and analyze networks of variable N and K. We let them evolve to learn this task and measure the average convergence time,  , to do so. Results are shown in Fig. 2 for sizes from N = 6 to N = 64 as a function of the network connectivity K (from K = 0.5 to K = 3.5). First of all (upper Fig. 2B), observe that for all values of N,

, to do so. Results are shown in Fig. 2 for sizes from N = 6 to N = 64 as a function of the network connectivity K (from K = 0.5 to K = 3.5). First of all (upper Fig. 2B), observe that for all values of N,  exhibits a characteristic (pseudo)parabolic shape with a minimum at some optimal connectivity value, KT, at which networks learn the computational task in the fastest possible way. It is important to stress that networks with connectivities other than KT also learn, even if after longer evolutionary times. In Fig. 2A the same data are represented, but rescaling

exhibits a characteristic (pseudo)parabolic shape with a minimum at some optimal connectivity value, KT, at which networks learn the computational task in the fastest possible way. It is important to stress that networks with connectivities other than KT also learn, even if after longer evolutionary times. In Fig. 2A the same data are represented, but rescaling  for each N with its minimum,

for each N with its minimum,  (this is done to help the eye to compare the location of the different minima). In Fig. 2C we plot |KT − 2| as a function of N (blue squares); the value K = 2 corresponds to the usually accepted critical connectivity for RBNs in the infinite size limit. Observe that the optimal connectivities seem to converge to this value, K = 2, as a power-law function of N. The precision of our numerics does not allow us to discriminate if the convergence is exactly to K = 2 or to a nearby value (within 2.00 ± 0.05) in the large-size limit. In Fig. 2A, we also present results for the branching parameter,

(this is done to help the eye to compare the location of the different minima). In Fig. 2C we plot |KT − 2| as a function of N (blue squares); the value K = 2 corresponds to the usually accepted critical connectivity for RBNs in the infinite size limit. Observe that the optimal connectivities seem to converge to this value, K = 2, as a power-law function of N. The precision of our numerics does not allow us to discriminate if the convergence is exactly to K = 2 or to a nearby value (within 2.00 ± 0.05) in the large-size limit. In Fig. 2A, we also present results for the branching parameter,  (see Methods), for the same network ensembles, which allows us to explicitly determine the average dynamical regime as a function of K. Importantly,

(see Methods), for the same network ensembles, which allows us to explicitly determine the average dynamical regime as a function of K. Importantly,  is computed in the ensemble of networks that have learned –and not in the Erdős-Rényi ensemble– and Hamming distance measurements are restricted to the network core (excluding input nodes, which do not change in the course of the dynamics). In particular, dotted lines in Fig. 2A stand for measurements of

is computed in the ensemble of networks that have learned –and not in the Erdős-Rényi ensemble– and Hamming distance measurements are restricted to the network core (excluding input nodes, which do not change in the course of the dynamics). In particular, dotted lines in Fig. 2A stand for measurements of  , after perturbing nodes in the core, while dashed-dotted lines correspond to perturbations at input nodes. Observe that these two sets of curves exhibit qualitatively different behaviors. We have chosen to present results in this way to stress the fact that –after learning– networks are not homogeneous, and not all nodes respond in the same way; in particular, the network is more responsive (larger

, after perturbing nodes in the core, while dashed-dotted lines correspond to perturbations at input nodes. Observe that these two sets of curves exhibit qualitatively different behaviors. We have chosen to present results in this way to stress the fact that –after learning– networks are not homogeneous, and not all nodes respond in the same way; in particular, the network is more responsive (larger  ) to input perturbations than to changes in the core. For example, networks with connectivity K = 2 are supercritical to input perturbations (fostering network sensitivity to external changes) and subcritical for core perturbations (as required for a robust convergence to the attractor/output).

) to input perturbations than to changes in the core. For example, networks with connectivity K = 2 are supercritical to input perturbations (fostering network sensitivity to external changes) and subcritical for core perturbations (as required for a robust convergence to the attractor/output).

(A) Averaged convergence time,  , divided by its minimum value

, divided by its minimum value  plotted as a function of the network connectivity K for different network sizes N (solid lines); ensemble averages performed over up to 5 ⋅ 105 network realizations. Similarly, the non-normalized convergence times are plotted in (B). Discontinuous lines in (A) represent the value of the branching parameter,

plotted as a function of the network connectivity K for different network sizes N (solid lines); ensemble averages performed over up to 5 ⋅ 105 network realizations. Similarly, the non-normalized convergence times are plotted in (B). Discontinuous lines in (A) represent the value of the branching parameter,  as measured in the network after the learning process is completed; dashed-dotted lines stand for

as measured in the network after the learning process is completed; dashed-dotted lines stand for  averaged after perturbing only input nodes, while dotted lines have been obtained after perturbing nodes in the network core. Note that as

averaged after perturbing only input nodes, while dotted lines have been obtained after perturbing nodes in the network core. Note that as  and

and  are both dimensionless quantities, they have been plotted in the same scale; the same color code has been used for all curves. (C) Scaling of the connectivity at which the minimum

are both dimensionless quantities, they have been plotted in the same scale; the same color code has been used for all curves. (C) Scaling of the connectivity at which the minimum  is obtained, KT, as a function of N (blue squares), plotted together with the position of the critical point KC as estimated from the condition

is obtained, KT, as a function of N (blue squares), plotted together with the position of the critical point KC as estimated from the condition  (orange diamonds). In both cases, there is a convergence toward a value close to 2 in the large N limit (blue squares) the red line is a guide to the eye and corresponds to a decay

(orange diamonds). In both cases, there is a convergence toward a value close to 2 in the large N limit (blue squares) the red line is a guide to the eye and corresponds to a decay  toward 2. (D) Δ = KC − KT plotted as a function of N showing explicitly that the distance to criticality diminishes with network size; i.e. the larger the network the closer to criticality the fastest learning networks.

toward 2. (D) Δ = KC − KT plotted as a function of N showing explicitly that the distance to criticality diminishes with network size; i.e. the larger the network the closer to criticality the fastest learning networks.

To obtain the overall branching parameter  (given N and K) –for all nodes in the network– we need to average these two contributions (weighted with ninput = 3 and N − 3 nodes, respectively). For these averaged curves (which, for the sake of clarity, are not explicitly shown in Fig. 2A) the crossing

(given N and K) –for all nodes in the network– we need to average these two contributions (weighted with ninput = 3 and N − 3 nodes, respectively). For these averaged curves (which, for the sake of clarity, are not explicitly shown in Fig. 2A) the crossing  indicates overall critical dynamics, and corresponds to a critical connectivity KC. KC turns out to be larger than K = 2 and shifts toward lower connectivity values as N grows; indeed, its distance to K = 2 decreases with N (see Fig. 2C; orange diamonds), suggesting that learning networks have critical connectivity K ≈ 2 (within our resolution) in the infinite size limit, as happens with random networks.

indicates overall critical dynamics, and corresponds to a critical connectivity KC. KC turns out to be larger than K = 2 and shifts toward lower connectivity values as N grows; indeed, its distance to K = 2 decreases with N (see Fig. 2C; orange diamonds), suggesting that learning networks have critical connectivity K ≈ 2 (within our resolution) in the infinite size limit, as happens with random networks.

Moreover, we have measured the difference Δ = KC − KT to gauge how far optimal connectivities (in the sense of achieving the fastest possible learning) are from critical dynamics (in the sense of the branching parameter as close as possible to 1). As shown in Fig. 2D (magenta circles), Δ decreases monotonically upon increasing N, indicating that –for sufficiently large networks– the optimal connectivity is as close to criticality as desired, but for any finite size they are slightly subcritical (Δ > 0). Thus optimal learning occurs for slightly subcritical networks, arbitrarily close to criticality for sufficiently large system sizes.

Figure 3A illustrates results for other, less complex (see above) computational tasks. As before, there is a well-defined minimum for  in all cases, but these times are significantly shorter for lesser complex tasks (about two orders of magnitude less for a fixed size). Observe also that for the simplest, R51 rule,

in all cases, but these times are significantly shorter for lesser complex tasks (about two orders of magnitude less for a fixed size). Observe also that for the simplest, R51 rule,  hardly depends on K (Fig. 3B), indicating that, as the task complexity decreases K progressively becomes a lesser relevant parameter. Observe also (Fig. 3D) that the distance of optimal networks to criticality, Δ, decreases with increasing network complexity. Therefore, it is reasonable to conjecture that for more complex tasks than the ones we considered (e.g. involving larger values of ninput), the benefits derived from operating at optimality/criticality are progressively more crucial.

hardly depends on K (Fig. 3B), indicating that, as the task complexity decreases K progressively becomes a lesser relevant parameter. Observe also (Fig. 3D) that the distance of optimal networks to criticality, Δ, decreases with increasing network complexity. Therefore, it is reasonable to conjecture that for more complex tasks than the ones we considered (e.g. involving larger values of ninput), the benefits derived from operating at optimality/criticality are progressively more crucial.

Figure analogous to Fig. 2, but obtained for different computational tasks of different complexity (from the most complex R150 to the intermediate R60 and the simplest R51 rule; the names come from Wolfram’s classification of cellular automata45). (A)  for N = 16 (solid lines) and the three considered rules. Discontinuous lines are as in Fig. 2 (different colors stand for different rules). (B) Non-normalized

for N = 16 (solid lines) and the three considered rules. Discontinuous lines are as in Fig. 2 (different colors stand for different rules). (B) Non-normalized  for N = 16; the same color code has been used for all curves. (C) Optimal-time connectivities for fast learning, KT (red squares) and critical connectivities KC (blue circles) for the different rules. Observe that in all cases, optimal networks are slightly subcritical for this relatively small size N = 16. As shown in (D) the distance to criticality decreases upon increasing the task complexity.

for N = 16; the same color code has been used for all curves. (C) Optimal-time connectivities for fast learning, KT (red squares) and critical connectivities KC (blue circles) for the different rules. Observe that in all cases, optimal networks are slightly subcritical for this relatively small size N = 16. As shown in (D) the distance to criticality decreases upon increasing the task complexity.

Finally, we also scrutinized the network topology (in-degree distribution) after learning and, interestingly, we did not detect significant structural changes, as the overall network skeleton was in all cases very close to a random network.

Summing up, in order to achieve the fastest possible learning of complex tasks, RBNs with a connectivity such that their dynamics turns out to be critical (or slightly subcritical for finite sizes) are the best possible option. The larger the network size and the more complex the task, the more evolutionarily favourable to be close to criticality.

Learning under noisy conditions

Dynamical noise

Figure 4 is analogous to Fig. 2 but has been obtained in the presence of dynamical noise, η ≠ 0 (results for η = 0 are also plotted for the sake of comparison); observe that we present results for a fixed size N = 16 and variable noise strengths (from η = 10−5 to η = 10−3). It is noteworthy that for larger values of η (e.g. 0.01) the dynamics is so noisy that the probability for the networks –resulting out of the evolutionary process– to pass the robustness filter we have imposed (i.e. to have fitness F = 1 for 100I evolutionary steps) is exceedingly small. Therefore, networks do not achieve perfect learning in such extremely noise conditions. On the other hand, for exceedingly small noise strengths, we essentially see the same results as for η = 0, within the simulation checking time windows we consider. For intermediate noise-strength levels (such as the ones reported in Fig. 4) networks are likely to pass the filter. In such cases, (see Fig. 4B), the optimal connectivity is observed to shift toward lower values of K as the noise level is increased (see also Fig. 4C where KT is plotted as a function of η for various system sizes). In parallel, the averaged convergence times,  (Fig. 4B, same color code as in panel A), also grow with noise.

(Fig. 4B, same color code as in panel A), also grow with noise.

Dynamical noise.

Figure analogous to Figs 2 and 3 but for analyzing the dependence on the strength η of the dynamical noise. (A)  for N = 16 (solid lines) as a function of K for different values of η (different colors). Discontinuous lines are as in Figs 2 and 3 (however, different colors stand now for different η values). (B) Non-normalized

for N = 16 (solid lines) as a function of K for different values of η (different colors). Discontinuous lines are as in Figs 2 and 3 (however, different colors stand now for different η values). (B) Non-normalized  for N = 16; the same color-code has been used for all curves. (C) Optimal-time connectivities for fast learning, KT, (diamonds) and critical connectivities KC (circles) for the different values of η and various network sizes (N = 12 blue, N = 16 orange, N = 24 purple). In all cases, optimal networks are slightly subcritical for this relatively small sizes. However, in contrast with the noiseless cases above, here (D) the distance to criticality Δ does not decrease upon enlarging the size (except for exceedingly small noise strengths, e.g. 10−5, for which noise effects are not visible in the time windows we consider) actually it remains almost constant or –for large values of η such as 10−3– it grows with N, and in any case, it grows with the noise strength (same color code used in (C,D)).

for N = 16; the same color-code has been used for all curves. (C) Optimal-time connectivities for fast learning, KT, (diamonds) and critical connectivities KC (circles) for the different values of η and various network sizes (N = 12 blue, N = 16 orange, N = 24 purple). In all cases, optimal networks are slightly subcritical for this relatively small sizes. However, in contrast with the noiseless cases above, here (D) the distance to criticality Δ does not decrease upon enlarging the size (except for exceedingly small noise strengths, e.g. 10−5, for which noise effects are not visible in the time windows we consider) actually it remains almost constant or –for large values of η such as 10−3– it grows with N, and in any case, it grows with the noise strength (same color code used in (C,D)).

On the other hand, the branching parameter (measured keeping the noise switched on) computed by perturbing core nodes does not show a strong dependence on η (see dotted lines in Fig. 4A) while the values of  obtained by perturbing just the inputs (dashed-dotted lines in Fig. 4A) are more severely affected. The resulting critical points obtained by averaging these two contributions and equating them to unity are plotted in Fig. 4C, are always close to K = 2 (for the considered sizes). Comparing these values with the optimal connectivities for learning, i.e. measuring, Δ = KC − KT, one observes (see Fig. 4D) that Δ increases monotonically with η. This occurs for the different system sizes we studied allowing us to conclude that under noise conditions, it takes longer to learn, and the larger the dynamical-noise strength the more subcritical the optimal networks.

obtained by perturbing just the inputs (dashed-dotted lines in Fig. 4A) are more severely affected. The resulting critical points obtained by averaging these two contributions and equating them to unity are plotted in Fig. 4C, are always close to K = 2 (for the considered sizes). Comparing these values with the optimal connectivities for learning, i.e. measuring, Δ = KC − KT, one observes (see Fig. 4D) that Δ increases monotonically with η. This occurs for the different system sizes we studied allowing us to conclude that under noise conditions, it takes longer to learn, and the larger the dynamical-noise strength the more subcritical the optimal networks.

Structural noise

Figure 5 shows results analogous to those in Fig. 4. Also in this case we present results for a fixed size N = 16 and variable noise strengths (from ξ = 10−3 to ξ = 10−2). In parallel with the site-noise case, there is a noise intensity threshold above which the mutation probability is exceedingly high for the networks to learn, while for too small strengths, the same results as for ξ = 0 are observed within the operational checking time windows we have. For intermediate noise amplitudes, the larger ξ the longer the learning process takes (see Fig. 5B). In these cases, the optimal connectivity is observed to shift toward lower values of K as the noise level is increased (see also Fig. 5C where KT is plot as a function of ξ). Also, as above, the branching parameter,  (measured keeping a fixed network structure) does not have a strong dependence on ξ (Fig. 5A). The associated critical point KC is slightly above K = 2 for small noises, and moves progressively to smaller connectivity values as ξ grows. Also, as in the previous case, Δ increases monotonically with η, so that, as above, we can safely conclude that, in general, the larger the structural noise strength the more subcritical the optimal networks.

(measured keeping a fixed network structure) does not have a strong dependence on ξ (Fig. 5A). The associated critical point KC is slightly above K = 2 for small noises, and moves progressively to smaller connectivity values as ξ grows. Also, as in the previous case, Δ increases monotonically with η, so that, as above, we can safely conclude that, in general, the larger the structural noise strength the more subcritical the optimal networks.

Structural noise.

Figure analogous to Fig. 4 but analyzing the dependence on the strength ξ of the structural noise. (A)  for N = 16 (solid lines) as a function of K for different values of ξ (different colors). Discontinuous lines are as in Fig. 4 (different colors stand now for different ξ values). (B) Non-normalized

for N = 16 (solid lines) as a function of K for different values of ξ (different colors). Discontinuous lines are as in Fig. 4 (different colors stand now for different ξ values). (B) Non-normalized  for N = 16 (C) Optimal-time connectivities for fast learning, KT (diamonds) and critical connectivities KC (circles) for the different values of ξ and various network sizes (N = 12 blue, N = 16 orange, N = 24 purple). Observe that in all cases, optimal networks are slightly subcritical for this relatively small sizes. However, in contrast with the noiseless cases above, and in parallel with the case of dynamical noise, here (D) the distance to criticality Δ does not decrease upon enlarging the size (except for extremely low values of the noise, as in Fig. 4), actually it remains almost constant and, in any case, it grows with the noise strength. Same color code used in (C,D).

for N = 16 (C) Optimal-time connectivities for fast learning, KT (diamonds) and critical connectivities KC (circles) for the different values of ξ and various network sizes (N = 12 blue, N = 16 orange, N = 24 purple). Observe that in all cases, optimal networks are slightly subcritical for this relatively small sizes. However, in contrast with the noiseless cases above, and in parallel with the case of dynamical noise, here (D) the distance to criticality Δ does not decrease upon enlarging the size (except for extremely low values of the noise, as in Fig. 4), actually it remains almost constant and, in any case, it grows with the noise strength. Same color code used in (C,D).

Summing up, we conclude that while in the case of noiseless dynamics the optimal solution –to achieve the fastest possible learning– is obtained at connectivities for which the network is about critical (actually slightly subcritical, but closer and closer to criticality as the network size and/or the complexity of the task are increased), the situation is different in the presence of additional stochasticity, be it dynamical or structural noise. Under noisy conditions, the optimal solutions lie clearly well within the ordered/subcritical phase. A straightforward interpretation of this result is that the network dynamics needs to compensate for the excess of noise, and does so by reducing its internal level of uncertainty, i.e. by shifting deep into the ordered/subcritical phase.

Empirical networks

We have collected a set of empirical data from the literature and compiled a set of real directed networks. This includes public empirical datasets with biological genetic regulatory networks46, and networks of metabolic interactions47. Specific examples of networks collected from the literature are the metabolic networks of Chlamydomonas reinhardtii (K = 2.05)48) and Bacillus subtilis (K = 1.03)49, and the gene regulatory networks of Escherichia coli (K = 1.24, K = 2.32)41,50, Arabidopsis thaliana (K = 2.755)51, Mycobacterium tuberculosis (K = 1.19, K = 1.98)52,53, Pseudomonas aeruginosa (K = 1.48)54 and Saccharomyces cerevisiae (K = 1.85)55. Figure 6 presents a scatter plot of all networks in our dataset, representing the averaged connectivity K and network size N of each one. As it can be seen, the averaged connectivity of this dataset is well below the value K = 2, the critical connectivity for large random networks, suggesting that they could operate in subcritical regimes. It is noteworthy that it has been suggested that some empirical networks with high connectivity values (such as some of the outliers in Fig. 6) might result from systematic errors in correlation analyses (giving rise to false positives)56.

Size N versus mean connectivity K for empirical biological networks of different types.

In particular, it includes genetic and metabolic networks of species such as Escherichia coli, Saccharomyces cerevisiae, Pseudomonas aeruginosa, Bacillus subtilis, etc (see main text). Observe that all networks are significantly sparse, with most mean connectivities lying between K = 1 and K = 2. The outliers, with K > 10 come all from BioGRID46; the most extreme case has K = 41.90 and corresponds to the genetic network of “Escherichia coli K-12 W3110” (but, it might be that these networks are plagued with false-positive connections56). In the inset, we plot the probability that a network from our empirical ensemble is at a certain relative distance to the critical point of a random Boolean model with its corresponding connectivity, i.e. δ = (K − Kc(p))/Kc(p), assuming a fixed value of the bias p (in particular, we show results for p = 1/2, p = 0.8 and 0.9); observe that regardless of the value of the considered bias (which in general is unknown to us) most of the networks lie within the subcritical regime (assuming their dynamics was random).

Being more precise –given the absence of knowledge on dynamical aspects of the specific dynamics of each empirical network– it is not possible to properly ascertain the dynamical state (critical or not) of each of them. For instance, in large random Boolean networks the critical point is located as discussed above at  5,11,12; thus the minimal possible critical connectivity is K = 2 (corresponding to the unbiased case p = 1/2). Note that for finite random networks, the critical connectivity shifts to values slightly larger than 2 (positive corrections of order N−1). Therefore, if the collected (finite) empirical networks obeyed random Boolean dynamics– in light of Fig. 6– almost all of them would be certainly subcritical. However, we know that the dynamics of real networks may involve, for instance, canalizing and/or weighted updating functions11, and for such networks the critical connectivity can be in some cases smaller than K = 2. Therefore, even if no definite conclusion can be extracted from these empirical data about the possibility of criticality (or absence of it), we can certainly conclude that empirical networks are quite sparse (significantly sparser than critical random networks) suggesting that –in the absence of further information about their intrinsic dynamics– the most likely scenario would be that they operate in ordered regimes (see below for an extended discusion).

5,11,12; thus the minimal possible critical connectivity is K = 2 (corresponding to the unbiased case p = 1/2). Note that for finite random networks, the critical connectivity shifts to values slightly larger than 2 (positive corrections of order N−1). Therefore, if the collected (finite) empirical networks obeyed random Boolean dynamics– in light of Fig. 6– almost all of them would be certainly subcritical. However, we know that the dynamics of real networks may involve, for instance, canalizing and/or weighted updating functions11, and for such networks the critical connectivity can be in some cases smaller than K = 2. Therefore, even if no definite conclusion can be extracted from these empirical data about the possibility of criticality (or absence of it), we can certainly conclude that empirical networks are quite sparse (significantly sparser than critical random networks) suggesting that –in the absence of further information about their intrinsic dynamics– the most likely scenario would be that they operate in ordered regimes (see below for an extended discusion).

Conclusions and Discussion

The hypothesis that living systems may operate in the vicinity of critical points of their internal dynamics has inspired and tantalized scientists for some time. In particular, it has been claimed that genetic regulatory networks might operate close to criticality, achieving in this way an optimal balance between sensitivity to signals and stability to noise, and/or between adaptability and robustness on large evolutionary scales. A few works have recently explored different mechanisms allowing for networks to self-organize or evolve to critical or quasi-critical dynamics.

Here –inspired by the set up proposed by Goudarzi et al.31 –we have shown that random Boolean network models that are trained to perform a given computational task, can learn it much faster if they have a connectivity K such that their dynamics turns out to be close to criticality, as defined by a marginal averaged propagation of perturbations. This does not mean that networks far from criticality cannot learn; indeed they do, but it takes much longer to do so. Two important differences between the present work and previous ones are as follows. First, we work with networks with constant connectivity, i.e. the allowed mutations keep K constant, while in previous work there was no such constraint31. This difference implies that our evolutionary process does not converge to the optimal connectivity for fast learning, KT; by studying the constant-connectivity ensemble, we are able to put forward that learning (not necessarily in the fastest possible way) is compatible with rather diverse connectivity patterns and, thus, with the network being critical, subcritical or supercritical. The second important difference is that we implement a stochastic updating scheme, which introduces stochasticity in the dynamics; we find, however, that results are mostly insensitive to this change. Moreover, we have seen that in all cases, the distance to criticality of the optimal-connectivity networks diminishes monotonically upon enlarging system size and upon enlarging the task complexity. Indeed, very simple tasks, establishing simple relationships between (a few) inputs and the output, can be readily learned by networks in the ordered/subcritical regime, where such a direct correspondence can be robustly realized. On the other hand, complex tasks, in which the output is sensitive to many different possible changes in the input nodes, require of much larger responsiveness/susceptibility, and thus, shift the network optimal connectivity toward larger values, closer and closer to criticality. In any case, we do not find under any circumstances the optimal connectivity to lie within the disordered/supercritical regime; it seems as if the requirement to learn a task was incompatible with the network being disordered.

Biological systems must have homeostasis, i.e. the capacity to maintain their internal conditions even in the presence of fluctuations and noise, be it internal or external. In the second part of our study we posed ourself the question of how do these results depend upon the explicit introduction of noise. To this end, we have introduced more extreme forms of noise, be it dynamical or structural, within the same RBN model. Dynamical noise allows network nodes to invert their dynamical state with a small probability each time they are updated, introducing perturbations that can potentially propagate through the system, compromising the network performance. Similarly, structural noise, implying that the network topology itself is exposed to random changes with some small probability, also producing potential damage in the learned patterns. Both of these noise sources have clear correspondence with stochastic effects in real biological networks. In both cases, there is a threshold in noise strength above which networks do not learn the computational task in a reliable and robust way; i.e. they end up being plagued with errors, hindering network learning. Such thresholds clearly depend on the criterion imposed to declare that networks have learned; put differently, if the time in which one checks for network robustness are increased, i.e. if the criterion becomes more stringent, the noise-strength thresholds diminish. Remarkably, in both of the cases, dynamical and structural noise, we find that the optimal connectivity to achieve the fastest possible learning lies deep-inside the subcritical region, far away from criticality, and the distance to criticality increases upon enlarging the noise strength and does not diminish upon increasing the system size (as it happens in the absence of explicit noise).

Our results suggest that real biological networks, in order to perform the complex tasks required for information processing and survival in a noisy world, should operate in sub-critical regimes rather than in critical ones as it has been argued. As a matter of fact, the collection of empirical (genetic and metabolic) networks that we have compiled from the recent literature shows a rather sparse averaged connectivity in most cases, with only a few outlier networks. If the dynamics underlying these networks could be modeled by random Boolean functions, one could safely conclude that they are typically subcritical. However, in most cases, the dynamics remains mostly unknown, and a clear cut conclusion about the dynamical state of each specific network instance cannot be derived. To fill this gap, recent analyses have employed high throughput data from hundreds of microarray experiments to infer regulatory interactions among genes. This type of approach leads to more detailed information on dynamical aspects (e.g. switching off a given gene it is possible to follow the cascade of modifications it generates through the whole network). The resulting data, implemented into Boolean models, seem to support the hypothesis that regulatory networks for a number of species (Saccharomyces cerevisiae, Escherichia coli, etc) are close to criticality27,28, but some other analyses leave the door open for the networks to operate in an ordered/subcritical phase14,29. Therefore, given the present state of affairs, one can only conclude that more accurate and extensive experimental approaches (including, in particular, more accurate direct measurements of the bias p) would be extremely valuable to shed further light on this fascinating problem.

An important observation to be made is that the tasks we have employed to be learned are relatively simple (as they only involve a maximum of 3 input nodes and a single readout). Thus, one can wonders what would happen if a more extensive use of the network potentiality was necessary (by employing for instance, two or more tasks simultaneously, and/or involving a much larger number of inputs in each single task). Under the light of our results for the noiseless case –where we found that upon considering far more complex tasks, involving many more input and output nodes, the dynamics becomes progressively more critical– it would not be surprising that if one could analyze much more complex tasks –as the ones probably controlling real biological networks– the dynamics could become closer to criticality even in the presence of noise. Furthermore, in such more complex cases, one should also relax the criterion to declare that networks have learned, and look for “fuzzy” types of learning (i.e. accept networks with fitnesses slightly smaller than one). The combination of much more complex rules together with less rigid criteria for learning, could very likely shift the optimal solutions toward more critical states. A detailed analysis of these issues is left as an open challenge for future work.

It is also noteworthy that, even if network topology is known to play a very important role in the outcome of RBNs18,19,56,57,58,59, here we have focused mostly on random Erdős-Rényi networks and left the analysis of important topological features of empirical networks –such as scale-free connectivity distributions, and hierarchical and modular organization– for future work. These aspects might also play an important role in determining the network dynamical state. Finally, we also plan to extend the studies beyond the limit of the Boolean approach and to implement more complex and biologically realistic tasks. Hence, our summary is that the criticality hypothesis remains as a valid and fascinating possibility, but that it needs to be critically evaluated under each set of specific circumstances, avoiding making exceedingly general claims.

Methods

Network mutations

-

1

Given a original network, M, we perform a rewiring, which consists in choosing a link (say from node i to node j), removing it, and introducing a new one (from i to j′) assuming this one did not exist before (and keeping the topological constraints described above).

-

2

This change of the network topology, requires some modifications in the random Boolean functions fj and fj′ (see Table 1). For fj one needs to eliminate the input σi; thus fj changes from being a function of Kin(j) arguments to a function of Kin(j) − 1. The new function coincides with the original one fixing σi = 0, i.e. for the case when the driving node i was off. After this, each output in its table is changed with probability 1/4, defining the “mutated” Boolean function. Similarly, for node j′ a new argument, σi, is introduced to the Boolean function fj′: all values for σi = 1 (“on” i node) are assigned randomly, while for σi = 0 (the new input is off) we keep the pre-existing Boolean-function values.

-

3

This whole rewiring process is performed the first time with prob. one; after that a second rewiring is attempted with prob. 1/2; if it occurs, then a third one happens with prob. 1/3 and so on, giving rise to a mutated network, M′. This sequential process allows for the possibility of large mutations, involving many re-wirings.

-

4

Observe that these mutations keep the out degree sequence, as well as the overall connectivity K fixed, so it can be understood as a sort of “micro-canonical ensemble”60. Note that this differs from previous studies31 where the overall network connectivity was allowed to change along the evolutionary dynamics. Our approach permits us to analyze the network performance as a function of network connectivity and, thus, as a function of its dynamical state.

Assessing network criticality

We employ the standard method of plotting the Derrida curve in order to determine the dynamical phase of any specific RBN –specified by its topology and the set of its Boolean functions– and assess how far it operates from criticality. The method is based in damage spreading dynamics and involves the next steps: (1) take a network M in one specific state, and a copy of it M′ in which a single randomly chosen node has changed its state, (2) compute the Hamming distance, H5,13, between these two networks after one time step (t = 1; in the asynchronous case nodes are updated following the same random order in both networks), (3) average such a Hamming distance by considering all the possible nodes in the network that can host the initial one-node perturbation, (4) average the previous result over network states. We define the branching parameter B, as the averaged H after perturbing the different nodes in the network (in some cases, we present results for perturbations only at input/core nodes). If B < 1 perturbations shrink on average and the network is said to be subcritical (or in the ordered phase), while if B > 1 perturbations proliferate and grow on average and the network is supercritical (chaotic or disordered phase). Finally, in the intermediate case, B = 1, in which perturbations propagate marginally, the network is critical.

Observe that in networks with some fixed input and output nodes, we can measure B in different ways, depending on whether we flip input nodes or not and on whether we compute the Hamming distance in the whole network or just in the core (excluding input nodes); therefore the concept of criticality might refer to just the core or to the full network. Finally, in order to determine the critical regime of an ensemble of networks –and not just an individual one– it is necessary to measure the ensemble average,  , of B.

, of B.

Additional Information

How to cite this article: Villegas, P. et al. Intrinsic noise and deviations from criticality in Boolean gene-regulatory networks. Sci. Rep. 6, 34743; doi: 10.1038/srep34743 (2016).

References

Crick, F. Central dogma of molecular biology. Nature 227, 561–563 (1970).

Buchanan, M. Networks in cell biology (Cambridge University Press, Cambridge, 2010).

Kauffman, S. Metabolic stability and epigenesis in randomly constructed genetic nets. J. Theor. Biol. 22, 437–467 (1969).

Kauffman, S. A. The origins of order: Self-organization and selection in evolution (Oxford university press, New York, 1993).

Gros, C. Random Boolean networks (Springer, Berlin Heidelberg, 2011).

De Jong, H. Modeling and simulation of genetic regulatory systems: a literature review. J. Comp. Biol. 9, 67–103 (2002).

Alon, U. An introduction to systems biology: design principles of biological circuits (CRC press, London, 2006).

Albert, R. & Othmer, H. G. The topology of the regulatory interactions predicts the expression pattern of the segment polarity genes in Drosophila melanogaster. J. Theor. Biol. 223, 1–18 (2003).

Li, F., Long, T., Lu, Y., Ouyang, Q. & Tang, C. The yeast cell-cycle network is robustly designed. Proc. Natl. Acad. Sci. USA 101, 4781–4786 (2004).

Bornholdt, S. Less is more in modeling large genetic networks. Science 310, 449 (2005).

Drossel, B. Random boolean networks. In Schuster, H. G. (ed.) Reviews of nonlinear dynamics and complexity vol. 1, chap. 3, 69–110 (Wiley VCH, Weinheim, 2008).

Aldana, M., Coppersmith, S. & Kadanoff, L. P. Boolean dynamics with random couplings. In Kaplan, E., Marsden, J. E. & Sreenivasan, K. R. (eds.) Perspectives and Problems in Nonlinear Science 23–89 (Springer-Verlag, New York, 2003).

Derrida, B. & Pomeau, Y. Random networks of automata: a simple annealed approximation. Europhys. Lett. 1, 45 (1986).

Kauffman, S., Peterson, C., Samuelsson, B. & Troein, C. Random boolean network models and the yeast transcriptional network. Proc. Natl. Acad. Sci. USA 100, 14796–14799 (2003).

Langton, C. G. Computation at the edge of chaos: phase transitions and emergent computation. Physica D 42, 12–37 (1990).

Maass, W., Natschläger, T. & Markram, H. Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560 (2002).

Bertschinger, N. & Natschlager, T. Real-time computation at the edge of chaos in recurrent neural networks. Neural Comput. 16, 1413–1436 (2004).

Aldana, M., Balleza, E., Kauffman, S. & Resendiz, O. Robustness and evolvability in genetic regulatory networks. J. Theor. Biol. 245, 433–448 (2007).

Sosa, C. T., Huang, S. & Aldana, M. Criticality is an emergent property of genetic networks that exhibit evolvability. PLoS Comp. Biol. 8, e1002669 (2012).

Ribeiro, A. S., Kauffman, S. A., Lloyd-Price, J., Samuelsson, B. & Socolar, J. E. Mutual information in random boolean models of regulatory networks. Phys. Rev. E 77, 011901 (2008).

Krawitz, P. & Shmulevich, I. Basin entropy in boolean network ensembles. Phys. Rev. Lett. 98, 158701 (2007).

Torres-Sosa, C., Huang, S. & Aldana, M. Criticality is an emergent property of genetic networks that exhibit evolvability. PLoS Comp. Biol. 8, e1002669 (2012).

Shew, W. L. & Plenz, D. The functional benefits of criticality in the cortex. Neuroscientist 19, 88–100 (2013).

Kinouchi, O. & Copelli, M. Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2, 348–351 (2006).

Furusawa, C. & Kaneko, K. Adaptation to optimal cell growth through self-organized criticality. Phys. Rev. Lett. 108, 208103 (2012).

Chaté, H. & Muñoz, M. Insect swarms go critical. Physics 7, 120 (2014).

Balleza, E. et al. Critical dynamics in genetic regulatory networks: examples from four kingdoms. PLoS One 3, e2456 (2008).

Nykter, M. et al. Gene expression dynamics in the macrophage exhibit criticality. Proc. Natl. Acad. Sci. USA 105, 1897–1900 (2008).

Shmulevich, I., Kauffman, S. A. & Aldana, M. Eukaryotic cells are dynamically ordered or critical but not chaotic. Proc. Natl. Acad. Sci. USA 102, 13439–13444 (2005).

Hidalgo, J. et al. Information-based fitness and the emergence of criticality in living systems. Proc. Natl. Acad. Sci. USA 111, 10095–10100 (2014).

Goudarzi, A., Teuscher, C., Gulbahce, N. & Rohlf, T. Emergent criticality through adaptive information processing in boolean networks. Phys. Rev. Lett. 108, 128702 (2012).

Guptasarma, P. Does replication-induced transcription regulate synthesis of the myriad low copy number proteins of Escherichia coli? Bioessays 17, 987–997 (1995).

Schwikowski, B., Uetz, P. & Fields, S. A network of protein-protein interactions in yeast. Nat. Biotechnol. 18, 1257–1261 (2000).

Elowitz, M. B., Levine, A. J., Siggia, E. D. & Swain, P. S. Stochastic gene expression in a single cell. Science 297, 1183–1186 (2002).

Eldar, A. & Elowitz, M. B. Functional roles for noise in genetic circuits. Nature 467, 167–173 (2010).

Losick, R. & Desplan, C. Stochasticity and cell fate. Science 320, 65–68 (2008).

Balázsi, G., van Oudenaarden, A. & Collins, J. J. Cellular decision making and biological noise: from microbes to mammals. Cell 144, 910–925 (2011).

Tkačik, G. & Walczak, A. M. Information transmission in genetic regulatory networks: a review. J. Phys. Condens. Mat. 23, 153102 (2011).

Stern, M. D. Emergence of homeostasis and “noise imprinting” in an evolution model. Proc. Natl. Acad. Sci. USA 96, 10746–10751 (1999).

Darabos, C., Tomassini, M. & Giacobini, M. Dynamics of unperturbed and noisy generalized boolean networks. J. Theor. Biol. 260, 531–544 (2009).

Peixoto, T. P. Emergence of robustness against noise: A structural phase transition in evolved models of gene regulatory networks. Phys. Rev. E 85, 041908 (2012).

Gershenson, C. Classification of random boolean networks. In Standish, R. K., Bedau, M. A. & Abbas, H. A. (eds.) Proceedings of the eighth international conference on Artificial life 1–8 (The MIT Press, Cambridge, 2002).

Greil, F. & Drossel, B. Dynamics of critical kauffman networks under asynchronous stochastic update. Phys. Rev. Lett. 95, 048701 (2005).

Schmal, C., Peixoto, T. P. & Drossel, B. Boolean networks with robust and reliable trajectories. New J. Phys. 12, 113054 (2010).

Wolfram, S. A new kind of science vol. 5 (Wolfram media, Champaign, 2002).

Stark, C. et al. Biogrid: a general repository for interaction datasets. Nucleic Acids Res. 34, D535–D539 (2006).

Feist, A. M., Herrgård, M. J., Thiele, I., Reed, J. L. & Palsson, B. Ø. Reconstruction of biochemical networks in microorganisms. Nat. Rev. Microbiol. 7, 129–143 (2009).

Chang, R. L. et al. Metabolic network reconstruction of chlamydomonas offers insight into light-driven algal metabolism. Mol. Sys. Biol. 7, 518 (2011).

Oh, Y.-K., Palsson, B. O., Park, S. M., Schilling, C. H. & Mahadevan, R. Genome-scale reconstruction of metabolic network in Bacillus subtilis based on high-throughput phenotyping and gene essentiality data. J. Biol. Chem. 282, 28791–28799 (2007).

Balázsi, G., Barabási, A.-L. & Oltvai, Z. N. Topological units of environmental signal processing in the transcriptional regulatory network of Escherichia coli. Proc. Natl. Acad. Sci. USA 102, 7841–7846 (2005).

Ma, S., Gong, Q. & Bohnert, H. J. An arabidopsis gene network based on the graphical gaussian model. Genome Res. 17, 1614–1625 (2007).

Balázsi, G., Heath, A. P., Shi, L. & Gennaro, M. L. The temporal response of the Mycobacterium tuberculosis gene regulatory network during growth arrest. Mol. Sys. Biol. 4 (2008).

Sanz, J. et al. The transcriptional regulatory network of Mycobacterium tuberculosis. PLoS One 6, e22178 (2011).

Galán-Vásquez, E., Luna, B. & Martnez-Antonio, A. The regulatory network of Pseudomonas aeruginosa. Microb. Inform. Exp. 1, 1–11 (2011).

Guelzim, N., Bottani, S., Bourgine, P. & Képès, F. Topological and causal structure of the yeast transcriptional regulatory network. Nat. Genet. 31, 60–63 (2002).

Leclerc, R. D. Survival of the sparsest: robust gene networks are parsimonious. Mol. Sys. Biol. 4 (2008).

Drossel, B. & Greil, F. Critical boolean networks with scale-free in-degree distribution. Phys. Rev. E 80, 026102 (2009).

Szejka, A. & Drossel, B. Evolution of boolean networks under selection for a robust response to external inputs yields an extensive neutral space. Phys. Rev. E 81, 021908 (2010).

Aldana, M. Boolean dynamics of networks with scale-free topology. Physica D 185, 45–66 (2003).

Ben-Naim, E., Frauenfelder, H. & Toroczkai, Z. Complex networks. In Lecture Notes in Physics 650 (Springer, Berlin Heidelberg, 2004).

Acknowledgements

We acknowledge the Spanish-MINECO grant FIS2013-43201-P (FEDER funds) for financial support. We thank P. Moretti for very useful comments.

Author information

Authors and Affiliations

Contributions

J.H. and M.A.M. conceived the project, P.V. and J.R.-F. performed the numerical simulations and prepared the figures. P.V., J.H. and M.A.M. wrote the main manuscript text. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Villegas, P., Ruiz-Franco, J., Hidalgo, J. et al. Intrinsic noise and deviations from criticality in Boolean gene-regulatory networks. Sci Rep 6, 34743 (2016). https://doi.org/10.1038/srep34743

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/srep34743

This article is cited by

-

Understanding the physical mechanism of intrinsic noise inside viscous isotropic solids

Scientific Reports (2022)

-

Robust stability of interactional genetic regulatory networks with reaction-diffusion terms

Advances in Difference Equations (2017)

-

Observability of Boolean multiplex control networks

Scientific Reports (2017)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.