Abstract

Community structure is one of the fundamental characteristics of complex networks, and many methods have been proposed to detect it. However, most of these methods are designed for static networks and are not suitable for dynamic networks that evolve over time. Recently, the evolutionary clustering framework was proposed for clustering dynamic data, and it can also be used to detect communities in dynamic networks. In this paper, we propose a multi-similarity spectral clustering (MSSC) method that improves on earlier evolutionary clustering methods. To detect the community structure in dynamic networks, our method considers different similarity metrics of the network. First, multiple similarity matrices are constructed for each snapshot of a dynamic network. Then, a dynamic co-training algorithm is proposed that bootstraps the clusterings obtained from the different similarity measures. Experimental results on several widely used synthetic and real-world datasets whose ground-truth community structure changes over time show that MSSC performs better than a number of baseline models.


Introduction

Complex networks have been studied in many domains, such as genomic networks, social networks, communication networks and co-author networks1. Community structure reveals important structural properties of these complex networks2,3,4,5,6. A great deal of research has been devoted to detecting communities in complex networks, using approaches such as graph partitioning7,8, hierarchical clustering9, modularity optimization10, spectral clustering11,12, label propagation, game theory and information diffusion13; a detailed review is available in the literature14. However, most existing methods are designed for static networks and are not suitable for real-world networks with dynamic characteristics. For example, the interactions among users in the blogosphere or in circles of friends are not stationary: some interactions disappear, and new ones appear every day.

Recently, some methods have been proposed to find community structures and their temporal evolution in dynamic networks. An intuitive idea is to divide the network into discrete time steps and to apply static methods to each snapshot network15,16,17,18,19,20,21,22. These so-called two-stage methods analyse community extraction and community evolution in two separate stages. In other words, the communities are extracted at each snapshot independently, ignoring the changing trends among and within the communities of the dynamic network. As a result, two-stage methods are extremely sensitive to noise and produce unstable clustering results. For example, nodes or links may disappear or emerge in a subsequent snapshot, and two-stage methods cannot detect such changes. A better choice is to consider multiple time steps as a whole, as in the evolutionary clustering algorithm23, which detects the communities of the current snapshot jointly with the community structure of the previous snapshot.

In fact, the evolutionary clustering algorithm detects the current communities using the community structures from previous steps by introducing a term called temporal smoothness. The general framework for evolutionary clustering was first formulated by Chakrabarti et al.23, who proposed heuristic solutions for evolutionary hierarchical clustering and k-means clustering. The FacetNet framework, proposed by Lin et al.24, relies on non-negative matrix factorization. The density-based clustering method proposed by Kim and Han25 uses a cost embedding technique and optimal modularity to efficiently find temporally smoothed local clusters of high quality.

The existing evolutionary clustering methods most similar to MSSC are the PCQ (preserving cluster quality) and PCM (preserving cluster membership) methods26, two frameworks that incorporate temporal smoothness into spectral clustering. In both frameworks, the cost function is defined as the sum of the traditional cluster quality cost and a temporal smoothness term. Our method follows the evolutionary clustering strategy, but with one major difference, which concerns the similarity measure. The intuitive goal of spectral clustering is to detect latent communities in networks such that points in the same community are similar and points in different communities are dissimilar. There are several measures for evaluating the similarity between two vertices. A common approach is to encode prior knowledge about objects using a kernel, such as the linear kernel, the Gaussian kernel or the Fisher kernel. Most existing spectral clustering algorithms use only one similarity measure. However, clustering results based on different similarity matrices may differ notably11,27. Here, we introduce a multi-similarity method into the evolutionary spectral clustering algorithm that considers multiple similarity matrices simultaneously.
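The difference between the PCQ and PCM cost functions is easier to see in code. The Python sketch below follows our reading of the normalized-cut versions in ref. 26: each relaxed problem reduces to taking the top-k eigenvectors of a blended matrix, with α weighting the snapshot cost and 1 − α the temporal cost. The function names and the exact blending are assumptions for illustration, not a transcription of the published algorithms.

```python
import numpy as np

def normalized_affinity(W):
    """Return D^{-1/2} W D^{-1/2} for a symmetric, nonnegative similarity matrix W."""
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(d, 1e-12))  # guard against isolated nodes
    return d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]

def top_k_eigvecs(M, k):
    """Eigenvectors of a symmetric matrix M for its k largest eigenvalues."""
    _, vecs = np.linalg.eigh(M)  # eigh returns eigenvalues in ascending order
    return vecs[:, -k:]

def pcq_embedding(W_t, W_prev, k, alpha=0.9):
    """PCQ-style: the current partition should also cluster the previous snapshot well."""
    M = alpha * normalized_affinity(W_t) + (1 - alpha) * normalized_affinity(W_prev)
    return top_k_eigvecs(M, k)

def pcm_embedding(W_t, Z_prev, k, alpha=0.9):
    """PCM-style: the current embedding should stay close to the previous one, Z_prev."""
    M = alpha * normalized_affinity(W_t) + (1 - alpha) * (Z_prev @ Z_prev.T)
    return top_k_eigvecs(M, k)
```

With α = 1 both functions reduce to plain normalized-cut spectral embedding of the current snapshot; lowering α pulls the embedding toward the history, either through the old data (PCQ) or through the old partition (PCM).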

Inspired by Abhishek Kumar et al.28, we propose a multi-similarity spectral clustering (MSSC) method and a dynamic co-training algorithm for community detection in dynamic networks. The proposed method preserves the evolutionary information of the community structure by combining the current data with historic partitions. Co-training was originally proposed in semi-supervised learning as a bootstrapping procedure in which two hypotheses are trained on different views of the data29. It assumes that the two views are conditionally independent given the class label and that each view is sufficient for classification on its own. The classifier in one view is then constrained to be consistent with those in the other views. For example, co-training has been used to classify web pages, with the text on a page as one view and the anchor text of hyperlinks on other pages that point to it as the other view30; in other words, the text of a hyperlink on one page provides information about the page it links to. Similarly, in the proposed dynamic co-training approach, the clusterings based on different similarity measures are obtained using information from one another. This process is repeated for a pre-defined number of iterations.

Moreover, the question of how to set the weight of the temporal penalty on historic partitions, which reflects the user's preference for historic information, remains open. In many cases, this parameter is set according to the user's subjective preference26, which is undesirable. We propose an adaptive model that tunes the temporal smoothness parameter dynamically.

In summary, we introduce multiple similarity measures into the evolutionary spectral clustering method. We propose a dynamic co-training method that accommodates multiple similarity measures and regularizes the current communities according to the temporal smoothness of the historic ones. We also present an adaptive approach that learns how the weight of the temporal penalty changes over time. Based on these ideas, we present a multi-similarity evolutionary spectral clustering method that discovers communities in dynamic networks by combining evolutionary clustering23 with dynamic co-training. The performance of MSSC is demonstrated on several widely used synthetic and real-world datasets with ground truth.

Results

To compare our algorithm quantitatively with the others, we compute the normalized mutual information (NMI)31 and the sum of squared errors (SSE)32 on various networks from the literature. NMI is a well-known entropy-based measure from information theory that quantifies the similarity of two partitions (in this paper, the community structure $\hat{G}$ obtained using our method and the ground-truth structure $G$). Assume that the $i$-th row of $\hat{G}$ indicates the community membership of the $i$-th node, i.e., if the $i$-th node belongs to the $k$-th community, then $\hat{G}_{ik} = 1$ and $\hat{G}_{ik'} = 0$ for $k' \neq k$. NMI is defined as

$$\mathrm{NMI}(\hat{G}, G) = \frac{I(\hat{G}, G)}{\left(H(\hat{G}) + H(G)\right)/2},$$

which is the mutual information $I(\hat{G}, G)$ normalized by the average of the two entropies $H(\hat{G})$ and $H(G)$. NMI takes values between 0 and 1; a higher NMI indicates higher consistency, and NMI = 1 means that the two partitions are identical. SSE measures the distance between the community structure represented by $\hat{G}$ and that represented by $G$; a smaller SSE indicates a smaller difference between the predicted and the true values.
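The NMI definition can be computed directly from a contingency table of the two partitions. Below is a minimal Python sketch, assuming each partition is given as a label vector (for a membership indicator matrix, the label of node i is the column index of the 1 in row i) and using natural logarithms; the base cancels in the ratio. The function name `nmi` is ours.

```python
import numpy as np

def nmi(labels_pred, labels_true):
    """NMI = I(G_hat, G) / ((H(G_hat) + H(G)) / 2) for two label vectors."""
    _, a = np.unique(np.asarray(labels_pred), return_inverse=True)
    _, b = np.unique(np.asarray(labels_true), return_inverse=True)
    a, b = a.ravel(), b.ravel()
    n = a.size
    # counts[r, c] = number of nodes in predicted community r and true community c
    counts = np.zeros((a.max() + 1, b.max() + 1))
    np.add.at(counts, (a, b), 1)
    p_ab = counts / n
    p_a, p_b = p_ab.sum(axis=1), p_ab.sum(axis=0)
    nz = p_ab > 0  # 0 * log 0 is taken as 0
    mutual_info = np.sum(p_ab[nz] * np.log(p_ab[nz] / np.outer(p_a, p_b)[nz]))
    h_a = -np.sum(p_a * np.log(p_a))
    h_b = -np.sum(p_b * np.log(p_b))
    if h_a + h_b == 0:  # both partitions put every node in one community
        return 1.0
    return 2.0 * mutual_info / (h_a + h_b)
```

Because only the contingency table matters, relabelling the communities does not change the score: `nmi([0, 0, 1, 1], [1, 1, 0, 0])` equals 1 up to rounding.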

We compare the accuracy of MSSC against three previously published spectral clustering approaches for detecting communities in dynamic networks: the preserving cluster quality method (PCQ)26, the preserving cluster membership method (PCM)26 and the traditional two-stage method. PCQ and PCM incorporate temporal smoothness into spectral clustering; in both frameworks, the cost function is the sum of a traditional cluster quality cost and a temporal smoothness cost. Although the two frameworks have similar cost functions, they define the temporal smoothness cost differently. In PCQ, it measures how well the current partition clusters the historic data, which makes the clusters depend on both current and historic data. In PCM, it measures the difference between the current partition and the historic partition, which prevents the clusters from deviating dramatically from the recent history. The traditional two-stage method divides the network into discrete time steps and performs static spectral clustering11 at each time step. Each approach is run 10 times, and the mean and variance of the results are reported. For PCQ and PCM, we set α = 0.9. We begin by inferring communities in three synthetic datasets with known embedded communities. Next, we study two real-world datasets in which the communities were identified by human domain experts. For concreteness and simplicity, we restrict ourselves in this paper to two similarity measures, although the proposed method can be extended to more than two similarity matrices. We use the Gaussian kernel and the linear kernel as the similarity measures between data points. The two similarity matrices are then

$$W^{G}_{ij} = \exp\left(-\frac{\|x_i - x_j\|^2}{2\sigma^2}\right), \qquad W^{L}_{ij} = x_i^{\top} x_j,$$

where $x_i$ and $x_j$ are $m$-dimensional feature vectors and $i \neq j$. In our experiments, $x_i$ is the $i$-th column of the adjacency matrix $A_t$ at snapshot $t$; in other words, $x_i$ is an $n$-dimensional feature vector. The kernel width σ is set to the median of the pairwise Euclidean distances between the data points.
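For one snapshot, both similarity matrices can be computed in a few lines. This is a minimal numpy sketch under our assumptions: the function name is hypothetical, the diagonal (i = j, which the text excludes) is set to zero, and σ falls back to 1 if the median distance is zero.

```python
import numpy as np

def similarity_matrices(A_t):
    """Gaussian and linear similarity matrices for one snapshot.

    Node i is represented by x_i, the i-th column of the adjacency matrix A_t.
    """
    X = np.asarray(A_t, dtype=float).T        # row i holds x_i
    gram = X @ X.T                             # x_i^T x_j for all pairs
    sq_norms = np.diag(gram)
    # ||x_i - x_j||^2 = ||x_i||^2 + ||x_j||^2 - 2 x_i^T x_j (clip round-off below zero)
    sq_dist = np.maximum(sq_norms[:, None] + sq_norms[None, :] - 2.0 * gram, 0.0)
    iu = np.triu_indices(len(X), k=1)
    sigma = np.median(np.sqrt(sq_dist[iu]))    # median pairwise Euclidean distance
    if sigma == 0:
        sigma = 1.0                            # fallback for degenerate input (our assumption)
    W_gauss = np.exp(-sq_dist / (2.0 * sigma**2))
    W_lin = gram.copy()
    np.fill_diagonal(W_gauss, 0.0)             # the text defines W for i != j only;
    np.fill_diagonal(W_lin, 0.0)               # zeroing the diagonal is our assumption
    return W_gauss, W_lin
```

Note that with adjacency columns as features, the linear kernel entry $x_i^{\top} x_j$ is simply the number of common neighbours of nodes i and j in an unweighted snapshot.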

Synthetic Datasets

GN-benchmark network #1

The first dataset is generated according to the description by Newman et al.33. It contains 128 nodes divided into 4 communities of 32 nodes each. We generate data for 10 consecutive snapshots. In each snapshot from 2 to 10, dynamics are introduced as follows: from each community, we randomly select some members that leave their original community and randomly join one of the other three communities. Pairs of nodes are linked at random, with a higher probability $p_{in}$ for within-community edges and a lower probability $p_{out}$ for between-community edges. Although $p_{out}$ is varied freely, $p_{in}$ is chosen so that the expected degree of each vertex remains constant. When the average degree is fixed, the parameter z, which represents the mean number of edges from a node to nodes in other communities, is sufficient to describe the data; as z increases, the community structure becomes less distinct. We consider three values of z (4, 5 and 6) and two average node degrees (16 and 20) at each snapshot, and we randomly select 1, 3 or 6 nodes to change their community membership. The results are given in Table 1, where Cn is the number of changed nodes. Because of space limitations, the NMI and SSE values in Table 1 are averages over snapshots 1 to 10. In general, our method achieves a higher NMI and a smaller SSE in most settings; the exceptions are z = 4 with an average degree of 16 and z = 5 with an average degree of 20, where the NC-based PCM outperforms MSSC. Figure 1(a,b) shows the performance at each snapshot when z = 5, the average degree of each node is 16 and 3 nodes change their community membership at each snapshot. Figure 1(a) shows that MSSC outperforms the baselines at every snapshot, which indicates that our method is more accurate. In addition, Fig. 1(b) shows that MSSC generally has a lower error in community membership with respect to the ground truth. In both panels, our method clearly improves the accuracy and reduces the error compared with PCQ, PCM and static spectral clustering.
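A minimal sketch of a GN-style generator of this kind follows, under our assumptions: the function names are hypothetical, the edge probabilities are derived from the expected-degree constraint as $p_{in} = (\bar{k} - z)/(s - 1)$ and $p_{out} = z/(n - s)$ for community size s = 32 and average degree $\bar{k}$, and a moved node joins a uniformly chosen other community. With n = 128, $\bar{k}$ = 16 and z = 5, this gives $p_{in}$ = 11/31 ≈ 0.355 and $p_{out}$ = 5/96 ≈ 0.052.

```python
import numpy as np

def gn_snapshot(labels, avg_degree=16, z=5, community_size=32, rng=None):
    """Sample one undirected snapshot for the given community labels."""
    rng = np.random.default_rng(rng)
    labels = np.asarray(labels)
    n = labels.size
    p_in = (avg_degree - z) / (community_size - 1)  # expected within-community degree: avg_degree - z
    p_out = z / (n - community_size)                 # expected between-community degree: z
    probs = np.where(labels[:, None] == labels[None, :], p_in, p_out)
    upper = np.triu(rng.random((n, n)) < probs, k=1)  # sample each node pair once
    return (upper | upper.T).astype(int)

def move_nodes(labels, n_changed=3, rng=None):
    """Move n_changed random members of each community to another random community."""
    rng = np.random.default_rng(rng)
    old = np.asarray(labels)
    new = old.copy()
    communities = np.unique(old)
    for c in communities:
        movers = rng.choice(np.flatnonzero(old == c), size=n_changed, replace=False)
        others = communities[communities != c]
        new[movers] = rng.choice(others, size=n_changed)
    return new

def dynamic_gn(n_snapshots=10, n_changed=3, avg_degree=16, z=5, seed=0):
    """Snapshot 1 has 4 communities of 32 nodes; later snapshots move nodes first."""
    rng = np.random.default_rng(seed)
    labels = np.repeat(np.arange(4), 32)
    snapshots, memberships = [], []
    for t in range(n_snapshots):
        if t > 0:
            labels = move_nodes(labels, n_changed, rng)
        snapshots.append(gn_snapshot(labels, avg_degree, z, rng=rng))
        memberships.append(labels)
    return snapshots, memberships
```

The returned memberships serve as the per-snapshot ground truth against which NMI and SSE are computed.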

(a,b) Normalized mutual information and sum of squared errors of the different methods over 10 snapshots of the synthetic networks, where z = 5, the average degree of each node is 16 and 3 nodes change their community membership at each snapshot. (c,d) Performance for a single contraction event with 1000 nodes over 10 snapshots; the nodes have a mean degree of 15 and a maximum degree of 50, and the mixing parameter, which controls the overlap among communities, is μ = 0. The x-axes show the snapshots.

GN-benchmark network #2

To compare effectiveness as the number of communities varies, we use a second benchmark consisting of two types of datasets generated by Francesco Folino and Clara Pizzuti34: SYN-FIX, with a fixed number of communities, and SYN-VAR, with a variable number of communities. SYN-FIX is generated in the same way as GN-benchmark network #1: the network consists of 128 nodes divided into four communities of 32 nodes, every node has an average degree of 16 and shares z links with other nodes of the network, and 3 nodes are randomly selected from each community and randomly assigned to the other three communities. For SYN-VAR, the SYN-FIX generation procedure is modified to introduce the forming and dissolving of communities and the attaching and detaching of nodes. The initial network contains 256 nodes divided into 4 communities of 64 nodes. Then, 10 consecutive networks are generated: 8 nodes are randomly chosen from each community, and a new community is formed from these 32 nodes. This process is repeated for 5 timestamps, after which the nodes return to their original communities. Every node has an average degree of 16 and shares z links with other nodes of the network. A new community is created at each timestamp 2 ≤ t ≤ 5, so the numbers of communities for 1 ≤ t ≤ 10 are 4, 5, 6, 7, 8, 8, 7, 6, 5 and 4. In addition, for 2 ≤ t ≤ 10, 16 randomly chosen nodes are deleted and 16 new nodes are added at each snapshot. Table 2 shows the accuracy and error of the community membership obtained by the four algorithms for SYN-FIX and SYN-VAR with z = 3 and z = 5. The results show that MSSC handles dynamic networks well when the number of communities varies. When z = 3, the community structure is easy to detect because there is less noise; in this case, although MSSC does not achieve the best NMI on SYN-FIX, it still has a lower error.

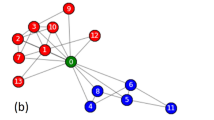

Synthetic dataset #3

The third synthetic dataset is used to study MSSC on dynamic networks in which the number of nodes changes. Greene et al.31 developed a set of benchmarks based on embedding events in synthetic graphs. Five dynamic networks without overlapping communities are generated for five event types: birth and death, expansion, contraction, merging and splitting, and switching. A birth event occurs when a new dynamic community appears, and a death event occurs when an existing dynamic community dissolves. A merging event occurs when two distinct dynamic communities observed at snapshot t − 1 match a single step community at snapshot t, and a splitting event occurs when a single dynamic community at snapshot t − 1 is matched to two distinct step communities at snapshot t. An expansion occurs when the step community corresponding to a dynamic community at snapshot t is significantly larger than at the previous snapshot, and a contraction occurs when it is significantly smaller. A switch event occurs when nodes move among communities. Fig. 1(c,d) shows the performance on a small example dynamic graph produced by the generator, which involves 1000 nodes, 17 embedded dynamic communities and a single contraction event. To evaluate the methods, we constructed five synthetic networks, one for each event type, each with 1000 nodes over 10 snapshots. In each of the five synthetic datasets, 20% of the node memberships were randomly permuted at each snapshot to simulate the natural movement of users among communities over time. The snapshot graphs share several parameters: the nodes have a mean degree of 15 and a maximum degree of 50, and the mixing parameter μ, which controls the overlap between communities, is 0. Community sizes were constrained to the range [20, 100]. Table 3 shows the performance of the five methods for the different events. We also find that the standard deviation of MSSC is smaller, which implies that its clustering results are more stable.

Real-World Datasets

NEC Blog Dataset

The blog data were collected by an NEC in-house blog crawler. Given seeds of manually picked, highly ranked blogs, the crawler discovered blogs that were densely connected with the seeds, which resulted in an expanded set of blogs that communicated with each other. The NEC blog dataset has been used in several previous studies on dynamic networks24,26,35. It contains 148,681 entry-to-entry links among 407 blogs crawled over 15 months, starting in July 2005. First, we construct an adjacency matrix in which the nodes correspond to blogs and the edges are interlinks among the blogs (obtained by aggregating all entry-to-entry links). In the blog network, the number of nodes changes across snapshots. The blogs roughly form 2 main clusters: the larger cluster consists of blogs with a technology focus, and the smaller cluster contains blogs with non-technology focuses (e.g., politics, international issues, digital libraries). Therefore, in the following experiments, we set the number of clusters to 2. Because the edges are sparse, we take 4 weeks as a snapshot and aggregate all edges within each month into the affinity matrix for that snapshot. Figure 2(a,b) shows the performance. Figure 2(a) shows that although MSSC does not perform as well as NA-based PCQ and PCM in the first few snapshots, it begins to outperform them as time progresses. In addition, MSSC retains a lower variance than NA-based PCQ and PCM. This result suggests that the benefits of MSSC accumulate over time more than those of NA-based PCQ and PCM. Furthermore, Fig. 2(b) shows that MSSC has lower errors, even at the few snapshots where it does not outperform the baselines in NMI.

(a,b) NEC blog dataset, which contains 407 blogs crawled over 15 consecutive months starting in July 2005; each month is a snapshot. (c,d) The e-mail contact network of the department of computer science at KIT, an ever-changing network observed over 48 consecutive months; each snapshot covers six months.

KIT E-mail Dataset

Furthermore, we consider a large number of snapshots of the e-mail communication network of the Department of Informatics at KIT36. This network of e-mail contacts is an ever-changing graph covering 48 consecutive months, from September 2006 to August 2010. The vertices represent members, and the edges correspond to e-mail contacts, weighted by the number of e-mails sent between two individuals. Because the edges are sparse, we construct the adjacency matrix among 231 active members. In the e-mail network, the clusters correspond to different departments of computer science at KIT. The number of clusters is 14, 23, 25, 26 and 27 for snapshot lengths of 1, 2, 3, 4 and 6 months, respectively, because shorter intervals produce more data points that are treated as isolated points. Therefore, when one month is taken as a snapshot, the number of clusters is smallest. Because of limited space, Fig. 2(c,d) shows only the NMI and SSE values for 8 snapshots (six months per snapshot). We observe that MSSC outperforms the baseline methods. To study the effect of historic information, Table 4 reports results with snapshot lengths of 1, 2, 3, 4 and 6 months, i.e., 48, 24, 16, 12 and 8 snapshots. We observe that more snapshots provide more historic information and yield a smaller error; therefore, the SSE is smallest when the dynamic network is divided into 48 snapshots.
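Building the snapshots amounts to summing timestamped edge weights within each window. Here is a minimal sketch, assuming edges are available as hypothetical (month, i, j, weight) tuples with the weight equal to the number of e-mails; the function name and input format are ours. It also shows how the snapshot lengths used for Table 4 map to snapshot counts over 48 months.

```python
import numpy as np

# Snapshot length (months) -> number of snapshots over 48 months: {1: 48, 2: 24, 3: 16, 4: 12, 6: 8}
SNAPSHOT_COUNTS = {m: 48 // m for m in (1, 2, 3, 4, 6)}

def aggregate_snapshots(edges, n_nodes, n_months=48, months_per_snapshot=6):
    """Sum weighted, timestamped edges into one symmetric adjacency matrix per snapshot.

    edges: iterable of (month, i, j, weight) with 0 <= month < n_months.
    Returns an array of shape (n_months // months_per_snapshot, n_nodes, n_nodes).
    """
    n_snapshots = n_months // months_per_snapshot
    A = np.zeros((n_snapshots, n_nodes, n_nodes))
    for month, i, j, weight in edges:
        s = month // months_per_snapshot
        if s < n_snapshots and i != j:  # ignore self-loops and months past the last full window
            A[s, i, j] += weight        # undirected: add the weight in both directions
            A[s, j, i] += weight
    return A
```

Shorter windows give more snapshots but sparser matrices, which is consistent with the larger number of isolated points reported for short snapshot lengths.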

Discussion

In this paper, to find a highly efficient spectral clustering method for community detection in dynamic networks, we propose the MSSC method, which considers different similarity measures together. We first construct multiple similarity matrices for each snapshot of a dynamic network and present a dynamic co-training method that bootstraps the clusterings of the different similarity measures using information from one another. Because it considers the evolution between two neighbouring snapshots, the proposed dynamic co-training method also preserves the historic information of the community structure. Finally, we use a simple but effective method to adaptively estimate the temporal smoothing parameter in the objective.

We have evaluated MSSC on both synthetic and real-world networks with ground truth and compared it with three state-of-the-art spectral clustering methods. The experimental results show that the method effectively detects communities in dynamic networks for most of the analysed datasets, across various network and community sizes.

In all of our experiments, we observe that the major improvement in performance is obtained in the first iteration, and the performance fluctuates around that value in subsequent iterations. Therefore, in this paper, we report the results after the first iteration. In general, the algorithm is not guaranteed to converge, which is also the case for the co-training algorithm28.

However, the number of clusters or communities must be specified in advance for each snapshot. Determining the number of clusters is an important and difficult research problem in model selection, and there is currently no fully satisfactory solution. Previously suggested approaches include cross-validation37, minimum description length methods that use two-part or universal codes38, and maximization of a marginal likelihood39. Because our algorithm is still built on the fundamental spectral clustering algorithm, any of these methods can be used to select the number of clusters k automatically. Additionally, as a spectral clustering method, MSSC must construct an adjacency matrix and compute the eigendecomposition of the corresponding Laplacian matrix. Both steps are computationally expensive: for a dataset of n data points, they have complexities of O(n²) and O(n³), respectively, which are unbearable burdens for large-scale applications40. There are several options for accelerating spectral clustering. Landmark-based spectral clustering (LSC) selects a small number of representative data points as landmarks and represents the remaining data points as linear combinations of these landmarks41,42. Liu et al.43 introduced a sequential reduction algorithm based on the observation that some data points quickly converge to their true embedding, so that an early-stop strategy speeds up the decomposition. Yan, Huang and Jordan44 also provided a general framework for fast approximate spectral clustering.
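To illustrate the landmark idea, here is a minimal Python sketch of a landmark-based spectral embedding in the spirit of refs 41,42, under our own assumptions: landmarks are sampled uniformly at random (or passed in explicitly), each point is represented by Gaussian weights on its r nearest landmarks, normalized to sum to one, and the embedding is obtained from a p × p eigenproblem instead of an n × n one. The parameter names p, r and sigma are ours; running k-means on the rows of the returned matrix would complete the clustering.

```python
import numpy as np

def landmark_spectral_embedding(X, k, p=50, r=5, sigma=1.0, landmark_idx=None, rng=None):
    """Approximate spectral embedding from p landmarks instead of an n x n matrix."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    if landmark_idx is None:
        landmark_idx = np.random.default_rng(rng).choice(n, size=min(p, n), replace=False)
    U = X[landmark_idx]                                       # landmarks, shape (p, m)
    sq = ((U[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)  # squared distances, (p, n)
    nearest = np.argsort(sq, axis=0)[:r]                      # r nearest landmarks per point
    cols = np.arange(n)
    Z = np.zeros_like(sq)
    Z[nearest, cols] = np.exp(-sq[nearest, cols] / (2.0 * sigma**2))
    Z /= np.maximum(Z.sum(axis=0, keepdims=True), 1e-300)    # each point's weights sum to 1
    Z_hat = Z / np.sqrt(Z.sum(axis=1, keepdims=True))         # D^{-1/2} Z, D = landmark degrees
    # Top-k right singular vectors of Z_hat via the small p x p eigenproblem.
    vals, vecs = np.linalg.eigh(Z_hat @ Z_hat.T)              # ascending eigenvalues
    vals, vecs = vals[-k:], vecs[:, -k:]
    return Z_hat.T @ vecs / np.sqrt(vals)                     # (n, k) with orthonormal columns
```

The cost is dominated by the p × n distance matrix and the p × p eigendecomposition, so for p much smaller than n it avoids both the O(n²) similarity matrix and the O(n³) decomposition.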

Methods

Traditional spectral clustering

In this section, we review the traditional spectral clustering approach11. The basic idea of spectral clustering is to cluster data using the spectrum of a Laplacian matrix. Given a set of data points {x1, x2, …, xn}, the intuitive goal of clustering is to divide the data points into several groups such that points within a group are similar and points in different groups are dissimilar. From the viewpoint of graph theory, the data can be represented as a similarity graph G = (V, E) with vertex set V and edge set E. Each vertex vi in this graph represents a data point xi, and the edge between vertices vi and vj is weighted by the similarity Wij. For any given similarity matrix W, we can construct the unnormalized Laplacian matrix $L = D - W$ and the normalized Laplacian matrix $L_{sym} = I - D^{-1/2} W D^{-1/2}$, where the degree matrix D is a diagonal matrix with elements $D_{ii} = \sum_{j} W_{ij}$. The adjacency matrix is a square matrix A whose element Aij is one when there is an edge from vertex vi to vertex vj and zero otherwise. Two common variants of spectral clustering are average association and normalized cut45. The two partition criteria, maximizing the association within groups and minimizing the disassociation between groups, are equivalent (the proof is given in ref. 45). Unfortunately, optimizing either criterion exactly is NP-hard. The relaxed problems can be written as11,26,45

$$\max_{Z \in \mathbb{R}^{n \times k},\ Z^{\top} Z = I_k} \operatorname{tr}\left(Z^{\top} W Z\right) \quad \text{(average association)},$$

$$\min_{Z \in \mathbb{R}^{n \times k},\ Z^{\top} Z = I_k} \operatorname{tr}\left(Z^{\top} L_{sym} Z\right) \quad \text{(normalized cut)}.$$

In our algorithm, we use the normalized cut as the partition criterion. The optimal solution of the relaxed problem is to set Z to the eigenvectors that correspond to the k smallest eigenvalues of $L_{sym}$. All data points are then projected onto this eigenspace, and the k-means algorithm is applied to the projected points to obtain the clusters. The focus of our work is the definition of the similarity matrix in the spectral clustering algorithm, i.e., computing the relaxed eigenvectors Z with different similarity measures.
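The normalized-cut procedure (eigenvectors for the k smallest eigenvalues of the normalized Laplacian $L_{sym} = I - D^{-1/2} W D^{-1/2}$, followed by k-means) can be sketched as follows. This is a minimal numpy version under our assumptions: the embedding rows are normalized to unit length before k-means (a common extra step from Ng, Jordan and Weiss, not stated in the text), and k-means uses a deterministic farthest-point initialization. The function names are ours.

```python
import numpy as np

def kmeans(Y, k, n_iter=100):
    """Lloyd's k-means with a deterministic farthest-point initialization."""
    centers = [Y[0]]
    for _ in range(1, k):
        dist = np.min([((Y - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Y[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = np.argmin(((Y[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1), axis=1)
        new = np.array([Y[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels

def spectral_clustering_ncut(W, k):
    """Relaxed normalized cut: k smallest eigenvectors of L_sym, then k-means."""
    W = np.asarray(W, dtype=float)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(W.sum(axis=1), 1e-12))
    L_sym = np.eye(len(W)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, vecs = np.linalg.eigh(L_sym)       # eigenvalues in ascending order
    Z = vecs[:, :k]                       # relaxed cluster assignment matrix
    # Row normalization (Ng-Jordan-Weiss style) is our assumption, not part of the text.
    Z = Z / np.maximum(np.linalg.norm(Z, axis=1, keepdims=True), 1e-12)
    return kmeans(Z, k)
```

On two 4-node cliques joined by a single weak edge, the two cliques come out as the two clusters, because the Fiedler vector changes sign across the weak edge.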

Different similarity measures

In spectral clustering, a similarity matrix must be constructed to quantify the similarity among the data points. The performance of the spectral clustering algorithm depends heavily on the choice of similarity measure46. There are several ways to transform a given set of data points into pairwise similarities. A common approach in machine learning is to encode prior knowledge about the data vertices using a kernel27. The linear kernel, which is given by the inner products between implicit representations of the data points, is the simplest kernel function. Assume that the ith node in V is represented by an m-dimensional feature vector $x_i \in \mathbb{R}^m$, and that the distance between the ith and jth nodes in V is the Euclidean distance $d_{ij} = \|x_i - x_j\|$. The linear kernel can be used as a similarity measure, i.e., the similarity matrix W can be computed as $W_{ij} = x_i^{\top} x_j$. The Gaussian kernel is one of the most common similarity measures for spectral clustering11 and can be written as $W_{ij} = \exp\left(-d_{ij}^2 / (2\sigma^2)\right)$, where the standard deviation σ of the kernel is set to the median of the pairwise Euclidean distances between the data points.

There are also some specific kernels for the similarity matrix. Fischer and Buhmann47 proposed a path-based similarity measure based on a connectedness criterion. Chang et al.48 proposed a robust path-based similarity measure based on the M-estimator to develop the robust path-based spectral clustering method.
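The connectedness criterion behind path-based similarity treats two points as similar when they are joined by a path without large hops, i.e. when their minimax path distance is small. A minimal sketch of that idea, assuming Euclidean hop lengths and a Gaussian kernel on the path distance with σ set to the median path distance (both our choices, not necessarily those of ref. 47); the function names are hypothetical.

```python
import numpy as np

def minimax_path_distance(D):
    """Smallest achievable largest hop over all paths between each pair of points.

    Floyd-Warshall-style update, O(n^3): allowing node m as an intermediate stop
    replaces d(i, j) by max(d(i, m), d(m, j)) whenever that is smaller.
    """
    P = np.array(D, dtype=float)
    for m in range(P.shape[0]):
        P = np.minimum(P, np.maximum(P[:, m:m + 1], P[m:m + 1, :]))
    return P

def path_based_similarity(X, sigma=None):
    """Gaussian similarity on minimax path distances (bandwidth choice is our assumption)."""
    X = np.asarray(X, dtype=float)
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    P = minimax_path_distance(D)
    if sigma is None:
        sigma = np.median(P[np.triu_indices(len(P), k=1)])
    return np.exp(-P**2 / (2.0 * sigma**2))
```

For points at 0, 1, 2, 3 and 10 on a line, the path distance between 0 and 3 is 1 (the largest hop along 0 → 1 → 2 → 3), whereas the isolated point at 10 stays 7 away from everything, so elongated but well-connected groups are kept together.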

Different similarity measures may reveal the similarity between data points from different perspectives. For example, the Gaussian kernel is based on Euclidean distances between the data points, whereas the linear kernel is based on the inner products of their implicit representations. Most studies of spectral clustering rely on a single similarity measure, and very few consider multiple similarity measures. Therefore, we propose a method that considers multiple similarity measures in spectral clustering; in other words, our goal is a spectral clustering method based on multiple similarity matrices.

Multi-similarity spectral clustering

First, we introduce the basic ideas of multi-similarity spectral clustering in dynamic networks. We assume that the clustering obtained from one similarity measure should be consistent with the clusterings obtained from the other similarity measures, and we bootstrap the clusterings of the different similarity measures using information from one another through dynamic co-training. Because it is based on the idea of evolutionary clustering, the dynamic co-training method preserves the historic information of the community structure. After a new similarity matrix is obtained by dynamic co-training, we follow the standard procedure of traditional spectral clustering to obtain the clustering result. Figure 3 illustrates the dynamic co-training process.
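The co-training step can be sketched for two similarity matrices as follows. This follows our reading of the static two-view scheme of Kumar et al.28: each similarity matrix is projected onto the leading eigenvectors obtained from the other one, symmetrized and re-embedded. The temporal smoothing that MSSC adds is not reproduced here, clipping negative entries to zero is our assumption, the function names are ours, and `n_iter=1` reflects the observation in the Discussion that most of the gain comes from the first iteration.

```python
import numpy as np

def top_k_embedding(W, k):
    """Eigenvectors of D^{-1/2} W D^{-1/2} for its k largest eigenvalues, i.e. those of
    the k smallest eigenvalues of the normalized Laplacian I - D^{-1/2} W D^{-1/2}."""
    s = 1.0 / np.sqrt(np.maximum(W.sum(axis=1), 1e-12))
    _, vecs = np.linalg.eigh(s[:, None] * W * s[None, :])  # ascending eigenvalues
    return vecs[:, -k:]

def co_train(W1, W2, k, n_iter=1):
    """Two-view co-training: each view's similarities are projected onto the other
    view's eigenspace, then re-embedded."""
    U1, U2 = top_k_embedding(W1, k), top_k_embedding(W2, k)
    for _ in range(n_iter):
        S1 = U2 @ U2.T @ W1                   # view-1 similarities seen through view 2
        S2 = U1 @ U1.T @ W2                   # view-2 similarities seen through view 1
        S1 = np.maximum((S1 + S1.T) / 2, 0)   # symmetrize; clipping negatives is our assumption
        S2 = np.maximum((S2 + S2.T) / 2, 0)
        U1, U2 = top_k_embedding(S1, k), top_k_embedding(S2, k)
    return U1, U2
```

Either returned embedding (or their concatenation) can then be clustered with k-means, as in traditional spectral clustering.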

Specifically, we first compute the similarity matrices at snapshot t using the different similarity measures, and denote the pth similarity matrix by $W_t^p$. Following most spectral clustering algorithms, a solution to the problem of minimizing the normalized cut is given by the relaxed cluster assignment matrix $U_t^p$, whose columns are the eigenvectors associated with the first k eigenvalues of the normalized Laplacian matrix $L_t^p$. All data points are then projected onto this eigen-space, and the clustering result is obtained using the k-means algorithm. When the similarity graph has exactly k connected components, the first k eigenvectors of its Laplacian span the space of cluster assignment vectors; that is, these k eigenvectors contain only discriminative information among different clusters and ignore the details within clusters11. When the graph is fully connected, the eigenvectors are no longer exact cluster assignment vectors, but they still contain discriminative information that can be used for clustering. Co-training exploits this by using the eigenvectors from one similarity matrix to update another. The updated similarity matrix for the pth similarity measure at snapshot t can be defined as

$S_t^p = \mathrm{sym}\left(U_t^q (U_t^q)^\top W_t^p\right), \qquad (2)$

$\mathrm{sym}(S) = \frac{1}{2}\left(S + S^\top\right), \qquad (3)$

where $U_t^q$ denotes the discriminative eigenvectors of the Laplacian matrix from the qth similarity measure, p, q = 1, 2, …, s and p ≠ q. Equation (3) is a symmetrization operator that ensures that the projection of the similarity matrix $W_t^p$ onto the eigenvectors is a symmetric matrix. We then use $S_t^p$ as the new similarity matrix, compute its Laplacian, and solve for the first k eigenvectors to obtain a new cluster assignment matrix $U_t^p$. After the co-training procedure has been repeated for a pre-selected number of iterations, the matrix $V = U_t^p$ is constructed, where p is the similarity measure considered most informative in advance. Alternatively, if there is no prior knowledge of which similarity measure is most informative, V can be set to the column-wise concatenation of all $U_t^p$. For example, two cluster assignment matrices $U_t^1$ and $U_t^2$ are combined to form $V = [U_t^1, U_t^2]$. Finally, the clusters are obtained by applying the k-means algorithm to V.
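The co-training loop and the final clustering step described above can be sketched as follows. The sketch assumes an update of the form sym(U Uᵀ W) with sym(S) = (S + Sᵀ)/2, as in the co-training approach of Kumar and Daumé28; how the other views are combined when there are more than two, the number of iterations, and all function names are assumptions, and no temporal smoothing is included.

```python
import numpy as np


def sym(S: np.ndarray) -> np.ndarray:
    """Symmetrization operator: (S + S^T) / 2."""
    return 0.5 * (S + S.T)


def spectral_embedding(W: np.ndarray, k: int) -> np.ndarray:
    """Eigenvectors for the k smallest eigenvalues of I - D^{-1/2} W D^{-1/2}."""
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(W.sum(axis=1), 1e-12))
    L = np.eye(W.shape[0]) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    return np.linalg.eigh(L)[1][:, :k]


def kmeans(X: np.ndarray, k: int, n_iter: int = 100) -> np.ndarray:
    """Lloyd's k-means with deterministic farthest-point initialisation."""
    centers = [X[0]]
    for _ in range(1, k):
        d2 = np.min([np.sum((X - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d2)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = np.argmin(dist, axis=1)
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                                else centers[j] for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


def co_train(Ws: list, k: int, n_iter: int = 5) -> list:
    """Update each view's eigenvectors using the other views' eigenvectors."""
    Us = [spectral_embedding(W, k) for W in Ws]
    for _ in range(n_iter):
        new_Us = []
        for p, W in enumerate(Ws):
            # Assumption: with more than two views, the other views'
            # eigenvectors are concatenated column-wise.
            U_other = np.hstack([U for q, U in enumerate(Us) if q != p])
            # With two views this projects W onto the other view's eigenvectors.
            S = sym(U_other @ U_other.T @ W)
            new_Us.append(spectral_embedding(S, k))
        Us = new_Us
    return Us


def cluster_snapshot(Ws: list, k: int, best=None, n_iter: int = 5) -> np.ndarray:
    """Run co-training, build V, and cluster the rows of V with k-means."""
    Us = co_train(Ws, k, n_iter)
    # V is the most informative view's eigenvectors if known in advance,
    # otherwise the column-wise concatenation of all views' eigenvectors.
    V = Us[best] if best is not None else np.hstack(Us)
    return kmeans(V, k)


# Two views of the same six-node, two-group structure with different noise.
W1 = np.full((6, 6), 0.01)
W1[:3, :3] = 1.0
W1[3:, 3:] = 1.0
np.fill_diagonal(W1, 0.0)
W2 = np.full((6, 6), 0.2)
W2[:3, :3] = 0.8
W2[3:, 3:] = 0.8
np.fill_diagonal(W2, 0.0)
labels = cluster_snapshot([W1, W2], k=2)
```

In general the projected matrix can contain negative entries; the degree guard in `spectral_embedding` keeps the sketch from failing on them, but a production version would need an explicit policy for that case.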

As described above, our approach can accommodate multiple similarity measures. A further step is to follow the evolutionary clustering strategy so that the co-training method also preserves the historic information of the community structure. A general framework for evolutionary clustering defines the overall cost as a linear combination of two costs26:

where CS measures the snapshot quality of the current clustering result with respect to the current data features, and CT measures the goodness-of-fit of the current clustering result with respect to either historic data features or historic clustering results.

Here, we assume that the clusters at any snapshot should depend mainly on the current data and should not shift dramatically from one snapshot to the next. A better approximation to the inner product of the feature matrix and its transpose is then defined as

where α is the temporal penalty parameter that controls the relative weight of current and historic information. Because the approximation in Equation (5) is determined by both the current and the historic eigenvectors, the updated similarity $S_t^p$ defined in Equation (2) takes the history into account and produces stable, consistent clusters. As α increases, more weight is placed on the current information and less on the historic information. Algorithm 1 describes the MSSC algorithm in detail.
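As a hedged illustration, the sketch below assumes that the approximation in Equation (5) blends the current and historic eigenvector products as $\alpha U_t U_t^\top + (1-\alpha) U_{t-1} U_{t-1}^\top$, and substitutes that blend for the single-snapshot projection in the co-training update. This form and the function names are assumptions, not the authors' exact formulation.

```python
import numpy as np


def sym(S: np.ndarray) -> np.ndarray:
    """Symmetrization operator: (S + S^T) / 2."""
    return 0.5 * (S + S.T)


def smoothed_product(U_now: np.ndarray, U_prev: np.ndarray, alpha: float) -> np.ndarray:
    """Assumed form of the approximation: alpha * current + (1 - alpha) * history."""
    return alpha * (U_now @ U_now.T) + (1.0 - alpha) * (U_prev @ U_prev.T)


def temporal_update(W_p: np.ndarray, U_q_now: np.ndarray, U_q_prev: np.ndarray,
                    alpha: float) -> np.ndarray:
    """Co-training update for view p at snapshot t that also uses view q's history."""
    return sym(smoothed_product(U_q_now, U_q_prev, alpha) @ W_p)


rng = np.random.default_rng(0)
# A toy symmetric similarity matrix and two orthonormal eigenvector stand-ins (k = 2).
W_p = sym(rng.random((5, 5)))
U_now, _ = np.linalg.qr(rng.standard_normal((5, 2)))
U_prev, _ = np.linalg.qr(rng.standard_normal((5, 2)))
S_current_only = temporal_update(W_p, U_now, U_prev, alpha=1.0)
S_smoothed = temporal_update(W_p, U_now, U_prev, alpha=0.7)
```

Setting `alpha=1.0` recovers the history-free update, and smaller values pull the updated similarity towards the previous snapshot's structure, which matches the role of α described above.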

Determining α

We have now presented the proposed MSSC method. However, the temporal smoothing parameter α, which prevents the clustering result at any snapshot from deviating significantly from the result at the neighbouring snapshot, remains unknown. In many cases, this parameter is chosen according to the subjective preference of the user. To address this problem, Xu et al.49 presented a framework that adaptively estimates the optimal smoothing parameter using shrinkage estimation. In this section, we propose a different approach to adaptively estimating the parameter, which can be defined as

Note that α can be easily estimated because the quantities it depends on are known. In this model, more weight is placed on the current similarity, because the data should not shift dramatically between neighbouring snapshots. Furthermore, a large difference in W indicates a small α, so more information is taken from the past.

Changing community numbers

So far we have assumed that the number of communities k is fixed, which is a strong restriction on the application of our approach. In fact, our approach can handle variations in the number of communities. When the numbers of communities differ between two neighbouring snapshots, the approximation in Equation (5) is unaffected by the change, because its current and historic terms (each an n × n product of an eigenvector matrix with its transpose) do not depend on the number of communities.
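This reasoning can be checked numerically under the assumption that the current and historic terms in Equation (5) are products of an n × k eigenvector matrix with its transpose. Such a product is n × n whatever k is, so the two terms can be blended even when the number of communities changes. The eigenvector matrices below are random orthonormal stand-ins, not outputs of MSSC.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 6
# Stand-in eigenvector matrices: k = 2 communities at t-1, k = 3 at t.
U_prev, _ = np.linalg.qr(rng.standard_normal((n, 2)))
U_now, _ = np.linalg.qr(rng.standard_normal((n, 3)))
alpha = 0.8
# Both products are n x n, so they can be combined despite the change in k.
M = alpha * (U_now @ U_now.T) + (1 - alpha) * (U_prev @ U_prev.T)
print(U_prev.shape, U_now.shape, M.shape)  # prints (6, 2) (6, 3) (6, 6)
```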

Inserting and removing nodes

In many real-world networks, new nodes often join and existing nodes leave. Assume that at time t, old nodes are removed from the network and new nodes are inserted into it. We handle this with a heuristic solution that transforms the historic matrices $U_{t-1}$ and $W_{t-1}$ to the same dimensions as $U_t$ and $W_t$, respectively26. When old nodes are removed, we remove the corresponding rows from $U_{t-1}$ in Equation (5) to obtain $U'_{t-1}$ (assuming that $U'_{t-1}$ is n1 × k). When new nodes are inserted, we extend $U'_{t-1}$ to $U''_{t-1}$, which has the same dimensions as $U_t$ (assuming that $U_t$ is n2 × k). Then, $U''_{t-1}$ is defined as

For Equation (6), when old nodes are removed, we remove the corresponding rows and columns from $W_{t-1}$ to obtain $W'_{t-1}$ (assuming that $W'_{t-1}$ is n1 × n1). When new nodes are inserted, we add the corresponding rows and columns to obtain $W''_{t-1}$, which has the same dimensions as $W_t$ (assuming that $W_t$ is n2 × n2). $W''_{t-1}$ can be defined as

Additional Information

How to cite this article: Qin, X. et al. A multi-similarity spectral clustering method for community detection in dynamic networks. Sci. Rep. 6, 31454; doi: 10.1038/srep31454 (2016).

References

Girvan, M. & Newman, M. E. J. Community structure in social and biological networks. PNAS 99, 7821–7826 (2002).

Adamic, L. A. & Adar, E. Friends and neighbors on the web. Soc. Networks 25, 211–230 (2003).

Barabási, A. L. et al. Evolution of the social network of scientific collaborations. Physica A 311, 590–614 (2002).

Eckmann, J.-P. & Moses, E. Curvature of co-links uncovers hidden thematic layers in the World Wide Web. PNAS 99, 5825–5829 (2002).

Flake, G., Lawrence, S., Giles, C. & Coetzee, F. Self-organization and identification of Web communities. Computer 35, 66–71 (2002).

Ravasz, E., Somera, A. L., Mongru, D. A., Oltvai, Z. N. & Barabási, A. L. Hierarchical organization of modularity in metabolic networks. Science 297, 1551–1555 (2002).

Kernighan, B. W. & Lin, S. An efficient heuristic procedure for partitioning graphs. Bell Syst. Tech. J. 49, 291–307 (1970).

Barnes, E. R. An algorithm for partitioning the nodes of a graph. SIAM J. Algebra Discr. 3, 541–550 (1982).

Dempster, A. P., Laird, N. M. & Rubin, D. B. Maximum likelihood from incomplete data via the EM algorithm. J. Roy. Stat. Soc. B 39, 1–38 (1977).

Newman, M. E. J. Modularity and community structure in networks. P. Natl. Acad. Sci. 103, 8577–8582 (2006).

Von Luxburg, U. A tutorial on spectral clustering. Stat. Comput. 17, 395–416 (2007).

Rohe, K., Chatterjee, S. & Yu, B. Spectral clustering and the high-dimensional stochastic blockmodel. Ann. Statist. 39, 1878–1915 (2011).

Alvari, H., Hajibagheri, A. & Sukthankar, G. Community detection in dynamic social networks: A game-theoretic approach. In Advances in Social Networks Analysis and Mining (ASONAM), 2014 IEEE/ACM International Conference on, 101–107 (2014).

Fortunato, S. Community detection in graphs. Phys. Rep. 486, 75–174 (2010).

Asur, S., Parthasarathy, S. & Ucar, D. An event-based framework for characterizing the evolutionary behavior of interaction graphs. TKDD 3, 16:1–16:36 (2009).

Kumar, R., Novak, J., Raghavan, P. & Tomkins, A. On the bursty evolution of blogspace. 568–576 (ACM Press, 2003).

Kumar, R., Novak, J. & Tomkins, A. Structure and evolution of online social networks. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 611–617 (ACM, 2006).

Leskovec, J., Kleinberg, J. & Faloutsos, C. Graphs over time: Densification laws, shrinking diameters and possible explanations. In Proceedings of the Eleventh ACM SIGKDD International Conference on Knowledge Discovery in Data Mining 177–187 (ACM, 2005).

Lin, Y.-R., Sundaram, H., Chi, Y., Tatemura, J. & Tseng, B. L. Blog community discovery and evolution based on mutual awareness expansion. In Proceedings of the IEEE/WIC/ACM International Conference on Web Intelligence, 48–56 (IEEE Computer Society, 2007).

Palla, G., Barabási, A.-L. & Vicsek, T. Quantifying social group evolution. Nature 446, 664–667 (2007).

Spiliopoulou, M., Ntoutsi, I., Theodoridis, Y. & Schult, R. Monic: Modeling and monitoring cluster transitions. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 706–711 (ACM, 2006).

Toyoda, M. & Kitsuregawa, M. Extracting evolution of web communities from a series of web archives. In Proceedings of the Fourteenth ACM Conference on Hypertext and Hypermedia (eds Ashman, H., Brailsford, T. J., Carr, L. & Hardman, L. ) 28–37 (ACM, 2003).

Chakrabarti, D., Kumar, R. & Tomkins, A. Evolutionary clustering. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 554–560 (ACM, 2006).

Lin, Y.-R., Chi, Y., Zhu, S., Sundaram, H. & Tseng, B. L. Facetnet: A framework for analyzing communities and their evolutions in dynamic networks. In Proceedings of the 17th International Conference on World Wide Web, WWW ’08, 685–694 (ACM, 2008).

Kim, M.-S. & Han, J. A particle-and-density based evolutionary clustering method for dynamic networks. PVLDB 2, 622–633 (2009).

Chi, Y., Song, X., Zhou, D., Hino, K. & Tseng, B.-L. On evolutionary spectral clustering. ACM Trans. Knowl. Discov. Data 3, 17:1–17:30 (2009).

Srebro, N. How good is a kernel when used as a similarity measure. In Learning Theory: 20th Annual Conference on Learning Theory, COLT 2007, San Diego, CA, USA; June 13–15, 2007. Proceedings Vol. 4539 (eds Bshouty, N. H. & Gentile, C. ) 323–335 (Springer, 2007).

Kumar, A. & Daumé, H., III. A co-training approach for multi-view spectral clustering. In ICML (eds Getoor, L. & Scheffer, T.) 393–400 (Omnipress, 2011).

Blum, A. & Mitchell, T. Combining labeled and unlabeled data with co-training. In Proceedings of the Eleventh Annual Conference on Computational Learning Theory, 92–100 (ACM, 1998).

Lee, L. of referencing in Computer Science: Reflections on the Field, Reflections from the Field Ch. 6, 101–126 (The National Academies Press, 2004).

Greene, D., Doyle, D. & Cunningham, P. Tracking the evolution of communities in dynamic social networks. In Proceedings of the 2010 International Conference on Advances in Social Networks Analysis and Mining, 176–183 (IEEE Computer Society, 2010).

Lin, Y.-R., Chi, Y., Zhu, S., Sundaram, H. & Tseng, B. L. Analyzing communities and their evolutions in dynamic social networks. ACM Trans. Knowl. Discov. Data 3, 8:1–8:31 (2009).

Newman, M. E. J. & Girvan, M. Finding and evaluating community structure in networks. Phys. Rev. E 69, 026113 (2004).

Folino, F. & Pizzuti, C. An evolutionary multiobjective approach for community discovery in dynamic networks. IEEE T. Knowl. Data En. 26, 1838–1852 (2014).

Yang, T., Chi, Y., Zhu, S., Gong, Y. & Jin, R. Detecting communities and their evolutions in dynamic social networks: a Bayesian approach. Mach. Learn. 82, 157–189 (2010).

Karlsruhe Institute of Technology. Dynamic network of email communication at the Department of Informatics at Karlsruhe Institute of Technology (KIT). Available at: http://i11www.iti.uni-karlsruhe.de/en/projects/spp1307/emaildata (Accessed: August 2015) (2011).

Airoldi, E. M., Blei, D. M., Fienberg, S. E. & Xing, E. P. Mixed membership stochastic blockmodels. J. Mach. Learn. Res. 9, 1981–2014 (2008).

Rosvall, M. & Bergstrom, C. T. An information-theoretic framework for resolving community structure in complex networks. P. Natl. Acad. Sci. 104, 7327–7331 (2007).

Hofman, J. M. & Wiggins, C. H. Bayesian approach to network modularity. Phys. Rev. Lett. 100, 258701 (2008).

Chen, X. & Cai, D. Large scale spectral clustering with landmark-based representation. In AAAI (eds Burgard, W. & Roth, D. ) (AAAI Press, 2011).

Cai, D. Compressed spectral regression for efficient nonlinear dimensionality reduction. In IJCAI (eds Yang, Q. & Wooldridge, M. ) 3359–3365 (AAAI Press, 2015).

Cai, D. & Chen, X. Large scale spectral clustering via landmark-based sparse representation. IEEE T. Cybernetics 45, 1669–1680 (2015).

Liu, T.-Y., Yang, H.-Y., Zheng, X., Qin, T. & Ma, W.-Y. Fast large-scale spectral clustering by sequential shrinkage optimization. In ECIR, Vol. 4425 (eds Amati, G., Carpineto, C. & Romano, G.) 319–330 (Springer, 2007).

Yan, D., Huang, L. & Jordan, M. I. Fast approximate spectral clustering. In KDD (eds Elder, J. F., IV, Fogelman-Soulié, F., Flach, P. A. & Zaki, M.) 907–916 (ACM, 2009).

Shi, J. & Malik, J. Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 22, 888–905 (2000).

Bach, F. R. & Jordan, M. I. Learning spectral clustering. In NIPS (eds Thrun, S., Saul, L. K. & Schölkopf, B. ) 305–312 (MIT Press, 2003).

Fischer, B. & Buhmann, J. M. Path-based clustering for grouping of smooth curves and texture segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 25, 513–518 (2003).

Chang, H. & Yeung, D.-Y. Robust path-based spectral clustering. Pattern Recogn. 41, 191–203 (2008).

Xu, K. S., Kliger, M. & Hero, A. O., III. Adaptive evolutionary clustering. Data Min. Knowl. Discov. 28, 304–336 (2013).

Acknowledgements

This work was supported by the Major Project of the National Social Science Fund (14ZDB153) and the Major Research Plan of the National Natural Science Foundation (91224009, 51438009).

Author information

Contributions

X.Q. and P.J. conceived and designed the experiments; X.Q., P.J., W.D., W.W. and N.Y. analysed the results and reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Qin, X., Dai, W., Jiao, P. et al. A multi-similarity spectral clustering method for community detection in dynamic networks. Sci Rep 6, 31454 (2016). https://doi.org/10.1038/srep31454

This article is cited by

- A community detection algorithm based on multi-similarity method. Cluster Computing (2019)
- Detecting intrinsic communities in evolving networks. Social Network Analysis and Mining (2019)
- Critical analysis of (Quasi-)Surprise for community detection in complex networks. Scientific Reports (2018)
- MRHCA: a nonparametric statistics based method for hub and co‐expression module identification in large gene co‐expression network. Quantitative Biology (2018)

Figure 3: The dynamic co-training process. $W_t^p$ represents the similarity matrix at snapshot t; $S_t^p$ represents the new similarity matrix after dynamic co-training; $U_t^{-p}$ denotes the discriminative eigenvectors of the Laplacian matrices obtained from the 1st to sth similarity measures, excluding the pth.