Abstract

Super-resolution microscopy with phase masks is a promising technique for 3D imaging and tracking. Due to the complexity of the resulting point spread functions, generalized recovery algorithms are still missing. We introduce a 3D super-resolution recovery algorithm that works for a variety of phase masks generating 3D point spread functions. A fast deconvolution process generates initial guesses, which are further refined by least squares fitting. Overfitting is suppressed using a machine learning determined threshold. Preliminary results on experimental data show that our algorithm can be used to super-localize 3D adsorption events within a porous polymer film and is useful for evaluating potential phase masks. Finally, we demonstrate that parallel computation on graphics processing units can reduce the processing time required for 3D recovery. Simulations indicate that desktop parallelization can ultimately make real-time processing possible. Our program is the first open source program for generalized 3D recovery using rotating point spread functions.

Introduction

Single-molecule and super-resolution imaging1,2,3,4,5 have changed our understanding of biological processes6,7, dynamics at interfaces8,9,10,11,12 and the function of catalysts at the single-molecule level13,14,15. Molecular motors are now known to move hand-over-hand5,16. Stochastic ligand clusters have been shown to complicate chromatographic protein separations17,18. Cheaper, higher-resolution gene mapping has become accessible19,20. The inner workings of live bacteria have been unveiled21,22.

Underpinning each new piece of super-resolved knowledge are similarly revolutionary advances in image analysis algorithms. For example, using 2D Gaussian fitting instead of the center of mass is critical to achieve nanometer-scale resolution23. To speed up the analysis, fast fitting algorithms that address both mathematical analysis24,25 and computational resources26 have been proposed. Algorithms focused on high-density imaging, such as DAOSTORM27, compressed sensing interpolation28 and FALCON29, have inspired the development of super-resolution imaging with better time resolution. Today, we have a variety of sophisticated algorithms for recovery under different measurement conditions in 2D super-resolution imaging.

More recently, 3D super-resolution imaging has found increasing applications. Cells and organelles all have 3D structures30,31, and many biological processes32 and separation processes17 are inherently three-dimensional. There is a pressing need for 3D super-resolution techniques with time and space resolution comparable to those of 2D techniques. One approach is to scan over different z positions and record multiple 2D images; Ober's group demonstrated simultaneous imaging of multiple detection planes to follow 3D motion in living cells33. One advantage of such hardware-based methods is that the resulting images can be analyzed with 2D processing algorithms.

Another popular method is to encode the phase information (which is related to the z position of the emitter) in the intensity distribution by placing a cylindrical lens (astigmatism)30 or a phase mask34,35,36 in the detection path, so that 3D information is recorded in a single 2D image. Different phase masks generate different 3D point spread functions (PSFs), as shown in Supplementary Fig. S1 and references35,37,38,39. The advantages of astigmatism-based methods are that they are cheaper and have lower hardware requirements, making them accessible to a broader group of researchers. However, data analysis becomes a challenge because, for most phase-based measurements, the PSF cannot be approximated and fit by simple equations like the 2D Gaussian function40,41. Algorithms such as 3D DAOSTORM can analyze astigmatism-based 3D images42. 3D FALCON43 can analyze astigmatic images, biplane images, and hybrid images that combine the astigmatic and biplane methods. However, PSFs recorded in these types of 3D microscopy can be well fit by Gaussians or elliptical Gaussians, which is usually not possible for PSFs engineered with phase masks. Easy-DHPSF is a useful algorithm for analyzing 3D single-particle tracking data acquired with a double-helix phase mask41, but it requires the recorded PSFs not to overlap, which, as we discuss below, is a serious challenge for most 3D recovery algorithms. Barsic et al. introduced an algorithm to analyze such 3D imaging data44, but the algorithm is not open source and thus not generalizable. Despite the promise of phase mask-based 3D imaging and isolated successful implementations, there is still a need for algorithms that can analyze broad types of experimental images and provide reliable test data for comparing the performance of different phase masks.

A major challenge in phase mask-based 3D super-resolution imaging is recovering accurate 3D localizations over a range of analyte densities45,46,47. As mentioned earlier, when a phase mask is used, 3D information is projected onto 2D images as overlapping PSFs that are individually more complicated than a simple Gaussian. These overlapping PSFs can prevent accurate localization unless the excited emitters are sparsely distributed in space28,29. Extracting accurate 3D localizations at higher emitter densities is preferred, but higher densities make the subsequent image recovery more challenging. Therefore, both the accuracy and the processing efficiency of 3D super-resolution recovery algorithms are important in practice.

To address accuracy, precision and processing speed, we introduce a 3D super-resolution recovery algorithm for emitters imaged with arbitrary 3D phase masks that generate rotating PSFs. We use an algorithm based on the alternating direction method of multipliers (ADMM)48,49,50,51 to deconvolve the sample positions from the 3D measurement, which is a single 2D image with encoded 3D information. ADMM is a powerful and efficient algorithm for convex optimization49,52. Because the deconvolved positions lie on a discrete grid, we further improve the resolution by using a first-order Taylor expansion and least squares fitting to calculate corrections between the grid points29. Moreover, we apply a threshold determined by machine learning (ML) to reject false positive identifications. Thresholding based on ML makes use of features of the data that are difficult to capture by human observation53,54,55,56,57,58. In addition, we show how the recovery algorithm can be implemented on both a central processing unit (CPU) and a graphics processing unit (GPU). An affordable GPU increases processing speed by an order of magnitude, and further estimates show that a GPU array could enable real-time analysis of even dense phase mask data. To our knowledge, our algorithm is the first open source algorithm for 3D recovery using phase mask imaging. Finally, as a proof of concept, we demonstrate that our algorithm can be used to localize single molecules within the 3D structure of a porous polystyrene film.

Results and Discussions

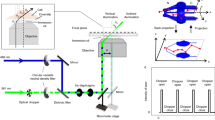

One common 3D super-resolution approach is to incorporate a 4f system into the detection path of a traditional wide-field microscope (Fig. 1). This 4f system consists of two identical lenses (L1 and L2) separated by twice their focal length, with a phase mask mounted in the focal plane between the two lenses35,59,60. This plane is called the Fourier plane and is the ideal location to manipulate the phase pattern in the detection path. The 4f system itself does not change the magnification. In the x and y dimensions, the magnification is:

$$M_{xy} = \frac{\Delta x_2}{\Delta x_1} = \frac{\mathrm{NA}\,f}{r} \qquad (1)$$

Schematic of our 3D super-resolution microscope using a phase mask.

FL is the focal lens. For a typical wide field microscope, the detector is placed at the focal point after FL. Lens 1 and Lens 2 are two identical lenses forming a 4f system. The phase mask is mounted in the Fourier plane, which is the center plane between Lens 1 and Lens 2. The detector is placed after the 4f system. The phase mask is made of transparent materials with different thicknesses generating different phase delays. The simulated phase mask pattern shown approximates the double helix phase mask reported elsewhere61 and the commercially available phase mask (Double Helix LLC) used in the experimental portions of the current work.

In the z dimension, the magnification is:

$$M_{z} = \frac{\Delta z_2}{\Delta z_1} = \frac{\mathrm{NA}^2 f^2}{n\,r^2} \qquad (2)$$

where Δx1 (Δx2) and Δz1 (Δz2) are the displacements in the x-y plane and in the z direction on the sample side (detector side), respectively; NA is the numerical aperture of the objective; n is the refractive index of the objective's working (immersion) medium; r is the effective beam radius, which should equal the radius of the phase mask; and f is the focal length of Lens 1 and Lens 2, as shown in Fig. 1.

For a rotating PSF, the orientation (or shape) of the PSF responds to a detector-side displacement Δz2 as $\Delta\phi \propto (r/f)^2\,\Delta z_2$, where Δϕ represents the orientation (or shape) change. Combining this with equation (2), we have $\Delta\phi \propto (\mathrm{NA}^2/n)\,\Delta z_1$. This means the orientation (or shape) response of a phase mask depends only on the objective. However, based on equation (1), the magnification of the PSF in x and y is inversely proportional to the ratio of beam radius to focal length, r/f.
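A short numerical check of this argument may help. The sketch below evaluates equations (1) and (2) in the paraxial forms M_xy = NA·f/r and M_z = NA²f²/(n r²), and the scaling Δϕ ∝ (r/f)²Δz2, all taken as assumptions for illustration rather than code from the paper. The objective (NA = 1.4, n = 1.518) and the 4f values of f and r are hypothetical.

```python
def magnifications(NA, n, r, f):
    """Lateral and axial magnification, eqs. (1) and (2) in paraxial form."""
    m_xy = NA * f / r                      # eq. (1): dx2 / dx1
    m_z = NA ** 2 * f ** 2 / (n * r ** 2)  # eq. (2): dz2 / dz1
    return m_xy, m_z


def rotation_per_sample_defocus(NA, n, r, f):
    """PSF rotation per unit sample-side defocus, up to a mask-dependent constant.

    Assumes dphi is proportional to (r / f)**2 * dz2, with dz2 = m_z * dz1.
    """
    _, m_z = magnifications(NA, n, r, f)
    return (r / f) ** 2 * m_z


# Two hypothetical 4f configurations (f and r in mm) behind the same objective.
for f, r in [(200.0, 2.8), (100.0, 2.8)]:
    m_xy, m_z = magnifications(1.4, 1.518, r, f)
    k = rotation_per_sample_defocus(1.4, 1.518, r, f)
    print(f"f={f:.0f} mm  M_xy={m_xy:.1f}  M_z={m_z:.0f}  rotation factor={k:.4f}")
```

Halving f halves the lateral magnification, while the rotation factor stays at NA²/n (about 1.29 here) for both configurations, which is the sense in which the rotation response depends only on the objective.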

The imaging process of this 3D microscope can be modeled as the convolution of a 3D PSF (such as the double-helix PSF shown in Fig. 2a) with emitters positioned in 3D (Fig. 2b), which generates overlapping 3D PSFs (Fig. 2c). The detector records only the 2D image at the z = 0 plane, as shown in Fig. 2d. This incomplete sampling of the imaging space makes the subsequent deconvolution for super-resolution recovery difficult.
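The forward model just described can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions: circular (periodic) convolution computed with the FFT, the z = 0 plane stored at index 0, and a hypothetical toy PSF of two Gaussian lobes that rotate by π/Nz per plane, standing in for a real double-helix PSF.

```python
import numpy as np


def toy_rotating_psf(n=32, nz=8, radius=3.0, sigma=1.0):
    """Hypothetical stand-in for a double-helix PSF: two Gaussian lobes that
    rotate by pi / nz per z plane. The origin sits at index [0, 0, 0]."""
    c = np.fft.fftfreq(n) * n                  # signed pixel coordinates 0, 1, ..., -1
    X, Y = np.meshgrid(c, c, indexing="ij")
    psf = np.zeros((n, n, nz))
    for k in range(nz):
        theta = np.pi * k / nz
        for s in (1.0, -1.0):                  # the two lobes sit on opposite sides
            x0, y0 = s * radius * np.cos(theta), s * radius * np.sin(theta)
            psf[:, :, k] += np.exp(-((X - x0) ** 2 + (Y - y0) ** 2) / (2 * sigma ** 2))
    return psf / psf[:, :, 0].sum()


def forward_model(emitters, psf):
    """3D circular convolution of PSF and emitter grid, keeping only the z = 0 plane."""
    volume = np.real(np.fft.ifftn(np.fft.fftn(psf) * np.fft.fftn(emitters)))
    return volume[:, :, 0]                     # what a detector at z = 0 records


psf = toy_rotating_psf()
scene = np.zeros_like(psf)
scene[10, 12, 0] = 1.0                         # emitter in the z = 0 plane
scene[20, 5, 3] = 1.0                          # emitter three grid planes away
image = forward_model(scene, psf)              # (32, 32) simulated frame
```

In the simulated frame the emitter at depth index 3 appears with its lobes rotated relative to the emitter at index 0; recovering that depth from a single 2D frame is the inverse problem addressed next.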

Illustration of the 3D imaging process with a double-helix phase mask (a–c) and the super-resolution recovery procedures (d–f).

(a) A double-helix 3D PSF is generated by a phase mask. Using this 3D PSF, the depth information of an emitter is indicated by the relative orientation of the two lobes in the x-y plane. (b) Two simulated emitters (dark green crosses) separated in 3D space. The dashed line is used to guide the eye. (c) The full 3D image space that results from a convolution of the double-helix PSF with the 3D positions of the two emitters. (d) A simulated CCD image that would result from placing a photodetector at the focal plane (z = 0 μm) of the image space described by the convolution of the double-helix PSF and the two emitters. (e) The recovered positions of the emitters in front view (x-y plane) on the grid, obtained with the ADMM algorithm. (f) The final recovered positions using least squares fitting and machine learning to avoid overfitting (magenta circles) compared with the ground truth (dark green crosses).

Recovering a super-resolution 3D image reduces to a convex optimization problem. We assume the emitter distribution (like Fig. 2b) is approximated by a 3D matrix x and the 3D PSF (like Fig. 2a) is represented by the 3D matrix A. We use a 2D matrix y to store our measured image (like Fig. 2d). To find x, we need to solve the optimization problem:

$$\begin{aligned} &\min_{x}\ \|x\|_1 \\ &\text{subject to}\ \ \|(A \otimes x)\,T - y\|_2 \le \varepsilon \end{aligned} \qquad (3)$$

in which ⊗ denotes convolution, T is a 1D vector and ε is the noise tolerance. The minimization is over x. The 3D matrix A ⊗ x is reshaped into a 2D matrix: the two horizontal dimensions become the first dimension and the z dimension becomes the second. The only non-zero element of T corresponds to the z = 0 plane of the convolved 3D matrix, so multiplying the reshaped 2D matrix by T selects the reconstructed image at z = 0, which can then be compared with the measured image y. In single-molecule experiments, the excited emitters in every recorded image are sparse28, and recent developments in compressive sensing and sparse sampling have demonstrated that minimizing the L1 norm can, under suitable conditions, recover sparse signals exactly28,62,63; this motivates the L1 objective in the first line of eq. (3). The constraint in the second line ensures agreement between the measured image and the recovered image. However, directly solving this optimization problem requires large amounts of memory and computation, making this 3D optimization problem infeasible on most personal computers.
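The role of T can be checked directly. In the sketch below (our illustration, assuming the z = 0 plane is stored at index 0 and an L2 norm in the constraint of eq. (3)), reshaping a volume to an (Nx·Ny) × Nz matrix and multiplying by a one-hot T returns the z = 0 slice. The helper `eq3_terms` is hypothetical; it evaluates the objective and the constraint residual for a candidate x and its convolution Ax.

```python
import numpy as np


def select_z0(volume, z0_index=0):
    """Apply T: reshape (Nx, Ny, Nz) to (Nx*Ny, Nz) and multiply by a one-hot vector."""
    nx, ny, nz = volume.shape
    T = np.zeros(nz)
    T[z0_index] = 1.0                   # the only non-zero element picks the z = 0 plane
    flat = volume.reshape(nx * ny, nz)  # rows: x-y pixels, columns: z planes
    return (flat @ T).reshape(nx, ny)


def eq3_terms(x, Ax, y, eps):
    """Return the L1 objective, the data residual, and whether the constraint holds."""
    residual = np.linalg.norm(select_z0(Ax) - y)   # L2 norm over all pixels
    return np.abs(x).sum(), residual, residual <= eps


rng = np.random.default_rng(0)
vol = rng.random((4, 5, 6))
assert np.allclose(select_z0(vol), vol[:, :, 0])   # T selects the z = 0 image
```

In practice the explicit reshape-and-multiply is equivalent to indexing the z = 0 plane, which is how an implementation would normally apply T.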

Recently published ADMM-based deconvolution algorithms50,51 break the optimization problem down into multiple sub-problems and use circular convolution to accelerate the computation and reduce the memory requirement. Based on variable splitting and Lagrange multipliers, the solution of the optimization problem (eq. 3) can be found by solving:

Here we use u0 (u1) to replace A ⊗ x (x) in the first (second) term and force them to be equal through the third (fourth) penalty term; η0, η1, μ and ν are related to the Lagrange multipliers (see ref. 49 for a complete treatment of Lagrange multipliers and ADMM). Following ref. 50, we use μ = 1 and ν = 20 for the best performance. The new optimization problem can be solved iteratively by updating one unknown at a time. In iteration k + 1, the unknowns are updated as follows:

where argmin denotes the argument of the minimum, i.e., the value of the variable that minimizes the expression. Equations (5), (6) and (7) can be solved explicitly without any inner iteration, which is the major reason for the state-of-the-art speed of this algorithm. Moreover, A ⊗ x can now be calculated efficiently using a fast Fourier transform (FFT), and variables such as x, η0 and η1 can be updated in the Fourier domain without an inverse Fourier transform, which further reduces the number of required operations. We use 1000 iterations for all analyses in this work. The sparsity of the solution is enforced by soft thresholding (eq. 6), which is the proximal operator of the L1 norm50,51,64. An ADMM algorithm can handle much larger images at a time than directly solving the convex optimization problem (eq. 3)28,44. However, solutions resulting from ADMM deconvolution lie on discrete grids and are vulnerable to overfitting. As shown in Fig. 2e, more than two emitters (corresponding to the bright pixels) are identified, meaning that the ADMM deconvolution overfits the data.
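To make the variable-splitting scheme concrete, the sketch below implements an ADMM loop with the same structure in NumPy. It is our illustration, not the authors' code: it solves the penalized form ½‖T u0 − y‖² + λ‖u1‖₁ with the splittings u0 = A ⊗ x and u1 = x, instead of the constrained eq. (3). The weight `lam`, the scaled dual variables `d0` and `d1` (playing the role of η0 and η1), the update order and the default 200 iterations are assumptions; μ = 1 and ν = 20 follow the values quoted above. Every step is closed form: the data step is element-wise because T only selects a plane, the sparsity step is soft thresholding, and the x step is a single division in the Fourier domain.

```python
import numpy as np


def soft_threshold(v, t):
    """Soft thresholding: the proximal operator of t * ||v||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def admm_recover(y, psf, lam=0.05, mu=1.0, nu=20.0, n_iter=200):
    """Recover a sparse (Nx, Ny, Nz) grid x from one 2D image y.

    y   : (Nx, Ny) measured image, taken as the z = 0 plane (index 0).
    psf : (Nx, Ny, Nz) 3D PSF with its origin at index [0, 0, 0] (circular convolution).
    """
    A_hat = np.fft.fftn(psf)
    mask = np.zeros(psf.shape)
    mask[:, :, 0] = 1.0          # T^T T: 1 on the z = 0 plane, 0 elsewhere
    y_pad = np.zeros(psf.shape)
    y_pad[:, :, 0] = y           # T^T y: the measurement placed in the z = 0 plane

    def conv(v):
        return np.real(np.fft.ifftn(A_hat * np.fft.fftn(v)))

    x = np.zeros(psf.shape)
    d0 = np.zeros(psf.shape)     # scaled dual for the constraint u0 = conv(A, x)
    d1 = np.zeros(psf.shape)     # scaled dual for the constraint u1 = x
    Ax = conv(x)
    for _ in range(n_iter):
        # u0 step: argmin 1/2||T u0 - y||^2 + mu/2||Ax - d0 - u0||^2 (element-wise)
        u0 = (y_pad + mu * (Ax - d0)) / (mask + mu)
        # u1 step: argmin lam||u1||_1 + nu/2||x - d1 - u1||^2 (soft thresholding)
        u1 = soft_threshold(x - d1, lam / nu)
        # x step: (mu A^T A + nu I) x = mu A^T (u0 + d0) + nu (u1 + d1), solved via FFT
        rhs = mu * np.conj(A_hat) * np.fft.fftn(u0 + d0) + nu * np.fft.fftn(u1 + d1)
        x = np.real(np.fft.ifftn(rhs / (mu * np.abs(A_hat) ** 2 + nu)))
        Ax = conv(x)
        # dual updates (scaled Lagrange multipliers)
        d0 = d0 - (Ax - u0)
        d1 = d1 - (x - u1)
    return u1                    # sparse estimate, still confined to the grid
```

The returned estimate lives on the discrete grid, which is why an off-grid refinement step such as the least squares fit described next is still needed.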

We further improve the resolution via least squares fitting and suppress overfitting using a machine learning determined threshold (Fig. 2f). In the deconvolution algorithm, the 3D PSF is approximated as a discrete 3D matrix. By adding the first-order Taylor expansion in the x, y and z directions, we can approximate the 3D PSF in continuous space29 and refine the positions of the emitters by solving the least squares problem:

$$\min_{\{I_i,\,dx_i,\,dy_i,\,dz_i\}} \left\| y - \sum_i I_i \left( H_i + \frac{\partial H_i}{\partial x}\,dx_i + \frac{\partial H_i}{\partial y}\,dy_i + \frac{\partial H_i}{\partial z}\,dz_i \right) \right\|_2^2$$

where Hi is the 2D PSF of emitter i in the imaging plane and ∂Hi/∂x, ∂Hi/∂y and ∂Hi/∂z are its first-order Taylor expansion terms in x, y and z; Ii is the intensity of emitter i; and dxi, dyi, dzi are the first-order displacements of emitter i in the x, y and z directions.
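Because each term is linear in Ii and in the products Ii·dxi, Ii·dyi and Ii·dzi, the refinement can be posed as a single linear least squares solve, in the spirit of the first-order approach of ref. 29. The sketch below is our illustration, not the authors' implementation: it assumes the on-grid PSF images Hi and their derivative images have already been computed and flattened to vectors, and the function name `refine_emitters` is hypothetical.

```python
import numpy as np


def refine_emitters(y, H, Hx, Hy, Hz):
    """First-order Taylor refinement of K on-grid emitters by linear least squares.

    y             : (P,) measured image flattened to P pixels.
    H, Hx, Hy, Hz : (K, P) PSF image of each emitter and its x, y, z derivative images.
    Returns intensities I and sub-grid displacements dx, dy, dz, each of shape (K,).
    """
    K = H.shape[0]
    # y ~ sum_i I_i H_i + (I_i dx_i) Hx_i + (I_i dy_i) Hy_i + (I_i dz_i) Hz_i is linear
    # in c = [I, I*dx, I*dy, I*dz], so one lstsq call solves for all emitters at once.
    design = np.concatenate([H, Hx, Hy, Hz], axis=0).T   # (P, 4K)
    c, *_ = np.linalg.lstsq(design, y, rcond=None)
    I = c[:K]
    return I, c[K:2 * K] / I, c[2 * K:3 * K] / I, c[3 * K:] / I


# Synthetic check with random (hypothetical) PSF and derivative images.
rng = np.random.default_rng(1)
K, P = 2, 400
H, Hx, Hy, Hz = (rng.random((K, P)) for _ in range(4))
I_true = np.array([2.0, 3.0])
dx_true, dy_true, dz_true = np.array([0.1, -0.2]), np.array([0.05, 0.0]), np.array([-0.3, 0.2])
y = sum(I_true[i] * (H[i] + dx_true[i] * Hx[i] + dy_true[i] * Hy[i] + dz_true[i] * Hz[i])
        for i in range(K))
I, dx, dy, dz = refine_emitters(y, H, Hx, Hy, Hz)
```

Since the synthetic data follow the linear model exactly, the fit returns the true intensities and displacements; with real images the first-order model is only accurate for displacements smaller than the grid spacing.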

Least squares fitting alone cannot distinguish true positive emitters from false positive emitters. Research in super-resolution recovery frequently focuses on the recall rate (the number of identified true positive emitters divided by the number of all true emitters) and less on the false positive rate (the number of identified false positive emitters divided by the number of all emitters identified by the algorithm). However, the false positive rate is equally, if not more, important: a lower recall rate can be compensated by measuring for longer, whereas a higher false positive rate can distort the true structure. Researchers usually use pre-selected thresholds (such as 5% of the highest intensity) to remove false positives. We instead use labeled data and ML to determine a more objective threshold based on multiple parameters (see SI for details). As shown in Fig. 3a, with this training-data-determined threshold, the recall rate decreases slightly, but the false positive rate decreases significantly.
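The two rates defined above can be computed as follows. Deciding whether a recovered emitter matches a true one requires a distance tolerance and a matching rule; the greedy nearest-neighbour matching and the tolerance used here are our assumptions for illustration, not the criteria described in the SI, and the positions in the example are arbitrary.

```python
import numpy as np


def recall_and_false_rate(true_pos, found_pos, tol):
    """Recall (%) and false positive rate (%) as defined in the text.

    true_pos, found_pos : (N, 3) and (M, 3) arrays of x, y, z positions.
    tol : matching distance in the same units; greedy nearest-neighbour
          matching and this tolerance are illustrative assumptions.
    """
    true_pos = np.asarray(true_pos, dtype=float)
    found_pos = np.asarray(found_pos, dtype=float)
    unmatched = np.ones(len(true_pos), dtype=bool)
    tp = 0
    for p in found_pos:
        if not unmatched.any():
            break                                   # every true emitter is already taken
        d = np.linalg.norm(true_pos - p, axis=1)
        d[~unmatched] = np.inf                      # each true emitter matches at most once
        j = int(np.argmin(d))
        if d[j] <= tol:
            unmatched[j] = False
            tp += 1
    fp = len(found_pos) - tp                        # unmatched detections are false positives
    recall = 100.0 * tp / len(true_pos)
    false_rate = 100.0 * fp / len(found_pos) if len(found_pos) else 0.0
    return recall, false_rate


truth = [[0, 0, 0], [1, 1, 1], [5, 5, 5]]
found = [[0.05, 0, 0], [1, 1, 1.02], [3, 3, 3], [9, 9, 9]]
recall, false_rate = recall_and_false_rate(truth, found, tol=0.1)
```

In this example two of the three true emitters are found (recall of about 66.7%) and two of the four detections are false (false positive rate of 50%), showing how the two metrics penalize different failure modes.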

Performance of the algorithm.

(a) Recall rate (recall %, left axis, red) and false positive rate (false %, right axis, blue) with or without the ML step. The standard deviation of each point is shown in Supplementary Fig. S3. (b) The standard deviation of the fitting error distribution in x (blue squares), y (green circles) and z (red diamonds). (c) Example recovery result for an image with 15 emitters (emitter density = 0.4 μm⁻²) in a 3D plot. The recovered and simulated true positions are indicated by magenta circles and cyan crosses, respectively. The simulated measured image is shown at the bottom of the 3D space. All the emitters are located with no overfitting. (d) Example recovery result for an image with 40 emitters (emitter density = 1.06 μm⁻²) in a 3D plot. There are many incorrect identifications, which can lead to misrepresentation of the sample.

Based on our simulations (simulation details are explained in the SI), the optimal emitter density in 3D imaging can be determined. As shown in Fig. 3a,b, as the emitter density increases, the recall rate decreases, the false positive rate increases and the fitting error in every dimension increases. The fitting error increases linearly with emitter density, whereas the recall rate decreases gradually once the emitter density exceeds 0.7 μm⁻². For emitter densities above 0.8 μm⁻², the false positive rate exceeds 10%, meaning a higher likelihood of identifying misleading structures. As illustrated in Fig. 3c, at lower emitter densities we can recover almost all of the emitters with no overfitting. At higher densities (Fig. 3d), false positive emitters are more likely to be identified. Based on this simulation, we suggest keeping the emitter density below 0.7 μm⁻² in 3D imaging measurements. Under this guideline, simulations show that we can correctly recover 3D structures with high labeling density at ~10 nm resolution (Supplementary Fig. S4). The emitter densities we chose fall within the range commonly used in super-resolution microscopy with visible light lasers65 and widely discussed in other works28,44. These general guidelines hold for a range of phase masks other than the double-helix (Supplementary Fig. S2). This simulation test on a different phase mask also validates the machine learning determined threshold and further demonstrates that our algorithm can be used to evaluate the performance of new phase mask designs. This program can be downloaded from our website: http://lrg.rice.edu/Content.aspx?id=96.
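As a quick consistency check on these numbers, the two Fig. 3 examples (15 emitters at 0.4 μm⁻² and 40 emitters at 1.06 μm⁻²) imply a simulated field of view of roughly 37.5 to 37.7 μm², and the suggested 0.7 μm⁻² limit caps such a field at about 26 emitters per frame. The helper names below are hypothetical.

```python
import math


def implied_area(n_emitters, density_per_um2):
    """Field of view (um^2) implied by an emitter count and an areal density."""
    return n_emitters / density_per_um2


def max_emitters(area_um2, density_limit=0.7):
    """Largest whole number of emitters that keeps the density at or below the limit."""
    return math.floor(area_um2 * density_limit)


print(implied_area(15, 0.4), implied_area(40, 1.06))   # field of view in um^2
print(max_emitters(implied_area(15, 0.4)))             # emitters per frame at 0.7 um^-2
```

The same two functions can be used to translate the density guideline into an expected number of active emitters for any camera field of view.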

Despite the efficiency and generality of our algorithm, more than one hour is required to analyze a 512 × 512 image on a typical personal computer with a standard CPU (Intel i7-4770, 3.40 GHz). To reduce the processing time, we need to parallelize the computation, which is readily done on a GPU. If an algorithm can break the problem down into many independent floating point operations, parallel computation on a GPU has been shown to accelerate processing by 10–100 times compared to computation on a high-end CPU26. A GPU runs thousands of threads concurrently, each performing independent floating point operations. Image processing is therefore an ideal application for GPUs: each pixel of the image can be assigned to a thread, and different threads can perform the same operations simultaneously.
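The thread-per-pixel idea can be illustrated without a GPU. In the sketch below (an illustration only; NumPy vectorization stands in for GPU threads, and the function names are hypothetical), the soft-thresholding update used in ADMM is written once as a per-pixel kernel applied in a serial loop and once as a whole-array operation. Because no pixel's result depends on any other pixel, the loop iterations could run in any order or all at once, which is what allows a GPU to assign one thread per pixel.

```python
import numpy as np


def soft_threshold_pixel(v, t):
    """Work done by one (hypothetical) GPU thread: update a single pixel value."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def per_pixel_loop(image, t):
    """Serial version: one iteration per pixel; no iteration reads another's result."""
    out = np.empty(image.shape)
    for idx in np.ndindex(image.shape):
        out[idx] = soft_threshold_pixel(image[idx], t)
    return out


def whole_array(image, t):
    """Data-parallel version of the same element-wise update."""
    return np.sign(image) * np.maximum(np.abs(image) - t, 0.0)


rng = np.random.default_rng(0)
img = rng.normal(size=(64, 64))
assert np.allclose(per_pixel_loop(img, 0.5), whole_array(img, 0.5))
```

The FFT steps do couple pixels, which is why they are delegated to a dedicated FFT library rather than written as per-pixel kernels.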

Computing on a GPU accelerates ADMM-based image deconvolution by an order of magnitude (Table 1). Operations in ADMM algorithms are primarily 3D FFTs and element-wise matrix operations, of which the 3D FFT is the most time-consuming. NVIDIA's parallel computing platform, the compute unified device architecture (CUDA), provides a library for FFTs on GPUs (http://www.nvidia.com/). We use this FFT library for fast convolution and deconvolution and distribute all other element-wise operations across millions of threads. Parallel computation speeds up our algorithm tenfold on a GeForce GTX 645 GPU (576 CUDA cores, 1 GB global memory), as shown in Supplementary Fig. S5 and Table 1. The achievable acceleration is limited by the number of CUDA cores, which determines how many threads are processed at a time, and by the amount of global memory, which limits how much data can be processed at a time. Usually, the number of CUDA cores is the only limiting factor. With a high-end GPU such as the NVIDIA Tesla K80 (4992 CUDA cores), the computation can be accelerated by an additional factor of ten (Table 1). In a typical single-molecule measurement, we record 1000 images in 30 s, and the data size is approximately 1 GB. The NVIDIA Tesla K80 has 24 GB of memory with 480 GB/s bandwidth, so the whole data set fits in memory and can be read in about 2 ms; memory therefore does not limit the computation. Consequently, data analysis speed increases linearly with the number of Tesla K80 cards used in parallel. Given the current development of parallel computation, one can envision that in the not-so-distant future, real-time analysis will become possible and affordable with parallel GPU processing.
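The real-time argument can be made quantitative with the numbers given above. The sketch below computes the per-frame time budget for 1000 frames in 30 s and the time for one pass over 1 GB at 480 GB/s, and, under the linear-scaling assumption stated in the text, how many devices a given per-frame processing time would require. The per-frame time passed to `gpus_needed` is a hypothetical input, not a value from Table 1, and both function names are our own.

```python
import math


def realtime_budget(n_frames=1000, acq_seconds=30.0, data_gb=1.0, bandwidth_gb_s=480.0):
    """Frame rate to keep up with, time available per frame, and time to read the data once."""
    frame_rate = n_frames / acq_seconds          # frames per second
    budget_s = 1.0 / frame_rate                  # processing time available per frame
    read_s = data_gb / bandwidth_gb_s            # one pass over the data at the quoted bandwidth
    return frame_rate, budget_s, read_s


def gpus_needed(seconds_per_frame, frame_rate):
    """Devices needed for real time, assuming throughput scales linearly with device count."""
    return math.ceil(seconds_per_frame * frame_rate)


rate, budget, read_time = realtime_budget()
print(f"{rate:.1f} frames/s, {budget * 1e3:.0f} ms per frame, {read_time * 1e3:.1f} ms per GB")
print(gpus_needed(seconds_per_frame=0.1, frame_rate=rate))   # 0.1 s/frame is hypothetical
```

With these defaults the budget is 30 ms per frame while a single pass over 1 GB takes about 2 ms, so memory bandwidth is not the bottleneck; the device count then depends entirely on the measured per-frame processing time.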

As an example, we apply our recovery algorithm to nanoscale 3D structures in porous polystyrene films (Fig. 4). Engineered polymer films are used in chemical and biological separations, and understanding analyte/film interactions has been of recent interest18. Relating the 3D morphology of polymer films to their separation efficiency might provide a means to produce films with improved separation performance8,11,17,66,67. Previous studies focused on dynamic interactions between analytes and clustered-charge ligands embedded in the support film, but also suggested that nanoscale heterogeneities in film structure are important17,44,63,65. The proof-of-concept analysis shown in Fig. 4 suggests that our algorithm can be combined with more complicated analytes and samples to directly relate nanoscale 3D film structure to dynamic interactions between the analyte and the film.

3D super-localization of 40 nm orange fluorescent bead adsorption onto porous polystyrene films.

Details about sample preparation can be found in the SI. (a) 3D localization of fluorescent beads on a porous polystyrene film with (b) correlated bright-field image of the corresponding area. The cross markers in (a,b) indicate the 2D positions of identified emitters. The color of each marker indicates the relative z position of each emitter, as shown in the corresponding color bar. The arrows highlight two emitters at different depths corresponding to positions within a pore and on the edge of a pore. (c) A dark-field image of the polystyrene film structure. The region studied in (a,b) is labelled in the green box. (d) 3D localization is demonstrated on another polystyrene film with a higher density of beads. The phase mask used to collect the experimental data was purchased from Double Helix LLC.

We prepared a porous polystyrene film and drop-cast orange fluorescent beads onto the film (see SI for details about sample preparation and data acquisition). As shown in Fig. 4a, the local depth of each bead was extracted. Figures 4b and 4c are bright-field and dark-field images, respectively, of the same region. As would be expected for a porous film, the distribution of super-localized bead depths suggests that some of the beads are within pores (e.g., highlighted by the white arrow) whereas others are on the film surface (e.g., highlighted by the yellow arrow). The depth localizations of the beads agree well with the underlying surface morphology of the porous film. Moreover, we also used our algorithm to analyze another porous film with a higher density of beads (Fig. 4d), supporting that our algorithm can perform 3D super-localization and super-resolution even when the complex PSFs generated by phase masks overlap.

Conclusion

We have demonstrated via simulation that our new algorithm can recover 3D super-resolution images measured by a 3D microscope with a phase mask in the Fourier plane. In developing our algorithm, we leveraged state-of-the-art techniques in signal processing and optimization, including ADMM and machine learning, as well as advanced computational resources, achieving high recovery performance with computations completed in a few seconds via GPU processing. Our algorithm could play an important role in future data processing tasks, including performance testing for new phase mask development. Our current algorithm still requires a good match between the experimental PSF and the simulated PSF, and motion blur in 3D single-molecule tracking and complicated backgrounds in imaging will affect its performance. In future work, we will further improve our algorithm for more complicated experimental conditions.

Additional Information

How to cite this article: Shuang, B. et al. Generalized recovery algorithm for 3D super-resolution microscopy using rotating point spread functions. Sci. Rep. 6, 30826; doi: 10.1038/srep30826 (2016).

References

Rust, M. J., Bates, M. & Zhuang, X. Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM). Nat. Methods 3, 793–795 (2006).

Betzig, E. et al. Imaging intracellular fluorescent proteins at nanometer resolution. Science 313, 1642–1645 (2006).

Klar, T. A., Jakobs, S., Dyba, M., Egner, A. & Hell, S. W. Fluorescence microscopy with diffraction resolution barrier broken by stimulated emission. Proc. Natl Acad. Sci. USA 97, 8206–8210 (2000).

Moerner, W. & Kador, L. Optical detection and spectroscopy of single molecules in a solid. Phys. Rev. Lett. 62, 2535–2538 (1989).

Yildiz, A. et al. Myosin V walks hand-over-hand: single fluorophore imaging with 1.5-nm localization. Science 300, 2061–2065 (2003).

Wedeking, T. et al. Single Cell GFP-Trap Reveals Stoichiometry and Dynamics of Cytosolic Protein Complexes. Nano Lett. 15, 3610–3615 (2015).

Oswald, F., L. M. B., E., Bollen, Y. J. & Peterman, E. J. Imaging and quantification of trans-membrane protein diffusion in living bacteria. Phys. Chem. Chem. Phys. 16, 12625–12634 (2014).

Wang, D. et al. Scaling of Polymer Dynamics at an Oil-Water Interface in Regimes Dominated by Viscous Drag and Desorption-Mediated Flights. J. Am. Chem. Soc. 137, 12312–12320 (2015).

McUmber, A. C., Randolph, T. W. & Schwartz, D. K. Electrostatic Interactions Influence Protein Adsorption (but Not Desorption) at the Silica-Aqueous Interface. J. Phys. Chem. Lett. 6, 2583–2587 (2015).

McUmber, A. C., Larson, N. R., Randolph, T. W. & Schwartz, D. K. Molecular trajectories provide signatures of protein clustering and crowding at the oil/water interface. Langmuir 31, 5882–5890 (2015).

Kastantin, M., Langdon, B. B. & Schwartz, D. K. A bottom-up approach to understanding protein layer formation at solid-liquid interfaces. Adv. Colloid Interface Sci. 207, 240–252 (2014).

Wöll, D., Kölbl, C., Stempfle, B. & Karrenbauer, A. A novel method for automatic single molecule tracking of blinking molecules at low intensities. Phys. Chem. Chem. Phys. 15, 6196–6205 (2013).

Upadhyay, S. P. et al. Fluorescent Dendrimeric Molecular Catalysts Demonstrate Unusual Scaling Behavior at the Single-Molecule Level. J. Phys. Chem. C 119, 19703–19714 (2015).

Andoy, N. M. et al. Single-molecule catalysis mapping quantifies site-specific activity and uncovers radial activity gradient on single 2D nanocrystals. J. Am. Chem. Soc. 135, 1845–1852 (2013).

Sambur, J. B. & Chen, P. Approaches to single-nanoparticle catalysis. Annu. Rev. Phys. Chem. 65, 395–422 (2014).

Purcell, T. J., Morris, C., Spudich, J. A. & Sweeney, H. L. Role of the lever arm in the processive stepping of myosin V. Proc. Natl. Acad. Sci. USA 99, 14159–14164 (2002).

Kisley, L. et al. Unified superresolution experiments and stochastic theory provide mechanistic insight into protein ion-exchange adsorptive separations. Proc. Natl. Acad. Sci. USA 111, 2075–2080 (2014).

Mabry, J. N., Skaug, M. J. & Schwartz, D. K. Single-molecule insights into retention at a reversed-phase chromatographic interface. Anal. Chem. 86, 9451–9458 (2014).

Neely, R. K., Deen, J. & Hofkens, J. Optical mapping of DNA: single-molecule-based methods for mapping genomes. Biopolymers 95, 298–311 (2011).

Chen, J., Bremauntz, A., Kisley, L., Shuang, B. & Landes, C. F. Super-Resolution mbPAINT for Optical Localization of Single-Stranded DNA. ACS Appl. Mater. Interfaces 5, 9338–9343 (2013).

Tuson, H. H. & Biteen, J. S. Unveiling the inner workings of live bacteria using super-resolution microscopy. Anal. Chem. 87, 42–63 (2015).

Lenhart, J. S., Pillon, M. C., Guarne, A., Biteen, J. S. & Simmons, L. A. Mismatch repair in Gram-positive bacteria. Res. Microbiol. (2015).

Cheezum, M. K., Walker, W. F. & Guilford, W. H. Quantitative Comparison of Algorithms for Tracking Single Fluorescent Particles. Biophys. J. 81, 2378–2388 (2001).

Parthasarathy, R. Rapid, accurate particle tracking by calculation of radial symmetry centers. Nat. Methods 9, 724–726 (2012).

Shuang, B., Chen, J., Kisley, L. & Landes, C. F. Troika of single particle tracking programing: SNR enhancement, particle identification and mapping. Phys. Chem. Chem. Phys. 16, 624–634 (2014).

Smith, C. S., Joseph, N., Rieger, B. & Lidke, K. A. Fast, single-molecule localization that achieves theoretically minimum uncertainty. Nat. Methods 7, 373–375 (2010).

Holden, S. J., Uphoff, S. & Kapanidis, A. N. DAOSTORM: an algorithm for high-density super-resolution microscopy. Nat. Methods 8, 279–280 (2011).

Zhu, L., Zhang, W., Elnatan, D. & Huang, B. Faster STORM using compressed sensing. Nat. Methods 9, 721–723 (2012).

Min, J. et al. FALCON: fast and unbiased reconstruction of high-density super-resolution microscopy data. Sci. Rep. 4, 4577 (2014).

Huang, B., Wang, W., Bates, M. & Zhuang, X. Three-dimensional super-resolution imaging by stochastic optical reconstruction microscopy. Science 319, 810–813 (2008).

Chen, B. C. et al. Lattice light-sheet microscopy: imaging molecules to embryos at high spatiotemporal resolution. Science 346, 1257998 (2014).

Lakadamyali, M., Babcock, H., Bates, M., Zhuang, X. & Lichtman, J. 3D multicolor super-resolution imaging offers improved accuracy in neuron tracing. PLoS One 7, e30826 (2012).

Prabhat, P., Ram, S., Ward, E. S. & Ober, R. J. Simultaneous imaging of different focal planes in fluorescence microscopy for the study of cellular dynamics in three dimensions. IEEE Trans. Nanobiosci. 3, 237–242 (2004).

Pavani, S. R. et al. Three-dimensional, single-molecule fluorescence imaging beyond the diffraction limit by using a double-helix point spread function. Proc. Natl. Acad. Sci. USA 106, 2995–2999 (2009).

Shechtman, Y., Weiss, L. E., Backer, A. S., Sahl, S. J. & Moerner, W. E. Precise Three-Dimensional Scan-Free Multiple-Particle Tracking over Large Axial Ranges with Tetrapod Point Spread Functions. Nano Lett. 15, 4194–4199 (2015).

Moerner, W. E., Shechtman, Y. & Wang, Q. Single-molecule spectroscopy and imaging over the decades. Faraday Discuss. 184, 9–36 (2015).

Backlund, M. P. et al. Simultaneous, accurate measurement of the 3D position and orientation of single molecules. Proc. Natl. Acad. Sci. USA 109, 19087–19092 (2012).

Pavani, S. R. & Piestun, R. High-efficiency rotating point spread functions. Opt. Express 16, 3484–3489 (2008).

Prasad, S. Rotating point spread function via pupil-phase engineering. Opt. Lett. 38, 585–587 (2013).

Lew, M. D. & Moerner, W. E. Azimuthal Polarization Filtering for Accurate, Precise and Robust Single-Molecule Localization Microscopy. Nano Lett. 14, 6407–6413 (2014).

Lew, M. D., von Diezmann, A. R. S. & Moerner, W. E. Easy-DHPSF open-source software for three-dimensional localization of single molecules with precision beyond the optical diffraction limit. Protoc. Exch. 2013, 026 (2013).

Babcock, H., Sigal, Y. M. & Zhuang, X. A high-density 3D localization algorithm for stochastic optical reconstruction microscopy. Opt. Nanoscopy 1, 1–10 (2012).

Min, J. et al. 3D high-density localization microscopy using hybrid astigmatic/biplane imaging and sparse image reconstruction. Biomed. Opt. Express 5, 3935–3948 (2014).

Barsic, A., Grover, G. & Piestun, R. Three-dimensional super-resolution and localization of dense clusters of single molecules. Sci. Rep. 4, 5388 (2014).

Welsher, K. & Yang, H. Multi-resolution 3D visualization of the early stages of cellular uptake of peptide-coated nanoparticles. Nat. Nanotechnol. 9, 198–203 (2014).

Moerner, W. E., Shechtman, Y. & Wang, Q. Single-molecule spectroscopy and imaging over the decades. Faraday Discuss. 184, 9–36 (2015).

Welsher, K. & Yang, H. Imaging the behavior of molecules in biological systems: breaking the 3D speed barrier with 3D multi-resolution microscopy. Faraday Discuss. 184, 359–379 (2015).

Gabay, D. & Mercier, B. A dual algorithm for the solution of nonlinear variational problems via finite element approximation. Comput. Math. Appl. 2, 17–40 (1976).

Boyd, S., Parikh, N., Chu, E., Peleato, B. & Eckstein, J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers. Found. Trends Mach. Learn. 3, 1–122 (2011).

Almeida, M. S. C. & Figueiredo, M. A. T. Deconvolving Images With Unknown Boundaries Using the Alternating Direction Method of Multipliers. IEEE Trans. Image Process. 22, 3074–3086 (2013).

Matakos, A., Ramani, S. & Fessler, J. A. Accelerated Edge-Preserving Image Restoration Without Boundary Artifacts. IEEE Trans. Image Process. 22, 2019–2029 (2013).

Afonso, M. V., Bioucas-Dias, J. M. & Figueiredo, M. A. T. Fast Image Recovery Using Variable Splitting and Constrained Optimization. IEEE Trans. Image Process. 19, 2345–2356 (2010).

James, G., Witten, D., Hastie, T. & Tibshirani, R. An Introduction to Statistical Learning with Applications in R. (Springer, 2014).

Joshi, A. J., Porikli, F. & Papanikolopoulos, N. in IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2009) 2372–2379 (2009).

Harchaoui, Z. & Bach, F. in IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2007) 1–8 (2007).

Guillaumin, M., Verbeek, J. & Schmid, C. in IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2010) 902–909 (2010).

Wernick, M. N., Yang, Y., Brankov, J. G., Yourganov, G. & Strother, S. C. Machine Learning in Medical Imaging. IEEE Signal Process. Mag. 27, 25–38 (2010).

Guzella, T. S. & Caminhas, W. M. A review of machine learning approaches to Spam filtering. Expert Syst. Appl. 36, 10206–10222 (2009).

Grover, G., Quirin, S., Fiedler, C. & Piestun, R. Photon efficient double-helix PSF microscopy with application to 3D photo-activation localization imaging. Biomed. Opt. Express 2, 3010–3020 (2011).

Jia, S., Vaughan, J. C. & Zhuang, X. W. Isotropic three-dimensional super-resolution imaging with a self-bending point spread function. Nat. Photonics 8, 302–306 (2014).

Pavani, S. R., DeLuca, J. G. & Piestun, R. Polarization sensitive, three-dimensional, single-molecule imaging of cells with a double-helix system. Opt. Express 17, 19644–19655 (2009).

Duarte, M. F. et al. Single-pixel imaging via compressive sampling. IEEE Signal Process. Mag. 25, 83–91 (2008).

Candes, E. J. & Wakin, M. B. An introduction to compressive sampling. IEEE Signal Process. Mag. 25, 21–30 (2008).

Sorel, M. Removing Boundary Artifacts for Real-Time Iterated Shrinkage Deconvolution. IEEE Trans. Image Process. 21, 2329–2334 (2012).

Mukamel, E. A., Babcock, H. & Zhuang, X. Statistical deconvolution for superresolution fluorescence microscopy. Biophys. J. 102, 2391–2400 (2012).

Tauzin, L. J. et al. Charge-dependent transport switching of single molecular ions in a weak polyelectrolyte multilayer. Langmuir 30, 8391–8399 (2014).

Regnier, F. High-performance liquid chromatography of biopolymers. Science 222, 245–252 (1983).

Acknowledgements

We thank the Welch Foundation (C-1787) and National Science Foundation (CHE-1151647) for support of this work. Special thanks also go to L. Kisley, C.P. Byers, B. Hoener and S. Indrasekara for their thoughts and discussions as well as S. Link and his research group for their feedback on this project.

Author information

Contributions

B.S. and W.W. designed and implemented the algorithm; H.S. provided the experimental tests; B.S., H.S. and C.F.L. wrote the manuscript; L.J.T., C.F., J.C., N.A.M., L.D.C.B. and K.F.K. commented on the results and the manuscript. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Shuang, B., Wang, W., Shen, H. et al. Generalized recovery algorithm for 3D super-resolution microscopy using rotating point spread functions. Sci Rep 6, 30826 (2016). https://doi.org/10.1038/srep30826

DOI: https://doi.org/10.1038/srep30826