Abstract

Belief networks represent a powerful approach to problems involving probabilistic inference, but much of the work in this area is software-based, utilizing standard deterministic hardware built on the transistor, which provides the gain and directionality needed to interconnect billions of them into useful networks. This paper proposes a transistor-like device that could provide an analogous building block for probabilistic networks. We present two proof-of-concept examples of belief networks, one reciprocal and one non-reciprocal, implemented using the proposed device, which is simulated using experimentally benchmarked models.


Introduction

Probabilistic computing is a thriving field of computer science and mathematics and is widely viewed as a powerful approach for tackling the daunting problems of searching, detection and inference posed by the ever increasing amount of “big data”1,2,3,4,5,6,7,8,9,10,11. Much of this work, however, is software-based, utilizing standard general purpose hardware that is based on high precision deterministic logic12. The building block for this standard hardware is the ubiquitous transistor which has the key properties of gain and directionality that allow billions of them to be interconnected to perform complex tasks. This paper proposes a transistor-like device that could provide an analogous building block for probabilistic logic.

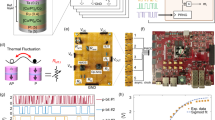

A number of authors13,14,15 have recognized that the physics of nanomagnets can be exploited for stochastic logic and natural random number generators to replace the complex circuitry that is normally used. However, these are individual stochastic circuit elements within the standard framework of complementary metal oxide semiconductor (CMOS) transistors, which provide the necessary gain and directionality. By contrast, what we are proposing in this paper are networks constructed out of magnet-based stochastic devices that have been individually engineered to provide transistor-like gain and directionality so that they can be used to construct large scale circuits without external transistors (Fig. 1a).

This paper defines a transynapse that can be interconnected to build probabilistic networks as shown schematically.

The next section describes a specific transynapse design based on experimentally benchmarked models, which are then used to illustrate the use of transynapse networks to solve problems involving Boltzmann machines and Bayesian networks.

Feynman (1982) alluded to a probabilistic computer based on probabilistic hardware that could efficiently solve problems involving classical probability, contrasting it with a quantum computer based on quantum hardware that could efficiently solve quantum problems. This paper inspired much work on quantum computing, but we would like to draw attention to his description of a probabilistic computer: “… the other way to simulate a probabilistic nature, which I’ll call N .. is by a computer C which itself is probabilistic, .. in which the output is not a unique function of the input. … it simulates nature in this sense: that C goes from some .. initial state .. to some final state with the same probability that N goes from the corresponding initial state to the corresponding final state. … If you repeat the same experiment in the computer a large number of times … it will give the frequency of a given final state proportional to the number of times, with approximately the same rate … as it happens in nature.” The possibility of probabilistic computing machines has also been addressed by more recent authors16,17,18,19,20,21,22. The primary purpose of this paper is to introduce the concept of a ‘transynapse’, a device that can be interconnected in large numbers to build probabilistic computers (Fig. 1).

The transynapse combines a synapse-like function with a transistor-like gain and directionality and in the next section we describe a device that uses the established physics of nanomagnets to implement it. We present a specific design for the transynapse which is simulated using experimentally benchmarked models (Supplementary section 1) for established phenomena to demonstrate the stochastic sigmoid transfer function. Reciprocal and non-reciprocal networks are discussed next where the same models are used to show how transynapses can be used to build either of two fundamentally different class of networks, an Ising like network with symmetric interactions and a non-Ising network with directed interactions. We then present two proof-of-concept examples of belief networks, one reciprocal for Boltzmann machines and the other non-reciprocal for Bayesian networks, implemented using transynapses.

Transynapse: The Building Block

The transynapse consists of a WRITE unit and a READ unit (Figs 1 and 2), electrically isolated from each other. The WRITE unit of transynapse Ti sums a set of input signals IIN,j(t), integrates them over a characteristic time scale τr to obtain a quantity

Design for a transynapse: (a) Device structure: For our simulations we use the same design as that in Datta et al.23 (see also refs 32,33), which provides the required gain, fan-in and fan-out, making use of the established physics of the spin Hall effect (SHE) for the input and the magnetic tunnel junction (MTJ) for the output. However, instead of the deterministic mode described earlier, we operate it in a probabilistic mode as described next. (b) The WRITE and READ magnets are both initialized along their hard axis and allowed to relax in the presence of an exponentially decaying current IIN(t) (see inset) with decay time parameter τdec. The outputs obtained from a statistical average of 100 Monte Carlo runs for different input currents all fall on a single universal curve when plotted against Q, the weighted time-integrated input current. The dashed curves 1 and 2 are obtained for nanomagnets with energy barriers 48 kBT and 12 kBT respectively and are described well by Eq. 4.

which determines the mean value of state S of the device through a sigmoidal function of the form

where I0 is a characteristic current. The READ unit produces multiple weighted output currents proportional to the average state of the device:

In this paper we use a specific design for this device following that described in Datta et al.23 which uses an input WRITE unit magnetically coupled to, but electrically isolated from an output READ unit. It provides the required gain, fan-in and fan-out, making use of experimentally benchmarked models (Supplementary section 1) for the established physics of the spin Hall effect (SHE) for the input and the magnetic tunnel junction (MTJ) for the output. However, for transynapse operation, we need to operate it in a probabilistic mode not considered before, where the input and output are not deterministic variables but stochastic ones. This could be done by using nanomagnets with low energy barriers (Eb < 5 kBT) that are in the super paramagnetic24 regime considering long enough programming times. The output (y-axis in Fig. 2b) could then be interpreted as the time averaged magnetization of a single magnet along its easy axis. However, in this paper we use a different approach as explained below.

For the simulations presented in this paper, nano-magnets are initialized along their hard axis at t = 0 and then allowed to relax. The output is obtained from a statistical average of the magnetization Mz along the easy axis obtained from 100 Monte Carlo runs based on the stochastic Landau-Lifshitz-Gilbert (LLG) equation, one for each magnet (WRITE and READ) coupled through a magnetic interaction as in ref. 23. The details are described in the methods section. Figure 2b shows that the numerically obtained average Mz is described well by the relation

where τr = fT(1 + α²)/(2αγHk), Hk being the anisotropy field, γ the gyromagnetic ratio and α the damping parameter. The characteristic current I0 scales with Ic, the switching current for the nanomagnet25, through the factor η, which depends on the energy barrier Eb and is given by the relation η ≈ 0.06(Eb/kBT)^0.94 obtained from numerical simulations. The factor fT (6 for Eb = 48 kBT and 24 for Eb = 12 kBT) determines how fast the magnetization relaxes depending on the ambient temperature. The results were obtained using different exponentially decaying input currents (Fig. 2b inset), but the resulting output is well described by a single curve Mz(Q) irrespective of the amplitude It0 and decay time parameter τdec. This independence from the time-decay parameters suggests that the probability of finalizing a magnet in one of the two states essentially depends on the number (Ns where Q ∝ Ns) of Bohr magnetons (units of electron spin) imparted on it26. Indeed, similar underlying principles have been demonstrated experimentally in ref. 27 in somewhat different setups for switching magnets from one state to the other in the short pulse regime well above Ic.
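The two closed-form expressions quoted in this paragraph are easy to evaluate numerically. The following is a minimal Python sketch, not part of the original work: the function names and the sample values of α, γ and Hk in the usage section are illustrative assumptions, while the fitted relation for η and the expression for τr are taken from the text.

```python
def eta(barrier_in_kT):
    """Fitted factor eta ~ 0.06 * (Eb / kBT)**0.94 quoted in the text."""
    return 0.06 * barrier_in_kT ** 0.94


def tau_r(f_T, alpha, gamma, H_k):
    """Relaxation time tau_r = f_T * (1 + alpha^2) / (2 * alpha * gamma * H_k)."""
    return f_T * (1.0 + alpha ** 2) / (2.0 * alpha * gamma * H_k)


if __name__ == "__main__":
    # (Eb / kBT, f_T) pairs quoted in the text for curves 1 and 2.
    for barrier, f_T in [(48, 6), (12, 24)]:
        # alpha, gamma (rad s^-1 T^-1) and H_k (tesla) are hypothetical
        # sample values chosen only to make the sketch runnable.
        t = tau_r(f_T, alpha=0.01, gamma=1.76e11, H_k=0.1)
        print(f"Eb = {barrier} kBT: eta = {eta(barrier):.3f}, tau_r = {t:.3e} s")
```

With these definitions, η comes out near 2.28 for the 48 kBT barrier and near 0.62 for the 12 kBT barrier; the τr values depend entirely on the assumed material parameters.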

It is important to note the key attributes of the device that are needed to enable the construction of belief networks by interconnecting hundreds of devices. Firstly, it is important to ensure input-output isolation, which is achieved by having magnetically coupled WRITE and READ magnets separated by an insulator as shown. This separation would not be needed if the magnet itself were insulating (like YIG, yttrium iron garnet). The second important attribute is its gain, defined as the maximum output charge current relative to the minimum charge current needed to swing the probability from 0.5 (fully stochastic) to 1 (fully deterministic). This is the quantity that determines the maximum possible fan-out, which is particularly important if we want a high degree of interconnectivity. The physics of SHE28,29,30,31 helps provide gain since each device can be designed23,32,33 to provide more spin current to the WRITE magnet than the charge current provided by the READ unit of the preceding stage.

The third attribute of the proposed device is its ability to sum multiple inputs and this can be done conveniently since it is current-driven. A WRITE circuit consisting of a SHE metal like Tantalum provides a current-driven low impedance input, different from the voltage-driven high input impedance field-effect transistors (FET’s). The low input impedance ensures that the total current into the WRITE unit is determined by the output impedance of the READ units of preceding stages23,32,33. This impedance is set by the intrinsic resistance of the READ units which could be on the order of a kΩ if using magnetic tunnel junctions (MTJ’s) or could be much lower if using the inverse spin Hall effect (ISHE)34. In either case an external series resistor R could be used to raise the output impedance as shown in Fig. 2a.

VDD being the external voltage, RP and RAP, the parallel and anti-parallel resistance of the MTJ, RIN, the input resistance of the next device and Rj is the external series resistance which can be used to weight the outputs appropriately. The weighting of the output can also be accomplished by tuning VDD where multiple bipolar output weights sharing the same input can be implemented via a common WRITE unit with multiple READ units (An example of this is shown in the Bayesian network section)35.

We envision that the detailed physics used to implement the transynapse will evolve, especially the physics used for the WRITE, the READ and/or the weighting, since this field is in a stage of rapid development with new discoveries being reported on a regular basis. The input (or WRITE) circuit could utilize phenomena other than the SHE used here, just as the output (or READ) circuit could use mechanisms other than MTJ’s. Similarly, the nanomagnet can be initialized in a neutral state with modern voltage driven mechanisms36,37 like voltage controlled anisotropy, or with established methods like an external magnetic field38,39 or spin torque30,40,41, or thermal assistance42. Alternatively, as mentioned earlier, nanomagnets in the super paramagnetic24 regime could be used with the mean state defined by a time average instead of an ensemble average. The purpose of this manuscript is simply to establish the general concept of a transynapse that integrates a synapse-like behavior with a transistor-like gain and isolation, thus permitting the construction of compact large scale belief networks.

Note also that our transynapses are assumed to communicate via charge current since that is a well-established, robust form of communication. However, communication could instead take place through spin channels (as in all-spin logic40,43) or through spin waves, which would require very different WRITE and READ units.

Reciprocal and Non-Reciprocal Networks

A key feature of the transynapse is the flexibility it affords in adjusting the weight wji that determines the influence of one transynapse (Ti) on another (Tj), by adjusting the parameters of the READ unit of Ti. The weight wij, on the other hand, is controlled independently through the READ unit of Tj. If we choose wij = wji, we have a bidirectional or reciprocal network similar to the type described by an Ising model with a Hamiltonian H. In such networks the probability Pn of a specific configuration n is known to be given by the principles of equilibrium statistical mechanics.

where the energy En of configuration n is given by

Ising models are closely related to Boltzmann machines1,2,5,6,10,11, whose probabilities, described by Eq. 6, favor configurations with low En. For example, with three transynapses connected through wij = wji > 0, En is minimized for the configuration (111) and its all-reversed counterpart (1̄1̄1̄), in which all si are equal. This is the ferromagnetic (FM) Ising model. But if wij = wji < 0, En would be a minimum if every pair of si had opposite signs. Since this is impossible with three transynapses, the energy is lowest for all six configurations that have one ‘frustrated’ pair44,45:

The numerical simulation of the 3-transynapse network shows (Fig. 3a) this expected behavior, with equal probabilities for configurations A, B, C and reduced probabilities for the two remaining configurations, (111) and (1̄1̄1̄), for which all three pairs are frustrated. The situation is different when one of the bonds is directed as in Fig. 3b. Not surprisingly, the probability is highest for the configuration having T2 and T3 as the frustrated pair (configuration A in Eq. 8). Less obviously, configuration B with T1, T3 as the frustrated pair has a higher probability than configuration C with T1, T2 as the frustrated pair. This is because T2 has only one bond (from T1) dictating its state (no conflict), but T3 has two bonds (from T1 and T2) dictating its state, which can be at odds with each other. Such a configuration of bonds, and the resulting configuration-space probabilities shown in Fig. 3b, have no Ising analog.
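The frustration argument for the reciprocal case can be checked by brute force. The sketch below is illustrative only and is not the coupled-LLG simulation used in the paper: it assumes the standard Ising energy E = −Σ w si sj over pairs with a single common weight w, Boltzmann weights exp(−E/T) with T in energy units, and hypothetical function names. It covers only the symmetric network of Fig. 3a; the directed network of Fig. 3b has no such energy function.

```python
from itertools import product
import math


def ising_energy(spins, w):
    """Assumed form: E = -sum over pairs i<j of w * s_i * s_j (one common weight w)."""
    n = len(spins)
    return -sum(w * spins[i] * spins[j] for i in range(n) for j in range(i + 1, n))


def frustrated_pairs(spins):
    """Number of equal-sign pairs, i.e. pairs frustrated under antiferromagnetic coupling."""
    n = len(spins)
    return sum(spins[i] == spins[j] for i in range(n) for j in range(i + 1, n))


def boltzmann_probabilities(w, temperature=1.0, n=3):
    """Equilibrium probability of every +/-1 configuration of n spins."""
    configs = list(product((1, -1), repeat=n))
    weights = [math.exp(-ising_energy(c, w) / temperature) for c in configs]
    z = sum(weights)
    return {c: wt / z for c, wt in zip(configs, weights)}


if __name__ == "__main__":
    probs = boltzmann_probabilities(w=-1.0)  # w < 0: antiferromagnetic coupling
    for config, p in sorted(probs.items(), key=lambda item: -item[1]):
        print(config, f"frustrated pairs = {frustrated_pairs(config)}", f"p = {p:.4f}")
```

Under these assumptions the six configurations with a single frustrated pair share the lowest energy and therefore the same, highest probability, while the two uniform configurations, with all three pairs frustrated, are suppressed.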

(a) Three transynapses are initialized and then left to relax while interacting in a pairwise manner. The strength of the interactions depends on the voltage VDD. The polarity of VDD for each transynapse is such that it favors the next transynapse having a state opposite to its own, as in anti-ferromagnetic (AF) ordering. Statistical information is then gathered from Monte Carlo runs. There are a total of 2^3 = 8 possible configurations, with their probabilities shown in (a). This is reminiscent of frustration in spin glasses44,45, also observed in the Ising model as shown in the inset. Such bidirectional connections can be used for building Boltzmann machines10,11, which are closely related to Ising models. More on this in Fig. 4. (b) Changing one of the connections in part (a) to be directed as opposed to bidirectional lowers the probability of occurrence of some states in the final configuration, resulting in reduced frustration. This is fundamentally not possible in inherently symmetric Hamiltonian-based systems such as the Ising model. Such directed connections can be used to represent causal influences in Bayesian networks3,4. More on this in Figs 5 and 6.

Note that our numerical results are all obtained directly by simulating a set of coupled LLG equations, one for each of the six magnets, two per transynapse. The time evolution of each magnet in each device is a function of its instantaneous state, the internal, external and thermally fluctuating fields (determined by temperature T), plus the spin torque it receives from other devices:

Bidirectional interactions have coupling terms in both directions between a pair of devices, whereas directed interactions have a coupling term in only one of the two directions. The Methods section provides more detail.

Implementing Belief Networks

Boltzmann machine

The connection between the Ising model of statistical mechanics46 and hard combinatorial optimization problems of mathematics has been known for decades47. Boltzmann machines10,11, and subsequently their restricted version for deep belief networks, are Ising models in which the weights of interactions are learned and adjusted with breakthrough algorithms1,2,5. There is also widespread activity and innovation on the connection between inference, commonly used in belief networks, and phase transitions in statistical physics (see e.g. ref. 48 for a thorough review). Figure 4 shows how the networks described in this paper (Fig. 1) can mimic a magnetic phase transition, which is also a well-known result of the Ising model. The caption provides more detail on the particular procedure used for obtaining this. The phase transition is evident as the rate of change of magnetization with respect to temperature exhibits a maximum followed by a decrease. This transition is not sharp because of the small lattice sizes used here (see ref. 49 for a more in-depth discussion). The solid line shows the analogous Ising model result with the same lattice size (4 by 4 array) using equilibrium laws of statistical mechanics. The peak exhibited is reminiscent of the Curie temperature of a magnetic phase transition (Supplementary section 3). Indeed, the effective Curie temperature observed in these networks depends linearly on the strength of device-to-device communication set by VDD (Fig. 2); Supplementary section 4 provides spontaneous magnetization curves with interactions of various strengths. This is in agreement with Onsager’s50 results for a two-dimensional array of ferromagnetic atoms, for which TC is proportional to the coupling strength J.
We take these as an indication that stochastic networks of transynapses could be used to construct (restricted) Boltzmann machines for deep belief networks, where weights can be adjusted by the bipolar voltages applied to the transynapses or by load resistances at the output of the transynapses (Fig. 2).
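For a lattice this small, the equilibrium Ising reference can be computed by exact enumeration of all 2^16 states. The sketch below is a hedged illustration rather than the authors' procedure: it assumes open boundaries, a uniform nearest-neighbor coupling J, temperature expressed in units of J/kB, and the mean absolute magnetization per spin as the observable. The function name and these choices are assumptions.

```python
import numpy as np


def abs_magnetization_4x4(temperatures, coupling=1.0, side=4):
    """Exact <|M|>/N of a side x side ferromagnetic Ising lattice (open boundaries).

    Temperatures are in units of J/kB, with J = coupling.
    """
    n = side * side
    # Every configuration as a row of +/-1 values, reshaped onto the lattice.
    idx = np.arange(2 ** n, dtype=np.int64)
    bits = (idx[:, None] >> np.arange(n)) & 1
    spins = (2 * bits - 1).reshape(-1, side, side)
    # Sum of s_i * s_j over horizontal and vertical nearest-neighbor bonds.
    horizontal = (spins[:, :, :-1] * spins[:, :, 1:]).sum(axis=(1, 2))
    vertical = (spins[:, :-1, :] * spins[:, 1:, :]).sum(axis=(1, 2))
    energy = -coupling * (horizontal + vertical)
    abs_m = np.abs(spins.sum(axis=(1, 2))) / n
    result = []
    for t in temperatures:
        # Shift by the minimum energy to keep the exponentials well scaled.
        weights = np.exp(-(energy - energy.min()) / t)
        result.append(float((weights * abs_m).sum() / weights.sum()))
    return result


if __name__ == "__main__":
    temps = np.linspace(0.5, 6.0, 12)
    for t, m in zip(temps, abs_magnetization_4x4(temps)):
        print(f"T/T0 = {t:.2f}  <|M|>/N = {m:.3f}")
```

Differentiating the resulting curve with respect to temperature (for example with `np.gradient`) gives a broad peak rather than a sharp transition, consistent with the finite-size remark above.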

A 4 by 4 array of transynapses with nearest neighbor connections (The inset is intended to illustrate these aspects and not the directionality of connections).

The same VDD (VDD < 0) is applied to all transynapses, making the interactions favor all devices being in the same state, similar to ferromagnetic (FM) ordering. At each temperature T (scaled by T0 ≡ J/kB, where J is the coupling strength; see also Supplementary sections 3 and 4), the circuit is initialized and left to interact while the network settles on a final state out of 2^16 possible states. After each trial, the magnetization of the array is obtained by summing over all transynapse states, leading to an average magnetization of the array based on the total number of Monte Carlo runs. From this data, the derivative of magnetization with respect to temperature can be obtained as shown. This is reminiscent of the magnetic phase transition49 exhibiting a Curie temperature in the Ising model, depicted by the solid line. In deep belief networks1,2,8,9, the closely related restricted Boltzmann machines10,11, trained by breakthrough algorithms that determine the interactions, are used to solve search, detection and inference problems5,7,8.

Bayesian network

Symmetric interactions are inherent to Hamiltonian based systems as in Ising model and Boltzmann machines. On the other hand, directed interactions have their own prominence in Bayesian networks3,4. Figure 5a shows a 3-transynapse network, with each transynapse representing one of three variables which we could call carrot, stick and performance as shown in Table 1. These variables can be in one of two possible states with distinct probabilities. The transynapse network is interconnected to reflect the causal interconnections among the three variables. The carrot affects both the state of stick and the state of performance through the voltages VSC and VPC which determine the weights wSC and wPC. The only other causal effect is that of the stick on the performance which is reflected in the voltage VPS and the resulting weight wPS.

(a) Directed circuits of Transynapses (Fig. 1) can represent Bayesian networks in which directionality can represent causality3,4. Unlike Hamiltonian systems (e.g. Ising model), the interactions are not symmetric. Here, carrot influences both the state of the stick and the performance while stick also affects performance. (b) Direct simulation of (a). Figure shows a probabilistic gate in which diagonal lines represent perfect correlation of stick with carrot (probabilistic COPY) using VSC = −1 and perfect anti-correlation (probabilistic NOT) using VSC = +1. (voltages and currents are normalized by the magnitude required for deterministic switching). When IS ≠ 0, statistical correlation varies e.g. the stick can be in the no-punishment mode irrespective of the carrot.

A direct simulation of this 3-transynapse network using coupled LLG equations (Eq. 10) yields the plot shown in Fig. 5b. With VSC = −1, we get the diagonal lines reflecting perfect correlation of the stick with the carrot, while with VSC = +1, we get the other diagonal line reflecting perfect anti-correlation. (Voltages and currents are normalized by the magnitude required for deterministic switching). We could view these respectively as a COPY gate and a NOT gate with probabilistic inputs and outputs. The other curves shown in Fig. 5b correspond to IS ≠ 0 reflecting a situation where the stick state is not entirely controlled by the carrot, but has a probability of no punishment irrespective of the carrot.

The network can naturally generate probabilities of various variables. Consider e.g. the triangle (scenario A) in Fig. 5b where the carrot has 0.6 probability of reward (scenario B is the square). Instead of performing the necessary algebra (summing p(S|C) p(C) over the states of the carrot) to obtain the probability of the stick being in the punishment mode, the transynapse network takes in IC = −0.02, VSC = 1, IS = 0.9 and produces the directly observable probability of the stick being in punishment mode. (Voltages and currents are normalized by the magnitude required for deterministic switching.) This generalizes to more variables and an example for three is discussed next.

Figure 6a,b shows how the network in Fig. 5a can be used in predictive mode based on known causal connections3,4 among different variables, which determine the electrical signals Vij and Ii (explicitly provided in the caption). These in turn can provide the values in the (conditional) probability tables of Fig. 6a. For example, an element of the p(S|C) table with C = 1 is the mean value of the state of the stick in the corresponding mode when C = 1. (This can also be obtained independently by dictating that the carrot is in the reward (C = 1) state, e.g. by providing a strong bias (IC), and finding the mean value for the state of the stick due to VSC.) While the likelihood of better performance can be found from the tables of Fig. 6a by calculating p(P = 1|S, C), this is directly observable from the mean value of the state of ‘performance’, which is naturally generated by the network as provided in Fig. 6b. Alternatively, the network can address inference problems. Suppose performance is better: is it due to the carrot, the stick, or both? For instance, the likelihood that performance is better because of reward is essentially p(C = 1|P = 1). This can be obtained by algebra (Bayes' rule), or directly observed by taking the mean value of the state of the carrot in the reward mode when performance is better. The resulting values are provided in Fig. 6c.
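The algebra that the network sidesteps can be written out explicitly for a three-variable chain of this shape. The following is a minimal sketch under stated assumptions: states are encoded as 0/1, p(C = 1) = 0.6 is scenario A from the text, and every other table entry is a hypothetical placeholder, since the values of Fig. 6a are not reproduced here. The function names are likewise illustrative.

```python
from itertools import product

# p(C = 1) = 0.6 is scenario A in the text; all other numbers are hypothetical.
P_C1 = 0.6
P_S1_GIVEN_C = {0: 0.7, 1: 0.2}  # p(S = 1 | C), hypothetical
P_P1_GIVEN_SC = {(0, 0): 0.1, (0, 1): 0.6, (1, 0): 0.5, (1, 1): 0.9}  # p(P = 1 | S, C), hypothetical


def bernoulli(p_one, value):
    """Probability of a binary value given the probability of value 1."""
    return p_one if value == 1 else 1.0 - p_one


def joint(c, s, p):
    """p(C, S, P) = p(C) p(S | C) p(P | S, C) for the carrot-stick-performance network."""
    return (bernoulli(P_C1, c)
            * bernoulli(P_S1_GIVEN_C[c], s)
            * bernoulli(P_P1_GIVEN_SC[(s, c)], p))


def marginal(**fixed):
    """Sum the joint over all variables not fixed, e.g. marginal(p=1) or marginal(c=1, p=1)."""
    total = 0.0
    for c, s, p in product((0, 1), repeat=3):
        state = {"c": c, "s": s, "p": p}
        if all(state[name] == value for name, value in fixed.items()):
            total += joint(c, s, p)
    return total


if __name__ == "__main__":
    print("p(S = 1)         =", marginal(s=1))                     # prediction
    print("p(P = 1)         =", marginal(p=1))                     # prediction
    print("p(C = 1 | P = 1) =", marginal(c=1, p=1) / marginal(p=1))  # inference
```

In the hardware picture, each of these sums is replaced by a direct observation: the mean state of the corresponding transynapse, optionally restricted to the runs in which the conditioning variable took the required value.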

(a) (Conditional) probability tables: Two scenarios ((A) triangle and (B) square in Fig. 5b) are considered for the state of carrot. Such scenarios are typically provided by the problem statement which determines the voltages and currents applied to transynapses based on their transfer function (Fig. 2b). They in turn ensure that the network generates the probability values as shown. VSC = 1, VPC = −0.4, VPS = −0.5, IC = −0.02, IS = 0.9, IP = −0.2 are used for scenario A. Same values are used for scenario B except that IC = 0.1. (voltages and currents are normalized by the magnitude required for deterministic switching). (b) Likelihood of better performance can be directly observed without using (a) and carrying out the algebra for p(P = 1|S, C). (c) Inference can be addressed by such networks. For example, the likelihood that reward has caused better performance is the mean value of carrot in the reward state for cases that have better performance.

Concluding Remarks

Probabilistic computing is a thriving field of computer science and mathematics that deals with extracting knowledge from available data to guide decisive action. The work in this area is largely based on deterministic hardware, and major advances can be expected if one could build probabilistic hardware to simulate probabilistic logic. In this paper we define a building block for such stochastic networks, which we call a transynapse, combining the transistor-like properties of gain and isolation with synaptic properties. In principle, many implementations are possible, including those that make at least some use of standard CMOS circuitry. We present a possible implementation of the transynapse based on the established physics of nanomagnets and use experimentally benchmarked models to illustrate the implementation of both Boltzmann machines and Bayesian networks. More realistic examples will be addressed in future publications51.

Methods and Verification

This section outlines the methodology and its implementation underlying the simulations that have been carried out. It includes the steps taken to verify the model against well known principles or experimental data.

The time evolution and final state of each nanomagnet is represented and simulated by the Landau-Lifshitz-Gilbert (LLG) equation:

where q is the charge of the electron, γ is the gyromagnetic ratio, α is the Gilbert damping coefficient, Ns ≡ MsΩ/μB (Ms: saturation magnetization, Ω: volume) is the net number of Bohr magnetons comprising the nanomagnet, and the last term contains the spin current entering the magnet.

This equation is transformed to its standard mathematical form (see e.g. ref. 40) and is solved numerically using a second order Runge-Kutta method (a.k.a Heun’s method) in MATLAB. This methodology essentially applies the Stratonovich stochastic calculus to the stochastic integration during time dependent simulations involving thermal fluctuations. The inclusion of thermal fluctuations in LLG and its implementation has been verified against equilibrium laws of statistical mechanics in ref. 40.
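The predictor-corrector structure of Heun's method can be sketched as follows. This is a hedged Python illustration, not the authors' MATLAB code: it uses a dimensionless single-macrospin LLG with uniaxial anisotropy along z, omits the spin-torque and coupling terms, absorbs the (1 + α²) factor into the time unit, and treats the noise amplitude `sigma` as a free hypothetical parameter rather than the thermal-field prefactor of the paper. All function names are assumptions.

```python
import numpy as np


def heun_step(x, drift, noise, dt, dW):
    """One Heun step for dx = drift(x) dt + noise(x, dW), with dW the noise increment."""
    f0, g0 = drift(x), noise(x, dW)
    x_pred = x + f0 * dt + g0                      # Euler predictor
    f1, g1 = drift(x_pred), noise(x_pred, dW)
    return x + 0.5 * (f0 + f1) * dt + 0.5 * (g0 + g1)  # trapezoidal corrector


def llg_drift(m, alpha=0.1):
    """Dimensionless LLG drift; the anisotropy field is taken as h = m_z along z."""
    h = np.array([0.0, 0.0, m[2]])
    m_x_h = np.cross(m, h)
    return -m_x_h - alpha * np.cross(m, m_x_h)


def llg_noise(m, dW, alpha=0.1):
    """Fluctuating-field increment, entering the equation the same way as h."""
    m_x_dw = np.cross(m, dW)
    return -m_x_dw - alpha * np.cross(m, m_x_dw)


def relax(m0, steps, dt=0.01, sigma=0.0, seed=0):
    """Integrate from m0 and return the final unit magnetization vector."""
    rng = np.random.default_rng(seed)
    m = np.asarray(m0, dtype=float)
    m = m / np.linalg.norm(m)
    for _ in range(steps):
        dW = sigma * np.sqrt(dt) * rng.standard_normal(3)
        m = heun_step(m, llg_drift, llg_noise, dt, dW)
        m = m / np.linalg.norm(m)  # keep |m| = 1 after each step
    return m


if __name__ == "__main__":
    # Start near the hard axis with a small tilt toward +z, no thermal noise.
    print(relax([1.0, 0.0, 0.1], steps=6000))
    # Same start with a hypothetical noise amplitude.
    print(relax([1.0, 0.0, 0.1], steps=6000, sigma=0.05))
```

Evaluating the noise term at both the current and the predicted state, and averaging the two, is what makes the scheme consistent with the Stratonovich interpretation mentioned above.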

The spin current entering the magnet can have components due to both the Slonczewski torque and the field-like torque. The inclusion of spin transfer torque in LLG (last term) and its implementation has been verified against experimental data in ref. 26. In this manuscript, the spin current is generated by the spin Hall effect as outlined in ref. 23, where I is the charge current entering the WRITE (W) unit, generated by the READ stage of the previous device (see Figs 1, 2, 3)23. This is essentially how transynapses communicate with each other, whereby one drives the next, the dynamics of each one being governed by the coupled LLG equations that describe the dynamics of the READ and WRITE magnets.

The magnetic field, $\vec{H}$, represents both internal and external fields. Its contributions are $\vec{H}_k$, the uniaxial anisotropy field with z as the easy axis, and $\vec{H}_d$, the demagnetizing field with y as the out-of-plane hard axis for in-plane magnets, together with the thermal fluctuating field and the coupling field described in the following paragraphs. Hd is zero for perpendicular anisotropy magnets.
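As a sketch of how these contributions might be combined, the total field can be written as a sum of the terms named in this section. The magnitudes $H_K$ and $H_D$, the labels $\vec{H}_{th}$ and $\vec{H}_c$ for the thermal and coupling fields, and the component notation $m_y$, $m_z$ are assumptions introduced for illustration, using the stated axis conventions (z easy axis, y out-of-plane hard axis):

$$\vec{H} = \vec{H}_k + \vec{H}_d + \vec{H}_{th} + \vec{H}_c, \qquad \vec{H}_k = H_K\, m_z\, \hat{z}, \qquad \vec{H}_d = -H_D\, m_y\, \hat{y}$$

With this sign choice the anisotropy term favors $m_z = \pm 1$, while the demagnetizing term penalizes any out-of-plane component $m_y$. Any externally applied field would be added to the same sum.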

The thermal fluctuating field, $\vec{H}_{th}$, is specified through its statistical properties (mean and correlation function), in which δ(t) is the Dirac delta function, δij is the Kronecker delta, and the indices i and j label the field's vector components. T is temperature and kB is the Boltzmann constant.
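A commonly used white-noise specification of this kind, following Brown's treatment of thermal fluctuations in single-domain particles, is sketched below. The prefactor is written in Gaussian units and is an assumption here; its exact form depends on the unit system and on which form of the LLG equation it is paired with:

$$\left\langle H_{th,i}(t) \right\rangle = 0, \qquad \left\langle H_{th,i}(t)\, H_{th,j}(t') \right\rangle = \frac{2\alpha k_B T}{|\gamma|\, M_s\, \Omega}\; \delta_{ij}\, \delta(t - t')$$

In a simulation with time step Δt, the delta function is replaced by 1/Δt, so each field component is drawn from a zero-mean Gaussian whose variance is the prefactor divided by Δt.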

The coupling field, $\vec{H}_c$, accounts for the magnetic interaction of the READ (R) and WRITE (W) magnets within each device, as introduced in ref. 23 and illustrated in Fig. 2a of the main manuscript. (No magnetic coupling is envisioned between different devices here; device-to-device communication happens via charge currents, as described earlier.) Ref. 23 describes the functionality of the spin switch and presents the coupled LLG equations governing the time dynamics of the R and W magnets. The modeling of magnetic coupling between READ and WRITE magnets for in-plane magnetic materials has been described in detail in ref. 52 and verified against experimental data. Here, we briefly describe how this coupling is calculated for materials with perpendicular magnetic anisotropy (PMA) and validate its implementation against the experimental data shown in the first figure of the Supplementary Information. For this, we follow the methodology described in ref. 53.

Within each device, the W and R magnets each exert a magnetic field on the other. The field exerted on the READ magnet by the WRITE magnet, for example, is determined by [D], a 3 by 3 tensor describing the effect of each elemental volume of the W magnet on each elemental volume of the R magnet, integrated over the volume of both.
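The idea of a volume-integrated 3 by 3 coupling tensor can be illustrated numerically. The sketch below is not the method of ref. 53; it is a simpler approximation that splits each magnet into small cells, treats every W cell as a point dipole, and averages the resulting field over the R cells. The function names, the SI normalization (mean field on R given by `D @ (Ms * m_w)`), and the sign convention are assumptions made for this example, and the magnets must not overlap.

```python
import numpy as np

def cell_centers(size, n, center):
    """Centers of an n[0] x n[1] x n[2] grid of equal cells filling a cuboid."""
    axes = [c + s * ((np.arange(k) + 0.5) / k - 0.5)
            for s, k, c in zip(size, n, center)]
    grid = np.meshgrid(*axes, indexing="ij")
    return np.stack([g.ravel() for g in grid], axis=1)

def coupling_tensor(size_w, size_r, offset, n=(6, 6, 2)):
    """Approximate tensor D such that the mean field on magnet R produced by
    magnet W (uniform magnetization Ms * m_w) is H = D @ (Ms * m_w).
    W is centered at the origin and R at `offset`."""
    rw = cell_centers(size_w, n, (0.0, 0.0, 0.0))
    rr = cell_centers(size_r, n, offset)
    dv_w = np.prod(size_w) / len(rw)             # volume of one W cell
    d = rr[:, None, :] - rw[None, :, :]          # separation of every R-W cell pair
    dist = np.linalg.norm(d, axis=2)
    u = d / dist[..., None]                      # unit separation vectors
    # Point-dipole kernel (3 u u^T - I) / r^3 for every cell pair.
    kernel = (3.0 * u[..., :, None] * u[..., None, :] - np.eye(3)) \
        / dist[..., None, None] ** 3
    # Sum over W cells (weighted by cell volume), then average over R cells.
    return kernel.sum(axis=1).mean(axis=0) * dv_w / (4.0 * np.pi)
```

For two magnets stacked along z, `coupling_tensor(...)[2, 2]` is positive, meaning a W magnet pointing along +z produces a field along +z at the R magnet. For magnets placed side by side the same element changes sign. Finer grids give a better approximation of the double volume integral at the cost of memory, since all cell pairs are held at once.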

To validate the approach and its implementation, we used available experimental data for coupling fields measured in magnetic tunnel junctions. The first figure of the Supplementary Information compares the values calculated from the model with data from various experiments. These comparisons show that the model is generally in good agreement with the experiments.

Additional Information

How to cite this article: Behin-Aein, B. et al. A building block for hardware belief networks. Sci. Rep. 6, 29893; doi: 10.1038/srep29893 (2016).

References

G. E. Hinton, S. Osindero & Y. Teh . A fast learning algorithm for deep belief nets. Neural Computation 18, 1527–1554 (2006).

G. E. Hinton & R. R. Salakhutdinov . Reducing the dimensionality of data with neural networks. Science 313, 5786, 504–507 (2006).

J. Pearl . Causality: Models, Reasoning and Inference vol. 29, (Cambridge University Press, New York 2000).

J. Pearl . Probabilistic Reasoning in intelligent systems: Networks of plausible inference (Morgan Kaufmann 2014).

Y. Bengio, P. Lamblin, D. Popovici & H. Larochelle . Greedy layer-wise training of deep networks. In Advances in Neural Information Processing Systems 19, (NIPS’06), 153–160 (2007).

Y. Bengio . Learning deep architectures for AI. Foundations and trends in machine learning 2, 1, 1–27 (2009).

Y. Bengio, A. Courville & P. Vincent . Representation Learning: A Review and New Perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence 35, 8, 1798–1828 (2013).

G. E. Hinton . Training products of experts by minimizing contrastive divergence. Neural Computation 14, 1771–1800 (2002).

H. Lee, R. Grosse, R. Ranganath & A. Y. Ng . Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations. In proceedings of the 26th Annual International Conference on Machine Learning, pp. 609–616, ACM (2009).

P. Smolensky . Parallel Distributed Processing : vol. 1, Foundations pp. 194–281, D. E. Rumelhart, J. L. McClelland Eds (MIT Press, Cambridge, 1986).

G. E. Hinton & T. J. Sejnowski . Learning and relearning in Boltzmann machines. Parallel Distributed Processing: Explorations in the Microstructure of Cognition Volume 1, Foundations (Cambridge University Press, New York, 1986).

J. Misra & A. Saha . Artificial neural networks in hardware: A survey of two decades of progress. Neurocomputing 74, 239–255 (2010).

R. Venkatesan et al. SPINTASTIC: Spin-based Stochastic Logic for Energy-efficient Computing. Design, Automation and Test in Europe Conference and Exhibition (DATE), 1575–1578 (2015).

W. H. Choi et al. A Magnetic Tunnel Junction Based True Random Number Generator with Conditional Perturb and Real-Time Output Probability Tracking . International Electron Devices Meeting (IEDM), 12.5.1–12.5.4 (2015).

A. F. Vincent et al. Spin-Transfer Torque Magnetic Memory as a Stochastic Memristive Synapse for Neuromorphic Systems. IEEE transactions on biomedical circuits and systems 9, 2, 166–174 (2015).

S. Khasanvis et al. Physically equivalent magneto-electric nanoarchitectures for probabilistic reasoning, Proceedings of International Symposium on Nanoscale Architectures (NANOARCH), pp. 25–26 (2015).

S. Khasanvis et al. Self-similar Magneto-electric Nanocircuit Technology for Probabilistic Inference Engines. IEEE Transactions on Nanotechnology 14(6), 980–991 (2015).

V. Mansinghka & E. Jonas . Building fast Bayesian computing machines out of intentionally stochastic, digital parts. Pre-print, arXiv:1402.4914v1 (2014).

H. Chen, C. D. Fleury & A. F. Murray . Continuous-Valued Probabilistic Behavior in a VLSI Generative Model. IEEE Transactions on Neural Networks 17, 3, 755–770 (2006).

H. B. Hamid, A. F. Murray, D. A. Laurenson & B. Cheng . Probabilistic computing with future deep submicrometer devices: a modeling approach. International symposium on circuits and systems 2510–2513 (2005).

B. Behin-Aein, A. Sarkar & S. Datta . Tunable mesoscopic interactions in a nanomagnet array, see Chapter 5, PhD thesis, Angik Sarkar, Purdue University (2012).

B. Behin-Aein . Computing multi-magnet based devices and methods for solution of optimization problems, United States Patent, US 20140043061 A1 (2012).

S. Datta, S. Salahuddin & B. Behin-Aein . Non-volatile spin switch for Boolean and non-Boolean logic. Applied Physics Letters 101, 252411.1–5 (2012).

N. Locatelli et al. Noise-Enhanced Synchronization of Stochastic Magnetic Oscillators. Physical Review Applied 2, pp. 034009, 1–6 (2014).

J. Z. Sun . Spin-current interaction with a monodomain magnetic body: A model study. Physical Review B 62, 570–578 (2000).

B. Behin-Aein, A. Sarkar, S. Srinivasan & S. Datta . Switching energy-delay of all spin logic devices. Applied Physics Letters 98, 123510.1–123510.3 (2011).

D. Bedau et al. Spin-transfer pulse switching: From the dynamic to the thermally activated regime. Applied Physics Letters 97, 262502.1–3 (2010).

L. Liu, Y. Li, H. W. Tseng, D. C. Ralph & R. A. Buhrman . Spin-torque switching with giant spin Hall effect. Science 336, 6081, 555–558 (2012).

L. Liu, O. J. Lee, T. J. Gudmundsen, D. C. Ralph & R. A. Buhrman . Current-induced switching of perpendicularly magnetized layers using spin torque from the spin Hall effect. Physical Review Letters 109, 096602.1–4 (2012).

L. You et al. Switching of perpendicularly polarized nanomagnets with spin orbit torque without an external magnetic field by engineering a tilted anisotropy. Proceedings of the National Academy of Sciences 112, 33, 10310–10315 (2015).

S. Fukami, C. Zhang, S. DuttaGupta, A. Kurenkov & H. Ohno . Magnetization switching by spin–orbit torque in an antiferromagnet–ferromagnet bilayer system. Nature Materials 15, 535–541 (2016).

S. Datta, V. Q. Diep & B. Behin-Aein . What constitutes a nanoswitch? A perspective. In Emerging Nanoelectronic Devices Chapter 2, A. Chen, J. Hutchby, V. Zhirnov, G. Bourianoff Eds (Wiley, New York, 2015).

B. Behin-Aein, J.-P. Wang & R. Wiesendanger . Computing with Spins and Magnets. MRS Bulletin 39, 696–702 (August 2014).

Y. Niimi et al. Giant spin Hall effect induced by skew scattering from Bismuth impurities inside thin film CuBi alloys. Physical Review Letters 109, 156602–156606 (2012).

V. Q. Diep, B. Sutton, B. Behin-Aein & S. Datta . Spin switches for compact implementation of Neuron and Synapse. Applied Physics Letters 104, 222405.1–5 (2014).

W.-G. Wang, M. Li, S. Hageman & C. L. Chien . Electric-field-assisted switching in magnetic tunnel junctions. Nature Materials 11, 64–68 (2012).

P. Khalili & K. Wang . Voltage-controlled MRAM: Status, challenges and prospects. EE Times (February 25, 2013).

A. Imre et al. Majority Logic Gate for Magnetic Quantum-Dot Cellular Automata. Science 311, 205–208 (2006).

B. Behin-Aein, S. Salahuddin & S. Datta . Switching energy of ferromagnetic logic bits. IEEE Transactions on Nanotechnology 8, 505–514 (2009).

B. Behin-Aein, D. Datta, S. Salahuddin & S. Datta . Proposal for an all spin logic device with built-in memory. Nature Nanotechnology 5, 266–270 (2010).

A. Brataas, A. D. Kent & H. Ohno . Current induced torques in magnetic materials. Nature Materials 11, 372–381 (2012).

I. L. Prejbeanu et al. Thermally assisted switching in exchange-biased storage layer magnetic tunnel junctions. IEEE Transactions on Magnetics 40, 4 (2004).

B. Behin-Aein, A. Sarkar & S. Datta . Modeling circuits with spins and magnets for all-spin logic. Proceedings of European Solid-State Device Conference, pp. 36–40 (2012).

K. H. Fischer & J. A. Hertz . Spin Glasses (Cambridge University Press, New York 1991).

D. S. Fisher, G. M. Grinstein & A. Khurana . Theory of random magnets. Physics Today 41, 12, 56–67 (1988).

B. A. Cipra . An Introduction to the Ising Model. American Mathematical Monthly 94, 937–959 (1987).

S. Kirkpatrick, C. D. Gelatt & M. P. Vecchi . Optimization by simulated annealing. Science 220, 4598, 671–680 (1983).

L. Zdeborova & F. Krzakala . Statistical Physics of Inference: Thresholds and algorithms, http://arxiv.org/abs/1511.02476 (2015).

M. S. S. Challa, D. P. Landau & K. Binder . Finite-size effects at temperature-driven first-order transitions. Physical Review B 34, 1841–1852 (1986).

L. Onsager . Crystal statistics I. A two-dimensional model with an order-disorder transition. Physical Review, Series II 65, 117–149 (1944).

B. M. Sutton, K. Y. Camsari, B. Behin-Aein & S. Datta . Intrinsic optimization using stochastic nanomagnets. preprint (SRC Publication ID 087933) (2016).

V. Diep . Transistor-like spin nano-switches: physics and applications, PhD Dissertation, Chapter 3 (2015).

A. J. Newell, W. Williams & D. J. Dunlop . A Generalization of the Demagnetizing Tensor for Nonuniform Magnetization. Journal of Geophysical Research-Solid Earth 98(B6), 9551–9555 (1993).

Acknowledgements

VD was supported by the Center for Science of Information (CSoI), an NSF Science and Technology Center, under Grant agreement No. CCF-0939370.

Author information

Contributions

B.B.-A. and S.D. wrote the paper and provided the conception and design of the research. B.B.-A. and V.D. performed the simulations. All authors discussed all figures and results of the paper.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Behin-Aein, B., Diep, V. & Datta, S. A building block for hardware belief networks. Sci Rep 6, 29893 (2016). https://doi.org/10.1038/srep29893

DOI: https://doi.org/10.1038/srep29893
