Abstract

The movement of the eyes has been the subject of intensive research as a way to elucidate the inner mechanisms of cognitive processes. A cognitive task that is rather frequent in our daily life is the visual search for hidden objects. Here we investigate through eye-tracking experiments the statistical properties associated with the search for target images embedded in a landscape of distractors. Specifically, our results show that the twofold process of eye movement, composed of sequences of fixations (small steps) intercalated by saccades (longer jumps), displays characteristic statistical signatures. While the saccadic jumps follow a log-normal distribution of distances, which is typical of multiplicative processes, the lengths of the smaller steps in the fixation trajectories are consistent with a power-law distribution. Moreover, the present analysis reveals a clear transition from a directional serial search to an isotropic random movement as the difficulty level of the searching task is increased.

Introduction

It is a common misconception to believe that memories are stored in the brain the same way a movie is stored in a hard drive. Remembering, just like seeing and listening, is in fact an act of construction much more complex than usually thought, where vast amounts of information are processed and interpreted by the brain in order to create what we call memories and pretty much everything else we call reality1. The field dedicated to the study of these types of processes is called Cognitive Science, which took its current form in the first half of the 20th century out of a mishmash of sciences, including, among others, Psychology, Linguistics and Computer Science. More precisely, the main challenge of Cognitive Science is to answer questions related to the way in which the brain processes available information and how this shapes behaviour2.

As theoretical entities, cognitive processes cannot be directly observed and measured3. Thus, in order to study them, we need to rely on observations of the behaviour of individuals. A frequently used approach is to follow the eye movement during cognitive tasks. Until the end of the 19th century, it was still thought that the eyes smoothly scanned the line of text during reading. Louis Émile Javal4, in his unprecedented study of 1879, observed that the eyes actually move in a succession of steps, called fixations, followed by jerk-like movements, called saccades, that are too fast to capture new visual information5. The method of eye-tracking as a fundamental source of information about cognition was finally introduced through the seminal work of Yarbus6. This study provided an unambiguous demonstration that the movement of the eyes is strongly correlated with the cognitive objectives of the individual.

A cognitive process that benefits the most from the study of eye movement is the visual search for hidden objects7, as when trying to find a person in a crowded place, or a 2-inch nail inside a box of nails of various sizes. An early theory related to this process, called Feature Integration Theory (FIT), is due to Treisman and Gelade8. This theory deals with attention, a kind of mental focus that can be directed to a desired region of the visual scene, thereby enhancing the perceptual sensitivity in that region. The FIT proposes that visual search tasks are divided into two stages. The first is a detection stage, in which a small set of simple separable features like color, size and orientation are identified in the elements inside the optical array. This stage is a preattentive one, that is, attention need not be directed at each element of the image in order to perform detection, since all feature registration takes place in parallel across the whole visual scene. In the second stage, called integration, the features identified in the previous stage are combined in order to conceive more complex characteristics. This is an attentive stage and is therefore much slower, requiring the observer to scan each element of the image serially.

It is interesting to note that the FIT resembles a broader category of paradigms, namely the dual process models9. Under this conceptual framework, complex cognitive tasks usually involve two systems that essentially differ in what Kahneman10 referred to as (1) effortless intuition and (2) deliberate reasoning. System (1) comprises processes that are fast, intuitive and can be performed automatically and in parallel, as when trying to identify the state of mind of a person based on her/his facial expression. These processes are acquired through habit and are usually inflexible and hard to control or modify. System (2), on the other hand, is characterized by slow, serial but extremely controlled processes, which is the case, for example, when one tries to solve a mathematical equation.

The FIT was thoroughly studied and expanded during subsequent years11. Although regarded, in its initial form, as an oversimplification12, it surely represents a formidable conceptual starting point for research on the subject. Two particular assumptions of the FIT deserve further discussion13, namely that the whole optical array is homogeneously analyzed and that attention can be displaced independently of the eye movements (thus being called covert attention). It is widely known that visual acuity falls rapidly away from the point of fixation14, being confined to a small region called the fovea. While there is little doubt about the existence of covert attention15, it has been argued that situations in which covert attention performs better than overt eye movements are unusual and restricted to laboratory tests13. These considerations led to further investigation of the function of eye movements in visual search16,17,18.

Eye movements are composed of fixations and saccades, but even during fixations, the eyes are not completely still. In fact, fixational eye movements (FEyeM) include drift, tremor and microsaccades. The drift corresponds to the erratic and low velocity component of FEyeM. The tremor is irregular and noise-like with very high frequency, while microsaccades correspond to small rapid shifts in eye position akin to saccades, but preferentially taking place along the horizontal and vertical directions19. Whether or not each one of these movements plays an effective role in visual cognition still represents a rather controversial issue20,21,22, but it is widely known that, if the FEyeM halt, visual perception stops completely. Previous attempts to model eye movements have been mainly devoted to describing the sequence of fixations and saccades in terms of stochastic processes23 like regular random walks24. Very often, the gaze is considered as a random walker subjected to a potential extracted from a saliency map, namely a field that depends on the particular features of the image under inspection, such as color, intensity and orientation25,26,27,28.

Recent research on visual cognition has been directed to the development of experimental and analytical methods that can potentially elucidate the interplay between different components of cognitive activities and how their interactions give rise to cognitive performance29. While the detection and integration processes mentioned before represent basic components of visual cognition that can be investigated separately, the way they interact should be relevant for the comprehension of more intricate visual tasks. Therefore, it is of paramount interest to determine if cognitive dynamics is dominated by components or interactions. Here we show through eye-tracking experiments that the cognitive task of visual search for hidden objects displays typical statistical signatures of interaction-dominated processes. Interestingly, by increasing the difficulty level of the visual task, our results also indicate that the eye movement changes from a serial reading-like (systematic) to an isotropic (random) searching strategy.

Results

Visual search experiments were performed with targets hidden in two different types of disordered substrate images (see Methods for details). In the first, as depicted in Fig. 1, the subjects were asked to search for a target (number 5) in an image with distractors (numbers 2) placed on a regular array. Figure 2 shows an example of the second type of test, where we used images from the book series “Where’s Wally?”30. The latter can be considered very complex images, since the distractors are irregularly placed in an off-lattice configuration and specially drawn to closely resemble the target. Images designed under these conditions frequently lead to a searching task of enhanced difficulty. The analysis of the results from the two tests enabled us to identify general statistical patterns as well as particular features of the eye movement that are related to the irregularity and complexity of the image adopted in the eye-tracking experiments.

Search over 5-2 lattices.

The subjects try to find a single number 5 in an array of red and green numbers 2 (distractors). Of course, the larger the size of the array (number of distractors), the more difficult is the searching task. Two distinct types of searching patterns are clearly observed. The systematic search shows an anisotropic characteristic, as in (a) and (b), with the eye moving more frequently in a particular direction, horizontally most often, but also vertically for some subjects. In the case of random search, as in (c), the eyes are likely to move equally in any direction. Our results also show that the frequency with which the subjects follow the systematic pattern decreases with the difficulty of the test. Two thirds of the recordings (42 out of 63), in the case of difficulty 0, correspond to systematic searches, while only half of the recordings (16 out of 32) displayed this behaviour with tests of difficulty 1. In the presence of a large number of distractors, most of the subjects prefer to follow a random search strategy. This is the case with tests of difficulty 2, where only one fourth of the recordings (6 out of 24) show systematic behaviour.

Where’s Wally?

On the left, we show the result of a typical eye-tracker recording of a search task on a complex landscape (not shown)30. The basic elements of eye movement are clearly present, namely the numerous sets of fixation points connected by large jumps (saccades)5. In this particular searching test, the points of fixation bunch up around some regions, where certain details of the image demand more attention than others. However, one cannot perceive any systematic pattern in the trajectory of the eyes. On the right, part of the image is enlarged, where the red circle indicates the location of the target. The recording process ends when the target is found.

In the case of the 5-2 lattice tests, the typical trajectories shown in Fig. 1 indicate that, when the number of distractors is small, most subjects performed systematic searches, that is, the task is accomplished in a manner that resembles a person reading a text, for example, from left to right and/or from top to bottom. By increasing the number of distractors, a transition can be observed from this directional (systematic) trajectory to an isotropic random strategy of searching for the large majority of the experiments. Precisely, systematic patterns have been observed in two thirds of the eye-tracking recordings (42 out of 63) for difficulty 0 (see Fig. 1a), half of the recordings (16 out of 32) for difficulty 1 (see Fig. 1b) and only one fourth of the recordings (6 out of 24) for difficulty 2 (see Fig. 1c). No discernible systematic searches were observed in the case of “Where's Wally?” tests.
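The fractions quoted above follow directly from the reported counts. As a small worked check in Python (the dictionary layout and the function name are illustrative choices, only the counts come from the text):

```python
# Counts of recordings reported in the text: (systematic, total) per difficulty level.
recordings = {
    0: (42, 63),
    1: (16, 32),
    2: (6, 24),
}


def systematic_fraction(systematic: int, total: int) -> float:
    """Fraction of recordings classified as systematic searches."""
    return systematic / total


fractions = {
    level: systematic_fraction(systematic, total)
    for level, (systematic, total) in recordings.items()
}

for level, fraction in fractions.items():
    print(f"difficulty {level}: {fraction:.3f} of recordings are systematic")
```

The fraction of systematic searches decreases monotonically with the difficulty level, from two thirds to one half to one fourth.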

Next, we analyze the size distributions of gaze jumps calculated for the raw data obtained from eye-tracking experiments. By definition, the size of a jump in this case corresponds to the distance, measured in number of pixels, covered by the eye gaze during each recording step of the eye-tracker device, set here to approximately 17 milliseconds. Strikingly, as depicted in Fig. 3, all tests produced similar distributions of gaze jumps, regardless of the subjects, the complexity of the tests, or the search strategy (regular or random). This universal shape reflects the fixation-saccade duality of the eye movement and clearly points to a superposition of behaviours instead of a description in terms of pure monomodal distributions31,32.
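The definition of a gaze jump given above can be illustrated with a minimal Python sketch. It assumes that a recording is available as an array of (x, y) gaze positions in pixels, one row per recording step of about 17 ms. The function names, the number of bins and the synthetic random-walk data are illustrative assumptions and are not part of the original analysis.

```python
import numpy as np


def jump_sizes(gaze_xy):
    """Distance in pixels between consecutive gaze samples (one sample per recording step)."""
    gaze_xy = np.asarray(gaze_xy, dtype=float)
    steps = np.diff(gaze_xy, axis=0)
    return np.hypot(steps[:, 0], steps[:, 1])


def log_binned_density(sizes, n_bins=30):
    """Probability density of the positive jump sizes on logarithmically spaced bins."""
    sizes = np.asarray(sizes, dtype=float)
    sizes = sizes[sizes > 0]  # zero-length jumps cannot be placed on a logarithmic axis
    edges = np.logspace(np.log10(sizes.min()), np.log10(sizes.max()), n_bins + 1)
    edges[0], edges[-1] = sizes.min(), sizes.max()  # guard against rounding at the ends
    density, _ = np.histogram(sizes, bins=edges, density=True)
    centers = np.sqrt(edges[:-1] * edges[1:])  # geometric centers of the bins
    return centers, density, edges


# Synthetic stand-in for a recording (hypothetical data, a plain random walk).
rng = np.random.default_rng(0)
demo_gaze = np.cumsum(rng.normal(scale=3.0, size=(1000, 2)), axis=0)
demo_sizes = jump_sizes(demo_gaze)
centers, density, edges = log_binned_density(demo_sizes)
```

Plotting `density` against `centers` on doubly logarithmic axes gives the kind of representation used for the jump size distributions. Logarithmic bins are chosen here because the jump sizes span several orders of magnitude.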

Log-log plots of the distributions of gaze jump sizes.

The size of a jump corresponds to the distance, measured in number of pixels, covered by the eye gaze in an interval of 17 milliseconds. The logarithmic plots on the top are the distributions of jump sizes obtained for different subjects and for each of the tests performed, namely “Where’s Wally?” and 5-2 lattices with difficulties 0, 1 and 2. Despite small variations, all distributions show a similar quantitative behaviour. This “universal” statistical signature of the searching process can also be detected from the results displayed on the bottom panel, where the distributions of gaze jump sizes averaged over all subjects are shown. The similar shape observed in all distributions suggests that identical mechanisms control the amplitude of the gaze shift, regardless of the systematic or random aspects of the searching movements on the 5-2 lattices and the distinctive features of the underlying arrangement of distractors that compose the “Where’s Wally?” landscapes. The shaded area delimits a depression region that appears systematically in all distributions, where the sizes of fixational and saccadic eye movements overlap.

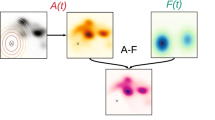

The presence of two modes separated by a slight depression that marks the overlap region can be observed in practically all jump size distributions of the raw data. Such behaviour strongly suggests the need for a filtering process through which fixations and saccades can be adequately identified and their statistical properties independently studied. For this purpose, we apply a modified version of the fixation filter developed by Olsson33, as described in the Methods section. As shown in Figs. 4 and 5, the resulting distributions of jump sizes for fixational movements obtained for the 5-2 and “Where’s Wally?” tests, respectively, also display the same statistical signature. Precisely, for gaze steps larger than 10 px, the distances Δr follow typical power-law distributions,

$$P(\Delta r) \propto \Delta r^{-\alpha}, \tag{1}$$

with a statistically identical exponent, α ≈ 2.9, for all tests (see Table 1). For gaze steps smaller than 10 px, the distributions display approximately uniform behaviour, possibly due to the fact that, at this scale, eye tremor is of the order of drift, although this hypothesis cannot be tested with the time resolution used in our measurements34. Once fixations and saccades are identified through the filtering process, the analysis of the saccadic movements in all tests reveals that the distributions of sizes for this type of eye jump can be well described in terms of a log-normal distribution,

$$P(\Delta r) = \frac{1}{\Delta r\,\sigma\sqrt{2\pi}} \exp\left[-\frac{(\ln \Delta r - \mu)^{2}}{2\sigma^{2}}\right], \tag{2}$$

where the parameters µ and σ correspond to the mean and standard deviation of the logarithm of the saccade length, respectively. Once more, the fact that a single distribution function can properly describe the general statistical features of different searching tests suggests that the same underlying mechanisms control the cognitive task under investigation. It is interesting to note, however, that the numerical values of the estimated parameters of the distributions depend on the details of the test. For instance, in the case of the 5-2 tests, the mode of the distribution (the most probable length) decreases systematically with the difficulty of the searching task, indicating that saccadic movements somehow adapt to the complexity of the image.
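To make the two fits concrete, the following Python sketch estimates a power-law tail exponent and the log-normal parameters by maximum likelihood, and attaches a percentile bootstrap confidence interval to the exponent. The fitting procedure actually used for the data is not specified in the text, so the estimators, the function names, the treatment of σ as the standard deviation of ln Δr, and the synthetic samples are all assumptions made for illustration.

```python
import numpy as np


def fit_power_law_tail(sizes, x_min=10.0):
    """Maximum-likelihood exponent alpha for P(x) ~ x**(-alpha) restricted to x >= x_min."""
    x = np.asarray(sizes, dtype=float)
    tail = x[x >= x_min]
    return 1.0 + tail.size / np.sum(np.log(tail / x_min))


def fit_log_normal(sizes):
    """Maximum-likelihood (mu, sigma): mean and standard deviation of ln(x)."""
    log_x = np.log(np.asarray(sizes, dtype=float))
    return log_x.mean(), log_x.std()


def log_normal_mode(mu, sigma):
    """Most probable length of a log-normal distribution, exp(mu - sigma**2)."""
    return np.exp(mu - sigma**2)


def bootstrap_ci(sizes, estimator, n_resamples=1000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for estimator(sizes)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(sizes, dtype=float)
    estimates = [
        estimator(rng.choice(x, size=x.size, replace=True))
        for _ in range(n_resamples)
    ]
    tail_prob = 100.0 * (1.0 - level) / 2.0
    low, high = np.percentile(estimates, [tail_prob, 100.0 - tail_prob])
    return low, high


# Synthetic samples with known parameters (hypothetical data, not the measurements).
rng = np.random.default_rng(42)
alpha_true, x_min = 2.9, 10.0
tail_sample = x_min * (1.0 - rng.random(20000)) ** (-1.0 / (alpha_true - 1.0))
alpha_hat = fit_power_law_tail(tail_sample, x_min)
ci_low, ci_high = bootstrap_ci(
    tail_sample, lambda s: fit_power_law_tail(s, x_min), n_resamples=200
)

saccade_sample = rng.lognormal(mean=4.0, sigma=0.5, size=20000)
mu_hat, sigma_hat = fit_log_normal(saccade_sample)
mode_hat = log_normal_mode(mu_hat, sigma_hat)
```

The mode, exp(µ − σ²), is the quantity referred to in the text as the most probable saccade length, so a decrease of the mode can come from a smaller µ, a larger σ, or both.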

Table 1 (caption fragment): The error represents a bootstrap estimation of the 95% confidence interval45.

Jump size distributions of filtered data for the 5-2 searching tasks.

For each difficulty level, the corresponding data have been averaged over all subjects. By applying the filter developed by Olsson33 to the raw data, we can distinguish between fixational and saccadic movements, so that the jump size distributions for each mechanism of eye movement can be studied separately. For better visualization, the resulting distribution curves are shifted vertically by a factor of 1/16 and 16, in the case of difficulty 0 (black) and difficulty 2 (red), respectively. As depicted, the tails of the fixation curves can be adequately fitted by power-laws, $P(\Delta r) \propto \Delta r^{-\alpha}$ (dashed lines), with an exponent α ≈ 2.9 for all tests (see Table 1). The distributions of jump sizes for the saccadic movements follow a quite different behaviour, which is compatible with a log-normal distribution, as the best fits to the three data sets show (dashed lines) on the left panel. The corresponding fitting parameters are presented in Table 1. Interestingly, the most frequent length of the saccades (vertical solid lines) decreases with the difficulty of the test. This could happen either because the distractors (numbers 2) are simply smaller in the more difficult tests, or because the saccades are influenced by the colors of the distractors (which form relatively smaller clusters in more difficult settings), or through a combination of both effects.

Jump size distributions of filtered data for the “Where’s Wally?” searching tasks.

The top panels on the left and right show the results for fixational and saccadic gaze jumps, respectively, calculated for different subjects. On the bottom, we show the same distributions, but now averaged over all subjects. The dashed lines correspond to the best fits to the data sets, using power-laws for the fixational movements and log-normal distributions for the saccadic movements. The statistical features of both mechanisms of eye movement observed here are quite similar to the ones identified for the 5-2 searching tests (see Fig. 4).

Discussion

In summary, our results from eye-tracking tests in which subjects are asked to find a specific target hidden among a set of distractors (see Methods) reveal a gradual change in the searching strategy, from a directional reading-like (systematic) to an isotropic (random) movement, as the number of distractors increases. However, regardless of the differences in image complexity, searching tasks and individual skills of the subjects, we observe universal statistical features related to the distributions of gaze jump sizes. These distributions generally show a characteristic bimodal behaviour, a consequence of the intrinsic dual nature of eye movement32, which alternates between saccades and fixations.

The application of a fixation filter to the raw data enables us to study separately the distributions of jump sizes for fixational and saccadic gaze steps. We find that the distribution of fixational movements shows long tails which obey power-laws35, while saccades, on the other hand, follow a log-normal type of behaviour. The fact that both log-normal and power-law distributions arise from multiplicative processes36 provides strong support for the hypothesis that the interactions between components dominate the cognitive task of visual search31. In a dynamics governed by interactions, the organization of the components and the way they process information are context dependent, with no particular function being encapsulated in any of the components themselves. This non-linear response to the influx of information would give rise to multiplicative distributions like the ones we disclosed here.

These observations are in evident contrast with a component based scenario, where the final performance of a given cognitive task results from the simple addition of sub-tasks that usually process information in a specialized manner. Instead of log-normal or power-law distributions, a process like this would give rise to Gaussian or other additive distributions (e.g., exponential or gamma distributions)37. It is worth noting that our results are conceptually consistent with previous studies describing complex behaviour in visual cognition32,38,39,40. As a perspective for future work, it would be interesting to relate our findings with other potential approaches based on non-cognitive random strategies, where the searching task can be the result of an optimization process41,42,43,44.
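The contrast between additive and multiplicative scenarios can be illustrated with a toy numerical experiment in Python. This is not a model of eye movement: the uniform random factors, their number and the skewness diagnostic are arbitrary assumptions chosen only to show that sums of many independent factors are approximately Gaussian, while products are approximately log-normal (their logarithm is a sum).

```python
import numpy as np


def skewness(x):
    """Sample skewness: third central moment divided by the variance to the power 3/2."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    return np.mean(centered**3) / np.mean(centered**2) ** 1.5


rng = np.random.default_rng(1)
# Hypothetical positive random factors: 20000 realizations of 50 factors each.
factors = rng.uniform(0.5, 1.5, size=(20000, 50))

additive = factors.sum(axis=1)         # component-like: contributions add up
multiplicative = factors.prod(axis=1)  # interaction-like: contributions multiply

print("skewness of sums:           ", skewness(additive))
print("skewness of products:       ", skewness(multiplicative))
print("skewness of log of products:", skewness(np.log(multiplicative)))
```

The sums are nearly symmetric, the products are strongly right-skewed, and the logarithm of the products is again nearly symmetric, which is the signature of a log-normal variable.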

Methods

Equipment

Eye movements were recorded with a Tobii T120 eye-tracking system (Tobii Technology). In this study we only consider data obtained after a valid calibration protocol is applied to both eyes of the subject. The stimuli were presented on a 17” TFT-LCD monitor with resolution 1024 × 1280 pixels and capture rate of 60 Hz.

Tests

Two types of tests consisting of visual searching for a hidden target randomly placed among a set of distractors were performed by 11 healthy subjects with an average age of 23 years. The stimuli of the first test consist of a square lattice composed of a single target number 5 and several number 2’s serving as distractors. All numbers (target and distractors) are randomly colored red or green, hindering the visual detection of the target through the identification of patterns in the peripheral vision. These images were organized in three difficulty levels according to the number of distractors, labeled 0, 1 and 2 for 207, 587 and 1399 distractors, respectively.

The stimuli of the second test are scanned images from the “Where’s Wally?” series of books30. The complexity of these images, where a large number of distractors (background characters) are irregularly placed together with Wally, the hidden target character, explains the high difficulty involved in this visual searching task. Not all images used had an actual target, since we had no intention to track the time taken to find the target. Instead, our objective was to induce the subjects to perform the searching task as naturally as possible.

In order to stimulate subjects to search efficiently, in all tests they were told that they had a limited time to find the target, but were not informed exactly how much time would be available. In the case of the 5-2 lattice tests, 1, 1.5 and 2 minutes were given to search for the target for the difficulties 0, 1 and 2, respectively. For the “Where’s Wally?” tests, the subjects had 2 minutes. A summary of the parameters can be found in Table 2.

Fixation filter

We adopted a modified version of the fixation filter developed by Olsson33 in order to identify which gaze points belong to fixations and which belong to saccades. The basic idea is to distinguish segments of the signal that are moving slowly due to drift, thus identified as part of a fixational sequence, from those moving faster, constituting the saccades. This is achieved here by taking the raw signal output, $s_i$, namely the position of the gaze captured at each timestamp i, and calculating for each point the mean position of two sliding windows of size r, one retarded and the other advanced,

$$m_{\text{before}}(i) = \frac{1}{r}\sum_{k=1}^{r} s_{i-k}, \qquad m_{\text{after}}(i) = \frac{1}{r}\sum_{k=1}^{r} s_{i+k}. \tag{3}$$

The distance between them is calculated as,

$$d_i = \left| m_{\text{after}}(i) - m_{\text{before}}(i) \right|. \tag{4}$$

Since each timestamp has the same duration, the displacement given by Eq. 4 may be analyzed in the same way as the average velocity. Thus, if $d_i$ is larger than its two neighbors ($d_{i-1}$ and $d_{i+1}$) and is also larger than a given velocity threshold, it is considered a peak. If two peaks are found within the interval of a single window, only the largest one is considered.
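The peak detection described above can be sketched in Python as follows. This is a minimal reading of the description and not the filter implementation itself: the windows are assumed to contain the r samples immediately before and after each point, the threshold value is hypothetical, and the synthetic signal (two noisy fixations separated by one jump) is for illustration only.

```python
import numpy as np


def window_distances(signal, r):
    """d_i: distance between the mean of the r samples after i and the r samples before i."""
    s = np.asarray(signal, dtype=float)
    n = len(s)
    d = np.zeros(n)  # points too close to the ends keep d = 0
    for i in range(r, n - r):
        before = s[i - r:i].mean(axis=0)         # retarded window
        after = s[i + 1:i + r + 1].mean(axis=0)  # advanced window
        d[i] = np.linalg.norm(after - before)
    return d


def find_peaks(d, threshold, r):
    """Indices where d exceeds both neighbors and the threshold, keeping one peak per window."""
    candidates = [
        i for i in range(1, len(d) - 1)
        if d[i] > d[i - 1] and d[i] > d[i + 1] and d[i] > threshold
    ]
    peaks = []
    for i in candidates:
        if peaks and i - peaks[-1] < r:
            if d[i] > d[peaks[-1]]:
                peaks[-1] = i  # two peaks within one window: keep only the largest
        else:
            peaks.append(i)
    return peaks


# Synthetic signal (hypothetical): 30 samples near (100, 100), then 30 near (400, 300).
rng = np.random.default_rng(0)
fix_a = np.array([100.0, 100.0]) + rng.normal(scale=1.0, size=(30, 2))
fix_b = np.array([400.0, 300.0]) + rng.normal(scale=1.0, size=(30, 2))
demo_signal = np.vstack([fix_a, fix_b])

demo_d = window_distances(demo_signal, r=5)
demo_peaks = find_peaks(demo_d, threshold=50.0, r=5)
```

In this example a single peak is found at the boundary between the two groups of samples, which then splits the gaze points into two clusters.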

At this stage, the gaze points are divided into clusters separated by the peaks. In the original filter33, the median position of each cluster is used to locate the corresponding fixation. Since we are instead interested in separating the gaze points that correspond to fixations from those that belong to saccades, the radius of gyration for each cluster C is then calculated as,

$$R_g(C) = \sqrt{\frac{1}{N_C}\sum_{i \in C} \left| s_i - \bar{s}_C \right|^{2}}, \tag{5}$$

where $\bar{s}_C$ is the mean position of the $N_C$ gaze points that belong to C. Steps that fall inside the circle of radius $R_g(C)$ centered at $\bar{s}_C$ are considered to be fixational. The same applies to those steps that leave this area but return to it without passing through another fixation cluster. All other steps are considered saccadic jumps.
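A simplified Python sketch of this last step is given below. It computes the radius of gyration of one cluster and labels a step as fixational when both of its end points lie inside the corresponding circle. That criterion is one possible reading of the description, and the rule for steps that leave the circle and return to it is deliberately omitted. Function names and the example points are hypothetical.

```python
import numpy as np


def radius_of_gyration(points):
    """Root-mean-square distance of the gaze points of a cluster from their mean position."""
    p = np.asarray(points, dtype=float)
    center = p.mean(axis=0)
    return np.sqrt(np.mean(np.sum((p - center) ** 2, axis=1)))


def label_steps(points):
    """True for steps of a cluster whose two end points lie inside the gyration circle.

    Simplification: steps that leave the circle and later return are not re-labeled.
    """
    p = np.asarray(points, dtype=float)
    center = p.mean(axis=0)
    inside = np.linalg.norm(p - center, axis=1) <= radius_of_gyration(p)
    return inside[:-1] & inside[1:]


# Hypothetical cluster: four nearby gaze points followed by one distant point.
demo_cluster = [(0, 0), (1, 0), (0, 1), (1, 1), (10, 10)]
demo_labels = label_steps(demo_cluster)
```

Here the first three steps are labeled fixational and the last step, which ends far outside the circle, is not.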

References

Neisser, U. Cognitive Psychology (Appleton-Century-Crofts, Oxford, 1967).

Kintsch, W., Miller, J. R. & Polson, P. G. Method and Tactics in Cognitive Science (L. Erlbaum Associates, Hillsdale, 1984).

Heyes, C. M. & Huber, L. The Evolution of Cognition (MIT Press, Cambridge, 2000).

Javal, E. Physiologie de la Lecture et de L’écriture (Cambridge University Press, Cambridge, 2010).

Inhoff, A. W. & Radach, R. Definition and computation of oculomotor measures in the study of cognitive processes. In Underwood G. D. M. (Ed.) Eye Guidance in Reading and Scene Perception (Elsevier, Oxford, 1998).

Yarbus, A. L. Eye Movements and Vision (Plenum Press, New York, 1967).

Liversedge, S. P. & Findlay, J. M. Saccadic eye movements and cognition. Trends Cogn. Sci. 4, 6–14 (2000).

Treisman, A. M. & Gelade, G. A feature-integration theory of attention. Cogn. Psy. 12, 97–136 (1980).

Stanovich, K. E. & West, R. F. Individual differences in reasoning: Implications for the rationality debate? Behav. Brain Sci. 23, 645–665 (2000).

Kahneman, D. A perspective on judgment and choice: Mapping bounded rationality. Am. Psychol. 58, 697–720 (2003).

Quinlan, P. T. Visual Feature Theory: Past, Present and Future. Psychol. Bull. 129, 643–673 (2003).

Treisman, A. & Sato, S. Conjunction search revisited. J. Exp. Psychol. Human. 16 (1990).

Findlay, J. M. & Gilchrist, L. D. Eye Guidance and visual search. In Underwood G. D. M. (Ed.) Eye Guidance in Reading and Scene Perception (Elsevier, Oxford, 1998).

Anstis, S. M. A chart demonstrating variations in acuity with retinal position. Vision Res. 14, 589–592 (1974).

Hoffman, D. & Subramaniam, B. The role of visual attention in saccadic eye movements. Atten. Percept. Psychol. 57, 787–795 (1995).

Viviani, P. & Swensson, R. G. Saccadic eye movements to peripherally discriminated visual targets. J. Exp. Psychol. Human 8, 113–126 (1982).

Findlay, J. M. Eye movements and peripheral vision. Optometry Vision Sci. 72, 461–466 (1995).

Findlay, J. M. & Walker, R. How are saccades generated? Behav. Brain Sci. 22, 706–713 (1999).

Rolfs, M. Microsaccades: Small steps on a long way. Vision Res. 49, 2415–2441 (2009).

Engbert, R. & Kliegl, R. Microsaccades Keep the Eyes’ Balance During Fixation. Psychol. Sci. 15, 431–436 (2004).

Engbert, R. & Mergenthaler, K. Microsaccades are triggered by low retinal image slip. P. Natl. Acad. Sci. USA 103, 7192–7197 (2006).

Engbert, R., Mergenthaler, K., Sinn, P. & Pikovsky, A. An integrated model of fixational eye movements and microsaccades. P. Natl. Acad. Sci. USA 108, E765–E770 (2011).

Crutchfield, J. P. Dynamical Embodiments of Computation in Cognitive Processes. Behav. Brain Sci. 21, 635–637 (1998).

Matin, L., Matin, E. & Pearce, D. G. Eye movements in the dark during the attempt to maintain a prior fixation position. Vision Res. 10, 837–857 (1970).

Itti, L., Koch, C. & Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE T. Pattern. Anal. 20, 1254–1259 (1998).

Brockmann, D. & Geisel, T. The ecology of gaze shifts. Neurocomputing 2, 643–650 (1999).

Parkhurst, D., Law, K. & Niebur, E. Modeling the role of salience in the allocation of overt visual attention. Vision Res. 42, 107–123 (2002).

Boccignone, G. & Ferraro, M. Modelling gaze shift as a constrained random walk. Physica A 331, 207–218 (2004).

Holden, J. G., Van Orden, G. C. & Turvey, M. T. Dispersion of response times reveals cognitive dynamics. Psychol. Rev. 116, 318–342 (2009).

Handford, M. Where’s Wally? The Wonder Book (Walker Books, London, 1997).

Stephen, D. G., Mirman, D., Magnuson, J. S. & Dixon, J. A. Lévy-like diffusion in eye movements during spoken-language comprehension. Phys. Rev. E 79, 056114 (2009).

Bogartz, R. S. & Staub, A. Gaze step distributions reflect fixations and saccades: A comment on. Vision Res. 10, 837–857 (2012).

Olsson, P. Real-time and offline filters for eye tracking, Master thesis, Kungliga Tekniska högskolan. (2007).

Engbert, R. & Kliegl, R. Microsaccades uncover the orientation of covert attention. Vision Res. 43, 1035–1045 (2003).

Sims, D. W., Righton, D. & Pitchford, J. W. Minimizing errors in identifying Lévy flight behaviour of organisms. J. Anim. Ecol. 76, 222–229 (2007).

West, B. J. & Schlesinger, M. F. On the ubiquity of 1/f noise. Int. J. Mod. Phys. B 3, 795–819 (1989).

Stephen, D. G. & Mirman, D. Interactions dominate the dynamics of visual cognition. Cognition 115, 154–165 (2010).

Aks, D. J., Zelinsky, G. J. & Sprott, J. C. Memory across eye-movements: 1/f dynamic in visual search. Nonlinear Dynam. Psychol. Lif. Sci. 6, 1–25 (2002).

Van Orden, G. C., Holden, J. G. & Turvey, M. T. Self-organization of cognitive performance. J. Exp. Psychol. Gen. 132, 331–350 (2003).

Shinde, D. P., Mehta, A. & Mishra, R. K. Searching and fixating: Scale-invariance vs. characteristic timescales in attentional processes. Europhys. Lett. 94, 68001 (2011).

Viswanathan, G. M. et al. Optimizing the success of random searches. Nature 401, 911–914 (1999).

Snider, J. Optimal random search for a single hidden target. Phys. Rev. E 83, 011105 (2011).

Bénichou, O., Loverdo, C., Moreau, M. & Voituriez, R. Intermittent search strategies. Rev. Mod. Phys. 83, 81–129 (2011).

Najemnik, J. & Geisler, W. S. Optimal eye movement strategies in visual search. Nature 434, 381–391 (2005).

Felsenstein, J. Confidence-limits on phylogenies - an approach using the bootstrap. Evolution 39, 783–791 (1985).

Acknowledgements

We thank the Brazilian Agencies CNPq, CAPES, FUNCAP and FINEP, the FUNCAP/CNPq Pronex grant and the National Institute of Science and Technology for Complex Systems in Brazil for financial support.

Author information

Contributions

All authors contributed equally to the paper.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/3.0/

About this article

Cite this article

Credidio, H., Teixeira, E., Reis, S. et al. Statistical patterns of visual search for hidden objects. Sci Rep 2, 920 (2012). https://doi.org/10.1038/srep00920

DOI: https://doi.org/10.1038/srep00920
