Abstract

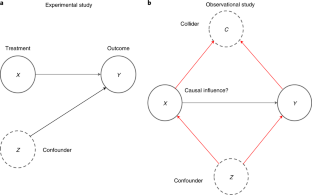

Estimating causality from observational data is essential in many data science questions but can be a challenging task. Here we review approaches to causality that are popular in econometrics and that exploit (quasi) random variation in existing data, called quasi-experiments, and show how they can be combined with machine learning to answer causal questions within typical data science settings. We also highlight how data scientists can help advance these methods to bring causal estimation to high-dimensional data from medicine, industry and society.

Code availability

We provide interactive widgets of Figs. 2–4 in a Jupyter Notebook hosted in a public GitHub repository (https://github.com/tliu526/causal-data-science-perspective) and served through Binder (see link in the GitHub repository).

Acknowledgements

We thank R. Ladhania and B. Lansdell for their comments and suggestions on this work. We acknowledge support from National Institutes of Health grant R01-EB028162. T.L. is supported by National Institute of Mental Health grant R01-MH111610.

Author information

Contributions

T.L. helped write and prepare the manuscript. L.U. and K.K. jointly supervised this work and helped write the manuscript. All authors discussed the structure and direction of the manuscript throughout its development.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information: Fernando Chirigati was the primary editor on this Perspective and managed its editorial process and peer review in collaboration with the rest of the editorial team. Nature Computational Science thanks Jesper Tegnér and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Liu, T., Ungar, L. & Kording, K. Quantifying causality in data science with quasi-experiments. Nat Comput Sci 1, 24–32 (2021). https://doi.org/10.1038/s43588-020-00005-8

DOI: https://doi.org/10.1038/s43588-020-00005-8
