Abstract

Machine learning is playing an increasing role in the discovery of new materials and may also facilitate the search for optimum growth conditions for crystals and thin films. Here, we perform kinetic Monte-Carlo simulations of sub-monolayer growth. We consider a generic homoepitaxial growth scenario that covers a wide range of conditions with different diffusion barriers (0.4–0.55 eV) and lateral binding energies (0.1–0.4 eV). These simulations are used as a training data set for a convolutional neural network that can predict diffusion barriers and binding energies. Specifically, a single Monte-Carlo image of the morphology is sufficient to determine the energy barriers with an accuracy of approximately 10 meV and the neural network is tolerant to images with noise and lower than atomic-scale resolution. We believe this new machine learning method will be useful for fundamental studies of growth kinetics and growth optimization through better knowledge of microscopic parameters.

Similar content being viewed by others

Introduction

Data-driven materials science makes use of machine-learning methods to accelerate the discovery and design of novel materials with desired properties. In recent years, there has been significant progress in predicting stable crystal structures1,2,3,4,5,6 and finding promising compound materials through combinatorial approaches using elements of the periodic table7,8,9. Beyond this, also materials properties such as bandgaps10, or thermoelectric efficiency11,12,13 have been predicted by using data from crystal structure databases, high-throughput experiments14, and material-modeling databases such as the Novel Materials Discovery (NOMAD) repository15. These works demonstrate the power of machine learning for materials discovery and exploration. So far, however, most studies were concerned with equilibrium material properties only. Therefore, it is of great interest to see whether machine learning can also help to fabricate materials by rationalizing time-dependent nonequilibrium processes, such as nucleation and growth. In a few recent studies, machine learning has already been suggested in the spirit of a phenomenological optimization, e.g., to count the number of nucleation events and crystals16, as well as for phenomenological optimization of process parameters from real-time datasets17. These studies have indicated that machine-learning techniques can indeed help to handle the significant challenge of finding optimum growth conditions. This is particularly important in the multidimensional parameter space arising in the fabrication of thin films of an ever-increasing number of novel materials found by experiments or machine learning itself.

Controlled growth of crystalline thin films of novel materials is indeed a crucial ingredient for device applications and rapid prototyping of advanced materials. However, it is challenging to achieve the desired material quality as device applications have specific requirements regarding grain size and shape, defect densities, and film roughness. Equilibrium considerations of the surface- and interface energies lead to the simplified Bauer classification of epitaxial growth processes into layer-by-layer (Frank–van der Merwe), layer-plus-island (Stranski–Krastanov), or island (Vollmer–Weber) growth modes18. However, this equilibrium picture disregards the fact that growth is intrinsically a nonequilibrium phenomenon. On the atomic scale, thin-film growth kinetics is governed by a large number of stochastic processes that can occur in a myriad of local atomic environments. These include the arrival of adatoms (atoms, molecules, or colloids) from the evaporator or a chemical precursor gas, and different types of diffusion processes of adatoms on the crystal surface, such as “free” diffusion on a terrace, diffusion involving neighbors, or step-edge diffusion. Microscopically, surface diffusion corresponds to hopping of adatoms between neighboring adsorption sites on the crystal surface, with rates that crucially depend on the (free) energy landscape. Based on an Arrhenius-like ansatz for diffusion rates, the activation energies for hopping include, already in the simplest case19, a barrier for free diffusion, \({E}_{D}\), an effective interatomic bond strength to neighboring adatoms (sometimes called “binding energy”), \({E}_{B}\), and an Ehrlich–Schwoebel barrier \({E}_{S}\) for crossing a step-edge. More refined models involve a larger number of parameters pertaining to specific diffusion processes20,21. The complexity of growth stems from the fact that diffusion of adatoms is not independent of each other but highly correlated as they can form islands and crystal nuclei that hinder further movement22.

Modeling growth on an atomic level and understanding the energy landscape and kinetics is challenging due to the wide range of length- and timescales involved. One possibility to determine energy barriers is via atomistic nucleation theory, which predicts scaling relations, e.g., for the maximum of the island density \({n}_{x}\,\) as a function of the growth rate and substrate temperature23,24,25,26. Together with certain assumptions about the coverage where \({n}_{x}\,\) is maximum, one can then determine \({E}_{D}\) from an experimental Arrhenius plot of \({n}_{x}\) vs. inverse temperature. This is achieved by determining the slope in a temperature region where the critical island size \({i}^{\ast }\) is equal to one as illustrated in Fig. 1a. To this end, one needs to perform a series of experiments at different experimental growth conditions i.e., different temperatures and/or growth rates and then evaluate the maximum of \({n}_{x}\) using high-resolution techniques, such a scanning probe microscopy27,28, high-energy electron diffraction29, or X-ray scattering30. Despite this effort of isolating the influence of and determining \({E}_{D}\), the effective bond strength \({E}_{B}\) or, more generally, a set of energy parameters describing surface migration with different local atomistic environments is still unknown. Additional information is needed to estimate the dimer-binding energy associated to nearest-neighbor interactions. This information can for example come from a measurement of the threshold temperature for the onset of Ostwald ripening in a sample consisting mostly of dimers25. Alternatively, one can analyze distinct slope changes in the Arrhenius plot for higher temperatures where \({i}^{\ast }\) becomes larger than one22,31. Theoretically, all of these energy parameters can be obtained, in principle, from the potential energy landscape (assuming that entropic, i.e., vibrational contributions to the free energy can be neglected32,33), which can be calculated from density functional calculations in the ground state, and corresponding molecular dynamics simulations revealing the relevant processes. However, this is a rather elaborate procedure32,33. Another route to determine the relevant set of energy parameters involves a fitting procedure based on kinetic Monte-Carlo (KMC) simulations21,30. However, finding diffusion barriers (including effective energy parameters) such that the resulting KMC morphologies fit experimental morphologies not only for one but several growth conditions (e.g., several temperatures and deposition rates) are usually a laborious and time-consuming procedure. Therefore, the required set of parameters is only known for selected systems such as Au34, Ag35, Pt27,36, or fullerene \({{{{{{\rm{C}}}}}}}_{60}\)21,30.

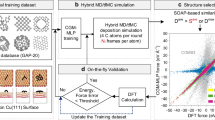

a Comparison of a conventional fitting procedure to extract surface migration barriers from surface growth experiments with (b) our proposed method of applying machine learning trained with simulated data to predict energy barriers. c Image recognition can determine the atomistic energy parameters \({E}_{D}\) (diffusion barrier) and \({E}_{B}\) (bond strength) from a single image of a thin film in the sub-monolayer growth regime. We use a deep learning CNN architecture very similar to the VGG16 network used in image classification tasks. Convolutional layers Conv1_1 to Conv5_3 use a 3 x 3 pixel filter kernel and the number of filters increases from 16 to 128 in deeper layers.

Given these challenges, we here propose a new strategy to determine microscopic growth parameters based on a combination of KMC and machine learning. Specifically, we demonstrate that a convolutional neural network (CNN) can predict the energetics of the underlying diffusion and binding events with high accuracy from a single microscopic image of a sub-monolayer film before the coalescence of islands (see Fig. 1b). This makes it unnecessary to perform a series of time-consuming experiments and simulations at different growth conditions. The CNN performs well also if noise is introduced in the images and the resolution is degraded to lower than atomic resolution. In this study, such images are obtained from KMC snapshots. However, our results indeed suggest that the analysis by CNNs is a comparatively simple way of extracting the parameters \({E}_{D}\) and \({E}_{B}\) from experimental data of scanning probe microscope images of thin-film growth in the sub-monolayer growth regime. We here deliberately focus, as a proof of concept, on a simplistic description involving only one (free) diffusion barrier and effective bond strength, but the approach can be generalized towards a more refined description. Moreover, even within the present description, we can obtain additional microscopic information by analyzing specific processes e.g. at step edges.

Results

CNN architecture

We use a standard CNN architecture as shown in Fig. 1c for predicting the diffusion energy barrier \({E}_{D}\) and effective bond strength \({E}_{B}\) from KMC images of atomistic sub-monolayer growth. Specifically, we use a deep VGG16-type network37, which we found to perform well in comparison to other neural network architectures (see “Methods”). The CNN uses an increasing number of 3 × 3 pixel filters in deeper convolutional layers. The CNN was first trained on noise-free images of sub-monolayer morphologies at varying \({E}_{D}\) and \({E}_{B}\), but with fixed growth conditions which are defined as follows: coverage \(\theta =0.15\), temperature \(T=273\) K and deposition (or growth) rate \(f=0.0167\;{{{{{\rm{monolayer}}}}}}/{{{{{\rm{s}}}}}}\). In a second step, training of the CNN is performed with imperfect input data. Specifically, noise was introduced such that in the KMC images a certain percentage of lattice sites is filled or emptied at random. Further, Gaussian smoothing with different standard deviations of the Gaussian filter kernel was used as detailed in “Methods” to simulate the limited image resolution of experimentally obtained scanning probe images (e.g., scanning tunneling microscopy (STM) or atomic force microscopy (AFM)).

KMC simulations of nucleation and growth

We employ KMC simulations to generate a dataset for training and validation of the CNN. In KMC simulations, nucleation and the subsequent growth of islands occur via the two stochastic processes of adatom deposition and surface diffusion. To this end, coarse-grained particles (i.e., internal rotational and vibrational degrees of freedom are neglected) arrive with an effective deposition rate \(f\) (given in monolayers/s) on randomly chosen sites \({{{{{\boldsymbol{i}}}}}}\,{{{{{\boldsymbol{=}}}}}}\,\left(m,n\right)\) of a discrete square lattice of lateral length \(L\), where \(m,n\in \left[1,L\right]\). Subsequently, adatoms explore their immediate vicinity via stochastic hopping processes to adjacent lattice sites, \({{{{{\boldsymbol{i}}}}}}\to {{{{{\boldsymbol{j}}}}}}\). At a given substrate temperature \(T\), these hopping rates are defined in an Arrhenius-like manner according to the Clarke–Vvedensky bond-counting Ansatz19

Depending on the local energetic environment of a particle at site \({{{{{\boldsymbol{i}}}}}}\), the activation energy \({E}_{A}=({E}_{D}+{n}_{i}{E}_{B}+{s}_{{ij}}{E}_{S})\) for a diffusion process \({{{{{\boldsymbol{i}}}}}}\to {{{{{\boldsymbol{j}}}}}}\), consists of up to three distinct energy barriers which, in interplay with the effective deposition rate \(f\) and the substrate temperature \(T\), eventually determine the resulting thin-film morphology. Here, \({E}_{D}\) represents the barrier for free adatom diffusion, and the parameter \({E}_{B}\) corresponds to the bond energy between neighboring particles in the lateral direction, with \({n}_{{{{{{\boldsymbol{i}}}}}}}\) being the number of in-plane bonds. For lateral diffusion, \({s}_{{{{{{\boldsymbol{ij}}}}}}}=0\), the barrier \({E}_{S}\), representing the Ehrlich–Schwoebel barrier, becomes irrelevant. In case of diffusional moves across step-edges, \({s}_{{{{{{\boldsymbol{ij}}}}}}}=1\), \({E}_{S}\) contributes to \({E}_{A}\). We apply periodic boundary conditions and the solid-on-solid condition, which forbids vacancies and overhanging particles. The surface coverage \({{{{{\rm{\theta }}}}}}\) corresponds to the number of deposited particles, which is the ratio of filled lattice sites to all lattice sites \({L}^{2}\) in the first monolayer. In our simulation, growth proceeds at a constant speed via \(\theta ={ft}\), with \(t\) being the simulation time. While the adatoms (i.e., atoms, molecules, or colloids) are characterized by the corresponding set of energy parameters, \({E}_{D}\), \({E}_{B}\) and \({E}_{S}\), the attempt frequency is set to \(2{k}_{b}T/h\), as given in Eq. (1). Here, \({k}_{b}\) represents the Boltzmann constant, while \(h\) is the Planck constant. We perform simulations on lattices with \(L=200\) until a coverage of \(\theta =0.15\), that is 15% surface coverage is reached. In this study, we do not consider the Ehrlich–Schwoebel barrier, i.e., \({E}_{S}=0\) eV. The exact value of \({E}_{S}\) is not critical for the morphology, because we investigate the low coverage, sub-monolayer regime and we have observed no second layer nucleation also for \({E}_{S}=0.1\,{{{{{{\mathrm{eV}}}}}}}\).

Our choice for the parameter range for \({E}_{D}\) and \({E}_{B}\) is driven by two considerations. First, a broad range of real atomic and molecular systems fall into the range of diffusion energies \({E}_{D}\) and effective binding energies \({E}_{B}\) that we have chosen for our KMC simulations21,27,30. Second, we chose the values for \({E}_{D}\) and \({E}_{B}\) such that, at the given substrate temperature, we observe a large variability in the resulting cluster shapes and islands densities. The specific lattice geometry (square lattice) is chosen for simplicity, but our approach can easily be generalized to other geometries, e.g., triangular or honeycomb lattices. Within the simulation range of the diffusion and binding energies, 0.4 eV \(\le\; {E}_{D}\;\le\) 0.55 eV and 0.1 eV \(\le\; {E}_{B}\;\le\) 0.4 eV, strongly different surface morphologies emerge in the KMC simulations. Some trends can be identified in Fig. 2a. For increasing \({E}_{D}\), molecular diffusion is hindered and adatoms are less likely to diffuse to an existing nucleus so that more islands nucleate on the substrate. Similarly, an increase in \({E}_{B}\) leads to increased nucleation and higher island density. Different combinations of \({E}_{D}\) and \({E}_{B}\) then result in varying island shapes ranging from compact rounded to rectangular and dendritic islands with different fractal dimensions.

a Surface morphologies obtained from KMC simulations of sub-monolayer growth for different values of \({E}_{D}\) and \({E}_{B}\) at fixed adatom deposition rate \(f=0.0167\) monolayer/s, temperature \(T=273\) K, and coverage \(\theta =0.15\), result in a wide range of island sizes, island densities, and compact or dendritic island shapes. The images shown have added noise and smoothing, i.e., lowered resolution, to mimic surface images obtained from scanning probe microscopy experiments. The scale corresponds to 100 × 200 lattice sites, that is roughly 50 × 100 nm image sizes for typical lattice constants in experiments. b Histograms of the probability of single-particle processes occurring on the lattice at \({E}_{D}=0.425\) eV and three different values for \({E}_{B}\). \({n}_{i}\) is the number of lateral bonds of particles at site \(i\) before diffusion to site \(j\), and \({n}_{j}\) is the number of bonds at site \(j\) after the diffusion process.

We note that besides the island distribution and shapes, the combination of \({E}_{D}\) and \({E}_{B}\) also controls the dominant microscopic single-particle processes that occur on the lattice. This is illustrated in Fig. 2b where we show the relative probability of single-particle diffusion events. These were calculated by monitoring the involved changes of atomistic neighborhood for a fixed \({E}_{D}=0.425\) eV and three distinct values of \({E}_{B}\,\) from 0.1 to 0.4 eV. In all histograms, diffusion of free particles as the most prominent process is neglected. At the lowest bond strength parameter of \({E}_{B}=0.1\) eV, the most frequent processes are edge diffusion, edge detachment as well as kink-detachment processes. In contrast, particles in kink positions become essentially immobile at \({E}_{B}=0.25\) eV, leading to a vanishing probability of kink processes. As \({E}_{B}\) is further increased to 0.4 eV, free attachment processes are by far the most probable ones, while there is only a very small probability for edge processes, that is, particles have little chance to diffuse along or detach from cluster boundary sites. A similar trend is observed when increasing \({E}_{D}\) at fixed \({E}_{B}\) (not shown). We conclude that even within the present, simplified picture, we can obtain microscopic information beyond the parameters of \({E}_{D}\) and \({E}_{B}\) by analyzing the actual diffusion processes corresponding to these parameters.

CNN training and prediction performance

The training dataset consists of simulated images of surface growth (as shown in Fig. 2a), which reveals a wide range of island sizes and densities. For training of the CNN, the KMC images were altered through varying amounts of random noise and Gaussian smoothing (see “Methods”). For example, the training images of the surface morphology in Fig. 2a contain background noise as random pixels are flipped for 5% of the pixels. Further, smoothing via convolution with a two-dimensional (2D) Gaussian with one pixel (i.e., one lattice constant) standard deviation has been applied.

We tested the performance of the CNN after the training procedure with a separate test dataset consisting of 1800 individual KMC simulations (with the same fixed growth conditions as used for training) that were not part of the training dataset. That means, for each “true” value of \({E}_{D}\) and \({E}_{B}\) used in the KMC simulations, the trained CNN predicts these energy barriers. The predictions of the energy barriers, \({E}_{D}\) and \({E}_{B}\), are shown in Fig. 3. As can be seen, the plot of the predicted diffusion barrier \({E}_{D}\) vs. the true \({E}_{D}\) of the KMC simulation (in Fig. 3a) shows that, on average, the values predicted by the CNN (black line) are very close to the true values (diagonal dashed line). To better understand the deviation of individual \({E}_{D}\) predictions (blue dots), Fig. 3b shows a density map of the absolute error of \({E}_{D}\) predictions for various true \({E}_{D}\) and true \({E}_{B}\) values. The error is fairly low across the whole density map with a mean prediction error for \({E}_{D}\) of 4.5 meV.

The CNN determination of the diffusion barrier \({E}_{D}\) in (a) and binding energy \({E}_{B}\) in (c) follow closely the true \({E}_{D}\) and \({E}_{B}\) values (predictions: blue dots, mean value: black line, true values: diagonal dashed line). b The absolute prediction error of \({E}_{D}\) is low across the whole parameter range with a mean deviation of 4.5 meV. d The mean \({E}_{B}\) error across the whole parameter space is 9.1 meV, however, the \({E}_{B}\) predictions have larger errors for large true \({E}_{B}\) and \({E}_{D}\) values where fractal islands become very small.

Similarly, predicting \({E}_{B}\) from the test dataset of sub-monolayer images works well as shown in Fig. 3c, since the mean predicted value (black line) is close to the true binding energies \({E}_{B}\) (dashed diagonal line) and the average prediction error of \({E}_{B}=\) 9.1 meV is low across the whole parameter range (see Fig. 3d). While the error in \({E}_{B}\) is generally low over most of the \({E}_{D}\)–\({E}_{B}\) range, some deviations occur for large \({E}_{D}\) and \({E}_{B}\) (area marked with a dashed line in Fig. 3d). In this small parameter region, the CNN does not predict the correct \({E}_{B}\) value but tends to predict a median value. This may be a consequence of the low variation in island densities in that parameter range, and the fact that it is harder to distinguish dendritic or compact island shapes from each other for very small islands as can be seen in the top right corner in Fig. 2a.

Convolutional neural network performance with imperfect data

We also studied the prediction accuracy of the CNN for noisy data with added salt and pepper noise and data with a limited resolution where smoothing has been applied as shown in Fig. 4a, b. Here, our goal was to investigate whether image recognition may, in principle, be applicable to imperfect experimental images such as e.g., STM or AFM images. To this end, we plot the coefficient of determination R2, which is defined as 100% for perfectly accurate predictions and 0% when the accuracy corresponds to the null hypothesis. This null hypothesis is \({E}_{D}=0.475\) eV and \({E}_{B}=0.25\) eV, that is the mid-range values of the parameter space of the investigated diffusion and binding energies. The R2 measure of the accuracy is better suited than a percentage or absolute error, because indeed for noisy images the CNN tends to make wrong predictions close to the null hypothesis. The R2 values have been calculated for the test dataset across the full range of \({E}_{D}\) and \({E}_{B}\) values for an increasing noise level. The results show a surprising tolerance of the CNN performance to noise. Even for 60% of the pixels replaced by noise, the CNN still has an R2 value above 90%. Similarly, images with lower than atomic resolution still can be used for meaningful predictions, as shown in Fig. 4b where image resolution has been degraded by smoothing through convolution with a two-dimensional Gaussian. If the standard deviation of the Gaussian is chosen to be three pixels, roughly corresponding to an image resolution of three lattice constants, the R2 value is above 90%. For a standard deviation above five pixels or lattice constants, however, the prediction quality suffers significantly. In both graphs of Fig. 4a, b, the predictions of \({E}_{D}\) are less sensitive to image degradation than the prediction of \({E}_{B}\). This may be because \({E}_{B}\) predictions depend more strongly on fine detail of dendritic islands, that get shadowed by noise or blurred more easily with degraded resolution. We note that the same CNN was used for all predictions with or without noise and with degraded or perfect resolution. In principle, a CNN which is trained for specific noise levels or a specific image resolution can outperform the more general CNN used here. Indeed, for applying a CNN to experimental data, the KMC training images should be modified such that it reflects as closely as possible the experimental resolution and noise types present in the STM or AFM images used as input.

Dependence of the quality of \({E}_{D}\) and \({E}_{B}\) prediction on image noise and resolution in the CNN input data. a The R2 coefficient of determination is a measure for prediction quality and exhibits a surprising tolerance for image noise, as up to 60% pixels can be replaced with random values before predictions get significantly worse. b Reducing the resolution of images from single-pixel “atomic” resolution to a resolution of 3–5 pixels or lattice constants still yields acceptable prediction quality. The image series above the graphs serve to illustrate how increasing noise and decreasing resolution impact a single example image with 200 × 200 lattice sites in (a), zoom of 100 × 100 lattice sites in (b).

Discussion

Our work demonstrates how artificial intelligence methods can help to unravel microscopic details of nonequilibrium surface processes that are crucially important during thin-film growth. Our machine-learning-based approach of using a single image to extract the energy barriers involved in its formation is quintessentially different from well-established procedures which require information from several iteratively repeated laboratory experiment series at different growth conditions, e.g., different temperatures \(T\) and adatom deposition rates \(f\). The physical information that is gained from such measurement series allows one to calculate mesoscopic parameters, such as the island density \({n}_{x}\), from which the value of \({E}_{D}\) can be determined under certain conditions using scaling relations based on atomistic nucleation theory (see “Introduction” and Fig. 1a). Alternatively, the experimentally obtained value of \({n}_{x}\) can be used to start an elaborate fitting procedure employing KMC simulations to find the energy barriers that lead to island densities matching the experiment30. The present approach significantly facilitates the problem: it only requires a single image of the surface morphology at fixed growth conditions (defined by \(T\), \(f\), and \(\theta\)) in combination with an appropriately trained CNN to determine both, the values of \({E}_{D}\) and \({E}_{B}\). In this study, we performed numerical (KMC) “experiments” at \(T=273\) K, \(f=0.0167\) monolayer/s and \(\theta =0.15\) to generate, on the one hand, the training data for the CNN (i.e., images for a range of values of \({E}_{D}\) and \({E}_{B}\)) and, on the other hand, single images of surface morphologies which the trained CNN then analyses in terms of \({E}_{D}\) and \({E}_{B}\). We have demonstrated that this analysis works very well in terms of prediction of energy barriers, even in presence of strong noise and highly reduced resolution of the images, as shown in Fig. 4. Therefore, we are confident that a single STM or AFM image obtained in a real growth experiment (performed at fixed growth conditions) would be sufficient to estimate the values of \({E}_{D}\) and \({E}_{B}\), provided that the CNN was trained by appropriate KMC simulations.

Our method still requires many KMC simulation runs, as simulations with different energy barriers must be performed over a range of values typical for the class of materials under study, but the number of simulations needed may be reduced in the future. Preliminary investigations with a training dataset containing a sparser sampling of the \({E}_{D}\)–\({E}_{B}\) space indicate that the CNN is, to some extent, capable of interpolating in between \({E}_{D}\) and \({E}_{B}\) values of KMC simulations. The sparse training data was using only \(5\times 9=45\) energy combinations with a step size of 37.5 meV instead of the 637 energy combinations with a 12.5 meV step size used above. With this sparsely sampled training dataset, the validation dataset with the full 637 energy combinations could be predicted with a mean error of 8 meV for \({E}_{D}\) and 20 meV for \({E}_{B}\), that is two to four times lower than the sampling step. A sparser sampling can substantially reduce KMC simulation time as compared to the present analysis. This potential reduction of computational effort and the analysis of the necessary sampling density in different regions of the \({E}_{D}\)–\({E}_{B}\) space is a topic of further studies.

Once the values of \({E}_{D}\) and \({E}_{B}\) of a material are calibrated at the growth conditions at hand via the KMC-trained CNN, one can once again employ KMC simulations to predict the surface morphology at a deposition rate \(f\), temperature \(T\) or sub-monolayer coverage \({{{{{\rm{\theta }}}}}}\) different from the training growth conditions. Of course, such an investigation foots on the implicit assumption that the obtained energy barriers are transferable between, e.g., different temperatures or deposition rates. Given that the energy barriers in KMC are essentially effective, i.e., coarse-grained, quantities, the assumption of transferability can indeed be an issue as in any coarse-grained theoretical approach38,39. Whether the energy barriers can be transferred should therefore either be tested by comparison of KMC results and experiments at different growth conditions or, alternatively, the suitability of a KMC model for the material class under study must be previously established. Even though the precise \({E}_{D}\) and \({E}_{B}\) values may depend on the type of KMC algorithm21 and the choice of attempt frequencies, the above procedure of training and predicting with a certain KMC model will be self-consistent.

Taken together, predicting the deposition rate and temperature dependency of growth enables data-driven materials research as costly experimental growth parameter searches can be guided through faster KMC simulations. This becomes even more important as the dimensionality of the growth parameter space is extended beyond substrate temperature and adatom deposition rate. For example, in the popular technique of pulsed laser deposition40, the experimenter can use a parameter space with dimensions of temperature, growth rate, laser pulse duration for target sublimation, and laser dwell time where surface relaxation occurs. These two additional dimensions of the pulse duration and dwell time enable new possibilities e.g., for smoother film growth but make it much more complicated to sample the growth conditions for optimum values due to the “curse of dimensionality”.

We would like to mention that our method can be extended to different lattice symmetries, e.g., hexagonal, triangular, or rectangular substrates, and, in principle, also to other particle shapes, e.g., elongated organic molecules41,42,43. In the latter case, the underlying KMC algorithm clearly has to include additional processes (and thus, energy barriers) related to internal and orientational degrees of freedom. While the intentionally simple model used in this work may be sufficiently precise for a self-consistent description and prediction of energy parameters in many atomic systems, we are aware that even in these seemingly simple cases, additional microscopic processes such as diffusion along step-edges, particle exchange or the presence of grain boundaries can impact the resulting surface morphology. The presence of these processes may be identified in molecular dynamics simulations or studies based on density functional theory32,33. For example, additional surface processes have been included in KMC simulations of atomic systems such as Pt on Pt(111)27,44 or \({{{{{{\mathrm{C}}}}}}}_{60}\) on \({{{{{{\mathrm{C}}}}}}}_{60}\)21 to refine the morphology predictions for these systems. In this study, we have only obtained indirect information about the relevant processes by monitoring the occurring changes of the neighborhood at fixed \({E}_{D}\) and \({E}_{B}.\) For future research, it would be very interesting to investigate whether a CNN can precisely predict the refined energy parameters and rates of simulation setups that include more surface processes and energy parameters.

We also want to point out that the approach presented here can be extended to predict the Ehrlich–Schwoebel barrier. Indeed, we have performed first tests by training a CNN with images at higher coverages \(\theta\), where second layer nucleation has already set in. The results show that, after determining \({E}_{D}\) and \({E}_{B}\) at a sub-monolayer coverage of \(\theta =0.15\), the value of the interlayer diffusion barrier \({E}_{{ES}}\) can be predicted from a second image of the surface at a coverage of \(\theta =0.5\) with high accuracy that is comparable to the results for \({E}_{D}\) and \({E}_{B}\). Future work may investigate whether all three parameters can be predicted at the same time from a single image in a coverage regime where stable islands in the second layer are present. Other extensions of the current work may help to elucidate the black box nature of the artificial neural network. Interpretable machine learning using e.g., deep Taylor decomposition of neural networks would be interesting to find out more about the salient features in morphologies that are used for predictions by our neural network. Interpretability of neural networks together with other data analytics techniques such as a principal component analysis for images would help to find the most important “hidden variable”, especially if the KMC model is enlarged to include even more surface migration barriers.

In conclusion, machine-learning techniques such as CNNs represent a significant untapped potential for advanced data analysis in surface science and material engineering. This holds, in particular, for complex nonequilibrium processes such as nucleation and crystal growth where various microscopic processes with different activation energies strongly influence the resulting morphologies. Here, a nearly instantaneous method to extract the underlying kinetics and energetics of a growth process seems especially valuable. Encapsulating the knowledge about a wide range of energy parameters and possible surface morphologies in a CNN enables a novel and direct analysis of microscope images that circumvents the need for iteratively fitting KMC simulations to data at different growth conditions. Therefore, in light of the demonstrated tolerance of the CNN to noise and lower resolution images, we expect the here presented approach to be very applicable to experiments and to speed up the optimization of growth conditions for defect-free materials. This is particularly needed at the moment, as machine-learning algorithms also suggest an ever-increasing number of candidate materials that have to be grown and tested. Finally, we want to note that the method of using image recognition to predict nanoscale processes from microscopic morphology can potentially be extended beyond growth studies to research fields such as catalysis at surfaces.

Methods

Implementation details of the KMC simulation setup

In line with previous KMC studies19,30,45, we use the attempt rate \({{{{{{\rm{\nu }}}}}}}_{0}=2{k}_{b}T/h\), which is of the order of typical lattice vibrations, \(\sim \kern-3pt{10}^{12}-{10}^{13}\,1/{{{{{\mathrm{s}}}}}}\). After every step in the KMC simulations, where either a particle hops to a neighboring lattice site or a new particle gets adsorbed, the simulation time \(t\) is stochastically updated, \({t}^{{\prime} }=t+\Delta t\), with \(\Delta {t}=-{{{{{{\mathrm{ln}}}}}}}\left(r\right)/{d}_{{all}}\) being the time increment that is added after each simulation step. Here, \(r\in \left({{{{\mathrm{0,1}}}}}\right)\) is a uniform random number and \({d}_{all}={\sum }_{i}{\sum }_{j}({d}_{{{{{{\boldsymbol{ij}}}}}}}+f)\) represents the sum over all possible diffusion processes \({d}_{{{{{{\boldsymbol{ij}}}}}}}\) of adatoms in the topmost layer of the crystal plus the effective deposition rate \(f\) per lattice site. The surface height at lattice position \({{{{{\boldsymbol{i}}}}}}\) represents the number of stacked particles and takes integer values \({h}_{{{{{{\boldsymbol{i}}}}}}}\in {N}_{0}\), with \({h}_{{{{{{\boldsymbol{i}}}}}}}=0\) representing an empty site.

Generation of the dataset for training and validation of the CNN

We carried out multiple realizations for each of the 637 \(\left({E}_{D}-{E}_{B}\right)\) pairs at a substrate temperature of \(T=273\) K and an adsorption rate of \(f=0.0167\) monolayer/s on square lattices of lateral length \(L=200\) sites. Therefore, the unaltered KMC surface images consist of \(200\times 200=40000\) pixels. All the surface images used in this work corresponds to a coverage of \({{{{{\rm{\theta }}}}}}=0.15\). We made sure that training and validation data are strictly separated from each other, irrespective of the data augmentation via rotations and shifting. We used five to six individual realizations of each combination of \({E}_{D}\) and \({E}_{B}\) for training and three for validation.

Machine learning

We employ two-dimensional convolutional neural networks (CNNs) as commonly used for image recognition to predict energy barriers from images of sub-monolayer growth in this work (see Fig. 1c). Our CNN architecture is a smaller version of the standard VGG16 CNN used for image recognition. It has also 16 layers but fewer filters in the convolutional layers and fewer neurons in the last, fully connected layers. The reduced width of the network has proven to be sufficient because the prediction errors are very similar to the standard VGG16 and even deeper VGG19 models37, while the narrower VGG16 model trains faster than those larger networks. A comparison with an AlexNet inspired CNN, which is not as deep as VGG16 showed larger errors so that the deeper networks were used.

Our variant of the VGG16 network was implemented in TensorFlow and trained in two stages using only the training KMC dataset and leaving the test dataset for later performance checks. In a first step, the CNN was trained for 500 epochs (~55,000 augmented images per epoch) on a GPU (Nvidia GeForce RTX 2080) with noise-free, full-resolution data. Each batch of images was augmented on the fly by rotating by a random multiple of 90° and shifting the images by a random number of pixels in x and y directions. Due to the periodic boundary conditions in the KMC simulations, these random shifts with image wrapping result in images without any border effects. This training achieved mean absolute percentage errors of 0.8 % in \({E}_{D}\) and 4.0 % in \({E}_{B}\) without any signs of overfitting in the 30% of the training dataset aside for validation. Using the investigate toolkit46 for interpretable machine learning, we found that the rim of islands is important for predictions but sometimes also individual pixels representing unbound, freely diffusing adatoms had a significant role in coming to a prediction. As individual adatoms are very difficult to observe in experiments at higher temperatures due to their high mobility, predictions making use of adatom densities are undesirable. Consequently, we introduced noise terms that stochastically add adatoms and performed a second training step.

We performed a second training step with noisy and blurred images to reduce the effect of the free adatom density on predictions and to check the ability to make predictions from experimental images with noise and limited lateral resolution. We used on the fly image degradation with salt and pepper noise and gaussian blurring. Salt and pepper noise randomly flips a certain percentage of pixels, that is it generates “adatom” noise from empty lattice sites and holes within islands (see Fig. 4a). Through this generation of a random number of adatoms in every image, we reduce the correlation of adatom density with certain energy barrier regimes. Note that for a specific experimental technique, other noise contributions such as background noise, or e.g., line-scan artifacts as found in scanning probe techniques may be added during the training for optimum performance on experimental data. As we do not focus on a specific experimental technique, we added no such specific noise terms. After generating noise, a Gaussian smoothing filter was used to simulate a limited, sub-atomic resolution that some experimental techniques may have. The Gaussian smoothing was implemented in Python using multidimensional image processing (scipy.ndimage) with the gaussian_filter function. This function corresponds to a convolution of the image with a Gaussian kernel whose standard deviation is varied between 0 and 20 pixels, corresponding to a blurring of 0–20 lattice constants. Both the percentage of pixels affected by salt and pepper noise and the width of the Gaussian blur were randomly set for each on-the-fly augmentation. Large values of noise and blurring were exponentially less likely so that images with higher information content are still emphasized in training, but the CNN also learns to handle degraded images. The training was continued for another 500 epochs resulting in a validation performance of 1% error in \({E}_{D}\) predictions and 4.5% error in \({E}_{B}\) predictions. As expected, the network which is trained to accept a wide range of clean and noisy images performs slightly worse on clean images compared to a network only trained for clean data. While the CNN used here is trained to accept a wide range of noise levels and resolution settings, for a given experiment even higher CNN performance may be achieved by training with synthetic data for the specific noise and resolution of the experiment. In the context of this work, our training procedure however enables direct comparisons of the performance of one CNN with different noise and resolution settings.

Data availability

The complete set of KMC images of sub-monolayer growth is available in Supplementary Data 1 so that the results can be reproduced.

Code availability

The computer codes used for the numerical calculations and CNN predictions are available from the corresponding author upon request.

References

Lookman, T., Eidenbenz, S., Alexander, F. & Barnes, C. (Eds.) Materials Discovery and Design by Means of Data Science and Optimal Learning In Springer Series in Materials Science Vol. 280 (Springer, 2018).

Ryan, K., Lengyel, J. & Shatruk, M. Crystal structure prediction via deep learning. J. Am. Chem. Soc. 140, 10158–10168 (2018).

Graser, J., Kauwe, S. K. & Sparks, T. D. Machine learning and energy minimization approaches for crystal structure predictions: a review and new horizons. Chem. Mater. 30, 3601–3612 (2018).

Seko, A., Hayashi, H., Nakayama, K., Takahashi, A. & Tanaka, I. Representation of compounds for machine-learning prediction of physical properties. Phys. Rev. B 95, 1–11 (2017).

Xie, T. & Grossman, J. C. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties. Phys. Rev. Lett. 120, 145301 (2018).

Zheng, X., Zheng, P. & Zhang, R. Z. Machine learning material properties from the periodic table using convolutional neural networks. Chem. Sci. 9, 8426–8432 (2018).

Oliynyk, A. O., Adutwum, L. A., Harynuk, J. J. & Mar, A. Classifying crystal structures of binary compounds AB through cluster resolution feature selection and support vector machine analysis. Chem. Mater. 28, 6672–6681 (2016).

Faber, F. A., Lindmaa, A., Von Lilienfeld, O. A. & Armiento, R. Machine learning energies of 2 million elpasolite (ABC2D6) crystals. Phys. Rev. Lett. 117, 2–7 (2016).

Balachandran, P. V., Kowalski, B., Sehirlioglu, A. & Lookman, T. Experimental search for high-temperature ferroelectric perovskites guided by two-step machine learning. Nat. Commun. 9, 1–9 (2018).

Isayev, O. et al. Universal fragment descriptors for predicting properties of inorganic crystals. Nat. Commun. 8, 1–12 (2017).

Carrete, J., Li, W., Mingo, N., Wang, S. & Curtarolo, S. Finding unprecedentedly low-thermal-conductivity half-heusler semiconductors via high-throughput materials modeling. Phys. Rev. X 4, 1–9 (2014).

Ju, S. et al. Designing nanostructures for phonon transport via Bayesian optimization. Phys. Rev. X 7, 1–10 (2017).

Gaultois, M. W. et al. Perspective: web-based machine learning models for real-time screening of thermoelectric materials properties. APL Mater. 4, 053213 (2016).

Curtarolo, S. et al. The high-throughput highway to computational materials design. Nat. Mater. 12, 191–201 (2013).

Draxl, C. & Scheffler, M. NOMAD: the FAIR concept for big data-driven materials science. MRS Bull. 43, 676–682 (2018).

Liotti, E. et al. Crystal nucleation in metallic alloys using x-ray radiography and machine learning. Sci. Adv. 4, 1–10 (2018).

Dropka, N. & Holena, M. Application of artificial neural networks in crystal growth of electronic and opto-electronic materials. Crystals 10, 1–17 (2020).

Venables, J. A. Introduction to Surface and Thin Film Processes (Cambridge University Press, 2000).

Clarke, S. & Vvedensky, D. D. Origin of reflection high-energy electron-diffraction intensity oscillations during molecular-beam epitaxy: a computational modeling approach. Phys. Rev. Lett. 58, 2235–2238 (1987).

Lü, B., Almyras, G. A., Gervilla, V., Greene, J. E. & Sarakinos, K. Formation and morphological evolution of self-similar 3D nanostructures on weakly interacting substrates. Phys. Rev. Mater. 2, 63401 (2018).

Janke, W. & Speck, T. Modeling of epitaxial film growth of C60 revisited. Phys. Rev. B 101, 1–11 (2020).

Michely, T. & Krug, J. Islands, Mounds and Atoms In Springer Series in Surface Sciences, Vol. 42 (Springer, Berlin, 2004).

Zhang, Z. & Lagally, M. G. Atomistic processes in the early stages of thin-film growth. Science 276, 377 LP–377383 (1997).

Brune, H., Röder, H., Boragno, C. & Kern, K. Microscopic view of nucleation on surfaces. Phys. Rev. Lett. 73, 1955–1958 (1994).

Brune, H. Microscopic view of epitaxial metal growth: nucleation and aggregation. Surf. Sci. Rep. 31, 125–229 (1998).

Einax, M., Dieterich, W. & Maass, P. Colloquium: cluster growth on surfaces: densities, size distributions, and morphologies. Rev. Mod. Phys. 85, 921–939 (2013).

Hohage, M. et al. Atomic processes in low temperature Pt-dendrite growth on Pt(111). Phys. Rev. Lett. 76, 2366–2369 (1996).

N’Diaye, A., Bleikamp, S., Feibelman, P. J. & Michely, T. Two dimensional Ir-cluster lattices on Moiré of graphene with Ir(111). Cond-mat 1, 609286 (2006).

Neave, J. H., Joyce, B. A., Dobson, P. J. & Norton, N. Dynamics of film growth of GaAs by MBE from Rheed observations. Appl. Phys. A Solids Surfaces 31, 1–8 (1983).

Bommel, S. et al. Unravelling the multilayer growth of the fullerene C 60 in real time. Nat. Commun. 5, 1–8 (2014).

Einax, M., Dieterich, W. & Maass, P. Binding energies between unlike atoms determined from island densities. J. Appl. Phys. 105, 54312 (2009).

Gervilla, V., Zarshenas, M., Sangiovanni, D. G. & Sarakinos, K. Anomalous versus normal room-temperature diffusion of metal adatoms on graphene. J. Phys. Chem. Lett. 11, 8930–8936 (2020).

Sangiovanni, D. G. Copper adatom, admolecule transport, and island nucleation on TiN(001) via ab initio molecular dynamics. Appl. Surf. Sci. 450, 180–189 (2018).

Brune, H. et al. Effect of strain on surface diffusion and nucleation. Phys. Rev. B 52, R14380 (1995).

Teichert, C., Ammer, C. & Klaua, M. Step formation on the ion-bombarded Ag(100) surface studied by LEED and Monte Carlo simulations. Phys. Stat. Sol. (a) 146, 223–242 (1994).

Rosenfeld, G., Poelsema, B. & Comsa, G. The concept of two mobilities in homoepitaxial growth. J. Cryst. Growth 151, 230–233 (1995).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556 (2014).

Rosenberger, D. & van der Vegt, N. F. A. Addressing the temperature transferability of structure based coarse graining models. Phys. Chem. Chem. Phys. 20, 6617–6628 (2018).

Heinemann, T., Palczynski, K., Dzubiella, J. & Klapp, S. H. L. Angle-resolved effective potentials for disk-shaped molecules. J. Chem. Phys. 141, 214110 (2014).

Lowndes, D. H., Geohegan, D. B., Puretzky, A. A., Norton, D. P. & Rouleau, C. M. Synthesis of novel thin-film materials by pulsed laser deposition. Science 273, 898 LP–898903 (1996).

Meyer zu Heringdorf, F. J., Reuter, M. C. & Tromp, R. M. Growth dynamics of pentacene thin films. Nature 412, 517–520 (2001).

Kleppmann, N. & Klapp, S. H. L. Nonequilibrium surface growth in a hybrid inorganic-organic system. Phys. Rev. B 94, 1–15 (2016).

Dixit, M., Schilling, T. & Oettel, M. Growth of films with anisotropic particles: simulations and rate equations. J. Chem. Phys. 149, 064903 (2018).

Esch, S., Breeman, M., Morgenstern, M., Michely, T. & Comsa, G. Nucleation and morphology of homoepitaxial Pt(111)-films grown with ion beam assisted deposition. Surf. Sci. 365, 187–204 (1996).

Martynec, T. & Klapp, S. H. L. Modeling of nonequilibrium surface growth by a limited-mobility model with distributed diffusion length. Phys. Rev. E 100, 33307 (2019).

Alber, M. et al. iNNvestigate neural networks! J. Mach. Learn. Res. 20, 1–8 (2019).

Acknowledgements

The authors acknowledge the financial support by the University of Graz. C.K. acknowledges the financial support by the PhD-program of Bundesanstalt für Materialforschung und -prüfung (BAM).

Author information

Authors and Affiliations

Contributions

S.K. and T.M. made a concept of the study, T.M. performed KMC simulations, S.K. and C.K. created the CNN, S.K., T.M., and S.H.L.K. drafted the manuscript, while all authors made substantial contributions to the interpretation and revision of the work.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Communications Materials thanks Martin Oettel and the other, anonymous, reviewers for their contribution to the peer review of this work. Primary Handling Editor: John Plummer. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Martynec, T., Karapanagiotis, C., Klapp, S.H.L. et al. Machine learning predictions of surface migration barriers in nucleation and non-equilibrium growth. Commun Mater 2, 90 (2021). https://doi.org/10.1038/s43246-021-00188-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s43246-021-00188-1