Abstract

The stochastic reaction network, in which chemical species evolve through a set of reactions, is widely used to model stochastic processes in physics, chemistry and biology. Characterizing the evolving joint probability distribution in the state space of species counts requires solving a system of ordinary differential equations, the chemical master equation, in which the size of the counting state space increases exponentially with the number of species. This makes stochastic reaction networks challenging to investigate. Here we propose a machine learning approach that uses a variational autoregressive network to solve the chemical master equation. The autoregressive network is trained with the policy gradient algorithm from the reinforcement learning framework, which does not require any data previously simulated by another method. In contrast with simulating single trajectories, this approach tracks the time evolution of the joint probability distribution, and supports direct sampling of configurations and computing their normalized joint probabilities. We apply the approach to representative examples in physics and biology, and demonstrate that it accurately generates the probability distribution over time. The variational autoregressive network exhibits plasticity in representing multimodal distributions, is compatible with conservation laws, handles time-dependent reaction rates and is efficient for high-dimensional reaction networks, while allowing a flexible upper count limit. The results suggest a general approach to studying stochastic reaction networks based on modern machine learning.
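The abstract compresses several mechanisms into a few sentences: an autoregressive factorization that gives normalized probabilities and direct samples, a time-discretized chemical master equation, dP(x, t)/dt = Σ_r [a_r(x − ν_r) P(x − ν_r, t) − a_r(x) P(x, t)], and a policy-gradient fit that needs no precomputed data. The sketch below shows one way these pieces could fit together on a toy problem; it is not the authors' PyTorch implementation. Every specific here is an assumption: a hypothetical two-species network with made-up rates; counts truncated to 0..N−1 by blocking reactions that would leave that range; softmax lookup tables (`TabularAR`) standing in for the neural network; a forward-Euler step Q = (I + dt·W)P; and a KL(P_θ || Q) objective minimized with the score-function (REINFORCE) estimator. All class and function names are hypothetical.

```python
import copy
import math
import random

# Hypothetical two-species network (rates chosen for illustration only):
#   0 -> A (K1),  A -> 0 (K2*nA),  A -> A + B (K3*nA),  B -> 0 (K4*nB)
N = 8  # assumed upper count limit: each count lies in 0..N-1
K1, K2, K3, K4 = 2.0, 1.0, 1.0, 0.5
REACTIONS = [  # (stoichiometric change nu, propensity a(nA, nB))
    ((1, 0), lambda a, b: K1),
    ((-1, 0), lambda a, b: K2 * a),
    ((0, 1), lambda a, b: K3 * a),
    ((0, -1), lambda a, b: K4 * b),
]


def in_box(a, b):
    return 0 <= a < N and 0 <= b < N


def propensity(x, r):
    """Propensity of reaction r at x; zero if the product would leave the box."""
    (da, db), f = REACTIONS[r]
    return f(*x) if in_box(x[0] + da, x[1] + db) else 0.0


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


class TabularAR:
    """P(nA, nB) = P(nA) * P(nB | nA); softmax tables stand in for a neural network."""

    def __init__(self, peak=5.0):
        # Start concentrated near the empty state (0, 0).
        self.za = [peak] + [0.0] * (N - 1)
        self.zb = [[peak] + [0.0] * (N - 1) for _ in range(N)]

    def prob(self, x):  # normalized by construction, no partition function needed
        a, b = x
        return softmax(self.za)[a] * softmax(self.zb[a])[b]

    def sample(self, rng):  # direct (ancestral) sampling, species by species
        a = rng.choices(range(N), weights=softmax(self.za))[0]
        b = rng.choices(range(N), weights=softmax(self.zb[a]))[0]
        return (a, b)


def euler_target(prev, x, dt):
    """Q(x) = [(I + dt*W) P_prev](x); needs P_prev only at x and its neighbours."""
    out = prev.prob(x) * (1.0 - dt * sum(propensity(x, r) for r in range(len(REACTIONS))))
    for r, ((da, db), _) in enumerate(REACTIONS):
        y = (x[0] - da, x[1] - db)
        if in_box(*y):
            out += dt * propensity(y, r) * prev.prob(y)
    return out


def train_step(model, prev, dt, rng, iters=50, batch=100, lr=0.5):
    """Minimize KL(P_model || Q) with the score-function (REINFORCE) gradient
    E_x[(log P(x) - log Q(x) - baseline) * grad log P(x)], x ~ P_model."""
    for _ in range(iters):
        xs = [model.sample(rng) for _ in range(batch)]
        rew = [math.log(model.prob(x)) - math.log(max(euler_target(prev, x, dt), 1e-300))
               for x in xs]
        base = sum(rew) / batch  # mean baseline reduces variance
        ga = [0.0] * N
        gb = [[0.0] * N for _ in range(N)]
        pa = softmax(model.za)
        for (a, b), r in zip(xs, rew):
            w = (r - base) / batch
            pb = softmax(model.zb[a])
            for j in range(N):  # d log softmax_k / d z_j = [j == k] - p_j
                ga[j] += w * (float(j == a) - pa[j])
                gb[a][j] += w * (float(j == b) - pb[j])
        for j in range(N):
            model.za[j] -= lr * ga[j]
            for i in range(N):
                model.zb[i][j] -= lr * gb[i][j]


def evolve(steps=3, dt=0.02, seed=0):
    """Advance the distribution one Euler step at a time, warm-starting each fit."""
    rng = random.Random(seed)
    model = TabularAR()
    for _ in range(steps):
        prev = copy.deepcopy(model)  # frozen copy representing P(t)
        train_step(model, prev, dt, rng)
    return model


if __name__ == "__main__":
    m = evolve(steps=3)
    mean_a = sum(a * m.prob((a, b)) for a in range(N) for b in range(N))
    print(f"<nA> after 3 steps: {mean_a:.3f}")
```

Because `euler_target` evaluates P only at x and the states one reaction away, the per-sample cost does not require enumerating the whole state space. The lookup tables, however, grow exponentially with the number of species, which is the cost a neural parametrization of the conditionals is meant to avoid.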

Data availability

The authors declare that the data supporting this study are available within the paper. Source data are provided with this paper.

Code availability

A PyTorch implementation of the algorithm can be found at GitHub (https://github.com/jamestang23/NNCME), Code Ocean (https://doi.org/10.24433/CO.9625043.v1; ref. 54) and Zenodo (https://doi.org/10.5281/zenodo.7623370; ref. 55).

References

Weber, M. F. & Frey, E. Master equations and the theory of stochastic path integrals. Rep. Prog. Phys. 80, 046601 (2017).

Gillespie, D. T. Stochastic simulation of chemical kinetics. Annu. Rev. Phys. Chem. 58, 35–55 (2007).

Ge, H., Qian, M. & Qian, H. Stochastic theory of nonequilibrium steady states. Part II: applications in chemical biophysics. Phys. Rep. 510, 87–118 (2012).

Elowitz, M. B., Levine, A. J., Siggia, E. D. & Swain, P. S. Stochastic gene expression in a single cell. Science 297, 1183–1186 (2002).

Blythe, R. A. & McKane, A. J. Stochastic models of evolution in genetics, ecology and linguistics. J. Stat. Mech. 2007, P07018 (2007).

Jafarpour, F., Biancalani, T. & Goldenfeld, N. Noise-induced mechanism for biological homochirality of early life self-replicators. Phys. Rev. Lett. 115, 158101 (2015).

Gardiner, C. W. Handbook of Stochastic Methods 3rd edn (Springer-Verlag, 2004).

Frank, F. C. On spontaneous asymmetric synthesis. Biochim. Biophys. Acta 11, 459–463 (1953).

Bressloff, P. C. Stochastic Processes in Cell Biology Vol. 41 (Springer, 2014).

Raj, A. & van Oudenaarden, A. Nature, nurture, or chance: stochastic gene expression and its consequences. Cell 135, 216–226 (2008).

van Kampen, N. G. Stochastic Processes in Physics and Chemistry (Elsevier, 2007).

Doob, J. L. Topics in the theory of Markoff chains. Trans. Am. Math. Soc. 52, 37–64 (1942).

Gillespie, D. T. A general method for numerically simulating the stochastic time evolution of coupled chemical reactions. J. Comput. Phys. 22, 403–434 (1976).

E, W., Li, T. & Vanden-Eijnden, E. Applied Stochastic Analysis Vol. 199 (American Mathematical Society, 2021).

Terebus, A., Liu, C. & Liang, J. Discrete and continuous models of probability flux of switching dynamics: uncovering stochastic oscillations in a toggle-switch system. J. Chem. Phys. 151, 185104 (2019).

Terebus, A., Manuchehrfar, F., Cao, Y. & Liang, J. Exact probability landscapes of stochastic phenotype switching in feed-forward loops: phase diagrams of multimodality. Front. Genet. 12, 645640 (2021).

Gillespie, D. T. The chemical Langevin equation. J. Chem. Phys. 113, 297–306 (2000).

Munsky, B. & Khammash, M. The finite state projection algorithm for the solution of the chemical master equation. J. Chem. Phys. 124, 044104 (2006).

Henzinger, T. A., Mateescu, M. & Wolf, V. in Computer Aided Verification (eds Bouajjani, A. et al.) 337–352 (Springer, 2009).

Cao, Y., Terebus, A. & Liang, J. Accurate chemical master equation solution using multi-finite buffers. Multiscale Model. Simul. 14, 923–963 (2016).

Cao, Y., Terebus, A. & Liang, J. State space truncation with quantified errors for accurate solutions to discrete chemical master equation. Bull. Math. Biol. 78, 617–661 (2016).

MacNamara, S., Burrage, K. & Sidje, R. B. Multiscale modeling of chemical kinetics via the master equation. Multiscale Model. Simul. 6, 1146–1168 (2008).

Kazeev, V., Khammash, M., Nip, M. & Schwab, C. Direct solution of the chemical master equation using quantized tensor trains. PLoS Comput. Biol. 10, e1003359 (2014).

Ion, I. G., Wildner, C., Loukrezis, D., Koeppl, H. & De Gersem, H. Tensor-train approximation of the chemical master equation and its application for parameter inference. J. Chem. Phys. 155, 034102 (2021).

Gupta, A., Schwab, C. & Khammash, M. DeepCME: a deep learning framework for computing solution statistics of the chemical master equation. PLoS Comput. Biol. 17, e1009623 (2021).

Mehta, P. et al. A high-bias, low-variance introduction to machine learning for physicists. Phys. Rep. 810, 1–124 (2019).

Carleo, G. et al. Machine learning and the physical sciences. Rev. Mod. Phys. 91, 045002 (2019).

Tang, Y. & Hoffmann, A. Quantifying information of intracellular signaling: progress with machine learning. Rep. Prog. Phys. 85, 086602 (2022).

Wu, D., Wang, L. & Zhang, P. Solving statistical mechanics using variational autoregressive networks. Phys. Rev. Lett. 122, 080602 (2019).

Hibat-Allah, M., Ganahl, M., Hayward, L. E., Melko, R. G. & Carrasquilla, J. Recurrent neural network wave functions. Phys. Rev. Res. 2, 023358 (2020).

Sharir, O., Levine, Y., Wies, N., Carleo, G. & Shashua, A. Deep autoregressive models for the efficient variational simulation of many-body quantum systems. Phys. Rev. Lett. 124, 020503 (2020).

Barrett, T. D., Malyshev, A. & Lvovsky, A. Autoregressive neural-network wavefunctions for ab initio quantum chemistry. Nat. Mach. Intell. 4, 351–358 (2022).

Luo, D., Chen, Z., Carrasquilla, J. & Clark, B. K. Autoregressive neural network for simulating open quantum systems via a probabilistic formulation. Phys. Rev. Lett. 128, 090501 (2022).

Carrasquilla, J. et al. Probabilistic simulation of quantum circuits using a deep-learning architecture. Phys. Rev. A 104, 032610 (2021).

Shin, J.-E. et al. Protein design and variant prediction using autoregressive generative models. Nat. Commun. 12, 2403 (2021).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems Vol. 30 (eds Guyon, I. et al.) (Curran Associates, 2017).

Jiang, Q. et al. Neural network aided approximation and parameter inference of non-Markovian models of gene expression. Nat. Commun. 12, 2618 (2021).

Sukys, A., Öcal, K. & Grima, R. Approximating solutions of the chemical master equation using neural networks. iScience 25, 105010 (2022).

Bortolussi, L. & Palmieri, L. Deep abstractions of chemical reaction networks. In Computational Methods in Systems Biology 21–38 (Springer, 2018).

Thanh, V. H. & Priami, C. Simulation of biochemical reactions with time-dependent rates by the rejection-based algorithm. J. Chem. Phys. 143, 054104 (2015).

Germain, M., Gregor, K., Murray, I. & Larochelle, H. MADE: masked autoencoder for distribution estimation. In Int. Conf. Machine Learning 881–889 (PMLR, 2015).

van den Oord, A., Kalchbrenner, N. & Kavukcuoglu, K. Pixel recurrent neural networks. In Int. Conf. Machine Learning 1747–1756 (PMLR, 2016).

Williams, R. J. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Machine Learning 8, 229–256 (1992).

Gardner, T. S., Cantor, C. R. & Collins, J. J. Construction of a genetic toggle switch in Escherichia coli. Nature 403, 339–342 (2000).

Neal, R. M. Annealed importance sampling. Stat. Comput. 11, 125–139 (2001).

Hibat-Allah, M., Inack, E. M., Wiersema, R., Melko, R. G. & Carrasquilla, J. Variational neural annealing. Nat. Mach. Intell. 3, 952–961 (2021).

Tang, Y., Liu, J., Zhang, J. & Zhang, P. Solving nonequilibrium statistical mechanics by evolving autoregressive neural networks. Preprint at https://doi.org/10.48550/arXiv.2208.08266 (2022).

Cao, Y. & Liang, J. Optimal enumeration of state space of finitely buffered stochastic molecular networks and exact computation of steady state landscape probability. BMC Syst. Biol. 2, 30 (2008).

Causer, L., Bañuls, M. C. & Garrahan, J. P. Finite time large deviations via matrix product states. Phys. Rev. Lett. 128, 090605 (2022).

Cho, K., van Merriënboer, B., Bahdanau, D. & Bengio, Y. On the properties of neural machine translation: encoder–decoder approaches. In Proc. 8th Worksh. on Syntax, Semantics and Structure in Statistical Translation 103–111 (Association for Computational Linguistics, 2014).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at https://doi.org/10.48550/arXiv.1412.6980 (2014).

Acharya, A., Rudolph, M., Chen, J., Miller, J. & Perdomo-Ortiz, A. Qubit seriation: improving data-model alignment using spectral ordering. Preprint at https://doi.org/10.48550/arXiv.2211.15978 (2022).

Suzuki, M. Generalized Trotter’s formula and systematic approximants of exponential operators and inner derivations with applications to many-body problems. Commun. Math. Phys. 51, 183–190 (1976).

Tang, Y., Weng, J. & Zhang, P. Neural-network solutions to stochastic reaction networks. Code Ocean https://doi.org/10.24433/CO.9625043.v1 (2023).

Tang, Y., Weng, J. & Zhang, P. NNCME: publication code (v1.0.0). Zenodo https://doi.org/10.5281/zenodo.7623370 (2023).

Acknowledgements

We thank J. Liu for sharing the code of the transformer. We acknowledge J. Liang, M. Khammash, A. Gupta, A. Hoffmann, A. Farhat, F. Manuchehrfar and members of Online Club Nanothermodynamica for helpful discussions. This work is supported by projects 12105014 (Y.T.), 11747601 (P.Z.) and 11975294 (P.Z.) of the National Natural Science Foundation of China. P.Z. acknowledges the WIUCASICTP2022 grant. The high-performance computing is supported by Dawning Information Industry and the Interdisciplinary Intelligence SuperComputer Center of Beijing Normal University, Zhuhai.

Author information

Contributions

Y.T. and P.Z. had the original idea for this work; Y.T. and J.W. performed the study. All authors contributed to the preparation of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Nigel Goldenfeld and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Notes 1–2, Figs. 1–13 and Tables 1–2.

Source data

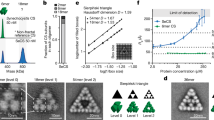

Source Data Fig. 2

Zipped source data file for Fig. 2.

Source Data Fig. 3

Zipped source data file for Fig. 3.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tang, Y., Weng, J. & Zhang, P. Neural-network solutions to stochastic reaction networks. Nat Mach Intell 5, 376–385 (2023). https://doi.org/10.1038/s42256-023-00632-6

DOI: https://doi.org/10.1038/s42256-023-00632-6

This article is cited by

- Learning nonequilibrium statistical mechanics and dynamical phase transitions. Nature Communications (2024)
- Language models for quantum simulation. Nature Computational Science (2024)