Abstract

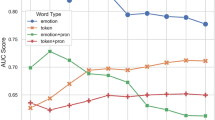

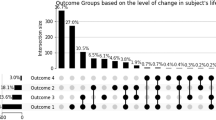

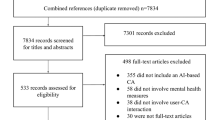

Advances in artificial intelligence (AI) are enabling systems that augment and collaborate with humans to perform simple, mechanistic tasks such as scheduling meetings and grammar-checking text. However, such human–AI collaboration poses challenges for more complex tasks, such as carrying out empathic conversations, due to the difficulties that AI systems face in navigating complex human emotions and the open-ended nature of these tasks. Here we focus on peer-to-peer mental health support, a setting in which empathy is critical for success, and examine how AI can collaborate with humans to facilitate peer empathy during textual, online supportive conversations. We develop HAILEY, an AI-in-the-loop agent that provides just-in-time feedback to help participants who provide support (peer supporters) respond more empathically to those seeking help (support seekers). We evaluate HAILEY in a non-clinical randomized controlled trial with real-world peer supporters on TalkLife (N = 300), a large online peer-to-peer support platform. We show that our human–AI collaboration approach leads to a 19.6% increase in conversational empathy between peers overall. Furthermore, we find a larger, 38.9% increase in empathy within the subsample of peer supporters who self-identify as experiencing difficulty providing support. We systematically analyse the human–AI collaboration patterns and find that peer supporters are able to use the AI feedback both directly and indirectly without becoming overly reliant on AI while reporting improved self-efficacy post-feedback. Our findings demonstrate the potential of feedback-driven, AI-in-the-loop writing systems to empower humans in open-ended, social and high-stakes tasks such as empathic conversations.

Data availability

Data used for training the empathy classification model used for automatic evaluation are available at https://github.com/behavioral-data/Empathy-Mental-Health (refs. 29, 83). Data used for training PARTNER and the data collected in our randomized controlled trial are available on request from the corresponding author with a clear justification and a license agreement from TalkLife.

Code availability

Source code of the empathy classification model used for automatic evaluation is available at https://github.com/behavioral-data/Empathy-Mental-Health (refs. 29, 83). Source code of PARTNER is available at https://github.com/behavioral-data/PARTNER (refs. 47, 56). Code used for designing the interface of HAILEY is available at https://github.com/behavioral-data/Human-AI-Collaboration-Empathy (ref. 84). Code used for the analysis of the study data is available on request from the corresponding author. For the most recent project outcomes and resources, please visit https://bdata.uw.edu/empathy.

References

Rajpurkar, P., Chen, E., Banerjee, O. & Topol, E. J. AI in health and medicine. Nat. Med. 28, 31–38 (2022).

Hosny, A. & Aerts, H. J. Artificial intelligence for global health. Science 366, 955–956 (2019).

Patel, B. N. et al. Human–machine partnership with artificial intelligence for chest radiograph diagnosis. npj Digit. Med. 2, 111 (2019).

Tschandl, P. et al. Human–computer collaboration for skin cancer recognition. Nat. Med. 26, 1229–1234 (2020).

Cai, C. J., Winter, S., Steiner, D., Wilcox, L. & Terry, M. ‘Hello AI’: uncovering the onboarding needs of medical practitioners for human–AI collaborative decision-making. Proc. ACM Hum.-Comput. Interact. 3, 1–24 (2019).

Suh, M., Youngblom, E., Terry, M. & Cai, C. J. AI as social glue: uncovering the roles of deep generative AI during social music composition. In CHI Conference on Human Factors in Computing Systems, 1–11 (Association for Computing Machinery, 2021).

Wen, T.-H. et al. A network-based end-to-end trainable task-oriented dialogue system. In European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers, 438–449 (Association for Computational Linguistics, 2017).

Baek, M. et al. Accurate prediction of protein structures and interactions using a three-track neural network. Science 373, 871–876 (2021).

Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 596, 583–589 (2021).

Verghese, A., Shah, N. H. & Harrington, R. A. What this computer needs is a physician: humanism and artificial intelligence. J. Am. Med. Assoc. 319, 19–20 (2018).

Bansal, G. et al. Does the whole exceed its parts? The effect of AI explanations on complementary team performance. In CHI Conference on Human Factors in Computing Systems, 1–16 (Association for Computing Machinery, 2021).

Yang, Q., Steinfeld, A., Rosé, C. & Zimmerman, J. Re-examining whether, why, and how human–AI interaction is uniquely difficult to design. In CHI Conference on Human Factors in Computing Systems, 1–13 (Association for Computing Machinery, 2020).

Li, R. C., Asch, S. M. & Shah, N. H. Developing a delivery science for artificial intelligence in healthcare. npj Digit. Med. 3, 107 (2020).

Gillies, M. et al. Human-centred machine learning. In CHI Conference Extended Abstracts on Human Factors in Computing Systems, 3558–3565 (Association for Computing Machinery, 2016).

Amershi, S. et al. Guidelines for human–AI interaction. In CHI Conference on Human Factors in Computing Systems, 1–13 (Association for Computing Machinery, 2019).

Norman, D. A. How might people interact with agents. Commun. ACM 37, 68–71 (1994).

Hirsch, T., Merced, K., Narayanan, S., Imel, Z. E. & Atkins, D. C. Designing contestability: interaction design, machine learning, and mental health. Des. Interact. Syst. Conf. 2017, 95–99 (2017).

Clark, E., Ross, A. S., Tan, C., Ji, Y. & Smith, N. A. Creative writing with a machine in the loop: case studies on slogans and stories. In 23rd International Conference on Intelligent User Interfaces, 329–340 (Association for Computing Machinery, 2018).

Roemmele, M. & Gordon, A. S. Automated assistance for creative writing with an RNN language model. In 23rd International Conference on Intelligent User Interfaces Companion, 1–2 (Association for Computing Machinery, 2018).

Lee, M., Liang, P. & Yang, Q. CoAuthor: designing a human–AI collaborative writing dataset for exploring language model capabilities. In CHI Conference on Human Factors in Computing Systems, 1–19 (Association for Computing Machinery, 2022).

Paraphrasing tool. QuillBot https://quillbot.com/ (2022).

Buschek, D., Zürn, M. & Eiband, M. The impact of multiple parallel phrase suggestions on email input and composition behaviour of native and non-native English writers. In CHI Conference on Human Factors in Computing Systems, 1–13 (Association for Computing Machinery, 2021).

Gero, K. I., Liu, V. & Chilton, L. B. Sparks: inspiration for science writing using language models. In Designing Interactive Systems Conference, 1002–1019 (2022).

Chilton, L. B., Petridis, S. & Agrawala, M. VisiBlends: a flexible workflow for visual blends. In CHI Conference on Human Factors in Computing Systems, 1–14 (Association for Computing Machinery, 2019).

Elliott, R., Bohart, A. C., Watson, J. C. & Greenberg, L. S. Empathy. Psychotherapy 48, 43–49 (2011).

Elliott, R., Bohart, A. C., Watson, J. C. & Murphy, D. Therapist empathy and client outcome: an updated meta-analysis. Psychotherapy 55, 399–410 (2018).

Bohart, A. C., Elliott, R., Greenberg, L. S. & Watson, J. C. in Psychotherapy Relationships That Work: Therapist Contributions and Responsiveness to Patients (ed. Norcross, J. C.) Vol. 452, 89–108 (Oxford Univ. Press, 2002).

Watson, J. C., Goldman, R. N. & Warner, M. S. Client-Centered and Experiential Psychotherapy in the 21st Century: Advances in Theory, Research, and Practice (PCCS Books, 2002).

Sharma, A., Miner, A. S., Atkins, D. C. & Althoff, T. A computational approach to understanding empathy expressed in text-based mental health support. In Conference on Empirical Methods in Natural Language Processing, 5263–5276 (Association for Computational Linguistics, 2020).

Davis, M. H. A multidimensional approach to individual differences in empathy. JSAS Catalog of Selected Documents in Psychology 10, 85–103 (1980).

Blease, C., Locher, C., Leon-Carlyle, M. & Doraiswamy, M. Artificial intelligence and the future of psychiatry: qualitative findings from a global physician survey. Digit. Health 6, 2055207620968355 (2020).

Doraiswamy, P. M., Blease, C. & Bodner, K. Artificial intelligence and the future of psychiatry: Insights from a global physician survey. Artif. Intell. Med. 102, 101753 (2020).

Riess, H. The science of empathy. J. Patient Exp. 4, 74–77 (2017).

Mental disorders. World Health Organization https://www.who.int/news-room/fact-sheets/detail/mental-disorders (2022).

Kazdin, A. E. & Blase, S. L. Rebooting psychotherapy research and practice to reduce the burden of mental illness. Perspect. Psychol. Sci. 6, 21–37 (2011).

Olfson, M. Building the mental health workforce capacity needed to treat adults with serious mental illnesses. Health Aff. 35, 983–990 (2016).

Naslund, J. A., Aschbrenner, K. A., Marsch, L. A. & Bartels, S. J. The future of mental health care: peer-to-peer support and social media. Epidemiol. Psychiatr. Sci. 25, 113–122 (2016).

Kemp, V. & Henderson, A. R. Challenges faced by mental health peer support workers: peer support from the peer supporter’s point of view. Psychiatr. Rehabil. J. 35, 337–340 (2012).

Mahlke, C. I., Krämer, U. M., Becker, T. & Bock, T. Peer support in mental health services. Curr. Opin. Psychiatry 27, 276–281 (2014).

Schwalbe, C. S., Oh, H. Y. & Zweben, A. Sustaining motivational interviewing: a meta-analysis of training studies. Addiction 109, 1287–1294 (2014).

Goldberg, S. B. et al. Do psychotherapists improve with time and experience? A longitudinal analysis of outcomes in a clinical setting. J. Couns. Psychol. 63, 1–11 (2016).

Nunes, P., Williams, S., Sa, B. & Stevenson, K. A study of empathy decline in students from five health disciplines during their first year of training. J. Int. Assoc. Med. Sci. Educ. 2, 12–17 (2011).

Hojat, M. et al. The devil is in the third year: a longitudinal study of erosion of empathy in medical school. Acad. Med. 84, 1182–1191 (2009).

Stebnicki, M. A. Empathy fatigue: healing the mind, body, and spirit of professional counselors. Am. J. Psychiatr. Rehabil. 10, 317–338 (2007).

Imel, Z. E., Steyvers, M. & Atkins, D. C. Computational psychotherapy research: scaling up the evaluation of patient–provider interactions. Psychotherapy 52, 19–30 (2015).

Miner, A. S. et al. Key considerations for incorporating conversational AI in psychotherapy. Front. Psychiatry 10, 746 (2019).

Sharma, A., Lin, I. W., Miner, A. S., Atkins, D. C. & Althoff, T. Towards facilitating empathic conversations in online mental health support: a reinforcement learning approach. In Proc. of the Web Conference, 194–205 (Association for Computing Machinery, 2021).

Lin, Z., Madotto, A., Shin, J., Xu, P. & Fung, P. MoEL: mixture of empathetic listeners. In Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, 121–132 (Association for Computational Linguistics, 2019).

Majumder, N. et al. MIME: mimicking emotions for empathetic response generation. In Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, 8968–8979 (Association for Computational Linguistics, 2020).

Rashkin, H., Smith, E. M., Li, M. & Boureau, Y.-L. Towards empathetic open-domain conversation models: a new benchmark and dataset. In Annual Meeting of the Association for Computational Linguistics, 5370–5381 (Association for Computational Linguistics, 2019).

Chen, J. H. & Asch, S. M. Machine learning and prediction in medicine – beyond the peak of inflated expectations. N. Engl. J. Med. 376, 2507–2509 (2017).

Tanana, M. J., Soma, C. S., Srikumar, V. et al. Development and evaluation of ClientBot: patient-like conversational agent to train basic counseling skills. J. Med. Internet Res. 21, e12529 (2019).

Peng, Z., Guo, Q., Tsang, K. W. & Ma, X. Exploring the effects of technological writing assistance for support providers in online mental health community. In CHI Conference on Human Factors in Computing Systems, 1–15 (Association for Computing Machinery, 2020).

Hui, J. S., Gergle, D. & Gerber, E. M. IntroAssist: a tool to support writing introductory help requests. In CHI Conference on Human Factors in Computing Systems, 1–13 (Association for Computing Machinery, 2018).

Kelly, R., Gooch, D. & Watts, L. ‘It’s more like a letter’: an exploration of mediated conversational effort in message builder. Proc. ACM Hum.-Comput. Interact. 2, 1–18 (2018).

Sharma, A. behavioral-data/partner: code for the WWW 2021 paper on empathic rewriting. Zenodo https://doi.org/10.5281/ZENODO.7053967 (2022).

Hernandez-Boussard, T., Bozkurt, S., Ioannidis, J. P. & Shah, N. H. MINIMAR (MINimum Information for Medical AI Reporting): developing reporting standards for artificial intelligence in health care. J. Am. Med. Inf. Assoc. 27, 2011–2015 (2020).

Barrett-Lennard, G. T. The empathy cycle: refinement of a nuclear concept. J. Couns. Psychol. 28, 91–100 (1981).

Collins, P. Y. Grand challenges in global mental health. Nature 475, 27–30 (2011).

Kaplan, B. H., Cassel, J. C. & Gore, S. Social support and health. Med. Care 15, 47–58 (1977).

Rathod, S. et al. Mental health service provision in low- and middle-income countries. Health Serv. Insights 10, 1178632917694350 (2017).

Lee, E. E. et al. Artificial intelligence for mental health care: clinical applications, barriers, facilitators, and artificial wisdom. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 6, 856–864 (2021).

Vaidyam, A. N., Linggonegoro, D. & Torous, J. Changes to the psychiatric chatbot landscape: a systematic review of conversational agents in serious mental illness. Can. J. Psychiatry 66, 339–348 (2021).

Richardson, J. P. et al. Patient apprehensions about the use of artificial intelligence in healthcare. npj Digit. Med. 4, 140 (2021).

Collings, S. & Niederkrotenthaler, T. Suicide prevention and emergent media: surfing the opportunity. Crisis 33, 1–4 (2012).

Luxton, D. D., June, J. D. & Fairall, J. M. Social media and suicide: a public health perspective. Am. J. Public Health 102, S195–200 (2012).

Martinez-Martin, N. & Kreitmair, K. Ethical issues for direct-to-consumer digital psychotherapy apps: addressing accountability, data protection, and consent. JMIR Ment. Health 5, e32 (2018).

Tanana, M., Hallgren, K. A., Imel, Z. E., Atkins, D. C. & Srikumar, V. A comparison of natural language processing methods for automated coding of motivational interviewing. J. Subst. Abuse Treat. 65, 43–50 (2016).

De Choudhury, M., Sharma, S. S., Logar, T. et al. Gender and cross-cultural differences in social media disclosures of mental illness. In ACM Conference on Computer Supported Cooperative Work and Social Computing, 353–369 (Association for Computing Machinery, 2017).

Cauce, A. M. et al. Cultural and contextual influences in mental health help seeking: a focus on ethnic minority youth. J. Consult. Clin. Psychol. 70, 44–55 (2002).

Satcher, D. Mental Health: Culture, Race, and Ethnicity—A Supplement to Mental Health: a Report of the Surgeon General (U.S. Department of Health and Human Services, 2001).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Vol. 1, 4171–4186 (Association for Computational Linguistics, 2019).

Li, J., Galley, M., Brockett, C., Gao, J. & Dolan, W. B. A diversity-promoting objective function for neural conversation models. In NAACL-HLT (2016).

Wolf, M. J., Miller, K. & Grodzinsky, F. S. Why we should have seen that coming: comments on Microsoft’s Tay ‘experiment,’ and wider implications. ACM SIGCAS Comput. Soc. 47, 54–64 (2017).

Bolukbasi, T., Chang, K.-W., Zou, J. Y. et al. Man is to computer programmer as woman is to homemaker? Debiasing word embeddings. In Advances in Neural Information Processing Systems, 29 (2016).

Daws, R. Medical chatbot using OpenAI’s GPT-3 told a fake patient to kill themselves. AI News https://artificialintelligence-news.com/2020/10/28/medical-chatbot-openai-gpt3-patient-kill-themselves/ (2020).

Radford, A. et al. Language models are unsupervised multitask learners. CloudFront https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (2022).

Lee, F.-T., Hull, D., Levine, J. et al. Identifying therapist conversational actions across diverse psychotherapeutic approaches. In Proc. of the 6th Workshop on Computational Linguistics and Clinical Psychology, 12–23 (Association for Computational Linguistics, 2019).

Zheng, C., Liu, Y., Chen, W. et al. CoMAE: a multi-factor hierarchical framework for empathetic response generation. In Findings of the Association for Computational Linguistics, 813–824 (Association for Computational Linguistics, 2021).

Wambsganss, T., Niklaus, C., Söllner, M. et al. Supporting cognitive and emotional empathic writing of students. In Proc. of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, 4063–4077 (Association for Computational Linguistics, 2021).

Majumder, N. et al. Exemplars-guided empathetic response generation controlled by the elements of human communication. IEEE Access 10, 77176–77190 (2022).

Elbow method (clustering). Wikipedia https://en.wikipedia.org/wiki/Elbow_method_(clustering) (2022).

Sharma, A. Behavioral-data/empathy-mental-health: code for the EMNLP 2020 paper on empathy. Zenodo https://doi.org/10.5281/ZENODO.7061732 (2022).

Sharma, A. Behavioral-data/human–AI-collaboration-empathy: code for HAILEY. Zenodo https://doi.org/10.5281/ZENODO.7295902 (2022).

Acknowledgements

We thank TalkLife and J. Druitt for supporting this work, for advertising the study on their platform and for providing us access to a TalkLife data set. We also thank members of the UW Behavioral Data Science Group, Microsoft AI for Accessibility team and D.S. Weld for their suggestions and feedback. T.A., A.S. and I.W.L. were supported in part by NSF grant IIS-1901386, NSF CAREER IIS-2142794, NSF grant CNS-2025022, NIH grant R01MH125179, Bill & Melinda Gates Foundation (INV-004841), the Office of Naval Research (#N00014-21-1-2154), a Microsoft AI for Accessibility grant and a Garvey Institute Innovation grant. A.S.M. was supported by grants from the National Institutes of Health, National Center for Advancing Translational Science, Clinical and Translational Science Award (KL2TR001083 and UL1TR001085) and the Stanford Human-Centered AI Institute. D.C.A. was supported by NIH career development award K02 AA023814.

Author information

Contributions

A.S., I.W.L., A.S.M., D.C.A. and T.A. were involved with the design of HAILEY and the formulation of the study. A.S. and I.W.L. conducted the study. All authors interpreted the data, drafted the manuscript and made significant intellectual contributions to the manuscript.

Ethics declarations

Competing interests

D.C.A. is a co-founder with equity stake in a technology company, Lyssn.io, focused on tools to support training, supervision and quality assurance of psychotherapy and counselling. The remaining authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Ryan Kelly and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Table 1 and Figs. S1–S38.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sharma, A., Lin, I.W., Miner, A.S. et al. Human–AI collaboration enables more empathic conversations in text-based peer-to-peer mental health support. Nat Mach Intell 5, 46–57 (2023). https://doi.org/10.1038/s42256-022-00593-2

DOI: https://doi.org/10.1038/s42256-022-00593-2
