Abstract

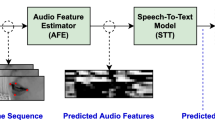

Visual speech recognition (VSR) aims to recognize the content of speech from lip movements, without relying on the audio stream. Advances in deep learning and the availability of large audio-visual datasets have led to VSR models that are much more accurate and robust than ever before. However, these advances are usually due to larger training sets rather than to better model design. Here we demonstrate that designing better models is as important as using larger training sets. We propose adding prediction-based auxiliary tasks to a VSR model, and we highlight the importance of hyperparameter optimization and appropriate data augmentations. We show that such a model works for different languages and outperforms all previous methods trained on publicly available datasets by a large margin. It even outperforms models that were trained on non-publicly available datasets containing up to 21 times more data. Furthermore, we show that using additional training data, even in other languages or with automatically generated transcriptions, results in further improvement.
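The abstract does not spell out how the prediction-based auxiliary tasks enter training. A minimal sketch, assuming a hybrid CTC/attention main objective (the architecture of Petridis et al., 2018, in the reference list) and auxiliary tasks that regress target features with an L1 loss, shows how such terms might be combined into one objective. The function names, the weights `ctc_weight` and `aux_weight`, and the source of the target features are hypothetical illustrations rather than the authors' configuration; the per-sequence CTC and attention losses are taken as precomputed numbers.

```python
from typing import Sequence, Tuple

# A T x D matrix: T time steps (video frames), each with D feature values.
Matrix = Sequence[Sequence[float]]


def l1_loss(pred: Matrix, target: Matrix) -> float:
    """Mean absolute error between two equally shaped T x D matrices."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must have the same, non-zero number of frames")
    total, count = 0.0, 0
    for p_frame, t_frame in zip(pred, target):
        if len(p_frame) != len(t_frame):
            raise ValueError("pred and target must have the same feature dimension")
        for p, t in zip(p_frame, t_frame):
            total += abs(p - t)
            count += 1
    if count == 0:
        raise ValueError("feature dimension must be non-zero")
    return total / count


def multitask_loss(
    ctc_loss: float,
    att_loss: float,
    aux_pairs: Sequence[Tuple[Matrix, Matrix]],
    ctc_weight: float = 0.1,  # hypothetical value, not taken from the paper
    aux_weight: float = 1.0,  # hypothetical value, not taken from the paper
) -> float:
    """Hybrid CTC/attention loss plus a weighted sum of L1 prediction losses.

    Each (pred, target) pair is one auxiliary task: features predicted from the
    visual stream versus target features (assumed here to come from a frozen,
    pre-trained audio model).
    """
    if not 0.0 <= ctc_weight <= 1.0:
        raise ValueError("ctc_weight must lie in [0, 1]")
    main = ctc_weight * ctc_loss + (1.0 - ctc_weight) * att_loss
    aux = sum(l1_loss(pred, target) for pred, target in aux_pairs)
    return main + aux_weight * aux


pred = [[1.0, 2.0]]
target = [[0.0, 4.0]]
print(multitask_loss(2.0, 1.0, [(pred, target)], ctc_weight=0.5, aux_weight=2.0))  # 4.5
```

Keeping the auxiliary terms additive means they act only as extra training signals: at test time the prediction heads can be dropped and decoding uses the main CTC/attention branch alone.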
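The abstract also credits appropriate data augmentations without naming them. As one illustrative example only, the sketch below adapts SpecAugment-style time masking (Park et al., 2019, in the reference list) from audio spectrograms to video: a random run of consecutive frames is overwritten, here with the clip's mean frame. Whether the authors use this exact variant, and with what mask width, is not stated in this excerpt; `time_mask`, `mean_frame` and `max_width` are hypothetical names.

```python
import random
from typing import List, Optional

# One grayscale mouth crop as an H x W grid of pixel intensities.
Frame = List[List[float]]


def mean_frame(frames: List[Frame]) -> Frame:
    """Pixel-wise average over all frames of a (non-empty) clip."""
    n = len(frames)
    h, w = len(frames[0]), len(frames[0][0])
    return [[sum(f[i][j] for f in frames) / n for j in range(w)] for i in range(h)]


def time_mask(frames: List[Frame], max_width: int,
              rng: Optional[random.Random] = None) -> List[Frame]:
    """Return a copy of the clip in which one random run of at most
    max_width consecutive frames is replaced by the clip's mean frame."""
    out = [[row[:] for row in f] for f in frames]  # never modify the input
    if not frames or max_width <= 0:
        return out
    rng = rng or random.Random()
    # randint is inclusive at both ends, so the run always fits in the clip.
    width = rng.randint(0, min(max_width, len(frames)))
    start = rng.randint(0, len(frames) - width)
    filler = mean_frame(frames)
    for t in range(start, start + width):
        out[t] = [row[:] for row in filler]
    return out


clip = [[[0.0]], [[2.0]], [[4.0]]]  # three 1 x 1 frames
masked = time_mask(clip, max_width=2, rng=random.Random(0))
```

Filling with the mean frame rather than zeros keeps the masked frames within the clip's normal intensity range, so the model cannot trivially spot the mask and must infer the missing lip motion from context.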

Data availability

The datasets used in the current study are available from the original authors on the LRS2 (https://www.robots.ox.ac.uk/~vgg/data/lip_reading/lrs2.html), LRS3 (https://www.robots.ox.ac.uk/~vgg/data/lip_reading/lrs3.html), CMLR (https://www.vipazoo.cn/CMLR.html), Multilingual TEDx (http://www.openslr.org/100) and CMU-MOSEAS (http://immortal.multicomp.cs.cmu.edu/cache/multilingual) repositories. Qualitative results and the list of cleaned videos for the training and test sets of CMU-MOSEAS and Multilingual TEDx are available on the authors’ GitHub repository (https://mpc001.github.io/lipreader.html).

Code availability

Pre-trained networks and testing code are available in a GitHub repository (https://mpc001.github.io/lipreader.html) or at Zenodo (ref. 66) under an Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) licence.

References

Potamianos, G., Neti, C., Gravier, G., Garg, A. & Senior, A. W. Recent advances in the automatic recognition of audiovisual speech. Proc. IEEE 91, 1306–1326 (2003).

Dupont, S. & Luettin, J. Audio-visual speech modeling for continuous speech recognition. IEEE Trans. Multimedia 2, 141–151 (2000).

Chung, J. S., Senior, A., Vinyals, O. & Zisserman, A. Lip reading sentences in the wild. In Proc. 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition 3444–3453 (IEEE, 2017).

Afouras, T., Chung, J. S., Senior, A., Vinyals, O. & Zisserman, A. Deep audio-visual speech recognition. IEEE Trans. Pattern Anal. Mach. Intell. https://doi.org/10.1109/TPAMI.2018.2889052 (2018).

Shillingford, B. et al. Large-scale visual speech recognition. In Proc. 20th Annual Conference of International Speech Communication Association 4135–4139 (ISCA, 2019).

Serdyuk, D., Braga, O. & Siohan, O. Audio-visual speech recognition is worth 32 × 32 × 8 voxels. In Proc. IEEE Automatic Speech Recognition and Understanding Workshop 796–802 (IEEE, 2021).

Zhang, X. et al. Understanding pictograph with facial features: end-to-end sentence-level lip reading of Chinese. In Proc. 33rd AAAI Conference on Artificial Intelligence 9211–9218 (AAAI, 2019).

Zhao, Y., Xu, R. & Song, M. A cascade sequence-to-sequence model for Chinese Mandarin lip reading. In Proc. 1st ACM International Conference on Multimedia in Asia 1–6 (ACM, 2019).

Ma, S., Wang, S. & Lin, X. A transformer-based model for sentence-level Chinese Mandarin lipreading. In Proc. 5th IEEE International Conference on Data Science in Cyberspace 78–81 (IEEE, 2020).

Ma, P., Petridis, S. & Pantic, M. End-to-end audio-visual speech recognition with conformers. In Proc. 46th IEEE International Conference on Acoustics, Speech and Signal Processing 7613–7617 (IEEE, 2021).

Gulati, A. et al. Conformer: convolution-augmented transformer for speech recognition. In Proc. 21st Annual Conference of International Speech Communication Association 5036–5040 (ISCA, 2020).

Makino, T. et al. Recurrent neural network transducer for audio-visual speech recognition. In Proc. IEEE Automatic Speech Recognition and Understanding Workshop 905–912 (IEEE, 2019).

McGurk, H. & MacDonald, J. Hearing lips and seeing voices. Nature 264, 746–748 (1976).

Sumby, W. H. & Pollack, I. Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 26, 212–215 (1954).

Petridis, S., Stafylakis, T., Ma, P., Tzimiropoulos, G. & Pantic, M. Audio-visual speech recognition with a hybrid CTC/attention architecture. In Proc. IEEE Spoken Language Technology Workshop 513–520 (IEEE, 2018).

Yu, J. et al. Audio-visual recognition of overlapped speech for the LRS2 dataset. In Proc. 45th IEEE International Conference on Acoustics, Speech and Signal Processing 6984–6988 (IEEE, 2020).

Yu, W., Zeiler, S. & Kolossa, D. Fusing information streams in end-to-end audio-visual speech recognition. In Proc. 46th IEEE International Conference on Acoustics, Speech and Signal Processing 3430–3434 (IEEE, 2021).

Sterpu, G., Saam, C. & Harte, N. How to teach DNNs to pay attention to the visual modality in speech recognition. IEEE/ACM Trans. Audio Speech Language Process. 28, 1052–1064 (2020).

Afouras, T., Chung, J. S. & Zisserman, A. The conversation: deep audio-visual speech enhancement. In Proc. 19th Annual Conference of International Speech Communication Association 3244–3248 (ISCA, 2018).

Ephrat, A. et al. Looking to listen at the cocktail party: a speaker-independent audio-visual model for speech separation. ACM Trans. Graph. 37, 112:1–112:11 (2018).

Yoshimura, T., Hayashi, T., Takeda, K. & Watanabe, S. End-to-end automatic speech recognition integrated with CTC-based voice activity detection. In Proc. 45th IEEE International Conference on Acoustics, Speech and Signal Processing 6999–7003 (IEEE, 2020).

Kim, Y. J. et al. Look who’s talking: active speaker detection in the wild. In Proc. 22nd Annual Conference of International Speech Communication Association 3675–3679 (ISCA, 2021).

Chung, J. S., Huh, J., Nagrani, A., Afouras, T. & Zisserman, A. Spot the conversation: speaker diarisation in the wild. In Proc. 21st Annual Conference of International Speech Communication Association 299–303 (ISCA, 2020).

Denby, B. et al. Silent speech interfaces. Speech Commun. 52, 270–287 (2010).

Haliassos, A., Vougioukas, K., Petridis, S. & Pantic, M. Lips don’t lie: a generalisable and robust approach to face forgery detection. In Proc. 34th IEEE/CVF Conference on Computer Vision and Pattern Recognition 5039–5049 (IEEE, 2021).

Mira, R. et al. End-to-end video-to-speech synthesis using generative adversarial networks. IEEE Trans. Cybern. 1–13 (2022).

Prajwal, K., Mukhopadhyay, R., Namboodiri, V. P. & Jawahar, C. Learning individual speaking styles for accurate lip to speech synthesis. In Proc. 33rd IEEE/CVF Conference on Computer Vision and Pattern Recognition 13796–13805 (IEEE, 2020).

Dungan, L., Karaali, A. & Harte, N. The impact of reduced video quality on visual speech recognition. In Proc. 25th IEEE International Conference on Image Processing 2560–2564 (IEEE, 2018).

Bear, H. L., Harvey, R., Theobald, B.-J. & Lan, Y. Resolution limits on visual speech recognition. In Proc. 21st IEEE International Conference on Image Processing 1371–1375 (IEEE, 2014).

Geirhos, R. et al. ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness. In Proc. 7th International Conference on Learning Representations (OpenReview, 2019).

Cheng, S. et al. Towards pose-invariant lip-reading. In Proc. 45th IEEE International Conference on Acoustics, Speech and Signal Processing 4357–4361 (IEEE, 2020).

Wand, M. & Schmidhuber, J. Improving speaker-independent lipreading with domain-adversarial training. In Proc. 18th Annual Conference of International Speech Communication Association 3662–3666 (ISCA, 2017).

Petridis, S., Wang, Y., Li, Z. & Pantic, M. End-to-end multi-view lipreading. In Proc. 28th British Machine Vision Conference (BMVA, 2017); https://doi.org/10.5244/C.31.161

Bicevskis, K. et al. Effects of mouthing and interlocutor presence on movements of visible vs. non-visible articulators. Can. Acoust. 44, 17–24 (2016).

Šimko, J., Beňuš, Š. & Vainio, M. Hyperarticulation in Lombard speech: global coordination of the jaw, lips and the tongue. J. Acoust. Soc. Am. 139, 151–162 (2016).

Ma, P., Petridis, S. & Pantic, M. Investigating the Lombard effect influence on end-to-end audio-visual speech recognition. In Proc. 20th Annual Conference of International Speech Communication Association 4090–4094 (ISCA, 2019).

Petridis, S., Shen, J., Cetin, D. & Pantic, M. Visual-only recognition of normal, whispered and silent speech. In Proc. 43rd IEEE International Conference on Acoustics, Speech and Signal Processing 6219–6223 (IEEE, 2018).

Heracleous, P., Ishi, C. T., Sato, M., Ishiguro, H. & Hagita, N. Analysis of the visual Lombard effect and automatic recognition experiments. Comput. Speech Language 27, 288–300 (2013).

Efforts to acknowledge the risks of new A.I. technology. New York Times (22 October 2018); https://www.nytimes.com/2018/10/22/business/efforts-to-acknowledge-the-risks-of-new-ai-technology.html

Feathers, T. Tech companies are training AI to read your lips. Vice (2021); https://www.vice.com/en/article/bvzvdw/tech-companies-are-training-ai-to-read-your-lips

Liopa. https://liopa.ai (accessed 24 November 2021).

Crawford, S. Facial recognition laws are (literally) all over the map. Wired (16 December 2019); https://www.wired.com/story/facial-recognition-laws-are-literally-all-over-the-map/

Flynn, S. 13 cities where police are banned from using facial recognition tech. Innovation & Tech Today (18 November 2020); https://innotechtoday.com/13-cities-where-police-are-banned-from-using-facial-recognition-tech/

An update on our use of face recognition. Facebook (2 November 2021); https://about.fb.com/news/2021/11/update-on-use-of-face-recognition/

Metz, R. Amazon will block police indefinitely from using its facial-recognition software. CNN (18 May 2021); https://edition.cnn.com/2021/05/18/tech/amazon-police-facial-recognition-ban/index.html

Greene, J. Microsoft won’t sell police its facial-recognition technology, following similar moves by Amazon and IBM. Washington Post (11 June 2020); https://www.washingtonpost.com/technology/2020/06/11/microsoft-facial-recognition

Afouras, T., Chung, J. S. & Zisserman, A. LRS3-TED: a large-scale dataset for visual speech recognition. Preprint at https://arxiv.org/abs/1809.00496 (2018).

Zadeh, A. B. et al. CMU-MOSEAS: a multimodal language dataset for Spanish, Portuguese, German and French. In Proc. 2020 Conference on Empirical Methods in Natural Language Processing 1801–1812 (ACL, 2020).

Salesky, E. et al. The multilingual TEDx corpus for speech recognition and translation. In Proc. 22nd Annual Conference of International Speech Communication Association 3655–3659 (ISCA, 2021).

Valk, J. & Alumäe, T. VoxLingua107: a dataset for spoken language recognition. In Proc. IEEE Spoken Language Technology Workshop 652–658 (IEEE, 2021).

Deng, J. et al. RetinaFace: single-stage dense face localisation in the wild. In Proc. 33rd IEEE/CVF Conference on Computer Vision and Pattern Recognition 5203–5212 (IEEE, 2020).

Bulat, A. & Tzimiropoulos, G. How far are we from solving the 2D & 3D face alignment problem? (and a dataset of 230,000 3D facial landmarks). In Proc. 16th IEEE/CVF International Conference on Computer Vision 1021–1030 (IEEE, 2017).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proc. 3rd International Conference on Learning Representations (OpenReview, 2015).

Assael, Y., Shillingford, B., Whiteson, S. & De Freitas, N. LipNet: end-to-end sentence-level lipreading. Preprint at https://arxiv.org/abs/1611.01599 (2016).

Ma, P., Martinez, B., Petridis, S. & Pantic, M. Towards practical lipreading with distilled and efficient models. In Proc. 46th IEEE International Conference on Acoustics, Speech and Signal Processing 7608–7612 (IEEE, 2021).

Park, D. S. et al. SpecAugment: a simple data augmentation method for automatic speech recognition. In Proc. 20th Annual Conference of International Speech Communication Association 2613–2617 (ISCA, 2019).

Liu, C. et al. Improving RNN transducer based ASR with auxiliary tasks. In Proc. IEEE Spoken Language Technology Workshop 172–179 (IEEE, 2021).

Toshniwal, S., Tang, H., Lu, L. & Livescu, K. Multitask learning with low-level auxiliary tasks for encoder-decoder based speech recognition. In Proc. 18th Annual Conference of International Speech Communication Association 3532–3536 (ISCA, 2017).

Lee, J. & Watanabe, S. Intermediate loss regularization for CTC-based speech recognition. In Proc. 46th IEEE International Conference on Acoustics, Speech and Signal Processing 6224–6228 (IEEE, 2021).

Pascual, S., Ravanelli, M., Serrà, J., Bonafonte, A. & Bengio, Y. Learning problem-agnostic speech representations from multiple self-supervised tasks. In Proc. 20th Annual Conference of International Speech Communication Association 161–165 (ISCA, 2019).

Shukla, A., Petridis, S. & Pantic, M. Learning speech representations from raw audio by joint audiovisual self-supervision. In Proc. 37th International Conference on Machine Learning Workshop (PMLR, 2020).

Ma, P., Mira, R., Petridis, S., Schuller, B. W. & Pantic, M. LiRA: learning visual speech representations from audio through self-supervision. In Proc. 22nd Annual Conference of International Speech Communication Association 3011–3015 (ISCA, 2021).

Serdyuk, D., Braga, O. & Siohan, O. Transformer-based video front-ends for audio-visual speech recognition for single and multi-person video. In Proc. 23rd Annual Conference of International Speech Communication Association 2833–2837 (ISCA, 2022).

Watanabe, S. et al. ESPnet: end-to-end speech processing toolkit. In Proc. 19th Annual Conference of International Speech Communication Association 2207–2211 (ISCA, 2018).

Kingma, D. & Ba, J. Adam: a method for stochastic optimization. In Proc. 3rd International Conference on Learning Representations (OpenReview, 2015).

Ma, P., Petridis, S. & Pantic, M. mpc001/Visual_Speech_Recognition_for_Multiple_Languages: visual speech recognition for multiple languages. Zenodo https://doi.org/10.5281/zenodo.7065080 (2022).

Afouras, T., Chung, J. S. & Zisserman, A. ASR is all you need: cross-modal distillation for lip reading. In Proc. 45th IEEE International Conference on Acoustics, Speech and Signal Processing 2143–2147 (IEEE, 2020).

Ren, S., Du, Y., Lv, J., Han, G. & He, S. Learning from the master: distilling cross-modal advanced knowledge for lip reading. In Proc. 34th IEEE/CVF Conference on Computer Vision and Pattern Recognition 13325–13333 (IEEE, 2021).

Zhao, Y. et al. Hearing lips: improving lip reading by distilling speech recognizers. In Proc. 34th AAAI Conference on Artificial Intelligence 6917–6924 (AAAI, 2020).

Acknowledgements

All training, testing and ablation studies were conducted at Imperial College London.

Author information

Contributions

The code was written by P.M., and the experiments were conducted by P.M. and S.P. The manuscript was written by P.M., S.P. and M.P. M.P. supervised the entire project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Joon Son Chung, Olivier Siohan and Mingli Song for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary text, Fig. 1, Tables 1–28 and references.

Supplementary Video 1

A demo of visual speech recognition for multiple languages.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ma, P., Petridis, S. & Pantic, M. Visual speech recognition for multiple languages in the wild. Nat Mach Intell 4, 930–939 (2022). https://doi.org/10.1038/s42256-022-00550-z

DOI: https://doi.org/10.1038/s42256-022-00550-z
