Abstract

The widespread use of robots in service fields requires humanoid robots that mimic human social behaviour. Human social behaviour has been studied quantitatively, but engineering social robots requires translating these findings into algorithms that enable reliable and safe robot locomotion. To bridge this gap, we first quantitatively investigated, in laboratory and real-world experiments, the social rules that apply when people pass one another in social settings. We then developed a social locomotion model based on these observations to predict human path selections and walking trajectories in complex dynamic social scenes. The model was implemented in a socially aware navigation algorithm for a service robot. A robot navigating with the social locomotion algorithm behaved more like humans and received higher comfort ratings than it did with the previous social navigation algorithms tested. The model sheds new light on how to directly translate the findings of human behavioural experiments into robotic engineering.

Data availability

All data that support our findings are publicly available at https://doi.org/10.6084/m9.figshare.19937879.v2 (ref. 49).

The data provided are the raw human walking trajectories in (x, y) format or the human behavioural judgements, together with the experimental conditions, for all experiments in the study.

Code availability

The code for data analysis and modelling is available at https://github.com/VRLab-ECNU/Social-Locomotion-Model (ref. 50).

References

Belanche, D., Casaló, L. V., Flavián, C. & Schepers, J. Service robot implementation: a theoretical framework and research agenda. Serv. Ind. J. 40, 203–225 (2020).

Sheridan, T. B. Human–robot interaction: status and challenges. Hum. Factors 58, 525–532 (2016).

Althaus, P., Ishiguro, H., Kanda, T., Miyashita, T. & Christensen, H. I. Navigation for human-robot interaction tasks. in Proc. IEEE International Conference on Robotics and Automation 1894–1900 (2004).

Knorr, A. G., Willacker, L., Hermsdörfer, J., Glasauer, S. & Krüger, M. Influence of person- and situation-specific characteristics on collision avoidance behavior in human locomotion. J. Exp. Psychol. Hum. Percept. Perform. 42, 1332–1343 (2016).

Hayduk, L. A. Personal space: where we now stand. Psychol. Bull. 94, 293–335 (1983).

Kendon, A. in Conducting Interaction: Patterns of Behavior in Focused Encounters 209–237 (Cambridge Univ. Press, 1990).

Basili, P. et al. Strategies of locomotor collision avoidance. Gait Posture 37, 385–390 (2013).

Ding, X., Gao, Z. & Shen, M. Two equals one: two human actions during social interaction are grouped as one unit in working memory. Psychol. Sci. 28, 1311–1320 (2017).

Kendal, R. L. et al. Social learning strategies: bridge-building between fields. Trends Cogn. Sci. 22, 651–665 (2018).

Kruse, T., Pandey, A. K., Alami, R. & Kirsch, A. Human-aware robot navigation: a survey. Rob. Auton. Syst. 61, 1726–1743 (2013).

Avrunin, E. & Simmons, R. Socially-appropriate approach paths using human data. in The 23rd IEEE International Symposium on Robot and Human Interactive Communication 1037–1042 (IEEE, 2014).

Karunarathne, D., Morales, Y., Kanda, T. & Ishiguro, H. Model of side-by-side walking without the robot knowing the goal. Int. J. Soc. Robot. 10, 401–420 (2018).

Senft, E., Satake, S. & Kanda, T. Would you mind me if I pass by you?: Socially-appropriate behaviour for an omni-based social robot in narrow environment. in Proc. 2020 ACM/IEEE International Conference on Human-Robot Interaction 539–547 (IEEE, 2020).

Repiso, E., Zanlungo, F., Kanda, T., Garrell, A. & Sanfeliu, A. People’s V-formation and side-by-side model adapted to accompany groups of people by social robots. in IEEE International Conference on Intelligent Robots and Systems 2082–2088 (IEEE, 2019).

Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1, 206–215 (2019).

Domingos, P. A few useful things to know about machine learning. Commun. ACM 55, 78–87 (2012).

Knowles, E. S. et al. Group size and the extension of social space boundaries. J. Pers. Soc. Psychol. 33, 647–654 (1976).

Hall, E. T. A system for the notation of proxemic behavior. Am. Anthropol. 65, 1003–1026 (1963).

Setti, F., Lanz, O., Ferrario, R., Murino, V. & Cristani, M. Multi-scale f-formation discovery for group detection. in 2013 IEEE International Conference on Image Processing 3547–3551 (IEEE, 2013).

Satake, S. et al. How to approach humans? Strategies for social robots to initiate interaction. in Proc. 4th ACM/IEEE International Conference on Human-Robot Interaction, HRI’09 109–116 (2009).

Ishiguro, H. et al. Robovie: an interactive humanoid robot. Ind. Robot An Int. J. 28, 498–503 (2001).

Pacchierotti, E., Christensen, H. I. & Jensfelt, P. Evaluation of distance for passage for a social robot. in 15th Annual IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN06) 315–320 (2006).

Pacchierotti, E., Jensfelt, P. & Christensen, H. I. Tasking everyday interaction. in Autonomous Navigation in Dynamic Environments 151–168 (Springer, 2007).

Truong, X. T. & Ngo, T. D. Dynamic social zone based mobile robot navigation for human comfortable safety in social environments. Int. J. Soc. Robot. 8, 663–684 (2016).

Kirby, R., Simmons, R. & Forlizzi, J. COMPANION: a constraint-optimizing method for person-acceptable navigation. in The 18th IEEE International Symposium on Robot and Human Interactive Communication 607–612 (IEEE, 2009).

Kirby, R. Social Robot Navigation. PhD thesis, Carnegie Mellon Univ. (2010).

Helbing, D. & Molnár, P. Social force model for pedestrian dynamics. Phys. Rev. E 51, 4282–4286 (1995).

Shiomi, M., Zanlungo, F., Hayashi, K. & Kanda, T. Towards a socially acceptable collision avoidance for a mobile robot navigating among pedestrians using a pedestrian model. Int. J. Soc. Robot. 6, 443–455 (2014).

Vassallo, C. et al. How do walkers behave when crossing the way of a mobile robot that replicates human interaction rules? Gait Posture 60, 188–193 (2018).

Sisbot, E. A., Marin-Urias, L. F., Alami, R. & Simeon, T. A human aware mobile robot motion planner. IEEE Trans. Robot. 23, 874–883 (2007).

Mac, T. T., Copot, C., Tran, D. T. & De Keyser, R. Heuristic approaches in robot path planning: A survey. Rob. Auton. Syst. 86, 13–28 (2016).

Fisac, J. et al. Probabilistically safe robot planning with confidence-based human predictions. in Robotics: Science and Systems (2018).

Nocentini, O. et al. A survey of behavioral models for social robots. Robotics 8, 54 (2019).

Armougum, A., Orriols, E., Gaston-Bellegarde, A., La Marle, C. J. & Piolino, P. Virtual reality: a new method to investigate cognitive load during navigation. J. Environ. Psychol. 65, 101338 (2019).

Fajen, B. R. & Warren, W. H. Behavioral dynamics of steering, obstacle avoidance, and route selection. J. Exp. Psychol. Hum. Percept. Perform. 29, 343–362 (2003).

Kalakrishnan, M., Chitta, S., Theodorou, E., Pastor, P. & Schaal, S. STOMP: Stochastic trajectory optimization for motion planning. in 2011 IEEE International Conference on Robotics and Automation 4569–4574 (IEEE, 2011).

Yi, S., Li, H. & Wang, X. Understanding pedestrian behaviors from stationary crowd groups. in Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition 488–496 (IEEE, 2015).

van den Berg, J., Guy, S. J., Lin, M. & Manocha, D. Reciprocal n-body collision avoidance. in Springer Tracts in Advanced Robotics Vol. 70, 3–19 (Springer, 2011).

Moussaïd, M., Helbing, D. & Theraulaz, G. How simple rules determine pedestrian behavior and crowd disasters. Proc. Natl Acad. Sci. USA 108, 6884–6888 (2011).

Moussaïd, M. et al. Experimental study of the behavioural mechanisms underlying self-organization in human crowds. Proc. R. Soc. B 276, 2755–2762 (2009).

Bain, N. & Bartolo, D. Dynamic response and hydrodynamics of polarized crowds. Science 363, 46–49 (2019).

Rio, K. W., Rhea, C. K. & Warren, W. H. Follow the leader: visual control of speed in pedestrian following. J. Vis. 14, 1–16 (2014).

Rio, K. W., Dachner, G. C. & Warren, W. H. Local interactions underlying collective motion in human crowds. Proc. R. Soc. B 285, 20180611 (2018).

Kim, Y. & Mutlu, B. How social distance shapes human–robot interaction. Int. J. Hum. Comput. Stud. 72, 783–795 (2014).

Bera, A., Randhavane, T., Prinja, R. & Manocha, D. SocioSense: Robot navigation amongst pedestrians with social and psychological constraints. in IEEE International Conference on Intelligent Robots and Systems (IROS) 7018–7025 (IEEE, 2017).

Zhou, C., Han, M., Liang, Q., Hu, Y. F. & Kuai, S. G. A social interaction field model accurately identifies static and dynamic social groupings. Nat. Hum. Behav. 3, 847–855 (2019).

Wellman, H. M. Making Minds: How Theory of Mind Develops (Oxford Univ. Press, 2014).

Sethian, J. A. A fast marching level set method for monotonically advancing fronts. Proc. Natl Acad. Sci. USA 93, 1591–1595 (1996).

Zhou, C. et al. Social Locomotion Model FigShare https://doi.org/10.6084/m9.figshare.19937879.v2 (2022).

Zhou, C. et al. Social Locomotion Model v1.1 Zenodo https://doi.org/10.5281/zenodo.6958447 (2022).

Acknowledgements

This research was supported by the National Natural Science Foundation of China (grant no. 32022031) to S.-G.K., the Basic Research Project of Shanghai Science and Technology Commission (grant no. 19JC1410101) to S.-G.K. and the China Postdoctoral Science Foundation (grant no. 2021M701227) to C.Z. We are grateful to Q. Liang, H.-N. Wu, C.-L. Deng and X.-M. Wang for helpful comments on our manuscript, to S.-Y. Chen for technical support, and to T. Zhang and S.-L. Ni for help with data collection.

Author information

Contributions

S.-G.K., C.Z., M.-C.M., Y.-F.H., X.-R.C. and Q.C. developed the concept and conceived the design of the experiments. C.Z., M.-C.M., X.-R.C., Q.C., M.-Y.Y. and Y.-F.H. collected experimental data. C.Z., M.-C.M., X.-R.C., Q.C., Y.-F.H. and M.-Y.Y. derived the models and analysed data under the supervision of S.-G.K.; S.-G.K., C.Z., M.-C.M., Q.C., X.-R.C. and Y.-F.H. wrote and revised the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Tony Belpaeme, Henrik Iskov Christensen and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

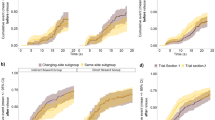

Extended Data Fig. 1 Model prediction for participants’ walking trajectories in Experiment 4 (n = 24 human participants).

The green solid lines show human walking trajectories. The error bands represent the 95% confidence interval of the observed data. The centres of the error bands represent the means of the observed data. The cyan dashed lines represent the predicted trajectories of the social locomotion model, the pink dashed lines represent the predicted trajectories of the COMPANION model and the blue dashed lines represent the predicted trajectories of the n-Body model.

Extended Data Fig. 2 Model performance in predicting the walking trajectories and path selections in Experiment 6.

a, An illustration of the experimental conditions from a bird’s eye view. The virtual human appears in one of nine positions with one of four orientations. b, The path selections predicted by the social locomotion model (left panel), the COMPANION model (middle panel) and the n-Body model (right panel) (n = 25 human participants). c, Model prediction for participants’ walking trajectories (n = 25 human participants). The green solid lines show human walking trajectories. The error bands represent the 95% confidence interval for the observed data. The centres of the error bands represent the means of the observed data. The cyan dashed lines represent the predicted trajectories of the social locomotion model, the pink dashed lines represent the predicted trajectories of the COMPANION model and the blue dashed lines represent the predicted trajectories of the n-Body model.

Extended Data Fig. 3 Model performance in predicting the walking trajectories and path selections in Experiment 7.

a, An illustration of the experimental conditions from a bird’s eye view. The lateral distance condition is the distance from virtual human A to the line connecting the starting point and the target. The interpersonal distance condition is the distance between virtual humans A and B. b, The path selections predicted by the social locomotion model (left panel), the COMPANION model (middle panel) and the n-Body model (right panel) (n = 25 human participants). Solid lines represent the human path selections; dashed lines represent the path selections predicted by the models. Green, pink and cyan lines represent interpersonal distances of 2 m, 3 m and 4 m, respectively. The error bars represent s.e.m. for each condition. c, Walking trajectories predicted by the social locomotion model, the COMPANION model and the n-Body model (n = 25 human participants). The green solid lines represent human walking trajectories. The error bands represent the 95% confidence interval for the observed data. The centres of the error bands represent the means of the observed data. The cyan, pink and blue dashed lines show the predicted trajectories of the social locomotion model, the COMPANION model and the n-Body model, respectively.

Extended Data Fig. 4 Experimental procedure in Experiment 8.

a, An illustration of the procedure in Experiment 8 from a bird’s eye view. A participant stood at the starting point and walked towards the target position 13 m away. After walking for 3 m, the participant entered a circular area (marked by dashed lines), at which point a virtual human appeared at one of five positions labelled on the circle and started walking. b, An example of a walking trajectory illustrating the participant avoiding a walking virtual human. The dashed line represents the walking trajectory of the participant. The solid line represents the walking trajectory of the virtual human.

Extended Data Fig. 5 Model performance in predicting the walking trajectories and path selections in Experiment 8.

a, The path selections predicted by the social locomotion model (left panel), the COMPANION model (middle panel) and the n-Body model (right panel) (n = 25 human participants). Solid lines represent the human path selections; dashed lines represent the path selections predicted by the models. Lines in different colours represent the speeds of the virtual human, which are 0.8 (green), 1.0 (pink) and 1.2 (cyan) times the natural walking speed of each participant. The error bars represent s.e.m. for each condition. b, Walking trajectories of participants predicted by the social locomotion model, the COMPANION model and the n-Body model (n = 25 human participants). The green solid lines represent human walking trajectories. The error bands represent the 95% confidence interval for the observed data. The centres of the error bands represent the means of the observed data. The cyan, pink and blue dashed lines show the predicted trajectories of the social locomotion model, the COMPANION model and the n-Body model, respectively.

Extended Data Fig. 6 Comparison between model predicted trajectories and human walking trajectories in Experiment 9.

The green solid lines show human walking trajectories. The cyan dashed lines represent the predicted trajectories of the social locomotion model, the pink dashed lines represent the predicted trajectories of the COMPANION model and the blue dashed lines represent the predicted trajectories of the n-Body model. The error bands represent the 95% confidence interval of the observed data. The centres of the error bands represent the means of the observed data.

Extended Data Fig. 7 Complete results of model predictions for walking trajectories in Experiment 10 (n = 5 human participants).

The green solid lines show human walking trajectories. The cyan dashed lines represent the predicted trajectories of the social locomotion model, the pink dashed lines represent the predicted trajectories of the COMPANION model and the blue dashed lines represent the predicted trajectories of the n-Body model.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhou, C., Miao, MC., Chen, XR. et al. Human-behaviour-based social locomotion model improves the humanization of social robots. Nat Mach Intell 4, 1040–1052 (2022). https://doi.org/10.1038/s42256-022-00542-z

DOI: https://doi.org/10.1038/s42256-022-00542-z