Abstract

Collaborative robots are expected to physically interact with humans in daily living and the workplace, including industrial and healthcare settings. A key enabling technology is tactile sensing, which currently faces the outstanding scientific challenge of simultaneously detecting contact location and intensity by means of soft conformable artificial skins that adapt over large areas to the complex curved geometries of robot embodiments. In this work, the development of a large-area sensitive soft skin with a curved geometry is presented, allowing for robot total-body coverage through modular patches. The biomimetic skin consists of a soft polymeric matrix, resembling a human forearm, embedded with photonic fibre Bragg grating transducers, which partially mimic Ruffini mechanoreceptor functionality with diffuse, overlapping receptive fields. A convolutional neural network deep learning algorithm and a multigrid neuron integration process were implemented to decode the fibre Bragg grating sensor outputs for inference of contact force magnitude and localization through the skin surface. Median errors of 35 mN (interquartile range 56 mN) and 3.2 mm (interquartile range 2.3 mm) were achieved for force and localization predictions, respectively. Demonstrations with an anthropomorphic arm pave the way towards artificial-intelligence-based integrated skins enabling safe human–robot cooperation.

Main

Collaborative robots, or cobots, should have the capability to interact with humans in a shared workspace1 in different scenarios, ranging from industrial production, transportation and delivery of goods to medical aid and rehabilitation2,3,4. Workers and cobots are expected to physically cooperate in unstructured common spaces, and with the ongoing Industry 4.0 transition the machine will no longer be considered a potential substitute but rather a companion, assisting and complementing human abilities in performing a wide range of tasks.

Current cobots typically integrate sensing technologies for contact detection, such as force/torque sensors5, complementing proximity identification6,7. These technologies constrain modularity, scalability and retrofitting to installed non-collaborative machines, and also require low-inertia, low-payload configurations. Since their presence might be harmful for humans, robots still operate inside closed cages and are kept separated from workers in most processes. In this domain, accidental or voluntary contact might occur; thus, the availability of intelligent sensing systems would be essential for coexistence in unstructured environments. A robot able to sense, categorize and respond to touch throughout its body, ideally mimicking human sensory performance, might lead to more meaningful and intuitive interactions8 and enhanced flexibility, reproducibility, productivity and risk reduction.

Thus, safe physical cooperation and interaction with the surroundings depend on the availability of tactile feedback, touch being the sensory modality that enables humans to gather a variety of haptic information about the external world by exploring object properties through contact and manipulation9,10. The main families of human mechanoreceptors that provide the brain with short-latency feedback to enable closed-loop sensorimotor control are innervated by myelinated fibres and are classified into categories depending on end-organ morphology and positioning with respect to the skin structure, which determine the neural encoding of the mechanical input signal11. Mechanoreceptors are defined as slowly adapting (SA) or fast adapting (FA) depending on their temporal response, with SA units responding to sustained indentations and FA ones mainly encoding stimulation transients. Type 1 (surface-located) and type 2 (deeply located) classes instead reflect the positioning relative to the epidermal layer, which mainly affects the receptive field and the definition of its borders. Specifically, type 1 units have smaller and well defined fields with multiple hotspots (that is, regions with maximal sensitivity within the receptive field), whereas type 2 ones present blurred sensitive regions with single larger spots12,13. The combination of temporal response and spatial properties returns four main classes, namely FA1 (Meissner corpuscles), SA1 (Merkel cells), FA2 (Pacinian corpuscles) and SA2 (Ruffini corpuscles)14. The integration of multiple mechanoreceptor spiking outputs gives rise to perceptual functions in the brain, such as the ability to determine the location and the magnitude of physical contact through the skin15. These physiological properties, together with explanatory mechanical models describing interactions with soft materials for tactile sensing16,17, became a source of bio-inspiration for the present study.

An ideal bio-inspired artificial skin should consist of tactile sensors distributed over large curved areas, able to resolve tactile stimuli with millimetric localization, millinewton magnitude sensing and millisecond temporal accuracy10,12,18. Artificial skins should also be soft19, stretchable20,21,22,23, lightweight24 and conformable25 and have minimal wiring encumbrance. Therefore, the application of soft components for tactile feedback is pivotal, since their high flexibility and intrinsic compliance enable safe interactions.

Soft e-skin for sensorizing large areas of robot bodies

In the last decade, remarkable sensing patches leveraging different transduction mechanisms have been presented26,27,28,29,30,31 and soft e-skin solutions have been developed for application in collaborative anthropomorphic robots32 and neuroprosthetics33,34,35,36,37. However, artificial skins are not yet an integral component of robotic technologies, unlike vision sensors38. Conventional tactile and proximity sensors have usually been developed using bulky and rigid components. Limited flexibility, deformability and adaptability to unconstrained environments have prompted, so far, the usage of robots in confined spaces. On the other hand, the emerging class of soft sensing devices, provided with deformable substrates such as polymers, gels and fluids39,40,41 in combination with miniaturized sensors42, suggests an approach for the growing call for flexibility.

Covering the whole body of anthropomorphic robots with soft and curved sensing components is a major challenge that is being addressed by state-of-the-art technologies. As an example, the HRP-2 humanoid embeds a multimodal artificial sensor system that mimics the functional layers of the human skin by means of an optical proximity sensor, a three-axis accelerometer, a normal force sensor and a temperature-sensitive element43. Similarly, the iCub integrates a soft skin endowed with three-axis force-sensitive elements44. A step towards highly accurate and reliable soft robotics has been presented with a combination of advanced electronic functionalities and skin-like stretchability to develop a 347-element transistor array for force mapping45. Another soft e-skin was recently based on a capacitor array within a polyurethane matrix and tested when mounted onto the end-effector of a robotic arm. This system achieved both normal and shear force estimation in real time with high sensitivity and excellent cycling stability, with the tracked signal being also used to control and stop the system during predefined tasks46.

Recently developed pressure-sensitive e-skins47 presented stretchability and conformability, even when simultaneously detecting more than two stimuli48. Nevertheless, their row–column taxel addressing modality entails major wiring issues, especially when integrating a high number of sensors over complex curved shapes for applications in humanoid robots, human–machine interfaces and healthcare machines.

Sensor data and artificial intelligence (AI) to enable human–robot cooperation (HRC)

The ongoing Industry 4.0 manufacturing framework and, in particular, personalized mass production, enabled by HRC, require the deployment of cobots that are able to dynamically change their preprogrammed tasks and share the workspace with human operators49. Traditional control methods often do not match the needs of a sensor-based, flexible and integrated solution; therefore, HRC applications may benefit from deep learning (DL) algorithms to overcome the current limitations in modelling and mimicking human activities50.

The viability of DL has been proven in several HRC applications, such as computer vision51, object identification52 and speech and body posture recognition53. Furthermore, the potential of DL based on convolutional neural networks (CNNs) has also been investigated for the development of electronic skins with biologically inspired tactile properties, thus mimicking the mechanoreceptors of the human skin54.

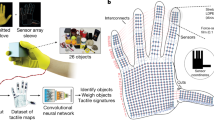

This work presents a bio-inspired sensitive skin, able to detect external tactile stimuli in terms of both contact location and magnitude, thus providing robots with the ability to dynamically interact with the environment. Such an intelligent artificial skin will foster robots’ awareness and understanding of the surroundings in dynamic tasks, by means of an integrated tactile sensor array inspired to some extent by the large and single-hotspot receptive fields of Ruffini SA2 corpuscles11 combined with DL strategies. The degree of bio-inspiration is mainly achieved with the deep positioning and shape of fibre Bragg grating (FBG) sensors in the soft polymeric materials, to emulate the spatial properties of the receptive fields of fusiform SA2 units. The developed curved modular sensorized e-skin patches integrating photonic FBG sensors allow the whole body of collaborative robots to be endowed with tactile sensing capabilities (Fig. 1a).

An outstanding feature of the FBG technology is the possibility to inscribe multiple sensing gratings within one single optical fibre core, each associated with its nominal wavelength λB and fully customizable in terms of length (from 1 mm up to 20 mm) and placement (along the fibre). This asset minimizes the number of necessary communication channels and therefore reduces wiring management issues. The presented e-skin integrated with FBG tactile sensors advances the state of the art by enabling complete coverage of the cobot surface with a single wiring element connecting multiple wavelength-separated transducers. Building on the Ruffini-like sensor spatial outputs and DL methods, each patch was demonstrated to efficiently decode both the magnitude and the localization of the contact force distributed over the large-area artificial skin. This goal was achieved by leveraging the overlapping receptive fields of the FBGs, which were further interpreted by means of AI strategies.

Results

e-skin mimicking spatial properties of Ruffini corpuscles

The presented artificial skin was aimed at emulating the human skin functionality by embedding FBGs within a soft polymeric substrate that conveys the applied load to the optical sensors. Specifically, it imitated to some extent the functional role of the human SA2 afferents, through the cross-talk of neighbouring sensors with overlapping receptive fields, each having a single large hotspot, to achieve contact localization and force estimation (Fig. 2a,b).

Fig. 2: a, Human skin with positioning and receptive fields of Ruffini corpuscles highlighted. b, Artificial skin with FBGs. c, Strain (RR component) experienced by the encapsulation material at 50 mN. d, Optical fibre path and positioning of the FBGs in the skin. e, Receptive fields of three FBGs characterized by indenting with a hemispherical probe (11 mm diameter) and plotting the force required to achieve a 0.02 nm wavelength variation, corresponding to ten times the threshold used for contact detection.

The FBG positioning along the optical fibre implemented a varying spatial density, to coarsely reproduce that of the human mechanoreceptors in the forearm, which increases from the elbow to the wrist13,55.

Therefore, the developed artificial skin exhibits a bio-inspired variable transducer density, higher closer to the wrist and decreasing towards the elbow (with distances between neighbouring FBGs ranging from 12.9 mm to 24.5 mm, Fig. 2d). The FBG technology is suitable for integrating a mesh of transducers since its wavelength-multiplexing functioning guarantees low wiring bulkiness (Fig. 2d). The thickness of the polymeric artificial forearm soft cover, encapsulating the optical fibre in the medial plane, was 8 mm to achieve a trade-off between FBG sensitivity and receptive field size (Fig. 2c)56, resulting in the triangulation of neighbouring sensors that enabled simultaneous contact localization and force reconstruction via DL methods. This was modelled via finite element method (FEM) analysis with a parametric sweep (in the range 4–12 mm) on the polymer thickness while applying a load on its top surface (Fig. 2c; boundary conditions and simulation details are given in Methods). The simulation results confirmed that the radial RR component of the strain tensor measured in the centre of the polymer layer decreases with the thickness; conversely, the receptive field size increases (Extended Data Fig. 1). The application of loads through calibrated Von Frey hairs to characterize the sensitivity of the skin in human–robot interaction (Supplementary Video 1) resulted in a sigmoid contact detection rate (Extended Data Fig. 2b; a = 2.2 mN−1, b = 12.4 mN, where a and b are the curve steepness and the force value that results in a 50% contact detection probability, respectively; see Methods for the definition of the sigmoid fitting curve coefficients and the code published in the Code Ocean repository for details) for increasing microfilament diameters, with a 50.6 mN 75% probability threshold.

Raw FBG sensor data showed the activation of neighbouring FBGs (Fig. 2d), depending on the location and the intensity of the force applied via a hemispherical probe controlled with a mechatronic platform, with 15.9 mm2 median area (9.0 mm2 first quartile, 20.9 mm2 third quartile) of the hotspot across all FBGs (shown in black in Fig. 2e). In particular, wavelength changes correlate with the distance from, and the magnitude of, the applied load (Fig. 3).

DL for contact force and localization inference

Considering fine manipulation activities that are typically categorized as gentle touch57,58,59, both a CNN and multilayer perceptrons (MLPs) were trained (Fig. 4) on the basis of a series of force-controlled indentations, up to 2.5 N, performed on the surface of the skin in random positions uniformly distributed over 120 mm in length (Y) and 90° rotation span (R) around the elbow–wrist Y axis (Fig. 5b). The CNN reconstructed the applied external force and a system of four MLPs localized the contact source, both relying on the 16 FBG readouts that were the network inputs.

Fig. 4: A CNN is developed for force intensity detection. For forces above the 50 mN threshold, a feedforward neural network stage and a subsequent multigrid neuron integration process (NIP) implement the localization of the load applied onto the skin surface. The table in the bottom right corner shows the main results, namely the error on force intensity detection and on the localization of a stimulus applied onto the skin surface.

Fig. 5: a, Path of the optical fibre integrating FBGs into the artificial skin. b, Test indentation points with uniform random distribution over 120 mm in length (Y) and 90° rotation span (R) around the elbow–wrist Y axis. c,d, Force intensity median error and contact localization median error for each indentation over the whole area, with higher error fluctuations observed just at the edges.

The CNN (flowchart of Fig. 4) was the first step of the processing pipeline (that is, intensity detection block). A fivefold cross-validation was performed and resulted in a median cross-validation error of 30 mN with 1 mN interquartile range (IQR) (Extended Data Table 1). Furthermore, a consistent prediction error across the folds was achieved since the median of the IQRs of the single validation fold resulted in 56 mN with 2 mN IQR (Extended Data Table 1). It is worth mentioning that the implemented neural network correctly predicted the force across the whole 0–2.5 N range, as shown in Fig. 4 (upper right) and in the moving average box plot of Fig. 6. In fact, the CNN predictions also followed the changes in the force slope occurring during the initial phase of the contact (0.05–0.5 N).

The contact localization block was the second part of the processing pipeline (Fig. 4), and its activation was contingent upon the intensity detection block predicting a force above 50 mN. It consisted of four feedforward neural networks followed by a multigrid neuron integration process (NIP). In more detail, by relying on the predictions of the individual neural networks, each area that represented a target class of a neural network was associated with a confidence level. Therefore, by geometrically overlapping the subareas and adding the corresponding weights provided by the individual neural networks, the coordinates of the indentation were predicted. The resulting cross-validation median error was 3.6 mm (IQR = 0.1 mm; Extended Data Table 2), that is, about one-quarter of the minimal distance among nearest-neighbour sensing elements, thanks to triangulation rules learnt by means of DL strategies. The NIP resulted in substantial improvements in prediction accuracy compared with the single neural networks. Namely, the SG-, DSG-, HSG- and VSG-NN (Extended Data Table 2) resulted in cross-validation median errors of 12.5 mm (IQR = 8.4 mm), 6.5 mm (IQR = 4.4 mm), 11.0 mm (IQR = 9.3 mm) and 8.6 mm (IQR = 4.9 mm), respectively.
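The overlap-and-sum idea behind the NIP can be illustrated with a minimal sketch. It is not the published implementation: the grid dimensions, the cell sizes, the encoding of each grid as a pair of half-pitch shifts, the toy confidence values and the choice of the highest-scoring sub-area as the prediction are all assumptions made here for illustration.

```python
from itertools import product

# All sizes below are hypothetical and chosen only to keep the example small.
PITCH = 2                          # a coarse cell spans 2 x 2 fine sub-cells (half-pitch resolution)
N_SUB_Y, N_SUB_R = 4, 4            # number of fine sub-cells along Y and R
SUB_Y_MM, SUB_R_DEG = 30.0, 22.5   # sub-cell size: 120 mm / 4 and 90 deg / 4


def coarse_cell(sub_index, shift):
    """Index of the coarse cell covering a fine sub-cell, for a grid shifted by `shift` sub-cells."""
    return (sub_index + shift) // PITCH


def integrate(confidences):
    """Sum, for every fine sub-cell, the confidence of the coarse cell covering it in each grid.

    `confidences` maps a grid shift (shift_y, shift_r) to a dict {(cell_y, cell_r): confidence}.
    Returns the highest-scoring sub-cell and the full score map.
    """
    scores = {}
    for iy, ir in product(range(N_SUB_Y), range(N_SUB_R)):
        scores[(iy, ir)] = sum(
            conf.get((coarse_cell(iy, sy), coarse_cell(ir, sr)), 0.0)
            for (sy, sr), conf in confidences.items()
        )
    best = max(scores, key=scores.get)
    return best, scores


def centre_of(sub_cell):
    """Centre of a fine sub-cell in (Y in mm, R in degrees)."""
    iy, ir = sub_cell
    return (iy + 0.5) * SUB_Y_MM, (ir + 0.5) * SUB_R_DEG


# Toy class confidences from four classifiers, one per grid (values are made up).
confidences = {
    (0, 0): {(1, 0): 0.7, (1, 1): 0.3},  # unshifted grid
    (0, 1): {(1, 1): 0.8, (1, 0): 0.2},  # grid shifted by half a pitch along R
    (1, 0): {(1, 0): 0.6, (2, 0): 0.4},  # grid shifted by half a pitch along Y
    (1, 1): {(1, 1): 0.9, (1, 2): 0.1},  # grid shifted along both axes
}

best, scores = integrate(confidences)
y_mm, r_deg = centre_of(best)
```

Because each coarse cell of a shifted grid straddles the boundaries of the unshifted one, the intersection of the four most confident cells is a single sub-cell at half the pitch, which is how four coarse classifiers can jointly localize more finely than any one of them.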

Extensive training and cross-validation results for both the intensity detection block and the contact localization block are presented in Extended Data Tables 1 and 2, respectively.

The test set resulted in a median error of 35 mN (IQR = 56 mN) for force prediction and of 3.2 mm (IQR = 2.3 mm) for position prediction (Fig. 4, bottom right). Both the force intensity and the spatial accuracy predictions were robust over the skin surface, although force and localization errors increased at the edges of the skin, because of the relatively limited number of sensors to learn triangulation rules near the boundary (Fig. 5c,d).

The force prediction error increased linearly with the actual force (R² = 0.97), as also seen by comparing the absolute force error across the force ranges (Fig. 6a,b). Conversely, as the actual force increased, the accuracy in assessing contact position improved in the force range 0.05–0.5 N and then stabilized up to 2.5 N (Fig. 6c,d).

In addition, as a benchmark for our machine learning solution, random guess (RG) models, based on available data, were implemented. For the intensity detection RG model, predictions were set equal to the median of the force in the training set, resulting in a median absolute error of 194 mN (IQR = 714 mN). By comparing it with our CNN solution, via a Wilcoxon signed-rank test, a significant difference was found (P < 0.001, Cohen’s d = 0.84). In the same way, the localization RG model, setting the predictions equal to the position target median from the training set, presented a median absolute error of 34.72 mm (IQR = 24.68 mm). Applying a Wilcoxon signed-rank test between the RG model and our model, a significant difference was found (P < 0.001, Cohen’s d = 1.90), thus confirming the effectiveness of the proposed neural network based model for contact localization.
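The median-based baseline described above can be sketched in a few lines. The force values below are toy numbers, not data from the study, and the helper names are hypothetical; the statistical comparison (Wilcoxon signed-rank test) is not reproduced here.

```python
from statistics import median, quantiles


def median_baseline_errors(train_targets, test_targets):
    """Absolute errors of a model that always predicts the training-set median."""
    guess = median(train_targets)
    return [abs(target - guess) for target in test_targets]


def median_and_iqr(values):
    """Median and interquartile range (quartiles by linear interpolation)."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), q3 - q1


# Toy force targets in mN (made up for illustration).
train_force_mN = [50, 120, 300, 800, 1500, 2400]
test_force_mN = [100, 400, 900, 2000]

errors = median_baseline_errors(train_force_mN, test_force_mN)
baseline_median, baseline_iqr = median_and_iqr(errors)
```

The same pattern applies to the localization baseline, with the absolute error replaced by the distance between the true contact position and the median training position.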

Finally, we demonstrated real-time capabilities of the intensity detection and contact localization blocks via random force-controlled indentations performed on the artificial skin surface (Supplementary Video 3). Furthermore, we reported how the artificial skin responds appropriately in a real-time framework to the interaction with human impressed forces (Supplementary Video 4), providing evidence of potential generalization ability, notwithstanding the intrinsic difference in related contact mechanics in comparison with the hemispherical rigid probe used for training the DL model for contact localization and force estimation.

Discussion

The proposed skin fosters HRC using modular tactile patches that could potentially fit any robot architecture (Supplementary Video 5 shows the integration on a seven-degree-of-freedom (DoF) robot). More specifically, the integrated skin with optical sensors can be used either to cover purposely designed robots or to retrofit existing ones.

Currently, multimodal approaches combining state-of-the-art hardware development and fine sensing skills with advanced AI approaches are showing promising results in artificial skins60. Our study targeted this objective, integrating physical and computational intelligence in the presented soft sensitive skin for collaborative robotics, demonstrating the ability to simultaneously predict the location and the intensity of an external load applied on the patch surface. A polymeric matrix embedding FBG transducers was developed and integrated in a human-scale forearm. The functional role of Ruffini corpuscles was a source of bio-inspiration in terms of properties of the receptive fields, which physiologically have large single high-sensitivity spots, in contrast to, as an example, the Merkel corpuscles, which present multiple smaller hotspots13. In this work we have shown that most of the FBG sensors integrated in the developed skin exhibited a single large responsive area (FBGs 1, 3–5, 7–11, 14–16; see code published in the Code Ocean repository for details), one featured a single responsive area provided by clustered adjacent subregions (FBG 6) and, probably because of local irregularities of the soft encapsulation polymer, a subset exhibited two large responsive areas (FBGs 2, 12 and 13, see published code for details). These results are to some extent comparable to the findings of a background study characterizing the physiological properties of human mechanoreceptors in the forearm, with a single responsive area in nine out of ten SA2 mechanoreceptors13.

Conventional sensing technologies require a wired electrical dipole for each sensor, limiting the number of elements that can be integrated and, thus, the spatial resolution of the large-area sensing skins. With respect to these solutions, FBGs offer competitive advantages, such as intrinsic multiplexing capabilities, high sensitivity, ease of dense integration and immunity to electromagnetic interference (enabling, for example, magnetic resonance compatibility in collaborative healthcare applications)61,62. Therefore, this approach may be considered a disruptive solution to overcome several constraints within the development of the ideal sensitive skin.

DL networks were implemented to retrieve the distributed contact localization (through four MLPs) and the force intensity estimation (through one CNN), starting from the raw FBG wavelengths. Moreover, the NIP strategy permitted us to improve the localization results, leveraging the integrated prediction of four MLPs based on half-pitch shifted grids. The median test set error was 35 mN (IQR = 56 mN) and 3.2 mm (IQR = 2.3 mm) for the estimation of the force and the localization of the source, respectively. In particular, the error distribution of localization all over the skin surface (120 mm along Y and 90° along R) and across the whole range of force (0–2.5 N) was uniform, as shown in Figs. 5 and 6, with lower accuracy and higher error fluctuations observed just at the edges. This suggests exploring the development of a continuous skin in critical applications, rather than discrete patches, so as to remove/mitigate potential boundary effects on sensing accuracy.

The present work illustrated multiple breakthroughs with respect to the previous one-dimensional sensing approaches56. In particular, a soft tactile skin, made of FBG transducers embedded in a soft matrix, proved that normal load exerted onto its curved and complex surface can be identified in terms of both magnitude and application location. Here, the localization capability throughout the curved and large-area sensorized surface has been demonstrated, whereas in the previous study56 contact information was retrieved alongside the optical fibre only.

Future work will further investigate the generalization ability and robustness of the DL strategies, for example with respect to the possibility of changing skin curvature between training and operation phases, and will address the implementation of collaborative behaviours enabled by the availability of such skin patches integrated within a robotic arm (Supplementary Video 5) to achieve interaction management, path replanning and robot programming by demonstration.

Methods

Biomimetic sensitive e-skin

The skin was a soft-material tactile sensor array system distributed over a large area to enable intensity detection and localization of an external force. This skin was designed and developed to cover a 3D-printed, custom-made anthropomorphic robotic arm and to make its forearm sensitive56,63. The sensing system consisted of an 8-mm-thick stretchable polymeric layer (Dragon Skin 10 Medium, Smooth-On), integrating a 430-mm-long optical fibre (FemtoPlus Grating, FemtoFiberTec), whose diameter was 80 μm (102 ± 5 μm with polyimide coating) and whose bending radius was smaller than 4 mm.

FBGs are microresonant structures with a typical length in the range of millimetres that are inscribed along the core of an optical fibre by means of a laser beam passing through a phase mask. The resulting spatial periodic structure is an interference pattern inside the fibre core, which acts as a narrow-band optical filter. When a light source illuminates the FBG, part of the light spectrum is transmitted, whereas the remaining part, centred around the so-called Bragg wavelength (λB), is reflected backward. λB (Fig. 1b) depends on both the effective refractive index of the fibre core (ηeff) and the grating period (ΔB), or pitch, as defined by the Bragg condition:

$$\lambda_B = 2\,\eta_{\mathrm{eff}}\,\Delta_B$$

FBGs are sensitive to strain, since it affects both ΔB and ηeff, so the reflected signal (λB) changes accordingly, as shown in Fig. 1b. The efficacy of this technology has been previously assessed in robotics, ranging from simple integration in soft polymers64,65,66 to more complex tactile sensors56 and robotic hand prototypes67, often in combination with AI techniques and FEMs56. These works share the idea of encapsulating optical fibres in silicone rubbers for an efficient transfer of the external contact pressures to the embedded FBGs and to mimic the soft properties of human skin. The strain experienced by a grating results in a shift of its central wavelength, which varies with the distance from, and the intensity of, the contact interaction (Supplementary Video 2). Furthermore, the polymeric substrate enhances the robustness of the encapsulated optical fibre.
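As a rough order-of-magnitude illustration of this strain sensitivity, the textbook first-order relation for a uniformly strained grating at constant temperature can be written out. The effective photo-elastic coefficient p_e and its value of about 0.22 for silica fibre are assumptions introduced here and are not taken from this work; the axial strain ε is likewise a symbol introduced for the example.

```latex
% Bragg condition, with the symbols used in the text
\lambda_B = 2\,\eta_{\mathrm{eff}}\,\Delta_B

% First-order response to an axial strain \varepsilon at constant temperature
% (p_e: effective photo-elastic coefficient, assumed \approx 0.22 for silica)
\frac{\Delta\lambda_B}{\lambda_B} = (1 - p_e)\,\varepsilon

% Example at \lambda_B = 1550\,\mathrm{nm}
\Delta\lambda_B \approx 0.78 \times 1550\,\mathrm{nm} \times \varepsilon
               \approx 1.2\,\mathrm{pm}\ \text{per}\ \mu\varepsilon
```

Under these assumptions, a wavelength variation of a few picometres corresponds to a strain of only a few microstrain along the grating.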

The integrated optical fibre was endowed with nFBG = 16 FBGs, each 8 mm in length, with nominal λB ranging from 1,530 nm to 1,564.5 nm in steps of 2.3 nm between consecutive gratings (pattern shown in Fig. 2d). The skin dimensions were 150 mm along the vertical elbow–wrist axis (Y), covering a 145° region (labelled with rotational coordinate R).
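The wavelength plan can be checked with a short sketch: 16 gratings starting at 1,530 nm with a 2.3 nm spacing end at 1,564.5 nm, which sits inside the 1,510–1,590 nm range quoted for the interrogator. The even spacing follows the text; the function and constant names are hypothetical, and no mapping between wavelength order and position along the fibre is implied.

```python
N_FBG = 16                  # number of gratings (from the text)
LAMBDA_FIRST_NM = 1530.0    # lowest nominal Bragg wavelength (from the text)
SPACING_NM = 2.3            # spacing between consecutive nominal wavelengths (from the text)
INTERROGATOR_RANGE_NM = (1510.0, 1590.0)  # interrogator wavelength range (from the text)


def nominal_wavelengths(n=N_FBG, first=LAMBDA_FIRST_NM, spacing=SPACING_NM):
    """Evenly spaced nominal Bragg wavelengths in nm, rounded to 0.1 nm."""
    return [round(first + i * spacing, 1) for i in range(n)]


wavelengths = nominal_wavelengths()
low, high = INTERROGATOR_RANGE_NM
all_in_range = all(low <= w <= high for w in wavelengths)
```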

e-skin fabrication process

The manufacturing process of the soft curved skin for the covering of the anthropomorphic forearm is reported in Extended Data Fig. 3. Several custom moulds were designed and fabricated by means of a 3D printer (Ultimaker S5, Ultimaker). A first polymeric layer was cast by pouring Dragon Skin 10 into a mould consisting of the forearm support and a cover with the pattern (1 mm extrusion) for hosting the optical sensor. Once the optical fibre equipped with the 16 FBGs was encapsulated within the dedicated channel, the closing silicone layer was poured into a smooth surface mould.

FEM simulation

An FEM analysis of the sensitive skin behaviour was performed in COMSOL Multiphysics (COMSOL). The simulations addressed the evaluation of a load applied onto the skin top surface when a hemispherical indenter was used. The scope of this preliminary study was to evaluate the effect of an increasing thickness of the encapsulation material, searching for a proper trade-off between the receptive field size and the sensitivity of the sensors. The skin model consisted of a soft polymeric layer with parametric thickness in the range 4–12 mm. The lower limit was set to prevent delamination of the soft polymer and to ease integration of the optical fibre, whereas the upper limit was enforced to guarantee enough space for the actuation units embedded in the robotic arm. To simplify the model and reduce the computational burden, a two-dimensional axisymmetric simulation was run, by considering the mid-thickness skin radial strain as representative of that experienced by the fibre. The polymeric substrate (density ρ = 1,070 kg m−3, Young’s modulus E = 152 kPa, Poisson coefficient ν = 0.49) was modelled as a Yeoh hyperelastic material, that is, through a third-order polynomial expression with coefficients c1, c2 and c3 (for the considered polymer68: c1 = 36 kPa, c2 = 258 Pa, c3 = −0.56 Pa), which reduces to the classical neo-Hookean model when only c1 is considered; c1 in turn represents half of the material shear modulus in the undeformed configuration. The steel indenter was modelled as a linear elastic material, whose mechanical properties were E = 200 GPa, ν = 0.30 and ρ = 7,850 kg m−3. The simulation consisted of applying loads by means of the indenter on the top surface of the soft object, replicating the experimental indentations. In the FEM simulation, a null displacement at the bottom surface of the skin was set, since it was attached to the rigid support surface. Moreover, mesh independence was achieved by performing a mesh refinement study until robust results were reached.
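For reference, the third-order polynomial mentioned above is commonly written as the Yeoh strain energy density below. This is the standard incompressible textbook form, given here as an assumption about what the model reduces to; the symbols W, I_1 and μ_0 are introduced for the example, and any volumetric term used by the solver for the nearly incompressible case is omitted.

```latex
% Yeoh strain energy density (incompressible form);
% I_1 is the first invariant of the right Cauchy-Green deformation tensor
W = \sum_{i=1}^{3} c_i \,(I_1 - 3)^i
  = c_1 (I_1 - 3) + c_2 (I_1 - 3)^2 + c_3 (I_1 - 3)^3

% Keeping only c_1 gives the neo-Hookean model, W = c_1 (I_1 - 3),
% with initial shear modulus
\mu_0 = 2\,c_1
```

With c1 = 36 kPa, this relation gives an initial shear modulus of 72 kPa for the Yeoh parameter set quoted in the text.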

Testing of e-skin sensitivity with Von Frey hairs

The sensitivity of the FBG-based artificial skin was evaluated by means of Von Frey hairs to characterize the human–machine interaction potential. These calibrated microfilaments, widely employed in neurophysiological tests for assessing human skin sensitivity to mechanical pressure, are nylon filaments with varying diameter: the smaller the diameter, the less force the filament exerts on the skin during application before buckling. In particular, eight Von Frey hairs (60 g, 26 g, 10 g, 4 g, 2 g, 1 g, 0.6 g and 0.4 g) were selected to manually stimulate the artificial skin. A cohort of 12 subjects was asked to provide 20 indentations for each of the eight filaments (12 subjects × 20 sites × 8 filaments = 1,920 stimulations) randomly across the artificial skin surface covering the forearm of the human-like robotic arm (Extended Data Fig. 2a and Supplementary Video 1). A graphical user interface (LabVIEW, National Instruments) was developed for data collection and real-time processing, allowing us to continuously read and detect the 16 FBG wavelength variations. When at least one sensor output exceeded a wavelength variation threshold, a stimulus was classified as a touch detection. This wavelength threshold was heuristically set to 2 pm to avoid background noise determining a spurious contact identification. The stimulus detection rate was then calculated for each microfilament to compute a psychometric-like fitting and thus the force sensitivity threshold of the artificial skin. Detection rates were fitted using a sigmoid curve:

$$f(x) = \frac{1}{1 + e^{-a\,(x - b)}}$$

where x is the force associated with the calibrated Von Frey hair, and a and b are the curve steepness and the force value that results in a 50% contact detection probability, respectively. The sigmoid coefficients were computed via a nonlinear least squares method (MATLAB, MathWorks). The force sensitivity threshold was estimated, as commonly done in psychophysical research59, by computing the stimulus force value that returns a 75% event probability on the sigmoid fitting.
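The detection rule and the threshold read-out can be sketched as follows. The 2 pm threshold and the "at least one sensor" rule come from the text; taking the absolute value of the wavelength variation, assuming a standard logistic form for the sigmoid, and the values of a and b used in the example are assumptions made here, and the function names are hypothetical.

```python
import math

THRESHOLD_NM = 0.002  # 2 pm wavelength variation threshold (from the text)


def is_touch(delta_lambda_nm):
    """True if at least one FBG wavelength variation exceeds the threshold.

    Using the absolute value is an assumption; the text only mentions a threshold.
    """
    return any(abs(d) > THRESHOLD_NM for d in delta_lambda_nm)


def detection_rate(trials):
    """Fraction of stimulations classified as a touch."""
    return sum(is_touch(trial) for trial in trials) / len(trials)


def logistic(x, a, b):
    """Assumed sigmoid: steepness a, 50% detection probability at x = b."""
    return 1.0 / (1.0 + math.exp(-a * (x - b)))


def force_at_probability(p, a, b):
    """Force at which the assumed logistic curve reaches probability p."""
    return b + math.log(p / (1.0 - p)) / a


# Two toy stimulations over 16 FBGs (wavelength variations in nm, made up).
trials = [
    [0.0] * 15 + [0.003],  # one grating above threshold: detected
    [0.001] * 16,          # all gratings below threshold: missed
]
rate = detection_rate(trials)

# Hypothetical fit coefficients, used only to exercise the inversion.
a_example, b_example = 0.05, 20.0
threshold_75 = force_at_probability(0.75, a_example, b_example)
```

In practice the detection rates computed per filament would be the data points passed to the nonlinear least squares fit, and the fitted coefficients would replace the hypothetical ones above.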

Automated testing of the e-skin with a mechatronic platform

A four-DoF mechatronic platform was used to collect data about position and intensity arising from the force-controlled indentations on the forearm skin. As shown in Extended Data Fig. 4, the apparatus consisted of (i) two motorized stages (8MTF-102LS05, STANDA; 2.5 µm full step resolution and 102 mm × 102 mm travel range) for horizontal displacements (X–Y), (ii) a precision motorized positioner (8MVT120-25-4247, STANDA; 5 µm full step resolution and 25.4 mm travel range) for vertical translations (Z) and (iii) a motorized rotator (8MR190-2, STANDA; 0.01° full step resolution and 360° rotation range around the Y axis, resulting in the R rotational coordinate). In addition, a six-axis load cell (Nano-43 with SI-18-0.25 calibration, ATI Industrial Automation; 1/256 N resolution up to 18 N sensing range), equipped with a cylindrical steel probe (that is, an indenter 21 mm in length and with a hemispherical tip 11 mm in diameter) mimicking the size of a human fingertip, provided the measurement of the force component exerted along the loading direction of the skin (Z). A benchmark optical interrogator (FBG-Scan 904, FBGS; 0.3 pm 1σ precision in the 1,510–1,590 nm wavelength range) was used to illuminate the FBGs with a broad spectrum and to detect the reflected wavelengths. Data streaming was achieved via the interrogator’s built-in software (ILLumiSense, FBGS). The control of the overall experimental set-up and data recording were carried out by means of a dedicated graphical user interface (LabVIEW, National Instruments).

Automated data collection protocol with mechatronic platform

An experimental protocol consisting of 2,700 force-controlled indentations was carried out. The indentations were spatially randomized across the artificial skin surface and performed by means of the steel indenter. Each selected point was loaded up to 2.5 N along the vertical direction (Z), and only data taken during the increasing-load phase were retained for further processing. The force intensity time series (Fz), the location data (Y; R) and the readouts of the FBG array were collected at a sampling rate of 100 Hz during each indentation. The total indented area was set to 120 mm along Y and 90° along R, and the final dataset included a total of more than 2.7 million samples.
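As a rough check on these figures, more than 2.7 million samples over 2,700 indentations at 100 Hz corresponds to an average of more than 1,000 retained samples, that is, more than 10 s of loading, per indentation. The selection of the increasing-load phase could be sketched as below; the rule used here (keep everything up to the force peak) and the function name are assumptions of this sketch, since the exact criterion is not specified.

```python
def increasing_load_phase(force):
    """Return the sample indices from the start of an indentation up to and
    including the first occurrence of the peak force (assumed selection rule)."""
    peak = max(range(len(force)), key=force.__getitem__)
    return list(range(peak + 1))

# Average number of retained samples per indentation implied by the text.
SAMPLES_PER_INDENTATION = 2_700_000 / 2_700      # lower bound: 1000 samples
SECONDS_PER_INDENTATION = SAMPLES_PER_INDENTATION / 100  # at 100 Hz
```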

Evaluation of receptive fields of e-skin FBG sensors

The receptive field of each FBG was assessed by evaluating the spatial distribution of contact force required to achieve a wavelength variation of at least 20 pm. These force levels and the areas underpinning them were represented by means of filled two-dimensional contour plots, where the hotspot regions with maximal sensitivity are represented in black (mapping the spatial regions of the receptive field responsive up to the first sixth of indentation force magnitude).
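The quantity mapped in these contour plots can be illustrated with a short sketch: for each indented location, find the smallest force at which a given FBG reaches the 20 pm wavelength variation. The data layout (a dictionary from location to force and wavelength-variation series) and the function names are hypothetical, and taking the absolute variation is an assumption.

```python
def force_to_reach(force, delta_lambda_pm, target_pm=20.0):
    """Smallest recorded force at which |wavelength variation| >= target_pm,
    or None if the target is never reached during the indentation."""
    hits = [f for f, d in zip(force, delta_lambda_pm) if abs(d) >= target_pm]
    return min(hits) if hits else None

def receptive_field(indentations, target_pm=20.0):
    """Map each (y, r) location to the force required by one FBG.
    `indentations` maps location -> (force series, wavelength-variation series)."""
    return {loc: force_to_reach(f, d, target_pm)
            for loc, (f, d) in indentations.items()}
```

Locations that return a low required force correspond to the high-sensitivity hotspots; locations returning None fall outside the receptive field at the tested force range.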

DL model and multigrid NIP

A DL model was developed and assessed with the Ngene LabVIEW module. The model was made up of two main blocks: the intensity detection block, that is, a CNN, chosen for its capability of handling time series69, and the localization block, consisting of four dense MLPs. The first block provided the estimation of the force applied onto the skin surface, whereas the second block estimated the contact point coordinates. In particular, when the CNN predicted a force higher than a 50 mN threshold, the localization block was triggered and, after the multigrid NIP, the contact point was estimated (Fig. 4). The CNN consisted of three identical three-dimensional convolutional layers for feature extraction from the raw data. Each of them had 16 kernel filters (size 16 × 1 × 1) and a convolutional stride of S = 1, thus matching the input size, and used a rectified linear unit activation function70. A max-pooling layer followed each convolutional layer, and afterwards a flattening layer was added. At the end of the stacked convolutional module, two fully connected layers of 100 and 1 neurons, respectively, were connected to perform regression. The 100-neuron layer used a rectified linear unit activation function, while the output of the last neuron was implemented via a linear activation function. Then, the nFBG inputs were processed by the second block to perform localization. Each of the four classification networks consisted of three hidden layers, one dropout layer to prevent overfitting71 and an output layer with a softmax activation function.
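The two-block flow described above, where localization runs only when the predicted force exceeds 50 mN, can be sketched as a simple gate. The two predictor callables stand in for the trained CNN and for the MLP/NIP stage; they and the function name are placeholders, not part of the Ngene LabVIEW module.

```python
FORCE_TRIGGER_N = 0.050  # 50 mN trigger stated in the text, expressed in newtons

def decode(sample, predict_force, predict_location):
    """Estimate force first; estimate the contact point only above the trigger.

    predict_force and predict_location are hypothetical callables standing in
    for the CNN regressor and the four-MLP multigrid localization stage."""
    force = predict_force(sample)
    if force <= FORCE_TRIGGER_N:
        return force, None          # no contact: localization is skipped
    return force, predict_location(sample)
```

Gating in this order means the localization networks never have to classify samples that contain only noise.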

As regards the NIP, a multigrid strategy was pursued by training four neural networks using four different grid configurations as target classes. Four different virtual grids of 30, 35, 36 and 42 squares (18 mm × 20 mm) were the targets of the classification networks (Fig. 4). With respect to the grid of 30 virtual areas (SG in Fig. 4), the other grids were vertically (VSG), horizontally (HSG) and diagonally (DSG) shifted by half a square. Each neural network provided a weight, and hence a classification percentage, for each area of the corresponding grid.

By virtually subdividing every single square into four smaller squares (9 mm × 10 mm) and overlapping the four grids, one single finer grid was obtained. The location of the applied load was retrieved with the weighted barycentre of each square.
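The multigrid integration can be illustrated with the sketch below. Several details are assumptions made for the example: a 90 mm × 120 mm flattened surface with the origin at one corner, a 5 × 6 layout for the 30-square grid, shifts applied towards the origin, and fusion by summing the four class weights that cover each 9 mm × 10 mm cell. With these choices the shifted grids have 35, 36 and 42 squares, matching the counts given above. All names are hypothetical.

```python
FINE_W, FINE_H = 9.0, 10.0    # fine cell size in mm (half of 18 mm x 20 mm)
N_FINE_X, N_FINE_Y = 10, 12   # assumed 90 mm x 120 mm surface
# Shift of each grid in half-squares along (x, y); grid names follow Fig. 4.
SHIFTS = {"SG": (0, 0), "VSG": (0, 1), "HSG": (1, 0), "DSG": (1, 1)}

def grid_shape(name):
    """(rows, cols) of a coarse grid once shifted by half a square."""
    sx, sy = SHIFTS[name]
    cols = (N_FINE_X - 1 + sx) // 2 + 1
    rows = (N_FINE_Y - 1 + sy) // 2 + 1
    return rows, cols

def fuse(probs):
    """For each fine cell, sum the weights of the four coarse squares covering it.
    probs[name][row][col] is the softmax output of the network for that grid."""
    fine = [[0.0] * N_FINE_X for _ in range(N_FINE_Y)]
    for name, (sx, sy) in SHIFTS.items():
        for j in range(N_FINE_Y):
            for i in range(N_FINE_X):
                fine[j][i] += probs[name][(j + sy) // 2][(i + sx) // 2]
    return fine

def barycentre(fine):
    """Weighted barycentre (x, y) in mm of the fine-cell centres."""
    total = sum(map(sum, fine))
    x = sum(w * (i + 0.5) * FINE_W for row in fine for i, w in enumerate(row)) / total
    y = sum(w * (j + 0.5) * FINE_H for j, row in enumerate(fine) for w in row) / total
    return x, y

def one_hot(name, row, col):
    """Idealized network output: all weight on a single coarse square."""
    rows, cols = grid_shape(name)
    return [[1.0 if (r, c) == (row, col) else 0.0 for c in range(cols)]
            for r in range(rows)]
```

Because the four grids are offset by half a square, the region where all four selected squares overlap is a single fine cell, which is how the scheme resolves positions more finely than any one classifier.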

All indentation tests involving AI strategies were performed on the same sample of sensorized skin, whereas the demonstrations involving the anthropomorphic robotic arm (Supplementary Videos 2 and 5) were performed with a different sample.

Model validation

The implemented validation approach consisted of (1) a spatially random subdivision of the 2,700 indentations into a training set (85%) and a test set (15%), the latter then used to externally evaluate the model performance, and (2) a k-fold cross-validation (k = 5) within the training set, used to internally validate the model. Model training was implemented via a backpropagation algorithm and a stochastic gradient descent optimization algorithm (with momentum 0.9), and the samples were grouped into 50 minibatches to decrease the training time.
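A minimal sketch of such a split, operating on indentation identifiers so that all samples of one indentation stay together, is given below. The shuffling seed, the interleaved fold assignment and the function name are assumptions of this example.

```python
import random

def split_indentations(n=2700, test_frac=0.15, k=5, seed=0):
    """Randomly split indentation IDs into train/test, then cut the training
    IDs into k folds for cross-validation. Works on whole indentations."""
    ids = list(range(n))
    random.Random(seed).shuffle(ids)
    n_test = round(n * test_frac)
    test, train = ids[:n_test], ids[n_test:]
    folds = [train[i::k] for i in range(k)]  # k disjoint, near-equal folds
    return train, test, folds
```

With the numbers in the text this gives 2,295 training and 405 test indentations, and five folds of 459 indentations each.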

It is worth mentioning that each indentation was fully assigned, hence not split, to one of the datasets (that is, training, validation or test set). This choice ensured that no information about the validation/test set was provided during the model training, hence any form of double-dipping was avoided. Moreover, a z-score normalization was applied to both the training set and to the test set, using the mean and s.d. of the training set.
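The normalization step can be sketched as follows: the mean and s.d. are estimated on the training set only and then reused unchanged on the test set. The use of the sample standard deviation and the function names are assumptions of this example.

```python
import statistics

def fit_zscore(train_values):
    """Estimate normalization statistics on the training set only."""
    mu = statistics.fmean(train_values)
    sd = statistics.stdev(train_values)  # sample s.d.; an assumption here
    return mu, sd

def apply_zscore(values, mu, sd):
    """Normalize any set (training or test) with the training statistics."""
    return [(v - mu) / sd for v in values]
```

Reusing the training statistics keeps the test set from influencing the preprocessing, which is the same concern as the indentation-level split.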

In addition, to test the algorithm force and position predictions against a random benchmark, RG models were developed. For the intensity detection RG model, the predictions were set equal to the median force value of the training set (176 mN), while for the localization RG model the prediction coordinates were set equal to the median value of the target positions (X = 0 mm and Y = 62.5 mm). Then the absolute error on the test set was computed using the RG model predictions and compared via a two-sided Wilcoxon signed-rank test (on SPSS 27.0, IBM SPSS) with the test absolute errors of the CNN and MLP/NIP models, respectively.
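The benchmark for the intensity block amounts to a constant predictor. A minimal sketch, with a hypothetical function name, is:

```python
import statistics

def median_baseline_errors(train_targets, test_targets):
    """Absolute test errors of a constant model that always predicts the
    median of the training targets."""
    guess = statistics.median(train_targets)
    return [abs(t - guess) for t in test_targets]
```

The resulting error list is paired, sample by sample, with the absolute errors of the trained model, which is what a signed-rank test requires. The authors ran the test in SPSS; in Python, `scipy.stats.wilcoxon` would be one option for the same paired comparison.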

Demonstration of collaborative application

A collaborative application of the developed skin was demonstrated on the basis of its integration into a seven-DoF robotic arm (three DoFs for the shoulder, one for the elbow, one for forearm link pronosupination and two for the wrist). The two DoFs of the wrist were actuated with linear motors (P16-50-22-12-P Micro Linear Actuator, Actuonix), which were operated in static position control mode. The remaining five DoFs were actuated with servomotors (XM540-W270-R Dynamixel for the shoulder and elbow DoFs, and XH430-W350-R Dynamixel for forearm link pronosupination), which were controlled with two policies. First, the robot arm was in backdrivable configuration and it was freely moved by a human operator, while the joint encoders of the servomotors tracked the operated motion. Afterwards, the recorded trajectories were autonomously replicated by the robot arm, and the skin presented in this study was used to detect contact and demonstrate the feasibility of collaborative functionality by temporarily stopping motion in real time upon contact detection.
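The stop-on-contact behaviour during trajectory replay can be sketched as a loop that only advances when no contact is reported. The two callables are placeholders for the servomotor command interface and for the skin-based contact detector; neither is an actual Dynamixel or LabVIEW call.

```python
def replay(trajectory, send_joint_targets, contact_detected):
    """Replay recorded joint targets, pausing while contact is detected.

    send_joint_targets and contact_detected are hypothetical callables.
    A real controller would poll at a fixed control rate instead of spinning."""
    step = 0
    while step < len(trajectory):
        if contact_detected():
            continue                 # temporarily stop: hold the last pose
        send_joint_targets(trajectory[step])
        step += 1
```

Because the step counter is not advanced during contact, motion resumes from the same point of the recorded trajectory once the contact is released.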

Reporting Summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The test data reported here are freely available via Code Ocean at https://codeocean.com/capsule/3018603/tree/v1 (ref. 72).

Code availability

The MATLAB scripts used to process the dataset and generate the figures reported here are freely available via Code Ocean at https://codeocean.com/capsule/3018603/tree/v1 (ref. 72).

References

Bitonneau, D. et al. Design of an industrial human-robot system through participative simulations – Tank cleaning case study. In IEEE/SICE International Symposium on System Integration (SII) 1059-1066 (IEEE, 2017).

Villani, V., Pini, F., Leali, F. & Secchi, C. Survey on human–robot collaboration in industrial settings: safety, intuitive interfaces and applications. Mechatronics 55, 248–266 (2018).

Peters, B. S., Armijo, P. R., Krause, C., Choudhury, S. A. & Oleynikov, D. Review of emerging surgical robotic technology. Surg. Endosc. 32, 1636–1655 (2018).

Matheson, E., Minto, R., Zampieri, E. G. G., Faccio, M. & Rosati, G. Human–robot collaboration in manufacturing applications: a review. Robotics 8, 100 (2019).

Jung, B. J., Kim, B., Koo, J. C., Choi, H. R. & Moon, H. Joint torque sensor embedded in harmonic drive using order tracking method for robotic application. IEEE/ASME Trans. Mechatron. 22, 1594–1599 (2017).

Orekhov, A. L., Johnston, G. L., Abah, C., Choset, H. & Simaan, N. Towards collaborative robots with sensory awareness: preliminary results using multi-modal sensing. In Proceedings of the IEEE ICRA workshop on Physical human-robot interaction: a design focus 1-5 (2019).

Tsuji, S. & Kohama, T. Proximity skin sensor using time-of-flight sensor for human collaborative robot. IEEE Sens. J. 19, 5859–5864 (2019).

Silvera-Tawil, D., Rye, D. & Velonaki, M. Artificial skin and tactile sensing for socially interactive robots: a review. Robot. Auton. Syst. 63, 230–243 (2015).

Johansson, R. S. & Westling, G. Roles of glabrous skin receptors and sensorimotor memory in automatic control of precision grip when lifting rougher or more slippery objects. Exp. Brain Res. 56, 550–564 (1984).

Johansson, R. S. & Flanagan, J. R. Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat. Rev. Neurosci. 10, 345–359 (2009).

Abraira, V. E. & Ginty, D. D. The sensory neurons of touch. Neuron 79, 618–639 (2013).

Vallbo, A. B. & Johansson, R. S. Properties of cutaneous mechanoreceptors in the human hand related to touch sensation. Hum. Neurobiol. 3, 3–14 (1984).

Vallbo, A. B., Olausson, H., Wessberg, J. & Kakuda, N. Receptive field characteristics of tactile units with myelinated afferents in hairy skin of human subjects. J. Physiol. 483, 783–795 (1995).

Fleming, M. S. & Luo, W. The anatomy, function, and development of mammalian Aβ low-threshold mechanoreceptors. Front. Biol. 8, 408–420 (2013).

Birznieks, I., Jenmalm, P., Goodwin, A. W. & Johansson, R. S. Encoding of direction of fingertip forces by human tactile afferents. J. Neurosci. 21, 8222–8237 (2001).

Vásárhelyi, G., Ádám, M., Vázsonyi, É., Bársony, I. & Dücso, C. Effects of the elastic cover on tactile sensor arrays. Sensors Actuators A 132, 245–251 (2006).

Vásárhelyi, G., Fodor, B. & Roska, T. Tactile sensing–processing: interface-cover geometry and the inverse-elastic problem. Sensors Actuators A 140, 8–18 (2007).

Johansson, R. S. & Birznieks, I. First spikes in ensembles of human tactile afferents code complex spatial fingertip events. Nat. Neurosci. 7, 170–177 (2004).

Tee, B. C. K. & Ouyang, J. Soft electronically functional polymeric composite materials for a flexible and stretchable digital future. Adv. Mater. 30, 1802560 (2018).

Ho, D. H. et al. Stretchable and multimodal all graphene electronic skin. Adv. Mater. 28, 2601–2608 (2016).

Park, J. et al. Tactile-direction-sensitive and stretchable electronic skins based on human-skin-inspired interlocked microstructures. ACS Nano 8, 12020–12029 (2014).

Kim, J. et al. Stretchable silicon nanoribbon electronics for skin prosthesis. Nat. Commun. 5, 5747 (2014).

Sekitani, T. et al. A rubberlike stretchable active matrix using elastic conductors. Science 321, 1468–1472 (2008).

Kaltenbrunner, M. et al. An ultra-lightweight design for imperceptible plastic electronics. Nature 499, 458–463 (2013).

Someya, T. et al. Conformable, flexible, large-area networks of pressure and thermal sensors with organic transistor active matrixes. Proc. Natl Acad. Sci. USA 102, 12321–12325 (2005).

Khan, S., Dahiya, R., Tinku, S. & Lorenzelli, L. Conformable tactile sensing using screen printed P (VDF-TrFE) and MWCNT-PDMS composites. In SENSORS, 2014 IEEE 862–865 (IEEE, 2014).

Chen, X. et al. Self-powered flexible pressure sensors with vertically well-aligned piezoelectric nanowire arrays for monitoring vital signs. J. Mater. Chem. C 3, 11806–11814 (2015).

Pan, L. et al. An ultra-sensitive resistive pressure sensor based on hollow-sphere microstructure induced elasticity in conducting polymer film. Nat. Commun. 5, 3002 (2014).

Zhu, G. et al. Self-powered, ultrasensitive, flexible tactile sensors based on contact electrification. Nano Lett. 14, 3208–3213 (2014).

Yun, S. et al. Polymer-waveguide-based flexible tactile sensor array for dynamic response. Adv. Mater. 26, 4474–4480 (2014).

Yogeswaran, N. et al. Piezoelectric graphene field effect transistor pressure sensors for tactile sensing. Appl. Phys. Lett. 113, 014102 (2018).

Asfour, T. et al. ARMAR-4: a 63 DOF torque controlled humanoid robot. In IEEE-RAS International Conference on Humanoid Robots 390–396 (IEEE, 2015).

Micera, S. Neuroprosthetics: restoring multi-joint motor control. Nat. Biomed. Eng. 1, 0073 (2017).

Chortos, A. & Bao, Z. Skin-inspired electronic devices. Mater. Today 17, 321–331 (2014).

Tan, D. W. et al. A neural interface provides long-term stable natural touch perception. Sci. Transl. Med. 6, 257ra138 (2014).

Lee, W. W. et al. A neuro-inspired artificial peripheral nervous system for scalable electronic skins. Sci. Robot. 4, eaax2198 (2019).

Osborn, L. E. et al. Prosthesis with neuromorphic multilayered e-dermis perceives touch and pain. Sci. Robot. 3, eaat3818 (2018).

Dahiya, R. et al. Large-area soft e-skin: the challenges beyond sensor designs. Proc. IEEE 107, 2016–2033 (2019).

Majidi, C. Soft robotics: a perspective—current trends and prospects for the future. Soft Robot. 1, 5–11 (2014).

Trivedi, D., Rahn, C. D., Kier, W. M. & Walker, I. D. Soft robotics: biological inspiration, state of the art, and future research. Appl. Bionics Biomech. 5, 99–117 (2008).

Kim, S., Laschi, C. & Trimmer, B. Soft robotics: a bioinspired evolution in robotics. Trends Biotechnol. 31, 287–294 (2013).

Ge, J. et al. A bimodal soft electronic skin for tactile and touchless interaction in real time. Nat. Commun. 10, 4405 (2019).

Mittendorfer, P., Yoshida, E. & Cheng, G. Realizing whole-body tactile interactions with a self-organizing, multi-modal artificial skin on a humanoid robot. Adv. Robot. 29, 51–67 (2015).

Tomo, T. P. et al. A new silicone structure for uSkin—a soft, distributed, digital 3-axis skin sensor and its integration on the humanoid robot iCub. IEEE Robot. Autom. Lett. 3, 2584–2591 (2018).

Wang, S. et al. Skin electronics from scalable fabrication of an intrinsically stretchable transistor array. Nature 555, 83–88 (2018).

Boutry, C. M. et al. A hierarchically patterned, bioinspired e-skin able to detect the direction of applied pressure for robotics. Sci. Robot. 3, eaau6914 (2018).

Núñez, C. G., Navaraj, W. T., Polat, E. O. & Dahiya, R. Energy-autonomous, flexible, and transparent tactile skin. Adv. Funct. Mater. 27, 1606287 (2017).

Hua, Q. et al. Skin-inspired highly stretchable and conformable matrix networks for multifunctional sensing. Nat. Commun. 9, 244 (2018).

Bragança, S., Costa, E., Castellucci, I. & Arezes, P. M. in Occupational and Environmental Safety and Health (eds Arezes, P. M. et al.) 641–650 (Studies in Systems, Decision and Control, Vol. 202, Springer, 2019).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Heo, Y. J. et al. Collision detection for industrial collaborative robots: a deep learning approach. IEEE Robot. Autom. Lett. 4, 740–746 (2019).

Sundaram, S. et al. Learning the signatures of the human grasp using a scalable tactile glove. Nature 569, 698–702 (2019).

Lepora, N. F., Church, A., De Kerckhove, C., Hadsell, R. & Lloyd, J. From pixels to percepts: highly robust edge perception and contour following using deep learning and an optical biomimetic tactile sensor. IEEE Robot. Autom. Lett. 4, 2101–2107 (2019).

Sohn, K. S. et al. An extremely simple macroscale electronic skin realized by deep machine learning. Sci. Rep. 7, 11061 (2017).

Corniani, G. & Saal, H. P. Tactile innervation densities across the whole body. J. Neurophysiol. 124, 1229–1240 (2020).

Massari, L. et al. A machine-learning-based approach to solve both contact location and force in soft material tactile sensors. Soft Robot. 7, 409–420 (2020).

Caldwell, D. G., Tsagarakis, N. & Giesler, C. An integrated tactile/shear feedback array for stimulation of finger mechanoreceptor. In Proc. 1999 IEEE International Conference on Robotics and Automation Vol. 1, 287–292 (IEEE, 1999).

Cutkosky, M. R., Howe, R. D. & Provancher, W. R. in Springer Handbook of Robotics (eds Siciliano B. & Khatib O.) 455–476 (Springer, 2008).

Jones, L. A. & Lederman, S. J. Human Hand Function (Oxford Scholarship Online, 2007); https://doi.org/10.1093/acprof:oso/9780195173154.001.0001

Yan, Y. et al. Soft magnetic skin for super-resolution tactile sensing with force self-decoupling. Sci. Robot. 6, eabc8801 (2021).

Massaroni, C. et al. Design and feasibility assessment of a magnetic resonance-compatible smart textile based on fiber Bragg grating sensors for respiratory monitoring. IEEE Sens. J. 16, 8103–8110 (2016).

Saccomandi, P. et al. Feedforward neural network for force coding of an MRI-compatible tactile sensor array based on fiber Bragg grating. J. Sensors 2015, 367194 (2015).

D’Abbraccio, J. et al. Design and development of large-area FBG-based sensing skin for collaborative robotics. In 2019 IEEE International Workshop on Metrology for Industry 4.0 and IoT, MetroInd 4.0 and IoT 2019—Proc. 410–413 (IEEE, 2019).

Lo Presti, D. et al. Wearable system based on flexible FBG for respiratory and cardiac monitoring. IEEE Sens. J. 19, 7391–7398 (2019).

Lo Presti, D. et al. A multi-parametric wearable system to monitor neck movements and respiratory frequency of computer workers. Sensors 20, 536 (2020).

Lo Presti, D. et al. Cardio-respiratory monitoring in archery using a smart textile based on flexible fiber Bragg grating sensors. Sensors 19, 3581 (2019).

Massari, L. et al. Tactile sensing and control of robotic manipulator integrating fiber Bragg grating strain-sensor. Front. Neurorobot. 13, 8 (2019).

Hao, Y. et al. Modeling and experiments of a soft robotic gripper in amphibious environments. Int. J. Adv. Robot. Syst. 14, 1729881417707148 (2017).

Zhao, B., Lu, H., Chen, S., Liu, J. & Wu, D. Convolutional neural networks for time series classification. J. Syst. Eng. Electron. 28, 162–169 (2017).

Nwankpa, C., Ijomah, W., Gachagan, A. & Marshall, S. Activation functions: comparison of trends in practice and research for deep learning. Preprint at https://doi.org/10.48550/arXiv.1811.03378 (2018).

Ba, J. & Frey, B. Adaptive dropout for training deep neural networks. In Advances in Neural Information Processing Systems (eds Burges, C. J. et al.) 3084–3092 (Curran Associates, 2013).

Massari, L. et al. Functional mimicry of Ruffini receptors with Fiber Bragg Gratings and Deep Neural Networks enables a bio-inspired large-area tactile sensitive skin. Code Ocean https://doi.org/10.24433/CO.7914052.v1 (2022).

Acknowledgements

This study was supported in part by the Italian Ministry of Universities and Research through the PARLOMA project (SIN_00132, C.M.O.), by the Italian Ministry of Economic Development through the Industry 4.0 Competence Center on Advanced Robotics and Enabling Digital Technologies and Systems (ARTES4.0, C.M.O.), by the Tuscany Region through the Tuscany Network for Bioelectronic Approaches in Medicine: AI-based predictive algorithms for fine-tuning of electroceutical treatments in neurological, cardiovascular and endocrinological diseases (TUNE-BEAM, H14I20000300002, C.M.O.) and by the European Union’s Horizon 2020 research and innovation programme under Marie Skłodowska-Curie grant agreement 956745 (European Training Network for Industry Digital Transformation across Innovation Ecosystems, EINST4INE, 956745, C.M.O.). Results reflect the authors’ view only. The funding agencies are not responsible for any use that may be made of the information contained. C.M.O. gratefully thanks S. Micera for comments made on an earlier version of the manuscript.

Author information

Contributions

L.M., J.D.A., G.T., M.Z., E.D.S. and C.M.O. designed and developed the artificial skin embedding the optical fibre. M.F., E.P., E.M.S., E.D.S. and C.M.O. contributed to the development of the experimental set-up. L.M., G.F., J.D.A. and M.F. carried out the experiments and L.M., G.F., M.F. and G.D.A. performed data analysis supported by A.A., J.D.A. and E.P. L.M., G.F., E.D.S. and C.M.O. conceived and developed the AI algorithms presented in the study. L.M. and M.F. performed FEM simulations. C.M.O. conceived the study and was responsible for planning and supervising the scientific work, for defining the experimental protocols and for the research grants supporting the study. E.D.S. was co-supervisor of the scientific work, of the definition of the experimental protocols and of FEM simulations. L.M., G.F., J.D.A., M.F., E.D.S. and C.M.O. wrote the manuscript. A.A. was responsible for the artwork, the figures and the Supplementary Information. All the authors discussed the results, critically revised the paper and approved the final version. Correspondence and requests for materials should be addressed to C.M.O. and E.D.S.

Ethics declarations

Competing interests

The authors declare the following competing interests: L.M., J.D.A., G.T., M.Z., E.P., E.M.S., E.D.S. and C.M.O. disclose a patent filed on the developed artificial skin and collaborative robot arm integrating FBG transducers (application number IT201900003657A1). The remaining authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Luke E. Osborn and Jie Xu for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Thickness effect of the encapsulation material on the FBG receptive fields and sensitivity within the range 4 to 12 mm at 50 mN.

Higher material thickness reduces sensitivity over the sensor and shifts the curve minimum.

Extended Data Fig. 2 Experiment with 12 subjects involving random administration of calibrated Von Frey hairs over the artificial skin surface to evaluate its detection rate as a function of stimulation force intensity.

a) Illustration of experimental setup and procedure. b) Sigmoid fitting of contact detection rate as a function of the nominal force exerted by the von Frey microfilament. X-axis is represented with logarithmic scale.

Extended Data Fig. 3 Fabrication process of the biomimetic skin.

Step 1) Integration of the 1st mold; Step 2) Dragon Skin 10 casting in the 1st mold; Step 3) Removal of the 1st layer of the artificial skin; Step 4) Integration of the optical fiber embedding the FBGs in the soft skin; Step 5) Integration of the 2nd mold; Step 6) Removal of the 2nd layer of the artificial skin and demolding at the end of the procedure.

Extended Data Fig. 4 Experimental setup and protocol.

a) 4-axis mechatronic platform for force-controlled indentations over the skin surface; b) Stimulation force profiles adopted for the indentation experiments (contact force up to 2.5 N) to train the neural network.

Supplementary information

Supplementary Information

Captions of Supplementary Videos 1–5.

Supplementary Video 1

Example experimental testing of artificial skin sensitivity with Von Frey hairs.

Supplementary Video 2

Raw wavelength variations of FBG transducers due to pressure applied throughout the sensitive skin.

Supplementary Video 3

Real-time force intensity and contact localization while indenting the skin in the test set with a mechatronic platform.

Supplementary Video 4

Demonstration of skin’s ability to decode force intensity and contact location in real time while touching the sensorized patch via the experimenter’s own finger.

Supplementary Video 5

Demonstration of collaborative behaviours that could be implemented by a robot arm endowed with the developed sensitive skin while interacting with humans.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Massari, L., Fransvea, G., D’Abbraccio, J. et al. Functional mimicry of Ruffini receptors with fibre Bragg gratings and deep neural networks enables a bio-inspired large-area tactile-sensitive skin. Nat Mach Intell 4, 425–435 (2022). https://doi.org/10.1038/s42256-022-00487-3

DOI: https://doi.org/10.1038/s42256-022-00487-3
