Abstract

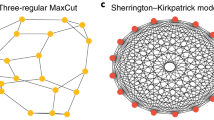

Combinatorial optimization problems are pervasive across science and industry. Modern deep learning tools are poised to solve these problems at unprecedented scales, but a unifying framework that incorporates insights from statistical physics is still outstanding. Here we demonstrate how graph neural networks can be used to solve combinatorial optimization problems. Our approach is broadly applicable to canonical NP-hard problems in the form of quadratic unconstrained binary optimization problems, such as maximum cut, minimum vertex cover and maximum independent set, as well as Ising spin glasses and higher-order generalizations thereof in the form of polynomial unconstrained binary optimization problems. We apply a relaxation strategy to the problem Hamiltonian to generate a differentiable loss function with which we train the graph neural network, and we apply a simple projection to integer variables once the unsupervised training process has completed. We showcase our approach with numerical results for the canonical maximum cut and maximum independent set problems. We find that the graph neural network optimizer performs on par with or outperforms existing solvers, with the ability to scale beyond the state of the art to problems with millions of variables.
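To make the relax-then-project idea concrete, the following is a minimal sketch and not the authors' implementation. It uses a standard QUBO form of maximum cut, H = Σ_(i,j)∈E (2 x_i x_j − x_i − x_j), relaxes each binary x_i to a soft assignment p_i in [0, 1], minimizes the relaxed Hamiltonian and then rounds. As a deliberate simplification, the graph neural network is replaced by free per-node parameters updated with plain gradient descent; all function names and hyperparameters are illustrative assumptions.

```python
import math
import random


def relaxed_maxcut_loss(edges, p):
    """Relaxed MaxCut Hamiltonian: sum over edges of 2*p_i*p_j - p_i - p_j."""
    return sum(2.0 * p[i] * p[j] - p[i] - p[j] for i, j in edges)


def cut_size(edges, x):
    """Number of edges whose endpoints lie on opposite sides of the cut."""
    return sum(1 for i, j in edges if x[i] != x[j])


def solve_maxcut_relaxation(edges, n, steps=500, lr=0.5, seed=0):
    """Minimize the relaxed loss over per-node logits, then project to {0, 1}.

    Assumption: free logits stand in for the GNN output layer.
    """
    rng = random.Random(seed)
    theta = [rng.uniform(-0.1, 0.1) for _ in range(n)]
    for _ in range(steps):
        # Soft assignments p_i = sigmoid(theta_i).
        p = [1.0 / (1.0 + math.exp(-t)) for t in theta]
        # dL/dp_i = sum over neighbours j of (2*p_j - 1).
        grad_p = [0.0] * n
        for i, j in edges:
            grad_p[i] += 2.0 * p[j] - 1.0
            grad_p[j] += 2.0 * p[i] - 1.0
        # Chain rule through the sigmoid: dp_i/dtheta_i = p_i * (1 - p_i).
        theta = [t - lr * g * q * (1.0 - q) for t, g, q in zip(theta, grad_p, p)]
    p = [1.0 / (1.0 + math.exp(-t)) for t in theta]
    # Projection step: round each soft assignment to a binary variable.
    x = [1 if q >= 0.5 else 0 for q in p]
    return x, p


# Usage on a 4-cycle, where an alternating assignment cuts every edge.
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
x, p = solve_maxcut_relaxation(edges, n=4)
print("assignment:", x, "cut size:", cut_size(edges, x))
```

For binary inputs the relaxed loss equals minus the cut size, so lowering the loss during training corresponds to increasing the cut found after projection.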

Data availability

The data necessary to reproduce our numerical benchmark results are publicly available at https://web.stanford.edu/~yyye/yyye/Gset/. Random d-regular graphs have been generated using the open-source networkx library (https://networkx.org).
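As a hedged convenience for working with the benchmark files, the sketch below parses a Gset-style instance. It assumes the plain-text layout in which the first line holds the node and edge counts and each following line holds one edge as `u v w` with 1-based node indices and an integer weight; this layout should be checked against the downloaded files. The function name `parse_gset` is hypothetical.

```python
def parse_gset(text):
    """Parse a Gset-style edge list into (n, edges) with 0-based node indices.

    Assumed layout: header line 'n m', then m lines 'u v w' (1-based u, v).
    """
    rows = [line.split() for line in text.splitlines() if line.strip()]
    n, m = int(rows[0][0]), int(rows[0][1])
    edges = [(int(u) - 1, int(v) - 1, int(w)) for u, v, w in rows[1:]]
    if len(edges) != m:
        raise ValueError(f"header declares {m} edges, found {len(edges)}")
    return n, edges


# Usage with a tiny in-memory instance (three nodes, two weighted edges).
sample = "3 2\n1 2 1\n2 3 -1\n"
n, edges = parse_gset(sample)
print(n, edges)
```

Random d-regular instances can be generated separately with the networkx function `random_regular_graph(d, n, seed=...)`.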

Code availability

An end-to-end open-source demo version of the code implementing our approach has been made publicly available at https://github.com/amazon-research/co-with-gnns-example (ref. 116).

References

Glover, F., Kochenberger, G. & Du, Y. Quantum bridge analytics I: a tutorial on formulating and using QUBO models. 4OR 17, 335 (2019).

Kochenberger, G. et al. The unconstrained binary quadratic programming problem: a survey. J. Comb. Optim. 28, 58–81 (2014).

Anthony, M., Boros, E., Crama, Y. & Gruber, A. Quadratic reformulations of nonlinear binary optimization problems. Math. Program. 162, 115–144 (2017).

Papadimitriou, C. H. & Steiglitz, K. Combinatorial Optimization: Algorithms and Complexity (Courier Corporation, 1998).

Korte, B. & Vygen, J. Combinatorial Optimization Vol. 2 (Springer, 2012).

Johnson, M. W. et al. Quantum annealing with manufactured spins. Nature 473, 194–198 (2011).

Bunyk, P. et al. Architectural considerations in the design of a superconducting quantum annealing processor. IEEE Trans. Appl. Supercond. 24, 1–10 (2014).

Katzgraber, H. G. Viewing vanilla quantum annealing through spin glasses. Quantum Sci. Technol. 3, 030505 (2018).

Hauke, P., Katzgraber, H. G., Lechner, W., Nishimori, H. & Oliver, W. Perspectives of quantum annealing: methods and implementations. Rep. Prog. Phys. 83, 054401 (2020).

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355 (1998).

Farhi, E. et al. A quantum adiabatic evolution algorithm applied to random instances of an NP-complete problem. Science 292, 472–475 (2001).

Mandrà, S., Zhu, Z., Wang, W., Perdomo-Ortiz, A. & Katzgraber, H. G. Strengths and weaknesses of weak-strong cluster problems: a detailed overview of state-of-the-art classical heuristics versus quantum approaches. Phys. Rev. A 94, 022337 (2016).

Mandrà, S. & Katzgraber, H. G. A deceptive step towards quantum speedup detection. Quantum Sci. Technol. 3, 04LT01 (2018).

Barzegar, A., Pattison, C., Wang, W. & Katzgraber, H. G. Optimization of population annealing Monte Carlo for large-scale spin-glass simulations. Phys. Rev. E 98, 053308 (2018).

Hibat-Allah, M. et al. Variational neural annealing. Nat. Mach. Intell. 3, 952–961 (2021).

Wang, Z., Marandi, A., Wen, K., Byer, R. L. & Yamamoto, Y. Coherent Ising machine based on degenerate optical parametric oscillators. Phys. Rev. A 88, 063853 (2013).

Hamerly, R. et al. Scaling advantages of all-to-all connectivity in physical annealers: the coherent Ising machine vs. D-Wave 2000Q. Sci. Adv. 5, eaau0823 (2019).

Di Ventra, M. & Traversa, F. L. Perspective: memcomputing: leveraging memory and physics to compute efficiently. J. Appl. Phys. 123, 180901 (2018).

Traversa, F. L., Ramella, C., Bonani, F. & Di Ventra, M. Memcomputing NP-complete problems in polynomial time using polynomial resources and collective states. Sci. Adv. 1, e1500031 (2015).

Matsubara, S. et al. in Complex, Intelligent and Software Intensive Systems (CISIS-2017) (eds Terzo, O. & Barolli, L.) 432–438 (Springer, 2017).

Tsukamoto, S., Takatsu, M., Matsubara, S. & Tamura, H. An accelerator architecture for combinatorial optimization problems. FUJITSU Sci. Tech. J. 53, 8–13 (2017).

Aramon, M., Rosenberg, G., Miyazawa, T., Tamura, H. & Katzgraber, H. G. Physics-inspired optimization for constraint-satisfaction problems using a digital annealer. Front. Phys. 7, 48 (2019).

Gori, M., Monfardini, G. & Scarselli, F. A new model for learning in graph domains. In Proc. 2005 IEEE International Joint Conference on Neural Networks Vol. 2 729–734 (IEEE, 2005).

Scarselli, F., Gori, M., Tsoi, A. C., Hagenbuchner, M. & Monfardini, G. The graph neural network model. IEEE Trans. Neural Netw. 20, 61–80 (2008).

Micheli, A. Neural network for graphs: a contextual constructive approach. IEEE Trans. Neural Netw. 20, 498–511 (2009).

Duvenaud, D. K. et al. Convolutional networks on graphs for learning molecular fingerprints. Adv. Neural Inf. Process. Syst. 28, 2224–2232 (2015).

Hamilton, W. L., Ying, R. & Leskovec, J. Representation learning on graphs: methods and applications. Preprint at https://arxiv.org/abs/1709.05584 (2017).

Xu, K., Hu, W., Leskovec, J. & Jegelka, S. How powerful are graph neural networks? Preprint at https://arxiv.org/abs/1810.00826 (2018).

Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. Preprint at https://arxiv.org/abs/1609.02907 (2016).

Wu, Z. et al. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. 32, 4–24 (2021).

Perozzi, B., Al-Rfou, R. & Skiena, S. Deepwalk: online learning of social representations. In Proc. 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 701–710 (ACM, 2014).

Sun, Z., Deng, Z. H., Nie, J. Y. & Tang, J. Rotate: knowledge graph embedding by relational rotation in complex space. Preprint at https://arxiv.org/abs/1902.10197 (2019).

Ying, R. et al. Graph convolutional neural networks for web-scale recommender systems. In Proc. 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD ’18) 974–983 (ACM, 2018).

Strokach, A., Becerra, D., Corbi-Verge, C., Perez-Riba, A. & Kim, P. M. Fast and flexible protein design using deep graph neural networks. Cell Syst. 11, 402–411 (2020).

Gaudelet, T. et al. Utilising graph machine learning within drug discovery and development. Preprint at https://arxiv.org/abs/2012.05716 (2020).

Pal, A. et al. Pinnersage: multi-modal user embedding framework for recommendations at Pinterest. In Proc. 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining 2311–2320 (ACM, 2020).

Rossi, E. et al. Temporal graph networks for deep learning on dynamic graphs. Preprint at https://arxiv.org/abs/2006.10637 (2020).

Monti, F., Frasca, F., Eynard, D., Mannion, D. & Bronstein, M. Fake news detection on social media using geometric deep learning. Preprint at https://arxiv.org/abs/1902.06673 (2019).

Choma, N. et al. Graph neural networks for IceCube signal classification. In 17th IEEE International Conference on Machine Learning and Applications (ICMLA) 386–391 (IEEE, 2018).

Shlomi, J., Battaglia, P. & Vlimant, J.-R. Graph neural networks in particle physics. Mach. Learn. Sci. Technol. 2, 021001 (2020).

Li, Y., Tarlow, D., Brockschmidt, M. & Zemel, R. Gated graph sequence neural networks. Preprint at https://arxiv.org/abs/1511.05493 (2015).

Veličković, P. et al. Graph attention networks. Preprint at https://arxiv.org/abs/1710.10903 (2017).

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry. In International Conference on Machine Learning 1263–1272 (PMLR, 2017).

Xu, K. et al. Representation learning on graphs with jumping knowledge networks. In International Conference on Machine Learning 5453–5462 (PMLR, 2018).

Zheng, D. et al. DistDGL: distributed graph neural network training for billion-scale graphs. In IEEE/ACM 10th Workshop on Irregular Applications: Architectures and Algorithms (IA3) 36–44 (IEEE, 2020).

Kotary, J., Fioretto, F., Van Hentenryck, P. & Wilder, B. End-to-end constrained optimization learning: a survey. Preprint at https://arxiv.org/abs/2103.16378 (2021).

Cappart, Q. et al. Combinatorial optimization and reasoning with graph neural networks. Preprint at https://arxiv.org/abs/2102.09544 (2021).

Mills, K., Ronagh, P. & Tamblyn, I. Finding the ground state of spin Hamiltonians with reinforcement learning. Nat. Mach. Intell. 2, 509–517 (2020).

Vinyals, O., Fortunato, M. & Jaitly, N. Pointer networks. Adv. Neural Inf. Process. Syst. 28, 2692–2700 (2015).

Nowak, A., Villar, S., Bandeira, A. S. & Bruna, J. Revised note on learning algorithms for quadratic assignment with graph neural networks. Preprint at https://arxiv.org/abs/1706.07450 (2017).

Bai, Y. et al. SimGNN: a neural network approach to fast graph similarity computation. Preprint at https://arxiv.org/abs/1808.05689 (2018).

Lemos, H., Prates, M., Avelar, P. & Lamb, L. Graph colouring meets deep learning: effective graph neural network models for combinatorial problems. In 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI) 879–885 (IEEE, 2019).

Li, Z., Chen, Q. & Koltun, V. Combinatorial optimization with graph convolutional networks and guided tree search. In Proc. NeurIPS 536–545 (2018).

Joshi, C. K., Laurent, T. & Bresson, X. An efficient graph convolutional network technique for the travelling salesman problem. Preprint at https://arxiv.org/abs/1906.01227 (2019).

Karalias, N. & Loukas, A. Erdos goes neural: an unsupervised learning framework for combinatorial optimization on graphs. Preprint at https://arxiv.org/abs/2006.10643 (2020).

Yehuda, G., Gabel, M. & Schuster, A. It’s not what machines can learn, it’s what we cannot teach. Preprint at https://arxiv.org/abs/2002.09398 (2020).

Bello, I., Pham, H., Le, Q. V., Norouzi, M. & Bengio, S. Neural combinatorial optimization with reinforcement learning. Preprint at https://arxiv.org/abs/1611.09940 (2017).

Kool, W., van Hoof, H. & Welling, M. Attention, learn to solve routing problems! Preprint at https://arxiv.org/abs/1803.08475 (2019).

Ma, Q., Ge, S., He, D., Thaker, D. & Drori, I. Combinatorial optimization by graph pointer networks and hierarchical reinforcement learning. Preprint at https://arxiv.org/abs/1911.04936 (2019).

Dai, H., Khalil, E. B., Zhang, Y., Dilkina, B. & Song, L. Learning combinatorial optimization algorithms over graphs. In Annual Conference on Neural Information Processing Systems (NIPS) 6351–6361 (2017).

Toenshoff, J., Ritzert, M., Wolf, H. & Grohe, M. RUN-CSP: unsupervised learning of message passing networks for binary constraint satisfaction problems. Preprint at https://arxiv.org/abs/1909.08387 (2019).

Yao, W., Bandeira, A. S. & Villar, S. Experimental performance of graph neural networks on random instances of max-cut. In Wavelets and Sparsity XVIII Vol. 11138 111380S (International Society for Optics and Photonics, 2019).

Ising, E. Beitrag zur Theorie des Ferromagnetismus. Z. Phys. 31, 253–258 (1925).

Matsubara, S. et al. Ising-model optimizer with parallel-trial bit-sieve engine. In Conference on Complex, Intelligent, and Software Intensive Systems 432–438 (Springer, 2017).

Hamerly, R. et al. Experimental investigation of performance differences between coherent Ising machines and a quantum annealer. Sci. Adv. 5, eaau0823 (2019).

Lucas, A. Ising formulations of many NP problems. Front. Phys. 2, 5 (2014).

Alon, U. & Yahav, E. On the bottleneck of graph neural networks and its practical implications. Preprint at https://arxiv.org/abs/2006.05205 (2020).

Fey, M. & Lenssen, J. E. Fast graph representation learning with PyTorch Geometric. Preprint at https://arxiv.org/abs/1903.02428 (2019).

Wang, M. et al. Deep Graph Library: a graph-centric, highly-performant package for graph neural networks. Preprint at https://arxiv.org/abs/1909.01315 (2019).

Alidaee, B., Kochenberger, G. A. & Ahmadian, A. 0-1 Quadratic programming approach for optimum solutions of two scheduling problems. Int. J. Syst. Sci. 25, 401–408 (1994).

Neven, H., Rose, G. & Macready, W. G. Image recognition with an adiabatic quantum computer I. Mapping to quadratic unconstrained binary optimization. Preprint at https://arxiv.org/abs/0804.4457 (2008).

Deza, M. & Laurent, M. Applications of cut polyhedra. J. Comput. Appl. Math. 55, 191–216 (1994).

Farhi, E., Goldstone, J. & Gutmann, S. A. A quantum approximate optimization algorithm. Preprint at https://arxiv.org/abs/1411.4028 (2014).

Zhou, L., Wang, S.-T., Choi, S., Pichler, H. & Lukin, M. D. Quantum approximate optimization algorithm: performance, mechanism and implementation on near-term devices. Phys. Rev. X 10, 021067 (2020).

Guerreschi, G. G. & Matsuura, A. Y. QAOA for Max-Cut requires hundreds of qubits for quantum speed-up. Sci. Rep. 9, 6903 (2019).

Crooks, G. E. Performance of the quantum approximate optimization algorithm on the maximum cut problem. Preprint at https://arxiv.org/abs/1811.08419 (2018).

Lotshaw, P. C. et al. Empirical performance bounds for quantum approximate optimization. Quantum Inf. Process. 20, 403 (2021).

Patti, T. L., Kossaifi, J., Anandkumar, A. & Yelin, S. F. Variational quantum optimization with multi-basis encodings. Preprint at https://arxiv.org/abs/2106.13304 (2021).

Zhao, T., Carleo, G., Stokes, J. & Veerapaneni, S. Natural evolution strategies and variational Monte Carlo. Mach. Learn. Sci. Technol. 2, 02LT01 (2020).

Goemans, M. X. & Williamson, D. P. Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. ACM 42, 1115–1145 (1995).

Halperin, E., Livnat, D. & Zwick, U. MAX CUT in cubic graphs. J. Algorithms 53, 169–185 (2004).

Dembo, A., Montanari, A. & Sen, S. Extremal cuts of sparse random graphs. Ann. Probab. 45, 1190–1217 (2017).

Sherrington, D. & Kirkpatrick, S. Solvable model of a spin glass. Phys. Rev. Lett. 35, 1792–1795 (1975).

Binder, K. & Young, A. P. Spin glasses: experimental facts, theoretical concepts and open questions. Rev. Mod. Phys. 58, 801–976 (1986).

Alizadeh, F. Interior point methods in semidefinite programming with applications to combinatorial optimization. SIAM J. Optimization 5, 13 (1995).

Haribara, Y. & Utsunomiya, S. in Principles and Methods of Quantum Information Technologies. Lecture Notes in Physics Vol. 911 (eds Semba, K. & Yamamoto, Y.) 251–262 (Springer, 2016).

Ye, Y. The Gset Dataset (Stanford, 2003); https://web.stanford.edu/~yyye/yyye/Gset/

Kochenberger, G. A., Hao, J.-K., Lu, Z., Wang, H. & Glover, F. Solving large scale Max Cut problems via tabu search. J. Heuristics 19, 565–571 (2013).

Benlic, U. & Hao, J.-K. Breakout local search for the Max-Cut problem. Eng. Appl. Artif. Intell. 26, 1162–1173 (2013).

Choi, C. & Ye, Y. Solving Sparse Semidefinite Programs Using the Dual Scaling Algorithm with an Iterative Solver Working Paper (Department of Management Sciences, Univ. Iowa, 2000).

Hale, W. K. Frequency assignment: theory and applications. Proc. IEEE 68, 1497–1514 (1980).

Boginski, V., Butenko, S. & Pardalos, P. M. Statistical analysis of financial networks. Comput. Stat. Data Anal. 48, 431–443 (2005).

Yu, H., Wilczek, F. & Wu, B. Quantum algorithm for approximating maximum independent sets. Chin. Phys. Lett. 38, 030304 (2021).

Pichler, H., Wang, S.-T., Zhou, L., Choi, S. & Lukin, M. D. Quantum optimization for maximum independent set using Rydberg atom arrays. Preprint at https://arxiv.org/abs/1808.10816 (2018).

Djidjev, H. N., Chapuis, G., Hahn, G. & Rizk, G. Efficient combinatorial optimization using quantum annealing. Preprint at https://arxiv.org/abs/1801.08653 (2018).

Boppana, R. & Halldórsson, M. M. Approximating maximum independent sets by excluding subgraphs. BIT Numer. Math. 32, 180–196 (1992).

Duckworth, W. & Zito, M. Large independent sets in random regular graphs. Theor. Comput. Sci. 410, 5236–5243 (2009).

McKay, B. D. Independent sets in regular graphs of high girth. Ars Combinatoria 23A, 179 (1987).

Grötschel, M., Jünger, M. & Reinelt, G. An application of combinatorial optimization to statistical physics and circuit layout design. Oper. Res. 36, 493–513 (1988).

Laughhunn, D. J. Quadratic binary programming with application to capital-budgeting problems. Oper. Res. 18, 454–461 (1970).

Krarup, J. & Pruzan, A. Computer aided layout design. Math. Program. Study 9, 75–94 (1978).

Gallo, G., Hammer, P. & Simeone, B. Quadratic knapsack problems. Math. Program. 12, 132–149 (1980).

Witsgall, C. Mathematical Methods of Site Selection for Electronic System (EMS) NBS Internal Report (NBS, 1975).

Chardaire, P. & Sutter, A. A decomposition method for quadratic zero-one programming. Manag. Sci. 41, 704–712 (1994).

Phillips, A. & Rosen, J. B. A quadratic assignment formulation of the molecular conformation problem. J. Glob. Optim. 4, 229–241 (1994).

Iasemidis, L. D. et al. Prediction of human epileptic seizures based on optimization and phase changes of brain electrical activity. Optim. Methods Software 18, 81–104 (2003).

Kalra, A., Qureshi, F. & Tisi, M. Portfolio asset identification using graph algorithms on a quantum annealer. SSRN https://ssrn.com/abstract=3333537 (2018).

Markowitz, H. Portfolio selection. J. Finance 7, 77–91 (1952).

Kolen, A. Interval scheduling: a survey. Naval Res. Logist. 54, 530–543 (2007).

Bar-Noy, A., Bar-Yehuda, R., Freund, A., Naor, J. & Schieber, B. A unified approach to approximating resource allocation and scheduling. J. ACM 48, 1069–1090 (2001).

Speziali, S. et al. Solving sensor placement problems in real water distribution networks using adiabatic quantum computation. In 2021 IEEE International Conference on Quantum Computing and Engineering (QCE) 463–464 (IEEE, 2021).

McMahon, P. L. et al. A fully programmable 100-spin coherent Ising machine with all-to-all connections. Science 354, 614–617 (2016).

Bansal, N. & Khot, S. Inapproximability of hypergraph vertex cover and applications to scheduling problems. In Proc. Automata, Languages and Programming (ICALP 2010) Lecture Notes in Computer Science Vol. 6198 (eds Abramsky, S. et al.) 250–261 (Springer, 2010).

Hernandez, M., Zaribafiyan, A., Aramon, M. & Naghibi, M. A novel graph-based approach for determining molecular similarity. Preprint at https://arxiv.org/abs/1601.06693 (2016).

Terry, J. P., Akrobotu, P. D., Negre, C. F. & Mniszewski, S. M. Quantum isomer search. PLoS ONE 15, e0226787 (2020).

Combinatorial optimization with graph neural networks. GitHub https://github.com/amazon-research/co-with-gnns-example (2022).

Acknowledgements

We thank F. Brandao, G. Karypis, M. Kastoryano, E. Kessler, T. Mullenbach, N. Pancotti, M. Resende, S. Roy, G. Salton, S. Severini, A. Urweisse and J. Zhu for fruitful discussions.

Author information

Contributions

All authors contributed to the ideation and design of the research. M.J.A.S. and J.K.B. developed and ran the computational experiments and also wrote the initial draft of the manuscript. H.G.K. supervised this work and revised the manuscript.

Ethics declarations

Competing interests

M.J.A.S., J.K.B. and H.G.K. are listed as inventors on a US provisional patent application (no. 7924-38500) on combinatorial optimization with graph neural networks.

Peer review

Peer review information

Nature Machine Intelligence thanks Thomas Vandal and Estelle Inack for their contribution to the peer review of this work.

Supplementary information

Supplementary listings 1 and 2 and Table 1.

About this article

Cite this article

Schuetz, M.J.A., Brubaker, J.K. & Katzgraber, H.G. Combinatorial optimization with physics-inspired graph neural networks. Nat Mach Intell 4, 367–377 (2022). https://doi.org/10.1038/s42256-022-00468-6

DOI: https://doi.org/10.1038/s42256-022-00468-6
