Abstract

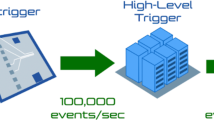

To study the physics of fundamental particles and their interactions, the Large Hadron Collider was constructed at CERN, where protons collide to create new particles that are measured by detectors. Collisions occur at a frequency of 40 MHz and each event is roughly 1 MB in size, so the data generated by the detector cannot all be read out and stored; a multi-tiered, real-time filtering system is therefore required. In this paper, we show how to adapt and deploy deep-learning-based autoencoders for the unsupervised detection of new physics signatures in the challenging environment of a real-time event selection system at the Large Hadron Collider. The first-stage filter, implemented on custom electronics, decides within a few microseconds whether an event should be kept or discarded. At this stage, the rate is reduced from 40 MHz to about 100 kHz. We demonstrate the deployment of an unsupervised selection algorithm on these custom electronics, running in as little as 80 ns and enhancing the signal-over-background ratio by three orders of magnitude. This work enables the practical deployment of these networks during the next data-taking campaign of the Large Hadron Collider.
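The rates quoted above can be turned into a quick back-of-the-envelope estimate. The sketch below uses only the numbers given in the abstract; the variable names are illustrative, and decimal units (1 MB = 10^6 bytes) are an assumption.

```python
# Figures quoted in the abstract (decimal units assumed: 1 MB = 1e6 bytes).
COLLISION_RATE_HZ = 40e6    # collisions at 40 MHz
EVENT_SIZE_BYTES = 1e6      # roughly 1 MB per event
L1_OUTPUT_RATE_HZ = 100e3   # about 100 kHz after the first-stage filter
ALGO_LATENCY_S = 80e-9      # fastest quoted inference latency, 80 ns

# Data volume the detector would produce if every event were read out.
raw_bandwidth_tb_per_s = COLLISION_RATE_HZ * EVENT_SIZE_BYTES / 1e12

# Rate reduction required of the first-stage filter.
rejection_factor = COLLISION_RATE_HZ / L1_OUTPUT_RATE_HZ
kept_fraction = 1.0 / rejection_factor

# Time between collisions, and the latency expressed in collision intervals.
collision_interval_ns = 1e9 / COLLISION_RATE_HZ
latency_in_collisions = ALGO_LATENCY_S * COLLISION_RATE_HZ

print(f"raw bandwidth:         {raw_bandwidth_tb_per_s:.0f} TB/s")
print(f"rejection factor:      {rejection_factor:.0f}")
print(f"fraction of events kept: {kept_fraction:.2%}")
print(f"collision interval:    {collision_interval_ns:.0f} ns")
print(f"latency in collisions: {latency_in_collisions:.1f}")
```

Under these assumptions the unfiltered stream is about 40 TB/s, the first-stage filter keeps roughly one event in 400, and an 80 ns inference spans a little over three 25 ns collision intervals.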

Code availability

The QKeras library is available at github.com/google/qkeras; the work presented here uses QKeras version 0.9.0. The hls4ml library with the custom layers used in the paper is on the AE_L1_paper branch, available at https://github.com/fastmachinelearning/hls4ml/tree/AE_L1_paper.

Change history

12 April 2022

A Correction to this paper has been published: https://doi.org/10.1038/s42256-022-00486-4

Acknowledgements

This work is supported by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement no. 772369) and the ERC-POC programme (grant no. 996696).

Author information

Contributions

V.L., M.P., A.A.P., N.G., M.G., S.S., J.D. and Z.W. conceived and designed the hls4ml software library. M.P., T.Q.N. and Z.W. designed and prepared the dataset format. E.G., E.P., T.A., T.J., V.L., M.P., J.N., T.Q.N. and Z.W. designed and implemented autoencoders in hls4ml. E.G., E.P., T.A., T.J., M.P. and J.D. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks the anonymous reviewers for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Network architectures.

Network architectures for the DNN AE (top) and CNN AE (bottom) models. The corresponding VAE models are derived by introducing Gaussian sampling in the latent space, with the same encoder and decoder architectures (see text).

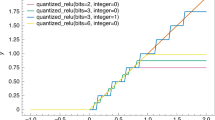

Extended Data Fig. 2 TPR ratios for different bit widths.

TPR ratios versus model bit width for the VAE CNN (left) and DNN (right) models, tested on four new physics benchmark models, using DKL as the figure of merit for the PTQ (top) and QAT (bottom) strategies.
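As a rough illustration of the quantities in this caption, the sketch below computes a DKL anomaly score and a TPR ratio. It is not the authors' code. It assumes the standard closed-form KL divergence between a diagonal Gaussian encoder output and a standard normal prior, and it assumes the TPR ratio means the quantized model's true-positive rate divided by the full-precision model's at the same false-positive rate. All function names and toy scores are hypothetical.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """D_KL( N(mu, diag(exp(log_var))) || N(0, I) ) for a single event."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))

def threshold_at_fpr(background_scores, fpr):
    """Score that at most a fraction `fpr` of background events exceed."""
    ranked = sorted(background_scores, reverse=True)
    n_allowed = int(fpr * len(ranked))  # background events allowed to pass
    if n_allowed >= len(ranked):
        return float("-inf")
    return ranked[n_allowed]

def tpr_at_fpr(signal_scores, background_scores, fpr):
    """Fraction of signal events scoring strictly above the threshold."""
    threshold = threshold_at_fpr(background_scores, fpr)
    passed = sum(score > threshold for score in signal_scores)
    return passed / len(signal_scores)

# Made-up anomaly scores, for illustration only.
background = [0.1 * i for i in range(1, 11)]   # 0.1, 0.2, ..., 1.0
signal_float = [0.5, 0.95, 1.5, 2.0]           # hypothetical full-precision model
signal_quant = [0.5, 0.80, 1.5, 2.0]           # hypothetical quantized model

tpr_float = tpr_at_fpr(signal_float, background, fpr=0.1)
tpr_quant = tpr_at_fpr(signal_quant, background, fpr=0.1)
tpr_ratio = tpr_quant / tpr_float

print(f"TPR (float) = {tpr_float:.2f}, TPR (quantized) = {tpr_quant:.2f}, "
      f"ratio = {tpr_ratio:.2f}")
```

For simplicity the toy example reuses one background sample for both models; in practice each model would be evaluated on its own background scores. A ratio close to 1 would indicate that quantization costs little signal efficiency at the chosen working point.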

About this article

Cite this article

Govorkova, E., Puljak, E., Aarrestad, T. et al. Autoencoders on field-programmable gate arrays for real-time, unsupervised new physics detection at 40 MHz at the Large Hadron Collider. Nat Mach Intell 4, 154–161 (2022). https://doi.org/10.1038/s42256-022-00441-3

DOI: https://doi.org/10.1038/s42256-022-00441-3

This article is cited by

- Detecting abnormal cell behaviors from dry mass time series. Scientific Reports (2024)

- The analysis of Iris image acquisition and real-time detection system using convolutional neural network. The Journal of Supercomputing (2024)

- Non-resonant anomaly detection with background extrapolation. Journal of High Energy Physics (2024)

- Simulation-based anomaly detection for multileptons at the LHC. Journal of High Energy Physics (2023)

- Search for electroweakinos in R-parity violating SUSY with long-lived particles at HL-LHC. Journal of High Energy Physics (2023)