Abstract

Triaging unpromising lead molecules early in the drug discovery process is essential for accelerating its pace while avoiding the costs of unwarranted biological and clinical testing. Accordingly, medicinal chemists have been trying for decades to develop metrics—ranging from heuristic measures to machine-learning models—that could rapidly distinguish potential drugs from small molecules that lack drug-like features. However, none of these metrics has gained universal acceptance and the very idea of ‘drug-likeness’ has recently been put into question. Here, we evaluate drug-likeness using different sets of descriptors and different state-of-the-art classifiers, reaching an out-of-sample accuracy of 87–88%. Remarkably, because these individual classifiers yield different Bayesian error distributions, their combination and selection of minimal-variance predictions can increase the accuracy of distinguishing drug-like from non-drug-like molecules to 93%. Because total variance is comparable with its aleatoric contribution reflecting irreducible error inherent to the dataset (as opposed to the epistemic contribution due to the model itself), this level of accuracy is probably the upper limit achievable with the currently known collection of drugs.

Data availability

Datasets used in this work are deposited at https://doi.org/10.5281/zenodo.3776449. Source Data are provided with this paper.

Code availability

The computer code underlying this work is freely available for non-commercial use under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license and is deposited at https://doi.org/10.5281/zenodo.3776449.

References

Lipinski, C. A. Lead- and drug-like compounds: the rule-of-five revolution. Drug Discov. Today Technol. 1, 337–341 (2004).

Shultz, M. D. Two decades under the influence of the rule of five and the changing properties of approved oral drugs. J. Med. Chem. 62, 1701–1714 (2018).

Bickerton, G. R., Paolini, G. V., Besnard, J., Muresan, S. & Hopkins, A. L. Quantifying the chemical beauty of drugs. Nat. Chem. 4, 90–98 (2012).

Berman, H., Henrick, K. & Nakamura, H. Announcing the worldwide Protein Data Bank. Nat. Struct. Mol. Biol. 10, 980–980 (2003).

Yusof, I. & Segall, M. D. Considering the impact drug-like properties have on the chance of success. Drug Discov. Today 18, 659–666 (2013).

Mochizuki, M., Suzuki, S. D., Yanagisawa, K., Ohue, M. & Akiyama, Y. QEX: target-specific druglikeness filter enhances ligand-based virtual screening. Mol. Divers. 23, 11–18 (2018).

Olivecrona, M., Blaschke, T., Engkvist, O. & Chen, H. Molecular de-novo design through deep reinforcement learning. J. Cheminform. 9, 48 (2017).

Gómez-Bombarelli, R. et al. Automatic chemical design using a data-driven continuous representation of molecules. ACS Cent. Sci. 4, 268–276 (2018).

Griffiths, R.-R. & Hernández-Lobato, J. M. Constrained Bayesian optimization for automatic chemical design using variational autoencoders. Chem. Sci. 11, 577–586 (2020).

Putin, E. et al. Adversarial threshold neural computer for molecular de novo design. Mol. Pharmaceutics 15, 4386–4397 (2018).

Mignani, S. et al. Present drug-likeness filters in medicinal chemistry during the hit and lead optimization process: how far they can be simplified? Drug Discov. Today 23, 605–615 (2018).

Li, Q., Bender, A., Pei, J. & Lai, L. A large descriptor set and a probabilistic Kernel-based classifier significantly improve druglikeness classification. J. Chem. Inf. Model. 47, 1776–1786 (2007).

Hu, Q., Feng, M., Lai, L. & Pei, J. Prediction of drug-likeness using deep autoencoder neural networks. Front. Genet. 9, 585 (2018).

Wishart, D. S. et al. DrugBank 5.0: a major update to the DrugBank database for 2018. Nucleic Acids Res. 46, D1074–D1082 (2017).

Irwin, J. J., Sterling, T., Mysinger, M. M., Bolstad, E. S. & Coleman, R. G. ZINC: a free tool to discover chemistry for biology. J. Chem. Inf. Model. 52, 1757–1768 (2012).

Fialkowski, M., Bishop, K. J. M., Chubukov, V. A., Campbell, C. J. & Grzybowski, B. A. Architecture and evolution of organic chemistry. Angew. Chem. Int. Ed. 44, 7263–7269 (2005).

Kowalik, M. et al. Parallel optimization of synthetic pathways within the Network of Organic Chemistry. Angew. Chem. Int. Ed. 124, 8052–8056 (2012).

Rogers, D. & Hahn, M. Extended-connectivity fingerprints. J. Chem. Inf. Model. 50, 742–754 (2010).

RDKit: Open-source cheminformatics (RDKit); http://www.rdkit.org

Hong, H. et al. Mold2, molecular descriptors from 2D structures for chemoinformatics and toxicoinformatics. J. Chem. Inf. Model. 48, 1337–1344 (2008).

Cadeddu, A., Wylie, E. K., Jurczak, J., Wampler-Doty, M. & Grzybowski, B. A. Organic chemistry as a language and the implications of chemical linguistics for structural and retrosynthetic analyses. Angew. Chem. Int. Ed. 53, 8108–8112 (2014).

Woźniak, M. et al. Linguistic measures of chemical diversity and the ‘keywords’ of molecular collections. Sci. Rep. 8, 7598 (2018).

Defferrard, M., Bresson, X. & Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. In Advances in Neural Information Processing Systems Vol. 29, 3844–3852 (NIPS, 2016).

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry. In Proc. 34th International Conference on Machine Learning 1263–1272 (PMLR, 2017).

Coley, C. W. et al. A graph-convolutional neural network model for the prediction of chemical reactivity. Chem. Sci. 10, 370–377 (2019).

Duvenaud, D. K. et al. Convolutional networks on graphs for learning molecular fingerprints. In Advances in Neural Information Processing Systems 2224–2232 (NIPS, 2015).

Kearnes, S., McCloskey, K., Berndl, M., Pande, V. & Riley, P. Molecular graph convolutions: moving beyond fingerprints. J. Comput. Aided Mol. Des. 30, 595–608 (2016).

Roszak, R., Beker, W., Molga, K. & Grzybowski, B. A. Rapid and accurate prediction of pKa values of C–H acids using graph convolutional neural networks. J. Am. Chem. Soc. 141, 17142–17149 (2019).

Leshno, M., Lin, V. Y., Pinkus, A. & Schocken, S. Multilayer feedforward networks with a nonpolynomial activation function can approximate any function. Neural Netw. 6, 861–867 (1993).

Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2, 303–314 (1989).

Liu, B., Dai, Y., Li, X., Lee, W. S. & Yu, P. S. Building text classifiers using positive and unlabeled examples. In Proceedings of 3rd IEEE International Conference on Data Mining 179–186 (IEEE, 2003).

Fusilier, D. H., Montes-Y-Gómez, M., Rosso, P. & Cabrera, R. G. Detecting positive and negative deceptive opinions using PU-learning. Inf. Process. Manag. 51, 433–443 (2015).

Kwon, Y., Won, J.-H., Kim, B. J. & Paik, M. C. Uncertainty quantification using Bayesian neural networks in classification: application to biomedical image segmentation. Comput. Stat. Data Anal. 142, 106816 (2020).

Doak, B. C., Zheng, J., Dobritzsch, D. & Kihlberg, J. How beyond rule of 5 drugs and clinical candidates bind to their targets. J. Med. Chem. 59, 2312–2327 (2015).

Kiureghian, A. D. & Ditlevsen, O. Aleatory or epistemic? Does it matter? Struct. Saf. 31, 105–112 (2009).

Chao, C., Liaw, A. & Breiman, L. Using Random Forest to Learn Imbalanced Data (Univ. California, 2004).

Wu, Z. et al. MoleculeNet: a benchmark for molecular machine learning. Chem. Sci. 9, 513–530 (2018).

Tox21 Challenge (National Institutes of Health, accessed 3 February 2020); http://tripod.nih.gov/tox21/challenge/

Gaulton, A. et al. The ChEMBL database in 2017. Nucleic Acids Res. 45, D945–D954 (2016).

Jaeger, S., Fulle, S. & Turk, S. Mol2vec: unsupervised machine learning approach with chemical intuition. J. Chem. Inf. Model. 58, 27–35 (2018).

Acknowledgements

This research was developed with funding from the Defense Advanced Research Projects Agency (DARPA) under AMD award HR00111920027. The views, opinions and/or findings expressed are those of the authors and should not be interpreted as representing the official views or policies of the Department of Defense or the US Government. A.W. and B.A.G. gratefully acknowledge funding from the Symfonia Award, grant 2014/12/W/ST5/00592, from the Polish National Science Centre (NCN). Data analysis and writing of the paper by B.A.G. was supported by the Institute for Basic Science, Korea (project code IBS-R020-D1). We also thank R. Zagórowicz and colleagues from IMAPP, sp., z.o.o. for technical help in code development, and M. Eder and colleagues from the Institute of Polish Language, Cracow, Poland, for helpful discussions.

Author information

Contributions

W.B. and A.W. designed and performed most of the analyses and calculations. S.S. helped with data collection and analysis. B.A.G. conceived and supervised research. All authors wrote the manuscript.

Ethics declarations

Competing interests

The authors are consultants and/or stakeholders of Allchemy, Inc.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Comparison of Receiver Operational Characteristic curves of selected classifiers for certain types of compounds removed from the training set.

The Quantitative Estimate of Drug-likeness (QED, dark blue curves; ref. 3) is compared with the Bayesian Neural Network (BNN) extension of the RDKit AE model and with the combination of BNN classifiers according to their prediction uncertainty. The models were evaluated on the subsets of the auxiliary test set obeying either the classic Rule of 5 (Ro5; n = 2071 molecules) or the extended Rule of 5 (eRo5; n = 63), whereas training (with the exception of QED, which is an already defined quantity) was performed on the DRUGS+ZINC training set from which Ro5+eRo5 compounds were excluded (leaving n = 1127 molecules). Definitions of the Ro5, eRo5 and bRo5 rules were taken from ref. 34.
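The idea of combining classifiers according to their prediction uncertainty can be illustrated with a minimal sketch. It is not the authors' deposited code: it assumes that each Bayesian classifier returns several sampled drug-likeness probabilities per molecule, splits the predictive variance of a binary classifier into aleatoric and epistemic parts in the manner of Kwon et al. (ref. 33 in the reference list), and keeps the prediction of the classifier with the smallest total variance. The function names, the model names `model_a` and `model_b`, and the sample values are all hypothetical.

```python
from statistics import fmean


def decompose_uncertainty(samples):
    """Split the predictive variance of a binary classifier for one molecule.

    `samples` holds probabilities of the 'drug' class obtained from repeated
    stochastic forward passes of one Bayesian network (an assumption of this
    sketch, not a detail taken from the paper).
    """
    samples = list(samples)
    mean_p = fmean(samples)
    # Aleatoric part: average Bernoulli variance of the individual samples.
    aleatoric = fmean([p * (1.0 - p) for p in samples])
    # Epistemic part: spread of the samples around their mean.
    epistemic = fmean([(p - mean_p) ** 2 for p in samples])
    return mean_p, aleatoric, epistemic


def select_min_variance(samples_by_model):
    """Return (model name, mean probability, total variance) of the
    classifier whose prediction has the smallest total variance."""
    best = None
    for name, samples in samples_by_model.items():
        mean_p, aleatoric, epistemic = decompose_uncertainty(samples)
        total = aleatoric + epistemic
        if best is None or total < best[2]:
            best = (name, mean_p, total)
    return best


# Hypothetical sampled predictions for a single molecule.
example = {
    "model_a": [0.90, 0.95, 0.85],
    "model_b": [0.60, 0.40, 0.70],
}
name, probability, variance = select_min_variance(example)
print(name, round(probability, 3), round(variance, 3))
```

For a binary classifier the two parts add up to p(1 − p), where p is the mean predicted probability, so choosing the minimal-variance prediction in this sketch amounts to trusting the classifier that is most decisive about the molecule in question.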

Extended Data Fig. 2 Distributions of drug-likeness probability for drug candidates considered in Phase 3 clinical trials.

The figure quantifies the performance of our classifiers on drug candidates in advanced clinical trials. We expected that these molecules, having progressed so far in the development pipeline, would have been thoroughly evaluated by expert medicinal chemists and would therefore be free of any obvious non-drug-like features. a) This hypothesis is tested using the GCNN+RDKit classifier applied to a set of n = 727 Phase 3 candidates retrieved from ChEMBL (ref. 39). About 15% of these candidates are predicted to fall below the 0.5 drug-likeness level, and 32% below the 0.85 level. In other words, the majority, but not all, of the molecules are found to be drug-like, as could be expected. b) A related question is whether the classifiers could provide some information about molecules that are likely to fail a clinical trial, although such failure can be due not only to scientific reasons but also to economic or IP factors, or to the superior performance of a similar-indication product from a competitor. We were able to identify only a limited number of molecules that failed Phase 3 investigations (n = 89 from the ClinTox dataset; ref. 37). The histogram shows that, on this set and using the GCNN+RDKit classifier, ~15% of compounds have drug-likeness below 0.5 and 37% below 0.85. In other words, the factors that make these drugs fail are not picked up by our filters. This result reinforces our belief that the classifiers we report are most useful for triaging candidates that are considered early in the drug-discovery process and that contain more obvious non-drug-like features.

Extended Data Fig. 3 Performance of a ‘naïve’ 1-Nearest Neighbor classifier based on Tanimoto similarity.

As described in the main text, the negative, non-drug sets were pruned of molecules whose Tanimoto (ECFP4-based) similarity to drugs was above the 0.85 threshold. This pre-processing step, which follows Bickerton's QED work (ref. 3), is intended to remove the drugs' metabolites or immediate synthetic precursors from the sets of non-drugs. Tanimoto similarity and the more accurate models we developed in the present work are, indeed, correlated to some degree: for instance, the Pearson correlation coefficient between the Tanimoto similarity to known drugs and the drug-likeness computed with our GCNN+RDKit AE classifier is 0.615. To quantify the accuracy of the 'naïve' Tanimoto-based measure, we constructed a Nearest-Neighbor (1-NN) classifier on the dataset used throughout our study, using the same train-test splits as for the MLPs reported in Table 1. In this approach, the training set (comprising molecules labeled as 'drug' or 'non-drug') is stored in memory, and there is no parameter optimization of any kind. Prediction of drug-likeness for each test molecule (query) involves the following steps: (i) Tanimoto similarities (based on ECFP4) between the test molecule and all molecules in the training set are calculated; (ii) the most similar of these training-set molecules is selected; (iii) if this most similar molecule from the training set is a drug, then the test molecule is also classified as a drug; (iv) if this most similar molecule from the training set is a non-drug, then the test molecule is also classified as a non-drug. As shown in the Table, this conceptually simplistic classifier provides a surprisingly strong baseline of 82.6% (on the ZINC non-drug dataset), only 3–4% lower than the MLP based on ECFP4 reported in the main text. This means that, in terms of accuracy, all models scoring below ~82% are actually no better than choices made by Tanimoto similarity.
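Steps (i) to (iv) can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: fingerprints are represented as sets of on-bit indices (in practice they would be ECFP4 fingerprints computed with a cheminformatics toolkit), and the toy training set, the labels and the function names are hypothetical rather than taken from the deposited code.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    union = len(fp_a | fp_b)
    return len(fp_a & fp_b) / union if union else 0.0


def predict_1nn(query_fp, training_set):
    """Label a query molecule with the label of its most similar training molecule.

    `training_set` is a list of (fingerprint, label) pairs. There is no
    fitting step: the training data are simply kept in memory. If several
    training molecules are equally similar, the first one in the list wins.
    """
    # Steps (i) and (ii): compute all similarities and pick the closest molecule.
    _, nearest_label = max(training_set, key=lambda item: tanimoto(query_fp, item[0]))
    # Steps (iii) and (iv): the query inherits the label of that molecule.
    return nearest_label


# Toy fingerprints (hypothetical on-bit indices, not real ECFP4 bits).
training = [
    ({1, 2, 3, 4}, "drug"),
    ({10, 11, 12}, "non-drug"),
]
print(predict_1nn({1, 2, 3, 9}, training))
print(predict_1nn({10, 11, 20}, training))
```

Because every query is compared with every stored molecule, the cost of a prediction grows linearly with the size of the training set, which is the price paid for having no parameters to optimize.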

Extended Data Fig. 4 Counts and percentages of compounds obeying Rule of 5, extended Rule of 5 and ‘beyond’ Rule of 5 in selected datasets.

Definitions of Ro5, eRo5 and bRo5 were taken from ref. 34. These rules are disjoint and do not cover the whole chemical space. Regarding ZINC (non-drugs), a random sample of 10,000 compounds (before removal of those with high Tanimoto similarity to FDA drugs) was analyzed.

Extended Data Fig. 5 Percentages of drug-similar compounds estimated with PU-learning.

Two approaches were considered: incremental extension of reliable negatives (RN) from Liu et al. (ref. 31) and decremental refinement of RN from Fusilier and co-workers (ref. 32). In the incremental variant, the drug-likeness threshold for RN detection was set to 0.2, whereas in the decremental variant a threshold of 0.5 was used.

Extended Data Fig. 6 Comparison of toxicity and drug-likeness predictions.

Evaluation was performed by RDKit AE on compounds listed in Supplementary Table 4 and grouped here as a, 'Toxic compounds' (n = 23) and b, 'Organic solvents' (n = 13). Auxiliary test set data were used in c, non-US drugs (n = 1281) and d, ZINC (n = 1281). The probability of toxicity was evaluated with a balanced Random Forest classifier trained on the ClinTox dataset (ref. 37). For results of other classifiers, including toxicity predictions trained on the Tox21 dataset (ref. 38), see Supplementary Information, Section 6.

Supplementary information

Supplementary Information

Supplementary Figs. 1–11 and Tables 1–7.

Source data

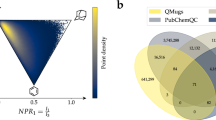

Source Data Fig. 1

Raw data used to compute histograms.

Source Data Fig. 2

Raw data used to compute histograms.

Source Data Fig. 4

Raw data used to prepare scatterplots.

Source Data Fig. 5

Raw data used for plotting precision-sensitivity curves.

Source Data Fig. 6

Raw data used for calculation of ROC curves.

Source Data Extended Data Fig. 1

Raw data used for calculation of ROC curves.

Source Data Extended Data Fig. 2

Raw data used to compute histograms.

Source Data Extended Data Fig. 3

Data presented in the table.

Source Data Extended Data Fig. 4

Data presented in the table.

Source Data Extended Data Fig. 5

Data presented in the table.

Source Data Extended Data Fig. 6

Raw data used to prepare scatterplots.

About this article

Cite this article

Beker, W., Wołos, A., Szymkuć, S. et al. Minimal-uncertainty prediction of general drug-likeness based on Bayesian neural networks. Nat Mach Intell 2, 457–465 (2020). https://doi.org/10.1038/s42256-020-0209-y

DOI: https://doi.org/10.1038/s42256-020-0209-y

This article is cited by

- DBPP-Predictor: a novel strategy for prediction of chemical drug-likeness based on property profiles. Journal of Cheminformatics (2024)

- MolFilterGAN: a progressively augmented generative adversarial network for triaging AI-designed molecules. Journal of Cheminformatics (2023)

- Quantitatively mapping local quality of super-resolution microscopy by rolling Fourier ring correlation. Light: Science & Applications (2023)

- Evaluation guidelines for machine learning tools in the chemical sciences. Nature Reviews Chemistry (2022)

- A hybrid framework for improving uncertainty quantification in deep learning-based QSAR regression modeling. Journal of Cheminformatics (2021)